
Types of Bias in Research | Definition & Examples

Research bias results from any deviation from the truth, causing distorted results and wrong conclusions. Bias can occur at any phase of your research, including during data collection, data analysis, interpretation, or publication. Research bias can occur in both qualitative and quantitative research.

Understanding research bias is important for several reasons.

- Bias exists in all research, across research designs, and is difficult to eliminate.

- Bias can occur at any stage of the research process.

- Bias impacts the validity and reliability of your findings, leading to misinterpretation of data.

It is almost impossible to conduct a study without some degree of research bias. It’s crucial for you to be aware of the potential types of bias, so you can minimize them.

For example, suppose you are evaluating a weight-loss program. Its success rate will likely be affected if participants start to drop out (attrition). Participants who become disillusioned because they are not losing weight may drop out, while those who succeed in losing weight are more likely to continue. This in turn may bias the findings toward more favorable results.

Table of contents

- Information bias

- Interviewer bias

- Publication bias

- Researcher bias

- Response bias

- Selection bias

- Cognitive bias

- How to avoid bias in research

- Other types of research bias

- Frequently asked questions about research bias

Information bias, also called measurement bias, arises when key study variables are inaccurately measured or classified. Information bias occurs during the data collection step and is common in research studies that involve self-reporting and retrospective data collection. It can also result from poor interviewing techniques or differing levels of recall from participants.

The main types of information bias are:

- Recall bias

- Observer bias

- Performance bias

- Regression to the mean (RTM)

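For example, suppose you are studying whether smartphone use is associated with physical symptoms among students. Over a period of four weeks, you ask students to keep a journal, noting how much time they spent on their smartphones along with any symptoms like muscle twitches, aches, or fatigue. Because both variables are self-reported, they may be measured inaccurately.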

Recall bias is a type of information bias. It occurs when respondents are asked to recall events in the past and is common in studies that involve self-reporting.

As a rule of thumb, infrequent events (e.g., buying a house or a car) will be memorable for longer periods of time than routine events (e.g., daily use of public transportation). You can reduce recall bias by running a pilot survey and carefully testing recall periods. If possible, test both shorter and longer periods, checking for differences in recall.

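For example, suppose you are investigating whether diet in early childhood is associated with childhood cancer. You compare two groups of children:

- A group of children who have been diagnosed, called the case group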

- A group of children who have not been diagnosed, called the control group

Since the parents are being asked to recall what their children generally ate over a period of several years, there is high potential for recall bias in the case group.

The best way to reduce recall bias is by ensuring your control group will have similar levels of recall bias to your case group. Parents of children who have childhood cancer, which is a serious health problem, are likely to be quite concerned about what may have contributed to the cancer.

Thus, if asked by researchers, these parents are likely to think very hard about what their child ate or did not eat in their first years of life. Parents of children with other serious health problems (aside from cancer) are also likely to be quite concerned about any diet-related question that researchers ask about. Drawing your control group from children with other serious health problems can therefore help balance recall between the two groups.

Observer bias is the tendency of researchers to see what they expect or want to see, rather than what is actually occurring. Observer bias can affect the results in observational and experimental studies, where subjective judgment (such as assessing a medical image) or measurement (such as rounding blood pressure readings up or down) is part of the data collection process.

Observer bias leads to over- or underestimation of true values, which in turn compromises the validity of your findings. You can reduce observer bias by using single-blind and double-blind research methods.

For example, suppose you and a colleague are observing how medical staff in a hospital ward share information. Based on discussions you had with other researchers before starting your observations, you are inclined to think that medical staff tend to simply call each other when they need specific patient details or have questions about treatments.

At the end of the observation period, you compare notes with your colleague. You concluded that medical staff tend to favor phone calls when seeking information, while your colleague noted that medical staff mostly rely on face-to-face discussions. Recognizing that your expectations may have influenced your observations, you and your colleague decide to conduct semi-structured interviews with medical staff to clarify the observed events.

Note: Observer bias and actor–observer bias are not the same thing.

Performance bias refers to unequal care between study groups. It occurs mainly in medical research experiments, when participants (or the staff caring for them) know which intervention, therapy, or drug they will receive.

Studies about nutrition, exercise outcomes, or surgical interventions are very susceptible to this type of bias. It can be minimized by using blinding, which prevents participants and/or researchers from knowing who is in the control or treatment groups. If blinding is not possible, then using objective outcomes (such as hospital admission data) is the best approach.

When the subjects of an experimental study change or improve their behavior because they are aware they are being studied, this is called the Hawthorne effect (or observer effect). Similarly, the John Henry effect occurs when members of a control group are aware they are being compared to the experimental group. This causes them to alter their behavior in an effort to compensate for their perceived disadvantage.

Regression to the mean (RTM) is a statistical phenomenon that refers to the fact that a variable that shows an extreme value on its first measurement will tend to be closer to the center of its distribution on a second measurement.

Medical research is particularly sensitive to RTM. Here, interventions aimed at a group or a characteristic that is very different from the average (e.g., people with high blood pressure) may appear successful purely because of regression to the mean. This can lead researchers to misinterpret results, describing a specific intervention as causal when the change in the extreme groups would have happened anyway.

For example, suppose you are evaluating an intervention for people with depression. In general, among people with depression, certain physical and mental characteristics have been observed to deviate from the population mean.

This could lead you to think that the intervention was effective when those treated showed improvement on measured post-treatment indicators, such as reduced severity of depressive episodes.

However, given that such characteristics deviate more from the population mean in people with depression than in people without depression, some or all of this improvement could be due to RTM rather than to the intervention.
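Regression to the mean can be reproduced with a short simulation. The Python sketch below is illustrative only: it assumes a hypothetical blood-pressure-like trait that stays stable over time and is measured twice with independent random error, and it applies no intervention at all. The people selected for scoring highest on the first measurement still score lower, on average, on the second.

```python
import random
import statistics

def regression_to_mean_demo(n=10_000, seed=1):
    """Measure a stable trait twice with random error; no intervention happens in between."""
    rng = random.Random(seed)
    # Hypothetical "true" values (e.g., a person's usual blood pressure): mean 120, SD 10.
    true_values = [rng.gauss(120, 10) for _ in range(n)]
    # Each measurement = true value + independent measurement error (SD 8).
    first = [t + rng.gauss(0, 8) for t in true_values]
    second = [t + rng.gauss(0, 8) for t in true_values]
    # Select the "extreme" group using the first measurement only: the top 10%.
    cutoff = sorted(first)[int(0.9 * n)]
    extreme = [i for i in range(n) if first[i] >= cutoff]
    before = statistics.mean(first[i] for i in extreme)
    after = statistics.mean(second[i] for i in extreme)
    return before, after

before, after = regression_to_mean_demo()
print(f"Extreme group, first measurement:  {before:.1f}")
print(f"Extreme group, second measurement: {after:.1f}")
```

Because the extreme group moves back toward the population average without any treatment, a study that recruits participants for extreme baseline values needs a comparable control group to separate a real effect from RTM.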

Interviewer bias stems from the person conducting the research study. It can result from the way they ask questions or react to responses, but also from any aspect of their identity, such as their sex, ethnicity, social class, or perceived attractiveness.

Interviewer bias distorts responses, especially when the characteristics relate in some way to the research topic. Interviewer bias can also affect the interviewer’s ability to establish rapport with the interviewees, causing them to feel less comfortable giving their honest opinions about sensitive or personal topics.

Participant: “I like to solve puzzles, or sometimes do some gardening.”

You: “I love gardening, too!”

In this case, seeing your enthusiastic reaction could lead the participant to talk more about gardening.

Establishing trust between you and your interviewees is crucial in order to ensure that they feel comfortable opening up and revealing their true thoughts and feelings. At the same time, being overly empathetic can influence the responses of your interviewees, as seen above.

Publication bias occurs when the decision to publish research findings is based on their nature or the direction of their results. Studies reporting results that are perceived as positive, statistically significant , or favoring the study hypotheses are more likely to be published due to publication bias.

Publication bias is related to data dredging (also called p-hacking), where statistical tests are run on a set of data until something statistically significant turns up. Because academic journals tend to prefer publishing statistically significant results, researchers may feel pressure to submit only those. P-hacking can also involve excluding participants or stopping data collection as soon as the p value drops below 0.05. These practices produce false positive results and an overrepresentation of positive results in the published academic literature.
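To see why running many tests inflates false positives, the Python sketch below computes the chance of at least one p < 0.05 among k tests when no real effect exists, 1 − (1 − α)^k, and checks it by simulation. It assumes the tests are independent, which is a simplification: tests run on the same data set are usually correlated, so the exact inflation will differ, but the chance of a spurious "significant" result is never lower than for a single planned test.

```python
import random

def familywise_error_rate(alpha, k):
    """P(at least one p < alpha) among k independent tests when every null hypothesis is true."""
    return 1 - (1 - alpha) ** k

def simulate_dredging(alpha=0.05, k=20, trials=20_000, seed=0):
    """Monte Carlo check of the formula above.

    Assumes continuous test statistics, so under a true null each p-value is Uniform(0, 1).
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        p_values = [rng.random() for _ in range(k)]  # k tests on data with no real effect
        if min(p_values) < alpha:  # "something significant" turned up
            hits += 1
    return hits / trials

print(f"1 test:   {familywise_error_rate(0.05, 1):.3f}")
print(f"20 tests: {familywise_error_rate(0.05, 20):.3f}")
print(f"Simulated, 20 tests: {simulate_dredging():.3f}")
```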

Researcher bias occurs when the researcher’s beliefs or expectations influence the research design or data collection process. Researcher bias can be deliberate (such as claiming that an intervention worked even if it didn’t) or unconscious (such as letting personal feelings, stereotypes, or assumptions influence research questions ).

The unconscious form of researcher bias is associated with the Pygmalion effect (or Rosenthal effect), where the researcher’s high expectations (e.g., that patients assigned to a treatment group will succeed) lead to better performance and better outcomes.

Researcher bias is also sometimes called experimenter bias, but it applies to all types of investigative projects, rather than only to experimental designs.

- Good question: What are your views on alcohol consumption among your peers?

- Bad question: Do you think it’s okay for young people to drink so much?

Response bias is a general term used to describe a number of different situations where respondents tend to provide inaccurate or false answers to self-report questions, such as those asked on surveys or in structured interviews.

This happens because when people are asked a question (e.g., during an interview), they integrate multiple sources of information to generate their responses. Because of that, any aspect of a research study may potentially bias a respondent. Examples include the phrasing of questions in surveys, how participants perceive the researcher, or the desire of the participant to please the researcher and to provide socially desirable responses.

Response bias also occurs in experimental medical research. When outcomes are based on patients’ reports, a placebo effect can occur. Here, patients report an improvement despite having received a placebo, not an active medical treatment.

While interviewing a student, you ask them:

“Do you think it’s okay to cheat on an exam?”

Common types of response bias are:

- Acquiescence bias

- Demand characteristics

- Social desirability bias

- Courtesy bias

- Question-order bias

- Extreme responding

Acquiescence bias is the tendency of respondents to agree with a statement when faced with binary response options like “agree/disagree,” “yes/no,” or “true/false.” Acquiescence is sometimes referred to as “yea-saying.”

This type of bias occurs either due to the participant’s personality (i.e., some people are more likely to agree with statements than disagree, regardless of their content) or because participants perceive the researcher as an expert and are more inclined to agree with the statements presented to them.

Q: Are you a social person?

- Yes

- No

People who are inclined to agree with statements presented to them are at risk of selecting the first option, even if it isn’t fully supported by their lived experiences.

To control for acquiescence, consider rephrasing your questions so that respondents choose based on their actual preferences rather than simply agreeing. Here’s an example:

Q: What would you prefer?

- A quiet night in

- A night out with friends

Demand characteristics are cues that could reveal the research agenda to participants, risking a change in their behaviors or views. Ensuring that participants are not aware of the research objectives is the best way to avoid this type of bias.

For example, suppose you interview patients on several occasions after an operation intended to reduce pain. On each occasion, patients reported their pain as being less than prior to the operation. While at face value this seems to suggest that the operation does indeed lead to less pain, there is a demand characteristic at play. During the interviews, the researcher would unconsciously frown whenever patients reported more post-op pain. This increased the risk of patients figuring out that the researcher was hoping that the operation would have an advantageous effect.

Social desirability bias is the tendency of participants to give responses that they believe will be viewed favorably by the researcher or other participants. It often affects studies that focus on sensitive topics, such as alcohol consumption or sexual behavior.

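You are conducting face-to-face semi-structured interviews with a number of employees from different departments. When asked whether they would be interested in a smoking cessation program, employees responded with widespread enthusiasm. Because smoking is a sensitive topic and the interviews took place face-to-face, some of this enthusiasm may reflect social desirability rather than genuine interest.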

Note that while social desirability and demand characteristics may sound similar, there is a key difference between them. Social desirability is about conforming to social norms, while demand characteristics revolve around the purpose of the research.

Courtesy bias stems from a reluctance to give negative feedback, so as to be polite to the person asking the question. Small-group interviewing where participants relate in some way to each other (e.g., a student, a teacher, and a dean) is especially prone to this type of bias.

Question order bias

Question order bias occurs when the order in which interview questions are asked influences the way the respondent interprets and evaluates them. This occurs especially when previous questions provide context for subsequent questions.

When answering subsequent questions, respondents may orient their answers to previous questions (called a halo effect), which can lead to systematic distortion of the responses.

Extreme responding is the tendency of a respondent to answer in the extreme, choosing the lowest or highest response available, even if that is not their true opinion. Extreme responding is common in surveys using Likert scales, and it distorts people’s true attitudes and opinions.

Disposition towards the survey can be a source of extreme responding, as well as cultural components. For example, people coming from collectivist cultures tend to exhibit extreme responses in terms of agreement, while respondents indifferent to the questions asked may exhibit extreme responses in terms of disagreement.

Selection bias is a general term describing situations where bias is introduced into the research from factors affecting the study population.

Common types of selection bias are:

- Sampling or ascertainment bias

- Attrition bias

- Self-selection (or volunteer) bias

- Survivorship bias

- Nonresponse bias

- Undercoverage bias

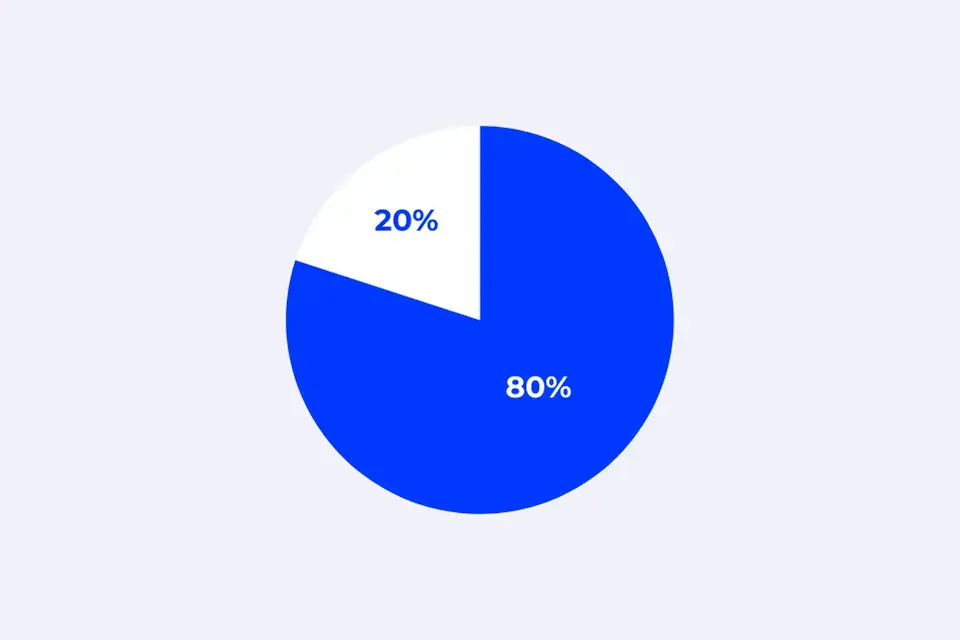

Sampling bias occurs when your sample (the individuals, groups, or data you obtain for your research) is selected in a way that is not representative of the population you are analyzing. Sampling bias threatens the external validity of your findings and influences the generalizability of your results.

The most reliable way to prevent sampling bias is to use a probability sampling method. This way, each member of the population you are studying has a known, nonzero chance of being included in your sample (in simple random sampling, an equal chance).

Sampling bias is often referred to as ascertainment bias in the medical field.
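Simple random sampling is one probability sampling method. The Python sketch below assumes a complete list of the population (a sampling frame) is available; the 100 names are hypothetical. Repeating the draw many times shows that each member ends up in the sample in roughly n / N = 10% of draws.

```python
import random
from collections import Counter

def simple_random_sample(frame, n, rng):
    """Draw n members without replacement; each has the same inclusion chance, n / len(frame)."""
    return rng.sample(frame, n)

frame = [f"person_{i}" for i in range(100)]  # hypothetical sampling frame of N = 100
rng = random.Random(42)
repeats = 10_000
counts = Counter()
for _ in range(repeats):
    counts.update(simple_random_sample(frame, 10, rng))

# Share of draws in which each member was selected; expected to be close to 10 / 100.
rates = [counts[p] / repeats for p in frame]
print(f"lowest rate: {min(rates):.3f}, highest rate: {max(rates):.3f}")
```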

Attrition bias occurs when participants who drop out of a study systematically differ from those who remain in the study. Attrition bias is especially problematic in randomized controlled trials for medical research because participants who do not like the experience or have unwanted side effects can drop out and affect your results.

You can minimize attrition bias by offering incentives for participants to complete the study (e.g., a gift card if they successfully attend every session). It’s also a good practice to recruit more participants than you need, or minimize the number of follow-up sessions or questions.

You provide a treatment group with weekly one-hour sessions over a two-month period, while a control group attends sessions on an unrelated topic. You complete five waves of data collection to compare outcomes: a pretest survey, three surveys during the program, and a posttest survey.
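Attrition bias can be illustrated with a small simulation that echoes the weight-loss example in the introduction. The Python sketch below is hypothetical throughout: it assumes an average true weight change of −2 kg and assumes that participants who gain weight drop out far more often (60%) than those who lose weight (10%). Analyzing only the completers then makes the program look more effective than it is.

```python
import random
import statistics

def attrition_demo(n=5_000, seed=3):
    """Compare the average outcome of everyone enrolled with that of completers only."""
    rng = random.Random(seed)
    # Hypothetical weight change in kg (negative = weight lost); assumed mean -2, SD 3.
    outcomes = [rng.gauss(-2.0, 3.0) for _ in range(n)]
    completers = []
    for change in outcomes:
        # Assumed dropout rule: those gaining weight drop out far more often.
        p_drop = 0.6 if change > 0 else 0.1
        if rng.random() >= p_drop:  # participant stays until the end
            completers.append(change)
    return statistics.mean(outcomes), statistics.mean(completers), len(completers)

true_mean, completer_mean, kept = attrition_demo()
print(f"All enrolled:    {true_mean:.2f} kg")
print(f"Completers only: {completer_mean:.2f} kg ({kept} of 5000 kept)")
```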

Self-selection or volunteer bias

Self-selection bias (also called volunteer bias) occurs when individuals who volunteer for a study have particular characteristics that matter for the purposes of the study.

Volunteer bias leads to biased data, as the respondents who choose to participate will not represent your entire target population. Random assignment (placing participants in a control group or a treatment group at random after they have volunteered) helps ensure that the groups you compare do not differ systematically because of who volunteered; however, it cannot make the volunteers themselves representative of the wider population.

Closely related to volunteer bias is nonresponse bias, which occurs when a research subject declines to participate in a particular study or drops out before the study’s completion.

For example, suppose you recruit volunteers for your study through a local hospital. Because the hospital is located in an affluent part of the city, volunteers are more likely to have a higher socioeconomic standing, higher education, and better nutrition than the general population.
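Random assignment itself is simple to implement. In the Python sketch below, a copy of the volunteer list is shuffled and split in half, so that which group a volunteer lands in does not depend on their own characteristics. The names and the fixed seed are hypothetical and only make the example reproducible. This balances the groups against each other; it does not change who volunteered in the first place.

```python
import random

def randomly_assign(volunteers, seed=None):
    """Shuffle a copy of the volunteers and split it into treatment and control groups."""
    rng = random.Random(seed)
    shuffled = list(volunteers)  # copy, so the caller's list is left unchanged
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # With an odd number of volunteers, the control group gets the extra person.
    return {"treatment": shuffled[:half], "control": shuffled[half:]}

volunteers = ["Ana", "Ben", "Chen", "Dara", "Eli", "Fay", "Gus"]  # hypothetical
groups = randomly_assign(volunteers, seed=7)
print("Treatment:", groups["treatment"])
print("Control:  ", groups["control"])
```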

Survivorship bias occurs when you do not evaluate your data set in its entirety: for example, by only analyzing the patients who survived a clinical trial.

This strongly increases the likelihood that you draw (incorrect) conclusions based upon those who have passed some sort of selection process—focusing on “survivors” and forgetting those who went through a similar process and did not survive.

Note that “survival” does not have to be literal: participants who “did not survive” need not have died. They may simply have failed to complete the intervention.

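For example, several well-known billionaires dropped out of college, which might suggest that leaving college is a route to success. However, most college dropouts do not become billionaires. In fact, there are many more aspiring entrepreneurs who dropped out of college to start companies and failed than succeeded.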

Nonresponse bias occurs when those who do not respond to a survey or research project are different from those who do in ways that are critical to the goals of the research. This is very common in survey research, when participants are unable or unwilling to participate due to factors like lack of the necessary skills, lack of time, or guilt or shame related to the topic.

You can mitigate nonresponse bias by offering the survey in different formats (e.g., an online survey, but also a paper version sent via post), ensuring confidentiality, and sending respondents reminders to complete the survey.

You notice that your surveys were conducted during business hours, when the working-age residents were less likely to be home.

Undercoverage bias occurs when you only sample from a subset of the population you are interested in. Online surveys can be particularly susceptible to undercoverage bias. Despite being more cost-effective than other methods, they can introduce undercoverage bias as a result of excluding people who do not use the internet.
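The effect of undercoverage can be sketched by comparing an online-only sampling frame with the full population. In the Python sketch below, every number is an assumption made for illustration: 80% of a hypothetical population uses the internet, and the outcome being measured (here labeled hours of screen time) is assumed to differ between users and non-users. A random sample drawn only from internet users then overestimates the population average, however large the sample.

```python
import random
import statistics

def undercoverage_demo(n=20_000, sample_size=1_000, seed=5):
    """Compare an online-only sample with a sample from the full population."""
    rng = random.Random(seed)
    population = []
    for _ in range(n):
        online = rng.random() < 0.8  # assumption: 80% use the internet
        # Assumption: the measured outcome averages 5.0 for users and 2.0 for non-users.
        hours = rng.gauss(5.0 if online else 2.0, 1.0)
        population.append((online, hours))
    true_mean = statistics.mean(h for _, h in population)
    # Online survey: non-users are not in the sampling frame at all.
    online_frame = [h for is_online, h in population if is_online]
    online_estimate = statistics.mean(rng.sample(online_frame, sample_size))
    # For comparison: a simple random sample from the whole population.
    full_estimate = statistics.mean(h for _, h in rng.sample(population, sample_size))
    return true_mean, online_estimate, full_estimate

true_mean, online_estimate, full_estimate = undercoverage_demo()
print(f"Population mean:        {true_mean:.2f}")
print(f"Online-only sample:     {online_estimate:.2f}")
print(f"Full-population sample: {full_estimate:.2f}")
```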

Cognitive bias refers to a set of predictable (i.e., nonrandom) errors in thinking that arise from our limited ability to process information objectively. Rather, our judgment is influenced by our values, memories, and other personal traits. These create “mental shortcuts” that help us process information intuitively and decide faster. However, cognitive bias can also cause us to misunderstand or misinterpret situations, information, or other people.

Because of cognitive bias, people often perceive events to be more predictable after they happen.

Although there is no general agreement on how many types of cognitive bias exist, some common types are:

- Anchoring bias

- Framing effect

- Actor-observer bias

- Availability heuristic (or availability bias)

- Confirmation bias

- Halo effect

- The Baader-Meinhof phenomenon

Anchoring bias

Anchoring bias is people’s tendency to fixate on the first piece of information they receive, especially when it concerns numbers. This piece of information becomes a reference point or anchor. Because of that, subsequent judgments tend to be made relative to this anchor. For example, initial offers have a stronger influence on the outcome of negotiations than subsequent ones.

Framing effect

The framing effect refers to our tendency to make decisions based on how the information about the decision is presented to us. In other words, our response depends on whether the option is presented in a negative or positive light, e.g., gain or loss, reward or punishment, etc. This means that the same information can be more or less attractive depending on the wording or what features are highlighted.

Actor–observer bias

Actor–observer bias occurs when you attribute the behavior of others to internal factors, like skill or personality, but attribute your own behavior to external or situational factors.

In other words, when you are the actor in a situation, you are more likely to link events to external factors, such as your surroundings or environment. However, when you are observing the behavior of others, you are more likely to associate behavior with their personality, nature, or temperament.

One interviewee recalls a morning when it was raining heavily. They were rushing to drop off their kids at school in order to get to work on time. As they were driving down the highway, another car cut them off as they were trying to merge. They tell you how frustrated they felt and exclaim that the other driver must have been a very rude person.

At another point, the same interviewee recalls that they did something similar: accidentally cutting off another driver while trying to take the correct exit. However, this time, the interviewee claimed that they always drive very carefully, blaming their mistake on poor visibility due to the rain.

Availability heuristic

The availability heuristic (or availability bias) describes the tendency to evaluate a topic using the information we can quickly recall, i.e., the information that is available to us. However, this is not necessarily the best information; rather, it is the most vivid or recent. Even so, due to this mental shortcut, we tend to think that what we can recall must be right and ignore any other information.

Confirmation bias

Confirmation bias is the tendency to seek out and interpret information in a way that supports our existing beliefs while also rejecting any information that contradicts those beliefs. Confirmation bias is often unintentional but still produces skewed results and poor decision-making.

Let’s say you grew up with a parent in the military. Chances are that you have a lot of complex emotions around overseas deployments. This can lead you to over-emphasize findings that “prove” that your lived experience is the case for most families, neglecting other explanations and experiences.

Halo effect

The halo effect refers to situations in which our general impression of a person, a brand, or a product is shaped by a single trait. It happens, for instance, when we automatically make positive assumptions about people based on something positive we notice, while in reality, we know little about them.

The Baader-Meinhof phenomenon

The Baader-Meinhof phenomenon (or frequency illusion) occurs when something that you recently learned seems to appear “everywhere” soon after it was first brought to your attention. However, the thing itself has not become more frequent. What has increased is your awareness of it, whether it is a new word or an old song you never knew existed.

While very difficult to eliminate entirely, research bias can be mitigated through proper study design and implementation. Here are some tips to keep in mind as you get started.

- Clearly explain in your methodology section how your research design will help you meet the research objectives and why this is the most appropriate research design.

- In quantitative studies, make sure that you use probability sampling to select the participants. If you’re running an experiment, make sure you use random assignment to assign your control and treatment groups.

- Account for participants who withdraw or are lost to follow-up during the study. If they are withdrawing for a particular reason, it could bias your results. This applies especially to longer-term or longitudinal studies.

- Use triangulation to enhance the validity and credibility of your findings.

- Phrase your survey or interview questions in a neutral, non-judgmental tone. Be very careful that your questions do not steer your participants in any particular direction.

- Consider using a reflexive journal. Here, you can log the details of each interview, paying special attention to any influence you may have had on participants. You can include these in your final analysis.

Other types of research bias

- Baader–Meinhof phenomenon

- Sampling bias

- Ascertainment bias

- Self-selection bias

- Hawthorne effect

- Omitted variable bias

- Pygmalion effect

- Placebo effect

Frequently asked questions about research bias

Research bias affects the validity and reliability of your research findings, leading to false conclusions and a misinterpretation of the truth. This can have serious implications in areas like medical research where, for example, a new form of treatment may be evaluated.

Observer bias occurs when the researcher’s assumptions, views, or preconceptions influence what they see and record in a study, while actor–observer bias refers to situations where respondents attribute internal factors (e.g., bad character) to others’ behavior and external factors (e.g., difficult circumstances) to the same behavior in themselves.

Response bias is a general term used to describe a number of different conditions or factors that cue respondents to provide inaccurate or false answers during surveys or interviews. These factors range from the interviewer’s perceived social position or appearance to the phrasing of questions in surveys.

Nonresponse bias occurs when the people who complete a survey are different from those who do not, in ways that are relevant to the research topic. Nonresponse can happen because people are either not willing or not able to participate.


The Ultimate Guide to Qualitative Research - Part 1: The Basics


Bias in research

In a purely objective world, research bias would not exist because knowledge would be a fixed and unmovable resource; either one knows about a particular concept or phenomenon, or they don't. However, qualitative research and the social sciences both acknowledge that subjectivity and bias exist in every aspect of the social world, which naturally includes the research process too. This bias is manifest in the many different ways that knowledge is understood, constructed, and negotiated, both in and out of research.

Understanding research bias has profound implications for data collection methods and data analysis, requiring researchers to take particular care in accounting for the insights generated from their data.

Research bias, often unavoidable, is a systematic error that can creep into any stage of the research process, skewing our understanding and interpretation of findings. From data collection to analysis, interpretation, and even publication, bias can distort the truth we seek to capture and communicate in our research.

It’s also important to distinguish between bias and subjectivity, especially when engaging in qualitative research. Most qualitative methodologies are based on epistemological and ontological assumptions that there is no such thing as a fixed or objective world that exists “out there” that can be empirically measured and understood through research. Rather, many qualitative researchers embrace the socially constructed nature of our reality and thus recognize that all data is produced within a particular context by participants with their own perspectives and interpretations. Moreover, the researcher’s own subjective experiences inevitably shape how they make sense of the data. These subjectivities are considered to be strengths, not limitations, of qualitative research approaches, because they open new avenues for knowledge generation. This is also why reflexivity is so important in qualitative research. When we refer to bias in this guide, on the other hand, we are referring to systematic errors that can negatively affect the research process but that can be mitigated through researchers’ careful efforts.

To fully grasp what research bias is, it's essential to understand the dual nature of bias. Bias is not inherently evil. It's simply a tendency, inclination, or prejudice for or against something. In our daily lives, we're subject to countless biases, many of which are unconscious. They help us navigate our world, make quick decisions, and understand complex situations. But when conducting research, these same biases can cause significant issues.

Research bias can affect the validity and credibility of research findings, leading to erroneous conclusions. It can emerge from the researcher's subconscious preferences or the methodological design of the study itself. For instance, if a researcher unconsciously favors a particular outcome of the study, this preference could affect how they interpret the results, leading to a type of bias known as confirmation bias.

Research bias can also arise due to the characteristics of study participants. If the researcher selectively recruits participants who are more likely to produce desired outcomes, this can result in selection bias.

Another form of bias can stem from data collection methods. If a survey question is phrased in a way that encourages a particular response, this can introduce response bias. Poorly designed survey questions can also harm future research if the general public comes to see such studies as biased toward whatever outcome the researcher prefers.

Bias can also occur during data analysis. In qualitative research, for instance, the researcher's preconceived notions and expectations can influence how they interpret and code qualitative data, a type of bias known as interpretation bias. Quantitative research is not free of bias either: sampling bias and measurement bias can threaten the validity of any research findings.

Given these examples, it's clear that research bias is a complex issue that can take many forms and emerge at any stage in the research process. This section will delve deeper into specific types of research bias, provide examples, discuss why it's an issue, and provide strategies for identifying and mitigating bias in research.

What is an example of bias in research?

Bias can appear in numerous ways. One example is confirmation bias, where the researcher has a preconceived explanation for what is going on in their data, and any disconfirming evidence is (unconsciously) ignored. For instance, a researcher conducting a study on daily exercise habits might be inclined to conclude that meditation practices lead to greater engagement in exercise because that researcher has personally experienced these benefits. However, conducting rigorous research entails assessing all the data systematically and verifying one’s conclusions by checking for both supporting and refuting evidence.

What is a common bias in research?

Confirmation bias is one of the most common forms of bias in research. It happens when researchers unconsciously focus on data that supports their ideas while ignoring or undervaluing data that contradicts their ideas. This bias can lead researchers to mistakenly confirm their theories, despite having insufficient or conflicting evidence.

What are the different types of bias?

There are several types of research bias, each presenting unique challenges. Some common types include:

Confirmation bias: As already mentioned, this happens when a researcher focuses on evidence supporting their theory while overlooking contradictory evidence.

Selection bias: This occurs when the researcher's method of choosing participants skews the sample in a particular direction.

Response bias: This happens when participants in a study respond inaccurately or falsely, often due to misleading or poorly worded questions.

Observer bias (or researcher bias): This occurs when the researcher unintentionally influences the results because of their expectations or preferences.

Publication bias: This type of bias arises when studies with positive results are more likely to get published, while studies with negative or null results are often ignored.

Analysis bias: This type of bias occurs when the data is manipulated or analyzed in a way that leads to a particular result, whether intentionally or unintentionally.

What is an example of researcher bias?

Researcher bias, also known as observer bias, can occur when a researcher's expectations or personal beliefs influence the results of a study. For instance, if a researcher believes that a particular therapy is effective, they might unconsciously interpret ambiguous results in a way that supports the efficacy of the therapy, even if the evidence is not strong enough.

Even quantitative research methodologies are not immune to researcher bias. Market research surveys or clinical trials, for example, may be biased when the researcher chooses a particular population or methodology to achieve a specific research outcome. Likewise, questions in customer feedback surveys whose data feed into quantitative analysis can be worded in ways that nudge respondents toward certain desired answers.


Identifying and avoiding bias in research

As we will remind you throughout this chapter, bias is not a phenomenon that can be removed altogether, nor should we think of it as something that should be eliminated. In a subjective world involving humans as researchers and research participants, bias is unavoidable and almost necessary for understanding social behavior. The section on reflexivity later in this guide will highlight how different perspectives among researchers and human subjects are addressed in qualitative research. That said, bias in excess can place the credibility of a study's findings into serious question. Scholars who read your research need to know what new knowledge you are generating, how it was generated, and why the knowledge you present should be considered persuasive. With that in mind, let's look at how bias can be identified and, where it interferes with research, minimized.

How do you identify bias in research?

Identifying bias involves a critical examination of your entire research study, including the formulation of the research question and hypothesis, the selection of study participants, the methods for data collection, and the analysis and interpretation of data. Researchers need to assess whether each stage has been influenced by bias that may have skewed the results. Tools such as bias checklists or guidelines, peer review, and reflexivity (reflecting on one's own biases) can be instrumental in identifying bias.

How do you identify research bias?

Identifying research bias often involves careful scrutiny of the research methodology and the researcher's interpretations. Was the sample of participants relevant to the research question? Were the interview or survey questions leading? Were there any conflicts of interest that could have influenced the results? It also requires an understanding of the different types of bias and how they might manifest in a research context. Does the bias occur in the data collection process or when the researcher is analyzing data?

Research transparency requires a careful accounting of how the study was designed, conducted, and analyzed. In qualitative research involving human subjects, the researcher is responsible for documenting the characteristics of the research population and research context. With respect to research methods, the procedures and instruments used to collect and analyze data should be described in as much detail as possible.

While describing study methodologies and research participants in painstaking detail may sound cumbersome, a clear and detailed description of the research design is necessary for good research. Without this level of detail, it is difficult for your research audience to identify whether bias exists, where bias occurs, and to what extent it may threaten the credibility of your findings.

How do you recognize bias in a study?

Recognizing bias in a study requires a critical approach. The researcher should question every step of the research process: Was the sample of participants selected with care? Did the data collection methods encourage open and sincere responses? Did personal beliefs or expectations influence the interpretation of the results? External peer reviews can also be helpful in recognizing bias, as others might spot potential issues that the original researcher missed.

The subsequent sections of this chapter will delve into the impacts of research bias and strategies to avoid it. Through these discussions, researchers will be better equipped to handle bias in their work and contribute to building more credible knowledge.

Unconscious biases, also known as implicit biases, are attitudes or stereotypes that influence our understanding, actions, and decisions in an unconscious manner. These biases can inadvertently infiltrate the research process, skewing the results and conclusions. This section aims to delve deeper into understanding unconscious bias, its impact on research, and strategies to mitigate it.

What is unconscious bias?

Unconscious bias refers to prejudices or social stereotypes about certain groups that individuals form outside their conscious awareness. Everyone holds unconscious beliefs about various social and identity groups, and these biases stem from a tendency to organize social worlds into categories.

How does unconscious bias infiltrate research?

Unconscious bias can infiltrate research in several ways. It can affect how researchers formulate their research questions or hypotheses, how they interact with participants, their data collection methods, and how they interpret their data. For instance, a researcher might unknowingly favor participants who share similar characteristics with them, which could lead to biased results.

Implications of unconscious bias

The implications of unconscious research bias are far-reaching. It can compromise the validity of research findings, influence the choice of research topics, and affect peer review processes. Unconscious bias can also lead to a lack of diversity in research, which can severely limit the value and impact of the findings.

Strategies to mitigate unconscious research bias

While it's challenging to completely eliminate unconscious bias, several strategies can help mitigate its impact. These include being aware of potential unconscious biases, practicing reflexivity, seeking diverse perspectives for your study, and engaging in regular bias-checking activities, such as bias training and peer debriefing.

By understanding and acknowledging unconscious bias, researchers can take steps to limit its impact on their work, leading to more robust findings.

Why is researcher bias an issue?

Research bias is a pervasive issue that researchers must diligently consider and address. It can significantly impact the credibility of findings. Here, we break down the ramifications of bias into two key areas.

How bias affects validity

Research validity refers to the accuracy of the study findings, or the coherence between the researcher’s findings and the participants’ actual experiences. When bias sneaks into a study, it can distort findings and move them further away from the realities that were shared by the research participants. For example, if a researcher's personal beliefs influence their interpretation of data, the resulting conclusions may not reflect what the data show or what participants experienced.

The transferability problem

Transferability is the extent to which your study's findings can be applied beyond the specific context or sample studied. Applying knowledge from one context to a different context is how we can progress and make informed decisions. In quantitative research, the generalizability of a study is a key component that shapes the potential impact of the findings. In qualitative research, all data and knowledge that is produced is understood to be embedded within a particular context, so the notion of generalizability takes on a slightly different meaning. Rather than assuming that the study participants are statistically representative of the entire population, qualitative researchers can reflect on which aspects of their research context bear the most weight on their findings and how these findings may be transferable to other contexts that share key similarities.

How does bias affect research?

Research bias, if not identified and mitigated, can significantly impact research outcomes. The ripple effects of research bias extend beyond individual studies, impacting the body of knowledge in a field and influencing policy and practice. Here, we delve into three specific ways bias can affect research.

Distortion of research results

Bias can lead to a distortion of your study's findings. For instance, confirmation bias can cause a researcher to focus on data that supports their interpretation while disregarding data that contradicts it. This can skew the results and create a misleading picture of the phenomenon under study.

Undermining scientific progress

When research is influenced by bias, it not only misrepresents participants’ realities but can also impede scientific progress. Biased studies can lead researchers down the wrong path, resulting in wasted resources and efforts. Moreover, it could contribute to a body of literature that is skewed or inaccurate, misleading future research and theories.

Influencing policy and practice based on flawed findings

Research often informs policy and practice. If the research is biased, it can lead to the creation of policies or practices that are ineffective or even harmful. For example, a study with selection bias might conclude that a certain intervention is effective, leading to its broad implementation. However, if the transferability of the study's findings was not carefully considered, it may be risky to assume that the intervention will work as well in different populations, which could lead to ineffective or inequitable outcomes.

While it's almost impossible to eliminate bias in research entirely, it's crucial to mitigate its impact as much as possible. By employing thoughtful strategies at every stage of research, we can strive towards rigor and transparency, enhancing the quality of our findings. This section will delve into specific strategies for avoiding bias.

How do you know if your research is biased?

Determining whether your research is biased involves a careful review of your research design, data collection, analysis, and interpretation. It might require you to reflect critically on your own biases and expectations and how these might have influenced your research. External peer reviews can also be instrumental in spotting potential bias.

Strategies to mitigate bias

Minimizing bias involves careful planning and execution at all stages of a research study. Strategies could include formulating clear, unbiased research questions, ensuring that your sample meaningfully represents the research problem you are studying, crafting unbiased data collection instruments, and employing systematic data analysis techniques. Transparency and reflexivity throughout the process can also help minimize bias.

Mitigating bias in data collection

To mitigate bias in data collection, ensure your questions are clear, neutral, and not leading. Triangulation, or using multiple methods or data sources, can also help to reduce bias and increase the credibility of your findings.

Mitigating bias in data analysis

During data analysis, maintaining a high level of rigor is crucial. This might involve using systematic coding schemes in qualitative research or appropriate statistical tests in quantitative research. Regularly questioning your interpretations and considering alternative explanations can help reduce bias. Peer debriefing, where you discuss your analysis and interpretations with colleagues, can also be a valuable strategy.

By using these strategies, researchers can significantly reduce the impact of bias on their research, enhancing the quality and credibility of their findings and contributing to a more robust and meaningful body of knowledge.

Impact of cultural bias in research

Cultural bias is the tendency to interpret and judge phenomena by standards inherent to one's own culture. Given the increasingly multicultural and global nature of research, understanding and addressing cultural bias is paramount. This section will explore the concept of cultural bias, its impacts on research, and strategies to mitigate it.

What is cultural bias in research?

Cultural bias refers to the potential for a researcher's cultural background, experiences, and values to influence the research process and findings. This can occur consciously or unconsciously and can lead to misinterpretation of data, unfair representation of cultures, and biased conclusions.

How does cultural bias infiltrate research?

Cultural bias can infiltrate research at various stages. It can affect the framing of research questions, the design of the study, the methods of data collection, and the interpretation of results. For instance, a researcher might unintentionally design a study that does not consider the cultural context of the participants, leading to a biased understanding of the phenomenon being studied.

Implications of cultural bias

The implications of cultural bias are profound. Cultural bias can skew your findings, limit the transferability of results, and contribute to cultural misunderstandings and stereotypes. This can ultimately lead to inaccurate or ethnocentric conclusions, further perpetuating cultural bias and inequities.

As a result, many social science fields like sociology and anthropology have been critiqued for cultural biases in research. Some of the earliest research inquiries in anthropology, for example, have had the potential to reduce entire cultures to simplistic stereotypes when compared to mainstream norms. A contemporary researcher respecting ethical and cultural boundaries, on the other hand, should seek to properly place their understanding of social and cultural practices in sufficient context without inappropriately characterizing them.

Strategies to mitigate cultural bias

Mitigating cultural bias requires a concerted effort throughout the research study. These efforts could include educating oneself about other cultures, being aware of one's own cultural biases, incorporating culturally diverse perspectives into the research process, and being sensitive to and respectful of cultural differences. It might also involve including team members with diverse cultural backgrounds or seeking external cultural consultants to challenge assumptions and provide alternative perspectives.

By acknowledging and addressing cultural bias, researchers can contribute to more culturally competent, equitable, and valid research. This not only enriches the scientific body of knowledge but also promotes cultural understanding and respect.


Keep in mind that bias is a force to be mitigated, not a phenomenon that can be eliminated altogether, and the subjectivities of each person are what make our world so complex and interesting. As things are continuously changing and adapting, research knowledge is also continuously being updated as we further develop our understanding of the world around us.



- Volume 17, Issue 4

- Bias in research


Joanna Smith 1, Helen Noble 2

1 School of Human and Health Sciences, University of Huddersfield, Huddersfield, UK

2 School of Nursing and Midwifery, Queen's University Belfast, Belfast, UK

Correspondence to: Dr Joanna Smith, School of Human and Health Sciences, University of Huddersfield, Huddersfield HD1 3DH, UK; j.e.smith{at}hud.ac.uk

https://doi.org/10.1136/eb-2014-101946


The aim of this article is to outline types of ‘bias’ across research designs, and consider strategies to minimise bias. Evidence-based nursing, defined as the “process by which evidence, nursing theory, and clinical expertise are critically evaluated and considered, in conjunction with patient involvement, to provide the delivery of optimum nursing care,” 1 is central to the continued development of the nursing profession. Implementing evidence into practice requires nurses to critically evaluate research, in particular assessing the rigour with which methods were undertaken and factors that may have biased findings.

What is bias in relation to research and why is understanding bias important?

Although different study designs have specific methodological challenges and constraints, bias can occur at each stage of the research process (table 1). In quantitative research, validity and reliability are assessed using statistical tests that estimate the size of error in samples and calculate the significance of findings (typically p values or CIs). The tests and measures used to establish the validity and reliability of quantitative research cannot be applied to qualitative research. However, in the broadest context, these terms are applicable, with validity referring to the integrity and application of the methods and the precision with which the findings accurately reflect the data, and reliability referring to the consistency within the analytical processes. 4


Types of research bias

How is bias minimised when undertaking research?

Bias exists in all study designs, and although researchers should attempt to minimise bias, outlining potential sources of bias enables greater critical evaluation of the research findings and conclusions. Researchers bring to each study their experiences, ideas, prejudices and personal philosophies, which, if accounted for in advance of the study, enhance the transparency of possible research bias. Clearly articulating the rationale for, and choosing, an appropriate research design to meet the study aims can reduce common pitfalls in relation to bias. Ethics committees have an important role in considering whether the research design and methodological approaches are biased, and suitable to address the problem being explored. Feedback from peers, funding bodies and ethics committees is an essential part of designing research studies, and often provides valuable practical guidance in developing robust research.

In quantitative studies, selection bias is often reduced by the random selection of participants and, in the case of clinical trials, by randomisation of participants into comparison groups. However, not accounting for participants who withdraw from the study or are lost to follow-up can result in sample bias or change the characteristics of participants in comparison groups. 7 In qualitative research, purposeful sampling has advantages over convenience sampling in that bias is reduced because the sample is constantly refined to meet the study aims. Premature closure of the selection of participants before analysis is complete can threaten the validity of a qualitative study. This can be overcome by continuing to recruit new participants into the study during data analysis until no new information emerges, known as data saturation. 8
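The effect of random selection can be illustrated with a small simulation. The sketch below is a hypothetical example, not drawn from the cited studies. It assumes a population in which an outcome (weekly exercise hours) rises with an unobserved "motivation" score, and it compares a simple random sample with a convenience sample that reaches the most motivated people first. It also shows one simple way to randomise participants into two comparison groups. All names, distributions and numbers are illustrative assumptions.

```python
import random
import statistics

# Hypothetical population: weekly exercise hours rise with an unobserved
# "motivation" score between 0 and 1. All parameters are invented.
def make_population(size, seed=0):
    rng = random.Random(seed)
    people = []
    for _ in range(size):
        motivation = rng.uniform(0, 1)
        hours = 2 + 4 * motivation + rng.gauss(0, 0.5)
        people.append({"motivation": motivation, "hours": hours})
    return people

def random_sample(people, n, seed=1):
    # Every member of the population has the same chance of selection.
    return random.Random(seed).sample(people, n)

def convenience_sample(people, n):
    # Assumed recruitment channel (e.g. an advert in a gym) that reaches
    # the most motivated people first.
    return sorted(people, key=lambda p: p["motivation"], reverse=True)[:n]

def mean_hours(group):
    return statistics.mean(p["hours"] for p in group)

def randomise(participants, seed=2):
    # Shuffle, then split in half, so group membership cannot depend on
    # any participant characteristic.
    shuffled = participants[:]
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

population = make_population(10_000)
print(f"population mean:         {mean_hours(population):.2f}")
print(f"random sample mean:      {mean_hours(random_sample(population, 200)):.2f}")
print(f"convenience sample mean: {mean_hours(convenience_sample(population, 200)):.2f}")

treatment, control = randomise(random_sample(population, 200))
```

The random sample's mean should land close to the population mean because no subgroup is favoured. The convenience sample overstates the mean because it over-recruits highly motivated people.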

In quantitative studies, having a well-designed research protocol that explicitly outlines data collection and analysis can assist in reducing bias. Feasibility studies are often undertaken to refine protocols and procedures. Bias can be reduced by maximising follow-up and, where appropriate in randomised controlled trials, by basing analysis on the intention-to-treat principle. Under this principle, participants are analysed in the groups to which they were randomised, so the analysis assesses clinical effectiveness even though not everyone complies with treatment and the treatment people receive may be changed according to how they respond.

Qualitative research has been criticised for lacking transparency in relation to the analytical processes employed. 4 Qualitative researchers must demonstrate rigour, associated with openness, relevance to practice and congruence of the methodological approach. Although other researchers may interpret the data differently, appreciating and understanding how the themes were developed is an essential part of demonstrating the robustness of the findings. Strategies for reducing bias include respondent validation, constant comparisons across participant accounts, representing deviant cases and outliers, prolonged involvement or persistent observation of participants, independent analysis of the data by other researchers and triangulation. 4
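As a rough illustration of the intention-to-treat principle, the sketch below compares it with a per-protocol analysis, which keeps only participants who complied. It uses a handful of invented trial records. The data, the field names and the `arm_means` helper are hypothetical. In this made-up example, participants who did not take the treatment as planned also had poorer outcomes. Excluding them therefore makes the treatment look more effective than the comparison between randomised groups suggests.

```python
from statistics import mean

# Hypothetical trial records: the arm each participant was randomised to,
# whether they actually followed the treatment plan, and an outcome score
# (higher = better). The numbers are invented for illustration.
trial = [
    {"arm": "treatment", "complied": True,  "outcome": 8},
    {"arm": "treatment", "complied": True,  "outcome": 7},
    {"arm": "treatment", "complied": False, "outcome": 3},
    {"arm": "treatment", "complied": False, "outcome": 4},
    {"arm": "control",   "complied": True,  "outcome": 5},
    {"arm": "control",   "complied": True,  "outcome": 4},
    {"arm": "control",   "complied": True,  "outcome": 5},
    {"arm": "control",   "complied": False, "outcome": 4},
]

def arm_means(records, per_protocol=False):
    """Mean outcome per arm.

    Intention-to-treat (default) keeps everyone in the arm they were
    randomised to; per-protocol drops anyone who did not comply.
    """
    result = {}
    for arm in ("treatment", "control"):
        scores = [r["outcome"] for r in records
                  if r["arm"] == arm and (r["complied"] or not per_protocol)]
        result[arm] = mean(scores)
    return result

itt = arm_means(trial)
pp = arm_means(trial, per_protocol=True)
print("intention-to-treat difference:", itt["treatment"] - itt["control"])
print("per-protocol difference:      ", pp["treatment"] - pp["control"])
```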

In summary, minimising bias is a key consideration when designing and undertaking research. Researchers have an ethical duty to outline the limitations of studies and account for potential sources of bias. This will enable health professionals and policymakers to evaluate and scrutinise study findings, and consider these when applying findings to practice or policy.

- Wakefield AJ, Anthony A

- The Lancet. Retraction: ileal-lymphoid-nodular hyperplasia, non-specific colitis, and pervasive developmental disorder in children. Lancet 2010;375:445.

- Easterbrook PJ, Berlin JA, Gopalan R

- Petticrew M, Thomson H

- Francis J, Johnston M, Robertson C

Competing interests None.


Confronting Bias

Bias in research.


Additional Reading

- Smith, J., & Noble, H. (2014). Bias in research. Evidence-Based Nursing, 17, 100-101.

- Simundić, A. (2013). Bias in research. Biochemia Medica, 23(1), 12. doi:10.11613/BM.2013.003

- James, J. (2015). Big Pharma entanglement with biomedical science. In The Health of Populations: Beyond Medicine. Elsevier Science & Technology.

- How to limit bias in experimental research. (2015). In H. Simpson & P. Augat (Eds.), Experimental Research Methods in Orthopedics and Trauma. Thieme Medical Publishers.

- Podsakoff, P. M., MacKenzie, S. B., & Podsakoff, N. P. (2012). Sources of method bias in social science research and recommendations on how to control it. Annual Review of Psychology, 63(1), 539-569. doi:10.1146/annurev-psych-120710-100452

Words to Know

- Expectancy Effect -- A particular type of experimenter effect in which the expectations of the experimenter as to the likely outcome of the experiment act as a self-fulfilling prophecy, biasing the results in the direction of the expectation.

- Experimenter Effect -- A biasing effect on the results of an experiment caused by expectations or preconceptions on the part of the experimenter. Also called experimenter bias.

- Response Bias -- In psychometrics, any systematic tendency of a respondent to choose a particular response category in a multiple-choice questionnaire for an extraneous reason, unrelated to the variable that the response is supposed to indicate but related to the content or meaning of the question.

Definitions from Colman, A. (2015). A Dictionary of Psychology. Oxford University Press.

Understanding research bias is important for several reasons: first, bias exists in all research, across research designs, and is difficult to eliminate; second, bias can occur at each stage of the research process; third, bias impacts on the validity and reliability of study findings, and misinterpretation of data can have important consequences for practice. The controversial study that suggested a link between the measles-mumps-rubella vaccine and autism in children 2 resulted in a rare retraction of the published study because of media reports that highlighted significant bias in the research process. 3 Bias occurred on several levels: the process of selecting participants was misrepresented; the sample size was too small to infer any firm conclusion from the data analysis; and the results were overstated, suggesting caution against widespread vaccination and an urgent need for further research. However, in the time between the original publication and later research refuting the original findings, the uptake of measles-mumps-rubella vaccine in Britain declined, resulting in a 25-fold increase in measles in the 10-year period following the original publication.

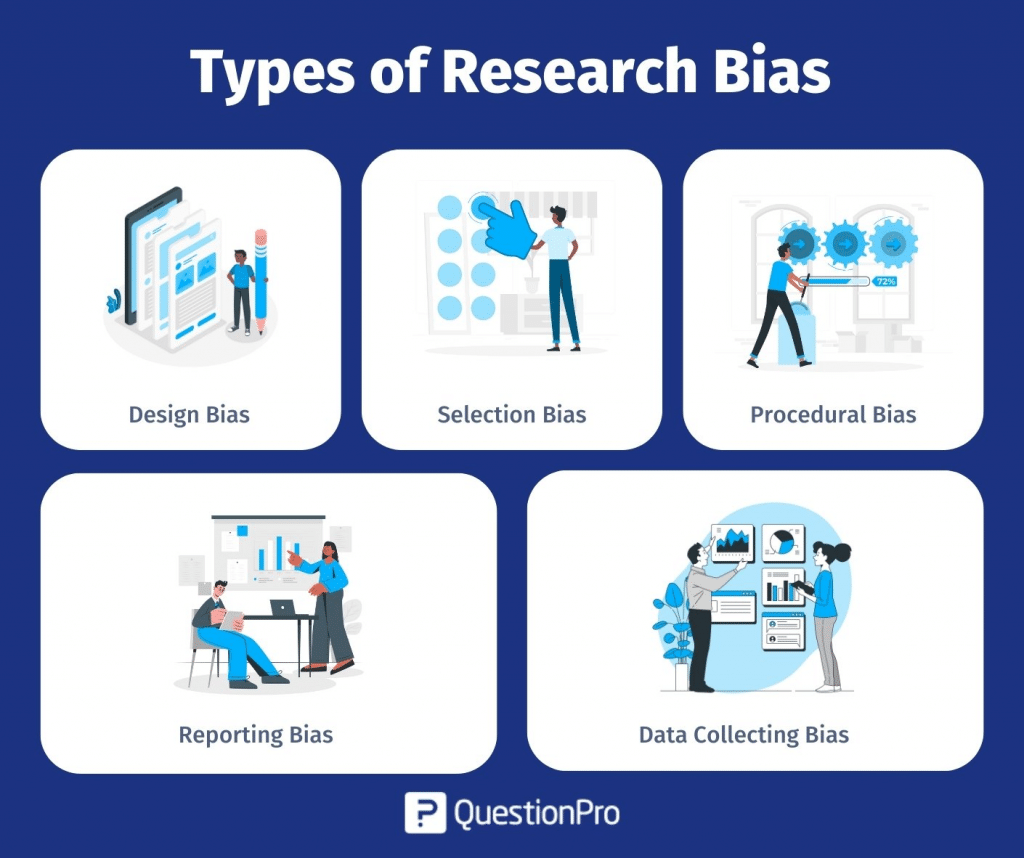

Design Bias

Researchers may engage in poorly designed research, which could increase the likelihood of bias. Poor research design may occur when the research questions and aims are not aligned with the research methods, or when researchers choose a biased research question.

Selection or Participant Bias

Research that relies on recruiting or selecting participants may result in selection or participant bias in a number of ways. For instance, participant recruitment might unintentionally target or exclude a specific population, or researchers may not appropriately account for participant withdrawal.
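The withdrawal problem can be sketched with a hypothetical weight-loss programme. The sketch assumes that participants who are not losing weight are more likely to drop out. It then compares the mean change for everyone enrolled with the mean for completers only, showing how analysing completers alone can make the programme look more successful than it is. The distribution and dropout probabilities are invented for illustration.

```python
import random
import statistics

# Hypothetical programme: each participant's true change in weight (kg);
# negative values mean weight was lost. Simulated, not real data.
rng = random.Random(42)
true_changes = [rng.gauss(-1.0, 3.0) for _ in range(1_000)]

def stays_in_study(change_kg, rng):
    # Assumption: people who are losing weight usually stay (90%),
    # while those who are not are more likely to withdraw (only 40% stay).
    p_stay = 0.9 if change_kg < 0 else 0.4
    return rng.random() < p_stay

completers = [c for c in true_changes if stays_in_study(c, rng)]

print(f"everyone enrolled: {statistics.mean(true_changes):.2f} kg")
print(f"completers only:   {statistics.mean(completers):.2f} kg")
print(f"retained {len(completers)} of {len(true_changes)} participants")
```

Because dropout depends on the outcome itself, the completers are no longer representative of everyone who enrolled, and the average weight loss among them is exaggerated.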

Analysis Bias

Researchers may unknowingly bias their results during data analysis by looking for or focusing on results that support their hypotheses or personal beliefs.

Publication Bias

Not all research articles are published. Publication or reporting bias occurs when publishers are more likely to publish articles showing positive results or statistically significant findings. Research showing negative results may be equally important to the contribution of knowledge in the field but may be less likely to be published.
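A short simulation can show why publishing only significant findings distorts the literature. The sketch assumes many identical small studies of the same true effect. It then applies a hypothetical rule under which only estimates that clear a conventional z > 1.96 threshold are published. The effect size, study size and threshold are illustrative assumptions rather than empirical values.

```python
import math
import random
import statistics

TRUE_EFFECT = 0.2   # assumed true standardised effect
N_PER_STUDY = 30    # assumed number of participants in each study
N_STUDIES = 2_000

rng = random.Random(7)
standard_error = 1 / math.sqrt(N_PER_STUDY)

all_estimates = []
published = []
for _ in range(N_STUDIES):
    # Each study's estimate scatters around the true effect by sampling error.
    estimate = rng.gauss(TRUE_EFFECT, standard_error)
    all_estimates.append(estimate)
    # Assumed publication rule: only "statistically significant" positive
    # results make it into print.
    if estimate / standard_error > 1.96:
        published.append(estimate)

print(f"true effect:            {TRUE_EFFECT:.2f}")
print(f"mean of all studies:    {statistics.mean(all_estimates):.2f}")
print(f"mean of published only: {statistics.mean(published):.2f}")
print(f"share published:        {len(published) / N_STUDIES:.0%}")
```

Averaging all studies recovers something close to the true effect. The published subset overstates it, because the studies that cleared the threshold are mostly the ones that happened to overestimate the effect.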

Conflict of Interest

Bias in research may occur when researchers have a conflict of interest: a personal interest that conflicts with their professional obligations. Researchers should always be transparent in disclosing how their work was funded and what conflicts of interest, if any, exist.

This content was inspired and informed by the following resources: Smith J, Noble H. Bias in research. Evidence-Based Nursing 2014;17:100-101; Research Bias; Academic Integrity: Avoiding Plagiarism and Understanding Research Ethics: Research Ethics (University of Pittsburgh Libraries).


- Last Updated: May 21, 2024 3:25 PM

- URL: https://uark.libguides.com/bias


Research Bias 101: What You Need To Know

By: Derek Jansen (MBA) | Expert Reviewed By: Dr Eunice Rautenbach | September 2022

If you’re new to academic research, research bias (also sometimes called researcher bias) is one of the many things you need to understand to avoid compromising your study. If you’re not careful, research bias can ruin the credibility of your study.

In this post, we’ll unpack the thorny topic of research bias. We’ll explain what it is, look at some common types of research bias and share some tips to help you minimise the potential sources of bias in your research.

Overview: Research Bias 101

- What is research bias (or researcher bias)?

- Bias #1 – Selection bias

- Bias #2 – Analysis bias

- Bias #3 – Procedural (admin) bias

So, what is research bias?

Well, simply put, research bias is when the researcher – that’s you – intentionally or unintentionally skews the process of a systematic inquiry, which then of course skews the outcomes of the study. In other words, research bias is what happens when you affect the results of your research by influencing how you arrive at them.

For example, if you planned to research the effects of remote working arrangements across all levels of an organisation, but your sample consisted mostly of management-level respondents, you’d run into a form of research bias. In this case, excluding input from lower-level staff (in other words, not getting input from all levels of staff) means that the results of the study would be ‘biased’ in favour of a certain perspective – that of management.
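Here is a minimal Python sketch of how far a skewed sample can move a result. It uses invented ratings of remote working, on a scale of 1 to 10, from a hypothetical organisation; the data and the `mean_rating` helper are illustrative assumptions. It compares the average across all staff levels with the average from a management-only sample.

```python
from statistics import fmean

# Hypothetical ratings of remote working (1 = very negative, 10 = very positive).
population = [
    ("management", 8), ("management", 9), ("management", 7),
    ("staff", 4), ("staff", 5), ("staff", 3),
    ("staff", 6), ("staff", 4), ("staff", 5),
]


def mean_rating(respondents, levels=None):
    """Mean rating, optionally restricted to respondents at the given staff levels."""
    return fmean(score for level, score in respondents
                 if levels is None or level in levels)


print(f"All levels:      {mean_rating(population):.2f}")
print(f"Management only: {mean_rating(population, {'management'}):.2f}")
```

With these made-up numbers, the management-only sample reports an average of 8.00 against 5.67 for the whole organisation. A study built on that sample would overstate enthusiasm for remote work across the organisation.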

Of course, if your research aims and research questions were only interested in the perspectives of managers, this sampling approach wouldn’t be a problem – but that’s not the case here, as there’s a misalignment between the research aims and the sample.

Now, it’s important to remember that research bias isn’t always deliberate or intended. Quite often, it’s just the result of a poorly designed study, or practical challenges in terms of getting a well-rounded, suitable sample. While perfect objectivity is the ideal, some level of bias is generally unavoidable when you’re undertaking a study. That said, as a savvy researcher, it’s your job to reduce potential sources of research bias as much as possible.

To minimise potential bias, you first need to know what to look for. So, next up, we’ll unpack three common types of research bias we see at Grad Coach when reviewing students’ projects. These include selection bias, analysis bias, and procedural bias. Keep in mind that there are many different forms of bias that can creep into your research, so don’t take this as a comprehensive list – it’s just a useful starting point.

Bias #1 – Selection Bias

First up, we have selection bias. The scenario we looked at earlier (about only surveying management as opposed to all levels of employees) is a prime example of this type of research bias. In other words, selection bias occurs when your study’s design automatically excludes a relevant group from the research process and, therefore, negatively impacts the quality of the results.

With selection bias, the results of your study will be biased towards the group that it includes or favours, meaning that you’re likely to arrive at distorted results. For example, research into government policies that only includes participants who voted for a specific party is going to produce skewed results, as the views of those who voted for other parties will be excluded.

Selection bias commonly occurs in quantitative research, as the sampling strategy adopted can have a major impact on the statistical results. That said, selection bias also comes up in qualitative research, as there’s still plenty of room for skewed samples. So, it’s important to pay close attention to the makeup of your sample and make sure that you adopt a sampling strategy that aligns with your research aims. Of course, you’ll seldom achieve a perfect sample, and that’s okay. But you need to be aware of how your sample may be skewed and factor this into your thinking when you analyse the resultant data.


Bias #2 – Analysis Bias

Next up, we have analysis bias. Analysis bias occurs when the analysis itself emphasises or discounts certain data points, so as to favour a particular result (often the researcher’s own expected result or hypothesis). In other words, analysis bias happens when you prioritise the presentation of data that supports a certain idea or hypothesis, rather than presenting all the data indiscriminately.

For example, if your study was looking into consumer perceptions of a specific product, you might present more analysis of data that reflects positive sentiment toward the product, and give less real estate to the analysis that reflects negative sentiment. In other words, you’d cherry-pick the data that suits your desired outcomes and as a result, you’d create a bias in terms of the information conveyed by the study.
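The cherry-picking described above can be sketched in a few lines of Python. The sentiment scores are invented for illustration, and `summarise` is a hypothetical helper. One summary uses every response, while the other quietly drops the negative ones before averaging.

```python
from statistics import fmean

# Hypothetical review sentiment: -2 (very negative) to +2 (very positive).
scores = [2, 1, 1, -1, -2, 0, 1, -1, 2, -2]


def summarise(values):
    """Report the size, mean, and share of negative responses for a set of scores."""
    return {
        "n": len(values),
        "mean": fmean(values),
        "share_negative": sum(v < 0 for v in values) / len(values),
    }


full_report = summarise(scores)
cherry_picked = summarise([s for s in scores if s >= 0])  # negatives silently discarded

print(full_report)
print(cherry_picked)
```

The full report shows a near-neutral mean (0.1) with 40% negative responses. The cherry-picked version suggests strong approval with no criticism at all. Reporting the full summary, including how many responses were excluded and why, makes this kind of skew visible.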

Although this kind of bias is common in quantitative research, it can just as easily occur in qualitative studies, given the amount of interpretive power the researcher has. This may not be intentional or even noticed by the researcher, given the inherent subjectivity in qualitative research. As humans, we naturally search for and interpret information in a way that confirms or supports our prior beliefs or values (in psychology, this is called “confirmation bias”). So, don’t make the mistake of thinking that analysis bias is always intentional and you don’t need to worry about it because you’re an honest researcher – it can creep up on anyone.

To reduce the risk of analysis bias, a good starting point is to determine your data analysis strategy in as much detail as possible, before you collect your data. In other words, decide, in advance, how you’ll prepare the data and which analysis method you’ll use, and be aware of how different analysis methods can favour different types of data. Also, take the time to reflect on your own preconceived notions and expectations regarding the analysis outcomes (in other words, what do you expect to find in the data), so that you’re fully aware of the potential influence you may have on the analysis – and therefore, hopefully, can minimise it.

Bias #3 – Procedural Bias

Last but definitely not least, we have procedural bias, which is also sometimes referred to as administration bias. Procedural bias is easy to overlook, so it’s important to understand what it is and how to avoid it. This type of bias occurs when the administration of the study, especially the data collection aspect, has an impact on either who responds or how they respond.

A practical example of procedural bias would be when participants in a study are required to provide information under some form of constraint. For example, participants might be given insufficient time to complete a survey, resulting in incomplete or hastily filled-out forms that don’t necessarily reflect how they really feel. This can happen easily if you innocently ask your participants to fill out a survey during their lunch break.

Another form of procedural bias can happen when you improperly incentivise participation in a study. For example, offering a reward for completing a survey or interview might incline participants to provide false or inaccurate information just to get through the process as fast as possible and collect their reward. It could also potentially attract a particular type of respondent (a freebie seeker), resulting in a skewed sample that doesn’t really reflect your demographic of interest.

The format of your data collection method can also potentially contribute to procedural bias. If, for example, you decide to host your survey or interviews online, this could unintentionally exclude people who are not particularly tech-savvy, don’t have a suitable device or just don’t have a reliable internet connection. On the flip side, some people might find in-person interviews a bit intimidating (compared to online ones, at least), or they might find the physical environment in which they’re interviewed to be uncomfortable or awkward (maybe the boss is peering into the meeting room, for example). Either way, these factors all result in less useful data.

Although procedural bias is more common in qualitative research, it can come up in any form of fieldwork where you’re actively collecting data from study participants. So, it’s important to consider how your data is being collected and how this might impact respondents. Simply put, you need to take the respondent’s viewpoint and think about the challenges they might face, no matter how small or trivial these might seem. It’s also a good idea to have an informal discussion with a handful of potential respondents before you start collecting data and ask for their input on your proposed plan upfront.

Let’s Recap

OK, so let’s do a quick recap. Research bias refers to any instance where the researcher, or the research design, negatively influences the quality of a study’s results, whether intentionally or not.

The three common types of research bias we looked at are:

- Selection bias – where a skewed sample leads to skewed results

- Analysis bias – where the analysis method and/or approach leads to biased results – and,

- Procedural bias – where the administration of the study, especially the data collection aspect, has an impact on who responds and how they respond.

As I mentioned, there are many other forms of research bias, but we can only cover a handful here. So, be sure to familiarise yourself with as many potential sources of bias as possible to minimise the risk of research bias in your study.

This post was based on one of our popular Research Bootcamps.


8 Types of Research Bias and How to Avoid Them?

Appinio Research · 18.10.2023 · 39min read

Curious about how to ensure the integrity of your research? Ever wondered how research bias can impact your findings? How might it affect your data-driven decisions?

Join us on a journey through the intricate landscape of unbiased research as we delve deep into strategies and real-world examples to guide you toward more reliable insights.

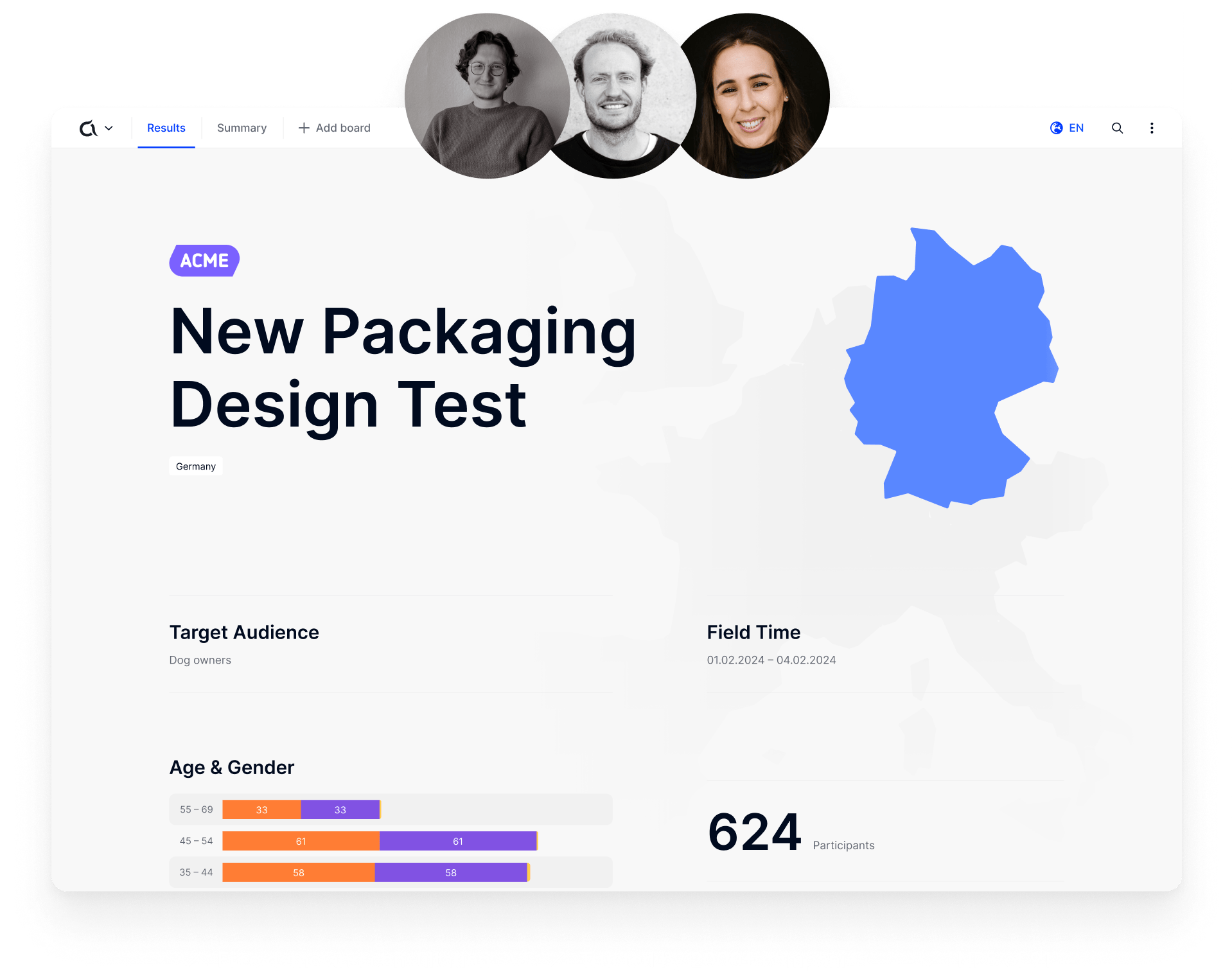

What is Bias in Research?

Research bias, often simply referred to as bias, is a systematic error or deviation from the true results or inferences in research. It occurs when the design, conduct, or interpretation of a study systematically skews the findings in a particular direction, leading to inaccurate or misleading results. Bias can manifest in various forms and at different stages of the research process, and it can compromise the validity and reliability of research outcomes.

Key Aspects of Research Bias

- Systematic Error: Bias is not a random occurrence but a systematic error that consistently influences research outcomes.

- Influence on Results: Bias can lead to overestimating or underestimating the effects, associations, or relationships being studied.

- Unintentional or Intentional: Bias can be unintentional, stemming from flaws in study design, data collection, or analysis. In some cases, it can also be introduced intentionally, leading to deliberate distortion of results.

- Impact on Decision-Making: Research bias can have significant consequences, affecting decisions in fields ranging from healthcare and policy to marketing and academia.
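A short Python simulation can illustrate the difference between systematic and random error. Everything here is an assumption for illustration:

- a hypothetical true value of 70.0;
- measurement noise with a standard deviation of 2.0;
- a hypothetical instrument that consistently reads 1.5 units too high.

Collecting more measurements shrinks the random error, but the systematic offset does not go away.

```python
import random
from statistics import fmean

TRUE_VALUE = 70.0  # hypothetical true quantity, e.g. an average weight in kg


def measure(n, bias=0.0, noise_sd=2.0, seed=0):
    """Simulate n measurements with random noise plus an optional constant (systematic) bias."""
    rng = random.Random(seed)
    return [TRUE_VALUE + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]


for n in (10, 100, 10_000):
    unbiased_error = fmean(measure(n)) - TRUE_VALUE
    biased_error = fmean(measure(n, bias=1.5)) - TRUE_VALUE
    print(f"n={n:>6}: random-only error {unbiased_error:+.3f}, "
          f"with systematic bias {biased_error:+.3f}")
```

Both runs share the same seed, so their random noise is identical. The gap between the two columns is therefore essentially the 1.5-unit bias at every sample size. As n grows, the first column approaches zero while the second stays near 1.5. This is why larger samples alone cannot fix a biased design.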

Understanding and recognizing the various types and sources of bias is crucial for researchers to minimize its impact and produce credible, objective, and actionable research findings.

Importance of Avoiding Research Bias

Avoiding research bias is paramount for several compelling reasons, as it directly affects the quality and integrity of research outcomes. Here's why researchers and decision-makers should prioritize bias mitigation:

- Credibility and Trustworthiness: Research bias undermines the credibility and trustworthiness of research findings. Biased results can erode public trust, damage an organization's reputation, and hinder the acceptance of research in the scientific community.

- Informed Decision-Making: Research serves as the foundation for informed decision-making in various fields. Bias can produce erroneous conclusions, which may in turn lead to misguided policies, ineffective treatments, or poor business strategies.

- Resource Allocation: Bias can result in the misallocation of valuable resources. When resources are allocated based on biased research, they may not effectively address the intended issues or challenges.