Data Science Process: 7 Steps explained with a Comprehensive Case Study


Are you feeling overwhelmed by the ever-growing employment and entrepreneurship opportunities for data scientists, and do you want to dive into this field to make your professional career a success?

Or are you more curious about what a data scientist actually does, and how a data scientist’s day-to-day activities differ from yours?

Maybe, you just want an in-depth introduction and overview of the complete data science process to help you get started with your first-ever data science project…

Well, whatever your reason for exploring the data science process may be, you have already taken the first step toward digging into the gold mine of one of the most sought-after technologies!

Data is often referred to as the “Oil of the 21st Century”, and Data Science, which refines it, has a profound impact on how a business model develops in light of customer satisfaction and purchasing habits.

Harvard Business Review called the Data Scientist the “Sexiest Job of the 21st Century”, mainly because data scientists empower businesses to adjust to an ever-changing industrial landscape.

Contrary to popular belief, Data Scientists are neither magicians nor do they possess any supernatural powers. However, they do possess the power of Intelligent Analysis on large streams of data, which ultimately gives them a notable edge over their contemporaries.

With every business employing a slightly different operating strategy even in the same niche, different data science models should exist for each business. Right?

No! That’s not how it works…

A data scientist is more concerned with the Data itself than with its Nature. More importantly, the step-by-step process of analyzing the data remains almost the same, irrespective of the nature of the data.

This article explains, step by step, each stage a data scientist needs to go through to interpret even the most complex and unique data sets.

Besides, a Case Study is provided for a Real-Estate Agency in which a data scientist analyzes the customers’ purchasing history and highlights some actionable insights to incorporate into the business model to generate more leads and revenue.

Before we get into the nitty-gritty details of the data science process, you need to know what Data Science is, why the world needs it, and which frameworks/tools you need to learn to become a proficient data scientist.

Without any further ado, let’s get started…


What is Data Science

In its simplest form, data science is used to extract meaningful and relevant information from the raw data to come up with some actionable insights.

At its core, a data scientist always strives to find hidden patterns between the seemingly independent variables of the raw data.

For example, can you think of any relation between paying bills on time and road accidents? Without data, you cannot even imagine that such a relationship would exist. However, using the data from an Insurance company, it was observed that the people who pay bills on time are less prone to accidents.

Interesting… Right?

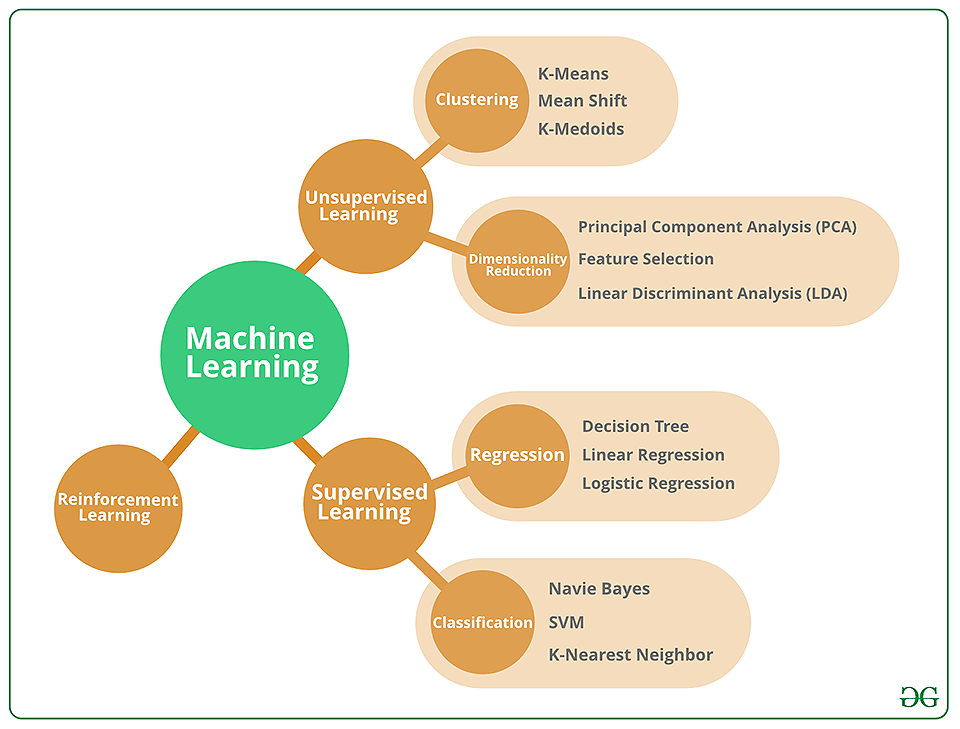

Data science applies Artificial Intelligence algorithms, especially Machine Learning and Deep Learning, to different forms of data, e.g., text, images, video, and audio. It helps in forecasting the future from the past using a data-driven approach.

From the business perspective, this practice uncovers the secret patterns within the data which are not visible to the non-data-scientist eye.

Such meaningful information is then used to make critical decisions that can translate into tangible value for your business in terms of better customer awareness, focused efforts, and higher revenue.

Let’s suppose that you are selling hygiene products to men/women in three states (California, Texas, Florida) and recording your customers’ data as well. Your data scientist tells you that the conversion rate for women is 70% as compared to only 30% for men. Moreover, California and Texas drive the major chunk (almost 83%) of your revenue.

So, what steps can you take to maximize your revenue?

In my view, you may want to focus your marketing efforts on women living in either California or Texas!

Without the analysis, you would not have been able to justify such a bold decision to the board members. But now, DATA has Got your BACK…

All in all, data science enables you to make informed and data-driven decisions by discovering the hidden patterns within the structured or unstructured data from the past.

Why Data Science

During the 20th century, most businesses operated on a brick-and-mortar model. Such a business model only catered to the demographics of local consumers and did not reach far-off customers.

Besides, customer data was either not collected at all or only collected for book-keeping purposes. Given the limited scope of customer awareness and reach, such a business model performed pretty well.

However, the advent of the internet brought a whole lot of changes to the business landscape. With the power of e-commerce, a customer sitting anywhere in the world could knock at the virtual door of any business and ask, “How much for your Product X and Product Y?”

Such massive consumer reach pushed businesses to collect relevant information from purchasing customers, as well as through virtual marketing campaigns.

This, in turn, helped businesses expand globally just by looking at the demand for their products across country-wise demographics.

As it turns out, this was not enough for long, because Social Media soon took over, and customers started to spend more time scrolling through social media than using the boring Search Engines.

Out of the blue, a real problem emerged for the businesses in terms of marketing and customer awareness. Previously, directed marketing campaigns were run via e-mail or ad-placement on different websites.

Now, the customer base was scattered across different social media platforms, e.g., Facebook, Twitter, Instagram, LinkedIn, and Pinterest. The next mandatory step for brands was to market themselves on social media as well as develop their social presence.

Since brands only had a limited budget allocated to marketing, they needed to optimize their marketing campaigns in terms of two factors:

- Which Marketing Channels to use?

- Which Customers to target?

To find the solution to this problem, brands had to adopt a data-driven approach since it allowed them to direct their marketing campaigns to the specific customers on the specific marketing channels. After all, no one likes to shoot arrows in the air!

To tap into this emerging market potential, data science stepped in and announced to every business owner, “I will take your unstructured data, process it, analyze it, and then unlock hidden patterns within your data to come up with some actionable insights for you to target Right Customers and generate more revenue.”

Well, who would have rejected this proposal? And, as you know, the rest is history…

To date, data scientists have helped major brands understand their customers from existing data such as age, demographics, purchase history, and income.

The best part is that data science has not been confined to the business world. It is also used to make split-second decisions in self-driving cars.

Moreover, it helps in forecasting using data from ships, satellites, and radars. In some cases, weather data can even be used to predict natural calamities well in advance.

Irrespective of the application, the main steps of the data science process remain almost always the same, and are discussed further…

Data Science Process: A Step-by-Step Approach

If you were to interview every Data Scientist in the world and ask them about the steps that ensure the smooth flow of a data science project, I am pretty sure you would get a unique response from each one of them.

The main reason is that the scope of each data science project is unique in terms of the nature of the available data, its analysis, and the patterns/insights to be extracted from it.

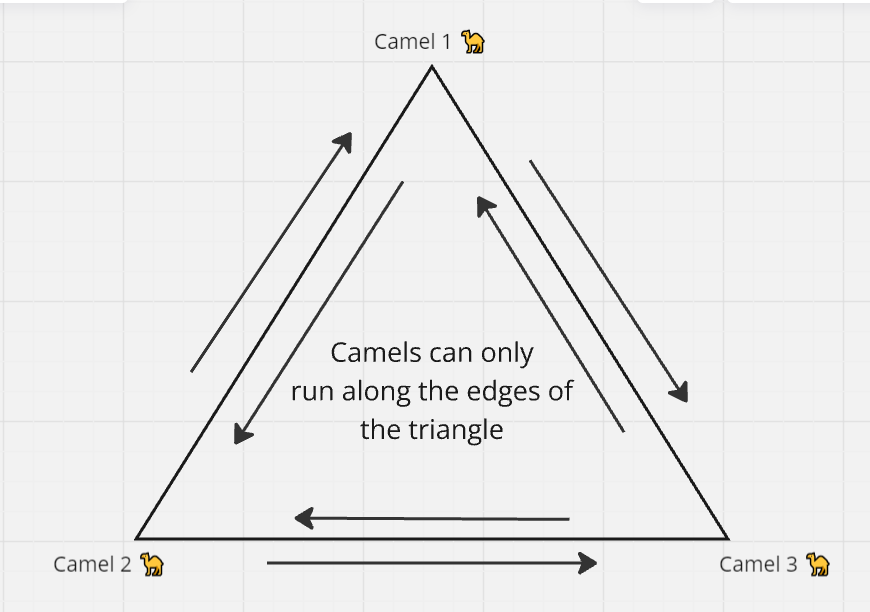

There are, however, 7 steps that remain a constant part of the complete data science process:

- Problem Framing

- Data Acquisition

- Data Preparation

- Model Planning

- Model Building

- Visualization and Communication

- Deployment and Maintenance

These steps are explained further along with a case study of a Real Estate agency looking to optimize its sales funnel by directing its marketing campaigns at customers who are more likely to respond to its offerings.

Step 1: Problem Framing

If you need to travel to New York, you must board the plane which is flying to that specific city. If you get on a random plane, chances are that you will end up somewhere other than New York. On top of that, you will inevitably waste valuable resources such as your time, money, and health.

The same concept forms the foundation of success or failure for any data science project. You may have a million bytes of data, but if you don’t know exactly what to do with it, you are never going to end up anywhere.

In simple words, you need to have a clear, concise, and concrete end-goal in mind before you even start working on any data science project.

And to set an end-goal, you must try to get into the head of your client and gather as much information as possible about the problem at hand.

Most industries use Key Performance Indicators (KPIs) to gauge their performance at a macro level. To leverage this, you may want to come up with an insight that directly affects a major KPI.

In essence, you must frame a business problem and then formulate an initial hypothesis to test. You should start by asking the Right Questions of all the major stakeholders, such as:

- Is there any specific business goal you want to achieve?

- Do you want to evaluate whether your offering is suitable for people with different demographics, age, and gender, etc?

- Is there any specific purchase pattern you have seen among your customers? If yes, would you like to connect all the dots behind such purchase decisions?

- Do you want to know which customers are more likely to convert just by looking at their email response?

Once you start asking such questions, the answers will, at some point, help you finalize the problem statement more clearly.
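For the email-response question above, an initial hypothesis might be “customers who open our emails convert at a higher rate than those who don’t.” A minimal Python sketch of how such a hypothesis could be checked is a two-proportion z-test. The counts below are made up, the function name `two_proportion_z_test` is hypothetical, and the test assumes independent customers and reasonably large groups.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for H0: both groups share the same conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)  # pooled conversion rate under H0
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return z, p_value

# Hypothetical counts: 60 of 200 email openers converted vs. 30 of 200 non-openers
z, p = two_proportion_z_test(conv_a=60, n_a=200, conv_b=30, n_b=200)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference in conversion rates is unlikely to be pure chance, which turns a vague hunch into a problem statement worth pursuing.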

Step 2: Data Acquisition

The next major step is to collect the data relevant to the problem statement you are targeting.

If you want to forecast a general behavior across a large population, you may want to explore the Open-Source or Paid data sets available from various sources such as:

- Online Data Set Repositories

- Web-Servers

- Research Labs

- Social Institutions

- Survey Repositories

- Public Institutions

On the other hand, if you just want to achieve a specific business goal, you should focus on acquiring data from the company’s Customer Relationship Management (CRM) software.

Ideally, you should aim to fetch the relevant data through the Application Programming Interface (API) of the CRM, such as:

- Customer Information

- Sales Information

- Email Open Rate

- Follow-Up Emails Response

- Social Media Ad Engagement and Analysis

- Website Traffic Analytics and Visitor’s Demographics

Essentially, you should try and collect as much data as possible to help you extract meaningful patterns and actionable insights using a more comprehensive approach.
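If the CRM can only export files rather than expose an API, the export can still be loaded programmatically. The sketch below assumes a hypothetical CSV export with made-up column names (`customer_id`, `age`, `income`, `campaign`, `converted`) and uses only Python’s standard `csv` module. Every value arrives as a string, and gaps show up as empty strings, which is exactly what the preparation step has to deal with.

```python
import csv
import io

# Hypothetical CRM export; a real file would be opened with open(path, newline="")
CRM_EXPORT = """customer_id,age,income,campaign,converted
101,34,62000,Email,Yes
102,22,28000,Social Media,No
103,41,,Email,Yes
"""

def load_crm_export(text):
    """Parse CSV text into a list of dicts, one per customer (all values are strings)."""
    return list(csv.DictReader(io.StringIO(text)))

records = load_crm_export(CRM_EXPORT)
print(len(records), records[0]["campaign"])  # 3 Email
```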

Step 3: Data Preparation

Once you have acquired the raw data, the first thing you will come across is the need to prepare the data for further processing.

If the data is already in a highly structured form, consider yourself lucky. Otherwise, you must organize the unstructured data into a specific format that you can later import into your programs, e.g., Comma-Separated Values (CSV), Tab-Separated Values (TSV), or another column-row structure.

After re-conditioning the data, you will need to remove all the underlying problems with the data entries themselves:

- Identify any data categories which don’t add value, and remove them from the data set e.g., the name of the customer and their phone number won’t play a role in most of the cases. If you are selling an item only suitable for the ages of 18 and above, then including the data of teens won’t do any good

- Identify any potential gaps in the data, e.g., whether it is biased towards a specific ethnicity, race, or religion. Also try to find out if any social group is missing from the data, e.g., people of different demographics, locations, age groups, genders, etc.

- Resolve conflicting values in the data set e.g., human age cannot be negative, an email address must contain the ‘@’ symbol, the phone number must include the city/country code

- Make sure each column of the data set follows a specified format, e.g., time of purchase should consistently be in either 12-hour or 24-hour format, age should be stored as a whole number, and phone numbers should not contain any dashes

- Remove the inconsistencies in the data, e.g., fill in the missing values, remove the bogus values, and smooth out the data noise

- Join data from multiple sources in a logical manner such that the format remains the same e.g., you may want to combine the sales data from a Facebook Store, Personal Website, and different affiliate websites

Once you have resolved all the anomalies and unusual patterns in the data set, you need to ensure that the data is formatted in a consistent and homogeneous manner.

During all the data transformation and cleaning, you must comply with the data usage license as well as all the legislations concerning the privacy and protection of personal data.
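Several of the cleaning rules listed above can be expressed as small validation functions. The following Python sketch is illustrative only: the column names, the 18+ age cut-off, and the source names are assumptions, and a real pipeline would usually log rejected rows instead of silently dropping them.

```python
import re

DROP_COLUMNS = {"name"}  # hypothetical: columns judged to add no value
MIN_AGE = 18             # hypothetical: product only suitable for adults

def clean_record(raw, source):
    """Clean one customer record; return None when it should be dropped."""
    rec = {k: v for k, v in raw.items() if k not in DROP_COLUMNS}
    try:
        age = int(rec["age"])
    except ValueError:
        return None                      # non-numeric age cannot be trusted
    if age < 0:                          # conflicting value: age cannot be negative
        return None
    if age < MIN_AGE:                    # outside the product's target audience
        return None
    if "@" not in rec["email"]:          # an email address must contain '@'
        return None
    rec["age"] = age
    rec["phone"] = re.sub(r"\D", "", rec["phone"])  # one format: digits only, no dashes
    rec["source"] = source               # remember which channel the row came from
    return rec

def merge_sources(sources):
    """Combine rows from several sales channels into one consistently formatted list."""
    merged = []
    for source_name, rows in sources.items():
        for raw in rows:
            rec = clean_record(raw, source_name)
            if rec is not None:
                merged.append(rec)
    return merged

facebook_store = [
    {"name": "A", "age": "29", "email": "a@example.com", "phone": "+1-555-0100"},
]
website = [
    {"name": "B", "age": "-3", "email": "b@example.com", "phone": "555-0101"},
    {"name": "C", "age": "45", "email": "c.example.com", "phone": "555-0102"},
    {"name": "D", "age": "52", "email": "d@example.com", "phone": "(555) 0103"},
]
rows = merge_sources({"facebook_store": facebook_store, "website": website})
for row in rows:
    print(row)
```

Rows B (negative age) and C (malformed email) are rejected; the surviving rows share one phone format and record their source channel.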

Step 4: Model Planning

At this stage, you have the data formatted and sorted into a large number of categories or variables. Now is the time to start exploring the relationships between these variables.

Model Planning helps you analyze and understand the data from a visual perspective. Besides, it provides an in-depth overview of the data, which helps you choose a better model to represent it.

For example, if you want to predict whether a customer reached through email marketing or social marketing will convert (a yes/no outcome), Classification is the way to go.

However, if you want to predict the revenue of your business in the upcoming quarter by analyzing the financial data of the previous three fiscal years, you should choose Regression over Classification, since revenue is a continuous quantity rather than a category.

In most cases, you will need to apply Exploratory Data Analysis (EDA) before finalizing a training model for your data.

EDA combines Visualization and Statistical Formulas through techniques such as Bar Graphs, Line Charts, Pie Charts, Histograms, Box Plots, and Trend Analysis.
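As a small illustration of EDA without any plotting library, the Python sketch below computes summary statistics and a text histogram for a hypothetical list of customer ages. In practice you would draw the same views as real charts with one of the tools listed below; the numbers here are made up.

```python
import statistics
from collections import Counter

# Hypothetical customer ages, used only to illustrate EDA
ages = [23, 35, 31, 47, 29, 52, 38, 26, 41, 33, 60, 19]

# Numeric summary: the same figures a box plot or histogram would visualize
summary = {
    "count": len(ages),
    "mean": round(statistics.mean(ages), 1),
    "median": statistics.median(ages),
    "stdev": round(statistics.stdev(ages), 1),
    "min": min(ages),
    "max": max(ages),
}
print(summary)

# Text histogram: bucket ages by decade (10-19, 20-29, ...)
bins = Counter((age // 10) * 10 for age in ages)
for start in sorted(bins):
    print(f"{start}-{start + 9}: {'#' * bins[start]}")
```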

Some of the popular tools for model planning include, but are not limited to:

- SQL Server Analysis Services

- SAS: Proprietary Tool for a comprehensive Statistical Analysis

- R: Machine Learning Algorithms, Statistical Analysis, Visualization

- Python: Data Analysis, Machine Learning Algorithms

- MATLAB: Proprietary Tool for Statistical Analysis, Visualization, and Data Analysis

Step 5: Model Building

Once you have performed a thorough analysis of the data and decided on a suitable model/algorithm, it’s time to develop the actual model and test it.

Before building the model, you need to divide the data into Training and Testing data sets. A common split uses 80% of the data for Training and the remaining 20% for Testing.

First, the Training data is used to build the model, and then the Testing data is used to evaluate whether the model works correctly on data it has not seen before.
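The 80/20 split described above can be sketched in a few lines of Python. The `train_test_split` function here is a standalone, hypothetical helper (it only shares its name with scikit-learn’s function), and the fixed seed simply makes the shuffle reproducible. For imbalanced classes, a stratified split that preserves the share of converted customers in both sets is often preferable.

```python
import random

def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of the rows, then split off `test_fraction` of them for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

customers = list(range(100))  # stand-in for 100 cleaned customer records
train, test = train_test_split(customers)
print(len(train), len(test))  # 80 20
```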

There are various packaged libraries in different programming languages (R, Python, MATLAB), which you can use to build the model just by supplying the labeled training data.

If your trained model does not fit the testing data well, you need to re-train it using more Training Data, or try out a different learning algorithm, e.g., a decision tree instead of logistic regression.

If you have unlabeled data, you would want to consider unsupervised learning techniques such as K-Means clustering, or dimensionality-reduction methods such as PCA and SVD.
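To make the unsupervised case concrete, here is a minimal Python sketch of K-Means (Lloyd’s algorithm) using only the standard library. The six points and their two features are hypothetical, and initializing from k randomly chosen data points is a simplification; library implementations typically use smarter seeding such as k-means++.

```python
import math
import random

def k_means(points, k, max_iterations=100, seed=0):
    """Lloyd's algorithm: assign each point to its nearest centroid, then move each
    centroid to the mean of its points, until the centroids stop changing."""
    centroids = random.Random(seed).sample(points, k)  # start from k distinct data points
    for _ in range(max_iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        new_centroids = [
            tuple(sum(axis) / len(c) for axis in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: no centroid moved
            break
        centroids = new_centroids
    return centroids, clusters

# Hypothetical unlabeled customers described by two numeric features
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]
centroids, clusters = k_means(points, k=2)
print(sorted(centroids))
```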

Some tools that can help you build and test models include:

- Alpine Miner

- SAS Enterprise Miner

Step 6: Visualization and Communication

Once you have trained a model and successfully tested it, you still need to break one last barrier known as the communication gap.

You may understand the technical terminology of Data Science, but your manager, who has a degree in English Literature, won’t understand a thing if you explain your findings in data-scientist jargon!

You need to explain your findings in plain, human terms. And the best way to do that is to use Visualization Graphs and to Communicate the Results in the same language that was used to frame the problem in the first place.

For example, if you test a model and tell your manager that it has a 90% True Positive Rate and an 88% True Negative Rate on the testing data set, chances are that you will only get a bewildered look in response.

However, if you say that the model correctly identifies 90% of the customers who will buy and 88% of the customers who won’t, your manager is likely to praise your work.
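Turning confusion-matrix numbers into that kind of sentence is easy to automate. The Python sketch below counts true/false positives and negatives and phrases the true positive rate (sensitivity) and true negative rate (specificity) in everyday terms. The labels, predictions, and function names are hypothetical.

```python
def confusion_counts(actual, predicted):
    """Count true/false positives and negatives for binary labels (1 = converted)."""
    pairs = list(zip(actual, predicted))
    tp = pairs.count((1, 1))
    tn = pairs.count((0, 0))
    fp = pairs.count((0, 1))
    fn = pairs.count((1, 0))
    return tp, tn, fp, fn

def plain_language_summary(actual, predicted):
    """Phrase the true positive and true negative rates for a non-technical audience."""
    tp, tn, fp, fn = confusion_counts(actual, predicted)
    sensitivity = tp / (tp + fn)  # share of actual buyers the model flags
    specificity = tn / (tn + fp)  # share of non-buyers the model correctly rules out
    return (f"Out of every 100 customers who buy, the model spots about {sensitivity * 100:.0f}; "
            f"out of every 100 who don't, it correctly leaves out about {specificity * 100:.0f}.")

# Hypothetical test-set labels and predictions
actual = [1] * 10 + [0] * 10
predicted = [1] * 9 + [0] + [0] * 9 + [1]
print(confusion_counts(actual, predicted))  # (9, 9, 1, 1)
print(plain_language_summary(actual, predicted))
```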

Since a picture is worth a thousand words, you need to back your communication skills with interesting visuals and graphics.

You can make use of the following tools to convey your results more convincingly:

- Microsoft Excel

- Google Sheets

- MATLAB Visualizations and Graphs

Step 7: Deployment and Maintenance

After you have explained the results to all the major stakeholders and earned their trust in the model you have developed, you need to deploy it to the company’s production systems.

However, before you do that, you need to test your model in a pre-production setting. You may bring in some volunteers or ask some employees of the company to act as real-time test subjects.

Once you are confident that the model will serve the underlying problem statement well, you should deploy it for real customers and keep on tracking its performance over time.

Besides, you need to check back regularly to evaluate if there have been significant changes within the environment in which the model was operating.

Maybe the company has released a new product, started acquiring customers from a new location, or launched marketing campaigns on a new social media platform.

In that case, you need to retrain your model to incorporate all the significant changes that have happened since it was last trained.
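One simple way to notice such changes is to compare recent inputs against the data the model was trained on. The Python sketch below is a rough heuristic, not a formal drift test: the threshold of 3 standard errors, the function name, and the income figures are all assumptions for illustration.

```python
import statistics

def mean_shift_alert(train_values, recent_values, threshold=3.0):
    """Rough drift heuristic: flag when the recent mean lies more than `threshold`
    standard errors from the training mean. Not a substitute for a proper test."""
    mu = statistics.mean(train_values)
    sigma = statistics.stdev(train_values)
    standard_error = sigma / len(recent_values) ** 0.5
    z = (statistics.mean(recent_values) - mu) / standard_error
    return abs(z) > threshold, z

# Hypothetical yearly incomes seen at training time vs. in two recent batches
training_incomes = [50000, 52000, 48000, 51000, 49000]
stable_batch = [50500, 49500, 50000, 50200]
shifted_batch = [60000, 61000, 59000, 62000]

print(mean_shift_alert(training_incomes, stable_batch)[0])   # False
print(mean_shift_alert(training_incomes, shifted_batch)[0])  # True
```

A flag like this does not say what changed, only that the new customers no longer look like the training data, which is the cue to investigate and retrain.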

You need to stay vigilant and informed about any changes happening in the technology landscape to earn the trust and backing of all the major stakeholders.

That’ll serve you well in the long run, and help you become a more proficient and knowledgeable data scientist.

Data Science Process: A Case-Study

Now that you know the step-by-step plan of action a data scientist follows to extract meaningful and actionable insights from raw data, I am going to explain all of these important steps using a brief case study.

This case study is about a Real Estate Agency whose marketing campaigns are not converting prospective leads into purchasing customers.

One of their partner agencies has told them about this new technology, which can help them optimize their sales funnel to target just the Right Customers.

To take advantage of this technology, the Real Estate Agency hires a recent graduate from a competitive Computer Science program to help optimize its marketing campaigns.

Let’s suppose that you are the one who has been hired for this role. You are very enthusiastic about your new job and looking forward to the challenges ahead.

Real-Estate Agency: Problem Framing

It is your first day in the office, and you are ready to embrace anything that comes your way. Soon after all the customary welcomes and greetings, the CEO of the agency calls you in.

Upon having a conversation with the CEO, you sense that he is slightly worried and concerned due to the shrinking volume of sales.

Suddenly, he blurts out:

“Our competitor seems to have some sort of a magical wand. They only target the customers who are most likely to buy from them. In our case, only 40% of the targeted customers convert, 15% only respond to what we have to offer, and the remaining 45% don’t even bother to respond.”

At the end of the conversation, he asks you to look for such a magic wand if it, somehow, exists on the internet.

Initially, you expected to develop a more strategic sales funnel for the agency, but now, here you are, looking for a Revenue Generating Magical Wand.

How Interesting… Right?

Instead of thinking in terms of magic, you decide to review the marketing strategy of the competitor. You visit their website, social media pages, and go through their weekly/monthly newsletter.

After reviewing the competitor’s customer profile for the past three quarters, you come across an interesting pattern. The competitor is only targeting specific customers in each of their marketing campaigns based on their demographics, income, and age.

Besides, they seem to have figured out a formula to target the Right Customer at the Right Time on the Right Channel!

Once you tell the CEO about your findings, he praises you for the work done and hesitantly asks you:

“Can you do the same for our Real Estate Agency?”

VOILA… You have got your first real-world data science project!

You readily agree to this assignment because you are already in love with data science.

More importantly, you have already framed the problem during your initial conversations with the CEO.

You just need to find out the Right Customers who are likely to buy from the agency and direct the agency’s marketing campaigns towards those customers.

Simple… Right?

To analyze the previous purchase data and make future predictions, you first need to acquire the data…

Real-Estate Agency: Data Acquisition

As you aim to optimize the sales funnel for the real-estate agency, you only need the data of the customers who were targeted in the recent marketing campaigns, plus their conversion status.

To acquire the data, you visit the IT office and ask the database administrator for access to the CRM software’s API.

The administrator tells you that no such API is available, but instead provides you with an Excel file containing the data of the customers targeted in the recent marketing campaign.

If you were working on a large-scale project, you would also need to collect data from other sources. But in this case, that’s all you need!

Here is the data to be used for this case study. You can download the data here!

It includes the name, age, income, registered properties, city, campaign, and the conversion status of each targeted customer.

As an end-goal, you need to extract some hidden patterns from data to explain why some customers converted, and why others didn’t.

Before all that, you need to process, transform and clean the data first…

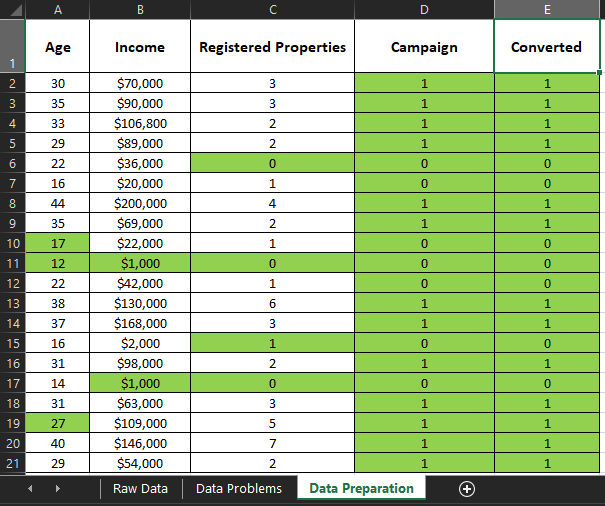

Real-Estate Agency: Data Preparation

This data has a lot of anomalies and redundancies, which must be resolved first.

At first glance, the data is in a structured form: the first entry in each column names the category itself, and the remaining entries hold the individual customer data.

Below are some of the pre-processing actions you need to perform on the data:

- The conversion rate is unaffected by the NAME and CITY of the customer, so you need to remove these columns altogether from the data. Both of these columns are highlighted with blue color

- The Campaign and Converted columns hold textual values instead of numeric ones. Unfortunately, model-building algorithms can’t work with textual values directly, so you need to assign numerical classes (‘0’, ‘1’, or ‘2’) to the corresponding textual entries. Both columns are highlighted in grey

- Some values are missing in the Income and Registered Properties columns and are highlighted in red. You need to fill these in with suitable entries

- The Age column has some conflicting entries, highlighted in yellow

- Two entries in the Age and Registered Properties columns are textual instead of numeric, and are highlighted in grey

The data inconsistencies must be addressed to ensure the smooth flow of the next steps, which involve extensive data processing.
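The pre-processing steps above can be sketched in Python as follows. The case study performs them on its own spreadsheet; here the rows, the campaign labels (`Email`, `Facebook`, `Instagram`) and the word-to-number map are hypothetical. Conflicting ages (outside 0 to 120) are treated as missing, and every gap is filled with the column median, which is only one of several reasonable choices.

```python
import statistics

CAMPAIGN_CODES = {"Email": 0, "Facebook": 1, "Instagram": 2}  # hypothetical labels
CONVERTED_CODES = {"No": 0, "Yes": 1}
WORD_NUMBERS = {"one": 1, "two": 2, "three": 3, "thirty": 30}  # illustrative only
NUMERIC_COLUMNS = ("Age", "Income", "Registered Properties")

def to_number(value):
    """Turn '42', 'two' or '' into a float, or None when missing or unreadable."""
    text = str(value).strip().lower()
    if text in WORD_NUMBERS:
        return float(WORD_NUMBERS[text])
    try:
        return float(text)
    except ValueError:
        return None  # '' and other unreadable entries count as missing

def prepare(rows):
    """Drop Name/City, encode text classes as numbers, and fill gaps with column medians."""
    cleaned = []
    for r in rows:
        rec = {col: to_number(r[col]) for col in NUMERIC_COLUMNS}
        if rec["Age"] is not None and not 0 < rec["Age"] < 120:
            rec["Age"] = None  # conflicting age (e.g. negative): treat as missing
        rec["Campaign"] = CAMPAIGN_CODES[r["Campaign"]]
        rec["Converted"] = CONVERTED_CODES[r["Converted"]]
        cleaned.append(rec)
    for col in NUMERIC_COLUMNS:
        median = statistics.median([r[col] for r in cleaned if r[col] is not None])
        for r in cleaned:
            if r[col] is None:
                r[col] = median
    return cleaned

raw_rows = [
    {"Name": "A", "Age": "34", "Income": "62000", "Registered Properties": "2",
     "City": "X", "Campaign": "Email", "Converted": "Yes"},
    {"Name": "B", "Age": "-5", "Income": "", "Registered Properties": "two",
     "City": "Y", "Campaign": "Facebook", "Converted": "No"},
    {"Name": "C", "Age": "thirty", "Income": "40000", "Registered Properties": "",
     "City": "Z", "Campaign": "Instagram", "Converted": "No"},
]
for row in prepare(raw_rows):
    print(row)
```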

Real-Estate Agency: Model Planning

Once you are done performing the required pre-processing operations on the data, it should look like this:

Since you are looking for customers who are most likely to convert, you need to find a pattern within the details of customers who have converted in the past.

For this case study, you need to train your model using input entries from 4 columns ‘Age’, ‘Income’, ‘Registered Properties’, ‘Campaign’, and assign the output labels from the ‘Converted’ column.

As the ‘Converted’ column only has 2 output classes, both of them discrete, you can use a Classification Algorithm to train your model, e.g., Logistic Regression, Decision Trees, Naïve Bayes Classifier, Artificial Neural Network, K-Nearest Neighbour, etc.

To keep things simple, you decide to use Logistic Regression because the dependent variable ‘Converted’ is in a binary form.
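For reference, the standard logistic regression model maps a weighted sum of the input variables to a conversion probability. Its coefficients are found by maximizing the log-likelihood ℓ(β) over the n training customers, with y_i = 1 for a converted customer and 0 otherwise:

```latex
P(\text{Converted} = 1 \mid x_1, \dots, x_k) = \sigma(z) = \frac{1}{1 + e^{-z}},
\qquad z = \beta_0 + \beta_1 x_1 + \dots + \beta_k x_k

\ell(\beta) = \sum_{i=1}^{n} \Big[\, y_i \log \sigma(z_i) + (1 - y_i) \log\big(1 - \sigma(z_i)\big) \Big]
```

A customer is classified as likely to convert when σ(z) ≥ 0.5, which is the same as z ≥ 0.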

Now that you have planned to use Logistic Regression as the training model for your data set, you need to build the real model itself…

Real-Estate Agency: Model Building

To build a model, you first need to decide on the platform to be used for data fetching, data processing, and computation purposes.

Since you will also need to visualize the data in the later stages, you decide to use MATLAB which comes packaged with data processing and visualization tools.

As far as Logistic Regression is concerned, you can train and build a model using two approaches:

- Mono-Variable Approach: In this approach, a single independent variable is used to predict the dependent variable. For example, how the factor of ‘age’ or ‘income’ alone would affect a customer’s conversion rate.

- Dual-Variable Approach: As the name implies, this approach uses the combined data of two independent variables to forecast the output of the dependent variable. For example, how the data from ‘age’ and ‘income’ can be combined to predict whether the prospective customer will convert or not.

For this project, you decide to implement both approaches to get extensive insights on different factors contributing to customer’s conversion rates.

1. Mono-Variable Approach:

Using this approach, you need to train your model for each independent variable in the dataset (Age, Income, Registered Properties, Campaign) such that it maps well onto the output classes of the dependent variable ‘Converted’.

Here is a simple code template to train and test your model using the mono-variable approach. Just un-comment the 2 Lines for the specific independent variable:
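The case study builds its models in MATLAB. As a hedged stand-in for that template, the Python sketch below (standard library only) fits a one-variable logistic regression by batch gradient descent on the log-loss and, instead of un-commenting lines, loops over each variable in turn. The data are hypothetical rather than the downloadable data set, the function names are illustrative, and Campaign is left out because a 0/1/2 code would impose an artificial order on a categorical variable. The printed training accuracy is optimistic; on real data you would score the held-out testing set instead.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic_1d(xs, ys, lr=0.1, epochs=3000):
    """Fit P(converted | x) = sigmoid(b0 + b1 * x) by batch gradient descent on the log-loss."""
    n = len(xs)
    mean = sum(xs) / n
    std = (sum((x - mean) ** 2 for x in xs) / n) ** 0.5 or 1.0
    zs = [(x - mean) / std for x in xs]  # standardize so one learning rate suits any unit
    b0 = b1 = 0.0
    for _ in range(epochs):
        g0 = g1 = 0.0
        for z, y in zip(zs, ys):
            err = sigmoid(b0 + b1 * z) - y  # derivative of the log-loss w.r.t. the logit
            g0 += err
            g1 += err * z
        b0 -= lr * g0 / n
        b1 -= lr * g1 / n
    # Map the coefficients back to the original units of x
    return b0 - b1 * mean / std, b1 / std

def predict_proba(b0, b1, x):
    """Predicted probability that a customer with value x converts."""
    return sigmoid(b0 + b1 * x)

# Hypothetical, already-cleaned data (NOT the article's downloadable data set)
data = {
    "Age": [19, 21, 22, 24, 25, 27, 28, 30, 32, 35, 38, 41, 45, 50, 48, 23],
    "Income": [18000, 25000, 30000, 52000, 41000, 35000, 55000, 61000,
               53000, 58000, 72000, 66000, 80000, 90000, 50000, 56000],
    "Registered Properties": [0, 0, 1, 2, 1, 0, 2, 1, 1, 3, 2, 1, 2, 4, 2, 0],
}
converted = [0, 0, 0, 1, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0]

# Mono-variable approach: one model per independent variable
models = {}
for feature, xs in data.items():
    b0, b1 = fit_logistic_1d(xs, converted)
    models[feature] = (b0, b1)
    hits = sum((predict_proba(b0, b1, x) >= 0.5) == (y == 1) for x, y in zip(xs, converted))
    print(f"{feature}: slope = {b1:.6f}, training accuracy = {hits / len(xs):.0%}")
```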

2. Dual-Variable Approach:

In this approach, you will train your model using the combined data of two independent variables (Age & Income, Age & Registered Properties, Age & Campaign, Income & Registered Properties, Income & Campaign, Registered Properties & Campaign).

This model will help you figure out a pattern to predict whether a customer will convert or not just by looking at the combined input of two independent variables.

You can use this sample code to train the Logistic Regression model using the dual-variable approach. Similar to the mono-variable code, you need to uncomment the input of the relevant variables to train a model for them:
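As a hedged Python stand-in for that MATLAB code, the sketch below generalizes the gradient-descent fit to several input columns and trains one model for every pair of variables using `itertools.combinations`, rather than un-commenting inputs. The data and function names are hypothetical, and Campaign is omitted because it is categorical. Each variable is standardized before fitting so that ages and incomes can share one learning rate, and the coefficients are converted back to raw units at the end.

```python
import itertools
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic(columns, ys, lr=0.1, epochs=3000):
    """Fit P(converted | x) = sigmoid(b0 + sum_j b_j * x_j) by batch gradient descent.
    `columns` holds one list per independent variable (two for the dual-variable case)."""
    n, k = len(ys), len(columns)
    stats, scaled = [], []
    for col in columns:
        mean = sum(col) / n
        std = (sum((v - mean) ** 2 for v in col) / n) ** 0.5 or 1.0
        stats.append((mean, std))
        scaled.append([(v - mean) / std for v in col])  # standardize each variable
    rows = list(zip(*scaled))                            # one tuple per customer
    b0, b = 0.0, [0.0] * k
    for _ in range(epochs):
        g0, g = 0.0, [0.0] * k
        for x, y in zip(rows, ys):
            err = sigmoid(b0 + sum(bj * xj for bj, xj in zip(b, x))) - y
            g0 += err
            for j in range(k):
                g[j] += err * x[j]
        b0 -= lr * g0 / n
        b = [bj - lr * gj / n for bj, gj in zip(b, g)]
    # Undo the scaling so the coefficients apply to raw values (years, dollars, ...)
    raw_b = [bj / std for bj, (_, std) in zip(b, stats)]
    raw_b0 = b0 - sum(bj * mean / std for bj, (mean, std) in zip(b, stats))
    return raw_b0, raw_b

def predict_proba(b0, b, x):
    """Predicted conversion probability for one customer's feature tuple x."""
    return sigmoid(b0 + sum(bj * xj for bj, xj in zip(b, x)))

# Hypothetical, already-cleaned data (NOT the article's downloadable data set)
data = {
    "Age": [19, 21, 22, 24, 25, 27, 28, 30, 32, 35, 38, 41, 45, 50, 48, 23],
    "Income": [18000, 25000, 30000, 52000, 41000, 35000, 55000, 61000,
               53000, 58000, 72000, 66000, 80000, 90000, 50000, 56000],
    "Registered Properties": [0, 0, 1, 2, 1, 0, 2, 1, 1, 3, 2, 1, 2, 4, 2, 0],
}
converted = [0, 0, 0, 1, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0]

# Dual-variable approach: one model for every pair of independent variables
models = {}
for f1, f2 in itertools.combinations(data, 2):
    b0, b = fit_logistic([data[f1], data[f2]], converted)
    models[(f1, f2)] = (b0, b)
    print(f"{f1} & {f2}: intercept = {b0:.3f}, coefficients = {b[0]:.6f}, {b[1]:.6f}")
```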

Real-Estate Agency: Visualization and Communication

After building the mathematical model from the data set, you need to explain all the hidden patterns related to customer conversion to your CEO.

However, you need to be aware that your CEO is not familiar with mathematical terms and won’t understand a thing if you try to explain your model using analytical reasoning.

Thus, you need to communicate all the results in the same terms in which the main problem was originally framed.

Moreover, you need contrasting Visuals to make your communication clearer and more concise.

Since you have trained the classification model using mono and dual variable approaches, you need to explain your findings for each variable in both approaches…
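A fitted model can be turned into a targeting rule such as “income above $50,000” by finding where the predicted probability crosses 0.5. This is one common reading, offered as an illustration rather than as the exact method behind the findings below. For the single-variable model σ(β0 + β1 x):

```latex
\sigma(\beta_0 + \beta_1 x^*) = 0.5
\;\Longleftrightarrow\; \beta_0 + \beta_1 x^* = 0
\;\Longleftrightarrow\; x^* = -\frac{\beta_0}{\beta_1}
```

When β1 > 0, customers with x > x* are predicted to convert. In the dual-variable case the same condition, β0 + β1 x1 + β2 x2 = 0, describes a straight line in the (x1, x2) plane, so two-variable results describe a trade-off region rather than a single cut-off.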

1. Age vs Conversion

People who have passed their mid-20s are more likely to convert, and should be targeted rather than people in their teens or early 20s:

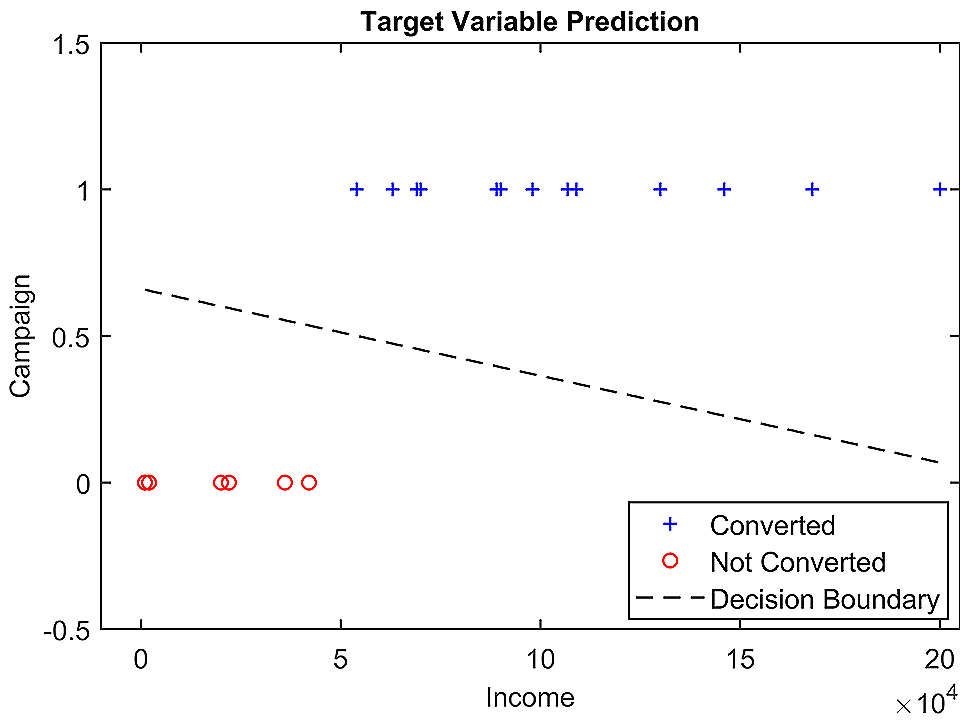

2. Income vs Conversion

People with a yearly income of more than $50,000 are the ideal target candidates:

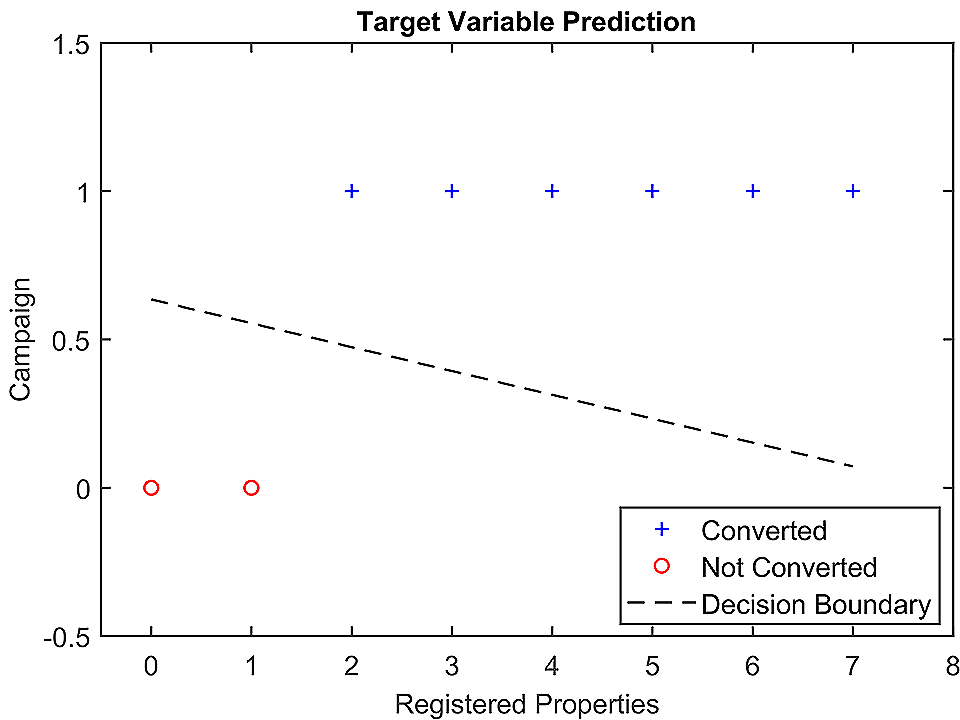

3. Real Estate vs Conversion

Those already having 2 or more real estate properties registered to their name are likely to convert, and should be considered as priority targets:

4. Marketing Campaign vs Conversion

Customers targeted using Email are more likely to convert rather than the ones targeted using Social Media channels. On a psychological note, people sign up for social media to enjoy and not to buy real estate:

5. Age-Income vs Conversion

People over 30 years of age, and having a yearly income of $50,000 or more are the ideal customers to target:

6. Age-Registered Properties vs Conversion

People who are in their 30s and own more than one real estate property are likely to convert:

7. Age-Campaign vs Conversion

People over 25 years of age, and targeted using email marketing funnels are more likely to convert:

8. Income-Registered Properties vs Conversion

People with a yearly income over $50,000 and more than a single property registered to their name are likely to buy from the Real Estate Agency:

9. Income-Campaign vs Conversion

People targeted through email and earning more than $50,000 should be the top-priority targets:

10. Campaign-Registered Properties vs Conversion

People having 2 or more real estate properties assigned to their name, and targeted through email are most likely to convert:

Real-Estate Agency: Deployment and Maintenance

Now that the CEO of the Real Estate Agency is aware of at least 10 factors to optimize the sales funnel, he can direct the marketing team to aim the agency’s marketing campaigns at the RIGHT customers.

In simple words, you have found that Magic Wand which will help increase the sales and hence the revenue of the agency.

However, you are not done yet…

Once your developed model is incorporated into the mainline sales funnel, the agency will attract new customers.

The variety in the data of newly acquired customers will inevitably make your trained model obsolete.

Thus, after every 500 or 1000 new sales, you will need to train your model again by combining the old data with the details of new customers, and then deploy it.
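That retraining schedule can be sketched as a small buffer that collects new sales and signals when a retrain is due. This Python sketch is hypothetical: the class name is illustrative, the threshold of 500 is taken from the text, and the actual refitting and redeployment of the logistic regression model are left as a comment.

```python
RETRAIN_EVERY = 500  # the text suggests retraining after every 500 or 1000 new sales

class RetrainingBuffer:
    """Collect new sales and signal when enough have arrived to retrain the model."""

    def __init__(self, historical_rows, retrain_every=RETRAIN_EVERY):
        self.training_rows = list(historical_rows)
        self.pending = []
        self.retrain_every = retrain_every

    def add_sale(self, row):
        """Record one new sale; return True when a retrain is due."""
        self.pending.append(row)
        return len(self.pending) >= self.retrain_every

    def build_training_set(self):
        """Merge the pending sales into the historical data and reset the buffer."""
        self.training_rows.extend(self.pending)
        self.pending.clear()
        return self.training_rows

buffer = RetrainingBuffer(historical_rows=[("old", i) for i in range(2000)])
retrains = 0
for i in range(1200):
    if buffer.add_sale(("new", i)):
        training_set = buffer.build_training_set()
        retrains += 1  # in practice: refit the model on training_set, then redeploy it
print(retrains, len(buffer.training_rows), len(buffer.pending))  # 2 3000 200
```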

Over time, this iterative practice of training and deploying a new model will lead to a more robust sales funnel and optimized marketing strategy for the real estate agency.

Case Study Challenge

Did you think I would let you leave without participating in a challenge?

No Way… That’s not me!

While cleaning and preparing the data for this case study, I got rid of the ‘City’ column, claiming it was redundant and wouldn’t give you any insight into the customers’ purchase patterns.

Believe me… I did that on purpose.

Now it’s your turn to find a Hidden Pattern in customer conversion from the ‘City’ column.

Once you find the pattern, mention it in the comments along with a brief explanation of your approach to solve this problem.
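One possible starting point for the challenge is to compute the conversion rate for each city and look for cities that stand out. The Python sketch below uses made-up rows and city names; running the same function on the downloaded data set (after mapping ‘Converted’ to 0/1) would show the real rates. If a pattern appears, ‘City’ could then be one-hot encoded and added as another input to the logistic regression model.

```python
from collections import defaultdict

def conversion_rate_by(rows, column):
    """Return {value: (conversions, customers, rate)} for one categorical column."""
    counts = defaultdict(lambda: [0, 0])
    for row in rows:
        counts[row[column]][0] += row["Converted"]
        counts[row[column]][1] += 1
    return {k: (c, n, c / n) for k, (c, n) in counts.items()}

# Hypothetical rows; replace with the cleaned case-study data
rows = [
    {"City": "City A", "Converted": 1},
    {"City": "City A", "Converted": 1},
    {"City": "City A", "Converted": 0},
    {"City": "City B", "Converted": 0},
    {"City": "City B", "Converted": 1},
    {"City": "City C", "Converted": 0},
]
rates = conversion_rate_by(rows, "City")
for city, (c, n, rate) in sorted(rates.items(), key=lambda kv: -kv[1][2]):
    print(f"{city}: {c}/{n} converted ({rate:.0%})")
```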

Data Science is a way of analyzing and exploiting raw data to extract meaningful and actionable insights from it. Moreover, it enables you to recognize hidden patterns in the data that you would otherwise miss.

You can use the insights and patterns drawn from data to help businesses optimize their sales funnels and marketing strategies, to forecast stock prices and the weather, to predict the winning probability of a sports team, and more.

With data science, the possibilities are endless, and being a Data Scientist is considered the sexiest job of the 21st century.

The data science process consists of seven discrete steps: Problem Framing, Data Acquisition, Data Preparation, Model Planning, Model Building, Visualization and Communication, and Deployment and Maintenance.

All of the steps in the data science process are illustrated through the analytical case study of a Real Estate Agency. The extracted insights are then used to optimize the agency’s marketing strategy to attract the RIGHT customers.

In short, understanding and implementing the complete data science process is the need of the hour, because data scientists are in hot demand, and theirs is widely called the sexiest job on the planet right now.

He is the owner and founder of Embedded Robotics and a health-based start-up called Nema Loss. He is very enthusiastic and passionate about Business Development, Fitness, and Technology. Read more about his struggles, and how he went from being called a Weak Electrical Engineer to founder of Embedded Robotics.


The Data Science Process: What a data scientist actually does day-to-day

Raj Bandyopadhyay

Springboard

As a data scientist, I often get the question, “What do you actually do?”

Data scientists can appear to be wizards who pull out their crystal balls (MacBook Pros), chant a bunch of mumbo-jumbo (machine learning, random forests, deep networks, Bayesian posteriors) and produce amazingly detailed predictions of what the future will hold. However, as much as we’d like to believe it was, data science is not magic. The power of data science comes from a deep understanding of statistics and algorithms, programming and hacking, and communication skills. More importantly, data science is about applying these three skill sets in a disciplined and systematic manner.

Over the last few years, I’ve not only worked as an individual data scientist in several companies, but also led a team of data scientists as chief data scientist at Pindrop Security, a hot, Andreessen Horowitz-funded cybersecurity startup. My team worked on several cutting-edge projects using a wide variety of tools and techniques. Over time, I realized that despite the variation in the details of different projects, the steps that data scientists use to work through a complex business problem remain more or less the same.

After Pindrop, I joined Springboard as the director of data science education. In this capacity, my role is to design and maintain our data science courses for students, such as our Data Science Career Track bootcamp. Designing these courses compelled me to reflect on the systematic process that data scientists use at work, and to make sure that I incorporated those steps in each of our data science courses. In this article, I explain this data science process through an example case study. By the end of the article, I hope that you will have a high-level understanding of the day-to-day job of a data scientist, and see why this role is in such high demand.

The Data Science Process

Congratulations! You’ve just been hired for your first job as a data scientist at Hotshot, a startup in San Francisco that is the toast of Silicon Valley. It’s your first day at work. You’re excited to go and crunch some data and wow everyone around you with the insights you discover. But where do you start?

Over the (deliciously catered) lunch, you run into the VP of Sales, introduce yourself and ask her, “What kinds of data challenges do you think I should be working on?”

The VP of Sales thinks carefully. You’re on the edge of your seat, waiting for her answer, the answer that will tell you exactly how you’re going to have this massive impact on the company of your dreams.

And she says, “Can you help us optimize our sales funnel and improve our conversion rates?”

The first thought that comes to your mind is: What? Is that a data science problem? You didn’t even mention the word ‘data’. What do I need to analyze? What does this mean?

Fortunately, your data scientist mentors have warned you already: this initial ambiguity is a situation that data scientists encounter frequently. All you have to do is systematically apply the data science process to figure out exactly what you need to do.

The data science process: a quick outline

When a non-technical supervisor asks you to solve a data problem, the description of your task can be quite ambiguous at first. It is up to you, as the data scientist, to translate the task into a concrete problem, figure out how to solve it and present the solution back to all of your stakeholders. We call the steps involved in this workflow the “Data Science Process.” This process involves several important steps:

- Frame the problem: Who is your client? What exactly is the client asking you to solve? How can you translate their ambiguous request into a concrete, well-defined problem?

- Collect the raw data needed to solve the problem: Is this data already available? If so, what parts of the data are useful? If not, what more data do you need? What kind of resources (time, money, infrastructure) would it take to collect this data in a usable form?

- Process the data (data wrangling): Real, raw data is rarely usable out of the box. There are errors in data collection, corrupt records, missing values and many other challenges you will have to manage. You will first need to clean the data to convert it to a form that you can further analyze.

- Explore the data: Once you have cleaned the data, you have to understand the information contained within at a high level. What kinds of obvious trends or correlations do you see in the data? What are the high-level characteristics and are any of them more significant than others?

- Perform in-depth analysis (machine learning, statistical models, algorithms): This step is usually the meat of your project, where you apply all the cutting-edge machinery of data analysis to unearth high-value insights and predictions.

- Communicate results of the analysis: All the analysis and technical results that you come up with are of little value unless you can explain to your stakeholders what they mean, in a way that’s comprehensible and compelling. Data storytelling is a critical and underrated skill that you will build and use here.

So how can you help the VP of Sales at hotshot.io? In the next few sections, we will walk you through each step in the data science process, showing you how it plays out in practice. Stay tuned!

Step 1 of 6: Frame the problem (a.k.a. “ask the right questions”)

The VP of Sales at hotshot.io, where you just started as a data scientist, has asked you to help optimize the sales funnel and improve conversion rates. Where do you start?

You start by asking a lot of questions.

- Who are the customers, and how do you identify them?

- What does the sales process look like right now?

- What kind of information do you collect about potential customers?

- What are the different tiers of service right now?

Your goal is to get into your client’s (the VP in this case) head and understand their view of the problem as well as you can. This knowledge will be invaluable later when you analyze your data and present the insights you find within.

Once you have a reasonable grasp of the domain, you should ask more pointed questions to understand exactly what your client wants you to solve. For example, you ask the VP of Sales, “What does optimizing the funnel look like for you? What part of the funnel is not optimized right now?”

She responds, “I feel like my sales team is spending a lot of time chasing down customers who won’t buy the product. I’d rather they spent their time with customers who are likely to convert. I also want to figure out if there are customer segments who are not converting well and figure out why that is.”

Bingo! You can now see the data science in the problem. Here are some ways you can frame the VP’s request into data science questions:

- What are some important customer segments?

- How do conversion rates differ across these segments? Do some segments perform significantly better or worse than others?

- How can we predict if a prospective customer is going to buy the product?

- Can we identify customers who might be on the fence?

- What is the return on investment (ROI) for different kinds of customers?

Spend a few minutes and think about any other questions you’d ask.

Now that you have a few concrete questions, you go back to the VP of Sales and show her your questions. She agrees that these are all important questions, but adds: “I’m particularly interested in having a sense of how likely a customer is to convert. The other questions are pretty interesting too!” You make a mental note to prioritize questions 3 and 4 in your story.

The next step for you is to figure out what data you have available to answer these questions. Stay tuned; we’ll talk about that next time!

Step 2 of 6: Collect the right data

You’ve decided on your very first data science project for hotshot.io: predicting the likelihood that a prospective customer will buy the product.

Now’s the time to start thinking about data. What data do you have available to you?

You find out that most of the customer data generated by the sales department is stored in the company’s CRM software, and managed by the Sales Operations team. The backend for the CRM tool is a SQL database with several tables. However, the tool also provides a very convenient web-based API that returns data in the popular JSON format.

What data from the CRM database do you need? How should you extract it? What format should you store the data in to perform your analysis?

You decide to roll up your sleeves and dive into the SQL database. You find that the system stores detailed identity, contact and demographic information about customers, in addition to details of the sales process for each of them. You decide that since the dataset is not too large, you’ll extract it to CSV files for further analysis.

As an ethical data scientist concerned with both security and privacy, you are careful not to extract any personally identifiable information from the database. All the information in the CSV file is anonymized, and cannot be traced back to any specific customer.
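The extraction step might look like the sketch below. It uses Python’s built-in `sqlite3` module with an in-memory database as a stand-in for the CRM backend; the `customers` table, its columns and its rows are all hypothetical. The key idea is to select only the columns you need and leave direct identifiers such as names and emails out of the export.

```python
import csv
import io
import sqlite3

# Columns we are willing to export; names and emails are deliberately left out.
NON_PII_COLUMNS = ["age", "campaign", "first_contact_ts", "converted"]


def export_to_csv(conn, out):
    """Write non-identifying columns of the hypothetical `customers` table as CSV."""
    # Column names are constants defined above, never user input.
    cursor = conn.execute(f"SELECT {', '.join(NON_PII_COLUMNS)} FROM customers")
    writer = csv.writer(out)
    writer.writerow(NON_PII_COLUMNS)
    writer.writerows(cursor)


# In-memory stand-in for the CRM database, filled with made-up rows.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (name TEXT, email TEXT, age INTEGER, "
    "campaign TEXT, first_contact_ts TEXT, converted TEXT)"
)
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?, ?, ?, ?)",
    [
        ("Ann Lee", "ann@example.com", 31, "email", "2019-03-02T10:15:00+00:00", "Yes"),
        ("Bo Park", "bo@example.com", 24, "social", "2019-04-11T16:40:00-08:00", "No"),
    ],
)
buffer = io.StringIO()
export_to_csv(conn, buffer)
print(buffer.getvalue())
```

For a real export you would write to a file opened with `open(path, "w", newline="")` instead of a `StringIO`. Dropping direct identifiers is only a first step: combinations of the remaining fields can sometimes still point to a specific person.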

In most data science industry projects, you will be using data that already exists and is being collected. Occasionally, you’ll be leading efforts to collect new data, but that can be a lot of engineering work and it can take a while to bear fruit.

Well, now you have your data. Are you ready to start diving into it and cranking out insights? Not yet. The data you have collected is still ‘raw data’, which is very likely to contain mistakes and missing or corrupt values. Before you draw any conclusions from the data, you need to subject it to some data wrangling, which is the subject of our next section.

Step 3 of 6: How to process (or “wrangle”) your data

As a brand-new data scientist at hotshot.io, you’re helping the VP of Sales by predicting which prospective customers are likely to buy the product. To do so, you’ve extracted data from the company’s CRM into CSV files.

But, despite all your work, you’re not ready to use the data yet. First, you need to make sure the data is clean! Data cleaning and wrangling often take up the bulk of the time in a data scientist’s day-to-day work, and it’s a step that requires patience and focus.

First, you need to look through the data that you’ve extracted, and make sure you understand what every column means. One of the columns is called ‘FIRST_CONTACT_TS’, representing the date and time the customer was first contacted by hotshot.io. You automatically ask the following questions:

- Are there missing values, i.e., are there customers without a first contact date? If so, why don’t they have one? Is that a good or a bad thing?

- What’s the time zone represented by these values? Do all the entries represent the same time zone?

- What is the date range? Is the date range valid? For example, if hotshot.io has been around since 2011, are there dates before 2011? Do they mean anything special or are they mistakes? It might be worth verifying the answer with a member of the sales team.
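These checks are easy to script. Here is a minimal sketch, assuming the timestamps arrive as ISO 8601 strings (for example `2019-03-02T10:15:00+00:00`); the 2011 founding date is the hypothetical one from the example above, and the sample values are invented.

```python
from datetime import datetime

COMPANY_FOUNDED = datetime(2011, 1, 1)  # hypothetical, from the example above


def audit_first_contact(values):
    """Count missing, unparseable, time-zone-less and too-early timestamps."""
    report = {"missing": 0, "unparseable": 0, "no_timezone": 0,
              "too_early": 0, "utc_offsets": set()}
    for raw in values:
        if raw is None or not raw.strip():
            report["missing"] += 1
            continue
        try:
            ts = datetime.fromisoformat(raw.strip())
        except ValueError:
            report["unparseable"] += 1
            continue
        if ts.tzinfo is None:
            report["no_timezone"] += 1
        else:
            report["utc_offsets"].add(ts.utcoffset())
        # Coarse range check on the wall-clock date, ignoring the offset.
        if ts.replace(tzinfo=None) < COMPANY_FOUNDED:
            report["too_early"] += 1
    return report


raw_timestamps = [
    "2019-03-02T10:15:00+00:00",
    "2019-04-11T16:40:00-08:00",
    "",
    None,
    "2009-07-01T09:00:00",
    "not a date",
]
report = audit_first_contact(raw_timestamps)
print(report)
```

More than one entry in `utc_offsets`, or any `no_timezone` count, is a hint that you should normalize everything to a single time zone (such as UTC) before comparing dates.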

Once you have uncovered missing or corrupt values in your data, what do you do with them? You may throw away those records completely, or you may decide to use reasonable default values (based on feedback from your client). There are many options available here, and as a data scientist, your job is to decide which of them makes sense for your specific problem.
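The two options mentioned above, dropping records or filling in a default, can be expressed as a small helper. This is a sketch with a hypothetical `handle_missing` function; which strategy, and which default, is appropriate is exactly the judgment call described above. Here the default is the median of the known ages, one common but by no means universal choice.

```python
import statistics


def is_missing(value):
    return value is None or (isinstance(value, str) and not value.strip())


def handle_missing(records, field, strategy="drop", default=None):
    """Drop records with a missing `field`, or fill it with `default`.

    Returns new dicts and leaves the input records untouched.
    """
    if strategy == "drop":
        return [dict(r) for r in records if not is_missing(r.get(field))]
    if strategy == "default":
        return [
            {**r, field: default} if is_missing(r.get(field)) else dict(r)
            for r in records
        ]
    raise ValueError(f"unknown strategy: {strategy!r}")


rows = [{"age": 31}, {"age": None}, {"age": 24}]
dropped = handle_missing(rows, "age")
median_age = statistics.median(r["age"] for r in dropped)
filled = handle_missing(rows, "age", strategy="default", default=median_age)
print(dropped, filled)
```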

You’ll have to repeat these steps for every field in your CSV file: you can begin to see why data cleaning is time-consuming. Still, this is a worthy investment of your time, and you patiently ensure that you get the data as clean as possible.

This is also a time when you make sure that you have all of the critical pieces of data you need. In order to predict which future customers will convert, you need to know which customers have converted in the past. Conveniently enough, you find a column called ‘CONVERTED’ in your data, with a simple ‘Yes/No’ value.

Finally, after a lot of data wrangling, you’re done cleaning your dataset, and you’re ready to start drawing some insights from the data. Time for some exploratory data analysis!

Step 4 of 6: Explore your data

You’ve extracted data and spent a lot of time cleaning it up.

And now, you’re finally ready to dive into the data! You’re eager to find out what information the data contains, and which parts of the data are significant in answering your questions. This step is called exploratory data analysis.

What are some things you’d like to explore? You could spend days and weeks of your time aimlessly plotting away. But you don’t have that much time. Your client, the VP of Sales, would love to present some of your results at the board meeting next week. The pressure is on!

You look at the original question: predict which future prospects are likely to convert. What if you split the data into two segments based on whether the customer converted or not and examine differences between the two groups? Of course!

Right away, you start noticing some interesting patterns. When you plot the age distributions of customers on a histogram for the two categories, you notice that there are a large number of customers in their early 30s who seem to buy the product and far fewer customers in their 20s. This is surprising, since the product targets people in their 20s. Hmm, interesting …
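Splitting the data by outcome and binning ages takes only a few lines. In practice you would likely hand the two groups to a plotting library (matplotlib’s `hist`, for instance); to stay dependency-free, this sketch prints text histograms instead, assuming hypothetical `age` and `converted` keys and invented records.

```python
from collections import Counter


def age_histograms(records, bin_width=10):
    """Count customers per age bin, separately for converted and not converted."""
    hist = {True: Counter(), False: Counter()}
    for r in records:
        bin_start = (r["age"] // bin_width) * bin_width
        hist[bool(r["converted"])][bin_start] += 1
    return hist


def print_histograms(hist, bin_width=10):
    for converted in (True, False):
        print("converted" if converted else "not converted")
        counts = hist[converted]
        for bin_start in sorted(counts):
            label = f"{bin_start}-{bin_start + bin_width - 1}"
            print(f"  {label}: {'#' * counts[bin_start]}")


people = [
    {"age": 31, "converted": True}, {"age": 33, "converted": True},
    {"age": 34, "converted": True}, {"age": 24, "converted": True},
    {"age": 22, "converted": False}, {"age": 26, "converted": False},
    {"age": 27, "converted": False}, {"age": 38, "converted": False},
]
hist = age_histograms(people)
print_histograms(hist)
```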

Furthermore, many of the customers who convert were targeted via email marketing campaigns as opposed to social media. The social media campaigns make little difference. It’s also clear that customers in their 20s are being targeted mostly via social media. You verify these assertions visually through plots, as well as by using some statistical tests from your knowledge of inferential statistics.
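One common choice for checking whether the marketing method is associated with conversion is Pearson’s chi-square test of independence on a 2x2 table of counts. The sketch computes the statistic by hand with made-up counts and compares it with 3.841, the critical value for one degree of freedom at the 5% significance level.

```python
def chi_square_2x2(table):
    """Pearson chi-square statistic (no continuity correction) for a 2x2 table.

    Rows are campaigns (email, social); columns are (converted, not converted).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (table[i][j] - expected) ** 2 / expected
    return stat


CRITICAL_VALUE = 3.841  # chi-square, 1 degree of freedom, alpha = 0.05

observed = [[60, 40],   # email:  converted, not converted (made-up counts)
            [20, 80]]   # social: converted, not converted
stat = chi_square_2x2(observed)
print(f"chi2 = {stat:.2f}, significant at 5%: {stat > CRITICAL_VALUE}")
```

In day-to-day work you would more likely call `scipy.stats.chi2_contingency`, which by default applies Yates’ continuity correction to 2x2 tables, so its statistic will come out slightly smaller than this uncorrected one.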

The next day, you walk up to the VP of Sales at her desk and show her your preliminary findings. She’s intrigued and can’t wait to see more! In the next section, we’ll take the analysis deeper before showing you how to present your results to her.

Step 5 of 6: Analyze Your Data In Depth

In the previous section, we explored a dataset to find a set of factors that could help solve your original problem: predicting which customers at hotshot.io will buy the product. Now you have enough information to create a model to answer that question.

In order to create a predictive model, you must use techniques from machine learning. A machine learning model takes a set of data points, where each data point is expressed as a feature vector.

How do you come up with these feature vectors? In our EDA phase, we identified several factors that could be significant in predicting customer conversion, in particular, age and marketing method (email vs. social media). Notice an important difference between the two factors we’ve talked about: age is a numeric value whereas marketing method is a categorical value. As a data scientist, you know how to treat these values differently and how to correctly convert them to features.

Besides features, you also need labels. Labels tell the model which data points correspond to each category you want to predict. For this, you simply use the CONVERTED field in your data as a boolean label (converted or not converted). 1 indicates that the customer converted, and 0 indicates that they did not.
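Turning a customer record into a feature vector and a label could look like this. The sketch assumes hypothetical `AGE` and `CAMPAIGN` columns alongside the `CONVERTED` column from the text, and one-hot encodes the categorical campaign so the model does not read a false ordering into it.

```python
CAMPAIGNS = ["email", "social"]  # assumed set of marketing methods
LABELS = {"Yes": 1, "No": 0}


def to_feature_vector(record):
    """Keep numeric age as a number; one-hot encode the categorical campaign."""
    one_hot = [1.0 if record["CAMPAIGN"] == c else 0.0 for c in CAMPAIGNS]
    return [float(record["AGE"])] + one_hot


def to_label(record):
    """Map CONVERTED 'Yes'/'No' to 1/0; unexpected values raise KeyError."""
    return LABELS[record["CONVERTED"].strip()]


row = {"AGE": 31, "CAMPAIGN": "email", "CONVERTED": "Yes"}
print(to_feature_vector(row), to_label(row))
```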

Now that you have features and labels, you decide to use a simple machine learning classifier algorithm called logistic regression. A classifier is an instance of a broad category of machine learning techniques called ‘supervised learning,’ where the algorithm learns a model from labeled examples. In contrast, unsupervised learning techniques extract information from data without any labels supplied.

You choose logistic regression because it’s a technique that’s simple, fast and it gives you not only a binary prediction about whether a customer will convert or not, but also a probability of conversion. You apply the method to your data, tune the parameters, and soon, you’re jumping up and down at your computer.
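To make the idea concrete, here is a from-scratch sketch of logistic regression trained with batch gradient descent on the log-loss. It is not how you would typically do it at work (a library such as scikit-learn’s `LogisticRegression` is the usual choice), and the tiny dataset is invented: a single feature that is 1.0 if the customer was targeted by email.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(w, b, x):
    """Predicted probability of conversion for one feature vector."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def fit_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on the average log-loss; returns (weights, bias)."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            error = predict_proba(w, b, xi) - yi  # d(log-loss)/dz for this point
            for j in range(n_features):
                grad_w[j] += error * xi[j]
            grad_b += error
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


X = [[1.0], [1.0], [1.0], [0.0], [0.0], [0.0]]  # 1.0 = targeted by email
y = [1, 1, 0, 0, 0, 1]                           # 1 = converted
w, b = fit_logistic_regression(X, y)
p_email = predict_proba(w, b, [1.0])
p_social = predict_proba(w, b, [0.0])
print(f"email: {p_email:.2f}, social media: {p_social:.2f}")
```

With one binary feature, the fitted probabilities approach the observed conversion rate in each group (2/3 and 1/3 here). With a numeric feature such as age, you would standardize it first so that gradient descent converges smoothly.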

The VP of Sales is passing by, notices your excitement and asks, “So, do you have something for me?” And you burst out, “Yes, the predictive model I created with logistic regression has a TPR of 95% and an FPR of 0.5%!”
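The two numbers that puzzled the VP are simple ratios. TPR (true positive rate, also called recall) is the share of customers who actually converted that the model flagged; FPR (false positive rate) is the share of non-converters it wrongly flagged. A minimal sketch, with invented labels and probabilities and a 0.5 threshold chosen only for illustration:

```python
def tpr_fpr(y_true, y_pred):
    """True positive rate and false positive rate from 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp / (tp + fn), fp / (fp + tn)


probabilities = [0.9, 0.8, 0.7, 0.4, 0.1, 0.2, 0.3, 0.05, 0.15, 0.6]
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1 if p >= 0.5 else 0 for p in probabilities]
tpr, fpr = tpr_fpr(y_true, y_pred)
print(f"TPR = {tpr:.0%}, FPR = {fpr:.0%}")
```

In plain language, these toy numbers would read: ‘the model catches 3 out of 4 customers who will buy, and wrongly flags about 1 in 6 who won’t.’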

She looks at you as if you’ve sprouted a couple of extra heads and are talking to her in Martian.

You realize you haven’t finished the job. You need to do the last critical step, which is making sure that you communicate your results to your client in a way that is compelling and comprehensible for them.

Step 6 of 6: Visualize and Communicate Your Findings

You now have an amazing machine learning model that can predict, with high accuracy, how likely a prospective customer is to buy Hotshot’s product. But how do you convey its awesomeness to your client, the VP of Sales? How do you present your results to her in a form that she can use?

Communication is one of the most underrated skills a data scientist can have. While some of your colleagues (engineers, for example) can get away with being siloed in their technical bubbles, data scientists must be able to communicate with other teams and effectively translate their work for maximum impact. This set of skills is often called ‘data storytelling.’

So what kind of story can you tell based on the work you’ve done so far? Your story will include important conclusions that you can draw based on your exploratory analysis phase and the predictive model you’ve built. Crucially, you want the story to answer the questions that are most important to your client!

First and foremost, you take the data on the current prospects that the sales team is pursuing, run it through your model, and rank them in a spreadsheet in the order of most to least likely to convert. You provide the spreadsheet to your VP of Sales.
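Producing that ranked spreadsheet can be as simple as sorting by predicted probability and writing a CSV file that opens in any spreadsheet tool. In this sketch `score_fn` is a hypothetical stand-in for the trained model’s probability output, and the prospect IDs and scores are invented.

```python
import csv
import io


def write_ranked_prospects(prospects, score_fn, out):
    """Write prospects to CSV, ordered from most to least likely to convert."""
    ranked = sorted(prospects, key=score_fn, reverse=True)
    writer = csv.writer(out)
    writer.writerow(["rank", "prospect_id", "conversion_probability"])
    for rank, prospect in enumerate(ranked, start=1):
        writer.writerow([rank, prospect["id"], f"{score_fn(prospect):.2f}"])
    return ranked


prospects = [
    {"id": "P-001", "score": 0.12},
    {"id": "P-002", "score": 0.87},
    {"id": "P-003", "score": 0.45},
]
buffer = io.StringIO()
write_ranked_prospects(prospects, lambda p: p["score"], buffer)
print(buffer.getvalue())
```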

Next, you decide to highlight a couple of your most relevant results:

- Age: We’re selling a lot more to prospects in their early 30s, rather than those in their mid-20s. This is unexpected since our product targets people in their mid-20s!

- Marketing methods: We use social media marketing to target people in their 20s, but email campaigns to people in their 30s. This appears to be a significant factor behind the difference in conversion rates.

The following week, you meet with her and walk her through your conclusions. She’s ecstatic about the results you’ve given her! But then she asks you, “How can we best use these findings?”

Technically, your job as a data scientist is about analyzing the data and showing what’s happening. But as part of your role as the interpreter of data, you’ll often be called upon to make recommendations about how others should use your results.

In response to the VP’s question, you think for a moment and say, “Well, first, I’d recommend using the spreadsheet with prospect predictions for the next week or two to focus on the most likely targets and see how well that performs. That’ll make your sales team more productive right away, and tell me if the predictive model needs more fine-tuning.

“Second, we should also look into what’s happening with our marketing and figure out whether we should be targeting the mid-20s crowd with email campaigns, or making our social media campaigns more effective.”

The VP of Sales nods enthusiastically in agreement and immediately sets you up in a meeting with the VP of Marketing so you can demonstrate your results to him. Moreover, she asks you to send a couple of slides summarizing your results and recommendations so she can present them at the board meeting.

Boom! You’ve had an amazing impact on your first project!

You’ve successfully finished your first data science project at work, and you finally understand what your mentors have always said: data science is not just about the techniques, the algorithms or the math. It’s not just about the programming and implementation. It’s a true multi-disciplinary field, one that requires the practitioner to translate between technology and business concerns. This is what makes the career path of data science so challenging, and so valuable.

If you enjoyed reading this and are curious about a career in data science, check out some of our awesome programs and resources:

- Data Science Career Track: a self-guided, mentor-led bootcamp with a job guarantee.

- Data science interview guide: more than 100 questions and answers that will prepare you to nail a data science job interview.

Written by Raj Bandyopadhyay
