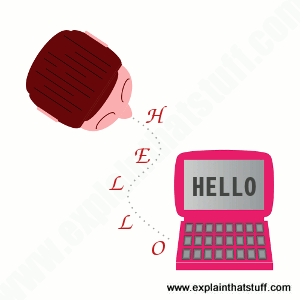

Speech recognition, also known as automatic speech recognition (ASR), computer speech recognition or speech-to-text, is a capability that enables a program to process human speech into a written format.

While speech recognition is commonly confused with voice recognition, speech recognition focuses on the translation of speech from a verbal format to a text one whereas voice recognition just seeks to identify an individual user’s voice.

IBM has had a prominent role within speech recognition since its inception, releasing “Shoebox” in 1962. This machine could recognize 16 different words, advancing the initial work done at Bell Labs in the 1950s. IBM continued to innovate over the years, launching the VoiceType Simply Speaking application in 1996. This speech recognition software had a 42,000-word vocabulary, supported English and Spanish, and included a spelling dictionary of 100,000 words.

While speech technology had a limited vocabulary in the early days, it is used across a wide range of industries today, such as automotive, technology, and healthcare. Its adoption has continued to accelerate in recent years due to advancements in deep learning and big data. Research shows that this market is expected to be worth USD 24.9 billion by 2025.


Many speech recognition applications and devices are available, but the more advanced solutions use AI and machine learning. They integrate grammar, syntax, structure, and composition of audio and voice signals to understand and process human speech. Ideally, they learn as they go, evolving responses with each interaction.

The best systems also allow organizations to customize and adapt the technology to their specific requirements, from language and nuances of speech to brand recognition. For example:

- Language weighting: Improve precision by weighting specific words that are spoken frequently (such as product names or industry jargon), beyond terms already in the base vocabulary.

- Speaker labeling: Output a transcription that cites or tags each speaker’s contributions to a multi-participant conversation.

- Acoustics training: Attend to the acoustical side of the business. Train the system to adapt to an acoustic environment (like the ambient noise in a call center) and speaker styles (like voice pitch, volume and pace).

- Profanity filtering: Use filters to identify certain words or phrases and sanitize speech output.
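Language weighting from the list above can be illustrated with a small rescoring step. This is only a sketch under stated assumptions: the recognizer is assumed to return several candidate transcripts with log-scores, and the function `rescore`, the product name "acmepay", and all scores are hypothetical, not part of any real product API.

```python
def rescore(hypotheses, boosts):
    """Return the best transcript after adding a bonus for each boosted phrase.

    hypotheses: list of (text, score) pairs; a higher score means more likely.
    boosts: dict mapping a lowercase phrase to a bonus added per occurrence.
    """
    def boosted_score(item):
        text, score = item
        lowered = text.lower()
        bonus = sum(weight * lowered.count(phrase) for phrase, weight in boosts.items())
        return score + bonus

    return max(hypotheses, key=boosted_score)[0]


# Hypothetical recognizer output: the generic phrase narrowly outscores the product name.
hypotheses = [("pay with acne pay", -4.1), ("pay with acmepay", -4.6)]

unweighted = rescore(hypotheses, {})
weighted = rescore(hypotheses, {"acmepay": 1.0})
```

Without any weighting the generic phrase wins; once the product name is weighted, the second candidate is preferred. Production systems usually apply such weights inside the decoder rather than after it.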

Meanwhile, speech recognition continues to advance. Companies such as IBM are making inroads in several areas to improve human and machine interaction.

The vagaries of human speech have made development challenging. Speech recognition is considered one of the most complex areas of computer science, involving linguistics, mathematics and statistics. Speech recognizers are made up of a few components, such as the speech input, feature extraction, feature vectors, a decoder, and a word output. The decoder leverages acoustic models, a pronunciation dictionary, and language models to determine the appropriate output.

Speech recognition technology is evaluated on its accuracy, typically measured as word error rate (WER), and on its speed. A number of factors can affect word error rate, such as pronunciation, accent, pitch, volume, and background noise. Reaching human parity, meaning an error rate on par with that of two humans speaking, has long been the goal of speech recognition systems. Research from Lippmann estimates the human word error rate to be around 4 percent, but it has been difficult to replicate the results from this paper.

Various algorithms and computation techniques are used to convert speech into text and improve the accuracy of transcription. Below are brief explanations of some of the most commonly used methods:

- Natural language processing (NLP): While NLP isn’t necessarily a specific algorithm used in speech recognition, it is the area of artificial intelligence that focuses on the interaction between humans and machines through language, both speech and text. Many mobile devices incorporate speech recognition into their systems to conduct voice search (for example, Siri) or to make texting more accessible.

- Hidden Markov models (HMM): Hidden Markov models build on the Markov chain model, which stipulates that the probability of the next state depends only on the current state, not on the states before it. While a Markov chain model is useful for observable events, such as text inputs, hidden Markov models allow us to incorporate hidden events, such as part-of-speech tags, into a probabilistic model. They are used as sequence models within speech recognition, assigning labels to each unit (words, syllables, sentences, and so on) in the sequence. These labels create a mapping with the provided input, allowing the model to determine the most appropriate label sequence.

- N-grams: This is the simplest type of language model (LM), which assigns probabilities to sentences or phrases. An N-gram is a sequence of N words. For example, “order the pizza” is a trigram or 3-gram and “please order the pizza” is a 4-gram. Grammar and the probability of certain word sequences are used to improve recognition and accuracy.

- Neural networks: Primarily leveraged for deep learning algorithms, neural networks process training data by mimicking the interconnectivity of the human brain through layers of nodes. Each node is made up of inputs, weights, a bias (or threshold) and an output. If that output value exceeds a given threshold, it “fires” or activates the node, passing data to the next layer in the network. Neural networks learn this mapping function through supervised learning, adjusting based on the loss function through the process of gradient descent. While neural networks tend to be more accurate and can accept more data, this comes at a performance efficiency cost as they tend to be slower to train compared to traditional language models.

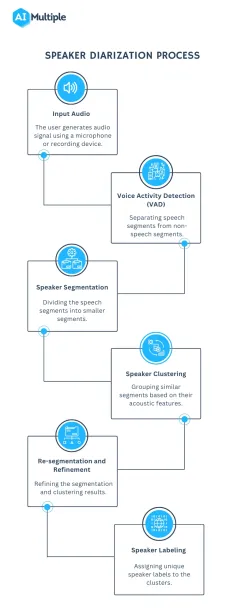

- Speaker diarization (SD): Speaker diarization algorithms identify and segment speech by speaker identity. This helps programs better distinguish individuals in a conversation and is frequently applied at call centers to distinguish customers from sales agents.
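To make the N-gram idea from the list above concrete, here is a minimal bigram (2-gram) language model sketch. The three-sentence corpus, the function names, and the use of add-one smoothing are illustrative assumptions; real language models are trained on far larger text collections and often use other smoothing schemes.

```python
from collections import Counter


def train_bigrams(sentences):
    """Count single words and adjacent word pairs, with sentence boundary markers."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        unigrams.update(words[:-1])            # every word that has a successor
        bigrams.update(zip(words, words[1:]))  # every adjacent pair
    return unigrams, bigrams


def sentence_prob(sentence, unigrams, bigrams, vocab_size):
    """Probability of a sentence as a product of add-one-smoothed bigram probabilities."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(words, words[1:]):
        prob *= (bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size)
    return prob


# Tiny illustrative corpus (an assumption, not real training data).
corpus = ["please order the pizza", "order the pizza", "order the salad"]
unigrams, bigrams = train_bigrams(corpus)
vocab_size = len({w for s in corpus for w in s.split()} | {"</s>"})

likely = sentence_prob("order the pizza", unigrams, bigrams, vocab_size)
unlikely = sentence_prob("pizza the order", unigrams, bigrams, vocab_size)
```

A recognizer can use scores like these to prefer a word sequence seen in training text over an acoustically similar but implausible one.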

A wide number of industries are utilizing different applications of speech technology today, helping businesses and consumers save time and even lives. Some examples include:

Automotive: Speech recognizers improve driver safety by enabling voice-activated navigation systems and search capabilities in car radios.

Technology: Virtual agents are increasingly integrated into our daily lives, particularly on our mobile devices. We use voice commands to access them through our smartphones, such as through Google Assistant or Apple’s Siri, for tasks such as voice search, or through our speakers, via Amazon’s Alexa or Microsoft’s Cortana, to play music. They’ll only continue to integrate into the everyday products that we use, fueling the “Internet of Things” movement.

Healthcare: Doctors and nurses leverage dictation applications to capture and log patient diagnoses and treatment notes.

Sales: Speech recognition technology has a couple of applications in sales. It can help a call center transcribe thousands of phone calls between customers and agents to identify common call patterns and issues. AI chatbots can also talk to people via a webpage, answering common queries and solving basic requests without needing to wait for a contact center agent to be available. In both instances, speech recognition systems help reduce time to resolution for consumer issues.

Security: As technology integrates into our daily lives, security protocols are an increasing priority. Voice-based authentication adds a viable level of security.


What is Speech Recognition?


Speech recognition, or speech-to-text, is the capacity of a machine or program to recognize spoken words and transform them into text. It is an important feature in applications such as home automation and artificial intelligence. This article covers what speech recognition is, how it works, and where it is used.

What is speech recognition in a Computer?

Speech recognition, also known as automatic speech recognition (ASR), computer speech recognition, or speech-to-text, focuses on enabling computers to understand and interpret human speech. Speech recognition involves converting spoken language into text or executing commands based on the recognized words. This technology relies on sophisticated algorithms and machine learning models to process and understand human speech in real time, despite variations in accent, pitch, speed, and slang.

Key Features of Speech Recognition

- Accuracy and Speed: Modern systems can process speech in real time or near real time, providing quick responses to user inputs.

- Natural Language Understanding (NLU): NLU enables systems to handle complex commands and queries, making technology more intuitive and user-friendly.

- Multi-Language Support: Support for multiple languages and dialects allows users from different linguistic backgrounds to interact with technology in their native language.

- Background Noise Handling: This feature is crucial for voice-activated systems used in public or outdoor settings.

Speech Recognition Algorithms

Speech recognition technology relies on complex algorithms to translate spoken language into text or commands that computers can understand and act upon. Here are the algorithms and approaches used in speech recognition:

1. Hidden Markov Models (HMM)

Hidden Markov Models have been the backbone of speech recognition for many years. They model speech as a sequence of states, with each state representing a phoneme (basic unit of sound) or group of phonemes. HMMs are used to estimate the probability of a given sequence of sounds, making it possible to determine the most likely words spoken. Although newer methods have surpassed HMMs in performance, they remain a fundamental concept in speech recognition, often used in combination with other techniques.
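One common way to find the most likely state sequence in an HMM is the Viterbi algorithm. The sketch below is a toy illustration only: the three phoneme states for the word "cat", the coarse acoustic symbols, and every probability are made-up assumptions, not values from a trained acoustic model.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most probable hidden state sequence for the observations."""
    # best[t][s] = (probability of the best path ending in state s at time t, previous state)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            column[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s][obs], p) for p in states
            )
        best.append(column)

    # Backtrack from the most probable final state.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for column in reversed(best[1:]):
        state = column[state][1]
        path.append(state)
    return path[::-1]


# Hypothetical toy model: phoneme states and coarse acoustic symbols.
states = ["k", "ae", "t"]
start_p = {"k": 0.8, "ae": 0.1, "t": 0.1}
trans_p = {
    "k": {"k": 0.3, "ae": 0.6, "t": 0.1},
    "ae": {"k": 0.05, "ae": 0.5, "t": 0.45},
    "t": {"k": 0.1, "ae": 0.1, "t": 0.8},
}
emit_p = {
    "k": {"burst": 0.7, "voiced": 0.1, "closure": 0.2},
    "ae": {"burst": 0.05, "voiced": 0.9, "closure": 0.05},
    "t": {"burst": 0.3, "voiced": 0.1, "closure": 0.6},
}

decoded = viterbi(["burst", "voiced", "voiced", "closure"], states, start_p, trans_p, emit_p)
```

With these numbers the decoder stays in the vowel state for both "voiced" frames, which shows how an HMM absorbs differences in how long each sound lasts.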

2. Natural language processing (NLP)

NLP is the area of artificial intelligence that focuses on the interaction between humans and machines through language, both speech and text. Many mobile devices incorporate speech recognition into their systems to conduct voice search (for example, Siri) or to make texting more accessible.

3. Deep Neural Networks (DNN)

DNNs have substantially improved the accuracy of speech recognition. These networks can learn hierarchical representations of data, making them particularly effective at modeling complex patterns like those found in human speech. DNNs are used both for acoustic modeling, to better understand the sound of speech, and for language modeling, to predict the likelihood of certain word sequences.
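The layered structure behind a DNN can be sketched as a plain forward pass. This is a minimal illustration under stated assumptions: the layer sizes, the sigmoid activation, and all weights are arbitrary and untrained, and the three input numbers stand in for acoustic features of one audio frame.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases):
    """One fully connected layer: each row of weights feeds one output node."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(features, layers):
    """Pass the features through each (weights, biases) layer in turn."""
    activations = features
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations


# Hypothetical network: 3 inputs -> 2 hidden nodes -> 1 output node.
layers = [
    ([[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]], [0.0, 0.1]),
    ([[1.2, -0.7]], [0.05]),
]
output = forward([0.9, 0.1, 0.4], layers)
```

Training would adjust the weights and biases by gradient descent on a loss function; that step is omitted here to keep the sketch short.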

4. End-to-End Deep Learning

More recently, the trend has shifted towards end-to-end deep learning models, which can directly map speech inputs to text outputs without the need for intermediate phonetic representations. These models, often based on advanced RNNs, Transformers, or attention mechanisms, can learn more complex patterns and dependencies in the speech signal.

What is Automatic Speech Recognition?

Automatic Speech Recognition (ASR) is a technology that enables computers to understand and transcribe spoken language into text. It works by analyzing audio input, such as spoken words, and converting it into written text, typically in real time. ASR systems use algorithms, machine learning techniques, and language models to recognize speech patterns and phonemes and to transcribe spoken words accurately. This technology is widely used in various applications, including virtual assistants, voice-controlled devices, dictation software, customer service automation, and language translation services.

What is Dragon speech recognition software?

Dragon speech recognition software is a program developed by Nuance Communications that allows users to dictate text and control their computer using voice commands. It transcribes spoken words into written text in real time, enabling hands-free operation of computers and devices. Dragon software is widely used for various purposes, including dictating documents, composing emails, navigating the web, and controlling applications. It also features advanced capabilities such as voice commands for editing and formatting text, as well as custom vocabulary and voice profiles for improved accuracy and personalization.

What is a normal speech recognition threshold?

The normal speech recognition threshold refers to the level of sound, typically measured in decibels (dB), at which a person can accurately recognize speech. In quiet environments, this threshold is typically around 0 to 10 dB for individuals with normal hearing. However, in noisy environments or for individuals with hearing impairments, the threshold may be higher, meaning they require a louder volume to accurately recognize speech.

Speech Recognition Use Cases

- Virtual Assistants: These are like digital helpers that understand what you say. They can do things like set reminders, search the internet, and control smart home devices, all without you having to touch anything. Examples include Siri, Alexa, and Google Assistant.

- Accessibility Tools: Speech recognition makes technology easier to use for people with disabilities. Features like voice control on phones and computers help them interact with devices more easily. There are also special apps for people with disabilities.

- Automotive Systems: In cars, you can use your voice to control things like navigation and music. This helps drivers stay focused and safe on the road. Examples include voice-activated navigation systems in cars.

- Healthcare: Doctors use speech recognition to quickly write down notes about patients, so they have more time to spend with them. There are also voice-controlled bots that help with patient care. For example, doctors use dictation tools to write down patient information quickly.

- Customer Service: Speech recognition is used to direct customer calls to the right place or provide automated help. This makes things run smoother and keeps customers happy. Examples include call centers that you can talk to and customer service bots.

- Education and E-Learning: Speech recognition helps people learn languages by giving them feedback on their pronunciation. It also transcribes lectures, making them easier to understand. Examples include language learning apps and lecture transcribing services.

- Security and Authentication: Voice recognition, combined with biometrics, keeps things secure by making sure it’s really you accessing your stuff. This is used in banking and for secure facilities. For example, some banks use your voice to make sure it’s really you logging in.

- Entertainment and Media: Voice recognition helps you find stuff to watch or listen to by just talking. This makes it easier to use things like TV and music services. There are also games you can play using just your voice.

Speech recognition is a powerful technology that lets computers understand and process human speech. It’s used everywhere, from asking your smartphone for directions to controlling your smart home devices with just your voice. This tech makes life easier by helping with tasks without needing to type or press buttons, making gadgets like virtual assistants more helpful. It’s also super important for making tech accessible to everyone, including those who might have a hard time using keyboards or screens. As we keep finding new ways to use speech recognition, it’s becoming a big part of our daily tech life, showing just how much we can do when we talk to our devices.

What is Speech Recognition? - FAQs

What are examples of speech recognition?

Note Taking/Writing: An example of speech recognition technology in use is speech-to-text platforms such as Speechmatics or Google’s speech-to-text engine. In addition, many voice assistants offer speech-to-text translation.

Is speech recognition secure?

Security concerns related to speech recognition primarily involve the privacy and protection of audio data collected and processed by speech recognition systems. Ensuring secure data transmission, storage, and processing is essential to address these concerns.

What is speech recognition in AI?

Speech recognition is the process of converting sound signals to text transcriptions. Steps involved in conversion of a sound wave to text transcription in a speech recognition system are: Recording: Audio is recorded using a voice recorder. Sampling: Continuous audio wave is converted to discrete values.
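The sampling step can be sketched in a few lines. The example below is illustrative and rests on assumptions: a 440 Hz sine tone stands in for recorded speech, and the 16 kHz sample rate and 16-bit depth are common choices rather than requirements. The function name `sample_and_quantize` is hypothetical.

```python
import math


def sample_and_quantize(signal, duration_s, sample_rate_hz, bit_depth=16):
    """Sample a continuous signal(t) with values in [-1, 1] and round each sample to an integer."""
    max_amplitude = 2 ** (bit_depth - 1) - 1
    n_samples = round(duration_s * sample_rate_hz)
    return [
        round(signal(n / sample_rate_hz) * max_amplitude)
        for n in range(n_samples)
    ]


def tone(t):
    """Stand-in for a continuous audio wave: a 440 Hz sine."""
    return math.sin(2 * math.pi * 440 * t)


# 10 milliseconds of audio at 16 kHz gives 160 discrete values.
samples = sample_and_quantize(tone, duration_s=0.01, sample_rate_hz=16000)
```

Each entry in `samples` is one discrete measurement of the wave, which is the form later stages such as feature extraction operate on.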

How accurate is speech recognition technology?

The accuracy of speech recognition technology can vary depending on factors such as the quality of audio input, language complexity, and the specific application or system being used. Advances in machine learning and deep learning have improved accuracy significantly in recent years.


Speech Recognition: Everything You Need to Know in 2024

Speech recognition, also known as automatic speech recognition (ASR), enables seamless communication between humans and machines. This technology empowers organizations to transform human speech into written text. Speech recognition technology can revolutionize many business applications, including customer service, healthcare, finance and sales.

In this comprehensive guide, we will explain speech recognition, exploring how it works, the algorithms involved, and the use cases of various industries.


What is speech recognition?

Speech recognition, also known as automatic speech recognition (ASR), speech-to-text (STT), and computer speech recognition, is a technology that enables a computer to recognize and convert spoken language into text.

Speech recognition technology uses AI and machine learning models to accurately identify and transcribe different accents, dialects, and speech patterns.

What are the features of speech recognition systems?

Speech recognition systems have several components that work together to understand and process human speech. Key features of effective speech recognition are:

- Audio preprocessing: After you have obtained the raw audio signal from an input device, you need to preprocess it to improve the quality of the speech input. The main goal of audio preprocessing is to capture relevant speech data by removing any unwanted artifacts and reducing noise.

- Feature extraction: This stage converts the preprocessed audio signal into a more informative representation. This makes raw audio data more manageable for machine learning models in speech recognition systems.

- Language model weighting: Language weighting gives more weight to certain words and phrases, such as product references, in audio and voice signals. This makes those keywords more likely to be recognized in subsequent speech.

- Acoustic modeling: It enables speech recognizers to capture and distinguish phonetic units within a speech signal. Acoustic models are trained on large datasets containing speech samples from a diverse set of speakers with different accents, speaking styles, and backgrounds.

- Speaker labeling: It enables speech recognition applications to determine the identities of multiple speakers in an audio recording. It assigns unique labels to each speaker in an audio recording, allowing the identification of which speaker was speaking at any given time.

- Profanity filtering: The process of removing offensive, inappropriate, or explicit words or phrases from audio data.
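The audio preprocessing and feature extraction steps listed above can be sketched with standard-library Python. The specific choices here are assumptions made for illustration: a pre-emphasis filter as the preprocessing step, 10 ms frames with 50% overlap at 16 kHz, and log energy per frame as a simple stand-in for richer features such as MFCCs.

```python
import math


def pre_emphasis(samples, coeff=0.97):
    """Boost high frequencies: y[n] = x[n] - coeff * x[n-1]. Expects a non-empty list."""
    return [samples[0]] + [
        samples[i] - coeff * samples[i - 1] for i in range(1, len(samples))
    ]


def frame_signal(samples, frame_len, hop_len):
    """Split samples into overlapping frames of frame_len, advancing hop_len each time."""
    return [
        samples[start:start + frame_len]
        for start in range(0, len(samples) - frame_len + 1, hop_len)
    ]


def log_energy(frame):
    """One number per frame; the small constant avoids log(0) on silence."""
    return math.log(sum(x * x for x in frame) + 1e-10)


# Illustrative input: 200 samples of silence followed by 200 samples of a 440 Hz tone.
signal = [0.0] * 200 + [math.sin(2 * math.pi * 440 * n / 16000) for n in range(200)]
frames = frame_signal(pre_emphasis(signal), frame_len=160, hop_len=80)
features = [log_energy(frame) for frame in frames]
```

The resulting list has one value per frame, and the frames that contain the tone score higher than the silent ones. A real system would compute a vector of features per frame rather than a single number.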

What are the different speech recognition algorithms?

Speech recognition uses various algorithms and computation techniques to convert spoken language into written language. The following are some of the most commonly used speech recognition methods:

- Hidden Markov Models (HMMs): A hidden Markov model is a statistical Markov model commonly used in traditional speech recognition systems. HMMs capture the relationship between acoustic features and model the temporal dynamics of speech signals.

- Estimate the probability of word sequences in the recognized text

- Convert colloquial expressions and abbreviations in a spoken language into a standard written form

- Map phonetic units obtained from acoustic models to their corresponding words in the target language.

- Speaker Diarization (SD): Speaker diarization, or speaker labeling, is the process of identifying and attributing speech segments to their respective speakers (Figure 1). It allows for speaker-specific voice recognition and the identification of individuals in a conversation.

Figure 1: A flowchart illustrating the speaker diarization process

- Dynamic Time Warping (DTW): Speech recognition algorithms use the Dynamic Time Warping (DTW) algorithm to find an optimal alignment between two sequences (Figure 2).

Figure 2: A speech recognizer using dynamic time warping to determine the optimal distance between elements

- Deep neural networks: Neural networks process and transform input data through layers of interconnected nodes, loosely modeled on the neurons of the human brain.

- Connectionist Temporal Classification (CTC): It is a training objective introduced by Alex Graves and colleagues in 2006. CTC is especially useful for sequence labeling tasks and end-to-end speech recognition systems. It allows the neural network to learn the alignment between input frames and output labels.
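Dynamic time warping from the list above can be written as a short dynamic program. This sketch assumes one-dimensional sequences and absolute difference as the local cost; in a speech recognizer the sequence elements would typically be feature vectors compared with a vector distance. The example sequences are made up.

```python
def dtw_distance(a, b):
    """Minimum total cost of aligning sequence a with sequence b."""
    inf = float("inf")
    # cost[i][j] = best alignment cost of the first i items of a with the first j items of b
    cost = [[inf] * (len(b) + 1) for _ in range(len(a) + 1)]
    cost[0][0] = 0.0
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances, b repeats its item
                cost[i][j - 1],      # b advances, a repeats its item
                cost[i - 1][j - 1],  # both advance
            )
    return cost[len(a)][len(b)]


template = [1, 2, 3, 2, 1]
slow_version = [1, 1, 2, 2, 3, 3, 2, 2, 1, 1]  # same shape, spoken at half speed
different = [3, 1, 3, 1, 3]

same_shape_cost = dtw_distance(template, slow_version)
different_cost = dtw_distance(template, different)
```

The slowed-down sequence aligns with the template at zero cost, while the differently shaped one does not, which is why DTW is useful for comparing utterances spoken at different speeds.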

Speech recognition vs voice recognition

Speech recognition is commonly confused with voice recognition, yet they refer to distinct concepts. Speech recognition converts spoken words into written text, focusing on identifying the words and sentences spoken by a user, regardless of the speaker’s identity.

On the other hand, voice recognition is concerned with recognizing or verifying a speaker’s voice, aiming to determine the identity of an unknown speaker rather than focusing on understanding the content of the speech.

What are the challenges of speech recognition with solutions?

While speech recognition technology offers many benefits, it still faces a number of challenges that need to be addressed. Some of the main limitations of speech recognition include:

Acoustic Challenges:

- Assume a speech recognition model has been primarily trained on American English accents. If a speaker with a strong Scottish accent uses the system, they may encounter difficulties due to pronunciation differences. For example, the word “water” is pronounced differently in the two accents. If the system is not familiar with the Scottish pronunciation, it may struggle to recognize the word.

Solution: Addressing these challenges is crucial to enhancing speech recognition applications’ accuracy. To overcome pronunciation variations, it is essential to expand the training data to include samples from speakers with diverse accents. This approach helps the system recognize and understand a broader range of speech patterns.

- For instance, you can use data augmentation techniques to reduce the impact of noise on audio data. Data augmentation helps train speech recognition models with noisy data to improve model accuracy in real-world environments.

Figure 3: Examples of a target sentence (“The clown had a funny face”) in the background noise of babble, car and rain.
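Noise-based data augmentation can be sketched as mixing a noise recording into clean speech at a chosen signal-to-noise ratio (SNR). The example below is a simplified assumption-laden illustration: a sine tone stands in for clean speech, Gaussian noise stands in for recordings such as babble, car, or rain, and the function names are hypothetical.

```python
import math
import random


def power(samples):
    """Average power of a signal."""
    return sum(x * x for x in samples) / len(samples)


def add_noise(clean, noise, snr_db):
    """Mix noise into clean so the result has the requested SNR in decibels."""
    target_noise_power = power(clean) / (10 ** (snr_db / 10))
    scale = math.sqrt(target_noise_power / power(noise))
    return [c + scale * n for c, n in zip(clean, noise)]


random.seed(0)  # fixed seed so the sketch is repeatable
clean = [math.sin(2 * math.pi * 440 * n / 16000) for n in range(1600)]
noise = [random.gauss(0.0, 1.0) for _ in clean]

noisy = add_noise(clean, noise, snr_db=10)

# Check the achieved SNR by recovering the added noise component.
residual = [y - c for y, c in zip(noisy, clean)]
measured_snr_db = 10 * math.log10(power(clean) / power(residual))
```

Generating several noisy copies of each training utterance at different SNR values is one way to expose a model to the conditions it will meet in real environments.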

Linguistic Challenges:

- Out-of-vocabulary words: Since the speech recognizer’s model has not been trained on out-of-vocabulary (OOV) words, it may misrecognize them as other words or fail to transcribe them.

Figure 4: An example of detecting OOV word

Solution: Word Error Rate (WER) is a common metric that is used to measure the accuracy of a speech recognition or machine translation system. The word error rate can be computed as:

Figure 5: Demonstrating how to calculate word error rate (WER)
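Word error rate is commonly defined as WER = (S + D + I) / N, where S, D, and I are the numbers of substituted, deleted, and inserted words and N is the number of words in the reference transcript. The sketch below computes it with a word-level edit distance. The function name and the example hypothesis are illustrative assumptions; the reference sentence is the one used in Figure 3.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # dist[i][j] = minimum edits to turn the first i reference words
    # into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = dist[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            deletion = dist[i - 1][j] + 1
            insertion = dist[i][j - 1] + 1
            dist[i][j] = min(substitution, deletion, insertion)
    return dist[len(ref)][len(hyp)] / len(ref)


# One deleted word ("a") and one substituted word ("face" -> "vase") out of six.
wer = word_error_rate("the clown had a funny face", "the clown had funny vase")
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.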

- Homophones: Homophones are words that are pronounced identically but have different meanings, such as “to,” “too,” and “two”. Solution: Semantic analysis allows speech recognition programs to select the appropriate homophone based on its intended meaning in a given context. Addressing homophones improves the ability of the speech recognition process to understand and transcribe spoken words accurately.

Technical/System Challenges:

- Data privacy and security: Speech recognition systems involve processing and storing sensitive and personal information, such as financial information. An unauthorized party could use the captured information, leading to privacy breaches.

Solution: You can encrypt sensitive and personal audio information transmitted between the user’s device and the speech recognition software. Another technique for addressing data privacy and security in speech recognition systems is data masking. Data masking algorithms mask and replace sensitive speech data with structurally identical but acoustically different data.

Figure 6: An example of how data masking works

- Limited training data: Limited training data directly impacts the performance of speech recognition software. With insufficient training data, the speech recognition model may struggle to generalize different accents or recognize less common words.

Solution: To improve the quality and quantity of training data, you can expand the existing dataset using data augmentation and synthetic data generation technologies.

13 speech recognition use cases and applications

In this section, we will explain how speech recognition revolutionizes the communication landscape across industries and changes the way businesses interact with machines.

Customer Service and Support

- Interactive Voice Response (IVR) systems: Interactive voice response (IVR) is a technology that automates the process of routing callers to the appropriate department. It understands customer queries and routes calls to the relevant departments. This reduces the call volume for contact centers and minimizes wait times. IVR systems address simple customer questions without human intervention by employing pre-recorded messages or text-to-speech technology. Automatic Speech Recognition (ASR) allows IVR systems to comprehend and respond to customer inquiries and complaints in real time.

- Customer support automation and chatbots: According to a survey, 78% of consumers interacted with a chatbot in 2022, but 80% of respondents said using chatbots increased their frustration level.

- Sentiment analysis and call monitoring: Speech recognition technology converts spoken content from a call into text. After speech-to-text processing, natural language processing (NLP) techniques analyze the text and assign a sentiment score to the conversation, such as positive, negative, or neutral. By integrating speech recognition with sentiment analysis, organizations can address issues early on and gain valuable insights into customer preferences.

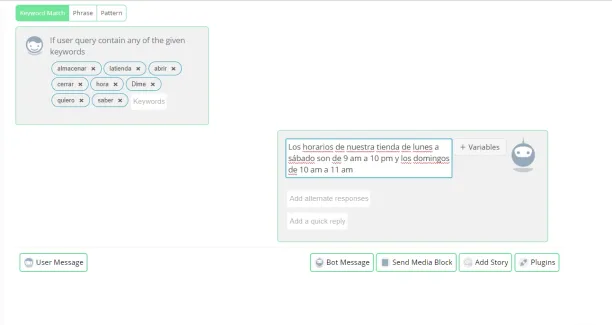

- Multilingual support: Speech recognition software can be trained in various languages to recognize and transcribe the language spoken by a user accurately. By integrating speech recognition technology into chatbots and Interactive Voice Response (IVR) systems, organizations can overcome language barriers and reach a global audience (Figure 7). Multilingual chatbots and IVR automatically detect the language spoken by a user and switch to the appropriate language model.

Figure 7: Showing how a multilingual chatbot recognizes words in another language

- Customer authentication with voice biometrics: Voice biometrics use speech recognition technologies to analyze a speaker’s voice and extract features such as accent and speed to verify their identity.

Sales and Marketing:

- Virtual sales assistants: Virtual sales assistants are AI-powered chatbots that assist customers with purchasing and communicate with them through voice interactions. Speech recognition allows virtual sales assistants to understand the intent behind spoken language and tailor their responses based on customer preferences.

- Transcription services: Speech recognition software records audio from sales calls and meetings and then converts the spoken words into written text using speech-to-text algorithms.

Automotive:

- Voice-activated controls: Voice-activated controls allow users to interact with devices and applications using voice commands. Drivers can operate features like climate control, phone calls, or navigation systems.

- Voice-assisted navigation: Voice-assisted navigation provides real-time voice-guided directions by utilizing the driver’s voice input for the destination. Drivers can request real-time traffic updates or search for nearby points of interest using voice commands without physical controls.

Healthcare:

- Recording the physician’s dictation

- Transcribing the audio recording into written text using speech recognition technology

- Editing the transcribed text for better accuracy and correcting errors as needed

- Formatting the document in accordance with legal and medical requirements.

- Virtual medical assistants: Virtual medical assistants (VMAs) use speech recognition, natural language processing, and machine learning algorithms to communicate with patients through voice or text. Speech recognition software allows VMAs to respond to voice commands, retrieve information from electronic health records (EHRs) and automate the medical transcription process.

- Electronic Health Records (EHR) integration: Healthcare professionals can use voice commands to navigate the EHR system, access patient data, and enter data into specific fields.

Technology:

- Virtual agents: Virtual agents utilize natural language processing (NLP) and speech recognition technologies to understand spoken language and convert it into text. Speech recognition enables virtual agents to process spoken language in real-time and respond promptly and accurately to user voice commands.


Speech Recognition

Speech recognition is the capability of an electronic device to understand spoken words. A microphone records a person's voice and the hardware converts the signal from analog sound waves to digital audio. The audio data is then processed by software, which interprets the sound as individual words.

A common type of speech recognition is "speech-to-text" or "dictation" software, such as Dragon NaturallySpeaking, which outputs text as you speak. While you can buy speech recognition programs, modern versions of the Macintosh and Windows operating systems include a built-in dictation feature. This capability allows you to dictate text as well as perform basic system commands.

In Windows, some programs support speech recognition automatically while others do not. You can enable speech recognition for all applications by selecting All Programs → Accessories → Ease of Access → Windows Speech Recognition and clicking "Enable dictation everywhere." In OS X, you can enable dictation in the "Dictation & Speech" system preference pane. Simply check the "On" button next to Dictation to turn on the speech-to-text capability. To start dictating in a supported program, select Edit → Start Dictation. You can also view and edit spoken commands in OS X by opening the "Accessibility" system preference pane and selecting "Speakable Items."

Another type of speech recognition is interactive speech, which is common on mobile devices, such as smartphones and tablets. Both iOS and Android devices allow you to speak to your phone and receive a verbal response. The iOS version is called "Siri," and serves as a personal assistant. You can ask Siri to save a reminder on your phone, tell you the weather forecast, give you directions, or answer many other questions. This type of speech recognition is considered a natural user interface (or NUI), since it responds naturally to your spoken input.

While many speech recognition systems only support English, some speech recognition software supports multiple languages. This requires a unique dictionary for each language and extra algorithms to understand and process different accents. Some dictation systems, such as Dragon NaturallySpeaking, can be trained to understand your voice and will adapt over time to understand you more accurately.


The definition of Speech Recognition on this page is an original definition written by the TechTerms.com team.


How Does Speech Recognition Work? (9 Simple Questions Answered)

- by Team Experts

- July 2, 2023 July 3, 2023

Discover the Surprising Science Behind Speech Recognition – Learn How It Works in 9 Simple Questions!

Speech recognition is the process of converting spoken words into written or machine-readable text. It is achieved through a combination of natural language processing, audio inputs, machine learning, and voice recognition. Speech recognition systems analyze speech patterns to identify phonemes, the basic units of sound in a language. Acoustic modeling is used to detect the phonemes in the audio and match them to words, and word prediction algorithms are used to determine the most likely words based on context analysis. Finally, the words are converted into text.

What is Natural Language Processing and How Does it Relate to Speech Recognition?


Natural language processing (NLP) is a branch of artificial intelligence that deals with the analysis and understanding of human language. It is used to enable machines to interpret and process natural language, such as speech, text, and other forms of communication. NLP is used in a variety of applications, including automated speech recognition, voice recognition technology, language models, text analysis, text-to-speech synthesis, natural language understanding, natural language generation, semantic analysis, syntactic analysis, pragmatic analysis, sentiment analysis, and speech-to-text conversion. NLP is closely related to speech recognition, as it is used to interpret and understand spoken language in order to convert it into text.

How Do Audio Inputs Enable Speech Recognition?

Audio inputs enable speech recognition by providing digital audio recordings of spoken words. These recordings are then analyzed to extract acoustic features of speech, such as pitch, frequency, and amplitude. Feature extraction techniques, such as spectral analysis of sound waves, are used to identify and classify phonemes. Natural language processing (NLP) and machine learning models are then used to interpret the audio recordings and recognize speech. Neural networks and deep learning architectures are used to further improve the accuracy of recognition. Finally, Automatic Speech Recognition (ASR) systems convert the speech into text, with noise reduction techniques helping to improve accuracy.

What Role Does Machine Learning Play in Speech Recognition?

Machine learning plays a key role in speech recognition, as it is used to develop algorithms that can interpret and understand spoken language. Pattern recognition techniques, neural networks, acoustic modeling, language models, statistical methods, feature extraction, hidden Markov models (HMMs), and deep learning architectures are all used to build machine learning models that can accurately interpret spoken language. Natural language understanding is also used to further refine the accuracy of these models.

How Does Voice Recognition Work?

Voice recognition works by using machine learning algorithms to analyze the acoustic properties of a person’s voice. This includes using voice recognition software to identify phonemes, speaker identification, text normalization, language models, noise cancellation techniques, prosody analysis, contextual understanding, artificial neural networks, voice biometrics, speech synthesis, and deep learning. The data collected is then used to create a voice profile that can be used to identify the speaker.

What Are the Different Types of Speech Patterns Used for Speech Recognition?

The features, models, and techniques used to capture speech patterns for speech recognition include prosody, contextual speech recognition, speaker adaptation, language models, hidden Markov models (HMMs), neural networks, Gaussian mixture models (GMMs), discrete wavelet transform (DWT), Mel-frequency cepstral coefficients (MFCCs), vector quantization (VQ), dynamic time warping (DTW), continuous density hidden Markov models (CDHMMs), support vector machines (SVMs), and deep learning.

How Is Acoustic Modeling Used for Accurate Phoneme Detection in Speech Recognition Systems?

Acoustic modeling supports accurate phoneme detection by using statistical models such as Hidden Markov Models (HMMs) and Gaussian Mixture Models (GMMs). Feature extraction techniques such as Mel-frequency cepstral coefficients (MFCCs) are used to extract relevant features from the audio signal. Context-dependent models are also used to improve accuracy. The models are trained with techniques such as maximum likelihood estimation, and the Viterbi algorithm is used to find the most likely sequence of model states for a given stretch of audio. In recent years, neural networks and deep learning algorithms have been used to improve accuracy, as well as natural language processing techniques.

What Is Word Prediction and Why Is It Important for Effective Speech Recognition Technology?

Word prediction is a feature of natural language processing and artificial intelligence that uses machine learning algorithms to predict the next word or phrase a user is likely to type or say. Automated speech recognition systems use it to improve accuracy, because knowing which words are likely to come next helps the system choose between similar-sounding candidates. Word prediction also enhances the user experience by reducing the time and effort users spend typing or speaking, providing faster response times and increased efficiency in data entry tasks. Additionally, it reduces errors due to incorrect spelling or grammar and improves machines' ability to interpret human speech.

How Can Context Analysis Improve Accuracy of Automatic Speech Recognition Systems?

Context analysis can improve the accuracy of automatic speech recognition systems by utilizing language models, acoustic models, statistical methods, and machine learning algorithms to analyze the semantic, syntactic, and pragmatic aspects of speech. This analysis can include word-level, sentence-level, and discourse-level context, as well as utterance understanding and ambiguity resolution. By taking into account the context of the speech, the accuracy of the automatic speech recognition system can be improved.

Common Mistakes and Misconceptions

- Misconception: Speech recognition requires a person to speak in a robotic, monotone voice. Correct Viewpoint: Speech recognition technology is designed to recognize natural speech patterns and does not require users to speak in any particular way.

- Misconception: Speech recognition can understand all languages equally well. Correct Viewpoint: Different speech recognition systems are designed for different languages and dialects, so the accuracy of the system will vary depending on which language it is programmed for.

- Misconception: Speech recognition only works with pre-programmed commands or phrases. Correct Viewpoint: Modern speech recognition systems are capable of understanding conversational language as well as specific commands or phrases that have been programmed into them by developers.

Automatic Speech Recognition

What is Automatic Speech Recognition?

Automatic Speech Recognition (ASR), also known as speech-to-text, is the process by which a computer or electronic device converts human speech into written text. This technology is a subset of computational linguistics that deals with the interpretation and translation of spoken language into text by computers. It enables humans to speak commands into devices, dictate documents, and interact with computer-based systems through natural language.

How Does Automatic Speech Recognition Work?

ASR systems typically involve several processing stages to accurately transcribe speech. The process begins with the acoustic signal being captured by a microphone. This signal is then digitized and processed to filter out noise and improve clarity.

The core of ASR technology involves two main models:

- Acoustic Model: This model is trained to recognize the basic units of sound in speech, known as phonemes. It maps segments of audio to these phonemes and considers variations in pronunciation, accent, and intonation.

- Language Model: This model is used to understand the context and semantics of the spoken words. It predicts the sequence of words that form a sentence, based on the likelihood of word sequences in the language. This helps in distinguishing between words that sound similar but have different meanings.

Once the audio has been processed through these models, the ASR system generates a transcription of the spoken words. Advanced systems may also include additional components, such as a dialogue manager in interactive voice response systems, or a natural language understanding module to interpret the intent behind the words.
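A minimal sketch of how the two models can work together, assuming hypothetical log-probability tables rather than trained models: the acoustic model cannot separate the homophones "their" and "there", so the language model score for the preceding word decides. The names `ACOUSTIC_LOGP`, `LM_LOGP`, and `best_word`, and all the numbers, are made up for illustration.

```python
import math

# Hypothetical acoustic scores, log P(audio | word). Homophones score the same.
ACOUSTIC_LOGP = {
    "their": math.log(0.45),
    "there": math.log(0.45),
    "bear": math.log(0.10),
}

# Hypothetical language-model scores, log P(word | previous word).
LM_LOGP = {
    ("over", "there"): math.log(0.30),
    ("over", "their"): math.log(0.02),
    ("over", "bear"): math.log(0.001),
}

def best_word(previous_word, candidates, lm_weight=1.0):
    """Pick the candidate with the highest combined acoustic + language score."""
    def score(word):
        return ACOUSTIC_LOGP[word] + lm_weight * LM_LOGP[(previous_word, word)]
    return max(candidates, key=score)

print(best_word("over", ["their", "there", "bear"]))  # → there
```

Adding log-probabilities is the same as multiplying probabilities; the `lm_weight` parameter is an assumed knob for balancing the two models.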

Challenges in Automatic Speech Recognition

Despite significant advancements, ASR systems face numerous challenges that can affect their accuracy and performance:

- Variability in Speech: Differences in accents, dialects, and individual speaker characteristics can make it difficult for ASR systems to accurately recognize words.

- Background Noise: Noisy environments can interfere with the system's ability to capture clear audio, leading to transcription errors.

- Homophones and Context: Words that sound the same but have different meanings can be challenging for ASR systems to differentiate without understanding the context.

- Continuous Speech: Unlike written text, spoken language does not have clear boundaries between words, making it challenging to segment speech accurately.

- Colloquialisms and Slang: Everyday speech often includes informal language and slang, which may not be present in the training data used for ASR models.

Applications of Automatic Speech Recognition

ASR technology has a wide range of applications across various industries:

- Virtual Assistants: Devices like smartphones and smart speakers use ASR to enable voice commands and provide user assistance.

- Accessibility: ASR helps individuals with disabilities by enabling voice control over devices and converting speech to text for those who are deaf or hard of hearing.

- Transcription Services: ASR is used to automatically transcribe meetings, lectures, and interviews, saving time and effort in documentation.

- Customer Service: Call centers use ASR to route calls and handle inquiries through interactive voice response systems.

- Healthcare: ASR enables hands-free documentation for medical professionals, allowing them to dictate notes and records.

The Future of Automatic Speech Recognition

The future of ASR is promising, with ongoing research focused on improving accuracy, reducing latency, and understanding natural language more effectively. As machine learning algorithms become more sophisticated, we can expect ASR systems to become more reliable and integrated into an even broader array of applications, making human-computer interaction more seamless and natural.

Automatic Speech Recognition technology has revolutionized the way we interact with machines, making it possible to communicate with computers using our most natural form of communication: speech. While challenges remain, the continuous improvements in ASR systems are opening up new possibilities for innovation and convenience in our daily lives.


Speech recognition software

by Chris Woodford . Last updated: August 17, 2023.

It's just as well people can understand speech. Imagine if you were like a computer: friends would have to "talk" to you by prodding away at a plastic keyboard connected to your brain by a long, curly wire. If you wanted to say "hello" to someone, you'd have to reach out, chatter your fingers over their keyboard, and wait for their eyes to light up; they'd have to do the same to you. Conversations would be a long, slow, elaborate nightmare: a silent dance of fingers on plastic; strange, abstract, and remote. We'd never put up with such clumsiness as humans, so why do we talk to our computers this way?

Scientists have long dreamed of building machines that can chatter and listen just like humans. But although computerized speech recognition has been around for decades, and is now built into most smartphones and PCs, few of us actually use it. Why? Possibly because we never even bother to try it out, working on the assumption that computers could never pull off a trick so complex as understanding the human voice. It's certainly true that speech recognition is a complex problem that's challenged some of the world's best computer scientists, mathematicians, and linguists. How well are they doing at cracking the problem? Will we all be chatting to our PCs one day soon? Let's take a closer look and find out!

Photo: A court reporter dictates notes into a laptop with a noise-cancelling microphone and speech-recognition software. Photo by Micha Pierce courtesy of US Marine Corps and DVIDS.

What is speech?

Language sets people far above our creeping, crawling animal friends. While the more intelligent creatures, such as dogs and dolphins, certainly know how to communicate with sounds, only humans enjoy the rich complexity of language. With just a couple of dozen letters, we can build any number of words (most dictionaries contain tens of thousands) and express an infinite number of thoughts.

Photo: Speech recognition has been popping up all over the place for quite a few years now. Even my old iPod Touch (dating from around 2012) has a built-in "voice control" program that lets you pick out music just by saying "Play albums by U2," or whatever band you're in the mood for.

When we speak, our voices generate little sound packets called phones (which correspond to the sounds of letters or groups of letters in words); so speaking the word cat produces phones that correspond to the sounds "c," "a," and "t." Although you've probably never heard of these kinds of phones before, you might well be familiar with the related concept of phonemes: simply speaking, phonemes are the basic LEGO™ blocks of sound that all words are built from. Although the difference between phones and phonemes is complex and can be very confusing, this is one "quick-and-dirty" way to remember it: phones are actual bits of sound that we speak (real, concrete things), whereas phonemes are ideal bits of sound we store (in some sense) in our minds (abstract, theoretical sound fragments that are never actually spoken).

Computers and computer models can juggle around with phonemes, but analyzing real speech always involves processing phones. When we listen to speech, our ears catch phones flying through the air and our leaping brains flip them back into words, sentences, thoughts, and ideas, so quickly that we often know what people are going to say before the words have fully fled from their mouths. Instant, easy, and quite dazzling, our amazing brains make this seem like a magic trick. And it's perhaps because listening seems so easy to us that we think computers (in many ways even more amazing than brains) should be able to hear, recognize, and decode spoken words as well. If only it were that simple!

Why is speech so hard to handle?

The trouble is, listening is much harder than it looks (or sounds): there are all sorts of different problems going on at the same time... When someone speaks to you in the street, there's the sheer difficulty of separating their words (what scientists would call the acoustic signal) from the background noise, especially in something like a cocktail party, where the "noise" is similar speech from other conversations. When people talk quickly, and run all their words together in a long stream, how do we know exactly when one word ends and the next one begins? (Did they just say "dancing and smile" or "dance, sing, and smile"?) There's the problem of how everyone's voice is a little bit different, and the way our voices change from moment to moment. How do our brains figure out that a word like "bird" means exactly the same thing when it's trilled by a ten-year-old girl or boomed by her forty-year-old father? What about words like "red" and "read" that sound identical but mean totally different things (homophones, as they're called)? How does our brain know which word the speaker means? What about sentences that are misheard to mean radically different things? There's the age-old military example of "send reinforcements, we're going to advance" being misheard for "send three and fourpence, we're going to a dance", and all of us can probably think of song lyrics we've hilariously misunderstood the same way (I always chuckle when I hear Kate Bush singing about "the cattle burning over your shoulder"). On top of all that stuff, there are issues like syntax (the grammatical structure of language) and semantics (the meaning of words) and how they help our brain decode the words we hear, as we hear them. Weighing up all these factors, it's easy to see that recognizing and understanding spoken words in real time (as people speak to us) is an astonishing demonstration of blistering brainpower.

It shouldn't surprise or disappoint us that computers struggle to pull off the same dazzling tricks as our brains; it's quite amazing that they get anywhere near!

Photo: Using a headset microphone like this makes a huge difference to the accuracy of speech recognition: it reduces background sound, making it much easier for the computer to separate the signal (the all-important words you're speaking) from the noise (everything else).

How do computers recognize speech?

Speech recognition is one of the most complex areas of computer science, partly because it's interdisciplinary: it involves a mixture of extremely complex linguistics, mathematics, and computing itself. If you read through some of the technical and scientific papers that have been published in this area (a few are listed in the references below), you may well struggle to make sense of the complexity. My objective is to give a rough flavor of how computers recognize speech, so, without any apology whatsoever, I'm going to simplify hugely and miss out most of the details.

Broadly speaking, there are four different approaches a computer can take if it wants to turn spoken sounds into written words:

1: Simple pattern matching

Ironically, the simplest kind of speech recognition isn't really anything of the sort. You'll have encountered it if you've ever phoned an automated call center and been answered by a computerized switchboard. Utility companies often have systems like this that you can use to leave meter readings, and banks sometimes use them to automate basic services like balance inquiries, statement orders, checkbook requests, and so on. You simply dial a number, wait for a recorded voice to answer, then either key in or speak your account number before pressing more keys (or speaking again) to select what you want to do. Crucially, all you ever get to do is choose one option from a very short list, so the computer at the other end never has to do anything as complex as parsing a sentence (splitting a string of spoken sound into separate words and figuring out their structure), much less trying to understand it; it needs no knowledge of syntax (language structure) or semantics (meaning). In other words, systems like this aren't really recognizing speech at all: they simply have to be able to distinguish between ten different sound patterns (the spoken words zero through nine) either using the bleeping sounds of a Touch-Tone phone keypad (technically called DTMF) or the spoken sounds of your voice.

From a computational point of view, there's not a huge difference between recognizing phone tones and spoken numbers "zero", "one," "two," and so on: in each case, the system could solve the problem by comparing an entire chunk of sound to similar stored patterns in its memory. It's true that there can be quite a bit of variability in how different people say "three" or "four" (they'll speak in a different tone, more or less slowly, with different amounts of background noise) but the ten numbers are sufficiently different from one another for this not to present a huge computational challenge. And if the system can't figure out what you're saying, it's easy enough for the call to be transferred automatically to a human operator.
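To make the idea of comparing a whole chunk of sound with stored patterns concrete, here is a minimal sketch that uses dynamic time warping (DTW), a classic way to compare sequences spoken at different speeds. It is not necessarily what any particular switchboard uses, and the one-dimensional "feature" values in `TEMPLATES` are invented for illustration; real systems would compare sequences of spectral feature vectors.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D feature sequences."""
    inf = float("inf")
    # cost[i][j] = cheapest alignment of the first i items of a with the first j of b
    cost = [[inf] * (len(b) + 1) for _ in range(len(a) + 1)]
    cost[0][0] = 0.0
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(
                cost[i - 1][j],      # stretch b: stay on the same item of b
                cost[i][j - 1],      # stretch a: stay on the same item of a
                cost[i - 1][j - 1],  # advance both sequences
            )
    return cost[len(a)][len(b)]

# Hypothetical stored patterns, one per word in a tiny vocabulary.
TEMPLATES = {
    "one": [1.0, 3.0, 4.0, 2.0],
    "two": [5.0, 5.0, 1.0, 0.5],
    "three": [2.0, 6.0, 6.0, 3.0],
}

def recognize(utterance):
    """Return the vocabulary word whose template is closest to the utterance."""
    return min(TEMPLATES, key=lambda word: dtw_distance(utterance, TEMPLATES[word]))

# "one" spoken slowly: roughly the same shape, stretched to nearly twice the length.
slow_one = [1.0, 1.1, 3.0, 3.1, 4.0, 3.9, 2.0]
print(recognize(slow_one))  # → one
```

With only a handful of well-separated templates, picking the nearest one is reliable, which matches the point that a ten-word vocabulary is not a huge computational challenge.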

Photo: Voice-activated dialing on cellphones is little more than simple pattern matching. You simply train the phone to recognize the spoken version of a name in your phonebook. When you say a name, the phone doesn't do any particularly sophisticated analysis; it simply compares the sound pattern with ones you've stored previously and picks the best match. No big deal—which explains why even an old phone like this 2001 Motorola could do it.

2: Pattern and feature analysis

Automated switchboard systems generally work very reliably because they have such tiny vocabularies: usually, just ten words representing the ten basic digits. The vocabulary that a speech system works with is sometimes called its domain. Early speech systems were often optimized to work within very specific domains, such as transcribing doctor's notes, computer programming commands, or legal jargon, which made the speech recognition problem far simpler (because the vocabulary was smaller and technical terms were explicitly trained beforehand). Much like humans, modern speech recognition programs are so good that they work in any domain and can recognize tens of thousands of different words. How do they do it?

Most of us have relatively large vocabularies, made from hundreds of common words ("a," "the," "but" and so on, which we hear many times each day) and thousands of less common ones (like "discombobulate," "crepuscular," "balderdash," or whatever, which we might not hear from one year to the next). Theoretically, you could train a speech recognition system to understand any number of different words, just like an automated switchboard: all you'd need to do would be to get your speaker to read each word three or four times into a microphone, until the computer generalized the sound pattern into something it could recognize reliably.

The trouble with this approach is that it's hugely inefficient. Why learn to recognize every word in the dictionary when all those words are built from the same basic set of sounds? No-one wants to buy an off-the-shelf computer dictation system only to find they have to read three or four times through a dictionary, training it up to recognize every possible word they might ever speak, before they can do anything useful. So what's the alternative? How do humans do it? We don't need to have seen every Ford, Chevrolet, and Cadillac ever manufactured to recognize that an unknown, four-wheeled vehicle is a car: having seen many examples of cars throughout our lives, our brains somehow store what's called a prototype (the generalized concept of a car, something with four wheels, big enough to carry two to four passengers, that creeps down a road) and we figure out that an object we've never seen before is a car by comparing it with the prototype. In much the same way, we don't need to have heard every person on Earth read every word in the dictionary before we can understand what they're saying; somehow we can recognize words by analyzing the key features (or components) of the sounds we hear. Speech recognition systems take the same approach.

The recognition process

Practical speech recognition systems start by listening to a chunk of sound (technically called an utterance) read through a microphone. The first step involves digitizing the sound (so the up-and-down, analog wiggle of the sound waves is turned into digital format, a string of numbers) by a piece of hardware (or software) called an analog-to-digital (A/D) converter (for a basic introduction, see our article on analog versus digital technology). The digital data is converted into a spectrogram (a graph showing how the component frequencies of the sound change in intensity over time) using a mathematical technique called a Fast Fourier Transform (FFT), then broken into a series of overlapping chunks called acoustic frames, each one typically lasting 1/25 to 1/50 of a second. These are digitally processed in various ways and analyzed to find the components of speech they contain. Assuming we've separated the utterance into words, and identified the key features of each one, all we have to do is compare what we have with a phonetic dictionary (a list of known words and the sound fragments or features from which they're made) and we can identify what's probably been said. (Probably is always the word in speech recognition: no-one but the speaker can ever know exactly what was said.)
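The framing and frequency-analysis steps can be sketched in a few lines. This is a simplified illustration, not a production front end: it cuts the signal into overlapping frames first and then analyzes each one (a common ordering), it uses a naive discrete Fourier transform (an FFT computes the same values much faster), and the 8,000 Hz sample rate, 50% overlap, and synthetic 500 Hz test tone are all assumptions.

```python
import cmath
import math

SAMPLE_RATE = 8000  # samples per second (assumed for this sketch)

def frames(samples, frame_len, hop):
    """Split the digitized signal into overlapping frames."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]

def magnitude_spectrum(frame):
    """Naive DFT magnitudes for the non-negative frequency bins of one frame."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]

def dominant_frequency(frame):
    """Frequency (in Hz) of the strongest component in the frame."""
    spectrum = magnitude_spectrum(frame)
    peak_bin = max(range(len(spectrum)), key=spectrum.__getitem__)
    return peak_bin * SAMPLE_RATE / len(frame)

# Synthetic "utterance": 0.1 seconds of a pure 500 Hz tone.
signal = [math.sin(2 * math.pi * 500 * t / SAMPLE_RATE) for t in range(800)]

frame_len = SAMPLE_RATE // 25  # each frame lasts 1/25 of a second (320 samples)
hop = frame_len // 2           # consecutive frames overlap by half

# One spectrum per frame: stacked side by side, these columns form a spectrogram.
spectrogram = [magnitude_spectrum(f) for f in frames(signal, frame_len, hop)]
print(len(spectrogram), dominant_frequency(signal[:frame_len]))
```

Real speech would show several strong frequencies per frame that change over time, which is the information later stages use to identify phones.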

Seeing speech

In theory, since spoken languages are built from only a few dozen phonemes (English uses about 46, while Spanish has only about 24), you could recognize any possible spoken utterance just by learning to pick out phones (or similar key features of spoken language such as formants, which are prominent frequencies that can be used to help identify vowels). Instead of having to recognize the sounds of (maybe) 40,000 words, you'd only need to recognize the 46 basic component sounds (or however many there are in your language), though you'd still need a large phonetic dictionary listing the phonemes that make up each word. This method of analyzing spoken words by identifying phones or phonemes is often called the beads-on-a-string model: a chunk of unknown speech (the string) is recognized by breaking it into phones or bits of phones (the beads); figure out the phones and you can figure out the words.
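The beads-on-a-string idea can be sketched as a lookup against a phonetic dictionary. The tiny dictionary and the simplified phone symbols below are made up for illustration; the function lists every way a string of phones can be split into known words.

```python
# Hypothetical phonetic dictionary: phone sequence -> word.
PHONETIC_DICTIONARY = {
    ("ay",): "I",
    ("ay", "s"): "ice",
    ("k", "r", "iy", "m"): "cream",
    ("s", "k", "r", "iy", "m"): "scream",
    ("k", "ae", "t"): "cat",
}

def segmentations(phones):
    """Return every way to split the phone sequence into dictionary words."""
    if not phones:
        return [[]]  # one way to segment nothing: the empty list of words
    results = []
    for end in range(1, len(phones) + 1):
        word = PHONETIC_DICTIONARY.get(tuple(phones[:end]))
        if word is not None:
            # Keep this word and segment whatever phones are left over.
            for rest in segmentations(phones[end:]):
                results.append([word] + rest)
    return results

print(segmentations(["k", "ae", "t"]))
# → [['cat']]
print(segmentations(["ay", "s", "k", "r", "iy", "m"]))
# → [['I', 'scream'], ['ice', 'cream']]
```

The second example shows the limit of the approach: the same beads can spell more than one string of words, so phones alone cannot always settle what was said.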

Most speech recognition programs get better as you use them because they learn as they go along using feedback you give them, either deliberately (by correcting mistakes) or by default (if you don't correct any mistakes, you're effectively saying everything was recognized perfectly—which is also feedback). If you've ever used a program like one of the Dragon dictation systems, you'll be familiar with the way you have to correct your errors straight away to ensure the program continues to work with high accuracy. If you don't correct mistakes, the program assumes it's recognized everything correctly, which means similar mistakes are even more likely to happen next time. If you force the system to go back and tell it which words it should have chosen, it will associate those corrected words with the sounds it heard—and do much better next time.

Screenshot: With speech dictation programs like Dragon NaturallySpeaking, shown here, it's important to go back and correct your mistakes if you want your words to be recognized accurately in future.

3: Statistical analysis

In practice, recognizing speech is much more complex than simply identifying phones and comparing them to stored patterns, and for a whole variety of reasons:

Speech is extremely variable: different people speak in different ways (even though we're all saying the same words and, theoretically, they're all built from a standard set of phonemes).

You don't always pronounce a certain word in exactly the same way; even if you did, the way you spoke a word (or even part of a word) might vary depending on the sounds or words that came before or after.

As a speaker's vocabulary grows, the number of similar-sounding words grows too: the digits zero through nine all sound different when you speak them, but "zero" sounds like "hero," "one" sounds like "none," "two" could mean "two," "to," or "too"... and so on. So recognizing numbers is a tougher job for voice dictation on a PC, with a general 50,000-word vocabulary, than for an automated switchboard with a very specific, 10-word vocabulary containing only the ten digits.

The more speakers a system has to recognize, the more variability it's going to encounter and the bigger the likelihood of making mistakes.

For something like an off-the-shelf voice dictation program (one that listens to your voice and types your words on the screen), simple pattern recognition is clearly going to be a bit hit and miss. The basic principle of recognizing speech by identifying its component parts certainly holds good, but we can do an even better job of it by taking into account how language really works. In other words, we need to use what's called a language model.

When people speak, they're not simply muttering a series of random sounds. Every word you utter depends on the words that come before or after. For example, unless you're a contrary kind of poet, the word "example" is much more likely to follow words like "for," "an," "better," "good", "bad," and so on than words like "octopus," "table," or even the word "example" itself. Rules of grammar make it unlikely that a noun like "table" will be spoken before another noun ("table example" isn't something we say) while—in English at least—adjectives ("red," "good," "clear") come before nouns and not after them ("good example" is far more probable than "example good"). If a computer is trying to figure out some spoken text and gets as far as hearing "here is a ******* example," it can be reasonably confident that ******* is an adjective and not a noun. So it can use the rules of grammar to exclude nouns like "table" and the probability of pairs like "good example" and "bad example" to make an intelligent guess. If it's already identified a "g" sound instead of a "b", that's an added clue.
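One simple way to capture "which words tend to follow which" is to count pairs of neighboring words (bigrams) in some training text. The Python sketch below does that with a tiny, made-up corpus, then uses the counts to guess the missing word in "a ******* example." The corpus, the function names, and the way the two probabilities are multiplied together are all assumptions chosen to keep the example short; real language models are trained on vastly more text and are considerably more sophisticated.

```python
from collections import Counter, defaultdict

# Tiny made-up training text; a real language model sees vastly more.
corpus = (
    "here is a good example . "
    "here is a bad example . "
    "this is a good example of a table . "
    "for example the table is good ."
).split()

# Count how often each word follows each other word.
following = defaultdict(Counter)
for previous, current in zip(corpus, corpus[1:]):
    following[previous][current] += 1


def bigram_probability(previous, current):
    """Estimate the probability that `current` comes right after `previous`."""
    total = sum(following[previous].values())
    return following[previous][current] / total if total else 0.0


def best_guess(word_before, word_after, candidates):
    """Pick the candidate that fits best between two known words."""
    return max(candidates,
               key=lambda w: bigram_probability(word_before, w)
               * bigram_probability(w, word_after))


# "here is a ******* example": which word is most likely to fill the gap?
print(best_guess("a", "example", ["good", "bad", "table"]))
```

In this toy corpus, "table" is never followed by "example," so its score is zero, while "good example" turns up more often than "bad example." A recognizer would combine scores like these with the acoustic evidence (such as having heard a "g" rather than a "b").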

Virtually all modern speech recognition systems also use a bit of complex statistical hocus-pocus to help figure out what's being said. The probability of one phone following another, the probability of bits of silence occurring in between phones, and the likelihood of different words following other words are all factored in. Ultimately, the system builds what's called a hidden Markov model (HMM) of each speech segment, which is the computer's best guess at which beads are sitting on the string, based on all the things it's managed to glean from the sound spectrum and all the bits and pieces of phones and silence that it might reasonably contain. It's called a Markov model (or Markov chain), for Russian mathematician Andrey Markov, because it's a sequence of different things (bits of phones, words, or whatever) that change from one to the next with a certain probability. Confusingly, it's referred to as a "hidden" Markov model even though it's worked out in great detail and anything but hidden! "Hidden," in this case, simply means the contents of the model aren't observed directly but figured out indirectly from the sound spectrum. From the computer's viewpoint, speech recognition is always a probabilistic "best guess" and the right answer can never be known until the speaker either accepts or corrects the words that have been recognized. (Markov models can be processed with an extra bit of computer jiggery-pokery called the Viterbi algorithm, but that's beyond the scope of this article.)
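For the curious, here is a rough sketch of what the Viterbi algorithm does, written in Python. The model is invented purely for illustration: two hidden states standing for the phones "s" and "iy," two crude kinds of observed sound ("hiss" and "tone"), and probabilities picked by hand. A real recognizer would have far more states, and its probabilities would be learned from recorded speech.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most probable sequence of hidden states for the
    observations, together with the probability of that sequence."""
    # best[t][s] = (probability of the likeliest path that ends in state s
    #               at time t, the state that came before s on that path)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None)
             for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            # Try arriving at s from every possible previous state p.
            column[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s][obs], p)
                for p in states)
        best.append(column)

    # Start from the likeliest final state and follow the links backward.
    last = max(states, key=lambda s: best[-1][s][0])
    probability = best[-1][last][0]
    path = [last]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    path.reverse()
    return path, probability


# Hypothetical two-phone model with hand-picked probabilities.
states = ("s", "iy")
start_p = {"s": 0.6, "iy": 0.4}
trans_p = {"s": {"s": 0.7, "iy": 0.3},
           "iy": {"s": 0.4, "iy": 0.6}}
emit_p = {"s": {"hiss": 0.8, "tone": 0.2},
          "iy": {"hiss": 0.1, "tone": 0.9}}

path, probability = viterbi(["hiss", "hiss", "tone"],
                            states, start_p, trans_p, emit_p)
print(path, probability)
```

The computer never observes the phones directly, only the hisses and tones, which is exactly the sense in which the states are "hidden." The algorithm's answer is the single most probable explanation, not a certainty.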

4: Artificial neural networks

HMMs have dominated speech recognition since the 1970s—for the simple reason that they work so well. But they're by no means the only technique we can use for recognizing speech. There's no reason to believe that the brain itself uses anything like a hidden Markov model. It's much more likely that we figure out what's being said using dense layers of brain cells that excite and suppress one another in intricate, interlinked ways according to the input signals they receive from our cochleas (the parts of our inner ear that recognize different sound frequencies).

Back in the 1980s, computer scientists developed "connectionist" computer models that could mimic how the brain learns to recognize patterns, which became known as artificial neural networks (sometimes called ANNs). A few speech recognition scientists explored using neural networks, but the dominance and effectiveness of HMMs relegated alternative approaches like this to the sidelines. More recently, scientists have explored using ANNs and HMMs side by side and found they give significantly higher accuracy than HMMs used alone.

Artwork: Neural networks are hugely simplified, computerized versions of the brain (or a tiny part of it) that have inputs (where you feed in information), outputs (where results appear), and hidden units (connecting the two). If you train them with enough examples, they learn by gradually adjusting the strength of the connections between the different layers of units. Once a neural network is fully trained, if you show it an unknown example, it will attempt to recognize what it is based on the examples it's seen before.
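The idea of learning by "gradually adjusting the strength of the connections" can be sketched in a few lines of Python. This is a deliberately tiny, assumed example, nothing like a real speech recognizer: a network with two inputs, three hidden units, and one output learns a simple rule (output 1 if either input is 1). The class name, the network size, the learning rate, and the training task are all choices made just for illustration.

```python
import math
import random


def sigmoid(x):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))


class TinyNetwork:
    """Two inputs, a few hidden units, and one output unit."""

    def __init__(self, n_hidden=3, seed=0):
        rng = random.Random(seed)
        # Each hidden unit has two input weights plus a bias.
        self.hidden = [[rng.uniform(-1, 1) for _ in range(3)]
                       for _ in range(n_hidden)]
        # The output unit has one weight per hidden unit plus a bias (last).
        self.output = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]

    def forward(self, x):
        """Feed inputs through the network; return hidden and output values."""
        h = [sigmoid(w[0] * x[0] + w[1] * x[1] + w[2]) for w in self.hidden]
        y = sigmoid(sum(w * hi for w, hi in zip(self.output, h))
                    + self.output[-1])
        return h, y

    def train_step(self, x, target, rate=0.5):
        """Nudge every connection slightly to reduce the error on one example."""
        h, y = self.forward(x)
        delta_out = (y - target) * y * (1 - y)
        for j, w in enumerate(self.hidden):
            delta_h = delta_out * self.output[j] * h[j] * (1 - h[j])
            w[0] -= rate * delta_h * x[0]
            w[1] -= rate * delta_h * x[1]
            w[2] -= rate * delta_h
            self.output[j] -= rate * delta_out * h[j]
        self.output[-1] -= rate * delta_out


def mean_squared_error(net, examples):
    return sum((net.forward(x)[1] - t) ** 2 for x, t in examples) / len(examples)


# Training examples: the output should be 1 if either input is 1.
examples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

net = TinyNetwork()
error_before = mean_squared_error(net, examples)
for _ in range(2000):  # show the network the same examples many times
    for x, t in examples:
        net.train_step(x, t)
error_after = mean_squared_error(net, examples)
print(error_before, error_after)
```

The network starts with random connection strengths and makes poor guesses; after seeing the examples repeatedly, its error shrinks. Networks used for speech work on the same principle but with many more units and far more training examples.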

Speech recognition: a summary

Artwork: A summary of some of the key stages of speech recognition and the computational processes happening behind the scenes.

What can we use speech recognition for?

We've already touched on a few of the more common applications of speech recognition, including automated telephone switchboards and computerized voice dictation systems. But there are plenty more examples where those came from.

Many of us (whether we know it or not) have cellphones with voice recognition built into them. Back in the late 1990s, state-of-the-art mobile phones offered voice-activated dialing, where, in effect, you recorded a sound snippet for each entry in your phonebook (such as the spoken word "Home") that the phone could then recognize when you spoke it in future. A few years later, systems like SpinVox became popular, helping mobile phone users make sense of voice messages by converting them automatically into text (although a sneaky BBC investigation eventually claimed that some of its state-of-the-art automated speech recognition was actually being done by humans in developing countries!).

Today's smartphones make speech recognition even more of a feature. Apple's Siri, Google Assistant ("Hey Google..."), and Microsoft's Cortana are smartphone "personal assistant apps" that listen to what you say, figure out what you mean, then attempt to do what you ask, whether it's looking up a phone number or booking a table at a local restaurant. They work by linking speech recognition to complex natural language processing (NLP) systems, so they can figure out not just what you say, but what you actually mean, and what you really want to happen as a consequence. Pressed for time and hurtling down the street, mobile users theoretically find this kind of system a boon, at least if you believe the hype in the TV advertisements that Google and Microsoft have been running to promote their systems. (Google quietly incorporated speech recognition into its search engine some time ago, so you can Google just by talking to your smartphone, if you really want to.) If you have one of the latest voice-powered electronic assistants, such as Amazon's Echo/Alexa or Google Home, you don't need a computer of any kind (desktop, tablet, or smartphone): you just ask questions or give simple commands in your natural language to a thing that resembles a loudspeaker... and it answers straight back.

Screenshot: When I asked Google "does speech recognition really work," it took it three attempts to recognize the question correctly.

Will speech recognition ever take off?

I'm a huge fan of speech recognition. After suffering with repetitive strain injury on and off for some time, I've been using computer dictation to write quite a lot of my stuff for about 15 years, and it's been amazing to see the improvements in off-the-shelf voice dictation over that time. The early Dragon NaturallySpeaking system I used on a Windows 95 laptop was fairly reliable, but I had to speak relatively slowly, pausing slightly between each word or word group, giving a horribly staccato style that tended to interrupt my train of thought. This slow, tedious one-word-at-a-time approach ("can – you – tell – what – I – am – saying – to – you") went by the name discrete speech recognition. A few years later, things had improved so much that virtually all the off-the-shelf programs like Dragon were offering continuous speech recognition, which meant I could speak at normal speed, in a normal way, and still be assured of very accurate word recognition. When you can speak normally to your computer, at a normal talking pace, voice dictation programs offer another advantage: they give clumsy, self-conscious writers a much more attractive, conversational style: "write like you speak" (always a good tip for writers) is easy to put into practice when you speak all your words as you write them!

Despite the technological advances, I still generally prefer to write with a keyboard and mouse. Ironically, I'm writing this article that way now. Why? Partly because it's what I'm used to. I often write highly technical stuff with a complex vocabulary that I know will defeat the best efforts of all those hidden Markov models and neural networks battling away inside my PC. It's easier to type "hidden Markov model" than to mutter those words somewhat hesitantly, watch "hiccup half a puddle" pop up on screen and then have to make corrections.

Screenshot: You can always add more words to a speech recognition program. Here, I've decided to train the Microsoft Windows built-in speech recognition engine to spot the words 'hidden Markov model.'

Mobile revolution?

You might think mobile devices, with their slippery touchscreens, would benefit enormously from speech recognition: no-one really wants to type an essay with two thumbs on a pop-up QWERTY keyboard. Ironically, mobile devices are heavily used by younger, tech-savvy kids who still prefer typing and pawing at screens to speaking out loud. Why? All sorts of reasons, from sheer familiarity (it's quick to type once you're used to it, and faster than fixing a computer's goofed-up guesses) to privacy and consideration for others (many of us use our mobile phones in public places and we don't want our thoughts wide open to scrutiny or howls of derision), and the sheer difficulty of speaking clearly and being clearly understood in noisy environments. Recently, I was walking down a street and overheard a small garden party where the sounds of happy laughter, drinking, and discreet background music were punctuated by a sudden grunt of "Alexa play Copacabana by Barry Manilow", which silenced the conversation entirely and seemed jarringly out of place. Speech recognition has never been so indiscreet.

What you're doing with your computer also makes a difference. If you've ever used speech recognition on a PC, you'll know that writing something like an essay (dictating hundreds or thousands of words of ordinary text) is a whole lot easier than editing it afterwards (where you laboriously try to select words or sentences and move them up or down so many lines with awkward cut and paste commands). And trying to open and close windows, start programs, or navigate around a computer screen by voice alone is clumsy, tedious, error-prone, and slow. It's far easier just to click your mouse or swipe your finger.

Photo: Here I'm using Google's Live Transcribe app to dictate the last paragraph of this article. As you can see, apart from the punctuation, the transcription is flawless, without any training at all. This is the fastest and most accurate speech recognition software I've ever used. It's mainly designed as an accessibility aid for deaf and hard of hearing people, but it can be used for dictation too.