
45 survey questions to understand student engagement in online learning.

In our work with K-12 school districts during the COVID-19 pandemic, countless district leaders and school administrators have told us how challenging it's been to build student engagement outside of the traditional classroom.

Not only that, but the challenges associated with online learning may have the largest impact on students from marginalized communities. Research suggests that some groups of students experience more difficulty with academic performance and engagement when course content is delivered online vs. face-to-face.

As you look to improve the online learning experience for students, take a moment to understand how students, caregivers, and staff are currently experiencing virtual learning. Where are the areas for improvement? How supported do students feel in their online coursework? Do teachers feel equipped to support students through synchronous and asynchronous facilitation? How confident do families feel in supporting their children at home?

Below, we've compiled a bank of 45 questions to understand student engagement in online learning. Interested in running a student, family, or staff engagement survey? Learn about Panorama's survey analytics platform for K-12 school districts.


45 Questions to Understand Student Engagement in Online Learning

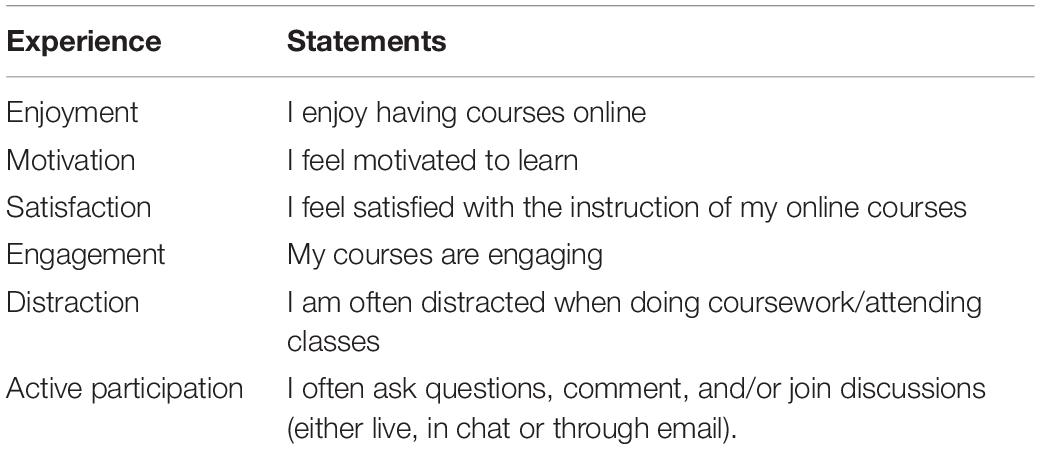

For Students (Grades 3-5 and 6-12):

1. How excited are you about going to your classes?

2. How often do you get so focused on activities in your classes that you lose track of time?

3. In your classes, how eager are you to participate?

4. When you are not in school, how often do you talk about ideas from your classes?

5. Overall, how interested are you in your classes?

6. What are the most engaging activities that happen in this class?

7. Which aspects of class have you found least engaging?

8. If you were teaching class, what is the one thing you would do to make it more engaging for all students?

9. How do you know when you are feeling engaged in class?

10. What projects/assignments/activities do you find most engaging in this class?

11. What does this teacher do to make this class engaging?

12. How much effort are you putting into your classes right now?

13. How difficult or easy is it for you to try hard on your schoolwork right now?

14. How difficult or easy is it for you to stay focused on your schoolwork right now?

15. If you have missed in-person school recently, why did you miss school?

16. If you have missed online classes recently, why did you miss class?

17. How would you like to be learning right now?

18. How happy are you with the amount of time you spend speaking with your teacher?

19. How difficult or easy is it to use the distance learning technology (computer, tablet, video calls, learning applications, etc.)?

20. What do you like about school right now?

21. What do you not like about school right now?

22. When you have online schoolwork, how often do you have the technology (laptop, tablet, computer, etc.) you need?

23. How difficult or easy is it for you to connect to the internet to access your schoolwork?

24. What has been the hardest part about completing your schoolwork?

25. How happy are you with how much time you spend in specials or enrichment (art, music, PE, etc.)?

26. Are you getting all the help you need with your schoolwork right now?

27. How sure are you that you can do well in school right now?

28. Are there adults at your school you can go to for help if you need it right now?

29. If you are participating in distance learning, how often do you hear from your teachers individually?

For Families, Parents, and Caregivers:

30. How satisfied are you with the way learning is structured at your child’s school right now?

31. Do you think your child should spend less or more time learning in person at school right now?

32. How difficult or easy is it for your child to use the distance learning tools (video calls, learning applications, etc.)?

33. How confident are you in your ability to support your child's education during distance learning?

34. How confident are you that teachers can motivate students to learn in the current model?

35. What is working well with your child’s education that you would like to see continued?

36. What is challenging with your child’s education that you would like to see improved?

37. Does your child have their own tablet, laptop, or computer available for schoolwork when they need it?

38. What best describes your child's typical internet access?

39. Is there anything else you would like us to know about your family’s needs at this time?

For Teachers and Staff:

40. In the past week, how many of your students regularly participated in your virtual classes?

41. In the past week, how engaged have students been in your virtual classes?

42. In the past week, how engaged have students been in your in-person classes?

43. Is there anything else you would like to share about student engagement at this time?

44. What is working well with the current learning model that you would like to see continued?

45. What is challenging about the current learning model that you would like to see improved?

Elevate Student, Family, and Staff Voices This Year With Panorama

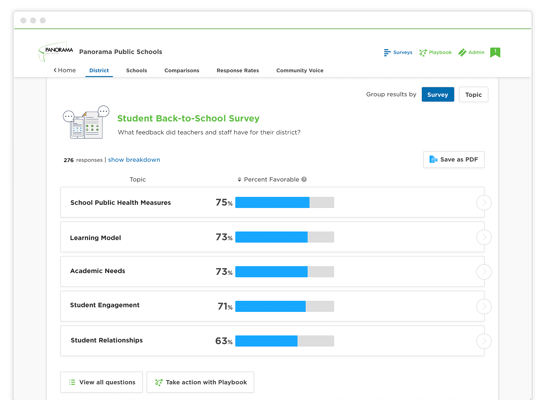

Schools and districts can use Panorama’s leading survey administration and analytics platform to quickly gather and take action on information from students, families, teachers, and staff. The questions are applicable to all types of K-12 school settings and grade levels, as well as to communities serving students from a range of socioeconomic backgrounds.

In the Panorama platform, educators can view and disaggregate results by topic, question, demographic group, grade level, school, and more to inform priority areas and action plans. Districts may use the data to improve teaching and learning models, build stronger academic and social-emotional support systems, improve stakeholder communication, and inform staff professional development.
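To make disaggregation concrete, here is a minimal Python sketch that computes a "percent favorable" score for one Likert item, broken out by grade band. The responses are invented, the 1-to-5 coding and the rule that a 4 or 5 counts as favorable are assumptions for illustration, and `percent_favorable` is a hypothetical helper; this is not how the Panorama platform computes its reports.

```python
from collections import defaultdict

# Hypothetical responses to one item, e.g. "How excited are you about going
# to your classes?", coded 1 (not at all) to 5 (extremely). Invented data.
responses = [
    {"grade_band": "3-5", "score": 5},
    {"grade_band": "3-5", "score": 4},
    {"grade_band": "3-5", "score": 2},
    {"grade_band": "6-12", "score": 3},
    {"grade_band": "6-12", "score": 4},
    {"grade_band": "6-12", "score": 1},
    {"grade_band": "6-12", "score": 2},
]

def percent_favorable(rows, group_key, threshold=4):
    """Share of responses at or above `threshold`, broken out by `group_key`.

    The threshold of 4 (a "top two box" rule) is an assumption, not a standard.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for row in rows:
        group = row[group_key]
        totals[group] += 1
        if row["score"] >= threshold:
            favorable[group] += 1
    # Percent favorable per group, rounded to one decimal place.
    return {g: round(100 * favorable[g] / totals[g], 1) for g in totals}

print(percent_favorable(responses, "grade_band"))  # {'3-5': 66.7, '6-12': 25.0}
```

Passing a different `group_key` (for example, a school or demographic field added to each record) would disaggregate the same item along another dimension.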

To learn more about Panorama's survey platform, get in touch with our team.



What we know about online learning and the homework gap amid the pandemic

America’s K-12 students are returning to classrooms this fall after 18 months of virtual learning at home during the COVID-19 pandemic. Some students who lacked the home internet connectivity needed to finish schoolwork during this time (an experience often called the “homework gap”) may continue to feel the effects this school year.

Here is what Pew Research Center surveys found about the students most likely to be affected by the homework gap and their experiences learning from home.

Children across the United States are returning to physical classrooms this fall after 18 months at home, raising questions about how digital disparities at home will affect the existing homework gap between certain groups of students.

Methodology for each Pew Research Center poll can be found at the links in the post.

With the exception of the 2018 survey, everyone who took part in the surveys is a member of the Center’s American Trends Panel (ATP), an online survey panel that is recruited through national, random sampling of residential addresses. This way, nearly all U.S. adults have a chance of selection. The survey is weighted to be representative of the U.S. adult population by gender, race, ethnicity, partisan affiliation, education and other categories. Read more about the ATP’s methodology.

The 2018 data on U.S. teens comes from a Center poll of 743 U.S. teens ages 13 to 17 conducted March 7 to April 10, 2018, using the NORC AmeriSpeak panel. AmeriSpeak is a nationally representative, probability-based panel of the U.S. household population. Randomly selected U.S. households are sampled with a known, nonzero probability of selection from the NORC National Frame, and then contacted by U.S. mail, telephone or face-to-face interviewers. Read more details about the NORC AmeriSpeak panel methodology.
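Survey weighting can be illustrated with a simplified, one-variable post-stratification, in which each group's weight is its population share divided by its share of the sample. This Python sketch uses invented shares and a single hypothetical variable (education); the Center's actual weighting adjusts for several variables at once and is described in the ATP methodology, so treat this only as an illustration of the idea.

```python
# Minimal one-variable post-stratification sketch. All numbers are invented.
# Hypothetical population shares the weighted sample should match.
population_share = {"no_degree": 0.65, "college_degree": 0.35}
# Hypothetical achieved sample in which college graduates are over-represented.
sample_counts = {"no_degree": 500, "college_degree": 500}

def poststratification_weights(pop_share, counts):
    """Weight for each group = population share / sample share."""
    n = sum(counts.values())
    return {g: pop_share[g] / (counts[g] / n) for g in counts}

def weighted_share(rates, counts, weights):
    """Weighted proportion answering 'yes', given each group's sample rate."""
    num = sum(rates[g] * counts[g] * weights[g] for g in counts)
    den = sum(counts[g] * weights[g] for g in counts)
    return num / den

weights = poststratification_weights(population_share, sample_counts)

# Hypothetical share of each group answering "yes" to some question.
yes_rate = {"no_degree": 0.40, "college_degree": 0.60}
unweighted = weighted_share(yes_rate, sample_counts, {g: 1.0 for g in sample_counts})
weighted = weighted_share(yes_rate, sample_counts, weights)
print(round(unweighted, 2), round(weighted, 2))  # 0.5 0.47
```

Down-weighting the over-represented group pulls the estimate toward the answers of the under-represented group, which is the basic purpose of weighting to population benchmarks.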

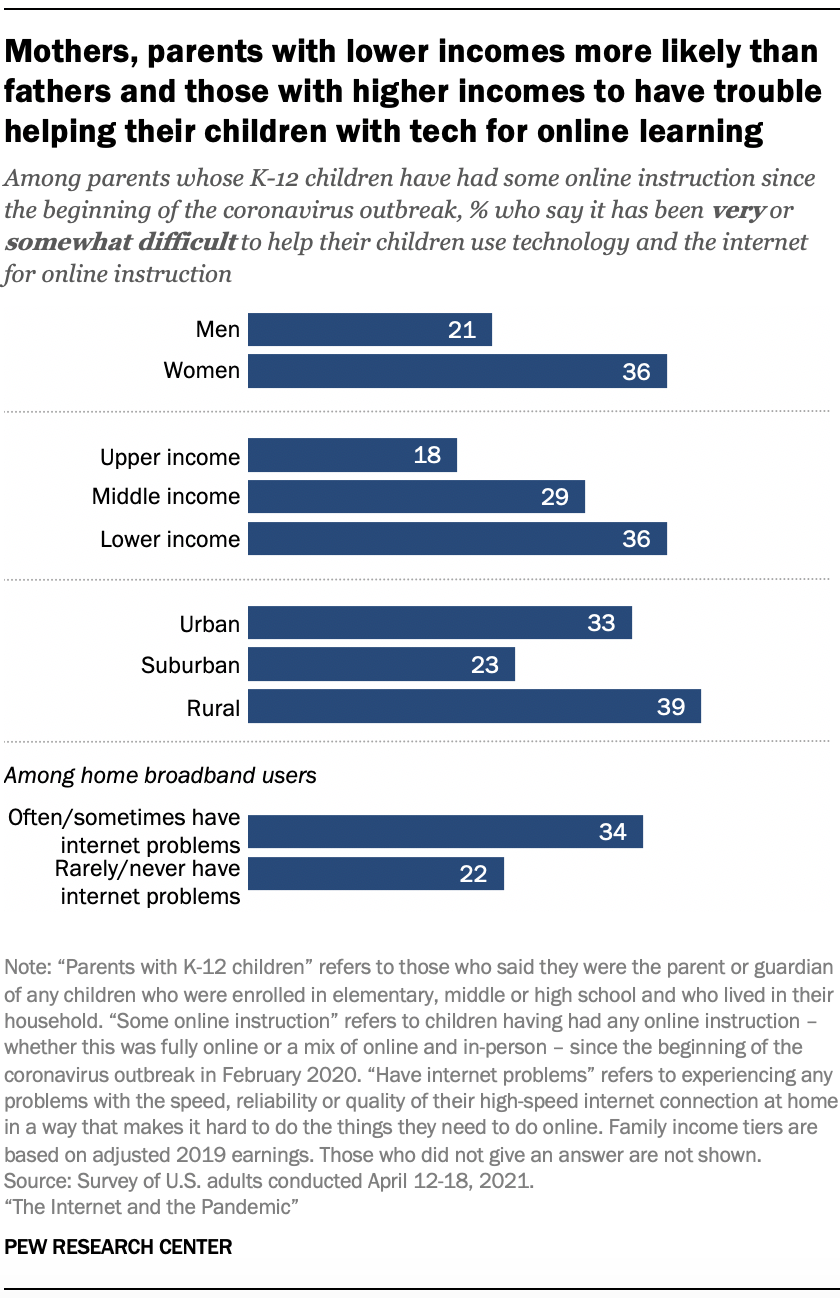

Around nine-in-ten U.S. parents with K-12 children at home (93%) said their children have had some online instruction since the coronavirus outbreak began in February 2020, and 30% of these parents said it has been very or somewhat difficult for them to help their children use technology or the internet as an educational tool, according to an April 2021 Pew Research Center survey.

Gaps existed for certain groups of parents. For example, parents with lower and middle incomes (36% and 29%, respectively) were more likely to report that this was very or somewhat difficult, compared with just 18% of parents with higher incomes.

This challenge was also prevalent for parents in certain types of communities – 39% of rural residents and 33% of urban residents said they have had at least some difficulty, compared with 23% of suburban residents.

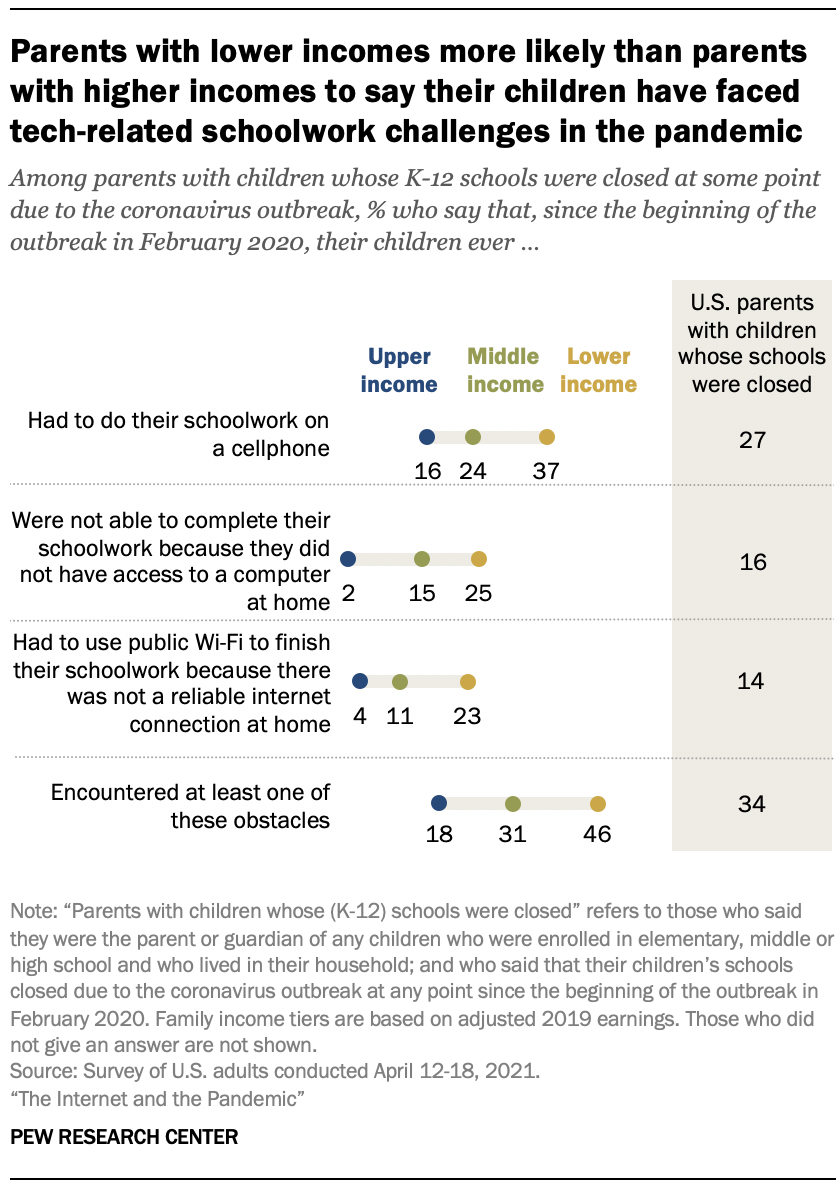

Around a third of parents with children whose schools were closed during the pandemic (34%) said that their child encountered at least one technology-related obstacle to completing their schoolwork during that time. In the April 2021 survey, the Center asked parents of K-12 children whose schools had closed at some point about whether their children had faced three technology-related obstacles. Around a quarter of parents (27%) said their children had to do schoolwork on a cellphone, 16% said their child was unable to complete schoolwork because of a lack of computer access at home, and another 14% said their child had to use public Wi-Fi to finish schoolwork because there was no reliable connection at home.

Parents with lower incomes whose children’s schools closed amid COVID-19 were more likely to say their children faced technology-related obstacles while learning from home. Nearly half of these parents (46%) said their child faced at least one of the three obstacles to learning asked about in the survey, compared with 31% of parents with midrange incomes and 18% of parents with higher incomes.

Of the three obstacles asked about in the survey, parents with lower incomes were most likely to say that their child had to do their schoolwork on a cellphone (37%). About a quarter said their child was unable to complete their schoolwork because they did not have computer access at home (25%), or that they had to use public Wi-Fi because they did not have a reliable internet connection at home (23%).
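The “at least one obstacle” figures cannot be obtained by adding the individual obstacle shares, because the same child can face more than one obstacle (27%, 16%, and 14% sum to 57%, well above the overall 34%). The Python sketch below, using ten invented respondents, shows the difference between counting each obstacle separately and counting each respondent once.

```python
# Ten hypothetical respondents; each tuple flags three obstacles:
# (did schoolwork on a cellphone, no computer at home, used public Wi-Fi)
respondents = [
    (True, False, False),
    (True, True, False),
    (True, True, True),
    (False, False, True),
] + [(False, False, False)] * 6  # six respondents reporting no obstacle

n = len(respondents)

# Share reporting each obstacle separately (booleans sum as 0 or 1).
per_obstacle = [100 * sum(r[i] for r in respondents) / n for i in range(3)]

# Share reporting at least one obstacle: each respondent is counted once.
at_least_one = 100 * sum(any(r) for r in respondents) / n

print(per_obstacle, at_least_one)  # [30.0, 20.0, 20.0] 40.0
```

Here the individual shares sum to 70%, but only 40% of respondents faced any obstacle, because two respondents reported more than one.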

A Center survey conducted in April 2020 found that, at that time, 59% of parents with lower incomes who had children engaged in remote learning said their children would likely face at least one of the obstacles asked about in the 2021 survey.

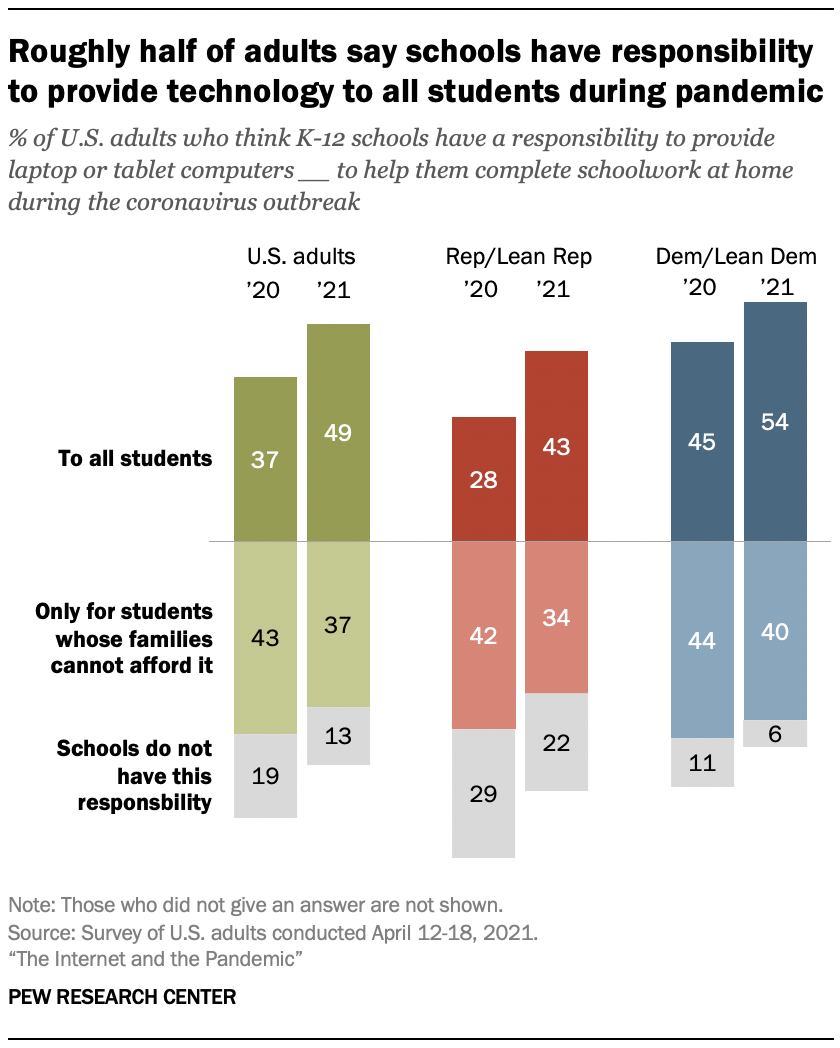

A year into the outbreak, an increasing share of U.S. adults said that K-12 schools have a responsibility to provide all students with laptop or tablet computers in order to help them complete their schoolwork at home during the pandemic. About half of all adults (49%) said this in the spring 2021 survey, up 12 percentage points from a year earlier. An additional 37% of adults said that schools should provide these resources only to students whose families cannot afford them, and just 13% said schools do not have this responsibility.

While larger shares of members of both parties in April 2021 said K-12 schools have a responsibility to provide computers to all students to help them complete schoolwork at home, Republicans showed a 15-point change: 43% of Republicans and those who lean to the Republican Party said K-12 schools have this responsibility, compared with 28% in April 2020. In the 2021 survey, 22% of Republicans also said schools do not have this responsibility at all, compared with 6% of Democrats and Democratic leaners.
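The changes above are reported in percentage points, the simple difference between two percentages. This Python sketch contrasts that with relative (percent) change, which the article does not report and which is included only for contrast; the helper names are hypothetical.

```python
def point_change(new_pct, old_pct):
    """Difference in percentage points between two survey percentages."""
    return new_pct - old_pct

def relative_change(new_pct, old_pct):
    """Percent change relative to the earlier value (not reported in the text)."""
    return 100 * (new_pct - old_pct) / old_pct

# Republicans and GOP leaners, April 2020 vs. April 2021 (figures from the text).
print(point_change(43, 28))               # 15
print(round(relative_change(43, 28), 1))  # 53.6

# All adults: 49% in 2021, "up 12 percentage points", implies 37% in 2020.
implied_2020 = 49 - 12
print(implied_2020)  # 37
```

A 15-point rise from 28% is a gain of roughly 54% relative to the starting value, so the two measures can read very differently; the article uses points throughout.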

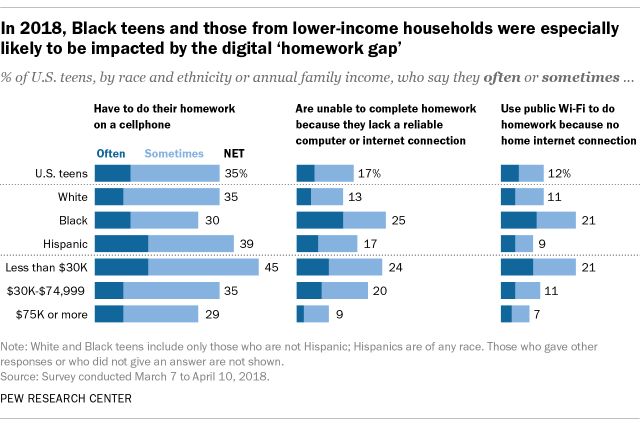

Even before the pandemic, Black teens and those living in lower-income households were more likely than other groups to report trouble completing homework assignments because they did not have reliable technology access. Nearly one-in-five teens ages 13 to 17 (17%) said they are often or sometimes unable to complete homework assignments because they do not have reliable access to a computer or internet connection, a 2018 Center survey of U.S. teens found.

One-quarter of Black teens said they were at least sometimes unable to complete their homework due to a lack of digital access, including 13% who said this happened to them often. Just 4% of White teens and 6% of Hispanic teens said this often happened to them. (There were not enough Asian respondents in the survey sample to be broken out into a separate analysis.)

A wide gap also existed by income level: 24% of teens whose annual family income was less than $30,000 said the lack of a dependable computer or internet connection often or sometimes prevented them from finishing their homework, but that share dropped to 9% among teens who lived in households earning $75,000 or more a year.


Katherine Schaeffer is a research analyst at Pew Research Center.


© 2024 Pew Research Center


- Elsevier - PMC COVID-19 Collection

A systematic review of research on online teaching and learning from 2009 to 2018


Systematic reviews of online learning research were conducted in the 1990s and early 2000s. However, no review has examined the broader landscape of research themes in online learning in the last decade. This systematic review addresses this gap by examining 619 research articles on online learning published in twelve journals in the last decade. These studies were examined for publication trends and patterns, research themes, research methods, and research settings, and were compared with the research themes from previous decades. Although the number of studies on online learning decreased slightly in 2015 and 2016, it increased again in 2017 and 2018. The majority of the studies were quantitative and were conducted in higher education. Online learning research was categorized into twelve themes, and a framework across learner, course and instructor, and organizational levels was developed. Online learner characteristics and online engagement were examined in a high number of studies, consistent with three of the prior systematic reviews. However, more research is still needed on organization-level topics, such as leadership, policy, and management; and access, culture, equity, inclusion, and ethics; as well as on online instructor characteristics.

- Twelve online learning research themes were identified in 2009–2018.

- A framework with learner, course and instructor, and organizational levels was used.

- Online learner characteristics and engagement were the most examined themes.

- The majority of the studies used quantitative research methods and were conducted in higher education.

- There is a need for more research on organization-level topics.

1. Introduction

Online learning has been on the increase in the last two decades. In the United States, though higher education enrollment has declined, online learning enrollment in public institutions has continued to increase (Allen & Seaman, 2017), and so has the research on online learning. Review studies have been conducted on specific areas of online learning, such as innovations in online learning strategies (Davis et al., 2018), empirical MOOC literature (Liyanagunawardena et al., 2013; Veletsianos & Shepherdson, 2016; Zhu et al., 2018), quality in online education (Esfijani, 2018), accessibility in online higher education (Lee, 2017), synchronous online learning (Martin et al., 2017), K-12 preparation for online teaching (Moore-Adams et al., 2016), polychronicity in online learning (Capdeferro et al., 2014), meaningful learning research in e-learning and online learning environments (Tsai, Shen, & Chiang, 2013), problem-based learning in e-learning and online learning environments (Tsai & Chiang, 2013), asynchronous online discussions (Thomas, 2013), self-regulated learning in online learning environments (Tsai, Shen, & Fan, 2013), game-based learning in online learning environments (Tsai & Fan, 2013), and online course dropout (Lee & Choi, 2011). While these reviews address specific online learning topics, very few studies have examined research themes across the broader field of online learning.

2. Systematic Reviews of Distance Education and Online Learning Research

With the spread of internet access, distance education has evolved from offline to online settings, and COVID-19 has made online learning a common delivery method across the world. Berge and Mrozowski (2001) reviewed distance education research from 1990 to 1999, Tallent-Runnels et al. (2006) reviewed online learning research from 1993 to 2004, and Zawacki-Richter et al. (2009) reviewed distance education research from 2000 to 2008. Table 1 shows the research themes from these previous systematic reviews. Some themes recur across the reviews, while others emerge as new. Although reviews were conducted in the 1990s and early 2000s, no review has examined the broader landscape of research themes in online learning in the last decade. Hence the need for this systematic review, which identifies the research themes in online learning from 2009 to 2018. In the following sections, we review these earlier systematic reviews in detail.

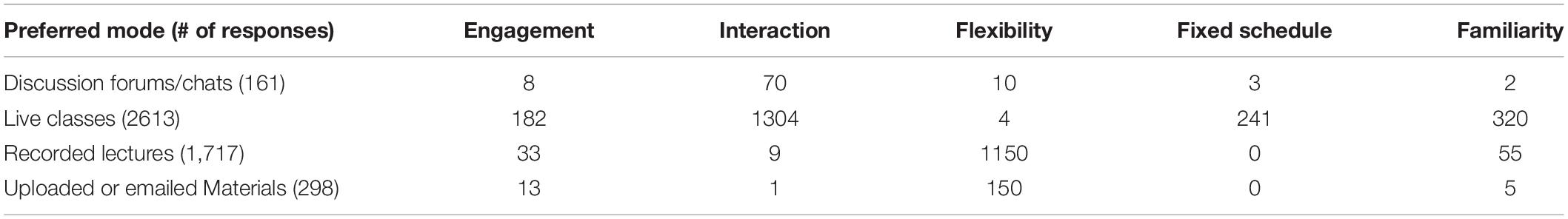

Comparison of online learning research themes from previous studies.

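| | 1990–1999 (Berge & Mrozowski, 2001) | 1993–2004 (Tallent-Runnels et al., 2006) | 2000–2008 (Zawacki-Richter et al., 2009) |
|---|---|---|---|
| Most studies | Design issues; learner characteristics; strategies to increase interactivity and active learning | Course environment; learners' outcomes | Interaction and communities of learning; instructional design; learner characteristics |
| Fewest studies | Cost-benefit tradeoffs; equity and accessibility; learner support | Institutional and administrative factors; learners' characteristics | Management and organization; research methods in DE and knowledge transfer; globalization of education and cross-cultural aspects; innovation and change; costs and benefits |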

2.1. Distance education research themes, 1990 to 1999 (Berge & Mrozowski, 2001)

Berge and Mrozowski (2001) reviewed 890 research articles and dissertation abstracts on distance education from 1990 to 1999. The four journals the authors chose to represent distance education were the American Journal of Distance Education, Distance Education, Open Learning, and the Journal of Distance Education. This review's date range overlapped with that of the Tallent-Runnels et al. (2006) study. Berge and Mrozowski (2001) categorized the articles according to Sherry's (1996) ten themes of research issues in distance education: redefining roles of instructor and students, technologies used, issues of design, strategies to stimulate learning, learner characteristics and support, issues related to operating and policies and administration, access and equity, and costs and benefits.

In the Berge and Mrozowski (2001) study, more than 100 studies focused on each of three themes: (1) design issues, (2) learner characteristics, and (3) strategies to increase interactivity and active learning. Design issues centered on instructional systems design and covered topics such as content requirements, technical constraints, interactivity, and feedback. Strategies to increase interactivity and active learning were closely related to design issues and focused on students’ modes of learning. Learner characteristics focused on accommodating various learning styles through customized instructional theory. Fewer than 50 studies focused on the three least examined themes: (1) cost-benefit tradeoffs, (2) equity and accessibility, and (3) learner support. Cost-benefit tradeoffs focused on the implementation costs of distance education based on school characteristics. Equity and accessibility focused on equity of access to distance education systems. Learner support included topics such as teacher-to-teacher support as well as teacher-to-student support.

2.2. Online learning research themes, 1993 to 2004 (Tallent-Runnels et al., 2006)

Tallent-Runnels et al. (2006) reviewed research on online instruction from 1993 to 2004. They reviewed 76 articles focused on online learning, found by searching five databases: ERIC, PsycINFO, ContentFirst, Education Abstracts, and WilsonSelect. Tallent-Runnels et al. (2006) categorized the research into four themes: (1) course environment, (2) learners' outcomes, (3) learners’ characteristics, and (4) institutional and administrative factors. The first theme, course environment (n = 41, 53.9%), is an overarching theme that includes classroom culture, structural assistance, success factors, online interaction, and evaluation.

For their second theme, learners' outcomes (n = 29, 38.2%), Tallent-Runnels et al. (2006) found that studies focused on questions involving the process of teaching and learning and on methods to explore cognitive and affective learner outcomes. The authors found the research designs flawed and lacking in rigor. However, the literature comparing traditional and online classrooms found both delivery systems to be adequate. Another research theme focused on learners’ characteristics (n = 12, 15.8%) and the synergy of learners, design of the online course, and system of delivery. These studies found that online learners were mainly non-traditional and Caucasian, had different learning styles, and were highly motivated to learn. The final theme was institutional and administrative factors in online learning (n = 13, 17.1%). The authors found a lack of scholarly research in this area, and most institutions did not have formal policies in place for course development or for faculty and student support in training and evaluation. Their research also confirmed that offering online courses improved universities' student enrollment numbers.
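The percentages reported for Tallent-Runnels et al. (2006) can be reproduced by dividing each theme count by the 76 reviewed articles. The four counts sum to 95, more than 76, which suggests that some articles were coded under more than one theme; the summary here does not say so explicitly, so this is an inference. A minimal Python check:

```python
TOTAL_ARTICLES = 76  # articles reviewed by Tallent-Runnels et al. (2006)

theme_counts = {
    "course environment": 41,
    "learners' outcomes": 29,
    "learners' characteristics": 12,
    "institutional and administrative factors": 13,
}

# Share of the 76 articles in each theme, rounded to one decimal as in the text.
shares = {theme: round(100 * n / TOTAL_ARTICLES, 1) for theme, n in theme_counts.items()}
print(shares)

# Total theme assignments compared with the number of articles.
total_assignments = sum(theme_counts.values())
print(total_assignments, total_assignments > TOTAL_ARTICLES)  # 95 True
```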

2.3. Distance education research themes, 2000 to 2008 (Zawacki-Richter et al., 2009)

Zawacki-Richter et al. (2009) reviewed 695 articles on distance education from 2000 to 2008, using the Delphi method to reach consensus in identifying research areas, and classified the literature from five prominent journals. The five journals, selected for their wide scope in distance education research, were Open Learning, Distance Education, the American Journal of Distance Education, the Journal of Distance Education, and the International Review of Research in Open and Distributed Learning. The reviewers examined the main focus of research and identified gaps in distance education research.

Zawacki-Richter et al. (2009) classified the studies into macro, meso, and micro levels covering 15 areas of research. The five macro-level areas addressed: (1) access, equity, and ethics in delivering distance education for developing nations and the role of various technologies in narrowing the digital divide, (2) teaching and learning drivers, markets, and professional development in the global context, (3) distance delivery systems, institutional partnerships and programs, and the impact of hybrid modes of delivery, (4) theoretical frameworks and models for instruction, knowledge building, and learner interactions in distance education practice, and (5) the types of preferred research methodologies. The meso level focused on seven areas: (1) management and organization for sustaining distance education programs, (2) the financial aspects of developing and implementing online programs, (3) the challenges and benefits of new technologies for teaching and learning, (4) incentives to innovate, (5) professional development and support for faculty, (6) learner support services, and (7) issues involving quality standards and their impact on student enrollment and retention. The micro level focused on three areas: (1) instructional design and pedagogical approaches, (2) culturally appropriate materials, interaction, communication, and collaboration among a community of learners, and (3) characteristics of adult learners, socio-economic backgrounds, learning preferences, and dispositions.

The top three research themes in this review by Zawacki-Richter et al. (2009) were interaction and communities of learning (n = 122, 17.6%), instructional design (n = 121, 17.4%), and learner characteristics (n = 113, 16.3%). The fewest studies (less than 3% each) examined management and organization (n = 18), research methods in DE and knowledge transfer (n = 13), globalization of education and cross-cultural aspects (n = 13), innovation and change (n = 13), and costs and benefits (n = 12).
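Similarly, the Zawacki-Richter et al. (2009) figures follow from dividing each count by the 695 reviewed articles. This Python sketch recomputes the top-three shares and checks that each of the five least-studied themes falls below the 3% threshold stated above; the `percent` helper is a hypothetical name.

```python
TOTAL_ARTICLES = 695  # articles reviewed by Zawacki-Richter et al. (2009)

# Theme counts as reported in the text.
counts = {
    "interaction and communities of learning": 122,
    "instructional design": 121,
    "learner characteristics": 113,
    "management and organization": 18,
    "research methods in DE and knowledge transfer": 13,
    "globalization of education and cross-cultural aspects": 13,
    "innovation and change": 13,
    "costs and benefits": 12,
}

def percent(n, total=TOTAL_ARTICLES):
    """Share of all reviewed articles, in percent."""
    return 100 * n / total

# Three themes with the highest counts, and their rounded shares.
top_three = sorted(counts, key=counts.get, reverse=True)[:3]
print([round(percent(counts[t]), 1) for t in top_three])  # [17.6, 17.4, 16.3]

# Themes below the 3% threshold mentioned in the text.
below_3 = [theme for theme, n in counts.items() if percent(n) < 3]
print(len(below_3))  # 5
```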

2.4. Online learning research themes

These three systematic reviews provide a broad understanding of distance education and online learning research themes from 1990 to 2008. However, the number of research studies on online learning has increased in the past decade, and there is a need to identify the research themes examined recently. Based on the previous systematic reviews (Berge & Mrozowski, 2001; Hung, 2012; Tallent-Runnels et al., 2006; Zawacki-Richter et al., 2009), online learning research in this study is grouped into twelve research themes: Learner Characteristics; Learner Outcomes; Engagement; Course or Program Design and Development; Course Facilitation; Course Assessment; Evaluation and Quality Assurance; Course Technologies; Instructor Characteristics; Institutional Support (learner and instructor support); Access, Culture, Equity, Inclusion, and Ethics; and Leadership, Policy and Management. Table 2 describes each research theme, and Fig. 1 presents the framework derived from these themes.

Research themes in online learning.

| # | Research Theme | Description |
|---|---|---|
| 1 | Learner Characteristics | Focuses on understanding the learner characteristics and how online learning can be designed and delivered to meet their needs. Online learner characteristics can be broadly categorized into demographic characteristics, academic characteristics, cognitive characteristics, affective, self-regulation, and motivational characteristics. |
| 2 | Learner Outcomes | Learner outcomes are statements that specify what the learner will achieve at the end of the course or program. Examining learner outcomes such as success, retention, and dropouts is critical in online courses. |
| 3 | Engagement | Engaging the learner in the online course is vitally important as they are separated from the instructor and peers in the online setting. Engagement is examined through the lens of interaction, participation, community, collaboration, communication, involvement and presence. |
| 4 | Course or Program Design and Development | Course design and development is critical in online learning as it engages and assists the students in achieving the learner outcomes. Several models and processes are used to develop the online course, employing different design elements to meet student needs. |
| 5 | Course Facilitation | The delivery or facilitation of the course is as important as course design. Facilitation strategies used in delivery of the course, such as communication and modeling practices, are examined in course facilitation. |
| 6 | Course Assessment | Course assessments are adapted and delivered in an online setting. Formative assessments, peer assessments, differentiated assessments, learner choice in assessments, feedback systems, online proctoring, plagiarism in online learning, and alternate assessments such as e-portfolios are examined. |
| 7 | Evaluation and Quality Assurance | Evaluation is making a judgment on the process, the product, or a program, either during or at the end. There is a need for research on evaluation and quality in online courses. This has been examined through course evaluations, surveys, analytics, social networks, and pedagogical assessments. Quality assessment rubrics such as Quality Matters have also been researched. |
| 8 | Course Technologies | A number of online course technologies, such as learning management systems, online textbooks, online audio and video tools, collaborative tools, and social networks to build online community, have been the focus of research. |
| 9 | Instructor Characteristics | With the increase in online courses, there has also been an increase in the number of instructors teaching online courses. Instructor characteristics can be examined through their experience, satisfaction, and roles in online teaching. |
| 10 | Institutional Support | The support for online learning is examined both as learner support and instructor support. Online students need support to be successful online learners, and this could include social, academic, and cognitive forms of support. Online instructors need support in terms of pedagogy and technology to be successful online instructors. |
| 11 | Access, Culture, Equity, Inclusion, and Ethics | Cross-cultural online learning is gaining importance along with access in global settings. In addition, providing inclusive opportunities for all learners and in ethical ways is being examined. |
| 12 | Leadership, Policy and Management | Leadership support is essential for the success of online learning. Leaders' perspectives, challenges, and strategies are examined. Policy and governance research is also being conducted. |

Online learning research themes framework.

The collection of research themes is presented as a framework in Fig. 1 . The themes are organized by domain or level to underscore the nested relationship that exists. As evidenced by the assortment of themes, research can focus on any domain of delivery or associated context. The “Learner” domain captures characteristics and outcomes related to learners and their interaction within the courses. The “Course and Instructor” domain captures elements about the broader design of the course and facilitation by the instructor, and the “Organizational” domain acknowledges the contextual influences on the course. It is important to note as well that due to the nesting, research themes can cross domains. For example, the broader cultural context may be studied as it pertains to course design and development, and institutional support can include both learner support and instructor support. Likewise, engagement research can involve instructors as well as learners.

In this introduction section, we have reviewed three systematic reviews on online learning research ( Berge & Mrozowski, 2001 ; Tallent-Runnels et al., 2006 ; Zawacki-Richter et al., 2009 ). Based on these reviews and other research, we have derived twelve themes to develop an online learning research framework which is nested in three levels: learner, course and instructor, and organization.

2.5. Purpose of this research

In two of the three previous reviews, design, learner characteristics, and interaction were examined in the most studies. By contrast, cost-benefit tradeoffs, equity and accessibility, institutional and administrative factors, and globalization and cross-cultural aspects were examined in the fewest studies. One explanation may be nesting: studies at the Organizational and Course levels may encompass several courses or many more participants within courses. However, while some research themes recur, others vary over time, suggesting that the prominence of research themes rises and falls. A critical examination of trends in themes is therefore helpful for understanding where research is needed most. Also, since no recent study has examined online learning research themes over the last decade, this study addresses that gap by focusing on recent research themes found in the literature. One goal is also to compare findings from this decade with those of the previous review studies. Overall, the purpose of this study is to examine publication trends in online learning research during the last ten years and to compare them with the themes identified in previous review studies. Because online learning research continues to grow into new contexts and among new researchers, we also examine the research methods and settings found in the studies of this review.

The following research questions are addressed in this study.

- 1. What percentage of the articles published in the reviewed journals from 2009 to 2018 were empirical and related to online learning?

- 2. What is the frequency of online learning research themes in the empirical online learning articles of journals reviewed from 2009 to 2018?

- 3. What is the frequency of research methods and settings that researchers employed in the empirical online learning articles of the journals reviewed from 2009 to 2018?

The five-step systematic review process described in the U.S. Department of Education, Institute of Education Sciences, What Works Clearinghouse Procedures and Standards Handbook, Version 4.0 ( 2017 ) was used in this systematic review: (a) developing the review protocol, (b) identifying relevant literature, (c) screening studies, (d) reviewing articles, and (e) reporting findings.

3.1. Data sources and search strategies

The Education Research Complete database was searched for articles published between 2009 and 2018, using both the Title and Keyword functions, with the following search terms:

"online learning" OR "online teaching" OR "online program" OR "online course" OR "online education"
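As an illustration only, the boolean OR query above can be approximated as a case-insensitive phrase match over an article's title and keywords. This Python sketch uses a hypothetical `matches_search` helper with plain substring matching. The Education Research Complete search engine may tokenize, stem, or match fields differently.

```python
# Search phrases from Section 3.1, combined with boolean OR.
SEARCH_TERMS = (
    "online learning",
    "online teaching",
    "online program",
    "online course",
    "online education",
)


def matches_search(title: str, keywords: list[str]) -> bool:
    """Return True if any search phrase occurs in the title or in any keyword.

    Hypothetical helper: a simplified, case-insensitive substring match that
    stands in for the database's own Title/Keyword search.
    """
    fields = [title.lower()] + [keyword.lower() for keyword in keywords]
    return any(term in field for field in fields for term in SEARCH_TERMS)


print(matches_search("Building community in online courses", []))  # True
print(matches_search("Blended classrooms", ["face-to-face instruction"]))  # False
```

Because the match is on substrings, plural forms such as "online courses" are also caught. A real database query may handle such variants through its own rules.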

3.2. Inclusion/exclusion criteria

The initial search of online learning research among journals in the database resulted in more than 3000 possible articles. Therefore, we limited our search to select journals that focus on publishing peer-reviewed online learning and educational research. Our aim was to capture the journals that published the most articles in online learning. However, we also wanted to incorporate the concept of rigor, so we used expert perception to identify 12 peer-reviewed journals that publish high-quality online learning research. Dissertations and conference proceedings were excluded. To be included in this systematic review, each study had to meet the screening criteria as described in Table 3 . A research study was excluded if it did not meet all of the criteria to be included.

Inclusion/Exclusion criteria.

| Criteria | Inclusion | Exclusion |
|---|---|---|
| Focus of the article | Online learning | Articles that did not focus on online learning |
| Journals Published | Twelve identified journals | Journals outside of the 12 journals |
| Publication date | 2009 to 2018 | Prior to 2009 and after 2018 |
| Publication type | Scholarly articles of original research from peer reviewed journals | Book chapters, technical reports, dissertations, or proceedings |
| Research Method and Results | There was an identifiable method and results section describing how the study was conducted and included the findings. Quantitative and qualitative methods were included. | Reviews of other articles, opinion, or discussion papers that do not include a discussion of the procedures of the study or analysis of data such as product reviews or conceptual articles. |
| Language | Journal article was written in English | Other languages were not included |
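An article had to satisfy every criterion in Table 3 to be included, so the screening rule is a logical AND over the criteria. The sketch below assumes a hypothetical `Article` record with illustrative field names and reduces each criterion to a single check. In the review itself, judgments such as whether an article has an identifiable method and results section were made by the researchers when reviewing abstracts.

```python
from dataclasses import dataclass


@dataclass
class Article:
    """Hypothetical screening record; field names are illustrative."""
    focuses_on_online_learning: bool
    journal: str
    year: int
    publication_type: str  # e.g. "journal article", "dissertation"
    has_method_and_results: bool
    language: str


def meets_all_criteria(article: Article, journals: set[str]) -> bool:
    """Include an article only if it passes every Table 3 criterion."""
    return (
        article.focuses_on_online_learning
        and article.journal in journals
        and 2009 <= article.year <= 2018
        and article.publication_type == "journal article"
        and article.has_method_and_results
        and article.language == "English"
    )


# Two of the twelve journals from Table 4, for illustration only.
JOURNALS = {"Distance Education", "Computers & Education"}
example = Article(True, "Distance Education", 2014, "journal article", True, "English")
print(meets_all_criteria(example, JOURNALS))  # True
```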

3.3. Process flow selection of articles

Fig. 2 shows the process flow involved in the selection of articles. The search in the database Education Research Complete yielded an initial sample of 3332 articles. Targeting the 12 journals removed 2579 articles. After reviewing the abstracts, we removed 134 articles based on the inclusion/exclusion criteria. The final sample, consisting of 619 articles, was entered into the computer software MAXQDA ( VERBI Software, 2019 ) for coding.
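The counts in the selection flow can be checked with simple subtraction. Restricting to the 12 journals leaves 753 articles, and abstract screening reduces the sample to 619. A minimal Python check using only the numbers reported above:

```python
# Counts reported in the selection flow (Fig. 2).
initial_records = 3332      # Education Research Complete search results
outside_12_journals = 2579  # removed by targeting the 12 journals
failed_screening = 134      # removed after abstract review (Table 3 criteria)

after_journal_filter = initial_records - outside_12_journals
final_sample = after_journal_filter - failed_screening

print(after_journal_filter)  # 753
print(final_sample)          # 619
```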

Flowchart of online learning research selection.

3.4. Developing review protocol

A review protocol was designed as a codebook in MAXQDA ( VERBI Software, 2019 ) by the three researchers. The codebook was developed based on findings from the previous review studies and from the initial screening of the articles in this review. The codebook included the 12 research themes listed earlier in Table 2 (Learner Characteristics; Instructor Characteristics; Course or Program Design and Development; Course Facilitation; Engagement; Course Assessment; Course Technologies; Evaluation and Quality Assurance; Access, Culture, Equity, Inclusion, and Ethics; Leadership, Policy and Management; Instructor and Learner Support; and Learner Outcomes). It also included four research settings (higher education, continuing education, K-12, and corporate/military) and three research designs (quantitative, qualitative, and mixed methods). Fig. 3 below is a screenshot of MAXQDA used for the coding process.

Codebook from MAXQDA.

3.5. Data coding

Research articles were coded by two researchers in MAXQDA. The two researchers independently coded 10% of the articles and then discussed and updated the coding framework. The second author, a doctoral student, coded the remaining studies. The researchers met bi-weekly to address coding questions as they emerged. After the first phase of coding, we found that more than 100 studies fell into each of the Learner Characteristics and Engagement categories, so we conducted a second phase of coding to reexamine these two themes. Learner Characteristics were classified into the subthemes of Academic, Affective, Motivational, Self-regulation, Cognitive, and Demographic Characteristics. Engagement was classified into the subthemes of Collaboration, Communication, Community, Involvement, Interaction, Participation, and Presence.

3.6. Data analysis

Frequency tables were generated for each variable so that outliers could be examined and narrative data could be collapsed into categories. Once the data were cleaned and collapsed into a reasonable number of categories, descriptive statistics were used to describe each coded element. We first present the frequencies of online learning publications in the 12 journals. The total number of articles in each journal (collectively, the population) was hand-counted from journal websites, excluding editorials and book reviews. We also depict the publication trend of online learning research from 2009 to 2018. We then provide descriptive information on the 12 themes, including the subthemes of Learner Characteristics and Engagement. Finally, we examine research themes by research setting and methodology.

4.1. Publication trends on online learning

Publication patterns of the 619 articles reviewed from the 12 journals are presented in Table 4 . International Review of Research in Open and Distributed Learning had the highest number of publications in this review. Overall, about 8% of the articles appearing in these twelve journals consisted of online learning publications; however, several journals had concentrations of online learning articles totaling more than 20%.

Empirical online learning research articles by journal, 2009–2018.

| Journal Name | Frequency of Empirical Online Learning Research | Percent of Sample | Percent of Journal's Total Articles |
|---|---|---|---|
| International Review of Research in Open and Distributed Learning | 152 | 24.40 | 22.55 |
| Internet & Higher Education | 84 | 13.48 | 26.58 |
| Computers & Education | 75 | 12.04 | 18.84 |
| Online Learning | 72 | 11.56 | 3.25 |
| Distance Education | 64 | 10.27 | 25.10 |
| Journal of Online Learning & Teaching | 39 | 6.26 | 11.71 |
| Journal of Educational Technology & Society | 36 | 5.78 | 3.63 |
| Quarterly Review of Distance Education | 24 | 3.85 | 4.71 |
| American Journal of Distance Education | 21 | 3.37 | 9.17 |
| British Journal of Educational Technology | 19 | 3.05 | 1.93 |
| Educational Technology Research & Development | 19 | 3.05 | 10.80 |
| Australasian Journal of Educational Technology | 14 | 2.25 | 2.31 |
| Total | 619 | 100.0 | 8.06 |

Note . Journal's Total Article count excludes reviews and editorials.

The publication trend of online learning research is depicted in Fig. 4 . When disaggregated by year, the total frequency of publications shows an increasing trend. Online learning articles increased throughout the decade and hit a relative maximum in 2014. The greatest number of online learning articles ( n = 86) occurred most recently, in 2018.

Online learning publication trends by year.

4.2. Online learning research themes that appeared in the selected articles

The publications were categorized into the twelve research themes identified in Fig. 1 . The frequency counts and percentages of the research themes are provided in Table 5 below. A majority of the research falls in the Learner domain, and the fewest articles fall in the Organization domain.

Research themes in the online learning publications from 2009 to 2018.

| Research Themes | Frequency | Percentage |
|---|---|---|
| Engagement | 179 | 28.92 |
| Learner Characteristics | 134 | 21.65 |
| Learner Outcome | 32 | 5.17 |
| Evaluation and Quality Assurance | 38 | 6.14 |
| Course Technologies | 35 | 5.65 |
| Course Facilitation | 34 | 5.49 |
| Course Assessment | 30 | 4.85 |
| Course Design and Development | 27 | 4.36 |
| Instructor Characteristics | 21 | 3.39 |
| Institutional Support | 33 | 5.33 |
| Access, Culture, Equity, Inclusion, and Ethics | 29 | 4.68 |
| Leadership, Policy, and Management | 27 | 4.36 |

The specific themes of Engagement ( n = 179, 28.92%) and Learner Characteristics ( n = 134, 21.65%) were most often examined in publications. These two themes were further coded to identify sub-themes, which are described in the next two sections. Publications focusing on Instructor Characteristics ( n = 21, 3.39%) were least common in the dataset.
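The percentages in Table 5 are each theme's frequency divided by the 619 coded articles. Because the frequencies sum to 619, each article is counted under exactly one theme. The sketch below reproduces several Table 5 values and the combined share of Engagement and Learner Characteristics (50.57%) discussed later. The helper name `percent` is illustrative.

```python
# Theme frequencies from Table 5.
THEME_COUNTS = {
    "Engagement": 179,
    "Learner Characteristics": 134,
    "Learner Outcome": 32,
    "Evaluation and Quality Assurance": 38,
    "Course Technologies": 35,
    "Course Facilitation": 34,
    "Course Assessment": 30,
    "Course Design and Development": 27,
    "Instructor Characteristics": 21,
    "Institutional Support": 33,
    "Access, Culture, Equity, Inclusion, and Ethics": 29,
    "Leadership, Policy, and Management": 27,
}
TOTAL = sum(THEME_COUNTS.values())  # 619 coded articles


def percent(count: int, total: int = TOTAL) -> float:
    """Share of all coded articles, rounded to two decimals as in Table 5."""
    return round(100 * count / total, 2)


combined = percent(THEME_COUNTS["Engagement"] + THEME_COUNTS["Learner Characteristics"])
least_studied = min(THEME_COUNTS, key=THEME_COUNTS.get)

print(TOTAL, percent(THEME_COUNTS["Engagement"]), combined, least_studied)
# 619 28.92 50.57 Instructor Characteristics
```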

4.2.1. Research on engagement

The largest number of studies focused on engagement, which the online learning literature refers to and examines through a variety of terms. We therefore explore this category in more detail. In this review, we categorized the articles into seven sub-themes that reflect these different lenses: presence, interaction, community, participation, collaboration, involvement, and communication. We use "involvement" as one of the sub-themes because researchers sometimes used the term engagement broadly to describe their work without further specification. Table 6 below provides the description, frequency, and percentage of the studies related to each engagement sub-theme.

Research sub-themes on engagement.

| Sub-theme | Description | Frequency | Percentage (of 619 articles) |
|---|---|---|---|
| Presence | Learning experience through social, cognitive, and teaching presence | 50 | 8.08 |
| Interaction | Process of interacting with peers, the instructor, or content that results in learners' understanding or behavior | 43 | 6.95 |
| Community | Sense of belonging within a group | 25 | 4.04 |
| Participation | Process of being actively involved | 21 | 3.39 |
| Collaboration | Working with someone to create something | 17 | 2.75 |
| Involvement | Involvement in learning. This includes articles that focused broadly on engagement of learners. | 14 | 2.26 |
| Communication | Process of exchanging information with the intent to share information | 9 | 1.45 |
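The Table 6 percentages use all 619 articles as the denominator rather than the 179 Engagement articles, so they do not sum to 100. The sketch below checks that the sub-theme counts add up to the Engagement total. It then computes both the table's shares and the shares within Engagement. The within-Engagement figures are derived here for illustration and are not reported in the study.

```python
# Engagement sub-theme frequencies from Table 6.
ENGAGEMENT_SUBTHEMES = {
    "Presence": 50,
    "Interaction": 43,
    "Community": 25,
    "Participation": 21,
    "Collaboration": 17,
    "Involvement": 14,
    "Communication": 9,
}
ALL_ARTICLES = 619      # denominator used in Table 6
ENGAGEMENT_TOTAL = 179  # Engagement row of Table 5


def shares(counts: dict[str, int], denominator: int) -> dict[str, float]:
    """Percentage of each sub-theme relative to the given denominator."""
    return {name: round(100 * n / denominator, 2) for name, n in counts.items()}


of_all_articles = shares(ENGAGEMENT_SUBTHEMES, ALL_ARTICLES)        # as reported in Table 6
within_engagement = shares(ENGAGEMENT_SUBTHEMES, ENGAGEMENT_TOTAL)  # derived, not reported

print(sum(ENGAGEMENT_SUBTHEMES.values()))  # 179
print(of_all_articles["Presence"], within_engagement["Presence"])  # 8.08 27.93
```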

In the sections below, we provide several examples of the different engagement sub-themes that were studied within the larger engagement theme.

Presence. This was the most researched sub-theme in engagement. Following the development of the community of inquiry framework, most of the studies in this sub-theme examined social presence ( Akcaoglu & Lee, 2016 ; Phirangee & Malec, 2017 ; Wei et al., 2012 ), teaching presence ( Orcutt & Dringus, 2017 ; Preisman, 2014 ; Wisneski et al., 2015 ), and cognitive presence ( Archibald, 2010 ; Olesova et al., 2016 ).

Interaction. This was the second most studied sub-theme under engagement. Researchers examined increasing interpersonal interactions ( Cung et al., 2018 ), learner-learner interactions ( Phirangee, 2016 ; Shackelford & Maxwell, 2012 ; Tawfik et al., 2018 ), peer-peer interaction ( Comer et al., 2014 ), learner-instructor interaction ( Kuo et al., 2014 ), learner-content interaction ( Zimmerman, 2012 ), interaction through peer mentoring ( Ruane & Koku, 2014 ), interaction and community building ( Thormann & Fidalgo, 2014 ), and interaction in discussions ( Ruane & Lee, 2016 ; Tibi, 2018 ).

Community. Researchers examined building community in online courses ( Berry, 2017 ), supporting a sense of community ( Jiang, 2017 ), building an online learning community of practice ( Cho, 2016 ), building an academic community ( Glazer & Wanstreet, 2011 ; Nye, 2015 ; Overbaugh & Nickel, 2011 ), and examining connectedness and rapport in an online community ( Bolliger & Inan, 2012 ; Murphy & Rodríguez-Manzanares, 2012 ; Slagter van Tryon & Bishop, 2012 ).

Participation. Researchers examined engagement through participation in a number of studies. Topics include participation patterns in online discussion ( Marbouti & Wise, 2016 ; Wise et al., 2012 ), participation in MOOCs ( Ahn et al., 2013 ; Saadatmand & Kumpulainen, 2014 ), features that influence students’ online participation ( Rye & Støkken, 2012 ), and active participation.

Collaboration. Researchers examined engagement through collaborative learning. Specific studies focused on cross-cultural collaboration ( Kumi-Yeboah, 2018 ; Yang et al., 2014 ), how virtual teams collaborate ( Verstegen et al., 2018 ), types of collaboration teams ( Wicks et al., 2015 ), tools for collaboration ( Boling et al., 2014 ), and support for collaboration ( Kopp et al., 2012 ).

Involvement. Researchers examined engaging learners through involvement in various learning activities ( Cundell & Sheepy, 2018 ), student engagement through various measures ( Dixson, 2015 ), how instructors included engagement to involve students in learning ( O'Shea et al., 2015 ), different strategies to engage the learner ( Amador & Mederer, 2013 ), and the design of emotionally engaging online environments ( Koseoglu & Doering, 2011 ).

Communication. Researchers examined communication in online learning in studies using social network analysis ( Ergün & Usluel, 2016 ), using informal communication tools such as Facebook for class discussion ( Kent, 2013 ), and using various modes of communication ( Cunningham et al., 2010 ; Rowe, 2016 ). Studies have also focused on both asynchronous and synchronous aspects of communication ( Swaggerty & Broemmel, 2017 ; Yamagata-Lynch, 2014 ).

4.2.2. Research on learner characteristics

The second largest theme was learner characteristics, which we explore further here to identify several of its aspects. We categorized learner characteristics into self-regulation, motivational, academic, affective, cognitive, and demographic characteristics. Table 7 provides the number and percentage of studies examining each of these learner characteristics.

Research sub-themes on learner characteristics.

| Learner Characteristics | Description | Frequency | Percentage (of 619 articles) |
|---|---|---|---|
| Self-regulation Characteristics | Involves controlling learners' behavior, emotions, and thoughts to achieve specific learning and performance goals | 54 | 8.72 |
| Motivational Characteristics | Learners' goal-directed activity that is instigated and sustained, such as beliefs and behavioral change | 23 | 3.72 |
| Academic Characteristics | Educational characteristics such as educational type and educational level | 19 | 3.07 |
| Affective Characteristics | Learner characteristics that describe learners' feelings or emotions, such as satisfaction | 17 | 2.75 |
| Cognitive Characteristics | Learner characteristics related to cognitive elements such as attention, memory, and intellect (e.g., learning strategies, learning skills) | 14 | 2.26 |
| Demographic Characteristics | Learner characteristics that relate to information such as age, gender, language, socioeconomic status, and cultural background | 7 | 1.13 |

Online learning has elements that differ from the traditional face-to-face classroom, so the characteristics of online learners also differ. Yukselturk and Top (2013) categorized the online learner profile into ten aspects: gender, age, work status, self-efficacy, online readiness, self-regulation, participation in discussion list, participation in chat sessions, satisfaction, and achievement. Their categorization shows that online learners differ in these aspects from learners in other settings. Some of these aspects, such as participation and achievement, are addressed under other research themes in this study. The sections below provide examples of the learner characteristics sub-themes that were studied.

Self-regulation. Several researchers have examined self-regulation in online learning. They found that successful online learners are academically motivated ( Artino & Stephens, 2009 ), have academic self-efficacy ( Cho & Shen, 2013 ), have grit and intention to succeed ( Wang & Baker, 2018 ), have time management and elaboration strategies ( Broadbent, 2017 ), set goals and revisit course content ( Kizilcec et al., 2017 ), and persist ( Glazer & Murphy, 2015 ). Researchers found a positive relationship between learners' self-regulation and interaction ( Delen et al., 2014 ) and between self-regulation and communication and collaboration ( Barnard et al., 2009 ).

Motivation. Researchers focused on the motivation of online learners, including different motivation levels of online learners ( Li & Tsai, 2017 ), what motivated online learners ( Chaiprasurt & Esichaikul, 2013 ), differences in motivation among online learners ( Hartnett et al., 2011 ), and motivation compared with face-to-face learners ( Paechter & Maier, 2010 ). Hartnett et al. (2011) found that online learner motivation was complex, multifaceted, and sensitive to situational conditions.

Academic. Several researchers have focused on academic aspects of online learner characteristics. Readiness for online learning has been examined as an academic factor by several researchers ( Buzdar et al., 2016 ; Dray et al., 2011 ; Wladis & Samuels, 2016 ; Yu, 2018 ). These studies specifically focused on creating and validating measures of online learner readiness, including examining students' emotional intelligence as a measure of readiness for online learning. Researchers have also examined other academic factors such as academic standing ( Bradford & Wyatt, 2010 ), course-level factors ( Wladis et al., 2014 ), and academic skills in online courses ( Shea & Bidjerano, 2014 ).

Affective. Anderson and Bourke (2013) describe affective characteristics through which learners express feelings or emotions. Several research studies focused on the affective characteristics of online learners. Learner satisfaction for online learning has been examined by several researchers ( Cole et al., 2014 ; Dziuban et al., 2015 ; Kuo et al., 2013 ; Lee, 2014a ) along with examining student emotions towards online assessment ( Kim et al., 2014 ).

Cognitive. Researchers have also examined cognitive aspects of learner characteristics including meta-cognitive skills, cognitive variables, higher-order thinking, cognitive density, and critical thinking ( Chen & Wu, 2012 ; Lee, 2014b ). Lee (2014b) examined the relationship between cognitive presence density and higher-order thinking skills. Chen and Wu (2012) examined the relationship between cognitive and motivational variables in an online system for secondary physical education.

Demographic. Researchers have examined various demographic factors in online learning. Several researchers have examined gender differences in online learning ( Bayeck et al., 2018 ; Lowes et al., 2016 ; Yukselturk & Bulut, 2009 ), ethnicity, age ( Ke & Kwak, 2013 ), and minority status ( Yeboah & Smith, 2016 ) of online learners.

4.2.3. Less frequently studied research themes

While engagement and learner characteristics were studied the most, the remaining themes were studied less often. They are presented here in descending order of frequency, with general descriptions of the types of research examined for each.

Evaluation and Quality Assurance. There were 38 studies (6.14%) published in the theme of evaluation and quality assurance. Some of the studies in this theme focused on course quality standards, using Quality Matters to evaluate quality, using the CIPP model for evaluation, online learning system evaluation, and course and program evaluations.

Course Technologies. There were 35 studies (5.65%) published in the course technologies theme. Some of the studies examined specific technologies such as Edmodo, YouTube, Web 2.0 tools, wikis, Twitter, WebCT, screencasts, and web conferencing systems in the online learning context.

Course Facilitation. There were 34 studies (5.49%) published in the course facilitation theme. Some of the studies in this theme examined facilitation strategies and methods, experiences of online facilitators, and online teaching methods.

Institutional Support. There were 33 studies (5.33%) published in the institutional support theme which included support for both the instructor and learner. Some of the studies on instructor support focused on training new online instructors, mentoring programs for faculty, professional development resources for faculty, online adjunct faculty training, and institutional support for online instructors. Studies on learner support focused on learning resources for online students, cognitive and social support for online learners, and help systems for online learner support.

Learner Outcome. There were 32 studies (5.17%) published in the learner outcome theme. Some of the studies that were examined in this theme focused on online learner enrollment, completion, learner dropout, retention, and learner success.

Course Assessment. There were 30 studies (4.85%) published in the course assessment theme. Some of the studies in the course assessment theme examined online exams, peer assessment and peer feedback, proctoring in online exams, and alternative assessments such as eportfolio.

Access, Culture, Equity, Inclusion, and Ethics. There were 29 studies (4.68%) published in the access, culture, equity, inclusion, and ethics theme. Some of the studies in this theme examined online learning across cultures, multi-cultural effectiveness, multi-access, and cultural diversity in online learning.

Leadership, Policy, and Management. There were 27 studies (4.36%) published in the leadership, policy, and management theme. Some of the studies in this theme focused on online learning leaders, stakeholders, strategies for online learning leadership, resource requirements, university policies for online courses, governance, course ownership, and faculty incentives for online teaching.

Course Design and Development. There were 27 studies (4.36%) published in the course design and development theme. Some of the studies examined in this theme focused on design elements, design issues, design process, design competencies, design considerations, and instructional design in online courses.

Instructor Characteristics. There were 21 studies (3.39%) published in the instructor characteristics theme. Some of the studies in this theme were on motivation and experiences of online instructors, ability to perform online teaching duties, roles of online instructors, and adjunct versus full-time online instructors.

4.3. Research settings and methodology used in the studies

The research methods used in the studies were classified into quantitative, qualitative, and mixed methods ( Harwell, 2012 , pp. 147–163). The research setting was categorized into higher education, continuing education, K-12, and corporate/military. As shown in Table A in the appendix, the vast majority of the publications used higher education as the research setting ( n = 509, 67.6%). Table B in the appendix shows that approximately half of the studies adopted the quantitative method ( n = 324, 43.03%), followed by the qualitative method ( n = 200, 26.56%). Mixed methods account for the smallest portion ( n = 95, 12.62%).

Table A shows that the distribution across the four research settings was approximately consistent across the 12 themes, except for Learner Outcome and Institutional Support. Continuing education had a higher relative frequency in Learner Outcome (0.28), and K-12 had a higher relative frequency in Institutional Support (0.33), compared with their relative frequencies across all themes (0.09 and 0.08, respectively). Table B in the appendix shows that the distribution of the three methods was not consistent across the 12 themes. While quantitative and qualitative studies were roughly evenly distributed in Engagement, there was a large discrepancy in Learner Characteristics: 100 quantitative studies but only 18 qualitative studies were published in that theme.
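The relative frequencies cited above are proportions within a theme. Each one is the count for one setting divided by that theme's total across all settings. The sketch below shows that calculation for a single row of a theme-by-setting table. The counts are invented for illustration and are not the values from Table A. The function name is likewise hypothetical.

```python
def row_relative_frequencies(row: dict[str, int]) -> dict[str, float]:
    """Proportion of one theme's studies in each setting (one row of a crosstab)."""
    total = sum(row.values())
    return {setting: round(count / total, 2) for setting, count in row.items()}


# Hypothetical counts for a single theme, for illustration only (not Table A data).
toy_row = {
    "Higher education": 14,
    "Continuing education": 6,
    "K-12": 3,
    "Corporate/military": 2,
}
print(row_relative_frequencies(toy_row))
# {'Higher education': 0.56, 'Continuing education': 0.24, 'K-12': 0.12, 'Corporate/military': 0.08}
```

A setting is over-represented in a theme when its within-theme proportion clearly exceeds that setting's proportion across all themes. The text's example is 0.28 versus 0.09 for continuing education in Learner Outcome.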

In summary, around 8% of the articles published in the 12 journals focus on online learning. Overall, online learning publications increased over the past decade, albeit with fluctuations, and the greatest number occurred in 2018. Among the 12 research themes, Engagement and Learner Characteristics were studied the most and Instructor Characteristics was studied the least. Most studies were conducted in higher education settings, and approximately half used quantitative methods. When we examined the 12 themes by setting and by method, these patterns were not consistent across themes.

We sought to ensure the quality of our findings through systematic, thorough searches and consistent coding. The selection of the 12 journals supports the representativeness and quality of the primary studies. During coding, difficulties and questions were resolved through consultation with the research team at bi-weekly meetings, which supported intra-rater and inter-rater reliability. Together, these approaches support the transparency and replicability of the process and the quality of our results.

5. Discussion

This review enabled us to identify the online learning research themes examined from 2009 to 2018. In the sections below, we review the most studied research themes, engagement and learner characteristics, along with implications, limitations, and directions for future research.

5.1. Most studied research themes

Three of the four systematic reviews informing the design of the present study found that online learner characteristics and online engagement were examined in a high number of studies. In this review, about half of the studies reviewed (50.57%) focused on online learner characteristics or online engagement, which shows the continued importance of these two themes. In Tallent-Runnels et al.'s (2006) study, however, learner characteristics was identified as the least studied theme. They stated that researchers were only beginning to investigate learner characteristics in those early days of online learning.

One difference found in this review is that course design and development was examined in relatively few studies, whereas two prior systematic reviews found it among the most studied themes ( Berge & Mrozowski, 2001 ; Zawacki-Richter et al., 2009 ). Zawacki-Richter et al. did not use a keyword search but reviewed all the articles in five distance education journals. Berge and Mrozowski (2001) included a research theme called design issues that covered all aspects of instructional systems design in distance education journals. In our study, in addition to course design and development, we had separate themes for learner outcomes, course facilitation, course assessment, and course evaluation. These are all instructional design topics, and because they were spread across multiple themes, the course design and development category may have captured fewer studies. Even so, more studies are needed on online course design and development.

5.2. Least frequently studied research themes

Three of the four systematic reviews discussed at the opening of this study found management and organization factors to be the least studied. At the organizational level in this review, Leadership, Policy, and Management was the focus of 4.36% of the studies, and Access, Culture, Equity, Inclusion, and Ethics was the focus of 4.68%. Equity and accessibility was also the least studied theme in the Berge and Mrozowski (2001) study. In addition, instructor characteristics was the least examined of the twelve research themes in this review: only 3.39% of the studies addressed it. Although some studies examined instructor motivation and experiences, instructor ability to teach online, online instructor roles, and adjunct versus full-time online instructors, topics focused on instructors and online teaching still need examination. This theme was not included in the prior reviews, which focused on the learner and the course rather than on the instructor. While it is helpful to see research evolving on instructor-focused topics, more research on the online instructor is still needed.
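The three percentages above can be reproduced from the settings breakdown in Appendix A. The sketch assumes that summing a theme's row across the four settings gives the number of articles coded to that theme, and divides by the 619 reviewed articles. This is an approximation: the setting columns sum to 620 rather than 619, so at least one article may be counted in two settings. For these three themes, however, the calculation matches the reported figures. The names used are hypothetical.

```python
# Hypothetical names; per-setting counts (Higher Ed, Continuing Education,
# K-12, Corporate/Military) copied from the Appendix A table of research
# themes by setting.
SETTING_COUNTS = {
    "Leadership, Policy and Management": (17, 5, 5, 0),
    "Access, Culture, Equity, Inclusion and Ethics": (26, 1, 2, 0),
    "Instructor Characteristics": (16, 1, 4, 0),
}
N_ARTICLES = 619  # number of articles in the reviewed sample

# Assumption: a row sum across settings approximates the theme's article count.
shares = {
    theme: round(100 * sum(row) / N_ARTICLES, 2)
    for theme, row in SETTING_COUNTS.items()
}

for theme, pct in shares.items():
    print(f"{theme}: {pct}%")
```

The printed shares are 4.36%, 4.68%, and 3.39%, in the order listed.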

5.3. Comparing research themes from current study to previous studies

The research themes from this review were compared with research themes from previous systematic reviews, which targeted prior decades. Table 8 shows the comparison.

Comparison of most and least studied online learning research themes from current to previous reviews.

| Research Theme | Level | 1990–1999 | 1993–2004 | 2000–2008 | 2009–2018 (Current Study) |
|---|---|---|---|---|---|
| Learner Characteristics | L | X | X | X | |
| Engagement and Interaction | L | X | X | X | |
| Design Issues/Instructional Design | C | X | X | | |
| Course Environment Learner Outcomes | C L | X X | | | |
| Learner Support | L | X | | | |
| Equity and Accessibility | O | X | X | | |
| Institutional & Administrative Factors | O | X | X | | |
| Management and Organization | O | X | X | | |
| Cost-Benefit | O | X | | | |

L = Learner; C = Course; O = Organization.

5.4. Need for more studies on organizational level themes of online learning

In this review, studies concentrated on Learner domain topics, with less attention to the broader, more encompassing research themes that fall into the Course and Organization domains. Organizational-level topics such as Access, Culture, Equity, Inclusion, and Ethics, and Leadership, Policy, and Management, need to be researched within the context of online learning. Examining access, culture, equity, inclusion, and ethics is very important for supporting diverse online learners, particularly given the rapid expansion of online learning across all educational levels. This theme was also among the least studied in Berge and Mrozowski's (2001) systematic review.

Leadership, policy, and management topics were among the least studied both in this review and in the Tallent-Runnels et al. (2006) and Zawacki-Richter et al. (2009) studies. Tallent-Runnels et al. divided institutional and administrative aspects into institutional policies, institutional support, and enrollment effects. While we treated support as a separate category, in this study we combined leadership, policy, and management into one theme. Research is still needed on the leadership of those who manage online learning, on policies for online education, and on managing online programs. In the Zawacki-Richter et al. (2009) study, only a few studies examined management and organization topics; they also found management and organization to be strongly correlated with costs and benefits. In our study, costs and benefits were included as an aspect of management and organization rather than as a separate theme. Such studies would provide research-based evidence for online education administrators.

6. Limitations

As with any systematic review, there are limitations to the scope of this review. The search was limited to twelve journals in the field that typically publish research on online learning. These manuscripts were identified by searching the Education Research Complete database, which focuses on education students, professionals, and policymakers. Other discipline-specific journals, as well as dissertations and proceedings, were not included due to the volume of articles. Also, the search used five search terms, “online learning” OR “online teaching” OR “online program” OR “online course” OR “online education”, in the title and keywords. If authors did not include these terms, their work may have been excluded from this review even if it focused on online learning. While these terms are commonly used in North America, they may be less common in other parts of the world, so additional studies may exist outside this scope.

The search strategy also affected how we presented results and introduced limitations regarding generalization. We found that only 8% of the articles published in these journals were related to online learning; however, because search terms were used to identify articles within select journals, it was not feasible to identify the total number of research-based articles in the population. Furthermore, our review focused on the topics and general methods of research and did not systematically assess the quality of the published research. Lastly, some journals may prefer to publish studies on particular topics or studies that use particular methods (e.g., quantitative methods), introducing possible selection and publication biases that may skew the interpretation of results through over- or under-representation. Future studies should include more journals to minimize selection bias and obtain a more representative sample.

Certain limitations can be attributed to the coding process. Overall, the coding process worked well for most articles, as each tended to have an individual or dominant focus described in the abstract, though several also mentioned other categories that were likely considered to a lesser degree. In some cases, however, a dominant theme was less apparent, and in an effort to create mutually exclusive groups for clearer interpretation, the coders were occasionally forced to choose between two categories. In these cases, the full texts were used to identify the study focus through consensus-seeking discussion among all authors. Likewise, some studies focused on topics that we associated with a particular domain, but the study design may have promoted an aggregated examination or integrated factors from multiple domains (e.g., engagement). Because we relied on author descriptions, construct validity is likely a concern that requires further exploration: our final grouping of codes may not align with the original authors' descriptions in the abstracts. Additionally, coding broader constructs that disproportionately occur in the Learner domain, such as learner outcomes, learner characteristics, and engagement, likely biased coding toward these codes for studies that involved multiple domains. Further refinement is needed to explore the intersection of domains within studies.

7. Implications and future research

One strength of this review is the set of research categories we identified. We hope these categories will support future researchers and help identify areas and levels of need for future research. Overall, this review and previous reviews agree on some online learning research themes, but there are also some contradictory findings. We hope the most-researched and least-researched themes give authors direction on which areas of need to focus on.

The leading theme found in this review is online engagement. However, this research was presented inconsistently and often lacked specificity. This is not unique to online environments, but the nuances of defining engagement in an online environment are unique and therefore need further investigation and clarification. This review points to seven distinct classifications of online engagement, and further research on engagement should indicate which type of engagement is sought. This level of specificity is necessary to establish instruments for measuring engagement and, ultimately, to test frameworks for classifying engagement and promoting it in online environments. It may also be important to examine the relationships among these seven sub-themes of engagement.

This review also highlights growing attention to learner characteristics, which constitutes a shift in focus away from instructional characteristics and course design. Although this is consistent with the focus on engagement, the role of the instructor and of course design with respect to these outcomes remains important. Results of learner characteristics and engagement research, paired with course design, will have important ramifications for teaching and learning professionals who support instruction. The review also points to a concentration of research in higher education. With an immediate and growing emphasis on online learning in K-12 and corporate settings, further investigation in these settings is critically needed.

Lastly, because the present review did not focus on the overall effect of interventions, opportunities exist for dedicated meta-analyses. Particular attention to research on engagement and learner characteristics as well as how these vary by study design and outcomes would be logical additions to the research literature.

8. Conclusion

This systematic review builds upon three previous reviews that addressed online learning between 1990 and 2010 by extending the timeframe to the most recent set of published research. Covering the most recent decade, our review of 619 articles from 12 leading online learning journals points to a more concentrated focus on the learner domain, including engagement and learner characteristics, with more limited attention to topics at the course or organizational level. The review highlights an opportunity for the field to clarify terminology in online learning research, particularly for learner outcomes, where research tends to be classified more generally (e.g., engagement). Using this sample of published literature, we propose a possible taxonomy, with subcategories, for categorizing this research. The field could benefit from a broader conversation about how these categories can shape a comprehensive framework for online learning research. Such efforts will enable the field to prioritize research aims over time and to synthesize effects.

Credit author statement

Florence Martin: Conceptualization; Writing - original draft, Writing - review & editing Preparation, Supervision, Project administration. Ting Sun: Methodology, Formal analysis, Writing - original draft, Writing - review & editing. Carl Westine: Methodology, Formal analysis, Writing - original draft, Writing - review & editing, Supervision

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

1 Includes articles that are cited in this manuscript and also included in the systematic review. The entire list of 619 articles used in the systematic review can be obtained by emailing the authors.*

Appendix B Supplementary data to this article can be found online at https://doi.org/10.1016/j.compedu.2020.104009 .

Appendix A.

Research Themes by the Settings in the Online Learning Publications

| Research Theme | Higher Ed (n = 506) | Continuing Education (n = 58) | K-12 (n = 53) | Corporate/Military (n = 3) |
|---|---|---|---|---|
| Engagement | 153 | 15 | 12 | 0 |
| Presence | 46 | 2 | 3 | 0 |
| Interaction | 35 | 4 | 4 | 0 |
| Community | 19 | 2 | 4 | 0 |
| Participation | 16 | 5 | 0 | 0 |
| Collaboration | 16 | 1 | 0 | 0 |
| Involvement | 13 | 0 | 1 | 0 |
| Communication | 8 | 1 | 0 | 0 |
| Learner Characteristics | 106 | 18 | 9 | 1 |
| Self-regulation Characteristics | 43 | 9 | 2 | 0 |
| Motivation Characteristics | 18 | 3 | 2 | 0 |
| Academic Characteristics | 17 | 0 | 2 | 0 |
| Affective Characteristics | 12 | 3 | 1 | 1 |
| Cognitive Characteristics | 11 | 1 | 2 | 0 |
| Demographic Characteristics | 5 | 2 | 0 | 0 |
| Evaluation and Quality Assurance | 33 | 3 | 2 | 0 |
| Course Technologies | 33 | 2 | 0 | 0 |
| Course Facilitation | 30 | 3 | 1 | 0 |
| Institutional Support | 24 | 0 | 8 | 1 |
| Learner Outcome | 24 | 7 | 1 | 0 |
| Course Assessment | 23 | 2 | 5 | 0 |
| Access, Culture, Equity, Inclusion and Ethics | 26 | 1 | 2 | 0 |
| Leadership, Policy and Management | 17 | 5 | 5 | 0 |
| Course Design and Development | 21 | 1 | 4 | 1 |
| Instructor Characteristics | 16 | 1 | 4 | 0 |

Research Themes by the Methodology in the Online Learning Publications

| Research Theme | Mixed Method (n = 95) | Quantitative (n = 324) | Qualitative (n = 200) |
|---|---|---|---|
| Engagement | 32 | 78 | 69 |
| Presence | 11 | 25 | 14 |
| Interaction | 9 | 20 | 14 |
| Community | 2 | 9 | 14 |
| Participation | 6 | 8 | 7 |
| Collaboration | 2 | 5 | 10 |
| Involvement | 2 | 6 | 6 |
| Communication | 0 | 5 | 4 |
| Learner Characteristics | 16 | 100 | 18 |
| Self-regulation Characteristics | 5 | 43 | 6 |
| Motivation Characteristics | 4 | 15 | 4 |
| Academic Characteristics | 1 | 15 | 3 |
| Affective Characteristics | 2 | 12 | 3 |