Understanding Assignments

What this handout is about

The first step in any successful college writing venture is reading the assignment. While this sounds like a simple task, it can be a tough one. This handout will help you unravel your assignment and begin to craft an effective response. Much of the following advice will involve translating typical assignment terms and practices into meaningful clues to the type of writing your instructor expects. See our short video for more tips.

Basic beginnings

Regardless of the assignment, department, or instructor, adopting these two habits will serve you well:

- Read the assignment carefully as soon as you receive it. Do not put this task off—reading the assignment at the beginning will save you time, stress, and problems later. An assignment can look pretty straightforward at first, particularly if the instructor has provided lots of information. That does not mean it will not take time and effort to complete; you may even have to learn a new skill to complete the assignment.

- Ask the instructor about anything you do not understand. Do not hesitate to approach your instructor. Instructors would prefer to set you straight before you hand the paper in. That’s also when you will find their feedback most useful.

Assignment formats

Many assignments follow a basic format. Assignments often begin with an overview of the topic, include a central verb or verbs that describe the task, and offer some additional suggestions, questions, or prompts to get you started.

An Overview of Some Kind

The instructor might set the stage with some general discussion of the subject of the assignment, introduce the topic, or remind you of something pertinent that you have discussed in class. For example:

“Throughout history, gerbils have played a key role in politics,” or “In the last few weeks of class, we have focused on the evening wear of the housefly …”

The Task of the Assignment

Pay attention; this part tells you what to do when you write the paper. Look for the key verb or verbs in the sentence. Words like analyze, summarize, or compare direct you to think about your topic in a certain way. Also pay attention to words such as how, what, when, where, and why; these words guide your attention toward specific information. (See the section in this handout titled “Key Terms” for more information.)

“Analyze the effect that gerbils had on the Russian Revolution,” or “Suggest an interpretation of housefly undergarments that differs from Darwin’s.”

Additional Material to Think about

Here you will find some questions to use as springboards as you begin to think about the topic. Instructors usually include these questions as suggestions rather than requirements. Do not feel compelled to answer every question unless the instructor asks you to do so. Pay attention to the order of the questions. Sometimes they suggest the thinking process your instructor imagines you will need to follow to begin thinking about the topic.

“You may wish to consider the differing views held by Communist gerbils vs. Monarchist gerbils,” or “Can there be such a thing as ‘the housefly garment industry’ or is it just a home-based craft?”

Style Tips

These are the instructor’s comments about writing expectations:

“Be concise,” “Write effectively,” or “Argue furiously.”

Technical Details

These instructions usually indicate format rules or guidelines.

“Your paper must be typed in Palatino font on gray paper and must not exceed 600 pages. It is due on the anniversary of Mao Tse-tung’s death.”

The assignment’s parts may not appear in exactly this order, and each part may be very long or really short. Nonetheless, being aware of this standard pattern can help you understand what your instructor wants you to do.

Interpreting the assignment

Ask yourself a few basic questions as you read and jot down the answers on the assignment sheet:

- Why did your instructor ask you to do this particular task?

- Who is your audience?

- What kind of evidence do you need to support your ideas?

- What kind of writing style is acceptable?

- What are the absolute rules of the paper?

Try to look at the question from the point of view of the instructor. Recognize that your instructor has a reason for giving you this assignment and for giving it to you at a particular point in the semester. In every assignment, the instructor has a challenge for you. This challenge could be anything from demonstrating an ability to think clearly to demonstrating an ability to use the library. See the assignment not as a vague suggestion of what to do but as an opportunity to show that you can handle the course material as directed. Paper assignments give you more than a topic to discuss—they ask you to do something with the topic. Keep reminding yourself of that. Be careful to avoid the other extreme as well: do not read more into the assignment than what is there.

Of course, your instructor has given you an assignment so that they will be able to assess your understanding of the course material and give you an appropriate grade. But there is more to it than that. Your instructor has tried to design a learning experience of some kind. Your instructor wants you to think about something in a particular way for a particular reason. If you read the course description at the beginning of your syllabus, review the assigned readings, and consider the assignment itself, you may begin to see the plan, purpose, or approach to the subject matter that your instructor has created for you. If you still aren’t sure of the assignment’s goals, try asking the instructor. For help with this, see our handout on getting feedback.

Given your instructor’s efforts, it helps to answer the question: What is my purpose in completing this assignment? Is it to gather research from a variety of outside sources and present a coherent picture? Is it to take material I have been learning in class and apply it to a new situation? Is it to prove a point one way or another? Key words from the assignment can help you figure this out. Look for key terms in the form of active verbs that tell you what to do.

Key Terms: Finding Those Active Verbs

Here are some common key words and definitions to help you think about assignment terms:

Information words ask you to demonstrate what you know about the subject, such as who, what, when, where, how, and why.

- define —give the subject’s meaning (according to someone or something). Sometimes you have to give more than one view on the subject’s meaning

- describe —provide details about the subject by answering question words (such as who, what, when, where, how, and why); you might also give details related to the five senses (what you see, hear, feel, taste, and smell)

- explain —give reasons why or examples of how something happened

- illustrate —give descriptive examples of the subject and show how each is connected with the subject

- summarize —briefly list the important ideas you learned about the subject

- trace —outline how something has changed or developed from an earlier time to its current form

- research —gather material from outside sources about the subject, often with the implication or requirement that you will analyze what you have found

Relation words ask you to demonstrate how things are connected.

- compare —show how two or more things are similar (and, sometimes, different)

- contrast —show how two or more things are dissimilar

- apply—use details that you’ve been given to demonstrate how an idea, theory, or concept works in a particular situation

- cause —show how one event or series of events made something else happen

- relate —show or describe the connections between things

Interpretation words ask you to defend ideas of your own about the subject. Do not see these words as requesting opinion alone (unless the assignment specifically says so), but as requiring opinion that is supported by concrete evidence. Remember examples, principles, definitions, or concepts from class or research and use them in your interpretation.

- assess —summarize your opinion of the subject and measure it against something

- prove, justify —give reasons or examples to demonstrate how or why something is the truth

- evaluate, respond —state your opinion of the subject as good, bad, or some combination of the two, with examples and reasons

- support —give reasons or evidence for something you believe (be sure to state clearly what it is that you believe)

- synthesize —put two or more things together that have not been put together in class or in your readings before; do not just summarize one and then the other and say that they are similar or different—you must provide a reason for putting them together that runs all the way through the paper

- analyze —determine how individual parts create or relate to the whole, figure out how something works, what it might mean, or why it is important

- argue —take a side and defend it with evidence against the other side

More Clues to Your Purpose

As you read the assignment, think about what the teacher does in class:

- What kinds of textbooks or coursepack did your instructor choose for the course—ones that provide background information, explain theories or perspectives, or argue a point of view?

- In lecture, does your instructor ask your opinion, try to prove their point of view, or use keywords that show up again in the assignment?

- What kinds of assignments are typical in this discipline? Social science classes often expect more research. Humanities classes thrive on interpretation and analysis.

- How do the assignments, readings, and lectures work together in the course? Instructors spend time designing courses, sometimes even arguing with their peers about the most effective course materials. Figuring out the overall design to the course will help you understand what each assignment is meant to achieve.

Now, what about your reader? Most undergraduates think of their audience as the instructor. True, your instructor is a good person to keep in mind as you write. But for the purposes of a good paper, think of your audience as someone like your roommate: smart enough to understand a clear, logical argument, but not someone who already knows exactly what is going on in your particular paper. Remember, even if the instructor knows everything there is to know about your paper topic, they still have to read your paper and assess your understanding. In other words, teach the material to your reader.

Aiming a paper at your audience happens in two ways: you make decisions about the tone and the level of information you want to convey.

- Tone means the “voice” of your paper. Should you be chatty, formal, or objective? Usually you will find some happy medium—you do not want to alienate your reader by sounding condescending or superior, but you do not want to, um, like, totally wig on the man, you know? Eschew ostentatious erudition: some students think the way to sound academic is to use big words. Be careful—you can sound ridiculous, especially if you use the wrong big words.

- The level of information you use depends on who you think your audience is. If you imagine your audience as your instructor and they already know everything you have to say, you may find yourself leaving out key information that can cause your argument to be unconvincing and illogical. But you do not have to explain every single word or issue. If you are telling your roommate what happened on your favorite science fiction TV show last night, you do not say, “First a dark-haired white man of average height, wearing a suit and carrying a flashlight, walked into the room. Then a purple alien with fifteen arms and at least three eyes turned around. Then the man smiled slightly. In the background, you could hear a clock ticking. The room was fairly dark and had at least two windows that I saw.” You also do not say, “This guy found some aliens. The end.” Find some balance of useful details that support your main point.

You’ll find a much more detailed discussion of these concepts in our handout on audience.

The Grim Truth

With a few exceptions (including some lab and ethnography reports), you are probably being asked to make an argument. You must convince your audience. It is easy to forget this aim when you are researching and writing; as you become involved in your subject matter, you may become enmeshed in the details and focus on learning or simply telling the information you have found. You need to do more than just repeat what you have read. Your writing should have a point, and you should be able to say it in a sentence. Sometimes instructors call this sentence a “thesis” or a “claim.”

So, if your instructor tells you to write about some aspect of oral hygiene, you do not want to just list: “First, you brush your teeth with a soft brush and some peanut butter. Then, you floss with unwaxed, bologna-flavored string. Finally, gargle with bourbon.” Instead, you could say, “Of all the oral cleaning methods, sandblasting removes the most plaque. Therefore it should be recommended by the American Dental Association.” Or, “From an aesthetic perspective, moldy teeth can be quite charming. However, their joys are short-lived.”

Convincing the reader of your argument is the goal of academic writing. It doesn’t have to say “argument” anywhere in the assignment for you to need one. Look at the assignment and think about what kind of argument you could make about it instead of just seeing it as a checklist of information you have to present. For help with understanding the role of argument in academic writing, see our handout on argument.

What kind of evidence do you need?

There are many kinds of evidence, and what type of evidence will work for your assignment can depend on several factors: the discipline, the parameters of the assignment, and your instructor’s preference. Should you use statistics? Historical examples? Do you need to conduct your own experiment? Can you rely on personal experience? See our handout on evidence for suggestions on how to use evidence appropriately.

Make sure you are clear about this part of the assignment, because your use of evidence will be crucial in writing a successful paper. You are not just learning how to argue; you are learning how to argue with specific types of materials and ideas. Ask your instructor what counts as acceptable evidence. You can also ask a librarian for help. No matter what kind of evidence you use, be sure to cite it correctly—see the UNC Libraries citation tutorial.

You cannot always tell from the assignment just what sort of writing style your instructor expects. The instructor may be really laid back in class but still expect you to sound formal in writing. Or the instructor may be fairly formal in class and ask you to write a reflection paper where you need to use “I” and speak from your own experience.

Try to avoid false associations of a particular field with a style (“art historians like wacky creativity,” or “political scientists are boring and just give facts”) and look instead to the types of readings you have been given in class. No one expects you to write like Plato—just use the readings as a guide for what is standard or preferable to your instructor. When in doubt, ask your instructor about the level of formality they expect.

No matter what field you are writing for or what facts you are including, if you do not write so that your reader can understand your main idea, you have wasted your time. So make clarity your main goal. For specific help with style, see our handout on style.

Technical details about the assignment

The technical information you are given in an assignment always seems like the easy part. This section can actually give you lots of little hints about approaching the task. Find out if elements such as page length and citation format (see the UNC Libraries citation tutorial) are negotiable. Some professors do not have strong preferences as long as you are consistent and fully answer the assignment. Some professors are very specific and will deduct big points for deviations.

Usually, the page length tells you something important: The instructor thinks the size of the paper is appropriate to the assignment’s parameters. In plain English, your instructor is telling you how many pages it should take for you to answer the question as fully as you are expected to. So if an assignment is two pages long, you cannot pad your paper with examples or reword your main idea several times. Hit your one point early, defend it with the clearest example, and finish quickly. If an assignment is ten pages long, you can be more complex in your main points and examples—and if you can only produce five pages for that assignment, you need to see someone for help—as soon as possible.

Tricks that don’t work

Your instructors are not fooled when you:

- spend more time on the cover page than the essay —graphics, cool binders, and cute titles are no replacement for a well-written paper.

- use huge fonts, wide margins, or extra spacing to pad the page length —these tricks are immediately obvious to the eye. Most instructors use the same word processor you do. They know what’s possible. Such tactics are especially damning when the instructor has a stack of 60 papers to grade and yours is the only one that low-flying airplane pilots could read.

- use a paper from another class that covered “sort of similar” material. Again, the instructor has a particular task for you to fulfill in the assignment that usually relates to course material and lectures. Your other paper may not cover this material, and turning in the same paper for more than one course may constitute an Honor Code violation. Ask the instructor—it can’t hurt.

- get all wacky and “creative” before you answer the question. Showing that you are able to think beyond the boundaries of a simple assignment can be good, but you must do what the assignment calls for first. Again, check with your instructor. A humorous tone can be refreshing for someone grading a stack of papers, but it will not get you a good grade if you have not fulfilled the task.

Critical reading of assignments leads to skills in other types of reading and writing. If you get good at figuring out what the real goals of assignments are, you are going to be better at understanding the goals of all of your classes and fields of study.

You may reproduce this handout for non-commercial use if you use the entire handout and attribute the source: The Writing Center, University of North Carolina at Chapel Hill.


- Systematic review

- Open access

- Published: 24 June 2024

A systematic review of experimentally tested implementation strategies across health and human service settings: evidence from 2010-2022

- Laura Ellen Ashcraft (1, 2; ORCID: orcid.org/0000-0001-9957-0617)

- David E. Goodrich (3, 4, 5)

- Joachim Hero (6)

- Angela Phares (3)

- Rachel L. Bachrach (7, 8)

- Deirdre A. Quinn (3, 4)

- Nabeel Qureshi (6)

- Natalie C. Ernecoff (6)

- Lisa G. Lederer (5)

- Leslie Page Scheunemann (9, 10)

- Shari S. Rogal (3, 11)

- Matthew J. Chinman (3, 4, 6)

Implementation Science volume 19, Article number: 43 (2024)


Studies of implementation strategies range in rigor, design, and evaluated outcomes, presenting interpretation challenges for practitioners and researchers. This systematic review aimed to describe the body of research evidence testing implementation strategies across diverse settings and domains, using the Expert Recommendations for Implementing Change (ERIC) taxonomy to classify strategies and the Reach Effectiveness Adoption Implementation and Maintenance (RE-AIM) framework to classify outcomes.

We conducted a systematic review of studies examining implementation strategies from 2010-2022; the review was registered with PROSPERO (CRD42021235592). We searched databases using the terms “implementation strategy,” “intervention,” “bundle,” “support,” and their variants. We also solicited study recommendations from implementation science experts and mined existing systematic reviews. We included studies that quantitatively assessed the impact of at least one implementation strategy to improve health or health care using an outcome that could be mapped to the five evaluation dimensions of RE-AIM. Only studies meeting prespecified methodologic standards were included. We described the characteristics of studies and frequency of implementation strategy use across study arms. We also examined common strategy pairings and co-occurrence with significant outcomes.

Our search resulted in 16,605 studies; 129 met inclusion criteria. Studies tested an average of 6.73 strategies (range 0-20). The most assessed outcomes were Effectiveness (n=82; 64%) and Implementation (n=73; 56%). The implementation strategies most frequently occurring in the experimental arm were Distribute Educational Materials (n=99), Conduct Educational Meetings (n=96), Audit and Provide Feedback (n=76), and External Facilitation (n=59). These strategies were often used in combination. Nineteen implementation strategies were frequently tested and associated with significantly improved outcomes. However, many strategies were not tested sufficiently to draw conclusions.

This review of 129 methodologically rigorous studies built upon prior implementation science data syntheses to identify implementation strategies that had been experimentally tested and to summarize their impact across diverse outcomes and clinical settings. We present recommendations for improving future similar efforts.


Contributions to the literature

- While many implementation strategies exist, it has been challenging to compare their effectiveness across a wide range of trial designs and practice settings.

- This systematic review provides a transdisciplinary evaluation of implementation strategies across population, practice setting, and evidence-based interventions using a standardized taxonomy of strategies and outcomes.

- Educational strategies were employed ubiquitously; nineteen other commonly used implementation strategies, including External Facilitation and Audit and Provide Feedback, were associated with positive outcomes in these experimental trials.

- This review offers guidance for scholars and practitioners alike in selecting implementation strategies and suggests a roadmap for future evidence generation.

Implementation strategies are “methods or techniques used to enhance the adoption, implementation, and sustainment of evidence-based practices or programs” (EBPs) [1]. In 2015, the Expert Recommendations for Implementing Change (ERIC) study organized a panel of implementation scientists to compile a standardized set of implementation strategy terms and definitions [2, 3, 4]. These 73 strategies were then organized into nine “clusters” [5]. The ERIC taxonomy has been widely adopted and further refined [6, 7, 8, 9, 10, 11, 12, 13]. However, much of the evidence for individual ERIC strategies or groups of strategies remains narrowly focused. Prior systematic reviews and meta-analyses have assessed strategy effectiveness, but have generally focused on a specific strategy (e.g., Audit and Provide Feedback) [14, 15, 16], subpopulation, disease (e.g., individuals living with dementia) [16], outcome [15], service setting (e.g., primary care clinics) [17, 18, 19] or geography [20]. Given that these strategies are intended to have broad applicability, there remains a need to understand how well implementation strategies work across EBPs and settings and the extent to which implementation knowledge is generalizable.

There are challenges in assessing the evidence of implementation strategies across many EBPs, populations, and settings. Heterogeneity in population characteristics, study designs, methods, and outcomes has made it difficult to quantitatively compare which strategies work and under which conditions [21]. Moreover, there remains significant variability in how researchers operationalize, apply, and report strategies (individually or in combination) and outcomes [21, 22]. Still, synthesizing data related to using individual strategies would help researchers replicate findings and better understand possible mediating factors including the cost, timing, and delivery by specific types of health providers or key partners [23, 24, 25]. Such an evidence base would also aid practitioners with implementation planning such as when and how to deploy a strategy for optimal impact.

Building upon previous efforts, we therefore conducted a systematic review to evaluate the level of evidence supporting the ERIC implementation strategies across a broad array of health and human service settings and outcomes, as organized by the evaluation framework, RE-AIM (Reach, Effectiveness, Adoption, Implementation, Maintenance) [26, 27, 28]. A secondary aim of this work was to identify patterns in scientific reporting of strategy use that could inform not only reporting standards for strategies but also the methods employed in future studies. The current study was guided by the following research questions (Footnote 1):

- What implementation strategies have been most commonly and rigorously tested in health and human service settings?

- Which implementation strategies were commonly paired?

- What is the evidence supporting commonly tested implementation strategies?

We used the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA-P) model [29, 30, 31] to develop and report on the methods for this systematic review (Additional File 1). This study was considered to be non-human subjects research by the RAND institutional review board.

Registration

The protocol was registered with PROSPERO (PROSPERO 2021 CRD42021235592).

Eligibility criteria

This review sought to synthesize evidence for implementation strategies from research studies conducted across a wide range of health-related settings and populations. To be included, studies had to: 1) be available in English; 2) be published between January 1, 2010 and September 20, 2022; 3) be based on experimental research (protocols, commentaries, conference abstracts, and proposed frameworks were excluded); 4) be set in a health or human service context (described below); 5) test at least one quantitative outcome that could be mapped to the RE-AIM evaluation framework [26, 27, 28]; and 6) evaluate the impact of an implementation strategy that could be classified using the ERIC taxonomy [2, 32]. We defined health and human service setting broadly, including inpatient and outpatient healthcare settings, specialty clinics, mental health treatment centers, long-term care facilities, group homes, correctional facilities, child welfare or youth services, aging services, and schools, and required that the focus be on a health outcome. We excluded hybrid type I trials that primarily focused on establishing EBP effectiveness, qualitative studies, studies that described implementation barriers and facilitators without assessing implementation strategy impact on an outcome, and studies not meeting standardized rigor criteria defined below.

Information sources

Our three-pronged search strategy included searching academic databases (i.e., CINAHL, PubMed, and Web of Science for replicability and transparency), seeking recommendations from expert implementation scientists, and assessing existing, relevant systematic reviews and meta-analyses.

Search strategy

Search terms included “implementation strateg*” OR “implementation intervention*” OR “implementation bundl*” OR “implementation support*.” The search, conducted on September 20, 2022, was limited to English language and publication between 2010 and 2022, similar to other recent implementation science reviews [22]. This timeframe was selected to coincide with the advent of Implementation Science and when the term “implementation strategy” became conventionally used [2, 4, 33]. A full search strategy can be found in Additional File 2.

Title and abstract screening process

Each study’s title and abstract were read by two reviewers, who dichotomously scored studies on each of the six eligibility criteria described above as yes=1 or no=0, resulting in a score ranging from 0 to 6. Abstracts receiving a six from both reviewers were included in the full text review. Those with only one score of six were adjudicated by a senior member of the team (MJC, SSR, DEG). The study team held weekly meetings to troubleshoot and resolve any ongoing issues noted through the abstract screening process.
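The screening rule above can be sketched in a few lines of Python. This is only an illustration of the logic as described, not the study team's actual tooling: the function names and the three outcome labels are hypothetical, and treating abstracts with no score of six as excluded is an assumption the text implies but does not state.

```python
from typing import Sequence

N_CRITERIA = 6  # the six eligibility criteria described above


def screening_score(criteria_met: Sequence[bool]) -> int:
    """Sum one reviewer's yes=1 / no=0 judgments across the six criteria."""
    if len(criteria_met) != N_CRITERIA:
        raise ValueError("expected one judgment per eligibility criterion")
    return sum(1 for met in criteria_met if met)


def screening_decision(reviewer_a: Sequence[bool], reviewer_b: Sequence[bool]) -> str:
    """Combine two reviewers' scores into a decision (labels are hypothetical)."""
    perfect = [screening_score(r) == N_CRITERIA for r in (reviewer_a, reviewer_b)]
    if all(perfect):
        return "full_text"   # both reviewers scored the abstract a six
    if any(perfect):
        return "adjudicate"  # only one six: a senior team member decides
    return "exclude"         # assumption: no six from either reviewer


if __name__ == "__main__":
    all_yes = [True] * 6
    one_no = [True] * 5 + [False]
    print(screening_decision(all_yes, all_yes))
    print(screening_decision(all_yes, one_no))
```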

Full text screening

During the full text screening process, we reviewed, in pairs, each article that had progressed through abstract screening. Conflicts between reviewers were adjudicated by a senior member of the team for a final inclusion decision (MJC, SSR, DEG).

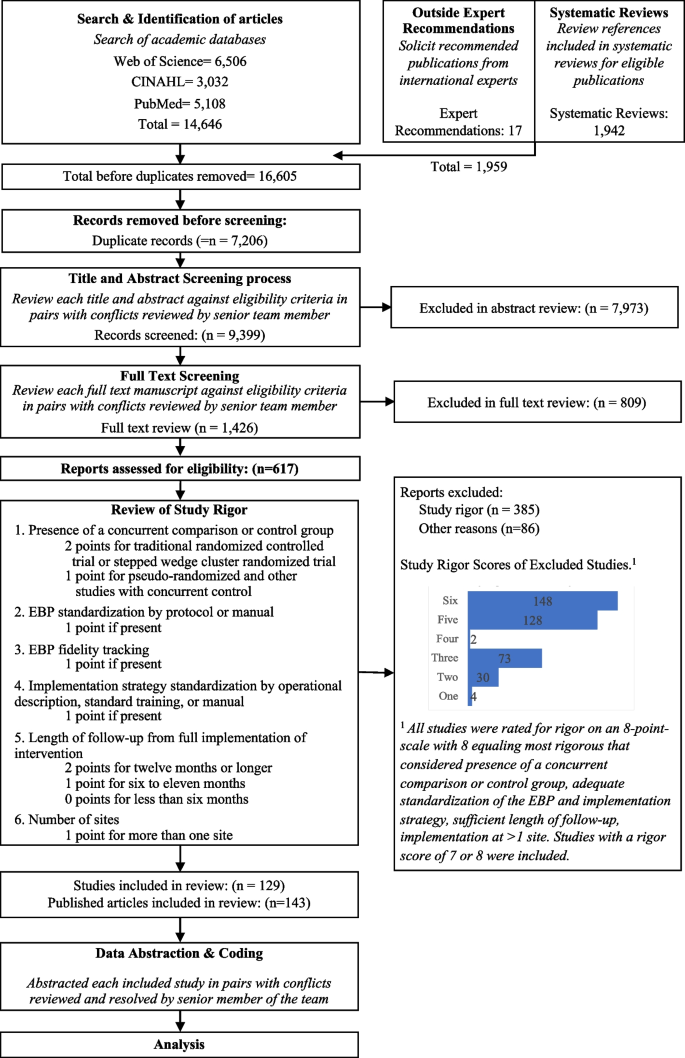

Review of study rigor

After reviewing published rigor screening tools [34, 35, 36], we developed an assessment of study rigor that was appropriate for the broad range of reviewed implementation studies. Reviewers evaluated studies on the following: 1) presence of a concurrent comparison or control group (=2 for traditional randomized controlled trial or stepped wedge cluster randomized trial and =1 for pseudo-randomized and other studies with concurrent control); 2) EBP standardization by protocol or manual (=1 if present); 3) EBP fidelity tracking (=1 if present); 4) implementation strategy standardization by operational description, standard training, or manual (=1 if present); 5) length of follow-up from full implementation of intervention (=2 for twelve months or longer, =1 for six to eleven months, or =0 for less than six months); and 6) number of sites (=1 for more than one site). Rigor scores ranged from 0 to 8, with 8 indicating the most rigorous. Articles were included if they 1) included a concurrent control group, 2) had an experimental design, and 3) received a score of 7 or 8 from two independent reviewers.
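As a rough illustration, the six-item rigor rubric and the inclusion rule can be written out as follows. The class, field names, and design labels are hypothetical; scoring a study without a concurrent comparison as 0 on the first item and recording follow-up in whole months are assumptions made for the sketch.

```python
from dataclasses import dataclass

# Points for item 1 (comparison group). The keys are hypothetical labels.
DESIGN_POINTS = {
    "randomized": 2,          # traditional RCT or stepped wedge cluster randomized trial
    "concurrent_control": 1,  # pseudo-randomized or other concurrent control
    "none": 0,                # assumption: no concurrent comparison scores 0
}


@dataclass
class RigorRating:
    """One reviewer's rating of one study."""
    design: str
    ebp_standardized: bool
    ebp_fidelity_tracked: bool
    strategy_standardized: bool
    followup_months: int  # assumption: follow-up recorded in whole months
    n_sites: int


def followup_points(months: int) -> int:
    """Item 5: 2 for twelve months or longer, 1 for six to eleven, else 0."""
    if months >= 12:
        return 2
    if months >= 6:
        return 1
    return 0


def rigor_score(r: RigorRating) -> int:
    """Total rigor score, from 0 to 8."""
    return (
        DESIGN_POINTS[r.design]
        + int(r.ebp_standardized)
        + int(r.ebp_fidelity_tracked)
        + int(r.strategy_standardized)
        + followup_points(r.followup_months)
        + int(r.n_sites > 1)
    )


def passes_rigor_screen(a: RigorRating, b: RigorRating, experimental_design: bool) -> bool:
    """Inclusion rule: concurrent control, experimental design, and 7 or 8 from both reviewers."""
    has_control = a.design != "none" and b.design != "none"
    return experimental_design and has_control and min(rigor_score(a), rigor_score(b)) >= 7
```

The maximum of 8 follows from the item weights (2 + 1 + 1 + 1 + 2 + 1), which matches the stated 0 to 8 range.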

Outside expert consultation

We contacted 37 global implementation science experts who were recognized by our study team as leaders in the field or who were commonly represented among first or senior authors in the included abstracts. We asked each expert for recommendations of publications meeting study inclusion criteria (i.e., quantitatively evaluating the effectiveness of an implementation strategy). Recommendations were recorded and compared to the full abstract list.

Systematic reviews

Eighty-four systematic reviews were identified through the initial search strategy (See Additional File 3). Systematic reviews that examined the effectiveness of implementation strategies were reviewed in pairs for studies that were not found through our initial literature search.

Data abstraction and coding

Data from the full text review were abstracted in pairs using a standard Qualtrics abstraction form, with conflicts resolved by senior team members (DEG, MJC). The form captured the setting, number of sites and participants studied, evidence-based practice/program of focus, outcomes assessed (based on RE-AIM), strategies used in each study arm, whether the study took place in the U.S. or outside of the U.S., and the findings (i.e., was there significant improvement in the outcome(s)?). We coded implementation strategies used in the Control and Experimental Arms. We defined the Control Arm as receiving the lowest number of strategies (which could mean zero strategies or care as usual) and the Experimental Arm as the most intensive arm (i.e., receiving the highest number of strategies). When studies included multiple Experimental Arms, the Experimental Arm with the least intensive implementation strategy(ies) was classified as “Control” and the Experimental Arm with the most intensive implementation strategy(ies) was classified as the “Experimental” Arm.
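The arm-labeling rule can be illustrated with a short Python sketch. It uses the number of coded strategies as a stand-in for intensity, as the definitions above do. The function name and input shape are hypothetical, and raising an error when the least and most intensive arms tie is an assumption, since the text does not say how ties were handled.

```python
from typing import Dict, Set, Tuple


def classify_arms(arms: Dict[str, Set[str]]) -> Tuple[str, str]:
    """Return (control_arm, experimental_arm) by number of coded strategies.

    `arms` maps an arm name to the set of strategies coded for that arm.
    Partial ties are broken by dictionary order (first arm listed wins).
    """
    if len(arms) < 2:
        raise ValueError("need at least two study arms")
    counts = {name: len(strategies) for name, strategies in arms.items()}
    control = min(counts, key=counts.get)       # fewest strategies
    experimental = max(counts, key=counts.get)  # most strategies
    if counts[control] == counts[experimental]:
        # Assumption: the source does not describe this case.
        raise ValueError("all arms use the same number of strategies")
    return control, experimental


if __name__ == "__main__":
    example = {
        "usual care": set(),
        "education": {"Distribute Educational Materials"},
        "education + facilitation": {
            "Distribute Educational Materials",
            "External Facilitation",
        },
    }
    print(classify_arms(example))
```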

Implementation strategies were classified using standard definitions (MJC, SSR, DEG), based on minor modifications to the ERIC taxonomy [ 2 , 3 , 4 ]. Modifications resulted in 70 named strategies and were made to decrease redundancy and improve clarity. These modifications were based on input from experts, cognitive interview data, and team consensus [ 37 ] (See Additional File 4). Outcomes were then coded into RE-AIM outcome domains following best practices as recommended by framework experts [ 26 , 27 , 28 ]. We coded the RE-AIM domain of Effectiveness as either an assessment of the effectiveness of the EBP or the implementation strategy. We did not assess implementation strategy fidelity or effects on health disparities as these are recently adopted reporting standards [ 27 , 28 ] and not yet widely implemented in current publications. Further, we did not include implementation costs as an outcome because reporting guidelines have not been standardized [ 38 , 39 ].

Assessment and minimization of bias

Assessment and minimization of bias is an important component of high-quality systematic reviews. The Cochrane Collaboration guidance for conducting high-quality systematic reviews recommends including a specific assessment of bias for individual studies by assessing the domains of randomization, deviations of intended intervention, missing data, measurement of the outcome, and selection of the reported results (e.g., following a pre-specified analysis plan) [ 40 , 41 ]. One way we addressed bias was by consolidating multiple publications from the same study into a single finding (i.e., N =1), so as to avoid inflating estimates due to multiple publications on different aspects of a single trial. We also included high-quality studies only, as described above. However, it was not feasible to consistently apply an assessment of bias tool due to implementation science’s broad scope and the heterogeneity of study design, context, outcomes, and variable measurement. For example, most implementation studies reviewed had many outcomes across the RE-AIM framework, with no one outcome designated as primary, precluding assignment of a single score across studies.
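Consolidating publications so that each trial counts once can be illustrated with a simple grouping step. This sketch assumes each publication has already been linked to a study identifier; the identifiers and citation strings below are hypothetical, and how findings were merged within a study is not specified in the text, so the sketch does not attempt it.

```python
from collections import defaultdict


def group_by_study(publications: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group (study_id, citation) pairs so that each study counts as N = 1."""
    studies: dict[str, list[str]] = defaultdict(list)
    for study_id, citation in publications:
        studies[study_id].append(citation)
    return dict(studies)


# Hypothetical records: two papers report on trial "T1", one on trial "T2"
records = [("T1", "main outcomes paper"), ("T1", "maintenance paper"), ("T2", "main outcomes paper")]
grouped = group_by_study(records)
print(len(records), "publications represent", len(grouped), "studies")
```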

We used descriptive statistics to present the distribution of health or healthcare area, settings, outcomes, and the median number of included patients and sites per study, overall and by country (classified as U.S. vs. non-U.S.). Implementation strategies were described individually, using descriptive statistics to summarize the frequency of strategy use “overall” (in any study arm), and the mean number of strategies reported in the Control and Experimental Arms. We additionally described the strategies that were only in the experimental (and not control) arm, defining these as strategies that were “tested” and may have accounted for differences in outcomes between arms.

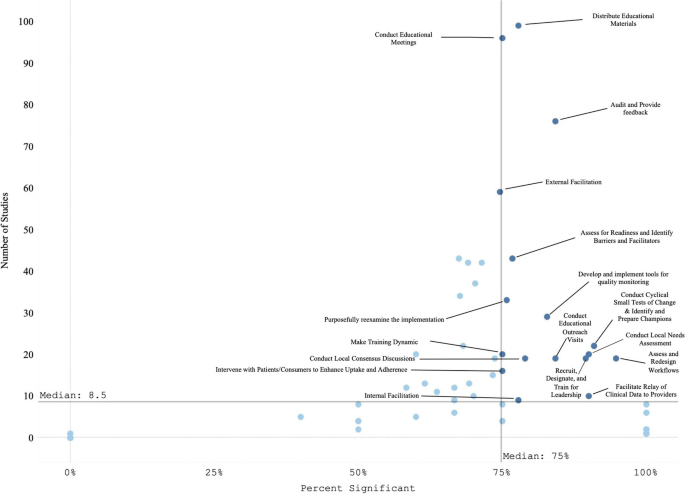

We described frequencies of pair-wise combinations of implementation strategies in the Experimental Arm. To assess the strength of the evidence supporting implementation strategies that were used in the Experimental Arm, study outcomes were categorized by RE-AIM and coded based on whether use of the strategies was associated with a significantly positive effect (yes=1; no=0). We then created an indicator variable for whether at least one RE-AIM outcome in the study was significantly positive (yes=1; no=0). We plotted strategies on a graph with quadrants based on the combination of median number of studies in which a strategy appears and the median percent of studies in which a strategy was associated with at least one positive RE-AIM outcome. The upper right quadrant (higher number of studies overall and higher percent of studies with a significant RE-AIM outcome) represents a superior level of evidence. For implementation strategies in the upper right quadrant, we describe each RE-AIM outcome and the proportion of studies that have a significant outcome.
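The indicator variable and the median-based quadrants can be sketched as follows. This is an illustration under stated assumptions: the function names and example counts are hypothetical, the number of studies is treated as the vertical axis and the percent positive as the horizontal axis, and values exactly at a median are assigned to the lower or left side, a boundary rule the text does not specify.

```python
from statistics import median


def any_positive(outcomes: dict[str, int]) -> int:
    """1 if at least one RE-AIM outcome was coded significantly positive, else 0."""
    return int(any(outcomes.values()))


def assign_quadrants(stats: dict[str, tuple[int, int]]) -> dict[str, str]:
    """Map each strategy to a quadrant.

    `stats` maps strategy -> (studies using it, studies with >= 1 positive outcome).
    Every strategy must appear in at least one study.
    """
    pct = {s: 100 * positive / n for s, (n, positive) in stats.items()}
    median_n = median(n for n, _ in stats.values())
    median_pct = median(pct.values())
    quadrants = {}
    for s, (n, _) in stats.items():
        vertical = "upper" if n > median_n else "lower"
        horizontal = "right" if pct[s] > median_pct else "left"
        quadrants[s] = f"{vertical} {horizontal}"
    return quadrants


# Hypothetical counts for four strategies
example = {"A": (10, 9), "B": (4, 2), "C": (12, 6), "D": (3, 3)}
print(assign_quadrants(example))
```

Medians rather than means are used for the cut points, which keeps a few very frequently used strategies from shifting the quadrant boundaries.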

Search results

We identified 14,646 articles through the initial literature search, 17 articles through expert recommendation (three of which were not included in the initial search), and 1,942 articles through reviewing prior systematic reviews (Fig. 1). After removing duplicates, 9,399 articles were included in the initial abstract screening. Of those, 48% ( n =4,075) were reviewed in pairs for inclusion. Articles with a score of five or six were reviewed a second time ( n =2,859). One quarter of abstracts that scored lower than five were reviewed for a second time at random. We screened the full text of 1,426 articles in pairs. Common reasons for exclusion were 1) study rigor, including no clear delineation between the EBP and implementation strategy, 2) not testing an implementation strategy, and 3) article type that did not meet inclusion criteria (e.g., commentary or protocol). Six hundred seventeen articles were reviewed for study rigor, with 385 excluded for reasons related to study design and rigor, and 86 removed for other reasons (e.g., not a research article). Among the three additional expert-recommended articles, one met inclusion criteria and was added to the analysis. The final number of studies abstracted was 129, representing 143 publications.

Expanded PRISMA Flow Diagram

The expanded PRISMA flow diagram provides a description of each step in the review and abstraction process for the systematic review

Descriptive results

Of 129 included studies (Table 1; see also Additional File 5 for Summary of Included Studies), 103 (79%) were conducted in a healthcare setting. EBP health care setting varied and included primary care ( n =46; 36%), specialty care ( n =27; 21%), mental health ( n =11; 9%), and public health ( n =30; 23%), with 64 studies (50%) occurring in an outpatient health care setting. Studies included a median of 29 sites and a median target population of 1,419 (e.g., patients or students). The number of strategies varied widely across studies, with Control Arms averaging approximately two strategies (Range = 0-20, including studies with no strategy in the comparison group) and Experimental Arms averaging eight strategies (Range = 1-21). Non-US studies ( n =73) included more sites and a larger target population on average, with an overall median of 32 sites and 1,531 patients assessed in each study.

Organized by RE-AIM, the most evaluated outcomes were Effectiveness ( n = 82, 64%) and Implementation ( n = 73, 56%); followed by Maintenance ( n =40; 31%), Adoption ( n =33; 26%), and Reach ( n =31; 24%). Most studies ( n = 98, 76%) reported at least one significantly positive outcome. Adoption and Implementation outcomes showed positive change in three-quarters of studies ( n =78), while Reach ( n =18; 58%), Effectiveness ( n =44; 54%), and Maintenance ( n =23; 58%) outcomes evidenced positive change in approximately half of studies.

The following describes the results for each research question.

Table 2 shows the frequency of studies within which an implementation strategy was used in the Control Arm, Experimental Arm(s), and tested strategies (those used exclusively in the Experimental Arm) grouped by strategy type, as specified by previous ERIC reports [ 2 , 6 ].

Control arm

In about half the studies (53%; n =69), the Control Arms were “active controls” that included at least one strategy, with an average of 1.64 (and up to 20) strategies reported in control arms. The two most common strategies used in Control Arms were: Distribute Educational Materials ( n =52) and Conduct Educational Meetings ( n =30).

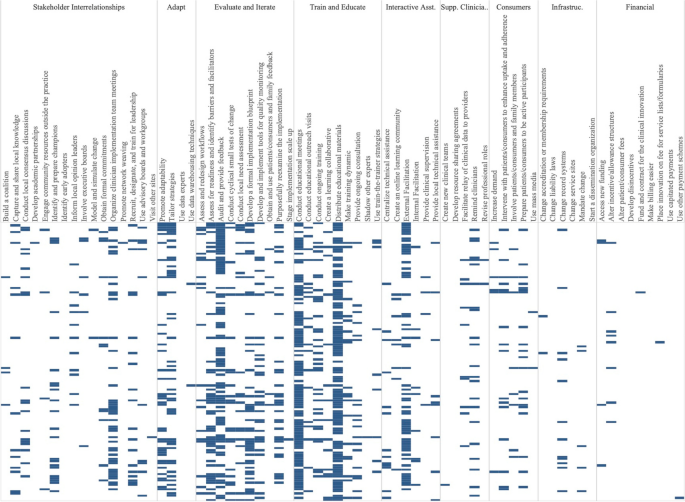

Experimental arm

Experimental conditions included an average of 8.33 implementation strategies per study (Range = 1-21). Figure 2 shows a heat map of the strategies that were used in the Experimental Arms in each study. The most common strategies in the Experimental Arm were Distribute Educational Materials ( n =99), Conduct Educational Meetings ( n =96), Audit and Provide Feedback ( n =76), and External Facilitation ( n =59).

Implementation strategies used in the Experimental Arm of included studies. Explore more here: https://public.tableau.com/views/Figure2_16947070561090/Figure2?:language=en-US&:display_count=n&:origin=viz_share_link

Tested strategies

The average number of implementation strategies that were included in the Experimental Arm only (and not in the Control Arm) was 6.73 (Range = 0-20; see Footnote 2). Overall, the top 10% of tested strategies included Conduct Educational Meetings ( n =68), Audit and Provide Feedback ( n =63), External Facilitation ( n =54), Distribute Educational Materials ( n =49), Tailor Strategies ( n =41), Assess for Readiness and Identify Barriers and Facilitators ( n =38) and Organize Clinician Implementation Team Meetings ( n =37). Few studies tested a single strategy ( n =9). These strategies included Audit and Provide Feedback, Conduct Educational Meetings, Conduct Ongoing Training, Create a Learning Collaborative, External Facilitation ( n =2), Facilitate Relay of Clinical Data To Providers, Prepare Patients/Consumers to be Active Participants, and Use Other Payment Schemes. Three implementation strategies were included in the Control or Experimental Arms but were not Tested: Use Mass Media, Stage Implementation Scale Up, and Fund and Contract for the Clinical Innovation.

Table 3 shows the five most used strategies in Experimental Arms with their top ten most frequent pairings, excluding Distribute Educational Materials and Conduct Educational Meetings, as these strategies were included in almost all Experimental and half of Control Arms. The five most used strategies in the Experimental Arm included Audit and Provide Feedback ( n =76), External Facilitation ( n =59), Tailor Strategies ( n =43), Assess for Readiness and Identify Barriers and Facilitators ( n =43), and Organize Implementation Teams ( n =42).

Strategies frequently paired with these five strategies included two educational strategies: Distribute Educational Materials and Conduct Educational Meetings. Other commonly paired strategies included Develop a Formal Implementation Blueprint, Promote Adaptability, Conduct Ongoing Training, Purposefully Reexamine the Implementation, and Develop and Implement Tools for Quality Monitoring.
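Pairing frequencies of this kind can be viewed as co-occurrence counts over the Experimental Arm of each study. The sketch below is one way to compute them, not the authors' code; the function name and the toy strategy labels are hypothetical.

```python
from collections import Counter
from itertools import combinations


def pair_counts(experimental_arms: list[set[str]]) -> Counter:
    """Count the number of studies in which each unordered pair of strategies co-occurs."""
    counts: Counter = Counter()
    for strategies in experimental_arms:
        # Sorting gives each pair a single canonical ordering
        for pair in combinations(sorted(strategies), 2):
            counts[pair] += 1
    return counts


# Hypothetical Experimental Arms from three studies
arms = [{"A", "B", "C"}, {"A", "B"}, {"B", "C"}]
for pair, n in pair_counts(arms).most_common():
    print(pair, n)
```

To list the most frequent partners of one strategy, the counter can be filtered to pairs containing that strategy before calling `most_common`.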

We classified the strength of evidence for each strategy by evaluating both the number of studies in which each strategy appeared in the Experimental Arm and the percentage of times there was at least one significantly positive RE-AIM outcome. Using these factors, Fig. 3 shows the number of studies in which individual strategies were evaluated (on the y axis) compared to the percentage of times that studies including those strategies had at least one positive outcome (on the x axis). Due to the non-normal distribution of both factors, we used the median (rather than the mean) to create four quadrants. Strategies in the lower left quadrant were tested in fewer than the median number of studies (8.5) and were less frequently associated with a significant RE-AIM outcome (75%). The upper right quadrant included strategies that occurred in more than the median number of studies (8.5) and had more than the median percent of studies with a significant RE-AIM outcome (75%); thus those 19 strategies were viewed as having stronger evidence. Of those 19 implementation strategies, Conduct Educational Meetings, Distribute Educational Materials, External Facilitation, and Audit and Provide Feedback continued to occur frequently, appearing in 59-99 studies.

Experimental Arm Implementation Strategies with significant RE-AIM outcome. Explore more here: https://public.tableau.com/views/Figure3_16947017936500/Figure3?:language=en-US&publish=yes&:display_count=n&:origin=viz_share_link

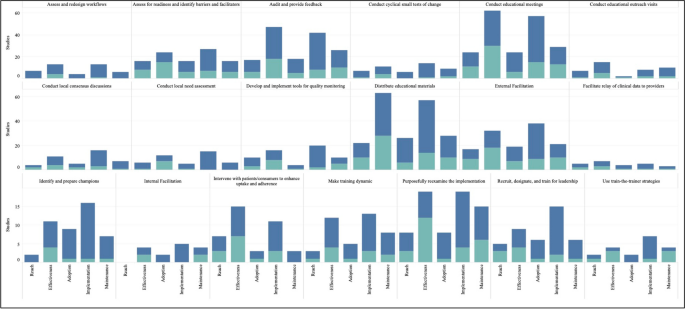

Figure 4 graphically illustrates the proportion of significant outcomes for each RE-AIM outcome for the 19 commonly used and evidence-based implementation strategies in the upper right quadrant. These findings again show the widespread use of Conduct Educational Meetings and Distribute Educational Materials. Implementation and Effectiveness outcomes were assessed most frequently, with Implementation being the most commonly reported significantly positive outcome.

RE-AIM outcomes for the 19 Top-Right Quadrant Implementation Strategies. The y-axis is the number of studies and the x-axis is a stacked bar chart for each RE-AIM outcome with R=Reach, E=Effectiveness, A=Adoption, I=Implementation, M=Maintenance. Blue denotes at least one significant RE-AIM outcome; light blue denotes studies which used the given implementation strategy and did not have a significant RE-AIM outcome. Explore more here: https://public.tableau.com/views/Figure4_16947017112150/Figure4?:language=en-US&publish=yes&:display_count=n&:origin=viz_share_link

This systematic review identified 129 experimental studies examining the effectiveness of implementation strategies across a broad range of health and human service studies. Overall, we found that evidence is lacking for most ERIC implementation strategies, that most studies employed combinations of strategies, and that implementation outcomes, categorized by RE-AIM dimensions, have not been universally defined or applied. Accordingly, other researchers have described the need for universal outcomes definitions and descriptions across implementation research studies [ 28 , 42 ]. Our findings have important implications not only for the current state of the field but also for creating guidance to help investigators determine which strategies and in what context to examine.

The four most evaluated strategies were Distribute Educational Materials, Conduct Educational Meetings, External Facilitation, and Audit and Provide Feedback. Conducting Educational Meetings and Distributing Educational Materials were surprisingly the most common. This may reflect the fact that education strategies are generally considered to be “necessary but not sufficient” for successful implementation [ 43 , 44 ]. Because education is often embedded in interventions, it is critical to define the boundary between the innovation and the implementation strategies used to support the innovation. Further specification as to when these strategies are EBP core components or implementation strategies (e.g., booster trainings or remediation) is needed [ 45 , 46 ].

We identified 19 implementation strategies that were tested in at least 8 studies (more than the median) and were associated with positive results at least 75% of the time. These strategies can be further categorized as being used in early or pre-implementation versus later in implementation. Preparatory, or pre-implementation, strategies that had strong evidence included educational activities (Meetings, Materials, Outreach visits, Train for Leadership, Use Train the Trainer Strategies) and site diagnostic activities (Assess for Readiness, Identify Barriers and Facilitators, Conduct Local Needs Assessment, Identify and Prepare Champions, and Assess and Redesign Workflows). Strategies that target the implementation phase include those that provide coaching and support (External and Internal Facilitation), involve additional key partners (Intervene with Patients to Enhance Uptake and Adherence), and engage in quality improvement activities (Audit and Provide Feedback, Facilitate the Relay of Clinical Data to Providers, Purposefully Reexamine the Implementation, Conduct Cyclical Small Tests of Change, Develop and Implement Tools for Quality Monitoring).

There were many ERIC strategies that were not represented in the reviewed studies, specifically the financial and policy strategies. Ten strategies were not used in any studies: Alter Patient/Consumer Fees, Change Liability Laws, Change Service Sites, Develop Disincentives, Develop Resource Sharing Agreements, Identify Early Adopters, Make Billing Easier, Start a Dissemination Organization, Use Capitated Payments, and Use Data Experts. One of the limitations of this investigation was that not all individual strategies or combinations were investigated. Reasons for the absence of these strategies in our review may include challenges with testing certain strategies experimentally (e.g., changing liability laws), limitations in our search terms, and the relative paucity of implementation strategy trials compared to clinical trials. Many “untested” strategies require large-scale structural changes with leadership support (see [ 47 ] for policy experiment example). Recent preliminary work has assessed the feasibility of applying policy strategies and described the challenges with doing so [ 48 , 49 , 50 ]. While such evaluations are not impossible in large systems like VA (for example, the randomized evaluation of the VA Stratification Tool for Opioid Risk Management), the large size, structure, and organizational imperative make these initiatives challenging to experimentally evaluate. Likewise, the absence of these ten strategies may have been the result of our inclusion criteria, which required an experimental design. Thus, creative study designs may be needed to test high-level policy or financial strategies experimentally.

Some strategies that were likely under-represented in our search strategy included electronic medical record reminders and clinical decision support tools and systems. These are often considered “interventions” when used by clinical trialists and may not be indexed as studies involving ‘implementation strategies’ (these tools have been reviewed elsewhere [ 51 , 52 , 53 ]). Thus, strategies that are also considered interventions in the literature (e.g., education interventions) were not sought or captured. Our findings do not imply that these strategies are ineffective, rather that more study is needed. Consistent with prior investigations [ 54 ], few studies meeting inclusion criteria tested financial strategies. Accordingly, there are increasing calls to track and monitor the effects of financial strategies within implementation science to understand their effectiveness in practice [ 55 , 56 ]. However, experts have noted that the study of financial strategies can be a challenge given that they are typically implemented at the system-level and necessitate research designs for studying policy-effects (e.g., quasi-experimental methods, systems-science modeling methods) [ 57 ]. Yet, there have been some recent efforts to use financial strategies to support EBPs that appear promising [ 58 ] and could be a model for the field moving forward.

The relationship between the number of strategies used and improved outcomes has been described inconsistently in the literature. While some studies have found improved outcomes with a bundle of strategies that were uniquely combined or a standardized package of strategies (e.g., Replicating Effective Programs [ 59 , 60 ] and Getting To Outcomes [ 61 , 62 ]), others have found that “more is not always better” [ 63 , 64 , 65 ]. For example, Rogal and colleagues documented that VA hospitals implementing a new evidence-based hepatitis C treatment chose >20 strategies, when multiple years of data linking strategies to outcomes showed that 1-3 specific strategies would have yielded the same outcome [ 39 ]. Considering that most studies employed multiple or multifaceted strategies, it seems that there is a benefit of using a targeted bundle of strategies that purposefully aligns with site/clinic/population norms, rather than simply adding more strategies [ 66 ].

It is difficult to assess the effectiveness of any one implementation strategy in bundles where multiple strategies are used simultaneously. Even a ‘single’ strategy like External Facilitation is, in actuality, a bundle of narrowly constructed strategies (e.g., Conduct Educational Meetings, Identify and Prepare Champions, and Develop a Formal Implementation Blueprint). Thus, studying External Facilitation does not allow for a test of the individual strategies that comprise it, potentially masking the effectiveness of any individual strategy. While we cannot easily disaggregate the effects of multifaceted strategies, doing so may not yield meaningful results. Because strategies often synergize, disaggregated results could either underestimate the true impact of individual strategies or, conversely, actually undermine their effectiveness (i.e., when their effectiveness comes from their combination with other strategies). The complexity of health and human service settings, imperative to improve public health outcomes, and engagement with community partners often require the use of multiple strategies simultaneously. Therefore, the need to improve real-world implementation may outweigh the theoretical need to identify individual strategy effectiveness. In situations where it would be useful to isolate the impact of single strategies, we suggest that the same methods for documenting and analyzing the critical components (or core functions) of complex interventions [ 67 , 68 , 69 , 70 ] may help to identify core components of multifaceted implementation strategies [ 71 , 72 , 73 , 74 ].

In addition, to truly assess the impacts of strategies on outcomes, it may be necessary to track fidelity to implementation strategies (not just the EBPs they support). While this can be challenging, without some degree of tracking and fidelity checks, one cannot determine whether a strategy’s apparent failure to work was because it 1) was ineffective or 2) was not applied well. To facilitate this tracking there are pragmatic tools to support researchers. For example, the Longitudinal Implementation Strategy Tracking System (LISTS) offers a pragmatic and feasible means to assess fidelity to and adaptations of strategies [ 75 ].

Implications for implementation science: four recommendations

Based on our findings, we offer four recommended “best practices” for implementation studies.

Prespecify strategies using standard nomenclature. This study reaffirmed the need to apply not only a standard naming convention (e.g., ERIC) but also a standard reporting of implementation strategies. While reporting systems like those by Proctor [ 1 ] or Pinnock [ 75 ] would optimize learning across studies, few manuscripts specify strategies as recommended [ 76 , 77 ]. Pre-specification allows planners and evaluators to assess the feasibility and acceptability of strategies with partners and community members [ 24 , 78 , 79 ] and allows evaluators and implementers to monitor and measure the fidelity, dose, and adaptations to strategies delivered over the course of implementation [ 27 ]. In turn, these data can be used to assess the costs, analyze their effectiveness [ 38 , 80 , 81 ], and ensure more accurate reporting [ 82 , 83 , 84 , 85 ]. This specification should include, among other data, the intensity, stage of implementation, and justification for the selection. Information regarding why strategies were selected for specific settings would further the field and be of great use to practitioners [ 63 , 65 , 69 , 79 , 86 ].

Ensure that standards for measuring and reporting implementation outcomes are consistently applied and account for the complexity of implementation studies. Part of improving standardized reporting must include clearly defining outcomes and linking each outcome to particular implementation strategies. It was challenging in the present review to disentangle the impact of the intervention(s) (i.e., the EBP) versus the impact of the implementation strategy(ies) for each RE-AIM dimension. For example, often fidelity to the EBP was reported but not for the implementation strategies. Similarly, Reach and Adoption of the intervention would be reported for the Experimental Arm but not for the Control Arm, prohibiting statistical comparisons of strategies on the relative impact of the EBP between study arms. Moreover, there were many studies evaluating numerous outcomes, risking data dredging. Further, the significant heterogeneity in the ways in which implementation outcomes are operationalized and reported is a substantial barrier to conducting large-scale meta-analytic approaches to synthesizing evidence for implementation strategies [ 67 ]. The field could look to others in the social and health sciences for examples in how to test, validate, and promote a common set of outcome measures to aid in bringing consistency across studies and real-world practice (e.g., the NIH-funded Patient-Reported Outcomes Measurement Information System [PROMIS], https://www.healthmeasures.net/explore-measurement-systems/promis ).

Develop infrastructure to learn cross-study lessons in implementation science. Data repositories, like those developed by NCI for rare diseases, U.S. HIV Implementation Science Coordination Initiative [ 87 ], and the Behavior Change Technique Ontology [ 88 ], could allow implementation scientists to report their findings in a more standardized manner, which would promote ease of communication and contextualization of findings across studies. For example, the HIV Implementation Science Coordination Initiative requested all implementation projects use common frameworks, developed user friendly databases to enable practitioners to match strategies to determinants, and developed a dashboard of studies that assessed implementation determinants [ 89 , 90 , 91 , 92 , 93 , 94 ].

Develop and apply methods to rigorously study common strategies and bundles. These findings support prior recommendations for improved empirical rigor in implementation studies [ 46 , 95 ]. Many studies were excluded from our review based on not meeting methodological rigor standards. Understanding the effectiveness of discrete strategies deployed alone or in combination requires reliable and low burden tracking methods to collect information about strategy use and outcomes. For example, frameworks like the Implementation Replication Framework [ 96 ] could help interpret findings across studies using the same strategy bundle. Other tracking approaches may leverage technology (e.g., cell phones, tablets, EMR templates) [ 78 , 97 ] or find novel, pragmatic approaches to collect recommended strategy specifications over time (e.g., dose, deliverer, and mechanism) [ 1 , 9 , 27 , 98 , 99 ]. Rigorous reporting standards could inform more robust analyses and conclusions (e.g., moving toward the goal of understanding causality, microcosting efforts) [ 24 , 38 , 100 , 101 ]. Such detailed tracking is also required to understand how site-level factors moderate implementation strategy effects [ 102 ]. In some cases, adaptive trial designs like sequential multiple assignment randomized trials (SMARTs) and just-in-time adaptive interventions (JITAIs) can be helpful for planning strategy escalation.

Limitations

Despite the strengths of this review, there were certain notable limitations. For one, we only included experimental studies, omitting many informative observational investigations that cover the range of implementation strategies. Second, our study period was centered on the creation of the journal Implementation Science and not on the standardization and operationalization of implementation strategies in the publication of the ERIC taxonomy (which came later). This, in conjunction with latency in reporting study results and funding cycles, means that the employed taxonomy was not applied in earlier studies. To address this limitation, we retroactively mapped strategies to ERIC, but it is possible that some studies were missed. Additionally, indexing approaches used by academic databases may have missed relevant studies. We addressed this particular concern by reviewing other systematic reviews of implementation strategies and soliciting recommendations from global implementation science experts.

Another potential limitation comes from the ERIC taxonomy itself: strategy listings like ERIC are only useful when they are widely adopted and used in conjunction with guidelines for specifying and reporting strategies [ 1 ] in protocol and outcome papers. Although the ERIC paper has been widely cited (over three thousand times, accessed about 186 thousand times), it is still not universally applied, making tracking the impact of specific strategies more difficult. However, our experience with this review seemed to suggest that ERIC’s use was increasing over time. Also, some have commented that ERIC strategies can be unclear and are missing key domains. Thus, researchers are making definitions clearer for lay users [ 37 , 103 ], increasing the number of discrete strategies for specific domains like HIV treatment, acknowledging strategies for new functions (e.g., de-implementation [ 104 ], local capacity building), accounting for phases of implementation (dissemination, sustainment [ 13 ], scale-up), addressing settings [ 12 , 20 ] and actors’ roles in the process, and making mechanisms of change to select strategies more user-friendly through searchable databases [ 9 , 10 , 54 , 73 , 104 , 105 , 106 ]. In sum, we found the utility of the ERIC taxonomy to outweigh any of the taxonomy’s current limitations.

As with all reviews, the search terms influenced our findings. As such, the broad terms for implementation strategies (e.g., “evidence-based interventions”[ 7 ] or “behavior change techniques” [ 107 ]) may have led to inadvertent omissions of studies of specific strategies. For example, the search terms may not have captured tests of policies, financial strategies, community health promotion initiatives, or electronic medical record reminders, due to differences in terminology used in corresponding subfields of research (e.g., health economics, business, health information technology, and health policy). To manage this, we asked experts to inform us about any studies that they would include and cross-checked their lists with what was identified through our search terms, which yielded very few additional studies. We included standard coding using the ERIC taxonomy, which was a strength, but future work should consider including the additional strategies that have been recommended to augment ERIC, around sustainment [ 13 , 79 , 106 , 108 ], community and public health research [ 12 , 109 , 110 , 111 ], consumer or service user engagement [ 112 ], de-implementation [ 104 , 113 , 114 , 115 , 116 , 117 ] and related terms [ 118 ].

We were unable to assess the bias of studies due to non-standard reporting across the papers and the heterogeneity of study designs, measurement of implementation strategies and outcomes, and analytic approaches. This could have resulted in over- or underestimating the results of our synthesis. We addressed this limitation by being cautious in our reporting of findings, specifically in identifying “effective” implementation strategies. Further, we were not able to gather primary data to evaluate effect sizes across studies in order to systematically evaluate bias, which would be fruitful for future study.

Conclusions

This novel review of 129 studies summarized the body of evidence supporting the use of ERIC-defined implementation strategies to improve health or healthcare. We identified commonly occurring implementation strategies, frequently used bundles, and the strategies with the highest degree of supportive evidence, while simultaneously identifying gaps in the literature. Additionally, we identified several key areas for future growth and operationalization across the field of implementation science with the goal of improved reporting and assessment of implementation strategies and related outcomes.

Availability of data and materials

All data for this study are included in this published article and its supplementary information files.

We modestly revised the following research questions from our PROSPERO registration after reading the articles and better understanding the nature of the literature: 1) What is the available evidence regarding the effectiveness of implementation strategies in supporting the uptake and sustainment of evidence intended to improve health and healthcare outcomes? 2) What are the current gaps in the literature (i.e., implementation strategies that do not have sufficient evidence of effectiveness) that require further exploration?

Tested strategies are those that exist in the Experimental Arm but not in the Control Arm. Comparative effectiveness or time-staggered trials may not have any strategies unique to the Experimental Arm and therefore would have no tested strategies in our analysis.

Abbreviations

CDC: Centers for Disease Control

CINAHL: Cumulated Index to Nursing and Allied Health Literature

D&I: Dissemination and Implementation

EBP: Evidence-based practices or programs

ERIC: Expert Recommendations for Implementing Change

MOST: Multiphase Optimization Strategy

NCI: National Cancer Institute

NIH: National Institutes of Health

Pitt DISC: The Pittsburgh Dissemination and Implementation Science Collaborative

SMART: Sequential Multiple Assignment Randomized Trial

US: United States

VA: Department of Veterans Affairs

References

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21.

Waltz TJ, Powell BJ, Chinman MJ, Smith JL, Matthieu MM, Proctor EK, et al. Expert recommendations for implementing change (ERIC): protocol for a mixed methods study. Implement Sci. 2014;9:39.

Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, et al. A Compilation of Strategies for Implementing Clinical Innovations in Health and Mental Health. Med Care Res Rev. 2012;69:123–57.

Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, Smith JL, et al. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci. 2015;10:109.

Perry CK, Damschroder LJ, Hemler JR, Woodson TT, Ono SS, Cohen DJ. Specifying and comparing implementation strategies across seven large implementation interventions: a practical application of theory. Implement Sci. 2019;14(1):32.

Community Preventive Services Task Force. Community Preventive Services Task Force: All Active Findings June 2023 [Internet]. 2023 [cited 2023 Aug 7]. Available from: https://www.thecommunityguide.org/media/pdf/CPSTF-All-Findings-508.pdf

Solberg LI, Kuzel A, Parchman ML, Shelley DR, Dickinson WP, Walunas TL, et al. A Taxonomy for External Support for Practice Transformation. J Am Board Fam Med. 2021;34:32–9.

Leeman J, Birken SA, Powell BJ, Rohweder C, Shea CM. Beyond “implementation strategies”: classifying the full range of strategies used in implementation science and practice. Implement Sci. 2017;12:1–9.

Leeman J, Calancie L, Hartman MA, Escoffery CT, Herrmann AK, Tague LE, et al. What strategies are used to build practitioners’ capacity to implement community-based interventions and are they effective?: a systematic review. Implement Sci. 2015;10:1–15.

Nathan N, Shelton RC, Laur CV, Hailemariam M, Hall A. Editorial: Sustaining the implementation of evidence-based interventions in clinical and community settings. Front Health Serv. 2023;3:1176023.

Balis LE, Houghtaling B, Harden SM. Using implementation strategies in community settings: an introduction to the Expert Recommendations for Implementing Change (ERIC) compilation and future directions. Transl Behav Med. 2022;12:965–78.

Nathan N, Powell BJ, Shelton RC, Laur CV, Wolfenden L, Hailemariam M, et al. Do the Expert Recommendations for Implementing Change (ERIC) strategies adequately address sustainment? Front Health Serv. 2022;2:905909.

Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, et al. Audit and feedback effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;6:CD000259.

Moore L, Guertin JR, Tardif P-A, Ivers NM, Hoch J, Conombo B, et al. Economic evaluations of audit and feedback interventions: a systematic review. BMJ Qual Saf. 2022;31:754–67.

Sykes MJ, McAnuff J, Kolehmainen N. When is audit and feedback effective in dementia care? A systematic review. Int J Nurs Stud. 2018;79:27–35.

Barnes C, McCrabb S, Stacey F, Nathan N, Yoong SL, Grady A, et al. Improving implementation of school-based healthy eating and physical activity policies, practices, and programs: a systematic review. Transl Behav Med. 2021;11:1365–410.

Tomasone JR, Kauffeldt KD, Chaudhary R, Brouwers MC. Effectiveness of guideline dissemination and implementation strategies on health care professionals’ behaviour and patient outcomes in the cancer care context: a systematic review. Implement Sci. 2020;15:1–18.

Seda V, Moles RJ, Carter SR, Schneider CR. Assessing the comparative effectiveness of implementation strategies for professional services to community pharmacy: A systematic review. Res Soc Adm Pharm. 2022;18:3469–83.

Lovero KL, Kemp CG, Wagenaar BH, Giusto A, Greene MC, Powell BJ, et al. Application of the Expert Recommendations for Implementing Change (ERIC) compilation of strategies to health intervention implementation in low- and middle-income countries: a systematic review. Implement Sci. 2023;18:56.

Chapman A, Rankin NM, Jongebloed H, Yoong SL, White V, Livingston PM, et al. Overcoming challenges in conducting systematic reviews in implementation science: a methods commentary. Syst Rev. 2023;12:1–6.

Proctor EK, Bunger AC, Lengnick-Hall R, Gerke DR, Martin JK, Phillips RJ, et al. Ten years of implementation outcomes research: a scoping review. Implement Sci. 2023;18:1–19.

Michaud TL, Pereira E, Porter G, Golden C, Hill J, Kim J, et al. Scoping review of costs of implementation strategies in community, public health and healthcare settings. BMJ Open. 2022;12:e060785.

Sohn H, Tucker A, Ferguson O, Gomes I, Dowdy D. Costing the implementation of public health interventions in resource-limited settings: a conceptual framework. Implement Sci. 2020;15:1–8.

Peek C, Glasgow RE, Stange KC, Klesges LM, Purcell EP, Kessler RS. The 5 R’s: an emerging bold standard for conducting relevant research in a changing world. Ann Fam Med. 2014;12:447–55.

Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89:1322–7.

Shelton RC, Chambers DA, Glasgow RE. An Extension of RE-AIM to Enhance Sustainability: Addressing Dynamic Context and Promoting Health Equity Over Time. Front Public Health. 2020;8:134.

Holtrop JS, Estabrooks PA, Gaglio B, Harden SM, Kessler RS, King DK, et al. Understanding and applying the RE-AIM framework: Clarifications and resources. J Clin Transl Sci. 2021;5:e126.

Moher D, Shamseer L, Clarke M, Ghersi D, Liberati A, Petticrew M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev. 2015;4:1.

Shamseer L, Moher D, Clarke M, Ghersi D, Liberati A, Petticrew M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: elaboration and explanation. BMJ. 2015;349:g7647.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ [Internet]. 2021;372. Available from: https://www.bmj.com/content/372/bmj.n71

Rabin BA, Brownson RC, Haire-Joshu D, Kreuter MW, Weaver NL. A Glossary for Dissemination and Implementation Research in Health. J Public Health Manag Pract. 2008;14:117–23.

Eccles MP, Mittman BS. Welcome to Implementation Science. Implement Sci. 2006;1:1.

Miller WR, Wilbourne PL. Mesa Grande: a methodological analysis of clinical trials of treatments for alcohol use disorders. Addiction. 2002;97:265–77.

Miller WR, Brown JM, Simpson TL, Handmaker NS, Bien TH, Luckie LF, et al. What works? A methodological analysis of the alcohol treatment outcome literature. Handb Alcohol Treat Approaches Eff Altern 2nd Ed. Needham Heights, MA, US: Allyn & Bacon; 1995:12–44.

Wells S, Tamir O, Gray J, Naidoo D, Bekhit M, Goldmann D. Are quality improvement collaboratives effective? A systematic review. BMJ Qual Saf. 2018;27:226–40.

Yakovchenko V, Chinman MJ, Lamorte C, Powell BJ, Waltz TJ, Merante M, et al. Refining Expert Recommendations for Implementing Change (ERIC) strategy surveys using cognitive interviews with frontline providers. Implement Sci Commun. 2023;4:1–14.

Wagner TH, Yoon J, Jacobs JC, So A, Kilbourne AM, Yu W, et al. Estimating costs of an implementation intervention. Med Decis Making. 2020;40:959–67.

Gold HT, McDermott C, Hoomans T, Wagner TH. Cost data in implementation science: categories and approaches to costing. Implement Sci. 2022;17:11.

Boutron I, Page MJ, Higgins JP, Altman DG, Lundh A, Hróbjartsson A. Considering bias and conflicts of interest among the included studies. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane Handbook for Systematic Reviews of Interventions. 2019. https://doi.org/10.1002/9781119536604.ch7.

Higgins JP, Savović J, Page MJ, Elbers RG, Sterne J. Assessing risk of bias in a randomized trial. Cochrane Handb Syst Rev Interv. 2019;6:205–28.

Reilly KL, Kennedy S, Porter G, Estabrooks P. Comparing, Contrasting, and Integrating Dissemination and Implementation Outcomes Included in the RE-AIM and Implementation Outcomes Frameworks. Front Public Health [Internet]. 2020 [cited 2024 Apr 24];8. Available from: https://www.frontiersin.org/journals/public-health/articles/10.3389/fpubh.2020.00430/full

Grimshaw JM, Thomas RE, MacLennan G, Fraser C, Ramsay CR, Vale L, et al. Effectiveness and efficiency of guideline dissemination and implementation strategies. Health Technol Assess. 2004;8:iii–iv, 1–72.

Beidas RS, Kendall PC. Training Therapists in Evidence-Based Practice: A Critical Review of Studies From a Systems-Contextual Perspective. Clin Psychol Sci Pract. 2010;17:1–30.

Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, et al. Methods to Improve the Selection and Tailoring of Implementation Strategies. J Behav Health Serv Res. 2017;44:177–94.

Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, et al. Enhancing the Impact of Implementation Strategies in Healthcare: A Research Agenda. Front Public Health [Internet]. 2019 [cited 2021 Mar 31];7. Available from: https://www.frontiersin.org/articles/10.3389/fpubh.2019.00003/full

Frakt AB, Prentice JC, Pizer SD, Elwy AR, Garrido MM, Kilbourne AM, et al. Overcoming Challenges to Evidence-Based Policy Development in a Large, Integrated Delivery System. Health Serv Res. 2018;53:4789–807.

Crable EL, Lengnick-Hall R, Stadnick NA, Moullin JC, Aarons GA. Where is “policy” in dissemination and implementation science? Recommendations to advance theories, models, and frameworks: EPIS as a case example. Implement Sci. 2022;17:80.

Crable EL, Grogan CM, Purtle J, Roesch SC, Aarons GA. Tailoring dissemination strategies to increase evidence-informed policymaking for opioid use disorder treatment: study protocol. Implement Sci Commun. 2023;4:16.

Bond GR. Evidence-based policy strategies: A typology. Clin Psychol Sci Pract. 2018;25:e12267.

Loo TS, Davis RB, Lipsitz LA, Irish J, Bates CK, Agarwal K, et al. Electronic Medical Record Reminders and Panel Management to Improve Primary Care of Elderly Patients. Arch Intern Med. 2011;171:1552–8.

Shojania KG, Jennings A, Mayhew A, Ramsay C, Eccles M, Grimshaw J. Effect of point-of-care computer reminders on physician behaviour: a systematic review. CMAJ. 2010;182:E216–25.

Sequist TD, Gandhi TK, Karson AS, Fiskio JM, Bugbee D, Sperling M, et al. A Randomized Trial of Electronic Clinical Reminders to Improve Quality of Care for Diabetes and Coronary Artery Disease. J Am Med Inform Assoc. 2005;12:431–7.

Dopp AR, Kerns SEU, Panattoni L, Ringel JS, Eisenberg D, Powell BJ, et al. Translating economic evaluations into financing strategies for implementing evidence-based practices. Implement Sci. 2021;16:1–12.

Dopp AR, Hunter SB, Godley MD, Pham C, Han B, Smart R, et al. Comparing two federal financing strategies on penetration and sustainment of the adolescent community reinforcement approach for substance use disorders: protocol for a mixed-method study. Implement Sci Commun. 2022;3:51.

Proctor EK, Toker E, Tabak R, McKay VR, Hooley C, Evanoff B. Market viability: a neglected concept in implementation science. Implement Sci. 2021;16:98.

Dopp AR, Narcisse M-R, Mundey P, Silovsky JF, Smith AB, Mandell D, et al. A scoping review of strategies for financing the implementation of evidence-based practices in behavioral health systems: State of the literature and future directions. Implement Res Pract. 2020;1:2633489520939980.

Dopp AR, Kerns SEU, Panattoni L, Ringel JS, Eisenberg D, Powell BJ, et al. Translating economic evaluations into financing strategies for implementing evidence-based practices. Implement Sci. 2021;16:66.

Kilbourne AM, Neumann MS, Pincus HA, Bauer MS, Stall R. Implementing evidence-based interventions in health care: application of the replicating effective programs framework. Implement Sci. 2007;2:42–51.

Kegeles SM, Rebchook GM, Hays RB, Terry MA, O’Donnell L, Leonard NR, et al. From science to application: the development of an intervention package. AIDS Educ Prev. 2000;12:62–74.

Wandersman A, Imm P, Chinman M, Kaftarian S. Getting to outcomes: a results-based approach to accountability. Eval Program Plann. 2000;23:389–95.

Wandersman A, Chien VH, Katz J. Toward an evidence-based system for innovation support for implementing innovations with quality: Tools, training, technical assistance, and quality assurance/quality improvement. Am J Community Psychol. 2012;50:445–59.