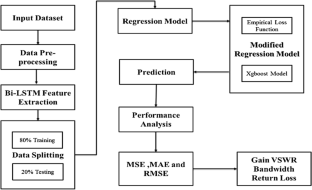

Regression Analysis

Regression analysis is a quantitative research method used when a study involves modelling and analysing several variables, where the relationship includes a dependent variable and one or more independent variables. In simple terms, it tests the nature of the relationship between a dependent variable and one or more independent variables.

The basic form of regression models includes unknown parameters (β), independent variables (X), and the dependent variable (Y).

A regression model specifies the relation of the dependent variable (Y) to a function of the independent variables (X) and the unknown parameters (β):

Y ≈ f (X, β)

The regression equation can be used to predict the value of ‘y’ when the value of ‘x’ is given, where ‘y’ and ‘x’ are two sets of measures from a sample of size ‘n’. The formulae for the regression equation are:

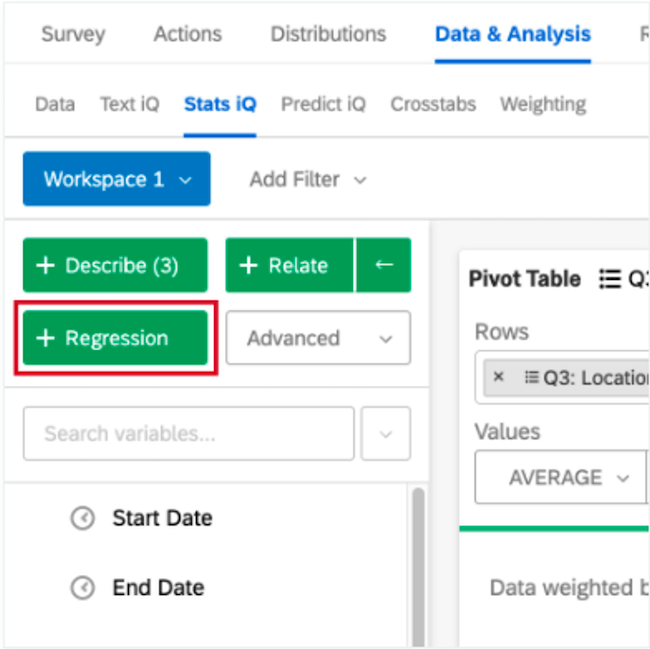

Do not be intimidated by the visual complexity of the correlation and regression formulae above. You do not have to apply the formulae manually: correlation and regression analyses can be run with popular analytical software such as Microsoft Excel, Microsoft Access, SPSS and others.
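To make concrete what such software computes, here is a minimal sketch of the least-squares slope and intercept for a single predictor. The sample values and the function name `fit_simple_ols` are hypothetical, chosen only for illustration, and the formulas used are the standard least-squares ones, which may be laid out differently from those in the image above.

```python
from statistics import mean


def fit_simple_ols(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    x_bar, y_bar = mean(x), mean(y)
    # Sum of cross-products and sum of squares around the means.
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical sample of n = 5 paired measurements.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

intercept, slope = fit_simple_ols(x, y)
predicted = intercept + slope * 6  # predicted y for a new x = 6
print(f"y = {intercept:.2f} + {slope:.2f} * x; prediction at x=6: {predicted:.2f}")
```

For this sample the fitted line is y = 2.2 + 0.6x, so the prediction at x = 6 is 5.8.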

Linear regression analysis is based on the following set of assumptions:

1. Assumption of linearity. There is a linear relationship between the dependent and independent variables.

2. Assumption of homoscedasticity. The variance of the errors (residuals) is constant across all values of the independent variables.

3. Assumption of absence of collinearity or multicollinearity. The independent variables are not highly correlated with one another.

4. Assumption of normal distribution. The errors (residuals) are normally distributed.
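The collinearity assumption can be checked numerically. The sketch below computes the Pearson correlation between two predictors and the corresponding variance inflation factor (VIF), which for exactly two predictors reduces to 1 / (1 − r²). The data are hypothetical, and the threshold of 10 mentioned in the comment is only a common rule of thumb, not a fixed standard.

```python
from math import sqrt


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    a_bar, b_bar = sum(a) / n, sum(b) / n
    sab = sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b))
    saa = sum((ai - a_bar) ** 2 for ai in a)
    sbb = sum((bi - b_bar) ** 2 for bi in b)
    return sab / sqrt(saa * sbb)


# Hypothetical predictors: x2 is almost proportional to x1.
x1 = [1, 2, 3, 4, 5]
x2 = [2, 4, 6, 8, 11]

r = pearson_r(x1, x2)
vif = 1 / (1 - r ** 2)  # with two predictors, R^2 of x1 on x2 equals r^2

# A VIF above roughly 10 is often read as a sign of problematic collinearity.
print(f"r = {r:.4f}, VIF = {vif:.1f}")
```

Here the two predictors are nearly proportional, so the VIF comes out at about 122, far above the usual rule-of-thumb threshold.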

John Dudovskiy


Regression Analysis – Methods, Types and Examples


Regression Analysis

Regression analysis is a set of statistical processes for estimating the relationships among variables. It includes many techniques for modeling and analyzing several variables when the focus is on the relationship between a dependent variable and one or more independent variables (or ‘predictors’).

Regression Analysis Methodology

Here is a general methodology for performing regression analysis:

- Define the research question: Clearly state the research question or hypothesis you want to investigate. Identify the dependent variable (also called the response variable or outcome variable) and the independent variables (also called predictor variables or explanatory variables) that you believe are related to the dependent variable.

- Collect data: Gather the data for the dependent variable and independent variables. Ensure that the data is relevant, accurate, and representative of the population or phenomenon you are studying.

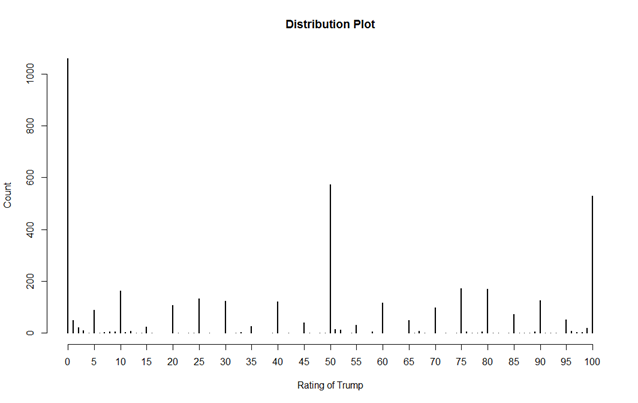

- Explore the data: Perform exploratory data analysis to understand the characteristics of the data, identify any missing values or outliers, and assess the relationships between variables through scatter plots, histograms, or summary statistics.

- Choose the regression model: Select an appropriate regression model based on the nature of the variables and the research question. Common regression models include linear regression, multiple regression, logistic regression, polynomial regression, and time series regression, among others.

- Assess assumptions: Check the assumptions of the regression model. Some common assumptions include linearity (the relationship between variables is linear), independence of errors, homoscedasticity (constant variance of errors), and normality of errors. Violation of these assumptions may require additional steps or alternative models.

- Estimate the model: Use a suitable method to estimate the parameters of the regression model. The most common method is ordinary least squares (OLS), which minimizes the sum of squared differences between the observed and predicted values of the dependent variable.

- Interpret the results: Analyze the estimated coefficients, p-values, confidence intervals, and goodness-of-fit measures (e.g., R-squared) to interpret the results. Determine the significance and direction of the relationships between the independent variables and the dependent variable.

- Evaluate model performance: Assess the overall performance of the regression model using appropriate measures, such as R-squared, adjusted R-squared, and root mean squared error (RMSE). These measures indicate how well the model fits the data and how much of the variation in the dependent variable is explained by the independent variables.

- Test assumptions and diagnose problems: Check the residuals (the differences between observed and predicted values) for any patterns or deviations from assumptions. Conduct diagnostic tests, such as examining residual plots, testing for multicollinearity among independent variables, and assessing heteroscedasticity or autocorrelation, if applicable.

- Make predictions and draw conclusions: Once you have a satisfactory model, use it to make predictions on new or unseen data. Draw conclusions based on the results of the analysis, considering the limitations and potential implications of the findings.
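The estimation and evaluation steps in the methodology above can be sketched for a single predictor as follows. The data are hypothetical, and the example covers only OLS fitting, residuals, R-squared, and RMSE; p-values, confidence intervals, and formal diagnostic tests would normally come from a statistics package.

```python
from math import sqrt
from statistics import mean

# Hypothetical observations of one predictor and one outcome.
x = [0, 1, 2, 3]
y = [1, 3, 4, 8]

# Estimate the model by ordinary least squares.
x_bar, y_bar = mean(x), mean(y)
slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sum(
    (xi - x_bar) ** 2 for xi in x
)
intercept = y_bar - slope * x_bar

# Residuals: observed minus predicted values.
fitted = [intercept + slope * xi for xi in x]
residuals = [yi - fi for yi, fi in zip(y, fitted)]

# Goodness-of-fit measures.
ss_res = sum(r ** 2 for r in residuals)        # unexplained variation
ss_tot = sum((yi - y_bar) ** 2 for yi in y)    # total variation
r_squared = 1 - ss_res / ss_tot
rmse = sqrt(ss_res / len(y))

print(f"slope={slope:.2f}, intercept={intercept:.2f}")
print(f"R-squared={r_squared:.3f}, RMSE={rmse:.3f}")
```

With an intercept in the model, OLS residuals sum to zero, which is a quick sanity check before looking at residual plots for patterns.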

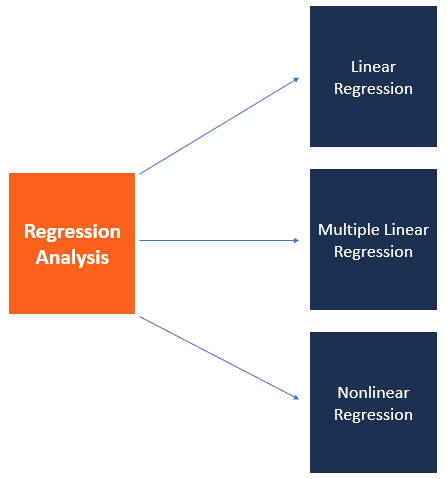

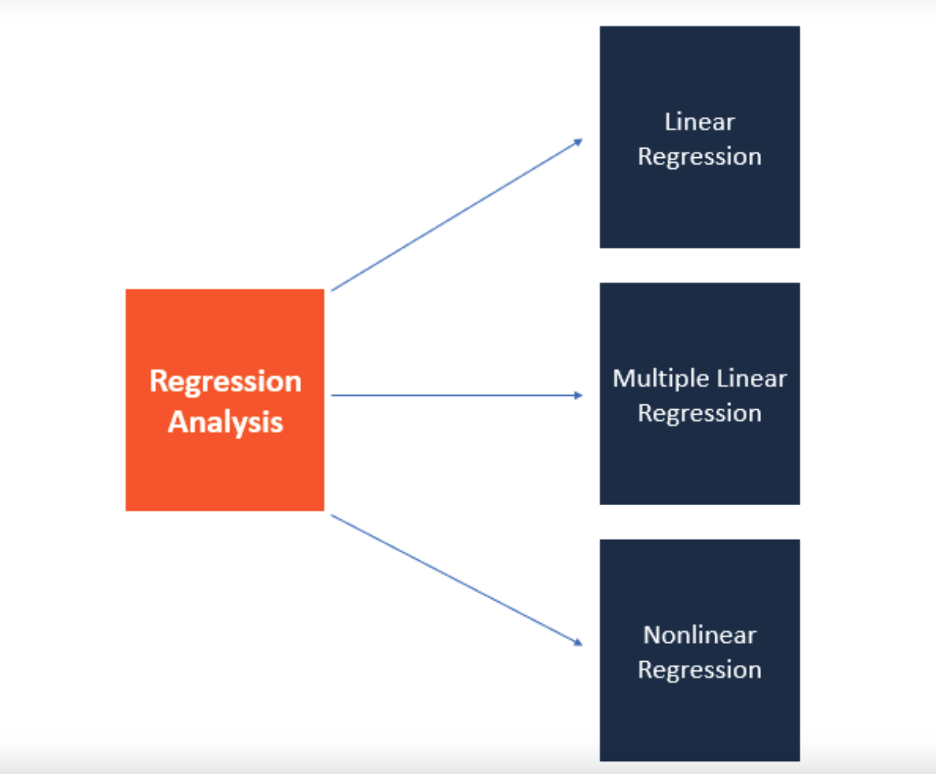

Types of Regression Analysis

Types of Regression Analysis are as follows:

Linear Regression

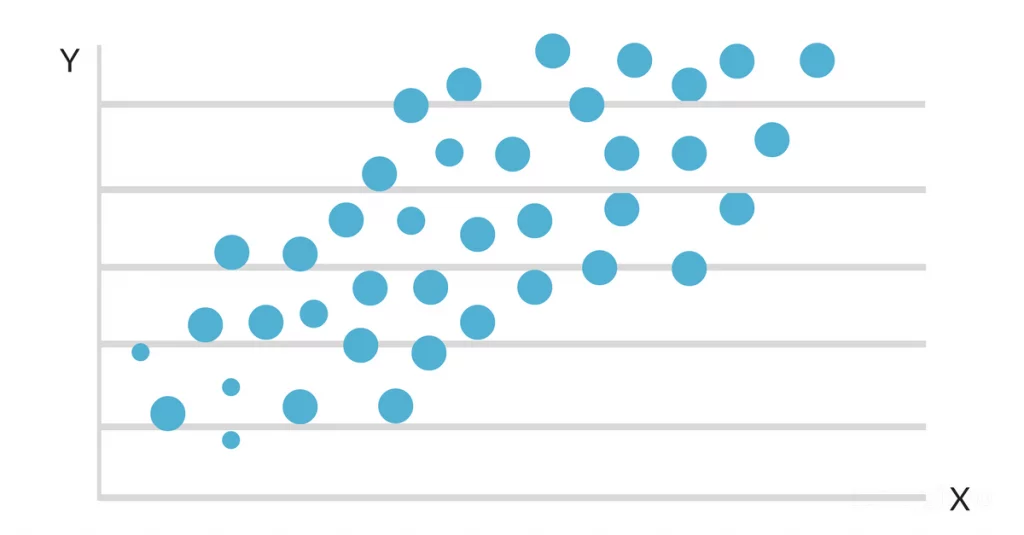

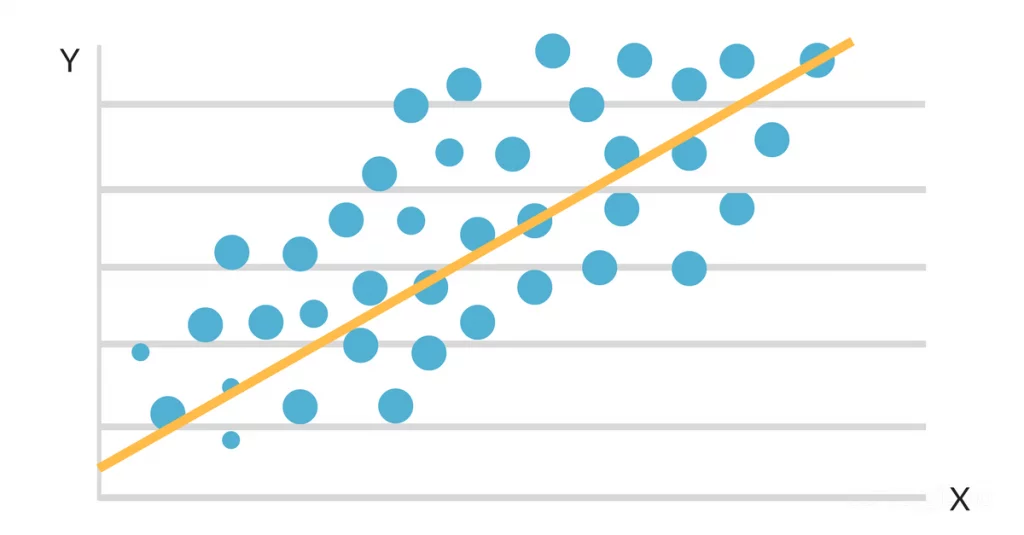

Linear regression is the most basic and widely used form of regression analysis. It models the linear relationship between a dependent variable and one or more independent variables. The goal is to find the best-fitting line that minimizes the sum of squared differences between observed and predicted values.

Multiple Regression

Multiple regression extends linear regression by incorporating two or more independent variables to predict the dependent variable. It allows for examining the simultaneous effects of multiple predictors on the outcome variable.

Polynomial Regression

Polynomial regression models non-linear relationships between variables by adding polynomial terms (e.g., squared or cubic terms) to the regression equation. It can capture curved or nonlinear patterns in the data.
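As an illustration, polynomial regression is still linear in its coefficients, so it can be fitted with the same least-squares machinery once the powers of x are added as extra columns. The sketch below solves the normal equations with a small Gaussian-elimination helper; the helper names and the noise-free data are assumptions made for the example.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_polynomial(x, y, degree):
    """Least-squares coefficients [b0, b1, ..., b_degree] via normal equations."""
    rows = [[xi ** p for p in range(degree + 1)] for xi in x]  # 1, x, x^2, ...
    k = degree + 1
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical data generated from y = 1 + 2x + 3x^2 without noise.
x = [-2, -1, 0, 1, 2]
y = [1 + 2 * xi + 3 * xi ** 2 for xi in x]

coeffs = fit_polynomial(x, y, degree=2)
print([round(c, 6) for c in coeffs])
```

Because the data here lie exactly on a quadratic, the fit recovers coefficients close to 1, 2, and 3. Normal equations become ill-conditioned at high degrees, so real analyses usually rely on a library routine instead.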

Logistic Regression

Logistic regression is used when the dependent variable is binary or categorical. It models the probability of the occurrence of a certain event or outcome based on the independent variables. The logistic function transforms the linear combination of predictors into a probability, and the coefficients are typically estimated by maximum likelihood.

Ridge Regression and Lasso Regression

Ridge regression and Lasso regression are techniques used for addressing multicollinearity (high correlation between independent variables) and variable selection. Both methods introduce a penalty term to the regression equation to shrink or eliminate less important variables. Ridge regression uses L2 regularization, while Lasso regression uses L1 regularization.
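The difference between shrinking and eliminating a coefficient is easiest to see with a single centered predictor and no intercept, where both penalized estimates have closed forms. The sketch below assumes the objectives stated in the comments (other texts scale the penalty differently), and the data and penalty values are hypothetical.

```python
from math import copysign

# Hypothetical centered data (both x and y have mean zero).
x = [-2, -1, 0, 1, 2]
y = [-1.0, -0.4, 0.1, 0.5, 0.8]

sxy = sum(xi * yi for xi, yi in zip(x, y))
sxx = sum(xi ** 2 for xi in x)


def ols_coefficient():
    # Minimizes 0.5 * sum((y - b*x)^2).
    return sxy / sxx


def ridge_coefficient(lam):
    # Minimizes 0.5 * sum((y - b*x)^2) + 0.5 * lam * b^2  (L2 penalty).
    return sxy / (sxx + lam)


def lasso_coefficient(lam):
    # Minimizes 0.5 * sum((y - b*x)^2) + lam * |b|  (L1 penalty).
    # The solution is a soft-thresholded version of the OLS numerator.
    shrunk = max(abs(sxy) - lam, 0.0)
    return copysign(shrunk, sxy) / sxx


for lam in (0.0, 2.0, 5.0):
    print(lam, round(ridge_coefficient(lam), 4), round(lasso_coefficient(lam), 4))
```

As the penalty grows, the ridge estimate keeps shrinking but stays nonzero, while the lasso estimate reaches exactly zero once the penalty exceeds |Sxy|. This is the mechanism behind lasso's variable selection.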

Time Series Regression

Time series regression analyzes the relationship between a dependent variable and independent variables when the data is collected over time. It accounts for autocorrelation and trends in the data and is used in forecasting and studying temporal relationships.

Nonlinear Regression

Nonlinear regression models are used when the relationship between the dependent variable and independent variables is not linear. These models can take various functional forms and require estimation techniques different from those used in linear regression.

Poisson Regression

Poisson regression is employed when the dependent variable represents count data. It models the relationship between the independent variables and the expected count, assuming a Poisson distribution for the dependent variable.

Generalized Linear Models (GLM)

GLMs are a flexible class of regression models that extend the linear regression framework to handle different types of dependent variables, including binary, count, and continuous variables. GLMs incorporate various probability distributions and link functions.

Regression Analysis Formulas

Regression analysis involves estimating the parameters of a regression model to describe the relationship between the dependent variable (Y) and one or more independent variables (X). Here are the basic formulas for linear regression, multiple regression, and logistic regression:

Linear Regression:

Simple Linear Regression Model: Y = β0 + β1X + ε

Multiple Linear Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

In both formulas:

- Y represents the dependent variable (response variable).

- X represents the independent variable(s) (predictor variable(s)).

- β0, β1, β2, …, βn are the regression coefficients or parameters that need to be estimated.

- ε represents the error term or residual (the difference between the observed and predicted values).

Multiple Regression:

Multiple regression extends the concept of simple linear regression by including multiple independent variables.

Multiple Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

The formulas are similar to those in linear regression, with the addition of more independent variables.
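For the special case of two independent variables, the least-squares coefficients of the multiple regression model can be written out directly from sums of squares and cross-products. The sketch below is a minimal illustration under that assumption; the function name and the noise-free data are hypothetical.

```python
from statistics import mean


def fit_two_predictors(x1, x2, y):
    """Least-squares (b0, b1, b2) for y = b0 + b1*x1 + b2*x2."""
    m1, m2, my = mean(x1), mean(x2), mean(y)
    d1 = [v - m1 for v in x1]
    d2 = [v - m2 for v in x2]
    dy = [v - my for v in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    # The determinant is zero when x1 and x2 are perfectly collinear.
    det = s11 * s22 - s12 ** 2
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    b0 = my - b1 * m1 - b2 * m2
    return b0, b1, b2


# Hypothetical data generated from Y = 1 + 2*X1 + 3*X2 with no error term.
x1 = [1, 2, 3, 4, 5]
x2 = [2, 1, 4, 3, 6]
y = [1 + 2 * a + 3 * b for a, b in zip(x1, x2)]

b0, b1, b2 = fit_two_predictors(x1, x2, y)
print(round(b0, 6), round(b1, 6), round(b2, 6))
```

The determinant in the denominator shows why multicollinearity matters: as the two predictors become more correlated it approaches zero and the estimates become unstable.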

Logistic Regression:

Logistic regression is used when the dependent variable is binary or categorical. The logistic regression model applies a logistic or sigmoid function to the linear combination of the independent variables.

Logistic Regression Model: p = 1 / (1 + e^-(β0 + β1X1 + β2X2 + … + βnXn))

In the formula:

- p represents the probability of the event occurring (e.g., the probability of success or belonging to a certain category).

- X1, X2, …, Xn represent the independent variables.

- e is the base of the natural logarithm.

The logistic function ensures that the predicted probabilities lie between 0 and 1, allowing for binary classification.
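The logistic formula above can be evaluated directly once coefficients are available. The sketch below uses hypothetical, hand-picked coefficients rather than fitted ones, and a 0.5 cutoff for classification, which is a common default but still an assumption.

```python
from math import exp


def predict_probability(coefficients, features):
    """p = 1 / (1 + e^-(b0 + b1*x1 + ... + bn*xn))."""
    b0, *slopes = coefficients
    z = b0 + sum(b * x for b, x in zip(slopes, features))
    return 1 / (1 + exp(-z))


# Hypothetical coefficients b0, b1, b2 (not estimated from real data).
coefficients = [-3.0, 0.5, 1.0]

p_low = predict_probability(coefficients, [1.0, 0.5])   # linear part z = -2
p_mid = predict_probability(coefficients, [4.0, 1.0])   # linear part z = 0
p_high = predict_probability(coefficients, [6.0, 2.0])  # linear part z = 2

# Convert probabilities to class labels with a 0.5 threshold.
labels = [int(p >= 0.5) for p in (p_low, p_mid, p_high)]
print(round(p_low, 4), round(p_mid, 4), round(p_high, 4), labels)
```

Whatever the value of the linear combination, the output stays strictly between 0 and 1, and a linear part of exactly zero maps to a probability of 0.5.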

Regression Analysis Examples

Regression Analysis Examples are as follows:

- Stock Market Prediction: Regression analysis can be used to predict stock prices based on various factors such as historical prices, trading volume, news sentiment, and economic indicators. Traders and investors can use this analysis to make informed decisions about buying or selling stocks.

- Demand Forecasting: In retail and e-commerce, regression analysis can help forecast demand for products. By analyzing historical sales data along with real-time data such as website traffic, promotional activities, and market trends, businesses can adjust their inventory levels and production schedules to meet customer demand more effectively.

- Energy Load Forecasting: Utility companies often use real-time regression analysis to forecast electricity demand. By analyzing historical energy consumption data, weather conditions, and other relevant factors, they can predict future energy loads. This information helps them optimize power generation and distribution, ensuring a stable and efficient energy supply.

- Online Advertising Performance: Regression analysis can be used to assess the performance of online advertising campaigns. By analyzing real-time data on ad impressions, click-through rates, conversion rates, and other metrics, advertisers can adjust their targeting, messaging, and ad placement strategies to maximize their return on investment.

- Predictive Maintenance: Regression analysis can be applied to predict equipment failures or maintenance needs. By continuously monitoring sensor data from machines or vehicles, regression models can identify patterns or anomalies that indicate potential failures. This enables proactive maintenance, reducing downtime and optimizing maintenance schedules.

- Financial Risk Assessment: Real-time regression analysis can help financial institutions assess the risk associated with lending or investment decisions. By analyzing real-time data on factors such as borrower financials, market conditions, and macroeconomic indicators, regression models can estimate the likelihood of default or assess the risk-return tradeoff for investment portfolios.

Importance of Regression Analysis

Importance of Regression Analysis is as follows:

- Relationship Identification: Regression analysis helps in identifying and quantifying the relationship between a dependent variable and one or more independent variables. It allows us to determine how changes in independent variables impact the dependent variable. This information is crucial for decision-making, planning, and forecasting.

- Prediction and Forecasting: Regression analysis enables us to make predictions and forecasts based on the relationships identified. By estimating the values of the dependent variable using known values of independent variables, regression models can provide valuable insights into future outcomes. This is particularly useful in business, economics, finance, and other fields where forecasting is vital for planning and strategy development.

- Causality Assessment: While correlation does not imply causation, regression analysis provides a framework for assessing causality by considering the direction and strength of the relationship between variables. It allows researchers to control for other factors and assess the impact of a specific independent variable on the dependent variable. This helps in determining the causal effect and identifying significant factors that influence outcomes.

- Model Building and Variable Selection: Regression analysis aids in model building by determining the most appropriate functional form of the relationship between variables. It helps researchers select relevant independent variables and eliminate irrelevant ones, reducing complexity and improving model accuracy. This process is crucial for creating robust and interpretable models.

- Hypothesis Testing: Regression analysis provides a statistical framework for hypothesis testing. Researchers can test the significance of individual coefficients, assess the overall model fit, and determine if the relationship between variables is statistically significant. This allows for rigorous analysis and validation of research hypotheses.

- Policy Evaluation and Decision-Making: Regression analysis plays a vital role in policy evaluation and decision-making processes. By analyzing historical data, researchers can evaluate the effectiveness of policy interventions and identify the key factors contributing to certain outcomes. This information helps policymakers make informed decisions, allocate resources effectively, and optimize policy implementation.

- Risk Assessment and Control: Regression analysis can be used for risk assessment and control purposes. By analyzing historical data, organizations can identify risk factors and develop models that predict the likelihood of certain outcomes, such as defaults, accidents, or failures. This enables proactive risk management, allowing organizations to take preventive measures and mitigate potential risks.

When to Use Regression Analysis

- Prediction: Regression analysis is often employed to predict the value of the dependent variable based on the values of independent variables. For example, you might use regression to predict sales based on advertising expenditure, or to predict a student’s academic performance based on variables like study time, attendance, and previous grades.

- Relationship analysis: Regression can help determine the strength and direction of the relationship between variables. It can be used to examine whether there is a linear association between variables, identify which independent variables have a significant impact on the dependent variable, and quantify the magnitude of those effects.

- Causal inference: Regression analysis can be used to explore cause-and-effect relationships by controlling for other variables. For example, in a medical study, you might use regression to determine the impact of a specific treatment while accounting for other factors like age, gender, and lifestyle.

- Forecasting: Regression models can be utilized to forecast future trends or outcomes. By fitting a regression model to historical data, you can make predictions about future values of the dependent variable based on changes in the independent variables.

- Model evaluation: Regression analysis can be used to evaluate the performance of a model or test the significance of variables. You can assess how well the model fits the data, determine if additional variables improve the model’s predictive power, or test the statistical significance of coefficients.

- Data exploration: Regression analysis can help uncover patterns and insights in the data. By examining the relationships between variables, you can gain a deeper understanding of the data set and identify potential patterns, outliers, or influential observations.

Applications of Regression Analysis

Here are some common applications of regression analysis:

- Economic Forecasting: Regression analysis is frequently employed in economics to forecast variables such as GDP growth, inflation rates, or stock market performance. By analyzing historical data and identifying the underlying relationships, economists can make predictions about future economic conditions.

- Financial Analysis: Regression analysis plays a crucial role in financial analysis, such as predicting stock prices or evaluating the impact of financial factors on company performance. It helps analysts understand how variables like interest rates, company earnings, or market indices influence financial outcomes.

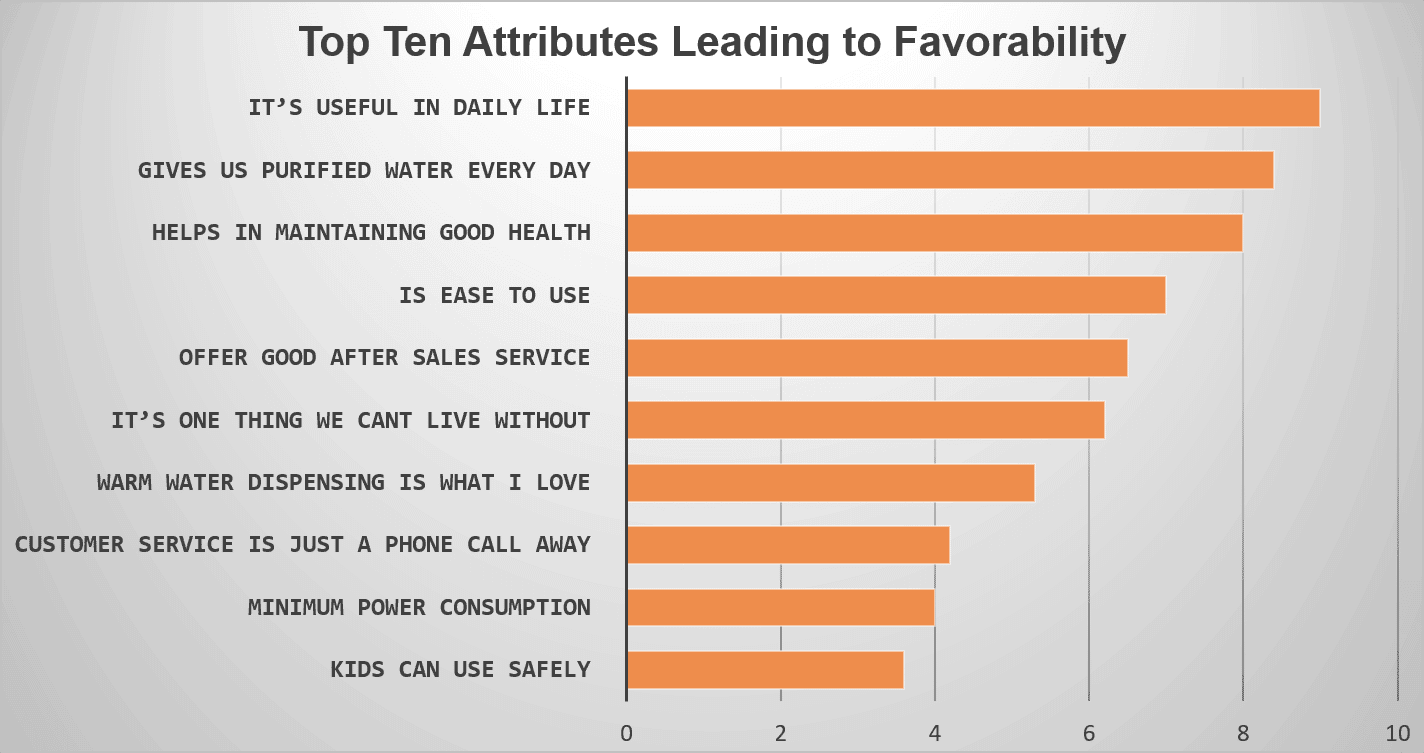

- Marketing Research: Regression analysis helps marketers understand consumer behavior and make data-driven decisions. It can be used to predict sales based on advertising expenditures, pricing strategies, or demographic variables. Regression models provide insights into which marketing efforts are most effective and help optimize marketing campaigns.

- Health Sciences: Regression analysis is extensively used in medical research and public health studies. It helps examine the relationship between risk factors and health outcomes, such as the impact of smoking on lung cancer or the relationship between diet and heart disease. Regression analysis also helps in predicting health outcomes based on various factors like age, genetic markers, or lifestyle choices.

- Social Sciences: Regression analysis is widely used in social sciences like sociology, psychology, and education research. Researchers can investigate the impact of variables like income, education level, or social factors on various outcomes such as crime rates, academic performance, or job satisfaction.

- Operations Research: Regression analysis is applied in operations research to optimize processes and improve efficiency. For example, it can be used to predict demand based on historical sales data, determine the factors influencing production output, or optimize supply chain logistics.

- Environmental Studies: Regression analysis helps in understanding and predicting environmental phenomena. It can be used to analyze the impact of factors like temperature, pollution levels, or land use patterns on phenomena such as species diversity, water quality, or climate change.

- Sports Analytics: Regression analysis is increasingly used in sports analytics to gain insights into player performance, team strategies, and game outcomes. It helps analyze the relationship between various factors like player statistics, coaching strategies, or environmental conditions and their impact on game outcomes.

Advantages and Disadvantages of Regression Analysis

| Advantages of Regression Analysis | Disadvantages of Regression Analysis |
|---|---|
| Provides a quantitative measure of the relationship between variables | Assumes a linear relationship between variables, which may not always hold true |
| Helps in predicting and forecasting outcomes based on historical data | Requires a large sample size to produce reliable results |
| Identifies and measures the significance of independent variables on the dependent variable | Assumes no multicollinearity, meaning that independent variables should not be highly correlated with each other |
| Provides estimates of the coefficients that represent the strength and direction of the relationship between variables | Assumes the absence of outliers or influential data points |
| Allows for hypothesis testing to determine the statistical significance of the relationship | Can be sensitive to the inclusion or exclusion of certain variables, leading to different results |
| Can handle both continuous and categorical variables | Assumes the independence of observations, which may not hold true in some cases |
| Offers a visual representation of the relationship through the use of scatter plots and regression lines | May not capture complex non-linear relationships between variables without appropriate transformations |
| Provides insights into the marginal effects of independent variables on the dependent variable | Requires the assumption of homoscedasticity, meaning that the variance of errors is constant across all levels of the independent variables |

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


A Beginner’s Guide to Regression Analysis

Published by Owen Ingram on September 1st, 2021. Revised on July 5, 2022.

Are you good with data-driven decisions at work? If not, why? What is stopping you from getting on the crest of a wave? There could be just one answer to these questions, and that is “too much data getting in the way.” Do not worry; there is a solution to every problem in this world, and there is definitely one for parsing through tons of data.

Yes, you heard it right! You will not have to get in trouble with the number crunching and counting with this solution. What is the solution?

Well, without further ado, we would like to introduce you to “regression,” which, in a sense, lets you see into the future.

What is Regression Analysis?

Here is a scenario to help you understand what regression is and how it helps you make better strategic decisions in research.

Let’s say you are the CEO of a company and are trying to predict the profit margin for the next month. You might have many factors in mind that can affect the number, be it the number of sales you get in the month, the number of employees not taking leave, or the number of hours each worker puts in daily. But what if things do not go as planned? The “what if” list never ends. All these factors are variables, and regression analysis is the process of mathematically figuring out which of these variables actually have an impact and which do not.

So, we can say that regression analysis helps you find the relationship between a dependent variable and a set of independent variables. There are different ways to model this relationship, which in statistics are called “regression models.”

We will look at each in the next section.

Types of Regression Models

If you are not sure which type of regression model you should use for a particular study, this section might help you.

Though there are numerous types of regression models depending on the type of variables, these are the most common ones: linear regression, logistic regression, ridge regression, lasso regression, polynomial regression, and Bayesian linear regression.

Linear Regression

Linear regression is the real workhorse of the industry and is probably the first type that comes to mind. Because it is usually fitted by least squares, it is also known as Linear Least Squares or Ordinary Least Squares. This model relates a dependent variable to a predictor variable through a straight line; hence the name linear regression. If the data you are dealing with contain more than one independent variable, the model is called Multi-Linear Regression (more commonly, multiple linear regression).

Logistic Regression comes into play when the dependent variable is discrete. This means that the target will take only one of two values: for instance, true or false, yes or no, 0 or 1, and so on. In this case, a sigmoid curve describes the relationship between the independent variables and the probability of the outcome.

When using this regression model in the data analysis process, two things should be taken into consideration:

- Make sure there is no multicollinearity (as in the linear regression model), that is, no strong correlation among the independent variables in the dataset

- Also, ensure that the dataset is large, with each value of the target variable roughly equally represented

Ridge regression is used when there is a high correlation among the independent variables. With multicollinear data, least-squares estimates are still unbiased, but their variance is large, so the estimated coefficients can land far from the true values.

Thus, ridge regression adds a small amount of bias to the estimates, through a penalty on the size of the coefficients, in exchange for lower variance. This powerful type of regression is less vulnerable to overfitting. Are you familiar with the word ‘overfitting’?

Overfitting in statistics is a modeling error that occurs when a function is fitted too closely to a limited set of data points. A model compromised by this error may perform poorly on new data and lose much of its value.

Lasso Regression is best suited to performing regularization alongside feature selection. This type of regression penalizes the absolute size of the regression coefficients. What happens next? Some coefficient values are shrunk all the way to zero, unlike in Ridge Regression, where coefficients shrink towards zero but do not reach it.

This is why Lasso Regression is used for feature selection: it helps select a subset of features from the dataset. Only the required features are kept, and the coefficients of all the other features are set to zero. Researchers reduce overfitting in the model by doing this. But what if the independent variables are highly collinear?

In that case, this model will tend to choose one variable and turn the others to zero. We can say that it is somewhat like Ridge Regression but with variable selection.

Polynomial Regression is another type of regression that is almost the same as Multi-Linear Regression but with some changes. In the Polynomial Regression Model, the relationship between the dependent and independent variables is modeled as an nth-degree polynomial. While in a Multi-Linear Regression Model the best-fit line is straight, here it is a curve fitted to the data points. The shape of this curve depends on the degree n and on the fitted coefficients.

This model is also prone to overfitting. It is best to inspect the curve towards the ends of the data range, as higher-degree polynomials might give strange and unexpected results on extrapolation.

The last type of regression model we are going to discuss is Bayesian Linear Regression. Have you heard of Bayes’ theorem? This regression type uses it to estimate the regression coefficients.

It is a lot like both Ridge Regression and Linear Regression, but the estimates tend to be more stable. In this model, we find the posterior distribution of the coefficients instead of a single least-squares estimate.
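The link to ridge regression can be illustrated with the simplest conjugate case: one predictor, no intercept, Gaussian noise with known variance, and a zero-mean Gaussian prior on the coefficient. All of these modelling choices, along with the data and variance values, are assumptions made for this sketch.

```python
# Hypothetical centered data.
x = [-2, -1, 0, 1, 2]
y = [-1.0, -0.4, 0.1, 0.5, 0.8]

noise_variance = 1.0  # assumed known variance of the error term
prior_variance = 0.2  # assumed variance of the N(0, prior_variance) prior

sxy = sum(xi * yi for xi, yi in zip(x, y))
sxx = sum(xi ** 2 for xi in x)

# Posterior of the coefficient is Gaussian; precisions (1/variance) add up.
posterior_precision = sxx / noise_variance + 1 / prior_variance
posterior_variance = 1 / posterior_precision
posterior_mean = (sxy / noise_variance) / posterior_precision

ols_estimate = sxy / sxx
# Ridge estimate with penalty lam = noise_variance / prior_variance.
ridge_estimate = sxy / (sxx + noise_variance / prior_variance)

print(round(ols_estimate, 4), round(posterior_mean, 4), round(posterior_variance, 4))
```

In this setting the posterior mean coincides with the ridge estimate for a penalty equal to the ratio of noise variance to prior variance. The posterior variance additionally quantifies how uncertain that estimate is.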

FAQs About Regression Analysis

What is regression?

It is a technique for finding the relationship between a dependent variable and one or more independent variables.

What is a linear regression model?

A linear regression model helps determine the relationship between continuous variables by fitting a linear equation to the data.

What is the difference between multi-linear regression and polynomial regression?

The main difference between Multi-Linear Regression and polynomial regression is that in the latter the relationship between ‘x’ and ‘y’ is modeled as an nth-degree polynomial, so the fitted line is a curve, while in Multi-Linear Regression the line is straight.

What is overfitting in statistics?

When a function corresponds too closely to a particular set of data, it may fail to fit new data well. This modeling error is called overfitting.

What is ridge regression?

It is a method of estimating the coefficients of multiple regression models in which the independent variables are highly correlated. It is also used when the number of predictor variables is higher than the number of observations in a set.


Simple Linear Regression | An Easy Introduction & Examples

Published on February 19, 2020 by Rebecca Bevans. Revised on June 22, 2023.

Simple linear regression is used to estimate the relationship between two quantitative variables. You can use simple linear regression when you want to know:

- How strong the relationship is between two variables (e.g., the relationship between rainfall and soil erosion).

- The value of the dependent variable at a certain value of the independent variable (e.g., the amount of soil erosion at a certain level of rainfall).

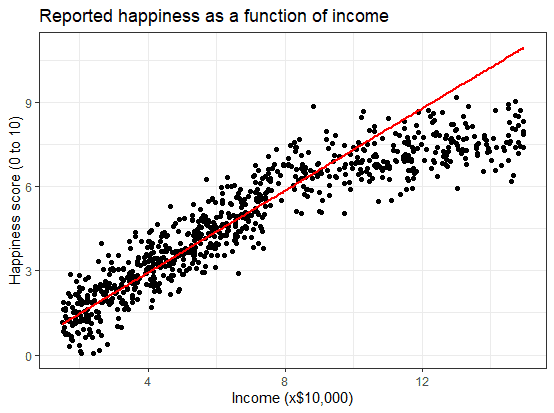

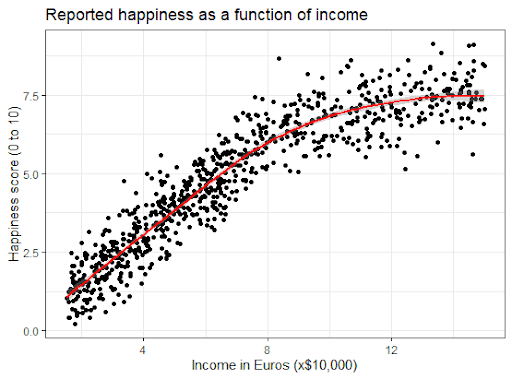

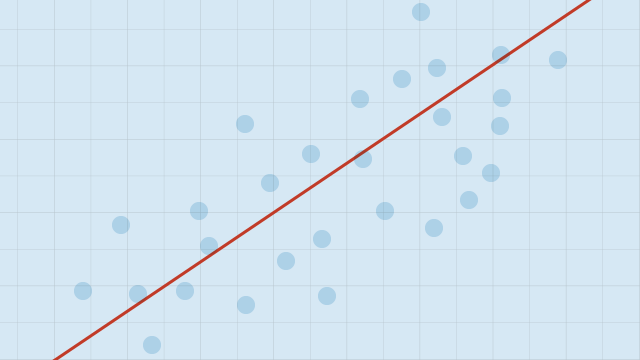

Regression models describe the relationship between variables by fitting a line to the observed data. Linear regression models use a straight line, while logistic and nonlinear regression models use a curved line. Regression allows you to estimate how a dependent variable changes as the independent variable(s) change.

If you have more than one independent variable, use multiple linear regression instead.

Table of contents

- Assumptions of simple linear regression
- How to perform a simple linear regression
- Interpreting the results
- Presenting the results
- Can you predict values outside the range of your data?
- Other interesting articles
- Frequently asked questions about simple linear regression

Simple linear regression is a parametric test, meaning that it makes certain assumptions about the data. These assumptions are:

- Homogeneity of variance (homoscedasticity): the size of the error in our prediction doesn’t change significantly across the values of the independent variable.

- Independence of observations: the observations in the dataset were collected using statistically valid sampling methods, and there are no hidden relationships among observations.

- Normality: The data follows a normal distribution.

Linear regression makes one additional assumption:

- The relationship between the independent and dependent variable is linear: the line of best fit through the data points is a straight line (rather than a curve or some sort of grouping factor).

If your data do not meet the assumptions of homoscedasticity or normality, you may be able to use a nonparametric test instead, such as the Spearman rank test.

If your data violate the assumption of independence of observations (e.g., if observations are repeated over time), you may be able to perform a linear mixed-effects model that accounts for the additional structure in the data.


How to perform a simple linear regression

Simple linear regression formula

The formula for a simple linear regression is:

y = B0 + B1x + e

- y is the predicted value of the dependent variable (y) for any given value of the independent variable (x).

- B0 is the intercept, the predicted value of y when x is 0.

- B1 is the regression coefficient: how much we expect y to change as x increases by one unit.

- x is the independent variable (the variable we expect is influencing y).

- e is the error of the estimate: the difference between the observed value of y and the value the line predicts, i.e. the variation in y that the model does not explain.

Linear regression finds the line of best fit through your data by searching for the intercept (B0) and regression coefficient (B1) that minimize the total error (e) of the model.
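As a rough illustration of what a statistical program does when it searches for the line of best fit, the closed-form least-squares estimates can be sketched in Python (used here only for illustration). The function name and the data are made up for this example; they are not taken from the income and happiness dataset.

```python
from statistics import mean


def fit_simple_linear_regression(x, y):
    """Return (b0, b1) for the least-squares line y = b0 + b1 * x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    x_bar, y_bar = mean(x), mean(y)
    # Sum of squared deviations of x, and of cross-deviations of x and y
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must contain at least two distinct values")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx            # regression coefficient (slope)
    b0 = y_bar - b1 * x_bar   # intercept
    return b0, b1


# Hypothetical data lying exactly on the line y = 1 + 2x
x = [1.0, 2.0, 3.0, 4.0]
y = [3.0, 5.0, 7.0, 9.0]

b0, b1 = fit_simple_linear_regression(x, y)
# Residuals play the role of the error term e: observed minus predicted
residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
print(b0, b1, residuals)
```

Because the made-up points lie exactly on a line, the fitted intercept and slope recover that line and every residual is zero; with real data the residuals would be non-zero and the fit would minimize their squared sum.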

While you can perform a linear regression by hand , this is a tedious process, so most people use statistical programs to help them quickly analyze the data.

Simple linear regression in R

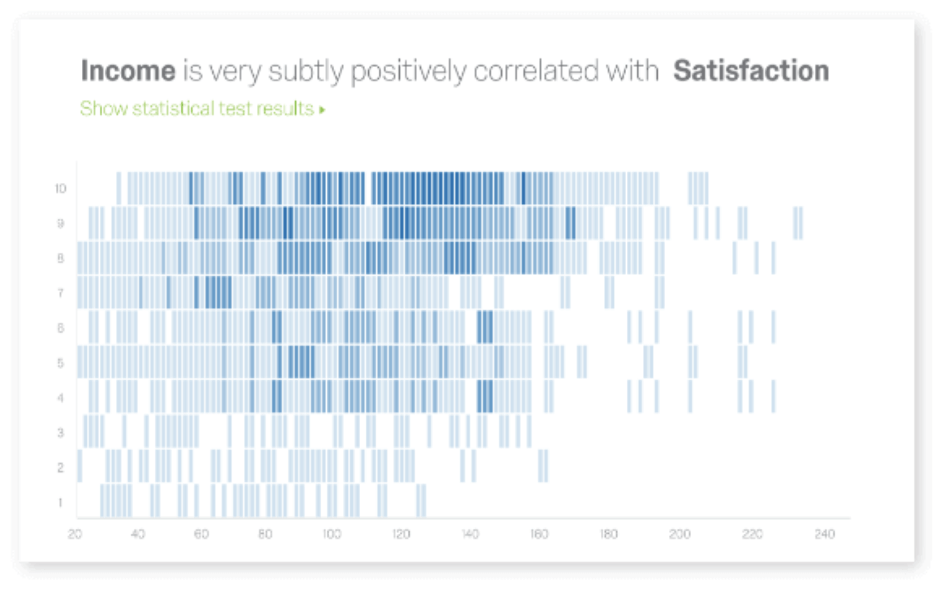

R is a free, powerful, and widely used statistical program. Download the dataset to try it yourself using our income and happiness example.

Dataset for simple linear regression (.csv)

Load the income.data dataset into your R environment, and then run the following command to generate a linear model describing the relationship between income and happiness:

lm(happiness ~ income, data = income.data)

This code takes the data you have collected (data = income.data) and calculates the effect that the independent variable income has on the dependent variable happiness using the function for fitting a linear model: lm().

To learn more, follow our full step-by-step guide to linear regression in R.

Interpreting the results

To view the results of the model, you can use the summary() function in R:

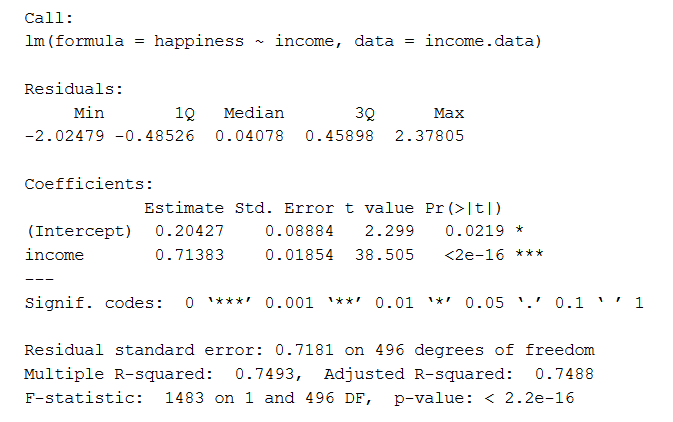

This function takes the most important parameters from the linear model and puts them into a table, which looks like this:

This output table first repeats the formula that was used to generate the results (‘Call’), then summarizes the model residuals (‘Residuals’), which give an idea of how well the model fits the real data.

Next is the ‘Coefficients’ table. The first row gives the estimates of the y-intercept, and the second row gives the regression coefficient of the model.

Row 1 of the table is labeled (Intercept). This is the y-intercept of the regression equation, with a value of 0.20. You can plug this into your regression equation if you want to predict happiness values across the range of income that you have observed:

happiness = 0.20 + 0.713 × income

The next row in the ‘Coefficients’ table is income. This is the row that describes the estimated effect of income on reported happiness:

The Estimate column is the estimated effect, also called the regression coefficient or slope. The number in the table (0.713) tells us that for every one-unit increase in income (where one unit of income = 10,000) there is a corresponding 0.71-unit increase in reported happiness (where happiness is a scale of 1 to 10).

The Std. Error column displays the standard error of the estimate. This number shows how much variation there is in our estimate of the relationship between income and happiness.

The t value column displays the test statistic. Unless you specify otherwise, the test statistic used in linear regression is the t value from a two-sided t test. The larger the absolute value of the test statistic, the less likely it is that our results occurred by chance.

The Pr(>|t|) column shows the p value. This number tells us how likely we are to see an estimated effect of income on happiness at least this large if the null hypothesis of no effect were true.

Because the p value is so low ( p < 0.001), we can reject the null hypothesis and conclude that income has a statistically significant effect on happiness.
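To make the link between the Estimate, Std. Error, and t value columns concrete, here is a minimal Python sketch under the usual ordinary least-squares assumptions. The data and the function name are invented for illustration; they are not the income and happiness data. Turning the t value into a p value additionally needs the t distribution with n − 2 degrees of freedom, which is left to a statistics package here.

```python
import math
from statistics import mean


def slope_t_statistic(x, y):
    """Return (estimate, std_error, t_value) for the slope of y = b0 + b1 * x."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least 3 paired observations")
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    b0 = y_bar - b1 * x_bar
    # Residual variance uses n - 2 degrees of freedom (two estimated parameters)
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    residual_variance = sse / (n - 2)
    std_error = math.sqrt(residual_variance / sxx)
    return b1, std_error, b1 / std_error


# Hypothetical data with a strong, slightly noisy linear trend
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

estimate, std_error, t_value = slope_t_statistic(x, y)
print(estimate, std_error, t_value)
```

The t value is simply the estimate divided by its standard error, so a precise estimate (small standard error) of a sizeable effect gives a large test statistic.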

The last three lines of the model summary are statistics about the model as a whole. The most important thing to notice here is the p value of the model. Here it is significant (p < 0.001), which means that the model explains significantly more of the variation in happiness than a model with no predictors would.

Presenting the results

When reporting your results, include the estimated effect (i.e., the regression coefficient), the standard error of the estimate, and the p value. You should also interpret your numbers to make it clear to your readers what your regression coefficient means:

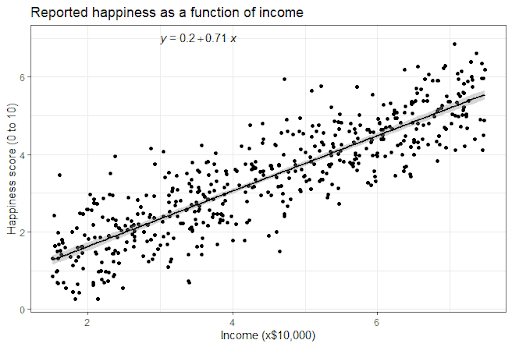

It can also be helpful to include a graph with your results. For a simple linear regression, you can simply plot the observations on the x and y axis and then include the regression line and regression function:


Can you predict values outside the range of your data?

No! We often say that regression models can be used to predict the value of the dependent variable at certain values of the independent variable. However, this is only true for the range of values where we have actually measured the response.

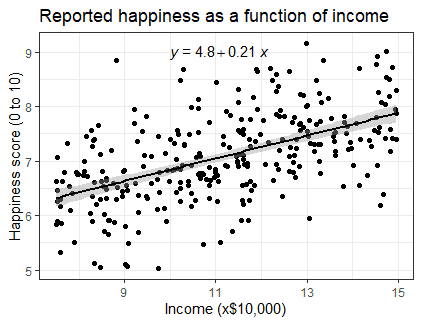

We can use our income and happiness regression analysis as an example. Between 15,000 and 75,000, we found a regression coefficient of 0.73 ± 0.0193. But what if we did a second survey of people making between 75,000 and 150,000?

The regression coefficient for the relationship between income and happiness is now 0.21, or a 0.21-unit increase in reported happiness for every 10,000 increase in income. While the relationship is still statistically significant (p < 0.001), the slope is much smaller than before.

What if we hadn’t measured this group, and instead extrapolated the line from the 15–75k incomes to the 75–150k incomes?

You can see that if we simply extrapolated from the 15–75k income data, we would overestimate the happiness of people in the 75–150k income range.

If we instead fit a curve to the data, it seems to fit the actual pattern much better.

It looks as though happiness actually levels off at higher incomes, so we can’t use the same regression line we calculated from our lower-income data to predict happiness at higher levels of income.

Even when you see a strong pattern in your data, you can’t know for certain whether that pattern continues beyond the range of values you have actually measured. Therefore, it’s important to avoid extrapolating beyond what the data actually tell you.
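One way to guard against accidental extrapolation is to make the prediction code aware of the observed range. The sketch below is only an illustration in Python: it plugs in the intercept (0.20) and income coefficient (0.713) quoted from the coefficients table, treats income in units of 10,000 as the article does, and assumes the observed range is 15,000 to 75,000. The function name and the `allow_extrapolation` flag are hypothetical.

```python
INTERCEPT = 0.20               # y-intercept quoted in the article
SLOPE = 0.713                  # income coefficient quoted in the article
OBSERVED_RANGE = (1.5, 7.5)    # 15,000 to 75,000, in units of 10,000


def predict_happiness(income, allow_extrapolation=False):
    """Predict happiness from income (in units of 10,000).

    Raises ValueError for incomes outside the observed range unless the
    caller explicitly opts in to extrapolation.
    """
    low, high = OBSERVED_RANGE
    if not allow_extrapolation and not (low <= income <= high):
        raise ValueError(
            f"income {income} is outside the observed range {low} to {high}"
        )
    return INTERCEPT + SLOPE * income


inside = predict_happiness(5.0)  # an income of 50,000, within the data

try:
    predict_happiness(12.0)      # an income of 120,000, beyond the data
    refused = False
except ValueError:
    refused = True

# Opting in still returns a number, but nothing in the data supports it
extrapolated = predict_happiness(12.0, allow_extrapolation=True)
print(inside, refused, extrapolated)
```

The extrapolated value is just the straight line continued; as the example above shows, the real relationship may level off, so such a number should be treated with suspicion.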


Frequently asked questions about simple linear regression

A regression model is a statistical model that estimates the relationship between one dependent variable and one or more independent variables using a line (or a plane in the case of two or more independent variables).

A regression model can be used when the dependent variable is quantitative, except in the case of logistic regression, where the dependent variable is binary.

Simple linear regression is a regression model that estimates the relationship between one independent variable and one dependent variable using a straight line. Both variables should be quantitative.

For example, the relationship between temperature and the expansion of mercury in a thermometer can be modeled using a straight line: as temperature increases, the mercury expands. This linear relationship is so certain that we can use mercury thermometers to measure temperature.

Linear regression most often uses mean-square error (MSE) to calculate the error of the model. MSE is calculated by:

- measuring the distance of the observed y-values from the predicted y-values at each value of x;

- squaring each of these distances;

- calculating the mean of the squared distances.

Linear regression fits a line to the data by finding the regression coefficient that results in the smallest MSE.
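The three steps above can be sketched in a few lines of Python. The data are made up, and the two candidate lines are chosen for illustration: for this particular data set the least-squares line works out to an intercept of 0 and a slope of 1.9, and it is compared with a nearby line with slope 2.

```python
def mean_squared_error(observed, predicted):
    """Mean of the squared distances between observed and predicted values."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_distances = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return sum(squared_distances) / len(squared_distances)


# Hypothetical observations
x = [1.0, 2.0, 3.0, 4.0]
y = [2.0, 4.0, 5.0, 8.0]


def line_mse(b0, b1):
    """MSE of the line y = b0 + b1 * x on the data above."""
    predictions = [b0 + b1 * xi for xi in x]
    return mean_squared_error(y, predictions)


mse_least_squares = line_mse(0.0, 1.9)  # the least-squares line for this data
mse_other_line = line_mse(0.0, 2.0)     # a plausible-looking alternative
print(mse_least_squares, mse_other_line)
```

The alternative line passes exactly through three of the four points yet still has the larger MSE, because squaring penalizes its single large miss heavily.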


Bevans, R. (2023, June 22). Simple Linear Regression | An Easy Introduction & Examples. Scribbr. Retrieved July 4, 2024, from https://www.scribbr.com/statistics/simple-linear-regression/


Regression Analysis

The estimation of relationships between a dependent variable and one or more independent variables

What is Regression Analysis?

Regression analysis is a set of statistical methods used for the estimation of relationships between a dependent variable and one or more independent variables. It can be utilized to assess the strength of the relationship between variables and for modeling the future relationship between them.

Regression analysis includes several variations, such as linear, multiple linear, and nonlinear. The most common models are simple linear and multiple linear. Nonlinear regression analysis is commonly used for more complicated data sets in which the dependent and independent variables show a nonlinear relationship.

Regression analysis offers numerous applications in various disciplines, including finance.

Regression Analysis – Linear Model Assumptions

Linear regression analysis is based on six fundamental assumptions:

- The dependent variable is a linear function of the independent variable, defined by the slope and the intercept.

- The independent variable is not random.

- The expected value (mean) of the residual (error) is zero.

- The variance of the residual (error) is constant across all observations.

- The residual (error) values are not correlated across observations.

- The residual (error) values follow the normal distribution.

Regression Analysis – Simple Linear Regression

Simple linear regression is a model that assesses the relationship between a dependent variable and an independent variable. The simple linear model is expressed using the following equation:

Y = a + bX + ϵ

- Y – Dependent variable

- X – Independent (explanatory) variable

- a – Intercept

- b – Slope

- ϵ – Residual (error)


Regression Analysis – Multiple Linear Regression

Multiple linear regression analysis is essentially similar to the simple linear model, with the exception that multiple independent variables are used in the model. The mathematical representation of multiple linear regression is:

Y = a + bX₁ + cX₂ + dX₃ + ϵ

- X₁, X₂, X₃ – Independent (explanatory) variables

- b, c, d – Slopes

Multiple linear regression follows the same conditions as the simple linear model. However, since there are several independent variables in multiple linear analysis, there is another mandatory condition for the model:

- Non-collinearity: Independent variables should show a minimum correlation with each other. If the independent variables are highly correlated with each other, it will be difficult to assess the true relationships between the dependent and independent variables.
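A quick way to screen for collinearity is to look at the correlation between pairs of independent variables. The Python sketch below is an illustration with made-up data. It also reports the variance inflation factor in the special case of exactly two predictors, where it reduces to 1 / (1 − r²); with more predictors the VIF requires regressing each predictor on all the others, which is not shown here.

```python
import math
from statistics import mean


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    a_bar, b_bar = mean(a), mean(b)
    saa = sum((ai - a_bar) ** 2 for ai in a)
    sbb = sum((bi - b_bar) ** 2 for bi in b)
    if saa == 0 or sbb == 0:
        raise ValueError("each variable must vary")
    sab = sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b))
    return sab / math.sqrt(saa * sbb)


def vif_two_predictors(r):
    """Variance inflation factor when the model has exactly two predictors."""
    r_squared = r * r
    if r_squared >= 1.0:
        return math.inf   # perfectly collinear predictors
    return 1.0 / (1.0 - r_squared)


# Hypothetical predictors
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [2.1, 3.9, 6.2, 7.8, 10.1]   # moves almost in lockstep with x1
x3 = [3.0, 1.0, 4.0, 1.0, 3.0]    # unrelated to x1

r_collinear = pearson_r(x1, x2)
r_unrelated = pearson_r(x1, x3)
print(r_collinear, vif_two_predictors(r_collinear))
print(r_unrelated, vif_two_predictors(r_unrelated))
```

A VIF close to 1 indicates that a predictor carries information the others do not; a very large value signals that the separate slopes will be hard to estimate reliably.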

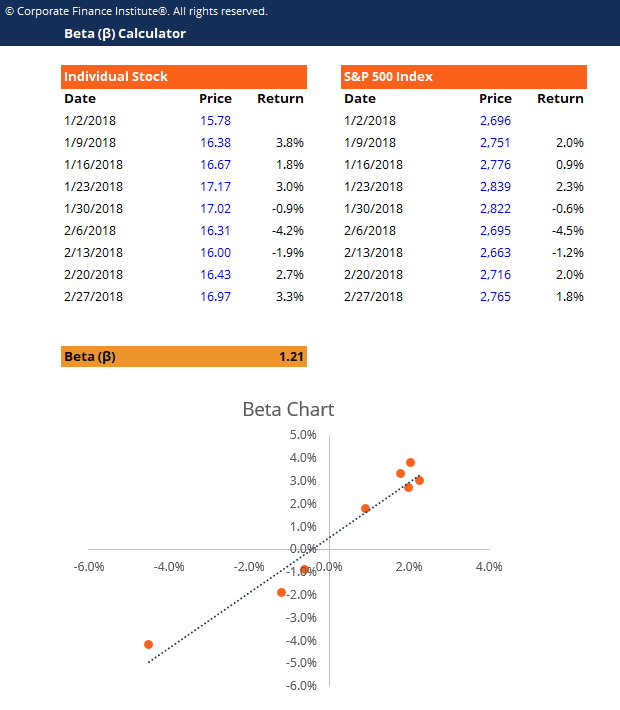

Regression Analysis in Finance

Regression analysis comes with several applications in finance. For example, the statistical method is fundamental to the Capital Asset Pricing Model (CAPM). Essentially, the CAPM equation is a model that determines the relationship between the expected return of an asset and the market risk premium.

The analysis is also used to forecast the returns of securities, based on different factors, or to forecast the performance of a business.

1. Beta and CAPM

In finance, regression analysis is used to calculate the Beta (volatility of returns relative to the overall market) for a stock. It can be done in Excel using the Slope function.
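The same slope calculation can be sketched outside Excel. The Python example below is a minimal illustration, assuming the stock's returns are treated as the dependent variable and the market's returns as the independent variable; the return series are invented, with the stock constructed to move 1.5 times as much as the market.

```python
from statistics import mean


def beta(stock_returns, market_returns):
    """Slope of the regression of stock returns on market returns."""
    if len(stock_returns) != len(market_returns) or len(market_returns) < 2:
        raise ValueError("need at least 2 paired return observations")
    s_bar, m_bar = mean(stock_returns), mean(market_returns)
    covariance_sum = sum(
        (m - m_bar) * (s - s_bar)
        for m, s in zip(market_returns, stock_returns)
    )
    variance_sum = sum((m - m_bar) ** 2 for m in market_returns)
    if variance_sum == 0:
        raise ValueError("market returns must vary")
    return covariance_sum / variance_sum


# Hypothetical periodic returns
market = [0.01, -0.02, 0.03, 0.00, 0.02]
stock = [1.5 * m + 0.001 for m in market]  # moves 1.5x the market by design

stock_beta = beta(stock, market)
print(stock_beta)
```

A beta above 1 means the stock's returns have tended to move more than the market's; the constant 0.001 added to every stock return shifts the intercept but leaves the slope unchanged.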


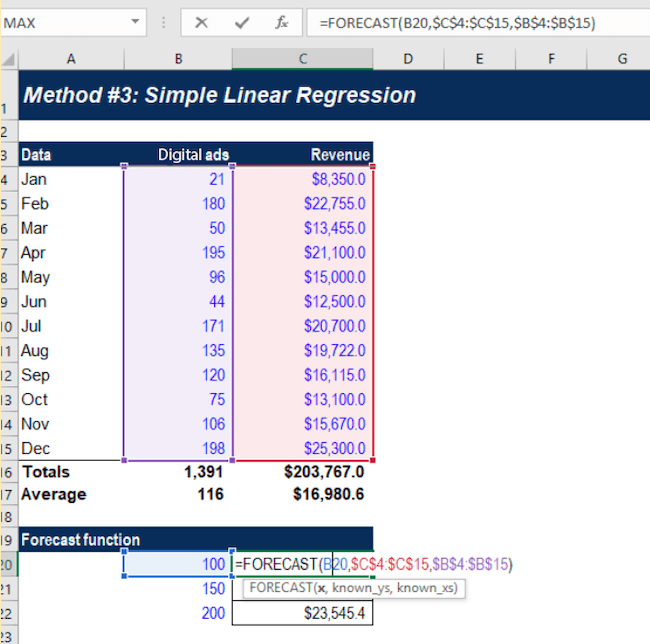

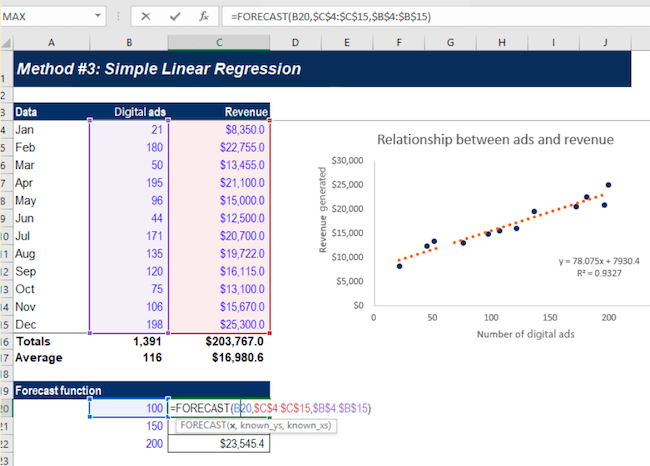

2. Forecasting Revenues and Expenses

When forecasting financial statements for a company, it may be useful to do a multiple regression analysis to determine how changes in certain assumptions or drivers of the business will impact revenue or expenses in the future. For example, there may be a very high correlation between the number of salespeople employed by a company, the number of stores they operate, and the revenue the business generates.

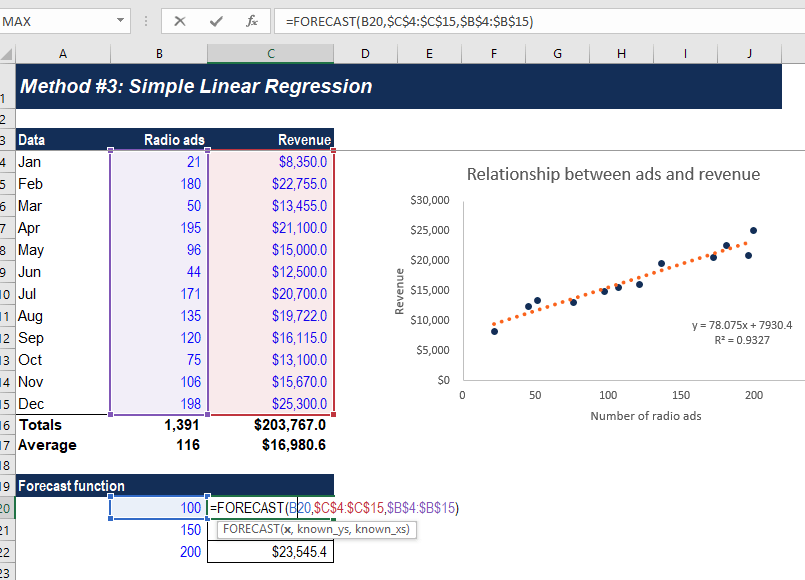

The above example shows how to use the Forecast function in Excel to calculate a company’s revenue, based on the number of ads it runs.


Regression Tools

Excel remains a popular tool for conducting basic regression analysis in finance; however, there are many more advanced statistical tools that can be used.

Python and R are both powerful coding languages that have become popular for all types of financial modeling, including regression. These techniques form a core part of data science and machine learning, where models are trained to detect these relationships in data.


- Published: 31 January 2022

The clinician’s guide to interpreting a regression analysis

- Sofia Bzovsky 1 ,

- Mark R. Phillips ORCID: orcid.org/0000-0003-0923-261X 2 ,

- Robyn H. Guymer ORCID: orcid.org/0000-0002-9441-4356 3 , 4 ,

- Charles C. Wykoff 5 , 6 ,

- Lehana Thabane ORCID: orcid.org/0000-0003-0355-9734 2 , 7 ,

- Mohit Bhandari ORCID: orcid.org/0000-0001-9608-4808 1 , 2 &

- Varun Chaudhary ORCID: orcid.org/0000-0002-9988-4146 1 , 2

on behalf of the R.E.T.I.N.A. study group

Eye volume 36, pages 1715–1717 (2022)


Introduction

When researchers are conducting clinical studies to investigate factors associated with, or treatments for disease and conditions to improve patient care and clinical practice, statistical evaluation of the data is often necessary. Regression analysis is an important statistical method that is commonly used to determine the relationship between several factors and disease outcomes or to identify relevant prognostic factors for diseases [ 1 ].

This editorial will acquaint readers with the basic principles of and an approach to interpreting results from two types of regression analyses widely used in ophthalmology: linear, and logistic regression.

Linear regression analysis

Linear regression is used to quantify a linear relationship or association between a continuous response/outcome variable or dependent variable with at least one independent or explanatory variable by fitting a linear equation to observed data [ 1 ]. The variable that the equation solves for, which is the outcome or response of interest, is called the dependent variable [ 1 ]. The variable that is used to explain the value of the dependent variable is called the predictor, explanatory, or independent variable [ 1 ].

In a linear regression model, the dependent variable must be continuous (e.g. intraocular pressure or visual acuity), whereas the independent variable may be either continuous (e.g. age), binary (e.g. sex), categorical (e.g. age-related macular degeneration stage or diabetic retinopathy severity scale score), or a combination of these [ 1 ].

When investigating the effect or association of a single independent variable on a continuous dependent variable, this type of analysis is called a simple linear regression [ 2 ]. In many circumstances though, a single independent variable may not be enough to adequately explain the dependent variable. Often it is necessary to control for confounders and in these situations, one can perform a multivariable linear regression to study the effect or association with multiple independent variables on the dependent variable [ 1 , 2 ]. When incorporating numerous independent variables, the regression model estimates the effect or contribution of each independent variable while holding the values of all other independent variables constant [ 3 ].

When interpreting the results of a linear regression, there are a few key outputs for each independent variable included in the model:

Estimated regression coefficient: The estimated regression coefficient indicates the direction and strength of the relationship or association between the independent and dependent variables [ 4 ]. Specifically, the regression coefficient describes the change in the dependent variable for each one-unit change in the independent variable, if continuous [ 4 ]. For instance, if examining the relationship between a continuous predictor variable and intra-ocular pressure (dependent variable), a regression coefficient of 2 means that for every one-unit increase in the predictor, there is a two-unit increase in intra-ocular pressure. If the independent variable is binary or categorical, then the one-unit change represents switching from the reference category to the other category [ 4 ]. For instance, if examining the relationship between a binary predictor variable, such as sex, where ‘female’ is set as the reference category, and intra-ocular pressure (dependent variable), a regression coefficient of 2 means that, on average, males have an intra-ocular pressure that is 2 mm Hg higher than females.

Confidence Interval (CI): The CI, typically set at 95%, is a measure of the precision of the coefficient estimate of the independent variable [ 4 ]. A wide CI indicates a low level of precision, whereas a narrow CI indicates a higher precision [ 5 ].

P value: The p value for the regression coefficient indicates whether the relationship between the independent and dependent variables is statistically significant [ 6 ].
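The interpretation of a coefficient for a binary predictor can be checked numerically: with a single 0/1 predictor, the least-squares slope equals the difference between the two group means, and the intercept equals the mean of the reference group. The Python sketch below uses invented intra-ocular pressure values, with female coded 0 (reference) and male coded 1, to mirror the example in the text.

```python
from statistics import mean

# Hypothetical data: sex coded 0 = female (reference), 1 = male
sex = [0, 0, 0, 1, 1, 1]
iop_mm_hg = [14.0, 15.0, 16.0, 16.0, 17.0, 18.0]

# Ordinary least-squares fit of iop = b0 + b1 * sex
x_bar, y_bar = mean(sex), mean(iop_mm_hg)
sxx = sum((xi - x_bar) ** 2 for xi in sex)
sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(sex, iop_mm_hg))
coefficient = sxy / sxx
intercept = y_bar - coefficient * x_bar

# Group means computed directly, for comparison
female_mean = mean(y for s, y in zip(sex, iop_mm_hg) if s == 0)
male_mean = mean(y for s, y in zip(sex, iop_mm_hg) if s == 1)

print(coefficient, male_mean - female_mean)
print(intercept, female_mean)
```

In a multivariable model the coefficient is instead an adjusted difference, holding the other independent variables constant, so it will generally not match the raw difference in means.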

Logistic regression analysis

As with linear regression, logistic regression is used to estimate the association between one or more independent variables with a dependent variable [ 7 ]. However, the distinguishing feature in logistic regression is that the dependent variable (outcome) must be binary (or dichotomous), meaning that the variable can only take two different values or levels, such as ‘1 versus 0’ or ‘yes versus no’ [ 2 , 7 ]. The effect size of predictor variables on the dependent variable is best explained using an odds ratio (OR) [ 2 ]. ORs are used to compare the relative odds of the occurrence of the outcome of interest, given exposure to the variable of interest [ 5 ]. An OR equal to 1 means that the odds of the event in one group are the same as the odds of the event in another group; there is no difference [ 8 ]. An OR > 1 implies that one group has a higher odds of having the event compared with the reference group, whereas an OR < 1 means that one group has a lower odds of having an event compared with the reference group [ 8 ]. When interpreting the results of a logistic regression, the key outputs include the OR, CI, and p-value for each independent variable included in the model.
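For a single binary exposure, the odds ratio can be computed directly from a two-by-two table of counts, and it equals the exponential of the logistic regression coefficient for that exposure. The Python sketch below illustrates this with invented counts; the function and argument names are hypothetical.

```python
import math


def odds_ratio(group_events, group_non_events,
               reference_events, reference_non_events):
    """Odds of the event in one group divided by the odds in the reference group."""
    group_odds = group_events / group_non_events
    reference_odds = reference_events / reference_non_events
    return group_odds / reference_odds


# Hypothetical counts: 20 of 100 exposed patients and 40 of 100 reference
# patients experienced the outcome
or_exposed = odds_ratio(20, 80, 40, 60)

# The matching logistic regression coefficient is the log of the odds ratio
log_odds_coefficient = math.log(or_exposed)

# Identical odds in both groups give an odds ratio of exactly 1
or_no_difference = odds_ratio(10, 90, 10, 90)

print(or_exposed, log_odds_coefficient, or_no_difference)
```

Here the odds ratio is below 1, so the exposed group has lower odds of the event than the reference group, and the corresponding coefficient is negative.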

Clinical example

Sen et al. investigated the association between several factors (independent variables) and visual acuity outcomes (dependent variable) in patients receiving anti-vascular endothelial growth factor therapy for macular oedema (DMO) by means of both linear and logistic regression [ 9 ]. Multivariable linear regression demonstrated that age (Estimate −0.33, 95% CI −0.48 to −0.19, p < 0.001) was significantly associated with best-corrected visual acuity (BCVA) at 100 weeks at the alpha = 0.05 significance level [ 9 ]. The regression coefficient of −0.33 means that the BCVA at 100 weeks decreases by 0.33 with each additional year of age.

Multivariable logistic regression also demonstrated that age and ellipsoid zone status were significantly associated with achieving a BCVA letter score >70 letters at 100 weeks at the alpha = 0.05 significance level. Patients ≥75 years of age had decreased odds of achieving a BCVA letter score >70 letters at 100 weeks compared to those <50 years of age, since the OR is less than 1 (OR 0.96, 95% CI 0.94 to 0.98, p = 0.001) [ 9 ]. Similarly, patients between the ages of 50–74 years also had decreased odds of achieving a BCVA letter score >70 letters at 100 weeks compared to those <50 years of age, since the OR is less than 1 (OR 0.15, 95% CI 0.04 to 0.48, p = 0.001) [ 9 ]. Likewise, those whose ellipsoid zone was not intact had decreased odds of achieving a BCVA letter score >70 letters at 100 weeks compared to those with an intact ellipsoid zone (OR 0.20, 95% CI 0.07 to 0.56; p = 0.002). On the other hand, patients with an ungradable/questionable ellipsoid zone had increased odds of achieving a BCVA letter score >70 letters at 100 weeks compared to those with an intact ellipsoid zone, since the OR is greater than 1 (OR 2.26, 95% CI 1.14 to 4.48; p = 0.02) [ 9 ].

The narrower the CI, the more precise the estimate is; and the smaller the p value (relative to alpha = 0.05), the greater the evidence against the null hypothesis of no effect or association.
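To see how the estimate, CI, and p value hang together, the reported linear regression result for age (Estimate −0.33, 95% CI −0.48 to −0.19) can be worked through in Python. This is only a back-of-the-envelope sketch: it assumes the interval was built as estimate ± 1.96 × standard error and uses a normal approximation for the p value, neither of which is confirmed by the source, and the reported figures are rounded.

```python
import math

# Figures reported for age in the clinical example
estimate = -0.33
ci_low, ci_high = -0.48, -0.19

Z_95 = 1.96  # assumed multiplier for a 95% interval (normal approximation)

# The width of the interval implies an approximate standard error
std_error = (ci_high - ci_low) / (2 * Z_95)

# Test statistic and two-sided p value under the normal approximation
z_value = estimate / std_error
p_value = math.erfc(abs(z_value) / math.sqrt(2))

# A 95% CI that excludes zero corresponds to p < 0.05
ci_excludes_zero = ci_low > 0 or ci_high < 0

print(std_error, z_value, p_value, ci_excludes_zero)
```

Under these assumptions the implied p value is well below 0.001, which agrees with the reported p < 0.001, and the interval lies entirely below zero, consistent with a statistically significant negative association.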

Simply put, linear and logistic regression are useful tools for appreciating the relationship between predictor/explanatory and outcome variables for continuous and dichotomous outcomes, respectively, that can be applied in clinical practice, such as to gain an understanding of risk factors associated with a disease of interest.

References

1. Schneider A, Hommel G, Blettner M. Linear regression analysis. Dtsch Ärztebl Int. 2010;107:776–82.

2. Bender R. Introduction to the use of regression models in epidemiology. In: Verma M, editor. Cancer epidemiology. Methods in molecular biology. Humana Press; 2009:179–95.

3. Schober P, Vetter TR. Confounding in observational research. Anesth Analg. 2020;130:635.

4. Schober P, Vetter TR. Linear regression in medical research. Anesth Analg. 2021;132:108–9.

5. Szumilas M. Explaining odds ratios. J Can Acad Child Adolesc Psychiatry. 2010;19:227–9.

6. Thiese MS, Ronna B, Ott U. P value interpretations and considerations. J Thorac Dis. 2016;8:E928–31.

7. Schober P, Vetter TR. Logistic regression in medical research. Anesth Analg. 2021;132:365–6.

8. Zabor EC, Reddy CA, Tendulkar RD, Patil S. Logistic regression in clinical studies. Int J Radiat Oncol Biol Phys. 2022;112:271–7.

9. Sen P, Gurudas S, Ramu J, Patrao N, Chandra S, Rasheed R, et al. Predictors of visual acuity outcomes after anti-vascular endothelial growth factor treatment for macular edema secondary to central retinal vein occlusion. Ophthalmol Retin. 2021;5:1115–24.

R.E.T.I.N.A. study group

Varun Chaudhary 1,2 , Mohit Bhandari 1,2 , Charles C. Wykoff 5,6 , Sobha Sivaprasad 8 , Lehana Thabane 2,7 , Peter Kaiser 9 , David Sarraf 10 , Sophie J. Bakri 11 , Sunir J. Garg 12 , Rishi P. Singh 13,14 , Frank G. Holz 15 , Tien Y. Wong 16,17 , and Robyn H. Guymer 3,4

Author information

Authors and affiliations.

Department of Surgery, McMaster University, Hamilton, ON, Canada

Sofia Bzovsky, Mohit Bhandari & Varun Chaudhary

Department of Health Research Methods, Evidence & Impact, McMaster University, Hamilton, ON, Canada

Mark R. Phillips, Lehana Thabane, Mohit Bhandari & Varun Chaudhary

Centre for Eye Research Australia, Royal Victorian Eye and Ear Hospital, East Melbourne, VIC, Australia

Robyn H. Guymer

Department of Surgery, (Ophthalmology), The University of Melbourne, Melbourne, VIC, Australia

Retina Consultants of Texas (Retina Consultants of America), Houston, TX, USA

Charles C. Wykoff

Blanton Eye Institute, Houston Methodist Hospital, Houston, TX, USA

Biostatistics Unit, St. Joseph’s Healthcare Hamilton, Hamilton, ON, Canada

Lehana Thabane

NIHR Moorfields Biomedical Research Centre, Moorfields Eye Hospital, London, UK

Sobha Sivaprasad

Cole Eye Institute, Cleveland Clinic, Cleveland, OH, USA

Peter Kaiser

Retinal Disorders and Ophthalmic Genetics, Stein Eye Institute, University of California, Los Angeles, CA, USA

David Sarraf

Department of Ophthalmology, Mayo Clinic, Rochester, MN, USA

Sophie J. Bakri

The Retina Service at Wills Eye Hospital, Philadelphia, PA, USA

Sunir J. Garg

Center for Ophthalmic Bioinformatics, Cole Eye Institute, Cleveland Clinic, Cleveland, OH, USA

Rishi P. Singh

Cleveland Clinic Lerner College of Medicine, Cleveland, OH, USA

Department of Ophthalmology, University of Bonn, Bonn, Germany

Frank G. Holz

Singapore Eye Research Institute, Singapore, Singapore

Tien Y. Wong

Singapore National Eye Centre, Duke-NUS Medical School, Singapore, Singapore


Contributions

SB was responsible for writing, critical review and feedback on manuscript. MRP was responsible for conception of idea, critical review and feedback on manuscript. RHG was responsible for critical review and feedback on manuscript. CCW was responsible for critical review and feedback on manuscript. LT was responsible for critical review and feedback on manuscript. MB was responsible for conception of idea, critical review and feedback on manuscript. VC was responsible for conception of idea, critical review and feedback on manuscript.

Corresponding author

Correspondence to Varun Chaudhary .

Ethics declarations

Competing interests.

SB: Nothing to disclose. MRP: Nothing to disclose. RHG: Advisory boards: Bayer, Novartis, Apellis, Roche, Genentech Inc.—unrelated to this study. CCW: Consultant: Acuela, Adverum Biotechnologies, Inc, Aerpio, Alimera Sciences, Allegro Ophthalmics, LLC, Allergan, Apellis Pharmaceuticals, Bayer AG, Chengdu Kanghong Pharmaceuticals Group Co, Ltd, Clearside Biomedical, DORC (Dutch Ophthalmic Research Center), EyePoint Pharmaceuticals, Gentech/Roche, GyroscopeTx, IVERIC bio, Kodiak Sciences Inc, Novartis AG, ONL Therapeutics, Oxurion NV, PolyPhotonix, Recens Medical, Regeron Pharmaceuticals, Inc, REGENXBIO Inc, Santen Pharmaceutical Co, Ltd, and Takeda Pharmaceutical Company Limited; Research funds: Adverum Biotechnologies, Inc, Aerie Pharmaceuticals, Inc, Aerpio, Alimera Sciences, Allergan, Apellis Pharmaceuticals, Chengdu Kanghong Pharmaceutical Group Co, Ltd, Clearside Biomedical, Gemini Therapeutics, Genentech/Roche, Graybug Vision, Inc, GyroscopeTx, Ionis Pharmaceuticals, IVERIC bio, Kodiak Sciences Inc, Neurotech LLC, Novartis AG, Opthea, Outlook Therapeutics, Inc, Recens Medical, Regeneron Pharmaceuticals, Inc, REGENXBIO Inc, Samsung Pharm Co, Ltd, Santen Pharmaceutical Co, Ltd, and Xbrane Biopharma AB—unrelated to this study. LT: Nothing to disclose. MB: Research funds: Pendopharm, Bioventus, Acumed—unrelated to this study. VC: Advisory Board Member: Alcon, Roche, Bayer, Novartis; Grants: Bayer, Novartis—unrelated to this study.


Bzovsky, S., Phillips, M.R., Guymer, R.H. et al. The clinician’s guide to interpreting a regression analysis. Eye 36 , 1715–1717 (2022). https://doi.org/10.1038/s41433-022-01949-z


Received : 08 January 2022

Revised : 17 January 2022

Accepted : 18 January 2022

Published : 31 January 2022

Issue Date : September 2022

DOI : https://doi.org/10.1038/s41433-022-01949-z


When to Use Regression Analysis (With Examples)

Regression analysis can be used to:

- estimate the effect of an exposure on a given outcome

- predict an outcome using known factors

- balance dissimilar groups

- model and replace missing data

- detect unusual records

In the text below, we will go through these points in greater detail and provide a real-world example of each.

1. Estimate the effect of an exposure on a given outcome

Regression can model linear and non-linear associations between an exposure (or treatment) and an outcome of interest. It can also simultaneously model the relationship between more than 1 exposure and an outcome, even when these exposures interact with each other.

Example: Exploring the relationship between Body Mass Index (BMI) and all-cause mortality

Berrington de Gonzalez et al. used a Cox regression model to estimate the association between BMI and mortality among 1.46 million white adults.

As expected, they found that the risk of mortality rises as BMI increases progressively above the normal range.

The takeaway message is that regression analysis enabled them to quantify that association while adjusting for smoking, alcohol consumption, physical activity, educational level and marital status — all potential confounders of the relationship between BMI and mortality.
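To make "adjusting for a confounder" concrete, here is a minimal Python sketch. It uses ordinary least squares on made-up, noise-free numbers, not the Cox model or the cohort data from the study, and the helper `fit_line` and all variable names are assumptions chosen for illustration. The adjusted slope is obtained by first removing the part of the exposure that the confounder explains, which yields the same exposure coefficient as a regression that includes both variables.

```python
def fit_line(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Synthetic, noise-free data (an assumption for illustration only).
confounder = [0, 0, 1, 1, 2, 2, 3, 3]
exposure = [0, 1, 1, 2, 2, 3, 3, 4]  # tends to rise with the confounder
# Built-in truth: the exposure adds 2 per unit, the confounder adds 3 per unit.
outcome = [1 + 2 * x + 3 * z for x, z in zip(exposure, confounder)]

# Unadjusted: the exposure slope absorbs part of the confounder's effect.
_, unadjusted = fit_line(exposure, outcome)

# Adjusted: strip out the part of the exposure explained by the confounder,
# then regress the outcome on what is left.
a, b = fit_line(confounder, exposure)
exposure_resid = [x - (a + b * z) for x, z in zip(exposure, confounder)]
_, adjusted = fit_line(exposure_resid, outcome)

print(f"unadjusted slope: {unadjusted:.2f}")
print(f"adjusted slope:   {adjusted:.2f}")
```

With these numbers the unadjusted slope comes out at 4.50, while the adjusted slope recovers the value of 2.00 that was built into the data.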

2. Predict an outcome using known factors

A regression model can also be used to predict things like stock prices, weather conditions, the risk of getting a disease, mortality, etc. based on a set of known predictors (also called independent variables).

Example: Predicting malaria in South Africa using seasonal climate data

Kim et al. used Poisson regression to develop a malaria prediction model using climate data such as temperature and precipitation in South Africa.

The model performed best with short-term predictions.

The important thing to notice here is how much complexity a regression model can handle. In this example, the model had to be flexible enough to account for non-linear and delayed associations between malaria transmission and climate factors.

This is a recurrent theme with predictive models: we start with a simple model, then keep adding complexity until we get a satisfactory result. This is why the process is called model building.

3. Balance dissimilar groups

Proving that a relationship exists between some independent variable X and an outcome Y does not mean much if this result cannot be generalized beyond your sample.

In order for your results to generalize well, the sample you’re working with has to resemble the population from which it was drawn. If it doesn’t, you can use regression to balance some important characteristics in the sample to make it representative of the population of interest.

Another case where you would want to balance dissimilar groups is a randomized controlled trial, where the objective is to compare the outcome between the group that received the intervention and another group that serves as the control (reference). For the comparison to make sense, the 2 groups must have similar characteristics.

Example: Evaluating how sleep quality is affected by sleep hygiene education and behavioral therapy

Nishinoue et al. conducted a randomized controlled trial to compare sleep quality between 2 groups of participants:

- The treatment group: Participants received sleep hygiene education and behavioral therapy

- The control group: Participants received sleep hygiene education only

A generalized linear model (a generalized form of linear regression) was used to:

- Evaluate how sleep quality changed between groups

- Adjust for age, gender, job title, smoking and drinking habits, body-mass index, and mental health to make the groups more comparable

4. Model and replace missing data

Modeling missing data is an important part of data analysis, especially when non-response rates are high (and therefore many values are missing), as in telephone surveys.

Before imputing missing data, you must first determine:

- How important the variables that have missing values are in your analysis

- The percentage of missing values

- Whether these values are missing at random

Based on this analysis, you can then choose to:

- Delete observations with missing values

- Replace missing data with the column’s mean or median

- Use a regression model to replace missing data

Example: Using multiple imputation to replace missing data in a medical study

Beynon et al. studied the prognostic role of alcohol and smoking at diagnosis of head and neck cancer.

Before building their statistical model, they noticed that 11 variables (including smoking status, alcohol intake, and other covariates) had missing values. They therefore used a technique called MICE (Multiple Imputation by Chained Equations), which runs regression models under the hood to replace missing values.
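The regression option can be sketched in a few lines of Python. This is a simplified, single-pass illustration on invented numbers, not the MICE procedure used in the study: MICE cycles through every incomplete variable, adds random variation to the imputed values, and produces several completed datasets. The variable names and the helper `fit_line` are assumptions.

```python
def fit_line(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical records: age is always observed, blood pressure is sometimes
# missing (None). The values are invented for illustration.
age = [30, 40, 50, 60, 70, 80]
blood_pressure = [110, None, 130, 140, None, 160]

# Fit the regression on complete cases only.
complete = [(a, bp) for a, bp in zip(age, blood_pressure) if bp is not None]
intercept, slope = fit_line([a for a, _ in complete], [bp for _, bp in complete])

# Replace each missing value with the model's prediction for that record.
imputed = [
    bp if bp is not None else intercept + slope * a
    for a, bp in zip(age, blood_pressure)
]
print(imputed)
```

Because every missing value is replaced by a point on the fitted line, this single-imputation approach understates the real variability in the data, which is one reason multiple imputation is often preferred.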

5. Detect unusual records

Regression models, alongside other statistical techniques, can be used to model what “normal data” should look like, the purpose being to detect values that deviate from this norm. These are referred to as “anomalies” or “outliers” in the data.

Most applications of anomaly detection are outside the healthcare domain. It is typically used to detect financial fraud, atypical online behavior of website visitors, anomalies in machine performance in a factory, etc.

Example: Detecting critical cases of patients undergoing heart surgery

Presbitero et al. used a time-varying autoregressive model (along with other statistical measures) to flag abnormal cases of patients undergoing heart surgery using data on their blood measurements.

Their ultimate goal is to prevent patient deaths by using this early-warning algorithm to enable early intervention.
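The general idea of flagging records that deviate from a fitted model can be sketched as follows. This is a minimal illustration using a straight-line fit on invented numbers, not the time-varying autoregressive model from the study. The cut-off of 2 residual standard deviations is an assumed rule of thumb, and `fit_line` and `flag_outliers` are hypothetical helper names.

```python
import math


def fit_line(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def flag_outliers(x, y, threshold=2.0):
    """Return indices whose residual exceeds `threshold` residual std devs."""
    intercept, slope = fit_line(x, y)
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    # Residual standard deviation with n - 2 degrees of freedom
    # (two fitted parameters: intercept and slope).
    s = math.sqrt(sum(r * r for r in residuals) / (len(x) - 2))
    return [i for i, r in enumerate(residuals) if abs(r) > threshold * s]


# Invented measurements that follow roughly y = 2x, with one unusual record.
x = list(range(1, 11))
y = [2.1, 3.9, 6.2, 7.8, 10.1, 11.9, 20.0, 16.1, 17.9, 20.2]

flagged = flag_outliers(x, y)
print(flagged)  # index 6 (x = 7) is the only record flagged
```

Note that the unusual record also inflates the residual standard deviation it is compared against, so real applications often use more robust estimates than this sketch does.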

Further reading

- Variables to Include in a Regression Model

- Understand Linear Regression Assumptions

- 7 Tricks to Get Statistically Significant p-Values

- How to Handle Missing Data in Practice: Guide for Beginners

Statistics By Jim

Making statistics intuitive

Making Predictions with Regression Analysis

By Jim Frost

If you were able to make predictions about something important to you, you’d probably love that, right? It’s even better if you know that your predictions are sound. In this post, I show how to use regression analysis to make predictions and determine whether they are both unbiased and precise.

You can use regression equations to make predictions. Regression equations are a crucial part of the statistical output after you fit a model. The coefficients in the equation define the relationship between each independent variable and the dependent variable. However, you can also enter values for the independent variables into the equation to predict the mean value of the dependent variable.

Related post: When Should I Use Regression Analysis?

The Regression Approach for Predictions

Using regression to make predictions doesn’t necessarily involve predicting the future. Instead, you predict the mean of the dependent variable given specific values of the independent variable(s). For our example, we’ll use one independent variable to predict the dependent variable. I measured both of these variables at the same point in time.

The general procedure for using regression to make good predictions is the following:

- Research the subject-area so you can build on the work of others. This research helps with the subsequent steps.

- Collect data for the relevant variables.

- Specify and assess your regression model.

- If you have a model that adequately fits the data, use it to make predictions.

While this process involves more work than simply guessing, it provides valuable benefits. With regression, we can evaluate the bias and precision of our predictions:

- Bias in a statistical model indicates that the predictions are systematically too high or too low.

- Precision represents how close the predictions are to the observed values.

When we use regression to make predictions, our goal is to produce predictions that are both correct on average and close to the real values. In other words, we need predictions that are both unbiased and precise.

Example Scenario for Regression Predictions

We’ll use a regression model to predict body fat percentage based on body mass index (BMI). I collected these data for a study with 92 middle school girls. The variables we measured include height, weight, and body fat measured by a Hologic DXA whole-body system. I’ve calculated the BMI using the height and weight measurements. DXA measurements of body fat percentage are considered to be among the best.

You can download the CSV data file: Predict_BMI.

Why might we want to use BMI to predict body fat percentage? It’s more expensive to obtain your body fat percentage through a direct measure like DXA. If you can use your BMI to predict your body fat percentage, that provides valuable information more easily and cheaply. Let’s see if BMI can produce good predictions!

Finding a Good Regression Model for Predictions

We have the data. Now, we need to determine whether there is a statistically significant relationship between the variables. Relationships, or correlations between variables, are crucial if we want to use the value of one variable to predict the value of another. We also need to evaluate the suitability of the regression model for making predictions.

We have only one independent variable (BMI), so we can use a fitted line plot to display its relationship with body fat percentage. The relationship between the variables is curvilinear. I’ll use a polynomial term to fit the curvature. In this case, I’ll include a quadratic (squared) term. The fitted line plot below suggests that this model fits the data.
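For readers who want to see what fitting a quadratic term involves, here is a minimal pure-Python sketch. The data are invented and noise-free, not the 92 measurements from the study, and the coefficients of the generating curve are arbitrary assumptions. The helpers `solve` and `fit_quadratic` are hypothetical names, and in practice a statistics package would do this step for you.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def fit_quadratic(x, y):
    """Least-squares fit of y = b0 + b1*x + b2*x**2; returns [b0, b1, b2]."""
    rows = [[1.0, xi, xi * xi] for xi in x]
    # Normal equations: (X'X) b = X'y
    XtX = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    Xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(3)]
    return solve(XtX, Xty)


# Invented data from an assumed flattening curve (illustration only).
bmi = [16, 18, 20, 22, 24, 26, 28, 30]
body_fat = [-30 + 4.0 * v - 0.06 * v * v for v in bmi]

b0, b1, b2 = fit_quadratic(bmi, body_fat)

# Enter a BMI value into the fitted equation to predict mean body fat.
predicted = b0 + b1 * 18 + b2 * 18 ** 2
print(f"squared-term coefficient: {b2:.3f}")
print(f"predicted body fat % at BMI 18: {predicted:.2f}")
```

A negative coefficient on the squared term is what produces a curve that flattens as BMI rises. Despite the squared term, this is still a linear regression, because the model is linear in its coefficients.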

Related post: Curve Fitting using Linear and Nonlinear Regression

This curvature is readily apparent because we have only one independent variable and we can graph the relationship. If your model has more than one independent variable, use separate scatterplots to display the association between each independent variable and the dependent variable so you can evaluate the nature of each relationship.

Assess the residual plots

You should also assess the residual plots. If you see patterns in the residual plots, you know that your model is incorrect and that you need to reevaluate it. Non-random residuals indicate that the predicted values are biased. You need to fix the model to produce unbiased predictions.

Learn how to choose the correct regression model.

The residual plots below also confirm the unbiased fit because the data points fall randomly around zero and follow a normal distribution.
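To show what a pattern in the residuals looks like numerically, the sketch below deliberately fits a straight line to curved data. The numbers are invented for illustration and `fit_line` is a hypothetical helper, so treat this as a toy demonstration rather than a diagnostic procedure.

```python
def fit_line(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Curved data (y = x squared), fitted with a model that ignores the curvature.
x = [1, 2, 3, 4, 5]
y = [xi ** 2 for xi in x]

intercept, slope = fit_line(x, y)
residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
print(residuals)  # [2.0, -1.0, -2.0, -1.0, 2.0]
```

The residuals are positive at both ends and negative in the middle. That systematic pattern means the straight-line model predicts too low at the extremes and too high in between, which is the kind of bias a residual plot is meant to reveal.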

Interpret the regression output

In the statistical output below, the p-values indicate that both the linear and squared terms are statistically significant. Based on all of this information, we have a model that provides a statistically significant and unbiased fit to these data. We have a valid regression model. However, there are additional issues we must consider before we can use this model to make predictions.

As an aside, the curved relationship is interesting. The flattening curve indicates that higher BMI values are associated with smaller increases in body fat percentage.

Other Considerations for Valid Predictions

Precision of the predictions

Previously, we established that our regression model provides unbiased predictions of the observed values. That’s good. However, it doesn’t address the precision of those predictions. Precision measures how close the predictions are to the observed values. We want the predictions to be both unbiased and close to the actual values. Predictions are precise when the observed values cluster close to the predicted values.

Regression predictions are for the mean of the dependent variable. If you think of any mean, you know that there is variation around that mean. The same applies to the predicted mean of the dependent variable. In the fitted line plot, the regression line is nicely in the center of the data points. However, there is a spread of data points around the line. We need to quantify that spread to know how close the predictions are to the observed values. If the spread is too large, the predictions won’t provide useful information.

Later, I’ll generate predictions and show you how to assess the precision.

Related post: Understand Precision in Applied Regression to Avoid Costly Mistakes

Goodness-of-Fit Measures

Goodness-of-fit measures, like R-squared, assess the scatter of the data points around the fitted values. The R-squared for our model is 76.1%, which is good but not great. For a given dataset, higher R-squared values represent predictions that are more precise. However, R-squared doesn’t tell us directly how precise the predictions are in the units of the dependent variable. We can use the standard error of the regression (S) to assess the precision in this manner. However, for this post, I’ll use prediction intervals to evaluate precision.
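As a rough illustration of how these two measures are computed, here is a short Python sketch on a tiny invented dataset (not the body fat data). It uses the standard definitions: R-squared is one minus the ratio of the residual sum of squares to the total sum of squares, and S is the square root of the residual sum of squares divided by the residual degrees of freedom. The helper `fit_line` and the variable names are assumptions.

```python
import math


def fit_line(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Tiny invented dataset for illustration.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

intercept, slope = fit_line(x, y)
fitted = [intercept + slope * xi for xi in x]

mean_y = sum(y) / len(y)
ss_residual = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
ss_total = sum((yi - mean_y) ** 2 for yi in y)

# R-squared: share of the variation in y that the model accounts for.
r_squared = 1 - ss_residual / ss_total

# S: typical distance of observations from the fitted line, in the units
# of y. Two parameters were estimated, hence n - 2 degrees of freedom.
s = math.sqrt(ss_residual / (len(y) - 2))

print(f"R-squared: {r_squared:.1%}")
print(f"S: {s:.3f}")
```

Here R-squared is 60.0% and S is about 0.894. Only S is expressed in the units of the dependent variable, which is why it speaks more directly to precision.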

Related post: Standard Error of the Regression vs. R-squared

New Observations versus Data Used to Fit the Model