How to Insert the Null Hypothesis & Alternate Hypothesis Symbols in Microsoft Word

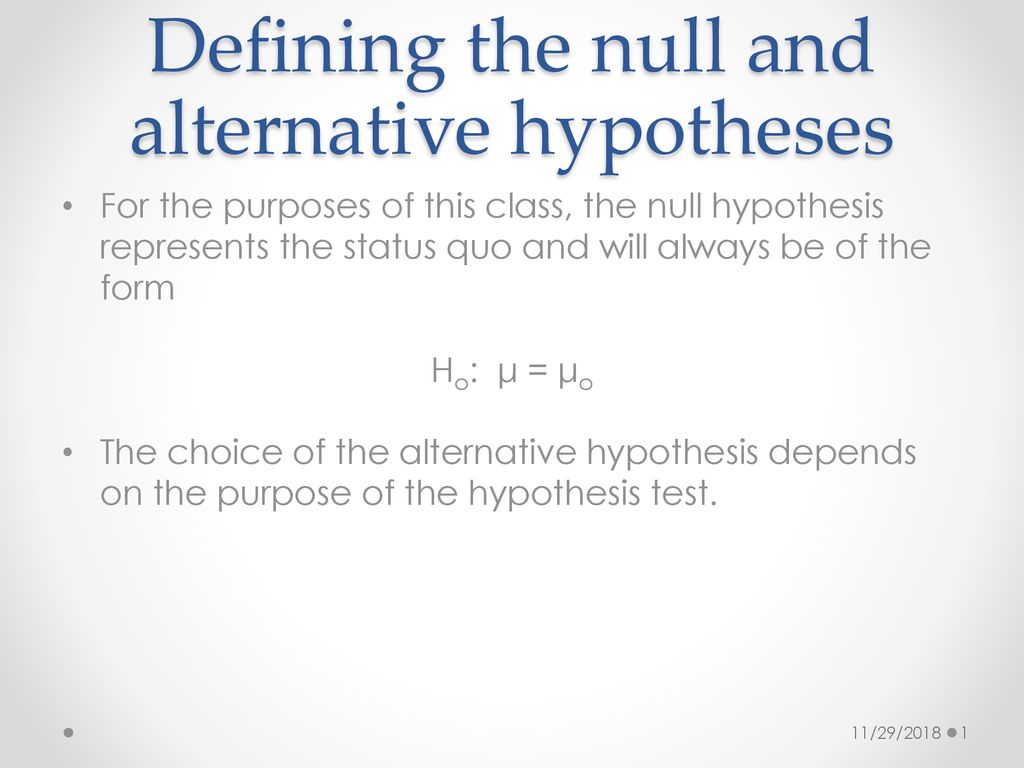

Although the symbols for the null hypothesis and alternative hypothesis (sometimes called the alternate hypothesis) do not exist as special characters in Microsoft Word, they are easily created with subscripts. The alternative hypothesis is represented by a capital "H" followed by a subscript "1," although some researchers prefer an "a." The null hypothesis is represented by a capital "H" followed by a subscript "0" or "o."

The accepted practice in the scientific community is to use two hypotheses when testing the relationship between two events. The alternative hypothesis states that the two events are related. However, scientists have found that testing for a direct correlation can cause bias in the testing procedure. To avoid this bias, scientists test a null hypothesis that states there is no correlation. By rejecting the null hypothesis, you lend support to the correlation stated in the alternative hypothesis. A similar approach is used in the United States legal system, where a defendant is found "not guilty" rather than "innocent."


Open your document in Microsoft Word and click wherever you want the hypothesis symbols to appear.


Type a capital "H" on your keyboard.

Click the subscript button, located in the "Font" group of the "Home" tab. This button's icon looks like an "x" with a subscript "2." Alternatively, hold the "Ctrl" key and press "=".

Type a "0" to create a null hypothesis symbol or "1" to create an alternative hypothesis symbol. Alternatively, type an "o" or "a" to represent the null and alternative hypotheses, respectively, although these symbols are not as frequently used.

Press the subscript button again to exit this formatting mode.


How to Insert the Null Hypothesis Symbol in Microsoft Word

By filonia lechat / in computers & electronics.

Microsoft Word can be used to type anything from established facts to unproven conjectures, and when you are writing scientific material, you may need characters you cannot find on the keyboard. For superscript and subscript characters, such as those in the null hypothesis symbol, Word offers a quick way to move standard text typed on the page into its proper position.

Open Microsoft Word. To insert the null hypothesis symbol into an existing document, click the "File" tab. Click "Open." Browse to the Word file, double-click the name and scroll to the place in the document to insert the symbol.


Press and hold down the "Shift" key while typing the letter "H" to get a capital "H." Release the "Shift" key.

Type the number zero (0). Make sure to type a zero and not a capital "O."

Highlight the zero. Right-click the highlight and select "Font."


Check the "Subscript" box near the bottom of the "Font" window. Click the "OK" button. The zero is reduced to a subscript, completing the null hypothesis symbol.

- Null hypothesis

by Marco Taboga, PhD

In a test of hypothesis, a sample of data is used to decide whether to reject or not to reject a hypothesis about the probability distribution from which the sample was extracted.

The hypothesis is called the null hypothesis, or simply "the null".

Table of contents

- The null is like the defendant in a criminal trial
- How is the null hypothesis tested
- Example 1 - Proportion of defective items
- Measurement
- Test statistic
- Critical region
- Interpretation
- Example 2 - Reliability of a production plant
- Rejection and failure to reject
- Not rejecting and accepting are not the same thing
- Failure to reject can be due to lack of power
- Rejections are easier to interpret, but be careful
- Takeaways - how to (and not to) formulate a null hypothesis
- More examples
- More details
- Best practices in science
- Keep reading the glossary

Formulating null hypotheses and subjecting them to statistical testing is one of the workhorses of the scientific method.

Scientists in all fields make conjectures about the phenomena they study, translate them into null hypotheses and gather data to test them.

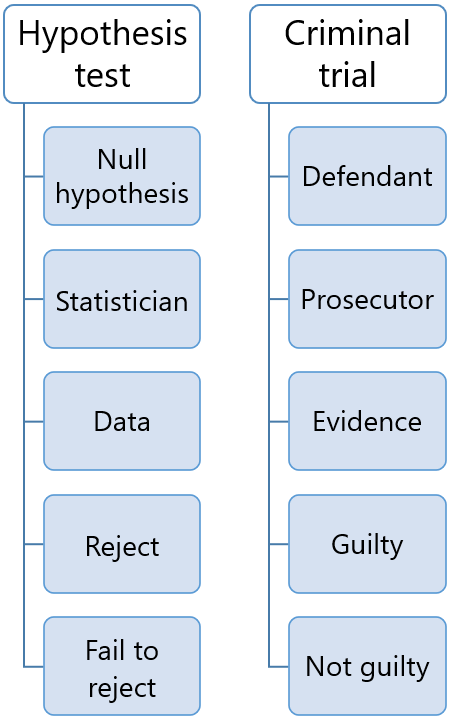

This process resembles a trial:

the defendant (the null hypothesis) is accused of being guilty (wrong);

evidence (data) is gathered in order to prove the defendant guilty (reject the null);

if there is evidence beyond any reasonable doubt, the defendant is found guilty (the null is rejected);

otherwise, the defendant is found not guilty (the null is not rejected).

Keep this analogy in mind because it helps to better understand statistical tests, their limitations, use and misuse, and frequent misinterpretation.

Before collecting the data:

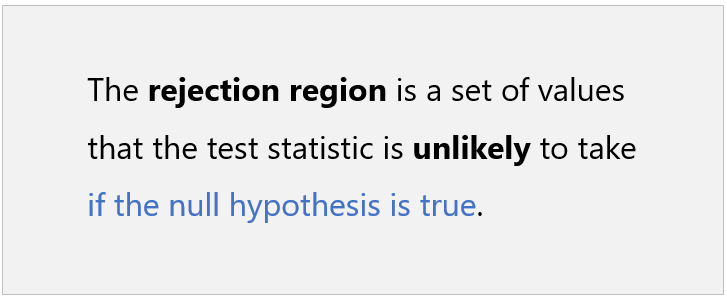

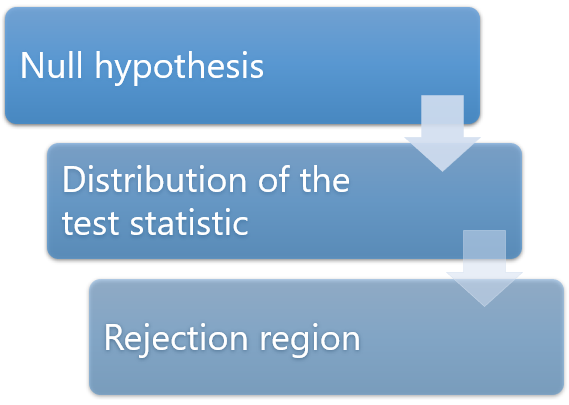

we decide how to summarize the relevant characteristics of the sample data in a single number, the so-called test statistic;

we derive the probability distribution of the test statistic under the hypothesis that the null is true (the data is regarded as random; therefore, the test statistic is a random variable);

we decide what probability of incorrectly rejecting the null we are willing to tolerate (the level of significance, or size of the test); the level of significance is typically a small number, such as 5% or 1%;

we choose one or more intervals of values (collectively called rejection region) such that the probability that the test statistic falls within these intervals is equal to the desired level of significance; the rejection region is often a tail of the distribution of the test statistic (one-tailed test) or the union of the left and right tails (two-tailed test).

Then, the data is collected and used to compute the value of the test statistic.

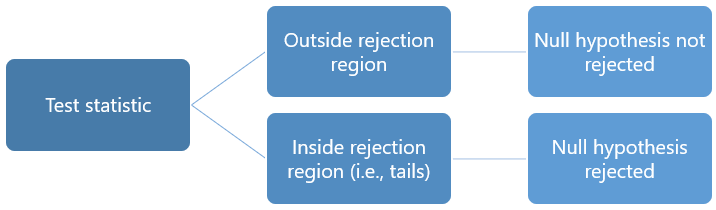

A decision is taken as follows:

if the test statistic falls within the rejection region, then the null hypothesis is rejected;

otherwise, it is not rejected.

We now give two examples of practical problems that lead us to formulate and test a null hypothesis.

A new method is proposed to produce light bulbs.

The proponents claim that it produces fewer defective bulbs than the method currently in use.

To check the claim, we can set up a statistical test as follows.

We keep the light bulbs on for 10 consecutive days, and then we record whether they are still working at the end of the test period.

The null hypothesis is that the probability that a light bulb produced with the new method is still working at the end of the test period is the same as that of a light bulb produced with the old method.

100 light bulbs are tested:

50 of them are produced with the new method (group A)

the remaining 50 are produced with the old method (group B).

The final data comprises 100 observations of:

an indicator variable which is equal to 1 if the light bulb is still working at the end of the test period and 0 otherwise;

a categorical variable that records the group (A or B) to which each light bulb belongs.

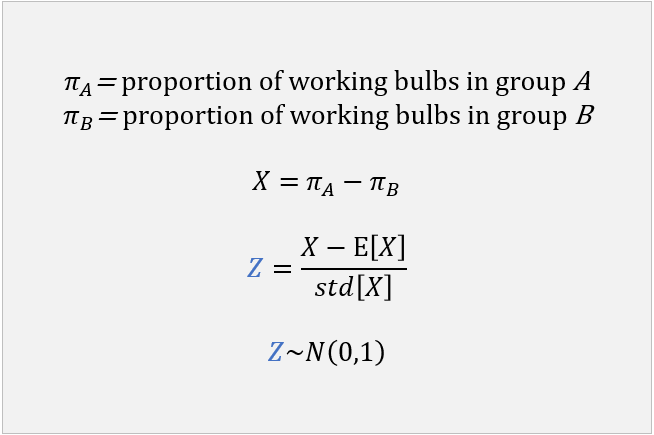

We use the data to compute the proportions of working light bulbs in groups A and B.

The proportions are estimates of the probabilities of not being defective, which are equal for the two groups under the null hypothesis.

We then compute a z-statistic by:

taking the difference between the proportion in group A and the proportion in group B;

standardizing the difference:

we subtract the expected value (which is zero under the null hypothesis);

we divide by the standard deviation (it can be derived analytically).

The distribution of the z-statistic can be approximated by a standard normal distribution.

We decide that the level of significance must be 5%. In other words, we are going to tolerate a 5% probability of incorrectly rejecting the null hypothesis.

The critical region is the right 5%-tail of the normal distribution, that is, the set of all values greater than 1.645 (see the glossary entry on critical values if you are wondering how this value was obtained).

If the test statistic is greater than 1.645, then the null hypothesis is rejected; otherwise, it is not rejected.

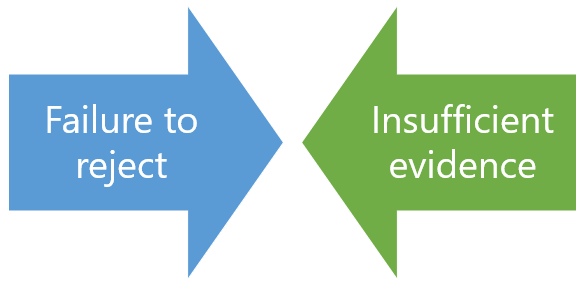

A rejection is interpreted as significant evidence that the new production method produces fewer defective items; failure to reject is interpreted as insufficient evidence that the new method is better.
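The light-bulb test can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the counts of working bulbs (48 of 50 and 40 of 50) are hypothetical, the helper name `two_proportion_z` is ours, and the standard deviation uses the usual pooled-proportion formula, which is one way of deriving it analytically.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z(working_a, n_a, working_b, n_b):
    """z-statistic for the null that both groups share the same probability."""
    p_a = working_a / n_a
    p_b = working_b / n_b
    # Pooled proportion: the common probability estimated under the null.
    pooled = (working_a + working_b) / (n_a + n_b)
    # Standard deviation of the difference under the null (pooled form, assumed).
    std = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    # The expected value of the difference is zero under the null.
    return (p_a - p_b - 0.0) / std


# Hypothetical data, not taken from the text.
z = two_proportion_z(48, 50, 40, 50)
critical_value = NormalDist().inv_cdf(0.95)  # right 5% tail of N(0, 1)
reject_null = z > critical_value
print(f"z = {z:.3f}, critical value = {critical_value:.3f}, reject: {reject_null}")
```

The critical value is computed from the standard normal quantile function rather than hard-coded, so changing the level of significance only requires changing the argument of `inv_cdf`.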

A production plant incurs high costs when production needs to be halted because some machinery fails.

The plant manager has decided that he is not willing to tolerate more than one halt per year on average.

If the expected number of halts per year is greater than 1, he will make new investments in order to improve the reliability of the plant.

A statistical test is set up as follows.

The reliability of the plant is measured by the number of halts.

The null hypothesis is that the number of halts in a year has a Poisson distribution with expected value equal to 1 (using the Poisson distribution is common in reliability testing).

The manager cannot wait more than one year before taking a decision.

There will be a single datum at his disposal: the number of halts observed during one year.

The number of halts is used as a test statistic. By assumption, it has a Poisson distribution under the null hypothesis.

The manager decides that the probability of incorrectly rejecting the null can be at most 10%.

A Poisson random variable with expected value equal to 1 takes values:

larger than 1 with probability 26.42%;

larger than 2 with probability 8.03%.

Therefore, it is decided that the critical region will be the set of all values greater than or equal to 3.

If the test statistic is greater than or equal to 3, then the null is rejected; otherwise, it is not rejected.

A rejection is interpreted as significant evidence that the production plant is not reliable enough (the average number of halts per year is significantly larger than tolerated).

Failure to reject is interpreted as insufficient evidence that the plant is unreliable.
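The tail probabilities and the critical region of the plant example can be checked numerically. The sketch below uses only the Poisson probability mass function; the function names are ours, and the loop simply searches for the smallest threshold whose rejection probability under the null does not exceed the 10% chosen by the manager.

```python
from math import exp, factorial


def poisson_pmf(k, lam):
    """P(X = k) for a Poisson variable with expected value lam."""
    return lam ** k * exp(-lam) / factorial(k)


def poisson_tail(k, lam):
    """P(X > k) for a Poisson variable with expected value lam."""
    return 1.0 - sum(poisson_pmf(i, lam) for i in range(k + 1))


lam = 1.0     # expected number of halts under the null
alpha = 0.10  # maximum tolerated probability of incorrectly rejecting

p_gt_1 = poisson_tail(1, lam)
p_gt_2 = poisson_tail(2, lam)

# Smallest threshold c such that P(X >= c) = P(X > c - 1) <= alpha.
threshold = 1
while poisson_tail(threshold - 1, lam) > alpha:
    threshold += 1

print(f"P(X > 1) = {p_gt_1:.4f}, P(X > 2) = {p_gt_2:.4f}, reject if X >= {threshold}")
```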

This section discusses the main problems that arise in the interpretation of the outcome of a statistical test (reject / not reject).

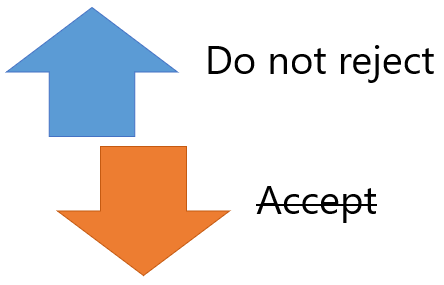

When the test statistic does not fall within the critical region, then we do not reject the null hypothesis.

Does this mean that we accept the null? Not really.

In general, failure to reject does not constitute, per se, strong evidence that the null hypothesis is true.

Remember the analogy between hypothesis testing and a criminal trial. In a trial, when the defendant is declared not guilty, this does not mean that the defendant is innocent. It only means that there was not enough evidence (not beyond any reasonable doubt) against the defendant.

In turn, lack of evidence can be due:

either to the fact that the defendant is innocent;

or to the fact that the prosecution has not been able to provide enough evidence against the defendant, even if the latter is guilty.

This is the very reason why courts do not declare defendants innocent, but they use the locution "not guilty".

In a similar fashion, statisticians do not say that the null hypothesis has been accepted, but they say that it has not been rejected.

To better understand why failure to reject does not in general constitute strong evidence that the null hypothesis is true, we need to use the concept of statistical power.

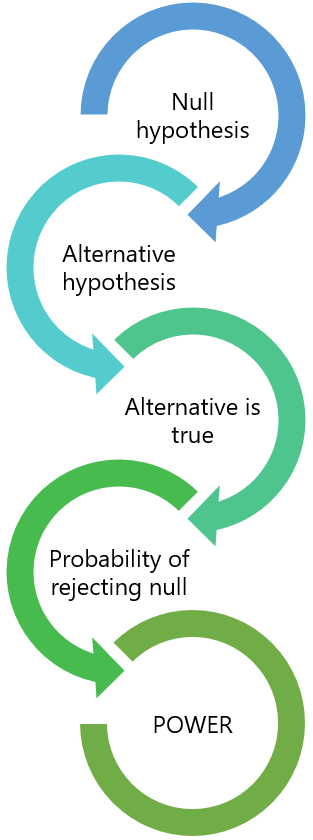

The power of a test is the probability (calculated ex-ante, i.e., before observing the data) that the null will be rejected when another hypothesis (called the alternative hypothesis) is true.

Let's consider the first of the two examples above (the production of light bulbs).

In that example, the null hypothesis is: the probability that a light bulb is defective does not decrease after introducing a new production method.

Let's make the alternative hypothesis that the probability of being defective is 1% smaller after changing the production process (assume that a 1% decrease is considered a meaningful improvement by engineers).

What is the ex-ante probability of rejecting the null if the alternative hypothesis is true?

If this probability (the power of the test) is small, then it is very likely that we will not reject the null even if it is wrong.

If we use the analogy with criminal trials, low power means that most likely the prosecution will not be able to provide sufficient evidence, even if the defendant is guilty.

Thus, in the case of lack of power, failure to reject is almost meaningless (it was anyway highly likely).

This is why, before performing a test, it is good statistical practice to compute its power against a relevant alternative.

If the power is found to be too small, there are usually remedies. In particular, statistical power can usually be increased by increasing the sample size (see, e.g., the lecture on hypothesis tests about the mean).
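To make the link between power and sample size concrete, here is a rough Python sketch for the light-bulb test. Everything numeric in it is an assumption made for illustration: the old method is supposed to give a working bulb with probability 0.90, the alternative is a one-percentage-point improvement (0.91), and the power is computed with a normal approximation in which the pooled proportion is replaced by the average of the two true probabilities.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(p_new, p_old, n, alpha=0.05):
    """Approximate power of the one-tailed two-proportion z-test (n bulbs per group)."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)
    # Standard deviation used by the test statistic (pooled, as under the null).
    pooled = (p_new + p_old) / 2
    se_null = sqrt(pooled * (1 - pooled) * 2 / n)
    # Standard deviation of the difference in proportions under the alternative.
    se_alt = sqrt(p_new * (1 - p_new) / n + p_old * (1 - p_old) / n)
    # We reject when difference / se_null > z_crit; under the alternative the
    # difference is approximately normal with mean p_new - p_old and sd se_alt.
    return 1 - std_normal.cdf((z_crit * se_null - (p_new - p_old)) / se_alt)


power_small = approx_power(0.91, 0.90, n=50)    # the sample size of Example 1
power_large = approx_power(0.91, 0.90, n=5000)  # a much larger hypothetical sample
print(f"power with n=50: {power_small:.3f}, with n=5000: {power_large:.3f}")
```

Under these assumptions the test with 50 bulbs per group has very little power, so a failure to reject would say almost nothing about the new method.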

As we have explained above, interpreting a failure to reject the null hypothesis is not always straightforward. Instead, interpreting a rejection is somewhat easier.

When we reject the null, we know that the data has provided a lot of evidence against the null. In other words, data like the data we have observed would be unlikely (how unlikely depends on the size of the test) if the null were true.

There is an important caveat though. The null hypothesis is often made up of several assumptions, including:

the main assumption (the one we are testing);

other assumptions (e.g., technical assumptions) that we need to make in order to set up the hypothesis test.

For instance, in Example 2 above (reliability of a production plant), the main assumption is that the expected number of production halts per year is equal to 1. But there is also a technical assumption: the number of production halts has a Poisson distribution.

It must be kept in mind that a rejection is always a joint rejection of the main assumption and all the other assumptions.

Therefore, we should always ask ourselves whether the null has been rejected because the main assumption is wrong or because the other assumptions are violated.

In the case of Example 2 above, is a rejection of the null due to the fact that the expected number of halts is greater than 1 or is it due to the fact that the distribution of the number of halts is very different from a Poisson distribution?

When we suspect that a rejection is due to the inappropriateness of some technical assumption (e.g., assuming a Poisson distribution in the example), we say that the rejection could be due to misspecification of the model.

The right thing to do when this kind of suspicion arises is to conduct so-called robustness checks, that is, to change the technical assumptions and carry out the test again.

In our example, we could re-run the test by assuming a different probability distribution for the number of halts (e.g., a negative binomial or a compound Poisson; do not worry if you have never heard about these distributions).

If we keep obtaining a rejection of the null even after changing the technical assumptions several times, then we say that our rejection is robust to several different specifications of the model.

What are the main practical implications of everything we have said thus far? How does the theory above help us to set up and test a null hypothesis?

What we said can be summarized in the following guiding principles:

A test of hypothesis is like a criminal trial and you are the prosecutor. You want to find evidence that the defendant (the null hypothesis) is guilty. Your job is not to prove that the defendant is innocent. If you find yourself hoping that the defendant is found not guilty (i.e., the null is not rejected) then something is wrong with the way you set up the test. Remember: you are the prosecutor.

Compute the power of your test against one or more relevant alternative hypotheses. Do not run a test if you know ex-ante that it is unlikely to reject the null when the alternative hypothesis is true.

Beware of technical assumptions that you add to the main assumption you want to test. Make robustness checks in order to verify that the outcome of the test is not biased by model misspecification.

More examples of null hypotheses and how to test them can be found in other lectures. The null hypotheses covered there include:

- The mean of a normal distribution is equal to a certain value.
- The variance of a normal distribution is equal to a certain value.
- A vector of parameters estimated by MLE satisfies a set of linear or non-linear restrictions.
- A regression coefficient is equal to a certain value.

The lecture on Hypothesis testing provides a more detailed mathematical treatment of null hypotheses and how they are tested.

This lecture on the null hypothesis was featured in Stanford University's Best practices in science.


How to cite

Please cite as:

Taboga, Marco (2021). "Null hypothesis", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/glossary/null-hypothesis.


Frequently asked questions

What symbols are used to represent null hypotheses?

The null hypothesis is often abbreviated as H₀. When the null hypothesis is written using mathematical symbols, it always includes an equality symbol (usually =, but sometimes ≥ or ≤).

Frequently asked questions: Statistics

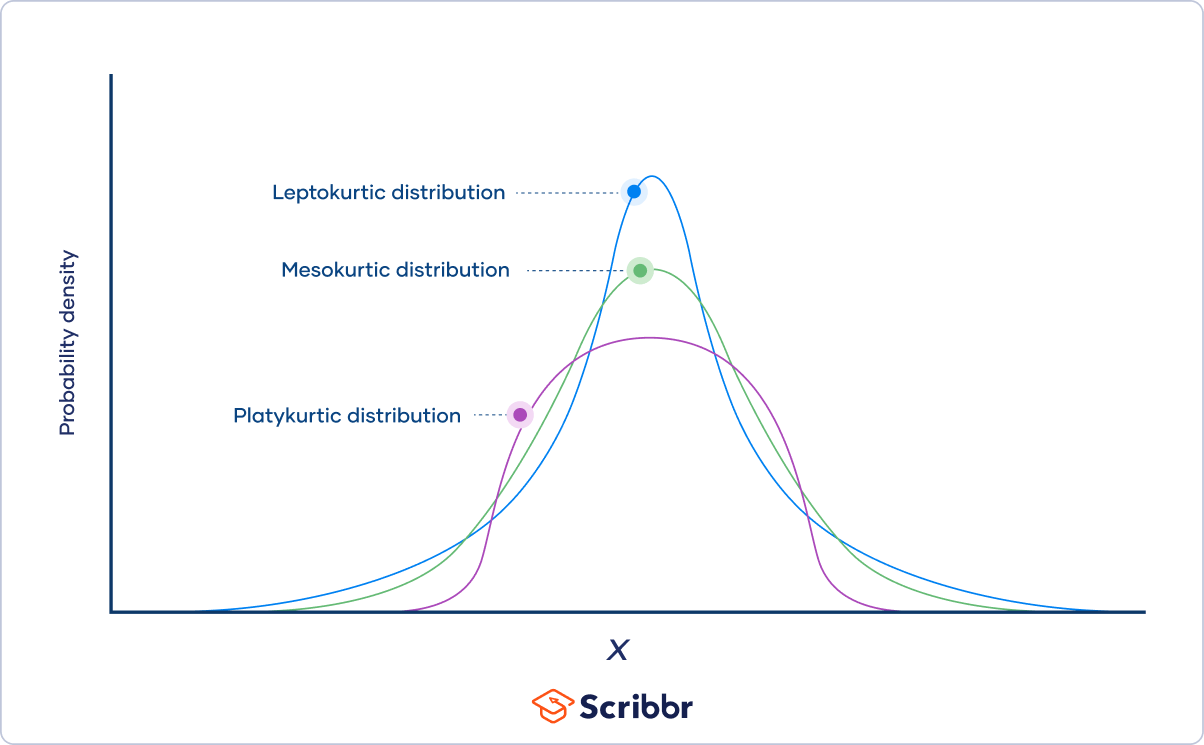

As the degrees of freedom increase, Student’s t distribution becomes less leptokurtic, meaning that the probability of extreme values decreases. The distribution becomes more and more similar to a standard normal distribution.

The three categories of kurtosis are:

- Mesokurtosis: An excess kurtosis of 0. Normal distributions are mesokurtic.

- Platykurtosis: A negative excess kurtosis. Platykurtic distributions are thin-tailed, meaning that they have few outliers.

- Leptokurtosis: A positive excess kurtosis. Leptokurtic distributions are fat-tailed, meaning that they have many outliers.

Probability distributions belong to two broad categories: discrete probability distributions and continuous probability distributions. Within each category, there are many types of probability distributions.

Probability is the relative frequency over an infinite number of trials.

For example, the probability of a coin landing on heads is .5, meaning that if you flip the coin an infinite number of times, it will land on heads half the time.

Since doing something an infinite number of times is impossible, relative frequency is often used as an estimate of probability. If you flip a coin 1000 times and get 507 heads, the relative frequency, .507, is a good estimate of the probability.

Categorical variables can be described by a frequency distribution. Quantitative variables can also be described by a frequency distribution, but first they need to be grouped into interval classes.

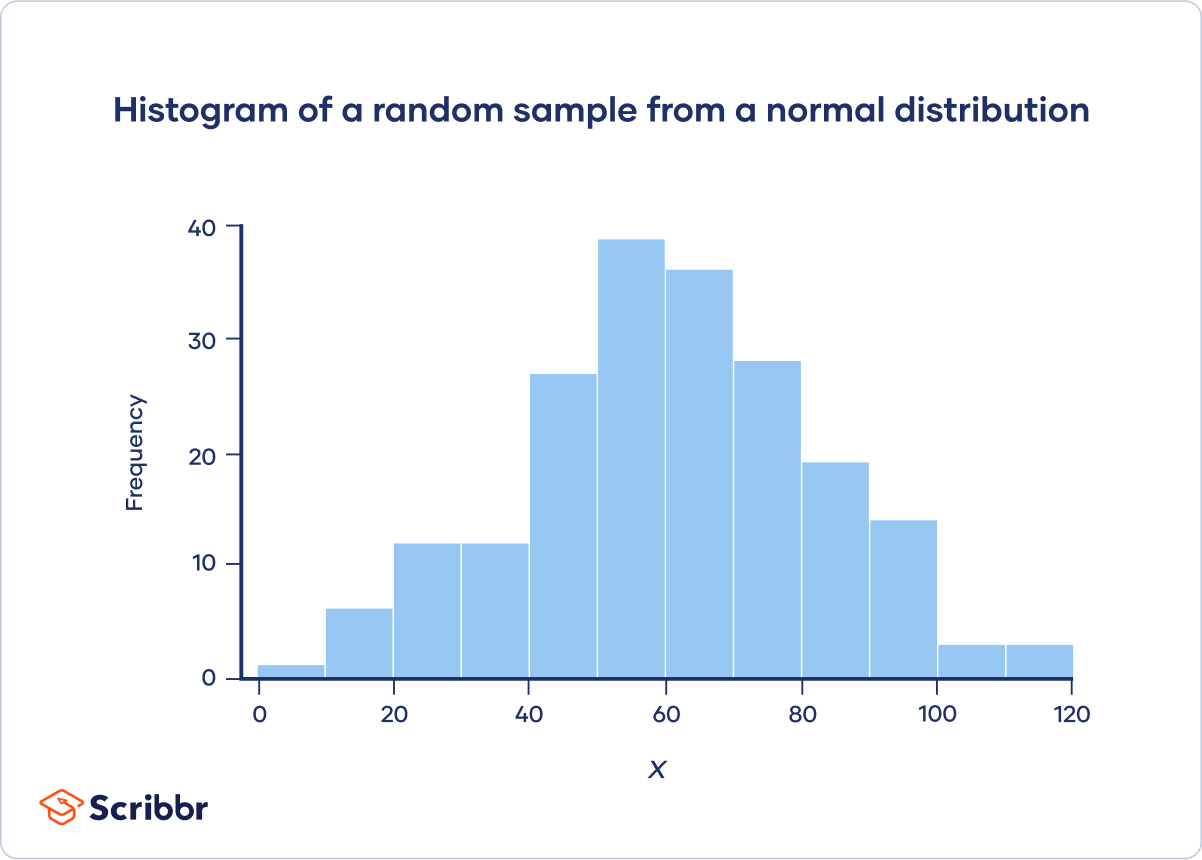

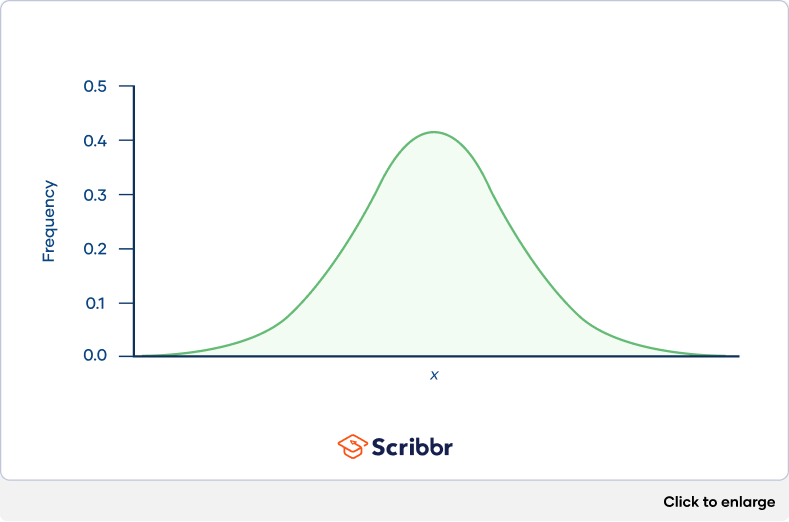

A histogram is an effective way to tell if a frequency distribution appears to have a normal distribution.

Plot a histogram and look at the shape of the bars. If the bars roughly follow a symmetrical bell or hill shape, like the example below, then the distribution is approximately normally distributed.

You can use the CHISQ.INV.RT() function to find a chi-square critical value in Excel.

For example, to calculate the chi-square critical value for a test with df = 22 and α = .05, click any blank cell and type:

=CHISQ.INV.RT(0.05,22)

You can use the qchisq() function to find a chi-square critical value in R.

For example, to calculate the chi-square critical value for a test with df = 22 and α = .05:

qchisq(p = .05, df = 22, lower.tail = FALSE)

You can use the chisq.test() function to perform a chi-square test of independence in R. Give the contingency table as a matrix for the “x” argument. For example:

m = matrix(data = c(89, 84, 86, 9, 8, 24), nrow = 3, ncol = 2)

chisq.test(x = m)

You can use the CHISQ.TEST() function to perform a chi-square test of independence in Excel. It takes two arguments, CHISQ.TEST(observed_range, expected_range), and returns the p value.

Chi-square goodness of fit tests are often used in genetics. One common application is to check if two genes are linked (i.e., if their assortment is not independent). When genes are linked, the allele inherited for one gene affects the allele inherited for another gene.

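Suppose that you want to know if the genes for pea texture (R = round, r = wrinkled) and color (Y = yellow, y = green) are linked. You perform a dihybrid cross between two heterozygous (RY / ry) pea plants. The hypotheses you’re testing with your experiment are:

- Null hypothesis (H₀): The population of offspring have an equal probability of inheriting all possible genotypic combinations. This would suggest that the genes are unlinked.

- Alternative hypothesis (Hₐ): The population of offspring do not have an equal probability of inheriting all possible genotypic combinations. This would suggest that the genes are linked.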

You observe 100 peas:

- 78 round and yellow peas

- 6 round and green peas

- 4 wrinkled and yellow peas

- 12 wrinkled and green peas

Step 1: Calculate the expected frequencies

To calculate the expected values, you can make a Punnett square. If the two genes are unlinked, the probability of each genotypic combination is equal.

| | RY | ry | Ry | rY |
|---|---|---|---|---|
| RY | RRYY | RrYy | RRYy | RrYY |
| ry | RrYy | rryy | Rryy | rrYy |
| Ry | RRYy | Rryy | RRyy | RrYy |
| rY | RrYY | rrYy | RrYy | rrYY |

The expected phenotypic ratios are therefore 9 round and yellow: 3 round and green: 3 wrinkled and yellow: 1 wrinkled and green.

From this, you can calculate the expected phenotypic frequencies for 100 peas:

| Phenotype | Observed | Expected |
|---|---|---|
| Round and yellow | 78 | 100 * (9/16) = 56.25 |
| Round and green | 6 | 100 * (3/16) = 18.75 |
| Wrinkled and yellow | 4 | 100 * (3/16) = 18.75 |
| Wrinkled and green | 12 | 100 * (1/16) = 6.25 |

Step 2: Calculate chi-square

| Phenotype | Observed (O) | Expected (E) | O − E | (O − E)² | (O − E)² / E |
|---|---|---|---|---|---|
| Round and yellow | 78 | 56.25 | 21.75 | 473.06 | 8.41 |
| Round and green | 6 | 18.75 | −12.75 | 162.56 | 8.67 |
| Wrinkled and yellow | 4 | 18.75 | −14.75 | 217.56 | 11.60 |
| Wrinkled and green | 12 | 6.25 | 5.75 | 33.06 | 5.29 |

Χ² = 8.41 + 8.67 + 11.60 + 5.29 = 33.97

Step 3: Find the critical chi-square value

Since there are four groups (round and yellow, round and green, wrinkled and yellow, wrinkled and green), there are three degrees of freedom.

For a test of significance at α = .05 and df = 3, the Χ² critical value is 7.82.

Step 4: Compare the chi-square value to the critical value

Χ² = 33.97

Critical value = 7.82

The Χ² value is greater than the critical value.

Step 5: Decide whether to reject the null hypothesis

The Χ² value is greater than the critical value, so we reject the null hypothesis that the population of offspring have an equal probability of inheriting all possible genotypic combinations. There is a significant difference between the observed and expected genotypic frequencies (p < .05).

The data supports the alternative hypothesis that the offspring do not have an equal probability of inheriting all possible genotypic combinations, which suggests that the genes are linked.
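The five steps above can be reproduced with a short Python sketch that uses only the standard library. The observed counts and the 9:3:3:1 ratio come from the example; the helper `chi2_sf_df3` is our own name for the closed-form tail probability of a chi-square distribution, and that closed form is valid only for three degrees of freedom.

```python
from math import erfc, exp, pi, sqrt

observed = {"round yellow": 78, "round green": 6,
            "wrinkled yellow": 4, "wrinkled green": 12}
ratio = {"round yellow": 9, "round green": 3,
         "wrinkled yellow": 3, "wrinkled green": 1}

# Step 1: expected frequencies under the 9:3:3:1 ratio.
total = sum(observed.values())
expected = {name: total * parts / 16 for name, parts in ratio.items()}

# Step 2: chi-square statistic, the sum of (O - E)^2 / E over the groups.
chi_square = sum((observed[k] - expected[k]) ** 2 / expected[k] for k in observed)


def chi2_sf_df3(x):
    """P(X > x) for a chi-square variable with exactly 3 degrees of freedom."""
    return erfc(sqrt(x / 2)) + sqrt(2 * x / pi) * exp(-x / 2)


# Steps 3 to 5: compare with the critical value for alpha = .05 and df = 3.
critical_value = 7.82
p_value = chi2_sf_df3(chi_square)
reject_null = chi_square > critical_value
print(f"chi-square = {chi_square:.2f}, p = {p_value:.2g}, reject: {reject_null}")
```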

You can use the chisq.test() function to perform a chi-square goodness of fit test in R. Give the observed values in the “x” argument, give the expected values in the “p” argument, and set “rescale.p” to true. For example:

chisq.test(x = c(22,30,23), p = c(25,25,25), rescale.p = TRUE)

You can use the CHISQ.TEST() function to perform a chi-square goodness of fit test in Excel. It takes two arguments, CHISQ.TEST(observed_range, expected_range), and returns the p value.

Both correlations and chi-square tests can test for relationships between two variables. However, a correlation is used when you have two quantitative variables and a chi-square test of independence is used when you have two categorical variables.

Both chi-square tests and t tests can test for differences between two groups. However, a t test is used when you have a dependent quantitative variable and an independent categorical variable (with two groups). A chi-square test of independence is used when you have two categorical variables.

The two main chi-square tests are the chi-square goodness of fit test and the chi-square test of independence.

A chi-square distribution is a continuous probability distribution. The shape of a chi-square distribution depends on its degrees of freedom, k. The mean of a chi-square distribution is equal to its degrees of freedom (k) and the variance is 2k. The range is 0 to ∞.

As the degrees of freedom (k) increase, the chi-square distribution goes from a downward curve to a hump shape. As the degrees of freedom increase further, the hump goes from being strongly right-skewed to being approximately normal.

To find the quartiles of a probability distribution, you can use the distribution’s quantile function.

You can use the quantile() function to find quartiles in R. If your data is called “data”, then “quantile(data, probs=c(.25,.5,.75), type=1)” will return the three quartiles.

You can use the QUARTILE() function to find quartiles in Excel. If your data is in column A, then click any blank cell and type “=QUARTILE(A:A,1)” for the first quartile, “=QUARTILE(A:A,2)” for the second quartile, and “=QUARTILE(A:A,3)” for the third quartile.
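For comparison, a Python sketch of the same calculation is shown below. The data values are hypothetical. `statistics.quantiles` is part of the standard library; its "inclusive" method should correspond to the interpolation used by Excel's QUARTILE(), while R's type=1 follows a different convention, so results can differ slightly between tools on other data sets.

```python
from statistics import quantiles

# Hypothetical data set, not taken from the text.
data = [2, 4, 4, 5, 7, 9, 10, 12, 15]

# n=4 splits the data into four groups and returns the three cut points.
first, second, third = quantiles(data, n=4, method="inclusive")
print(f"Q1 = {first}, Q2 (median) = {second}, Q3 = {third}")
```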

You can use the PEARSON() function to calculate the Pearson correlation coefficient in Excel. If your variables are in columns A and B, then click any blank cell and type “=PEARSON(A:A,B:B)”.

There is no Excel function to directly test the significance of the correlation.

You can use the cor() function to calculate the Pearson correlation coefficient in R. To test the significance of the correlation, you can use the cor.test() function.

You should use the Pearson correlation coefficient when (1) the relationship is linear and (2) both variables are quantitative and (3) normally distributed and (4) have no outliers.

The Pearson correlation coefficient ( r ) is the most common way of measuring a linear correlation. It is a number between –1 and 1 that measures the strength and direction of the relationship between two variables.
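As an illustration of what these functions compute, the sketch below calculates r directly from its definition in Python. The two variables are hypothetical, and `pearson_r` is our own helper name rather than a library function.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation: covariance divided by the product of the spreads."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and of squared deviations from the means.
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / sqrt(s_xx * s_yy)


# Hypothetical paired observations.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r = pearson_r(x, y)
print(f"r = {r:.4f}")
```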

This table summarizes the most important differences between normal distributions and Poisson distributions:

| Characteristic | Normal | Poisson |
|---|---|---|
| Continuous or discrete | Continuous | Discrete |
| Parameter | Mean (µ) and standard deviation (σ) | Lambda (λ) |
| Shape | Bell-shaped | Depends on λ |
| Symmetry | Symmetrical | Asymmetrical (right-skewed). As λ increases, the asymmetry decreases. |
| Range | −∞ to ∞ | 0 to ∞ |

When the mean of a Poisson distribution is large (>10), it can be approximated by a normal distribution.

In the Poisson distribution formula, lambda (λ) is the mean number of events within a given interval of time or space. For example, λ = 0.748 floods per year.

The e in the Poisson distribution formula stands for the number 2.718 (rounded to three decimal places). This number is called Euler’s number. You can simply substitute e with 2.718 when you’re calculating a Poisson probability. Euler’s number is a very useful number and is especially important in calculus.
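A short Python sketch of the Poisson formula, P(X = k) = λ^k e^(−λ) / k!, is given below. It reuses the λ = 0.748 floods per year mentioned above; the function name is ours, and `math.exp` supplies e to full precision instead of the rounded 2.718.

```python
from math import exp, factorial


def poisson_probability(k, lam):
    """P(X = k): probability of exactly k events when the mean is lam."""
    return lam ** k * exp(-lam) / factorial(k)


lam = 0.748  # mean number of floods per year (value from the text)
p_no_flood = poisson_probability(0, lam)
p_one_flood = poisson_probability(1, lam)
print(f"P(0 floods) = {p_no_flood:.4f}, P(1 flood) = {p_one_flood:.4f}")
```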

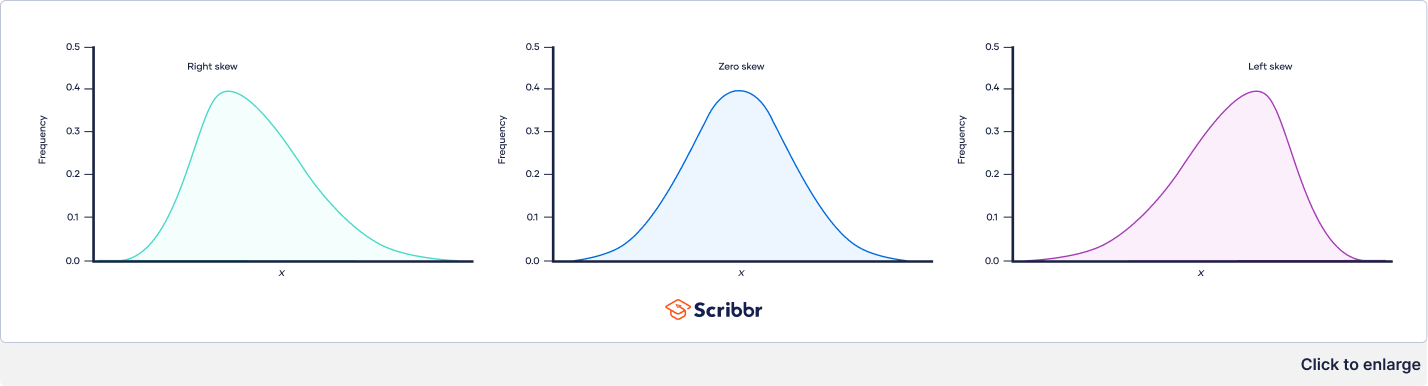

The three types of skewness are:

- Right skew (also called positive skew). A right-skewed distribution is longer on the right side of its peak than on its left.

- Left skew (also called negative skew). A left-skewed distribution is longer on the left side of its peak than on its right.

- Zero skew. It is symmetrical and its left and right sides are mirror images.

Skewness and kurtosis are both important measures of a distribution’s shape.

- Skewness measures the asymmetry of a distribution.

- Kurtosis measures the heaviness of a distribution’s tails relative to a normal distribution.

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (“x affects y because …”).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.

The alternative hypothesis is often abbreviated as Hₐ or H₁. When the alternative hypothesis is written using mathematical symbols, it always includes an inequality symbol (usually ≠, but sometimes < or >).

The t distribution was first described by statistician William Sealy Gosset under the pseudonym “Student.”

To calculate a confidence interval of a mean using the critical value of t, follow these four steps:

- Choose the significance level based on your desired confidence level. The most common confidence level is 95%, which corresponds to α = .05 in the two-tailed t table.

- Find the critical value of t in the two-tailed t table.

- Multiply the critical value of t by s / √n.

- Add this value to the mean to calculate the upper limit of the confidence interval, and subtract this value from the mean to calculate the lower limit.
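The four steps can be sketched in Python as follows. The sample is hypothetical, and the critical value 2.262 is read from a two-tailed t table for α = .05 and 9 degrees of freedom, because the standard library has no t quantile function.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical sample of n = 10 measurements.
data = [4.1, 5.2, 4.8, 5.0, 4.6, 5.4, 4.9, 5.1, 4.7, 5.2]

# Steps 1 and 2: alpha = .05 (95% confidence), df = n - 1 = 9, from a t table.
t_critical = 2.262

# Step 3: multiply the critical value by s / sqrt(n).
n = len(data)
sample_mean = mean(data)
margin = t_critical * stdev(data) / sqrt(n)

# Step 4: add and subtract the margin to get the limits.
lower, upper = sample_mean - margin, sample_mean + margin
print(f"95% CI: ({lower:.3f}, {upper:.3f})")
```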

To test a hypothesis using the critical value of t, follow these four steps:

- Calculate the t value for your sample.

- Find the critical value of t in the t table.

- Determine if the (absolute) t value is greater than the critical value of t.

- Reject the null hypothesis if the sample’s (absolute) t value is greater than the critical value of t. Otherwise, don’t reject the null hypothesis.
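A minimal Python sketch of these steps for a one-sample, two-tailed test is shown below. The sample and the hypothesized mean of 4.5 are assumptions made for illustration, and the critical value again comes from a t table (α = .05, df = 9).

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical sample and hypothesized population mean under the null.
data = [4.1, 5.2, 4.8, 5.0, 4.6, 5.4, 4.9, 5.1, 4.7, 5.2]
null_mean = 4.5

# Step 1: t value = (sample mean - hypothesized mean) / (s / sqrt(n)).
n = len(data)
t_value = (mean(data) - null_mean) / (stdev(data) / sqrt(n))

# Step 2: critical value from the two-tailed t table (alpha = .05, df = 9).
t_critical = 2.262

# Steps 3 and 4: compare the absolute t value with the critical value.
reject_null = abs(t_value) > t_critical
print(f"t = {t_value:.3f}, critical value = {t_critical}, reject: {reject_null}")
```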

You can use the T.INV() function to find the critical value of t for one-tailed tests in Excel, and you can use the T.INV.2T() function for two-tailed tests.

You can use the qt() function to find the critical value of t in R. The function gives the critical value of t for the one-tailed test. If you want the critical value of t for a two-tailed test, divide the significance level by two.

You can use the RSQ() function to calculate R² in Excel. If your dependent variable is in column A and your independent variable is in column B, then click any blank cell and type “=RSQ(A:A,B:B)”.

You can use the summary() function to view the R² of a linear model in R. You will see the “R-squared” near the bottom of the output.

There are two formulas you can use to calculate the coefficient of determination (R²) of a simple linear regression .
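The formulas themselves are not reproduced here. The two commonly given for simple linear regression are R² = r² (the square of Pearson's r) and R² = 1 − RSS/TSS (one minus the residual sum of squares over the total sum of squares). The sketch below, with hypothetical data and an illustrative function name, computes both and shows that they agree.

```python
import math


def r_squared_two_ways(x, y):
    """Return R² computed as r² and as 1 - RSS/TSS for a simple linear fit."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)  # total sum of squares (TSS)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))

    # Formula 1: square of Pearson's r.
    r = sxy / math.sqrt(sxx * syy)
    r2_from_r = r ** 2

    # Formula 2: 1 - RSS / TSS, using the least-squares line.
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    rss = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    r2_from_rss = 1 - rss / syy
    return r2_from_r, r2_from_rss


x = [1, 2, 3, 4, 5]  # hypothetical independent variable
y = [2, 4, 5, 4, 5]  # hypothetical dependent variable
r2_from_r, r2_from_rss = r_squared_two_ways(x, y)
print(round(r2_from_r, 3), round(r2_from_rss, 3))
```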

The coefficient of determination (R²) is a number between 0 and 1 that measures how well a statistical model predicts an outcome. You can interpret the R² as the proportion of variation in the dependent variable that is predicted by the statistical model.

There are three main types of missing data .

Missing completely at random (MCAR) data are randomly distributed across the variable and unrelated to other variables .

Missing at random (MAR) data are not randomly distributed but they are accounted for by other observed variables.

Missing not at random (MNAR) data systematically differ from the observed values.

To tidy up your missing data , your options usually include accepting, removing, or recreating the missing data.

- Acceptance: You leave your data as is

- Listwise or pairwise deletion: You delete cases (participants) with missing data, either from all analyses (listwise) or only from the analyses that involve the missing variables (pairwise)

- Imputation: You use other data to fill in the missing data

Missing data are important because, depending on the type, they can sometimes bias your results. This means your results may not be generalizable outside of your study because your data come from an unrepresentative sample .

Missing data , or missing values, occur when you don’t have data stored for certain variables or participants.

In any dataset, there’s usually some missing data. In quantitative research , missing values appear as blank cells in your spreadsheet.

There are two steps to calculating the geometric mean :

- Multiply all values together to get their product.

- Find the nth root of the product (n is the number of values).
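The two steps translate directly into code. This is a small sketch with an illustrative function name; rejecting negative values is an assumption made here so that the root is always defined.

```python
import math


def geometric_mean(values):
    """Multiply all values together, then take the nth root of the product."""
    if any(v < 0 for v in values):
        raise ValueError("geometric mean is not defined for negative values")
    product = math.prod(values)          # step 1: product of all values
    return product ** (1 / len(values))  # step 2: nth root of the product


print(geometric_mean([2, 8]))     # square root of 16
print(geometric_mean([1, 3, 9]))  # cube root of 27
```

For long lists the product can overflow or underflow, so averaging logarithms is often preferred in practice; Python's built-in `statistics.geometric_mean` can be used for that.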

Before calculating the geometric mean, note that:

- The geometric mean can only be found for positive values.

- If any value in the data set is zero, the geometric mean is zero.

The arithmetic mean is the most commonly used type of mean and is often referred to simply as “the mean.” While the arithmetic mean is based on adding and dividing values, the geometric mean multiplies and finds the root of values.

Even though the geometric mean is a less common measure of central tendency , it’s more accurate than the arithmetic mean for percentage change and positively skewed data. The geometric mean is often reported for financial indices and population growth rates.

The geometric mean is an average that multiplies all values and finds a root of the product. For a dataset with n numbers, you find the nth root of their product.

Outliers are extreme values that differ from most values in the dataset. You find outliers at the extreme ends of your dataset.

It’s best to remove outliers only when you have a sound reason for doing so.

Some outliers represent natural variations in the population , and they should be left as is in your dataset. These are called true outliers.

Other outliers are problematic and should be removed because they represent measurement errors , data entry or processing errors, or poor sampling.

You can choose from four main ways to detect outliers :

- Sorting your values from low to high and checking minimum and maximum values

- Visualizing your data with a box plot and looking for outliers

- Using the interquartile range to create fences for your data

- Using statistical procedures to identify extreme values
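The interquartile range approach can be sketched as follows. This is a minimal example that assumes the common 1.5 × IQR convention for the fences (not stated above) and uses the quartile method of Python's `statistics.quantiles`; other quartile conventions can give slightly different fences.

```python
import statistics


def iqr_outliers(values):
    """Return the values that fall outside the 1.5 * IQR fences."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # Q1, median, Q3
    iqr = q3 - q1
    lower_fence = q1 - 1.5 * iqr
    upper_fence = q3 + 1.5 * iqr
    return [v for v in values if v < lower_fence or v > upper_fence]


data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]  # hypothetical data
print(iqr_outliers(data))
```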

Outliers can have a big impact on your statistical analyses and skew the results of any hypothesis test if they are inaccurate.

These extreme values can impact your statistical power as well, making it hard to detect a true effect if there is one.

No, the steepness or slope of the line isn’t related to the correlation coefficient value. The correlation coefficient only tells you how closely your data fit on a line, so two datasets with the same correlation coefficient can have very different slopes.

To find the slope of the line, you’ll need to perform a regression analysis .
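A short sketch makes the distinction concrete. The two hypothetical datasets below both lie perfectly on a line, so they share the same correlation coefficient, yet their least-squares slopes differ by a factor of five.

```python
import math


def pearson_r_and_slope(x, y):
    """Return (Pearson's r, least-squares slope) for paired data."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / math.sqrt(sxx * syy), sxy / sxx


x = [1, 2, 3, 4]
r_gentle, slope_gentle = pearson_r_and_slope(x, [2, 4, 6, 8])
r_steep, slope_steep = pearson_r_and_slope(x, [10, 20, 30, 40])
print(r_gentle, slope_gentle)
print(r_steep, slope_steep)
```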

Correlation coefficients always range between -1 and 1.

The sign of the coefficient tells you the direction of the relationship: a positive value means the variables change together in the same direction, while a negative value means they change together in opposite directions.

The absolute value of a number is equal to the number without its sign. The absolute value of a correlation coefficient tells you the magnitude of the correlation: the greater the absolute value, the stronger the correlation.

These are the assumptions your data must meet if you want to use Pearson’s r :

- Both variables are on an interval or ratio level of measurement

- Data from both variables follow normal distributions

- Your data have no outliers

- Your data is from a random or representative sample

- You expect a linear relationship between the two variables

A correlation coefficient is a single number that describes the strength and direction of the relationship between your variables.

Different types of correlation coefficients might be appropriate for your data based on their levels of measurement and distributions . The Pearson product-moment correlation coefficient (Pearson’s r ) is commonly used to assess a linear relationship between two quantitative variables.

There are various ways to improve power:

- Increase the potential effect size by manipulating your independent variable more strongly,

- Increase sample size,

- Increase the significance level (alpha),

- Reduce measurement error by increasing the precision and accuracy of your measurement devices and procedures,

- Use a one-tailed test instead of a two-tailed test for t tests and z tests.

A power analysis is a calculation that helps you determine a minimum sample size for your study. It’s made up of four main components. If you know or have estimates for any three of these, you can calculate the fourth component.

- Statistical power : the likelihood that a test will detect an effect of a certain size if there is one, usually set at 80% or higher.

- Sample size : the minimum number of observations needed to observe an effect of a certain size with a given power level.

- Significance level (alpha) : the maximum risk of rejecting a true null hypothesis that you are willing to take, usually set at 5%.

- Expected effect size : a standardized way of expressing the magnitude of the expected result of your study, usually based on similar studies or a pilot study.
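To show how three components determine the fourth, the sketch below solves for sample size. It assumes a two-sided comparison of two group means, an effect size expressed as Cohen's d, and the normal approximation n ≈ 2(z₁₋α/₂ + z_power)² / d² per group; dedicated power-analysis software uses the t distribution and may return slightly larger numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-group comparison of means."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical z for alpha
    z_power = NormalDist().inv_cdf(power)          # z matching the desired power
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


n_medium = sample_size_per_group(effect_size=0.5)
n_small = sample_size_per_group(effect_size=0.2)
print(n_medium, n_small)
```

Smaller expected effects require far larger samples at the same power and significance level.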

Null and alternative hypotheses are used in statistical hypothesis testing . The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Statistical analysis is the main method for analyzing quantitative research data . It uses probabilities and models to test predictions about a population from sample data.

The risk of making a Type II error is inversely related to the statistical power of a test. Power is the extent to which a test can correctly detect a real effect when there is one.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level to increase statistical power.

The risk of making a Type I error is the significance level (or alpha) that you choose. That’s a threshold you set at the beginning of your study and later compare against the p value, the probability of obtaining your results if the null hypothesis is true.

The significance level is usually set at 0.05 or 5%. This means that your results only have a 5% chance of occurring, or less, if the null hypothesis is actually true.

To reduce the Type I error probability, you can set a lower significance level.

In statistics, a Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s actually false.

In statistics, power refers to the likelihood of a hypothesis test detecting a true effect if there is one. A statistically powerful test is less likely to produce a false negative (a Type II error).

If you don’t ensure enough power in your study, you may not be able to detect a statistically significant result even when it has practical significance. Your study might not have the ability to answer your research question.

While statistical significance shows that an effect exists in a study, practical significance shows that the effect is large enough to be meaningful in the real world.

Statistical significance is denoted by p -values whereas practical significance is represented by effect sizes .

There are dozens of measures of effect sizes . The most common effect sizes are Cohen’s d and Pearson’s r . Cohen’s d measures the size of the difference between two groups while Pearson’s r measures the strength of the relationship between two variables .

Effect size tells you how meaningful the relationship between variables or the difference between groups is.

A large effect size means that a research finding has practical significance, while a small effect size indicates limited practical applications.

Using descriptive and inferential statistics , you can make two types of estimates about the population : point estimates and interval estimates.

- A point estimate is a single value estimate of a parameter . For instance, a sample mean is a point estimate of a population mean.

- An interval estimate gives you a range of values where the parameter is expected to lie. A confidence interval is the most common type of interval estimate.

Both types of estimates are important for gathering a clear idea of where a parameter is likely to lie.

Standard error and standard deviation are both measures of variability . The standard deviation reflects variability within a sample, while the standard error estimates the variability across samples of a population.

The standard error of the mean , or simply standard error , indicates how different the population mean is likely to be from a sample mean. It tells you how much the sample mean would vary if you were to repeat a study using new samples from within a single population.
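As a brief illustration, the standard error of the mean is usually estimated as the sample standard deviation divided by the square root of the sample size. The data below are hypothetical.

```python
import math
import statistics


def standard_error(sample):
    """Estimate the standard error of the mean as s / sqrt(n)."""
    return statistics.stdev(sample) / math.sqrt(len(sample))


sample = [2, 4, 4, 4, 5, 5, 7, 9]
print(f"SD = {statistics.stdev(sample):.3f}, SE = {standard_error(sample):.3f}")
```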

To figure out whether a given number is a parameter or a statistic , ask yourself the following:

- Does the number describe a whole, complete population where every member can be reached for data collection ?

- Is it possible to collect data for this number from every member of the population in a reasonable time frame?

If the answer is yes to both questions, the number is likely to be a parameter. For small populations, data can be collected from the whole population and summarized in parameters.

If the answer is no to either of the questions, then the number is more likely to be a statistic.

The arithmetic mean is the most commonly used mean. It’s often simply called the mean or the average. But there are some other types of means you can calculate depending on your research purposes:

- Weighted mean: some values contribute more to the mean than others.

- Geometric mean : values are multiplied rather than summed up.

- Harmonic mean: reciprocals of values are used instead of the values themselves.
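The three alternatives can be compared side by side on the same hypothetical values. The geometric and harmonic means use Python's `statistics` module; the weighted mean is a small helper written for this sketch, with arbitrary example weights.

```python
import statistics


def weighted_mean(values, weights):
    """Mean in which each value counts in proportion to its weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


values = [1, 2, 4]
print("arithmetic:", statistics.mean(values))
print("weighted:  ", weighted_mean(values, weights=[1, 1, 2]))
print("geometric: ", statistics.geometric_mean(values))
print("harmonic:  ", statistics.harmonic_mean(values))
```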

You can find the mean , or average, of a data set in two simple steps:

- Find the sum of the values by adding them all up.

- Divide the sum by the number of values in the data set.

This method is the same whether you are dealing with sample or population data or positive or negative numbers.

The median is the most informative measure of central tendency for skewed distributions or distributions with outliers. For example, the median is often used as a measure of central tendency for income distributions, which are generally highly skewed.

Because the median only uses one or two values, it’s unaffected by extreme outliers or non-symmetric distributions of scores. In contrast, the mean and mode can vary in skewed distributions.

To find the median , first order your data. Then calculate the middle position based on n , the number of values in your data set.
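A minimal sketch of this procedure: with an odd number of values the median sits at position (n + 1)/2 of the ordered data, and with an even number it is the mean of the two middle values. The function name is illustrative; Python's `statistics.median` does the same job.

```python
def median(values):
    """Order the data, then pick the middle value (or average the middle two)."""
    ordered = sorted(values)
    n = len(ordered)
    middle = n // 2
    if n % 2 == 1:
        return ordered[middle]  # position (n + 1) / 2, counting from 1
    return (ordered[middle - 1] + ordered[middle]) / 2


print(median([3, 1, 7]))     # odd n: the single middle value
print(median([3, 1, 7, 5]))  # even n: mean of the two middle values
```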

A data set can often have no mode, one mode or more than one mode – it all depends on how many different values repeat most frequently.

Your data can be:

- without any mode

- unimodal, with one mode,

- bimodal, with two modes,

- trimodal, with three modes, or

- multimodal, with four or more modes.

To find the mode :

- If your data is numerical or quantitative, order the values from low to high.

- If it is categorical, sort the values by group, in any order.

Then you simply need to identify the most frequently occurring value.
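Counting frequencies works for both numerical and categorical data, as in the sketch below. It assumes one convention that is not spelled out above: when every value occurs exactly once, the data are treated as having no mode.

```python
from collections import Counter


def find_modes(values):
    """Return all most-frequent values, or an empty list if nothing repeats."""
    counts = Counter(values)
    highest = max(counts.values())
    if highest == 1 and len(counts) > 1:
        return []  # every value occurs once: treated here as no mode
    return [value for value, count in counts.items() if count == highest]


print(find_modes(["car", "bus", "car", "tram", "bus"]))  # bimodal
print(find_modes([3, 1, 3, 2]))                          # unimodal
print(find_modes([1, 2, 3]))                             # no mode
```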

The interquartile range is the best measure of variability for skewed distributions or data sets with outliers. Because it’s based on values that come from the middle half of the distribution, it’s unlikely to be influenced by outliers .

The two most common methods for calculating interquartile range are the exclusive and inclusive methods.

The exclusive method excludes the median when identifying Q1 and Q3, while the inclusive method includes the median as a value in the data set in identifying the quartiles.

For each of these methods, you’ll need different procedures for finding the median, Q1 and Q3 depending on whether your sample size is even- or odd-numbered. The exclusive method works best for even-numbered sample sizes, while the inclusive method is often used with odd-numbered sample sizes.

While the range gives you the spread of the whole data set, the interquartile range gives you the spread of the middle half of a data set.

Homoscedasticity, or homogeneity of variances, is an assumption of equal or similar variances in different groups being compared.

This is an important assumption of parametric statistical tests because they are sensitive to any dissimilarities. Uneven variances in samples result in biased and skewed test results.

Statistical tests such as variance tests or the analysis of variance (ANOVA) use sample variance to assess group differences of populations. They use the variances of the samples to assess whether the populations they come from significantly differ from each other.

Variance is the average of the squared deviations from the mean, while standard deviation is the square root of this number. Both measures reflect variability in a distribution, but their units differ:

- Standard deviation is expressed in the same units as the original values (e.g., minutes or meters).

- Variance is expressed in squared units (e.g., meters squared).

Although the units of variance are harder to intuitively understand, variance is important in statistical tests .

The empirical rule, or the 68-95-99.7 rule, tells you where most of the values lie in a normal distribution :

- Around 68% of values are within 1 standard deviation of the mean.

- Around 95% of values are within 2 standard deviations of the mean.

- Around 99.7% of values are within 3 standard deviations of the mean.

The empirical rule is a quick way to get an overview of your data and check for any outliers or extreme values that don’t follow this pattern.
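The three percentages can be checked against the standard normal distribution. This short sketch uses `NormalDist` from Python's standard library; the helper name is illustrative.

```python
from statistics import NormalDist

standard_normal = NormalDist(mu=0, sigma=1)


def share_within(k):
    """Proportion of a normal distribution within k standard deviations of the mean."""
    return standard_normal.cdf(k) - standard_normal.cdf(-k)


for k in (1, 2, 3):
    print(f"within {k} SD: {share_within(k):.1%}")
```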

In a normal distribution , data are symmetrically distributed with no skew. Most values cluster around a central region, with values tapering off as they go further away from the center.

The measures of central tendency (mean, mode, and median) are exactly the same in a normal distribution.

The standard deviation is the average amount of variability in your data set. It tells you, on average, how far each score lies from the mean .

In normal distributions, a high standard deviation means that values are generally far from the mean, while a low standard deviation indicates that values are clustered close to the mean.

No. Because the range formula subtracts the lowest number from the highest number, the range is always zero or a positive number.

In statistics, the range is the spread of your data from the lowest to the highest value in the distribution. It is the simplest measure of variability .

While central tendency tells you where most of your data points lie, variability summarizes how far apart your points are from each other.

Data sets can have the same central tendency but different levels of variability or vice versa . Together, they give you a complete picture of your data.

Variability is most commonly measured with the following descriptive statistics :

- Range : the difference between the highest and lowest values

- Interquartile range : the range of the middle half of a distribution

- Standard deviation : average distance from the mean

- Variance : average of squared distances from the mean
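Three of these measures can be computed in a few lines. The sketch below uses hypothetical data and the population versions of variance and standard deviation (dividing by n); for a sample you would typically use `statistics.variance` and `statistics.stdev`, which divide by n − 1.

```python
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical data set

data_range = max(data) - min(data)      # highest minus lowest value
variance = statistics.pvariance(data)   # mean of squared distances from the mean
std_dev = statistics.pstdev(data)       # square root of the variance

print(data_range, variance, std_dev)
```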

Variability tells you how far apart points lie from each other and from the center of a distribution or a data set.

Variability is also referred to as spread, scatter or dispersion.

While interval and ratio data can both be categorized, ranked, and have equal spacing between adjacent values, only ratio scales have a true zero.

For example, temperature in Celsius or Fahrenheit is at an interval scale because zero is not the lowest possible temperature. In the Kelvin scale, a ratio scale, zero represents a total lack of thermal energy.

A critical value is the value of the test statistic which defines the upper and lower bounds of a confidence interval , or which defines the threshold of statistical significance in a statistical test. It describes how far from the mean of the distribution you have to go to cover a certain amount of the total variation in the data (i.e. 90%, 95%, 99%).

If you are constructing a 95% confidence interval and are using a threshold of statistical significance of p = 0.05, then your critical value will be identical in both cases.

The t-distribution gives more probability to observations in the tails of the distribution than the standard normal distribution (a.k.a. the z-distribution).

In this way, the t-distribution is more conservative than the standard normal distribution: to reach the same level of confidence or statistical significance, you will need to include a wider range of the data.

A t-score (a.k.a. a t-value) is equivalent to the number of standard deviations away from the mean of the t-distribution.

The t-score is the test statistic used in t-tests and regression tests. It can also be used to describe how far from the mean an observation is when the data follow a t-distribution.

The t-distribution is a way of describing a set of observations where most observations fall close to the mean, and the rest of the observations make up the tails on either side. It resembles the normal distribution but has heavier tails, and it is used for smaller sample sizes, where the variance in the data is unknown.

The t-distribution forms a bell curve when plotted on a graph. It can be described mathematically using the mean and the standard deviation.

In statistics, ordinal and nominal variables are both considered categorical variables .

Even though ordinal data can sometimes be numerical, not all mathematical operations can be performed on them.

Ordinal data has two characteristics:

- The data can be classified into different categories within a variable.

- The categories have a natural ranked order.

However, unlike with interval data, the distances between the categories are uneven or unknown.

Nominal and ordinal are two of the four levels of measurement . Nominal level data can only be classified, while ordinal level data can be classified and ordered.

Nominal data is data that can be labelled or classified into mutually exclusive categories within a variable. These categories cannot be ordered in a meaningful way.

For example, for the nominal variable of preferred mode of transportation, you may have the categories of car, bus, train, tram or bicycle.

If your confidence interval for a difference between groups includes zero, that means that if you run your experiment again you have a good chance of finding no difference between groups.

If your confidence interval for a correlation or regression includes zero, that means that if you run your experiment again there is a good chance of finding no correlation in your data.

In both of these cases, you will also find a high p -value when you run your statistical test, meaning that your results could have occurred under the null hypothesis of no relationship between variables or no difference between groups.

If you want to calculate a confidence interval around the mean of data that is not normally distributed , you have two choices:

- Find a distribution that matches the shape of your data and use that distribution to calculate the confidence interval.

- Perform a transformation on your data to make it fit a normal distribution, and then find the confidence interval for the transformed data.

The standard normal distribution, also called the z-distribution, is a special normal distribution where the mean is 0 and the standard deviation is 1.

Any normal distribution can be converted into the standard normal distribution by turning the individual values into z-scores. In a z-distribution, z-scores tell you how many standard deviations away from the mean each value lies.
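Converting values to z-scores means subtracting the mean and dividing by the standard deviation. The sketch below uses hypothetical data and, as an assumption, the population standard deviation.

```python
import statistics


def z_scores(values):
    """Convert each value to the number of standard deviations from the mean."""
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)  # population standard deviation (assumed)
    return [(v - mean) / sd for v in values]


data = [2, 4, 4, 4, 5, 5, 7, 9]  # mean 5, standard deviation 2
print(z_scores(data))
```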

The z-score and t-score (aka z-value and t-value) show how many standard deviations away from the mean of the distribution you are, assuming your data follow a z-distribution or a t-distribution.

These scores are used in statistical tests to show how far from the mean of the predicted distribution your statistical estimate is. If your test produces a z-score of 2.5, this means that your estimate is 2.5 standard deviations from the predicted mean.

The predicted mean and distribution of your estimate are generated by the null hypothesis of the statistical test you are using. The more standard deviations away from the predicted mean your estimate is, the less likely it is that the estimate could have occurred under the null hypothesis.
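To put a number on "less likely", the sketch below converts the z-score of 2.5 from the example into a two-sided p-value under the standard normal distribution. Using a two-sided test is an assumption made for illustration.

```python
from statistics import NormalDist


def two_sided_p_value(z):
    """Probability of a z-score at least this extreme under the null hypothesis."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


p_value = two_sided_p_value(2.5)
print(f"p = {p_value:.4f}")
```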

To calculate the confidence interval , you need to know:

- The point estimate you are constructing the confidence interval for

- The critical values for the test statistic

- The standard deviation of the sample

- The sample size

Then you can plug these components into the confidence interval formula that corresponds to your data. The formula depends on the type of estimate (e.g. a mean or a proportion) and on the distribution of your data.

The confidence level is the percentage of times you expect to get close to the same estimate if you run your experiment again or resample the population in the same way.

The confidence interval consists of the upper and lower bounds of the estimate you expect to find at a given level of confidence.

For example, if you are estimating a 95% confidence interval around the mean proportion of female babies born every year based on a random sample of babies, you might find an upper bound of 0.56 and a lower bound of 0.48. These are the upper and lower bounds of the confidence interval. The confidence level is 95%.

The mean is the most frequently used measure of central tendency because it uses all values in the data set to give you an average.

For data from skewed distributions, the median is better than the mean because it isn’t influenced by extremely large values.

The mode is the only measure you can use for nominal or categorical data that can’t be ordered.

The measures of central tendency you can use depend on the level of measurement of your data.

- For a nominal level, you can only use the mode to find the most frequent value.

- For an ordinal level or ranked data, you can also use the median to find the value in the middle of your data set.

- For interval or ratio levels, in addition to the mode and median, you can use the mean to find the average value.

Measures of central tendency help you find the middle, or the average, of a data set.

The 3 most common measures of central tendency are the mean, median and mode.

- The mode is the most frequent value.

- The median is the middle number in an ordered data set.

- The mean is the sum of all values divided by the total number of values.

Some variables have fixed levels. For example, gender and ethnicity are always nominal level data because they cannot be ranked.

However, for other variables, you can choose the level of measurement . For example, income is a variable that can be recorded on an ordinal or a ratio scale:

- At an ordinal level , you could create 5 income groupings and code the incomes that fall within them from 1–5.

- At a ratio level , you would record exact numbers for income.

If you have a choice, the ratio level is always preferable because you can analyze data in more ways. The higher the level of measurement, the more precise your data is.

The level at which you measure a variable determines how you can analyze your data.

Depending on the level of measurement , you can perform different descriptive statistics to get an overall summary of your data and inferential statistics to see if your results support or refute your hypothesis .

Levels of measurement tell you how precisely variables are recorded. There are 4 levels of measurement, which can be ranked from low to high:

- Nominal : the data can only be categorized.

- Ordinal : the data can be categorized and ranked.

- Interval: the data can be categorized, ranked, and evenly spaced.

- Ratio : the data can be categorized, ranked, evenly spaced and has a natural zero.

No. The p-value only tells you how likely the data you have observed is to have occurred under the null hypothesis.

If the p-value is below your threshold of significance (typically p < 0.05), then you can reject the null hypothesis, but this does not necessarily mean that your alternative hypothesis is true.

The alpha value, or the threshold for statistical significance, is arbitrary – which value you use depends on your field of study.

In most cases, researchers use an alpha of 0.05, which means that there is a less than 5% chance that the data being tested could have occurred under the null hypothesis.

P-values are usually automatically calculated by the program you use to perform your statistical test. They can also be estimated using p-value tables for the relevant test statistic.

P-values are calculated from the null distribution of the test statistic. They tell you how often a test statistic is expected to occur under the null hypothesis of the statistical test, based on where it falls in the null distribution.

If the test statistic is far from the mean of the null distribution, then the p-value will be small, showing that the test statistic is not likely to have occurred under the null hypothesis.

A p-value, or probability value, is a number describing how likely it is that your data would have occurred under the null hypothesis of your statistical test.

The test statistic you use will be determined by the statistical test.

You can choose the right statistical test by looking at what type of data you have collected and what type of relationship you want to test.

The test statistic will change based on the number of observations in your data, how variable your observations are, and how strong the underlying patterns in the data are.

For example, if one data set has higher variability while another has lower variability, the first data set will produce a test statistic closer to the null hypothesis , even if the true correlation between two variables is the same in either data set.

The formula for the test statistic depends on the statistical test being used.

Generally, the test statistic is calculated as the pattern in your data (i.e. the correlation between variables or difference between groups) divided by the variance in the data (i.e. the standard deviation ).

- Univariate statistics summarize only one variable at a time.

- Bivariate statistics compare two variables .

- Multivariate statistics compare more than two variables .

The 3 main types of descriptive statistics concern the frequency distribution, central tendency, and variability of a dataset.

- Distribution refers to the frequencies of different responses.

- Measures of central tendency give you the average for each response.

- Measures of variability show you the spread or dispersion of your dataset.

Descriptive statistics summarize the characteristics of a data set. Inferential statistics allow you to test a hypothesis or assess whether your data is generalizable to the broader population.

In statistics, model selection is a process researchers use to compare the relative value of different statistical models and determine which one is the best fit for the observed data.

The Akaike information criterion is one of the most common methods of model selection. AIC weights the ability of the model to predict the observed data against the number of parameters the model requires to reach that level of precision.

AIC model selection can help researchers find a model that explains the observed variation in their data while avoiding overfitting.

In statistics, a model is the collection of one or more independent variables and their predicted interactions that researchers use to try to explain variation in their dependent variable.

You can test a model using a statistical test . To compare how well different models fit your data, you can use Akaike’s information criterion for model selection.

The Akaike information criterion is calculated from the maximum log-likelihood of the model and the number of parameters (K) used to reach that likelihood. The AIC function is AIC = 2K − 2(log-likelihood).

Lower AIC values indicate a better-fit model, and a model whose AIC is more than 2 units lower than that of the model it is being compared to (a delta-AIC, the difference between the two AIC values, greater than 2) is considered significantly better.
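The calculation is short enough to sketch directly. The parameter counts and log-likelihoods below are invented for illustration and do not come from any real model fit.

```python
def aic(num_parameters, log_likelihood):
    """Akaike information criterion: 2K - 2(log-likelihood)."""
    return 2 * num_parameters - 2 * log_likelihood


# Hypothetical models: B fits slightly better but uses two more parameters.
aic_a = aic(num_parameters=3, log_likelihood=-120.0)
aic_b = aic(num_parameters=5, log_likelihood=-119.5)
delta_aic = aic_b - aic_a
print(aic_a, aic_b, delta_aic)
```

In this made-up comparison the small gain in likelihood does not offset the penalty for the extra parameters, so model A has the lower AIC.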

The Akaike information criterion is a mathematical test used to evaluate how well a model fits the data it is meant to describe. It penalizes models which use more independent variables (parameters) as a way to avoid over-fitting.

AIC is most often used to compare the relative goodness-of-fit among different models under consideration and to then choose the model that best fits the data.

A factorial ANOVA is any ANOVA that uses more than one categorical independent variable . A two-way ANOVA is a type of factorial ANOVA.

Some examples of factorial ANOVAs include:

- Testing the combined effects of vaccination (vaccinated or not vaccinated) and health status (healthy or pre-existing condition) on the rate of flu infection in a population.

- Testing the effects of marital status (married, single, divorced, widowed), job status (employed, self-employed, unemployed, retired), and family history (no family history, some family history) on the incidence of depression in a population.

- Testing the effects of feed type (type A, B, or C) and barn crowding (not crowded, somewhat crowded, very crowded) on the final weight of chickens in a commercial farming operation.

In ANOVA, the null hypothesis is that there is no difference among group means. If any group differs significantly from the overall group mean, then the ANOVA will report a statistically significant result.

Significant differences among group means are calculated using the F statistic, which is the ratio of the mean sum of squares (the variance explained by the independent variable) to the mean square error (the variance left over).

If the F statistic is higher than the critical value (the value of F that corresponds with your alpha value, usually 0.05), then the difference among groups is deemed statistically significant.
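For a one-way design, the F statistic can be computed by hand as in the sketch below. The three groups are hypothetical, and the critical value of 5.14 is an assumption taken from an F table for α = 0.05 with 2 and 6 degrees of freedom.

```python
def one_way_f(groups):
    """F statistic: mean square between groups over mean square error."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(g) / len(g) for g in groups]

    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    ss_within = sum(
        (v - m) ** 2 for g, m in zip(groups, group_means) for v in g
    )

    ms_between = ss_between / (len(groups) - 1)               # df = groups - 1
    ms_within = ss_within / (len(all_values) - len(groups))   # df = N - groups
    return ms_between / ms_within


groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
F_CRITICAL = 5.14  # F table, alpha = 0.05, df = (2, 6)
f_value = one_way_f(groups)
print(f"F = {f_value:.2f}, significant: {f_value > F_CRITICAL}")
```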

The only difference between one-way and two-way ANOVA is the number of independent variables. A one-way ANOVA has one independent variable, while a two-way ANOVA has two.

- One-way ANOVA: Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka) and race finish times in a marathon.

- Two-way ANOVA: Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka), runner age group (junior, senior, master’s), and race finishing times in a marathon.

All ANOVAs are designed to test for differences among three or more groups. If you are only testing for a difference between two groups, use a t-test instead.

Multiple linear regression is a regression model that estimates the relationship between a quantitative dependent variable and two or more independent variables using a straight line.

Linear regression most often uses mean-square error (MSE) to calculate the error of the model. MSE is calculated by:

- measuring the distance of the observed y-values from the predicted y-values at each value of x;

- squaring each of these distances;

- calculating the mean of the squared distances.

Linear regression fits a line to the data by finding the regression coefficients (intercept and slope) that result in the smallest MSE.
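The MSE calculation and the line fit can be sketched as below. This is only an illustration for the simple (one-variable) case: the helper names `mse` and `fit_line` and the four data points are hypothetical, and the fit uses the usual closed-form least-squares formulas rather than any particular library.

```python
def mse(xs, ys, intercept, slope):
    """Mean of the squared distances between observed and predicted y-values."""
    squared = [(y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys)]
    return sum(squared) / len(squared)


def fit_line(xs, ys):
    """Closed-form least-squares intercept and slope for one predictor."""
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical observations.
xs = [1, 2, 3, 4]
ys = [2, 4, 5, 8]

intercept, slope = fit_line(xs, ys)
best_mse = mse(xs, ys, intercept, slope)
# Any other line, such as y = 2x, gives a larger MSE on the same data.
other_mse = mse(xs, ys, 0.0, 2.0)
```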

Simple linear regression is a regression model that estimates the relationship between one independent variable and one dependent variable using a straight line. Both variables should be quantitative.

For example, the relationship between temperature and the expansion of mercury in a thermometer can be modeled using a straight line: as temperature increases, the mercury expands. This linear relationship is so certain that we can use mercury thermometers to measure temperature.

A regression model is a statistical model that estimates the relationship between one dependent variable and one or more independent variables using a line (or a plane in the case of two or more independent variables).

A regression model can be used when the dependent variable is quantitative, except in the case of logistic regression, where the dependent variable is binary.

A t-test should not be used to measure differences among more than two groups, because the error structure for a t-test will underestimate the actual error when many groups are being compared.

If you want to compare the means of several groups at once, it’s best to use another statistical test such as ANOVA, followed by a post-hoc test to identify which groups differ.

A one-sample t-test is used to compare a single population to a standard value (for example, to determine whether the average lifespan of a specific town is different from the country average).

A paired t-test is used to compare a single population before and after some experimental intervention or at two different points in time (for example, measuring student performance on a test before and after being taught the material).

A t-test measures the difference in group means divided by the pooled standard error of the two group means.

In this way, it calculates a number (the t-value) illustrating the magnitude of the difference between the two group means being compared, and estimates how likely a difference at least this large would be if it arose purely by chance under the null hypothesis (the p-value).
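The t-value for two independent groups can be sketched as follows, assuming equal variances so that a pooled standard error applies. The function name `pooled_t` and the sample values are hypothetical; turning the t-value into a p-value would additionally require the t distribution with n1 + n2 - 2 degrees of freedom, which is not shown here.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(a, b):
    """Two-sample t-value: difference in means / pooled standard error."""
    n1, n2 = len(a), len(b)
    # Pooled variance: weighted average of the two sample variances.
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    std_err = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / std_err


# Hypothetical measurements for two groups.
group_a = [5, 6, 7, 8, 9]
group_b = [1, 2, 3, 4, 5]
t_value = pooled_t(group_a, group_b)
```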

Your choice of t-test depends on whether you are studying one group or two groups, and whether you care about the direction of the difference in group means.

If you are studying one group, use a paired t-test to compare the group mean over time or after an intervention, or use a one-sample t-test to compare the group mean to a standard value. If you are studying two groups, use a two-sample t-test.

If you want to know only whether a difference exists, use a two-tailed test. If you want to know if one group mean is greater or less than the other, use a left-tailed or right-tailed one-tailed test.

A t-test is a statistical test that compares the means of two samples. It is used in hypothesis testing, with a null hypothesis that the difference in group means is zero and an alternate hypothesis that the difference in group means is different from zero.

Statistical significance is a term used by researchers to state that it is unlikely their observations could have occurred under the null hypothesis of a statistical test. Significance is usually denoted by a p-value, or probability value.

Statistical significance is arbitrary – it depends on the threshold, or alpha value, chosen by the researcher. The most common threshold is p < 0.05, which means that data at least as extreme as those observed would occur less than 5% of the time under the null hypothesis.

When the p-value falls below the chosen alpha value, then we say the result of the test is statistically significant.

A test statistic is a number calculated by a statistical test. It describes how far your observed data is from the null hypothesis of no relationship between variables or no difference among sample groups.

The test statistic tells you how different two or more groups are from the overall population mean, or how different a linear slope is from the slope predicted by a null hypothesis. Different test statistics are used in different statistical tests.

Statistical tests commonly assume that:

- the data are normally distributed

- the groups that are being compared have similar variance

- the data are independent

If your data do not meet these assumptions, you might still be able to use a nonparametric statistical test, which has fewer requirements but also makes weaker inferences.



Null Hypothesis

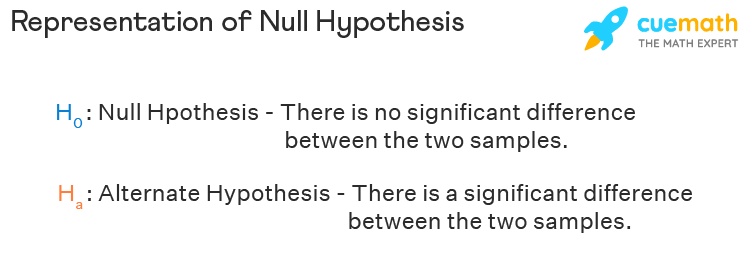

The null hypothesis is used to make decisions based on data by means of statistical tests. It is represented by H₀ and states that there is no difference between the characteristics of two samples. The null hypothesis is generally a statement of no difference. Rejecting the null hypothesis is equivalent to accepting the alternate hypothesis.

Let us learn more about null hypotheses, tests for null hypotheses, and the difference between the null hypothesis and the alternate hypothesis, with the help of examples.


What Is Null Hypothesis?

The null hypothesis states that there is no significant difference between the observed characteristics across two sample sets. It states that the observed population parameters or variables are the same across the samples, and that there is no relationship between the independent variable and the dependent variable. The term null hypothesis is used to mean that there is no difference between the two means, or that the difference is not significant.

If the experimental outcome is consistent with the theoretical outcome, then the null hypothesis holds good. But if there are significant differences in the observed parameters across the samples, then the null hypothesis is rejected and we consider an alternate hypothesis. The rejection of the null hypothesis does not mean that there were flaws in the basic experimentation, but it sets the stage for further research. Generally, the strength of the evidence is tested against the null hypothesis.

The null hypothesis and the alternate hypothesis are the two competing statements used in statistical testing. The alternate hypothesis states that there is a significant difference between the parameters across the samples. The alternate hypothesis is the opposite of the null hypothesis. Because experimental or sampling errors can also produce apparent differences, the null hypothesis is rejected in favor of the alternate hypothesis only when the evidence against it is strong.

Tests For Null Hypothesis

The two important approaches to statistical inference about the null hypothesis are significance testing and hypothesis testing. The null hypothesis is a theoretical hypothesis that is not yet backed by sufficient evidence, so it requires further testing to decide whether it should be rejected.

Significance Testing

The aim of significance testing is to provide evidence to reject the null hypothesis. If the evidence is strong enough, the null hypothesis is rejected and the alternate hypothesis is accepted. The testing is designed to assess the strength of the evidence against the null hypothesis. The four important steps of significance testing are as follows.

- First state the null and alternate hypotheses.

- Calculate the test statistic.

- Find the p-value.

- Compare the p-value with the significance level α and decide whether the null hypothesis should be rejected.

If the p-value is less than the significance level α, then the null hypothesis is rejected. If the p-value is greater than the significance level α, then the null hypothesis is not rejected (informally, it is accepted).
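The four steps can be sketched in code. The example below assumes a two-tailed one-sample z-test with a known population standard deviation, which is a simplification chosen so that the p-value can be computed with the standard library alone; the function name `z_test`, the sample values, and the figures 50 and 3 are all hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean


def z_test(sample, mu0, sigma, alpha=0.05):
    """Two-tailed one-sample z-test; returns (z, p_value, reject_null)."""
    # Step 1: H0: population mean == mu0, Ha: population mean != mu0.
    # Step 2: calculate the test statistic.
    z = (mean(sample) - mu0) / (sigma / sqrt(len(sample)))
    # Step 3: find the p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Step 4: compare the p-value with the significance level alpha.
    return z, p_value, p_value < alpha


# Hypothetical sample of nine measurements; assumed mu0 = 50 and sigma = 3.
sample = [50, 51, 52, 53, 54, 52, 52, 51, 53]
z, p_value, reject_null = z_test(sample, mu0=50, sigma=3)
_, _, reject_strict = z_test(sample, mu0=50, sigma=3, alpha=0.01)
```

With these numbers the null hypothesis is rejected at α = 0.05 but not at the stricter α = 0.01, which shows how the decision depends on the chosen significance level.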

Hypothesis Testing

Hypothesis testing takes the parameters from the sample and makes an inference about the population. A hypothesis is an educated guess about a population, which can be tested either through an experiment or an observation. Initially, a tentative assumption is made about the population in the form of a null hypothesis.

There are four steps to perform hypothesis testing. They are:

- Identify the null hypothesis.

- Define the null hypothesis statement.

- Choose the test to be performed.

- Accept the null hypothesis or the alternate hypothesis.

There are often errors in the process of testing the hypothesis. The two important errors observed in hypothesis testing are as follows.

- Type I error is rejecting the null hypothesis when the null hypothesis is actually true.

- Type II error is accepting the null hypothesis when the null hypothesis is actually false.
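The two error types can be summarized as a small lookup on two facts: whether the null hypothesis is actually true, and whether the test rejected it. The function name `classify_decision` below is hypothetical and the snippet is only a sketch of that logic.

```python
def classify_decision(null_is_true: bool, null_rejected: bool) -> str:
    """Label the outcome of a hypothesis test given the (unknown) truth."""
    if null_is_true and null_rejected:
        return "Type I error"    # rejected a true null hypothesis
    if not null_is_true and not null_rejected:
        return "Type II error"   # kept a false null hypothesis
    return "correct decision"


outcomes = {
    (truth, rejected): classify_decision(truth, rejected)
    for truth in (True, False)
    for rejected in (True, False)
}
```

In practice the truth of the null hypothesis is not known, so these labels describe what could have gone wrong rather than something the researcher can observe directly.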

Difference Between Null Hypothesis And Alternate Hypothesis

The difference between null hypothesis and alternate hypothesis can be understood through the following points.

- The opposite of the null hypothesis is the alternate hypothesis and it is the claim which is being proved by research to be true.

- The null hypothesis states that the two samples of the population are the same, and the alternate hypothesis states that there is a significant difference between the two samples of the population.

- The null hypothesis is designated as H₀ and the alternate hypothesis is designated as Hₐ.

- For the null hypothesis, the sample means are assumed to be equal, and we have H₀: µ₁ = µ₂. For the alternate hypothesis, the sample means are unequal, and we have Hₐ: µ₁ ≠ µ₂.

- The observed population parameters and variables are the same across the samples, for a null hypothesis, but in an alternate hypothesis, there is a significant difference between the observed parameters and variables across the samples.


Examples on Null Hypothesis

Example 1: A medical trial is conducted to check whether a particular drug can serve as a vaccine for Covid-19 and prevent infection. Write the null hypothesis and the alternate hypothesis for this situation.

The given situation concerns a possible new drug and whether it is effective as a vaccine for Covid-19. The null hypothesis (H₀) and alternate hypothesis (Hₐ) for this medical experiment are as follows.

- H₀: The use of the new drug is not helpful for the prevention of Covid-19.

- Hₐ: The use of the new drug serves as a vaccine and helps in the prevention of Covid-19.

Example 2: The teacher has prepared a set of important questions and informs the students that preparing these questions helps in scoring more than 60% marks in the board exams. Write the null hypothesis and the alternate hypothesis for this situation.

The given situation refers to the teacher who has claimed that her important questions help students score more than 60% marks in the board exams. The null hypothesis (H₀) and alternate hypothesis (Hₐ) for this situation are as follows.

- H₀: The important questions given by the teacher do not really help the students to get a score of more than 60% in the board exams.