
What is Response Bias – Types & Examples

Published by Owen Ingram on September 4, 2023. Revised on September 4, 2023.

In research, ensuring the accuracy of responses is crucial to obtaining valid and reliable results. When participants’ answers are systematically influenced by external factors or internal beliefs rather than reflecting their genuine opinions or the facts, response bias is introduced. This kind of bias can significantly skew the results of a study, making them less trustworthy. Researchers often consult scholarly sources to understand the nuances of such biases.

Example of Response Bias

Suppose a researcher is conducting a survey about people’s exercise habits. They ask the question:

“How many days a week do you engage in at least 30 minutes of physical exercise?”

The risk here is that participants may overstate how often they exercise, because regular exercise is seen as socially desirable; this can be tied to explicit bias. Researchers need to be wary of this and might consider alternative methods, such as anonymised surveys or indirect questioning, to obtain more accurate responses.

But what exactly is response bias, why does it matter, and how can it manifest? Let’s discuss this in detail.

What is Response Bias?

Response bias refers to the tendency of respondents to answer questions in a way that does not accurately reflect their true beliefs, feelings, or behaviours. This bias can be introduced into survey or research results due to various factors, including the way questions are phrased, the presence of an interviewer, or the respondents’ perceived social desirability of certain answers.

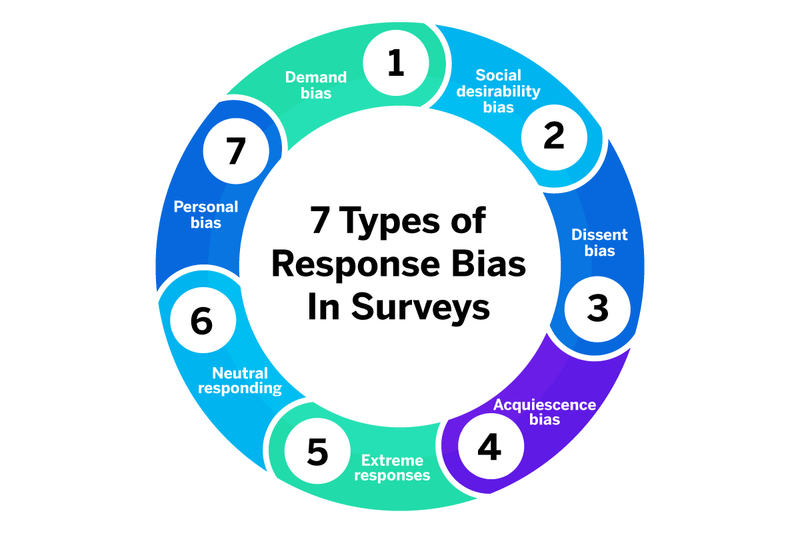

Different Types of Response Bias

The different types of response bias, and how to minimise each one, are discussed below. These biases can be understood better by applying the source evaluation method and by referring to a primary source or a secondary source.

Acquiescence Bias (or “Yes-Saying”)

Acquiescence bias occurs when respondents tend to agree with statements or answer ‘yes’ to questions, regardless of their actual opinion. This can particularly distort results in surveys that consist mostly of positive statements, as respondents may appear more agreeable or favourable than they truly are.

How to Minimise

Ensure a balance of positive and negative statements in the survey. Also, include a neutral response option where appropriate.
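To show why balancing positive and negative statements helps, here is a minimal Python sketch. It assumes a 1-5 agreement scale and uses hypothetical item names: each positively worded statement is paired with a negatively worded one, the negative items are reverse-scored before computing an attitude score, and the raw agreement across both items of a pair serves as a rough acquiescence indicator.

```python
# Hypothetical item pairs: each positively worded statement has a
# negatively worded counterpart measuring the same attitude.
PAIRS = [
    ("enjoys_sunny_days", "prefers_rainy_days"),
    ("likes_team_work", "prefers_working_alone"),
]

SCALE_MAX = 5  # assumed 1-5 scale (1 = strongly disagree, 5 = strongly agree)


def reverse_score(value: int, scale_max: int = SCALE_MAX) -> int:
    """Reverse-code a negatively keyed item so high values mean the same thing."""
    return scale_max + 1 - value


def acquiescence_index(answers: dict[str, int]) -> float:
    """Mean raw agreement across both items of every pair.

    A consistent respondent who agrees with one item should disagree with its
    opposite, so the raw mean sits near the midpoint (3). A value well above
    the midpoint suggests yes-saying.
    """
    raw = [answers[item] for pair in PAIRS for item in pair]
    return sum(raw) / len(raw)


def construct_score(answers: dict[str, int]) -> float:
    """Attitude score after reverse-coding the negatively keyed items."""
    scored = [answers[pos] for pos, _ in PAIRS]
    scored += [reverse_score(answers[neg]) for _, neg in PAIRS]
    return sum(scored) / len(scored)


consistent = {"enjoys_sunny_days": 5, "prefers_rainy_days": 1,
              "likes_team_work": 4, "prefers_working_alone": 2}
yes_sayer = {"enjoys_sunny_days": 5, "prefers_rainy_days": 5,
             "likes_team_work": 5, "prefers_working_alone": 5}

print(acquiescence_index(consistent), construct_score(consistent))  # 3.0 4.5
print(acquiescence_index(yes_sayer), construct_score(yes_sayer))    # 5.0 3.0
```

Because the scale is balanced, the yes-sayer's attitude score falls to the neutral midpoint instead of the maximum, and the high acquiescence index flags the response pattern for review.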

Social Desirability Bias

Social desirability bias comes into play when respondents give answers they believe will be viewed favourably by others. They might over-report behaviours considered ‘good’ and under-report those considered ‘undesirable’ in order to present themselves positively.

How to Minimise

Assure respondents of the confidentiality of their responses. Employ indirect questioning or third-person techniques to make respondents more comfortable.

Recall Bias

In recall bias, the respondent’s memory of past events is inaccurate: they might forget details or remember events differently from how they happened. This is particularly common in longitudinal studies or health surveys that ask about past behaviours or occurrences.

How to Minimise

Use shorter time frames for recall or provide aids (e.g., calendars) to help jog respondents’ memories.

Anchoring Bias

In anchoring bias, respondents rely too heavily on the first piece of information they encounter (the “anchor”) and adjust their responses insufficiently away from it. For instance, if a survey asks how many books respondents read in the past month and offers examples (“like 10, 20, or 30 books”), respondents may anchor their answers around those numbers.

How to Minimise

Avoid providing unnecessary examples or information that could act as anchors.

Extreme Responding

Some people are prone to choose the most extreme response options, whether on a scale from 1-5 or 1-10. This might give the impression of very strong opinions, even if the respondent does not feel that strongly.

How to Minimise

Use balanced scales with clear delineations between options and provide a neutral midpoint.
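Extreme responding can also be screened for after data collection. The Python sketch below assumes a 1-5 scale and computes, for each respondent, the share of answers that sit at either end of the scale; the 0.8 cut-off and the respondent data are hypothetical. A flagged respondent is a candidate for review, not proof of bias, since some people genuinely hold strong views.

```python
SCALE_MIN, SCALE_MAX = 1, 5  # assumed 1-5 scale


def extreme_response_share(responses: list[int]) -> float:
    """Share of a respondent's answers at either end point of the scale."""
    if not responses:
        raise ValueError("no responses to score")
    extremes = sum(1 for r in responses if r in (SCALE_MIN, SCALE_MAX))
    return extremes / len(responses)


def flag_extreme_responders(data: dict[str, list[int]],
                            threshold: float = 0.8) -> list[str]:
    """IDs whose extreme-response share meets the (arbitrary) threshold."""
    return [rid for rid, resp in data.items()
            if extreme_response_share(resp) >= threshold]


survey = {
    "r1": [1, 5, 5, 1, 5],  # every answer at an end point
    "r2": [2, 4, 3, 5, 2],
    "r3": [3, 3, 4, 2, 3],
}
print(flag_extreme_responders(survey))  # ['r1']
```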

Non-Response Bias

Non-response bias occurs when certain groups or individuals choose not to participate in a survey or skip specific questions. If the non-responders are systematically different from those who do respond, this can skew results.

How to Minimise

Encourage participation through reminders and incentives. Also, design surveys to be as engaging and concise as possible.
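A practical first check for non-response bias is to compare response rates across groups you know about for everyone invited (for example, from the sampling frame). The Python sketch below uses a small, hypothetical frame of age groups; a large gap between groups is a warning sign that respondents may differ systematically from non-responders, although it only matters if those groups also differ on the topic being surveyed.

```python
from collections import Counter

# Hypothetical sampling frame: (respondent_id, age_group, responded?)
frame = [
    ("a1", "18-34", False), ("a2", "18-34", False), ("a3", "18-34", True),
    ("a4", "18-34", False), ("b1", "35-54", True), ("b2", "35-54", True),
    ("b3", "35-54", False), ("c1", "55+", True), ("c2", "55+", True),
    ("c3", "55+", True),
]


def response_rates(frame):
    """Response rate per group: responders divided by people invited in that group."""
    invited = Counter(group for _, group, _ in frame)
    responded = Counter(group for _, group, ok in frame if ok)
    return {g: responded[g] / invited[g] for g in invited}


rates = response_rates(frame)
print({g: round(r, 2) for g, r in rates.items()})
# {'18-34': 0.25, '35-54': 0.67, '55+': 1.0}
```

Here younger people responded far less often, so unadjusted results would over-represent the views of older respondents.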

Leading Question Bias

Leading question bias arises when questions are worded to suggest a particular answer or to push respondents in a certain direction.

How to Minimise

Ensure questions are neutrally worded. Pre-test surveys with a sample group to identify potential leading questions.

Confirmation Bias

Strictly speaking, this is a cognitive bias on the researcher’s side rather than a response bias, but it is worth mentioning. When analysing results, researchers might look for data that confirm their beliefs or hypotheses and overlook data that contradict them.

How to Minimise

Approach data analysis with an open mind. Use blind analyses when appropriate, and have multiple people review the results.

Order Bias (or Sequence Bias)

The order in which questions or options are presented can influence responses. Respondents might focus more on the first or last items due to primacy and recency effects.

How to Minimise

Randomise the order of questions or response options for different respondents.
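As a rough illustration of randomising response options, the Python sketch below gives each respondent their own shuffled copy of a hypothetical option list. Seeding a private generator with the respondent ID is an assumption made here so that each person's order can be reproduced later for auditing, while the order still varies across respondents.

```python
import random

# Hypothetical, unordered (nominal) answer options.
OPTIONS = ["Price", "Quality", "Brand reputation", "Customer service"]


def options_for(respondent_id: str, options: list[str] = OPTIONS) -> list[str]:
    """Return a per-respondent shuffled copy of the options."""
    rng = random.Random(respondent_id)  # private generator; global random state untouched
    shuffled = list(options)            # copy so the master list keeps its order
    rng.shuffle(shuffled)
    return shuffled


for rid in ["r1", "r2", "r3"]:
    print(rid, options_for(rid))
```

This suits nominal lists like the one above. Ordered rating scales (for example, from “strongly disagree” to “strongly agree”) are normally kept in order, at most reversed for a random half of the sample, because shuffling them would confuse respondents.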

Halo Effect

If a respondent has a strong positive or negative feeling about one aspect of a subject, that feeling can influence their responses to related questions. For instance, someone who loves a brand might rate it highly across all metrics, even if they have had specific negative experiences.

How to Minimise

Design questions to be as specific as possible and separate unrelated concepts.

Causes of Response Bias

Response bias in surveys or questionnaires is a systematic pattern of deviation from the true response due to factors other than the actual subject of the study. Here are some causes of response bias:

Wording of Questions

The way questions are phrased can influence the way respondents answer them. Leading or loaded questions can prompt respondents to answer in a specific way.

Interviewer’s Behaviour or Appearance

How an interviewer behaves, and their personal characteristics, can influence respondents’ answers, especially in face-to-face interviews. For example, the respondent might try to please the interviewer or avoid conflict.


Recall Errors

Respondents might not remember past events or behaviours accurately, so surveys that ask about them are especially prone to biased answers.

Mood and Context of the Respondent

The environment, the timing, or the respondent’s mood or personal situation can affect their answers. For instance, someone might respond differently to a survey about job satisfaction on a day of heavy work stress than on a more relaxed day.

Order of Questions

The sequence in which questions are presented can influence how respondents answer subsequent questions. Earlier questions can set a context or frame of mind that affects answers to later questions. This effect can sometimes be due to the ceiling effect or even affinity bias.

Pressure to Conform

In some settings, especially when others are present or watching, respondents might feel pressure to give answers that conform with the majority view or that avoid standing out.

Lack of Anonymity

If respondents believe that their answers will be tied back to them, and they could face negative consequences, they might not answer truthfully.

Fatigue or Boredom

In lengthy surveys or interviews, respondents might get tired or bored and might not answer later questions with the same care and attention as earlier ones.

Misunderstanding of Questions

If respondents do not understand a question fully or interpret it differently than intended, their answers can be biased.

Differential Participation Rates

If certain groups are more or less likely to participate in a survey (e.g., because of its mode or topic), this can introduce bias if the non-participants would have answered questions differently from those who did participate.


Offering participation incentives might attract respondents with different opinions or characteristics than those who wouldn’t participate without the incentive.

Response Bias Examples

Some response bias examples are:

- When asked about charitable donations, a respondent might exaggerate the amount they have donated because they think it makes them look good, even if they have given little or nothing.

- A participant in a survey agrees with every statement presented to them without really considering the content, such as “I enjoy sunny days” followed by “I prefer rainy days.”

- On a performance review scale of 1 to 7, a manager consistently rates an employee as a ‘4’ for every trait, irrespective of the employee’s true performance.

- A person always uses the ends of a Likert scale (e.g., 1 or 7) regardless of the statement or their true feelings.

- In a feedback survey about a year-long course, a student only recalls and gives feedback on topics from the past few weeks.

- A person is asked to guess the number of candies in a jar after being told that the last guess was 500. They guess 520, even though their initial thought was 800, because the “500” anchored their response.

- In a survey about a product, the question is phrased as “How much did you love our product?” This can lead respondents to give a more positive answer than if they were asked neutrally.

- A survey about workplace conditions is sent out, but only those with strong opinions (either very positive or very negative) choose to respond. The middle-ground voices are not represented.

- During a face-to-face survey, the interviewer subtly nods when the respondent gives certain answers, unintentionally encouraging the respondent to answer in a particular direction. This can sometimes be a result of the Pygmalion effect, where the expectations of the interviewer influence the responses of the participants.

- On a list of ten movies to rate, respondents give higher ratings to the first and last movies they review, simply because of their positioning on the list.
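The manager who rates every trait ‘4’ shows a pattern that survey researchers often screen for as “straight-lining”: the same answer to every item in a grid. A minimal Python sketch of such a screen follows; the ratings are hypothetical, and a flagged respondent should be reviewed rather than discarded automatically, because a uniform pattern can occasionally be genuine.

```python
from statistics import pstdev


def is_straightliner(responses: list[int]) -> bool:
    """True if a respondent gave the identical answer to every item in a grid."""
    return len(responses) > 1 and len(set(responses)) == 1


def response_spread(responses: list[int]) -> float:
    """Population standard deviation of one respondent's answers (0 = no variation)."""
    return pstdev(responses)


manager_ratings = [4, 4, 4, 4, 4, 4]     # every trait rated '4' on a 1-7 scale
considered_ratings = [6, 3, 5, 4, 7, 2]  # ratings that vary by trait

print(is_straightliner(manager_ratings))     # True
print(is_straightliner(considered_ratings))  # False
```

The spread measure is useful for near-straight-lining too: a very small but non-zero value can also be worth a second look.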


How to Minimise Response Bias

Minimising response bias is crucial to obtaining valid and reliable data. Here are some strategies to minimise this bias:

- Assure participants that their responses will remain anonymous and confidential. If they believe their responses can’t be traced back to them, they may be more honest.

- Frame questions in a neutral manner to avoid leading the respondent towards a particular answer.

- Questions that imply a correct answer can skew results. For example, instead of asking, “Don’t you think that X is good?” ask, “What is your opinion on X?”

- For multiple-choice questions, randomising the order of response options can reduce order bias.

- If using Likert scales, offer a neutral middle option and make sure the scale is balanced with an equal number of positive and negative choices.

- Before the main study, conduct a pilot test to identify any potential sources of bias in the questions.

- If the survey or experiment is too long, respondents might rush through it without giving thoughtful answers. Consider breaking up long surveys or providing breaks.

- Assure participants that there are no right or wrong answers and emphasise the importance of honesty.

- If feasible, use multiple measures or methods to assess the same construct. This helps in triangulating the data and checking for consistency.

- If the study involves personal interviews, ensure interviewers are trained to ask questions consistently and without introducing their biases. Publication bias can also have an impact if only studies with certain results are being published and others are not.

- Ensure that participants understand the questions and how to respond. Clarify terms or concepts that might be misunderstood. Checking with a scholarly source can be a good way to ensure the quality of your questions.

- After collecting initial data, consider giving feedback to participants on their responses. This may help clarify or correct misunderstandings.

- After participants have completed the survey or experiment, debrief them to understand their thought processes. This can provide insights into potential sources of bias.

The data collection method you choose (e.g., face-to-face interviews, phone interviews, online surveys) can influence response bias. For example, some people might be more honest in an online survey than in a face-to-face interview.

Frequently Asked Questions

What is response bias?

Response bias refers to the tendency of participants to answer questions untruthfully or misleadingly. This can be due to social desirability, leading questions, or misunderstandings. It affects the validity of survey or questionnaire results, making them not truly representative of the participant’s actual thoughts or feelings.

What is response bias in statistics?

In statistics, response bias occurs when participants’ answers are systematically influenced by external factors rather than reflecting their true feelings, beliefs, or behaviours. This can arise from question-wording, survey method, or the desire to present oneself in a favourable light, potentially skewing the results and reducing data accuracy.

What is voluntary response bias?

Voluntary response bias occurs when individuals choose whether to participate in a survey or study, leading to non-random samples. Those with strong opinions or feelings are more likely to respond, skewing results. This lack of representativeness can lead to inaccurate conclusions that do not reflect the broader population’s true views or characteristics.
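A small worked example can make voluntary response bias concrete. The Python sketch below uses invented numbers: a population's opinions on a 1-5 scale and an assumed probability of responding for each opinion, with strong views far more likely to respond. It computes the expected make-up of the respondents directly, so no random sampling is involved.

```python
# Hypothetical population: number of people holding each opinion on a 1-5 scale.
population = {1: 100, 2: 200, 3: 400, 4: 200, 5: 100}

# Assumed probability of responding for each opinion: strong views respond more.
p_respond = {1: 0.5, 2: 0.1, 3: 0.05, 4: 0.1, 5: 0.5}


def mean_opinion(counts: dict[int, float]) -> float:
    """Average opinion score, weighting each score by how many people hold it."""
    total = sum(counts.values())
    return sum(score * n for score, n in counts.items()) / total


def share_middle(counts: dict[int, float]) -> float:
    """Fraction of people at the neutral midpoint (3)."""
    return counts[3] / sum(counts.values())


# Expected number of respondents at each opinion level.
expected_respondents = {s: n * p_respond[s] for s, n in population.items()}

print(share_middle(population), share_middle(expected_respondents))  # 0.4 0.125
print(mean_opinion(population), mean_opinion(expected_respondents))  # 3.0 3.0
```

The middle-ground share drops from 40% of the population to 12.5% of respondents, yet the mean is 3.0 in both cases: a single summary statistic can hide the distortion entirely.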

How to minimise response bias?

To minimise response bias: use neutral question wording, ensure anonymity, randomise question order, avoid leading or loaded questions, provide a range of response options, pilot test the survey, use trained interviewers, and educate participants about the importance of honesty and accuracy. Choosing appropriate survey methods also helps reduce bias.

How does randomisation in an experiment combat response bias?

Randomisation in an experiment assigns participants to groups at random, so that confounding variables tend to be evenly distributed across the groups. This reduces the likelihood that external factors, rather than the experimental variable, explain the outcome. Randomisation does not stop individuals from giving biased answers, but response bias that affects all groups equally is then less likely to distort comparisons between them, leading to more valid conclusions.
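The random assignment described above can be sketched in a few lines of Python. This is a minimal illustration, not a full allocation system: the participant IDs and group names are hypothetical, and the list is shuffled and then dealt into groups in turn so that group sizes differ by at most one. Which group a participant lands in depends only on the shuffle, not on anything about the participant.

```python
import random
from collections import Counter
from typing import Optional


def randomly_assign(participants: list[str], groups: list[str],
                    seed: Optional[int] = None) -> dict[str, str]:
    """Shuffle participants, then deal them into the groups in turn."""
    rng = random.Random(seed)   # private generator; a fixed seed makes the allocation auditable
    order = list(participants)  # copy so the caller's list is not reordered
    rng.shuffle(order)
    return {person: groups[i % len(groups)] for i, person in enumerate(order)}


participants = [f"p{i}" for i in range(10)]  # hypothetical participant IDs
assignment = randomly_assign(participants, ["treatment", "control"], seed=42)
print(Counter(assignment.values()))  # 5 participants in each group
```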


Response Bias: Definition and Examples


What is Response Bias?

Self-Reporting Issues

People tend to want to portray themselves in the best light, and this can affect survey responses. According to psychology professor Delroy Paulhus, response bias is a common occurrence in the field of psychology, especially when it comes to self-reporting on:

- Personal traits

- Attitudes, like racism or sexism

- Behavior, like alcohol use or unusual sexual behaviors.

Questionnaire Format Issues

Misleading questions can cause response bias, because the wording of a question may influence the way a person responds. For example, a person may be asked about their satisfaction with a recent online purchase and be presented with three options: very satisfied, satisfied, and dissatisfied. Because there is only one option for dissatisfaction (against two for satisfaction), the consumer may be less inclined to pick it. In some cases, the entire questionnaire may result in response bias. For example, one study showed that patients who are more satisfied tend to respond to surveys in higher numbers than patients who are dissatisfied, which leads to satisfaction levels being overestimated.

Other questionnaire format problems include:

- Unfamiliar content: the person may not have the background knowledge to fully understand the question.

- Fatigue: giving a survey when a person is tired or ill may affect their responses.

- Faulty recall: asking a person about an event that happened in the distant past may result in erroneous responses.

Many of the above issues can be averted by providing an opt-out choice like “undecided” or “not sure.”

Experimenter Bias (Definition + Examples)

In the early 1900s, a German high school teacher named Wilhelm von Osten believed that the intelligence of animals was underrated. To prove his point, he decided to teach his horse, Hans, some basic arithmetic. Clever Hans, as the horse came to be known, learned quickly: soon he could add, subtract, multiply, and divide, giving correct answers by tapping his hoof. It took scientists over a year to prove that the horse wasn’t doing the calculations himself. It turned out that Clever Hans was picking up subtle cues from his owner’s facial expressions and gestures.

Influencing the outcome of an experiment in this way is called "experimenter bias" or "observer-expectancy bias."

What is Experimenter Bias?

Experimenter bias occurs when a researcher either intentionally or unintentionally affects data, participants, or results in an experiment.

The phenomenon is also known as observer bias, information bias, research bias, expectancy bias, experimenter effect, observer-expectancy effect, experimenter-expectancy effect, and observer effect.

One of the leading causes of experimenter bias is the human inability to remain completely objective. Biases like confirmation bias and hindsight bias affect our judgment every day! In the case of experimenter bias, researchers may lean toward their original expectations about a hypothesis without being aware that they are making errors or treating participants differently. These expectations can influence how studies are structured, conducted, and interpreted, and they may make the results flawed or irrelevant. In this sense, experimenter bias is often a more specific case of confirmation bias.

Rosenthal and Fode Experiment

One of the best-known examples of experimenter bias is the experiment conducted by psychologists Robert Rosenthal and Kermit Fode in 1963.

Rosenthal and Fode asked two groups of psychology students to assess the ability of rats to navigate a maze. One group was told their rats were “bright”; the other was told they had been assigned “dull” rats. In fact, the rats were assigned at random, and no significant difference existed between them.

Interestingly, the students who were told their rats were maze-bright reported faster running times than those who did not expect their rodents to perform well. In other words, the students’ expectations directly influenced the obtained results.

Rosenthal and Fode’s experiment shows how the outcomes of a study can be modified as a consequence of the interaction between the experimenter and the subject.

However, experimenter-subject interaction is not the only source of experimenter bias. (It's not the only time bias may appear as one observes another person's actions. We are influenced by the actor-observer bias daily, whether or not we work in a psychology lab!)

Types of Experimenter Bias

Experimenter bias can occur in all study phases, from the initial background research and survey design to data analysis and the final presentation of results.

Design bias

Design bias is one of the most frequent types of experimenter bias. It happens when researchers establish a particular hypothesis and shape their entire methodology to confirm it. Rosenthal showed that 70% of experimenter biases influence outcomes in favor of the researcher’s hypothesis.

Example of Experimenter Bias (Design Bias)

An experimenter believes that separating men and women for long periods eventually makes them restless and hostile. It's a silly hypothesis, but it could be "proven" through design bias. Say a psychologist adopts this idea as their hypothesis. They measure participants' stress levels before the experiment begins. During the experiment, the participants are separated by gender and isolated from the world. Their diets are disrupted. Their routines are shifted. They have no access to their friends or family. Surely, they are going to get restless. The psychologist could argue that these results prove their point. But do they? The restlessness could just as easily come from the isolation and disrupted routines as from the separation by gender.

Not all examples of design bias are this extreme, but it shows how it can influence outcomes.

Sampling bias

Sampling or selection bias refers to choosing participants so that certain demographics are underrepresented or overrepresented in a study. Studies affected by the sampling bias are not based on a fully representative group.

The omission bias occurs when participants of certain ethnic or age groups are omitted from the sample. In the inclusive bias, on the contrary, samples are selected for convenience, such as all participants fitting a narrow demographic range.

Example of Experimenter Bias (Sampling Bias)

Philip Zimbardo created the Stanford Prison Experiment to answer the question, "What happens when you put good people in an evil place?" It is now one of the most infamous experiments in social psychology. But there is (at least) one problem with Zimbardo's attempt to answer such a vague question: he did not put all types of "good people" in an evil place. All the participants in the Stanford Prison Experiment were young men. Can 24 young men of similar age and background reflect the mindsets of all "good people"? Not really.

Procedural bias

Procedural bias arises when the way the experimenter carries out a study affects the results. For example, if participants are given only a short time to answer questions, their responses will be rushed and may not accurately reflect their opinions or knowledge.

Example of Experimenter Bias (Procedural Bias)

Once again, the Stanford Prison Experiment offers a good example of experimenter bias, although this one rests on an allegation rather than established fact. Years after the experiment made headlines, Zimbardo was accused of "coaching" the guards, allegedly encouraging them to act aggressively toward the prisoners. If this is true, then the guards' aggression reflects the procedure rather than the premise of the experiment. What happens when you put good people in an evil place and coach them to be evil?

Measurement bias

Measurement bias is a systematic error during the data collection phase of research. It can take place when the equipment used is faulty or when it is not being used correctly.

Example of Experimenter Bias (Measurement Bias)

Failing to calibrate scales can drastically change the results of a study! Another example is consistently rounding measurements up or down: if an experimenter is not exact with their measurements, they can skew the results. Bias does not have to be nefarious; it can simply be neglectful.
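The two measurement problems above can be compared in a short Python sketch. The true weights and the 1.5 kg offset of the uncalibrated scale are hypothetical; the bias is computed as the mean signed error between measured and true values.

```python
import math
from statistics import mean

# Hypothetical true body weights in kg.
true_weights = [61.2, 70.4, 58.6, 82.3, 66.3]

# Assumption: an uncalibrated scale that reads 1.5 kg heavy on every measurement.
SCALE_OFFSET = 1.5


def bias(measured, truth):
    """Mean signed error: positive means measurements run high on average."""
    return mean(m - t for m, t in zip(measured, truth))


uncalibrated = [w + SCALE_OFFSET for w in true_weights]
rounded_up = [math.ceil(w) for w in true_weights]  # always rounding up to a whole kg

print(round(bias(uncalibrated, true_weights), 2))  # 1.5
print(round(bias(rounded_up, true_weights), 2))    # 0.64
```

Both errors push every reading in the same direction, which is what makes them systematic: unlike random error, they do not cancel out when more measurements are averaged.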

Interviewer bias

Interviewers can consciously or subconsciously influence responses by providing additional information and subtle cues. As the rat-maze experiment showed, expectations can leak into results, and a subject's responses may lean towards the interviewer’s opinions.

Example of Experimenter Bias (Interviewer Bias)

Think about the difference between the following sets of questions:

- "How often do you bathe?" vs. "I'm sure you're very hygienic, right?"

- "On a scale from 1-10, how much pain did you experience?" vs. "Was the pain mild, moderate, or excruciating?"

- "Who influenced you to become kind?" vs. "Did your mother teach you to use manners?"

The differences between these questions are subtle. In some contexts, researchers may not consider them to be biased! If you are creating questions for an interview, be sure to consult a diverse group of researchers. Interview bias can come from our upbringing, media consumption, and other factors we cannot control!

Response bias

Response bias is a tendency to answer questions inaccurately. For instance, participants may give the answers they think are correct, or answers that are more socially acceptable than what they truly believe. Respondents are often subject to the Hawthorne effect, a phenomenon in which people make more effort and perform better in a study because they know they are being observed.

Example of Experimenter Bias (Response Bias)

The Asch line study is a well-known illustration of this bias. Solomon Asch designed the study to investigate conformity, and it shows how social pressure can distort responses. Participants sat among several "actors" working with the researcher and were asked which of several lines matched a reference line in length. On critical trials, every actor in the room answered incorrectly, and many participants went along with the wrong answer in order to conform. This is response bias, and it happens more often than you think.

Reporting bias

Reporting bias, also called selective reporting, arises when the nature of the results influences the dissemination of research findings. This type of bias is usually out of the researcher’s control. Even though studies with negative results can be just as significant as positive ones, the latter are much more likely to be reported, published, and cited by others.

Example of Experimenter Bias (Reporting Bias)

Why do we hear about the Stanford Prison Experiment more than other experiments? Reporting bias! The Stanford Prison Experiment is fascinating. The drama surrounding the results makes great headlines. Stanford is a prestigious school. There is even a movie about it! Yes, some biases went into the study. However, psychologists and content creators will continue discussing this experiment for many years.

How Can You Remove Experimenter Bias From Research?

Unfortunately, experimenter bias cannot be wholly stamped out as long as humans are involved in the experimental process. Our upbringing, education, and experience may always color how we gather and analyze data. However, the first step in controlling experimenter bias is making everyone involved in conducting experiments aware of it.

How Can Experimenter Bias Be Controlled?

One way to control experimenter bias is to intentionally put together a diverse team and encourage open communication about how to conduct experiments. The larger and more varied the group, the more perspectives are shared and the more likely biases are to be spotted. Biases should be considered at every step of the process.

Strategies to Avoid Experimenter Bias

Most modern experiments are designed to reduce the possibility of bias-distorted results. In general, biases can be kept to a minimum if experimenters are properly trained and clear rules and procedures are implemented.

There are several concrete ways in which researchers can avoid experimenter bias.

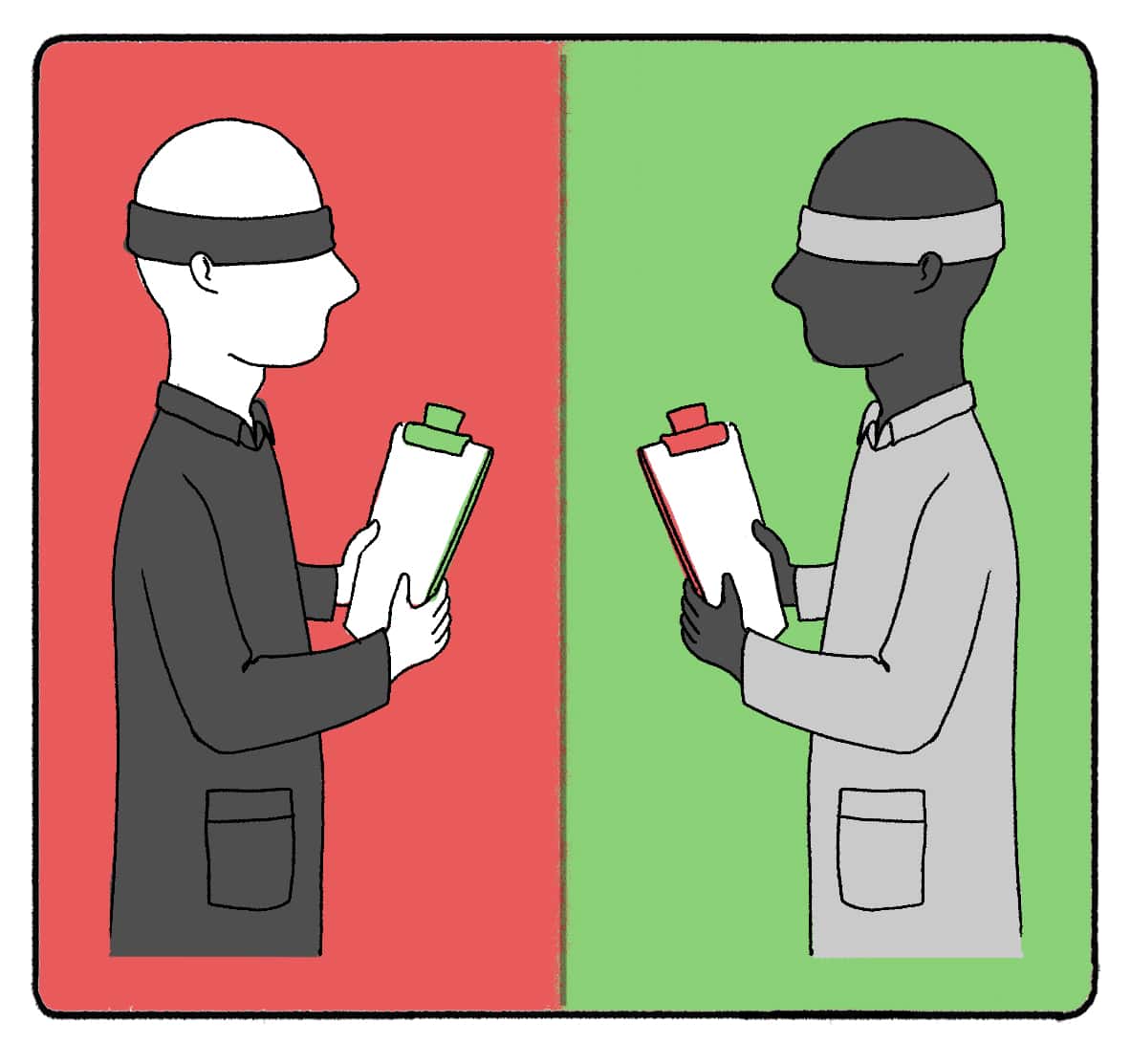

Blind analysis

A blind analysis is an effective way of reducing experimenter bias in many research fields. Information that could influence the outcome of the experiment is withheld from the analysts; sometimes researchers do not see the true results until they have completed the analysis. Similarly, when participants are unaware of the hypothesis, they cannot deliberately shape the outcome.
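One way to withhold the true result, used for example in some areas of physics, is to add a secret random offset to the quantity being estimated and remove it only once every analysis decision has been fixed. The Python sketch below illustrates the idea under stated assumptions: the data, the offset range, and the `BlindedEstimate` class are hypothetical, and in practice the offset would be generated and held by someone outside the analysis team.

```python
import random
from statistics import mean
from typing import Optional


class BlindedEstimate:
    """Hide a mean behind a secret random offset until the analysis is frozen.

    Analysts can debug code and check diagnostics on the blinded value, but
    they cannot steer analysis choices toward a hoped-for result, because the
    number they see is shifted by an amount they do not know.
    """

    def __init__(self, data: list[float], max_offset: float = 10.0,
                 seed: Optional[int] = None) -> None:
        self._data = list(data)
        self._offset = random.Random(seed).uniform(-max_offset, max_offset)

    def blinded(self) -> float:
        """The value analysts work with: true mean plus the hidden offset."""
        return mean(self._data) + self._offset

    def unblind(self) -> float:
        """The true mean, revealed only after all analysis decisions are fixed."""
        return mean(self._data)


result = BlindedEstimate([12.1, 11.8, 12.4, 12.0], seed=2023)
print(result.blinded())  # shifted value, meaningless on its own
print(result.unblind())  # true mean, revealed at the end
```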

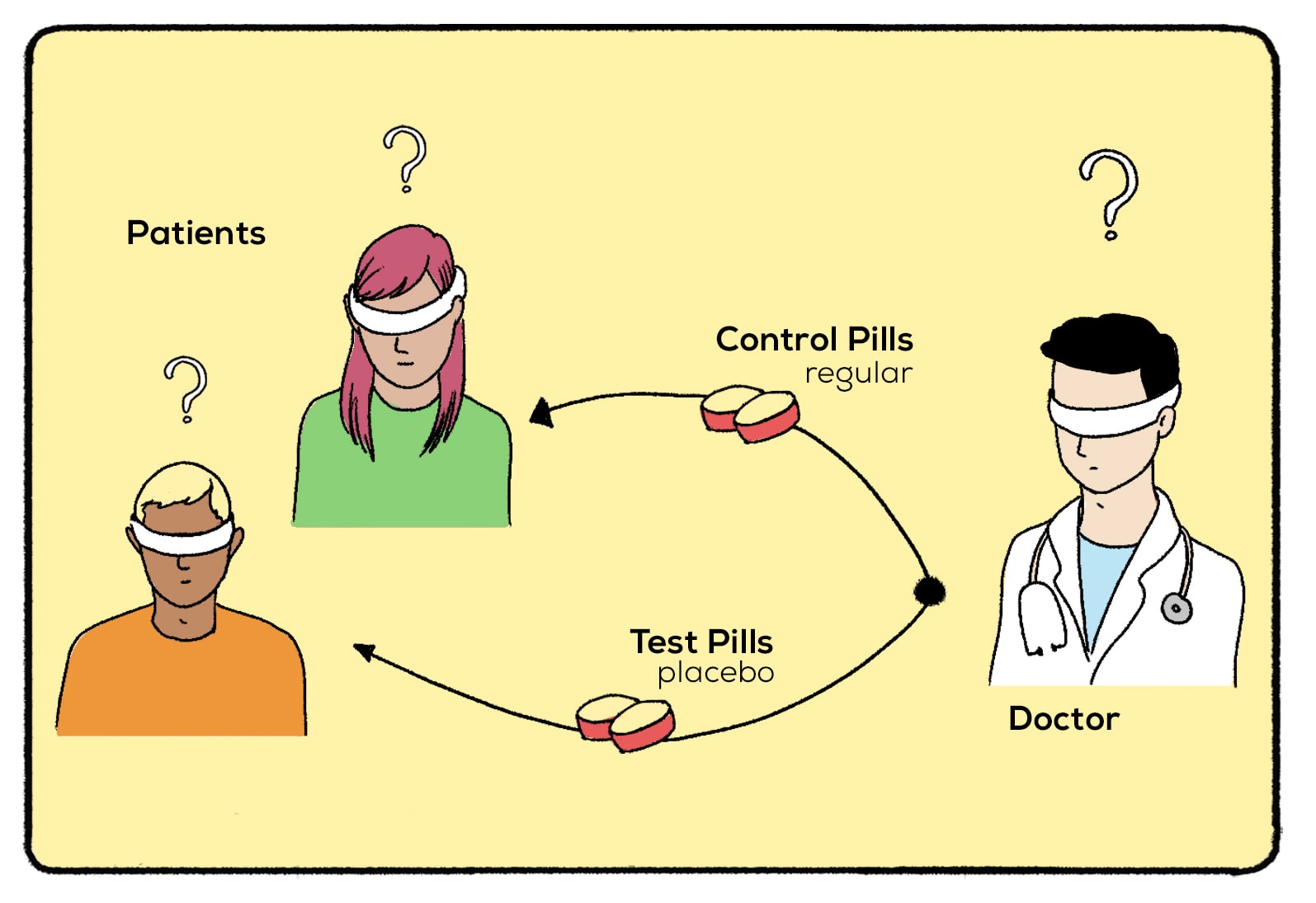

Double-blind study

Double-blind techniques are commonly used in clinical research. In contrast to an open trial, a double-blind study is designed so that neither the clinicians nor the patients know who is receiving the actual treatment and who is receiving a placebo. This reduces biases that arise from either side's expectations, such as interviewer bias.
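A minimal Python sketch of how a double-blind allocation might be organised: patients are randomised to “active” or “placebo”, but clinicians and patients only ever see a neutral kit code, while the key linking codes to treatments is kept separately until the trial ends. The kit-code format and the separate key holder are hypothetical details for illustration; in a real trial the active treatment and placebo must also look identical, which code cannot enforce.

```python
import random
from typing import Optional


def blinded_allocation(patient_ids: list[str], seed: Optional[int] = None):
    """Randomise patients to 'active' or 'placebo' behind neutral kit codes.

    Returns:
      kit_for_patient: patient ID -> kit code (all that clinicians and patients see)
      unblinding_key:  kit code -> arm (held separately until the trial ends)
    """
    rng = random.Random(seed)
    n = len(patient_ids)
    # Equal arms; with an odd number of patients the extra one gets 'active'.
    arms = ["active"] * (n - n // 2) + ["placebo"] * (n // 2)
    rng.shuffle(arms)

    kit_for_patient: dict[str, str] = {}
    unblinding_key: dict[str, str] = {}
    for i, (pid, arm) in enumerate(zip(patient_ids, arms), start=1):
        code = f"KIT-{i:03d}"  # hypothetical code format; carries no arm information
        kit_for_patient[pid] = code
        unblinding_key[code] = arm
    return kit_for_patient, unblinding_key


kits, key = blinded_allocation(["p1", "p2", "p3", "p4"], seed=7)
print(kits)  # {'p1': 'KIT-001', 'p2': 'KIT-002', 'p3': 'KIT-003', 'p4': 'KIT-004'}
```

Sequential kit codes reveal nothing here only because the arms were shuffled before being attached to them.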

Minimizing exposure

The less exposure respondents have to experimenters, the less likely they will pick up any cues that would impact their answers. One of the common ways to minimize the interaction between participants and experimenters is to pre-record the instructions.

Peer review

Peer review involves assessing work by individuals possessing comparable expertise to the researcher. Their role is to identify potential biases and thus make sure that the study is reliable and worthy of publication.

Understanding and addressing experimenter bias is crucial in psychological research and beyond. It reminds us that human perception and interpretation can significantly shape outcomes, whether it's Clever Hans responding to his owner's cues or students' expectations influencing their rats' performances.

Researchers can strive for more accurate, reliable, and meaningful results by acknowledging and actively working to minimize these biases. This awareness enhances the integrity of scientific research. It deepens our understanding of the complex interplay between observer and subject, ultimately leading to more profound insights into the human mind and behavior.



© PracticalPsychology. All rights reserved



What is response bias and how can you avoid it?

Response bias can hinder the results and success of your survey data. In this ultimate guide, we'll discover how to find it and prevent it before it's too late.

For a survey to provide usable data, it’s essential that responses are honest and unbiased.

The reason for this is simple: biased, misleading and dishonest responses lead to inaccurate information that prevents good decision making.

Indeed, if you can’t get an accurate overview of how respondents feel about something — whether it’s your brand, product, service or otherwise — how can you make the right decisions?

Now, when people respond inaccurately or falsely to questions, whether accidentally or deliberately, we call this response bias (also known as survey bias). And it’s a major problem when it comes to getting accurate survey data.

So how do you avoid response bias? In this guide, we’ll introduce the concept of response bias, what it is, why it’s a problem and how to reduce response bias in your future surveys.


Response bias definition

As mentioned, response bias is a general term that refers to conditions or factors that influence survey responses.

There are several reasons why a respondent might provide inaccurate responses, ranging from a desire to appear socially desirable and answer the way they think they ‘should’, to the nature of the survey and the questions asked.

Typically, response bias arises in surveys that focus on individual behavior or opinions — for example, their political allegiance or drinking habits. As perception plays a huge role in our lives, people tend to respond in a way they think is positive.

Using the drinking example, if a respondent was asked how often they consume alcohol and the options were: ‘frequently, sometimes and infrequently’, they’re more likely to choose sometimes or infrequently so they’re perceived positively.

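However, dishonest answers that don’t represent the views of your sample lead to inaccurate data. Because the distortion is systematic rather than random, it doesn’t cancel out as you scale your research: a larger sample simply gives you a more precise estimate of the wrong answer.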

Ultimately, this can have devastating effects on organizations that rely heavily on data-driven research, as it leads to poor decision-making. It can also damage an organization’s reputation if it’s known for publishing highly accurate reports.
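Because response bias pushes answers in the same direction, collecting more responses does not cancel it out the way random error does. The sketch below is a hypothetical simulation (the true mean of 6, the spreads and the shift of -2 are invented for illustration) that compares a systematic underreporting shift with purely random misreporting as the sample grows.

```python
import random
import statistics

TRUE_MEAN = 6.0  # hypothetical true average, e.g. drinks per week


def estimation_error(n, systematic_shift=0.0, noise_sd=0.0, seed=0):
    """Simulate n survey answers and return (sample mean - true mean).

    systematic_shift: the same distortion added to every answer (response bias).
    noise_sd: random, zero-mean misreporting that differs per respondent.
    """
    rng = random.Random(seed)
    answers = []
    for _ in range(n):
        true_value = rng.gauss(TRUE_MEAN, 3.0)  # assumed spread of true behavior
        reported = true_value + systematic_shift + rng.gauss(0.0, noise_sd)
        answers.append(reported)
    return statistics.fmean(answers) - TRUE_MEAN


for n in (100, 10_000, 100_000):
    biased = estimation_error(n, systematic_shift=-2.0)
    noisy = estimation_error(n, noise_sd=2.0)
    print(f"n={n:>7}: biased error={biased:+.3f}  noisy error={noisy:+.3f}")
```

As n grows, the biased error settles near -2 while the noisy error shrinks toward 0: more data reduces random error but leaves systematic bias untouched.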

Types of response bias

While ‘response bias’ is the widely understood term for biased or dishonest survey responses, there are actually several different types of response bias. Each one has the potential to skew or even ruin your survey data.

In this section, we’ll look at the different types of response bias and provide examples:

Social desirability bias

Social desirability bias often occurs when respondents are asked sensitive questions and — rather than answer honestly — provide what they believe is the more socially desirable response. The idea behind social desirability bias is that respondents overreport ‘good behavior’ and underreport ‘bad behavior’.

These socially desirable answers are often the result of poorly worded questions, leading questions or sensitive topics such as alcohol consumption, personal income, intellectual achievements, patriotism, religion, health and charitable giving.

In poorly worded or leading surveys, questions are posed in a way that encourages respondents to provide a specific answer. One of the most obvious examples of a question that will trigger social desirability bias is: “Do you think it’s appropriate to drink alcohol every day?”

Even if respondents want to answer honestly, they’re likely to respond in a more socially acceptable way.

Social desirability bias also affects questions about prosocial behavior. For example, asking people whether everyone should donate part of their salary to charity is almost certain to generate a positive response, even from respondents who don’t donate themselves.

Non-response bias

Non-response bias, which is sometimes called late response bias, occurs when people who don’t respond to a survey question differ significantly from those who do.

This type of bias is common in analytical or experimental research, for example studies assessing health and wellbeing.

Non-response bias is tricky for researchers precisely because the experiences or outcomes of those who don’t respond could differ wildly from those of people who do. As a consequence, the results may over- or underrepresent a particular perspective.

For example, imagine you’re conducting psychiatric research to analyze and diagnose major depression in a population. The behaviors of those who respond could be vastly different from those who don’t, so your results end up overrepresenting the perspective of the people who answered.

In this example, there’s no way to make an accurate assumption or fully understand what the survey data is telling you.
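The depression example can be made concrete with a small worked calculation. The numbers below are hypothetical: we assume 20% of the population is affected, that affected people respond at a rate of 30% and everyone else at 60%. The estimate computed from respondents alone then falls well below the true prevalence.

```python
def observed_prevalence(prevalence, rr_affected, rr_unaffected):
    """Prevalence seen among respondents when response rates differ by group.

    prevalence: true share of the population that is affected.
    rr_affected / rr_unaffected: assumed response rates for each group.
    """
    responding_affected = prevalence * rr_affected
    responding_unaffected = (1 - prevalence) * rr_unaffected
    return responding_affected / (responding_affected + responding_unaffected)


true_prevalence = 0.20
estimate = observed_prevalence(true_prevalence, rr_affected=0.30, rr_unaffected=0.60)
print(f"true: {true_prevalence:.3f}, observed among respondents: {estimate:.3f}")
# true: 0.200, observed among respondents: 0.111
```

If both groups respond at the same rate, the two figures match, which is why the problem is differential response rather than low response as such.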

Demand bias

Demand bias (also called demand characteristics, or sometimes survey bias) occurs when participants change their behaviors or views because they believe they know, or actually do know, the research agenda.

Demand characteristics are problematic because they can bias your research findings and arise from several sources. Think of these as clues about the research hypotheses:

- The title of the study

- Information about the study or rumors

- How researchers interact with participants

- Study procedure (order of tasks)

- Tools and instruments used (e.g. cameras, apparel)

All of these demand characteristics place hidden demands on participants to respond in a particular way once they perceive them. For example, in one classic experiment published in Psychosomatic Medicine, researchers examined whether demand characteristics and expectations could influence the menstrual cycle symptoms reported by study participants.

Some participants were informed of the purpose of the study, while the rest were left unaware. The informed participants were significantly more likely to report negative premenstrual and menstrual symptoms than participants who were unaware of the study’s purpose.

The survey method itself can also raise the risk of demand characteristics, for example in structured interviews where another person asks the questions in person, or in group research sessions.

As a result of the above, rather than present their true feelings, respondents are more likely to give a more socially acceptable answer — or an answer which they believe is what the researchers want them to say.

Extreme response bias

Extreme response bias occurs when the respondents answer a question with an extreme view, even if they don’t have an extreme opinion on the subject.

This bias is most common in satisfaction surveys. Typically, respondents are asked to rank or rate something (an experience, the quality of service or a product) on a 5-point scale and choose the highest or lowest option, even if it doesn’t reflect their true stance.

For example, with extreme response bias, if you asked respondents to rate the quality of service they received at a restaurant out of 5, they’re much more likely to say 5 or 1, rather than give a neutral response.

Similarly, a respondent might strongly disagree with a given statement, even if they have no strong feelings towards the topic.

Extreme responding can often occur due to a willingness to please the person asking the question or due to the wording of the question, which triggers a response bias and pushes the respondent to answer in a more extreme way than they otherwise would.

For example: “We have a 5-star customer satisfaction rating. Would you agree that we provide a good service to customers?”
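One simple way to screen for extreme responding is to compute, for each respondent, the share of answers at either end of the scale. The sketch below assumes a 5-point scale by default (pass `scale_max=7` for a 1 to 7 scale); the 0.8 cut-off, function names and respondent IDs are hypothetical choices, not a standard.

```python
def extreme_response_share(answers, scale_min=1, scale_max=5):
    """Fraction of a respondent's answers that sit at either end of the scale."""
    if not answers:
        raise ValueError("no answers to score")
    extremes = sum(1 for a in answers if a in (scale_min, scale_max))
    return extremes / len(answers)


def flag_extreme_responders(responses, threshold=0.8, scale_min=1, scale_max=5):
    """Return IDs of respondents whose extreme-answer share meets the threshold."""
    return [
        rid
        for rid, answers in responses.items()
        if extreme_response_share(answers, scale_min, scale_max) >= threshold
    ]


responses = {
    "r1": [5, 5, 1, 5, 1],
    "r2": [4, 3, 5, 2, 4],
    "r3": [5, 5, 5, 4, 5],
}
print(flag_extreme_responders(responses))  # ['r1', 'r3']
```

A high share is only a flag for review: someone who genuinely loved or hated every aspect of a service would also score high.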

Neutral responses

As you can guess, neutral response bias is the opposite of extreme response bias and occurs when a respondent simply provides a neutral response to every question.

Neutral responses typically occur as a result of the participant being uninterested in the survey and/or pressed for time. As a result, they answer the questions as quickly as possible.

For example, if you’re conducting research about the development of HR technology but send it to a sample of the general public, they’re unlikely to be interested and will probably aim to complete the survey as quickly as possible.

This is why it’s so important that your research methodology takes into consideration the sample and the nature of your survey before you put it live.
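Neutral responding and rushing often show up as a high share of midpoint answers, or as "straight-lining" (identical answers down a whole grid of questions). A minimal, hypothetical screening sketch, assuming an odd-length scale such as 1 to 5:

```python
def midpoint_share(answers, scale_min=1, scale_max=5):
    """Fraction of answers at the scale midpoint (odd-length scales only)."""
    if (scale_min + scale_max) % 2:
        raise ValueError("scale has no single midpoint")
    midpoint = (scale_min + scale_max) // 2
    return sum(1 for a in answers if a == midpoint) / len(answers)


def is_straightliner(answers):
    """True if every answer in the block is identical."""
    return len(set(answers)) == 1


rushed = [3, 3, 3, 3, 3, 3]
engaged = [4, 2, 3, 5, 3, 4]
print(midpoint_share(rushed), is_straightliner(rushed))    # 1.0 True
print(midpoint_share(engaged), is_straightliner(engaged))  # 0.3333333333333333 False
```

Neither measure proves a respondent was disengaged, so flagged cases are better reviewed alongside other signals (such as completion time) than dropped automatically.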

Acquiescence bias

Acquiescence bias is like an extreme form of social desirability, but instead of responding in a ‘socially acceptable’ way, respondents simply agree with research statements — regardless of their own opinion.

To put it simply, acquiescence bias is based on respondents’ perceptions of how they think the researcher wants them to respond, leading to potential demand effects. For example, respondents might acquiesce and respond favorably to new ideas or products because they think that’s what a market researcher wants to hear.

Similarly, depending on how a question is asked or how an interviewer reacts, respondents can infer cues as to how they should respond.


Dissent bias

Dissent bias, as its name suggests, is the complete opposite of acquiescence bias in that respondents simply disagree with the statements they’re presented with, rather than give true opinions.

Sometimes dissent bias is intentional and genuinely reflects a respondent’s views; more often, it reflects a lack of attention or a desire to get through the survey faster.

Dissent bias can negatively affect your survey results, and you’ll need to consider your survey design or question wording to avoid it.
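A common design remedy for both acquiescence and dissent bias is a balanced scale: pair positively worded items with negatively worded (reverse-keyed) items about the same thing. A respondent with a genuine view agrees with one kind and disagrees with the other, whereas a yea-sayer agrees with both. The sketch below assumes a 1 to 5 agreement scale and equal numbers of each item type; the function names and answers are hypothetical.

```python
def acquiescence_index(raw_answers, scale_min=1, scale_max=5):
    """Mean raw agreement across a balanced item set, centred on the midpoint.

    With equal positive and negative items, genuine opinions roughly cancel,
    so positive values suggest yea-saying and negative values suggest dissent.
    """
    midpoint = (scale_min + scale_max) / 2
    return sum(raw_answers) / len(raw_answers) - midpoint


def trait_score(pos_answers, neg_answers, scale_min=1, scale_max=5):
    """Content score after reverse-coding the negatively worded items."""
    reversed_neg = [scale_min + scale_max - a for a in neg_answers]
    combined = list(pos_answers) + reversed_neg
    return sum(combined) / len(combined)


yea_pos, yea_neg = [5, 4, 5], [5, 5, 4]  # agrees with everything
con_pos, con_neg = [5, 4, 5], [1, 2, 1]  # consistent, genuine view
print(round(acquiescence_index(yea_pos + yea_neg), 2), trait_score(yea_pos, yea_neg))  # 1.67 3.0
print(acquiescence_index(con_pos + con_neg), round(trait_score(con_pos, con_neg), 2))  # 0.0 4.67
```

Reverse-coding lets the yea-sayer's agreement cancel out in the trait score, while the acquiescence index exposes the pattern separately.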

Voluntary response bias

Voluntary response bias occurs when your sample is made up of people who have volunteered to take part in the survey.

While this isn’t inherently detrimental to your survey or data collection, it can result in a study that overreports on one aspect as you’re more likely to have a highly opinionated sample.

Examples include call-in radio shows that solicit audience participation in surveys or discussions on controversial topics (e.g. abortion, affirmative action). Similarly, if your sample is composed of people who all feel the same way about a particular issue or topic, you’ll overreport on specific aspects of that issue or topic.

This kind of bias can make it particularly difficult to generate accurate results, as voluntary samples tend to overrepresent one particular side.
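The effect can be illustrated with expected counts. Everything below is a hypothetical assumption: a population whose opinions on a 1 to 5 scale are symmetric, and volunteering rates that are highest among strong opponents, as might happen with a call-in poll on a controversial topic.

```python
# Hypothetical population: number of people holding each opinion on a 1-5 scale
# (1 = strongly against, 5 = strongly in favor).
population = {1: 100, 2: 200, 3: 400, 4: 200, 5: 100}

# Assumed probability that someone with each opinion volunteers to respond.
volunteer_rate = {1: 0.30, 2: 0.05, 3: 0.02, 4: 0.05, 5: 0.10}


def expected_volunteers(population, volunteer_rate):
    """Expected number of volunteers at each opinion level."""
    return {op: n * volunteer_rate[op] for op, n in population.items()}


def mean_opinion(counts):
    """Average opinion score, weighted by the number of people at each level."""
    total = sum(counts.values())
    return sum(op * n for op, n in counts.items()) / total


def extreme_share(counts, extremes=(1, 5)):
    """Share of people holding one of the extreme opinions."""
    total = sum(counts.values())
    return sum(counts[e] for e in extremes) / total


volunteers = expected_volunteers(population, volunteer_rate)
print(f"population: mean={mean_opinion(population):.2f}, extreme share={extreme_share(population):.2f}")
print(f"volunteers: mean={mean_opinion(volunteers):.2f}, extreme share={extreme_share(volunteers):.2f}")
```

With these assumed numbers, the volunteer sample's mean opinion drops from 3.00 to about 2.41 and the share of extreme views rises from 0.20 to about 0.59, even though nobody answered dishonestly.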

Cognitive bias

Cognitive bias is a subconscious error in thinking that leads people to misinterpret information from the world around them, affecting their rationality and accuracy of decisions. This includes trying to alter facts to fit a personal view or looking at information differently to align with predetermined thoughts.

For example, a customer who has had negative experiences with products like yours is more likely to respond negatively to questions about your product, even if they’ve never used it.

Cognitive biases can also manifest in several ways, from how we put more emphasis on recent events (recency bias) to irrational escalation — e.g. how we tend to justify increased investment in a decision, especially when it’s something we want. Empathy and social desirability are also considered cognitive biases as they alter how we respond to questions.

Having this response bias in your data collection can lead you to over- or underrepresent certain groups, influencing how decisions are made and which ones.

How to reduce, avoid and prevent response bias

Whether it’s response bias as a result of overrepresenting a certain sample, the way questions are worded or otherwise, it can quickly become a problem that can compromise the validity of your study.

Having said that, it can be largely avoided, especially if you have the right survey tools, methodologies and software in place. And Qualtrics can help.

With Qualtrics CoreXM, you have an all-in-one solution for everything from simple surveys to comprehensive market research. Empower everyone in your organization to carry out research projects, improve your research quality, reduce the risk of response bias and start generating accurate results from any survey type.

Reach the right respondents wherever they are with our survey and panel management tools. Then, leverage our data analytics capabilities to uncover trends and opportunities from your data.

Plus, you can use our free templates that provide hundreds of carefully created questions that further reduce response bias from your survey and ensure you’re analyzing data that will promote better business decisions.


Why do we give false survey responses?

Response bias

What is response bias?

The response bias refers to our tendency to provide inaccurate, or even false, answers to self-report questions, such as those asked on surveys or in structured interviews.

Where this bias occurs

Researchers who rely on participant self-report methods for data collection are faced with the challenge of structuring questionnaires in a way that increases the likelihood of respondents answering honestly. Take, for example, a researcher investigating alcohol consumption on college campuses through a survey administered to the student population. In this case, a major concern would be ensuring that the survey is neutral and non-judgmental in tone. If the survey comes across as disapproving of heavy alcohol consumption, respondents may be more likely to underreport their drinking, leading to biased survey results.

Individual effects

When this bias occurs, we come up with an answer based on external factors, such as societal norms or what we think the researcher wants to hear. This prevents us from taking time to self-reflect and think about how the topic being assessed is actually relevant to us. Not only is this a missed opportunity for critical thinking about oneself and one’s actions, but, in the case of research, it results in the provision of inaccurate data.

Systemic effects

Researchers need to proceed with caution when designing surveys or structured interviews in order to minimize the likelihood of respondents committing response bias. If they fail to do so, this systematic error could be detrimental to the entire study. Instead of progressing knowledge, biased results can lead researchers to draw inaccurate conclusions, which can have wide implications. Research is expensive to conduct and the questions under investigation tend to be of importance. For these reasons, tremendous effort is required in research design to ensure that all findings are as accurate as possible.

Why it happens

Response bias can occur for a variety of reasons. To categorize the possible causes, different forms of response bias have been defined.

Social desirability bias

First is social desirability bias, which refers to when sensitive questions are answered not with the truth, but with a response that conforms to societal norms. While there’s no real “right” answer to the survey question, social expectations may have deemed one viewpoint more acceptable than the other. In order to conform with what we feel is the appropriate stance, we tend to under- or over-report our own position.

Demand characteristics

Second are demand characteristics. This is when we try to predict how the researcher wants us to answer and adjust our survey responses to match. Simply being part of a study can influence the way we respond. Anything from our interactions with the researcher to the extent of our knowledge about the research topic can affect our answers. This is why it’s such a challenge for the principal investigator to design a study that eliminates, or at least minimizes, this bias.

Acquiescence bias

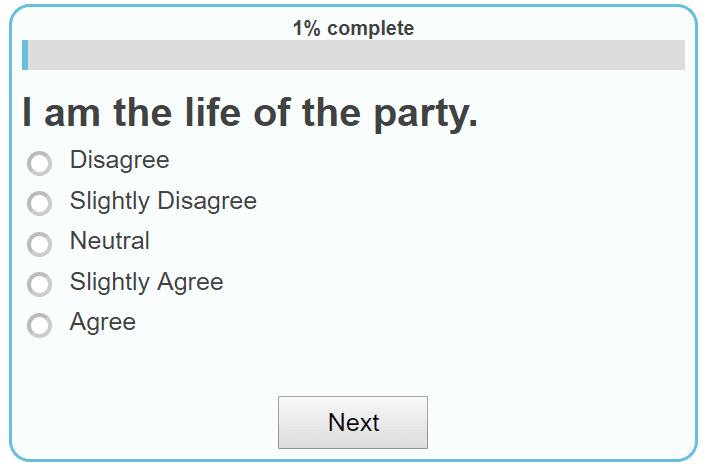

Third is acquiescence bias, which is the tendency to answer “Yes” or “Agree” to “Yes/No” or “Agree/Disagree” questions. This may occur because we are striving to please the researcher or, as posited by Cronbach [1], because we are motivated to call to mind information that supports the given statement. He suggests that we selectively focus on information that agrees with the statement and unconsciously ignore any memories that contradict it.

Extreme responding

A final example of response bias is extreme responding. It’s commonly seen in surveys that use Likert scales: a scaled response format with several possible responses ranging from the most negative to the most positive. Responses are biased when respondents select the extreme options almost exclusively; that is, if the Likert scale ranges from 1 to 7, they only ever answer 1 or 7. This can happen when respondents are uninterested and don’t feel like taking the time to actively consider the options. Other times, it happens because demand characteristics have led the participant to believe that the researcher desires a certain response.

Why it is important

In order to conduct well-designed research and obtain the most accurate results possible, academics must have a comprehensive understanding of response bias. However, it’s not just researchers who need to understand this effect. Most of us have participated, or will go on to participate, in research of some kind, even if it’s as simple as filling out a quick online survey. By being aware of this bias, we can work on being more critical and honest in answering these kinds of questions, instead of responding automatically.

How to avoid it

By knowing about response bias and answering surveys and structured interviews actively, instead of passively, respondents can help researchers by providing more accurate information. However, when it comes to reducing the effects of this bias, the onus is on the creator of the questionnaire.

Wording is of particular importance when it comes to combating response bias. Leading questions can prompt survey-takers to respond in a certain way, even if it’s not how they really feel. For example, in a customer-satisfaction survey a question like “Did you find our customer service satisfactory?” subtly leans towards a more favorable response, whereas asking the respondent to “Rate your customer service experience” is more neutral.

Emphasizing the anonymity of the survey can help reduce social desirability bias, as people feel more comfortable answering sensitive questions honestly when their names aren’t attached to their answers. Utilizing a professional, non-judgemental tone is also important for this.

To avoid bias from demand characteristics, participants should be given as little information about the study as possible. Say, for example, you’re a psychologist at a university investigating gender differences in shopping habits. A question on this survey might be something like: “How often do you go clothing shopping?”, with the following answer choices: “At least once a week”, “At least once a month”, “At least once a year”, and “Every few years”. If your participants figure out what you’re researching, they may answer differently than they otherwise would have.

Many of us resort to response bias, specifically extreme responding and acquiescence bias, when we get bored. This is because it’s easier than putting in the effort to actively consider each statement. For that reason, it’s important to factor in length when designing a survey or structured interview. If it’s too long, participants may zone out and respond less carefully, thereby giving less accurate information.

How it all started

Interestingly, response bias wasn’t originally considered much of an issue. Gove and Geerken claimed that "response bias variables act largely as random noise," which doesn’t significantly affect the results as long as the sample size is big enough [2]. They weren’t the only researchers to try to quell concerns over this bias, but more recently it has become increasingly recognized as a genuine source of concern in academia. This is due to the large body of research supporting the presence of an effect, for example Furnham’s literature review [3]. Knäuper and Wittchen’s 1994 study also demonstrates this bias, specifically in the context of standardized diagnostic interviews administered to the elderly, who engage in a form of response bias by tending to attribute symptoms of depression to physical conditions [4].

Example 1 - Depression

An emotion-specific response bias has been observed in patients with major depression, as evidenced by a study conducted by Surguladze et al. in 2004 [5]. The results of this study showed that patients with major depression had greater difficulty discriminating between happy and sad faces presented for a short duration than did the healthy control group. This discrimination impairment wasn’t observed when facial expressions were presented for a longer duration. On these longer trials, patients with major depression exhibited a response bias towards sad faces. Because the discrimination impairment and the response bias did not occur on the same trials, one can’t be attributed to the other.
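Separating discrimination from response bias is usually done with signal detection theory, which we assume here for illustration rather than as a description of the study's exact analysis. Sensitivity d' and criterion c are computed from hit and false-alarm rates; "sad" is treated as the signal, and the rates below are invented.

```python
from statistics import NormalDist


def sdt_measures(hit_rate, false_alarm_rate):
    """Return (d_prime, criterion) from hit and false-alarm rates.

    hit_rate: share of sad faces labelled 'sad'.
    false_alarm_rate: share of happy faces labelled 'sad'.
    d_prime measures discrimination; a negative criterion means a bias
    toward answering 'sad'. Rates must be strictly between 0 and 1.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    z_hit, z_fa = z(hit_rate), z(false_alarm_rate)
    d_prime = z_hit - z_fa
    criterion = -(z_hit + z_fa) / 2
    return d_prime, criterion


# Two hypothetical observers with similar discrimination but different bias.
for label, hits, false_alarms in [("unbiased", 0.84, 0.16), ("sad-biased", 0.93, 0.31)]:
    d, c = sdt_measures(hits, false_alarms)
    print(f"{label}: d'={d:.2f}, c={c:.2f}")
```

The second observer says "sad" more often to both kinds of face, so d' barely changes while c becomes clearly negative; that is the pattern described above as a response bias towards sad faces.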

Understanding this emotion-specific response bias allows for further insight into the mechanisms of major depression, particularly into the impairments in social functioning associated with the disorder. It’s been suggested that the bias towards sad stimuli may cause people with major depression to interpret situations more negatively [6].

Researchers working outside of mental health should be aware of this bias as well, so that they know to screen for major depression should their survey include questions pertaining to emotion or interpersonal interactions.

Example 2 - Social media

Social media is a useful tool, thanks to both its versatility and its wide reach. However, while most of the surveys used in academic studies have gone through rigorous scrutiny and have been peer-reviewed by experts in the field, this isn’t always the case with social media polls.

Many businesses administer surveys over social media to gauge their audience’s views on a certain matter. There are many reasons why the results of these kinds of polls should be taken with a grain of salt; for one thing, the sample is almost certainly not random. In these situations, response bias is also likely at play.

Take, for example, a poll conducted by a makeup company, where the question is “How much did you love our new mascara?”, with the possible answers: “So much!” and “Not at all.” This is a leading question, which essentially asks for a positive response. Additionally, respondents may be prone to commit acquiescence bias in order to please the company, since there’s no option for a middle-ground response. Even if results of this survey are overwhelmingly positive, you might not want to immediately splurge on the mascara. The positive response could have more to do with the structure of the survey than with the quality of the product.

Response bias describes our tendency to provide inaccurate responses on self-report measures.

Social pressures, disinterest in the survey, and eagerness to please the researcher are all possible causes of response bias. Furthermore, the design of the survey itself can prompt participants to adjust their responses.

Example 1 - Major depression

People with major depression are more likely to identify a given facial expression as sad than people without major depression. This can impact daily interpersonal interactions, in addition to influencing responses on surveys related to emotion-processing.

Example 2 - Interpreting social media surveys

Surveys that aren’t designed to prevent response bias provide misleading results. For this reason, social media surveys, which can be created by anyone, shouldn’t be taken at face value.

When filling out a survey, actively considering each response, instead of answering automatically, can decrease the extent to which we engage in response bias. Anyone conducting research should take care to craft surveys that are anonymous, that are neutral in tone, that provide sufficient answer options, and that don’t give away too much about the research question.


- Cronbach, L. J. (1942). Studies of acquiescence as a factor in the true-false test. Journal of Educational Psychology, 33(6), 401–415. doi: 10.1037/h0054677

- Gove, W. R., & Geerken, M. R. (1977). Response bias in surveys of mental health: An empirical investigation. American Journal of Sociology, 82(6), 1289–1317. doi: 10.1086/226466

- Furnham, A. (1986). Response bias, social desirability and dissimulation. Personality and Individual Differences, 7(3), 385–400. doi: 10.1016/0191-8869(86)90014-0

- Knäuper, B., & Wittchen, H.-U. (1994). Diagnosing major depression in the elderly: Evidence for response bias in standardized diagnostic interviews? Journal of Psychiatric Research, 28(2), 147–164. doi: 10.1016/0022-3956(94)90026-4

- Surguladze, S. A., Young, A. W., Senior, C., Brébion, G., Travis, M. J., & Phillips, M. L. (2004). Recognition accuracy and response bias to happy and sad facial expressions in patients with major depression. Neuropsychology, 18(2), 212–218. doi: 10.1037/0894-4105.18.2.212

About the Authors

Dan is a Co-Founder and Managing Director at The Decision Lab. He is a bestselling author of Intention - a book he wrote with Wiley on the mindful application of behavioral science in organizations. Dan has a background in organizational decision making, with a BComm in Decision & Information Systems from McGill University. He has worked on enterprise-level behavioral architecture at TD Securities and BMO Capital Markets, where he advised management on the implementation of systems processing billions of dollars per week. Driven by an appetite for the latest in technology, Dan created a course on business intelligence and lectured at McGill University, and has applied behavioral science to topics such as augmented and virtual reality.

Dr. Sekoul Krastev

Sekoul is a Co-Founder and Managing Director at The Decision Lab. He is a bestselling author of Intention - a book he wrote with Wiley on the mindful application of behavioral science in organizations. A decision scientist with a PhD in Decision Neuroscience from McGill University, Sekoul's work has been featured in peer-reviewed journals and has been presented at conferences around the world. Sekoul previously advised management on innovation and engagement strategy at The Boston Consulting Group as well as on online media strategy at Google. He has a deep interest in the applications of behavioral science to new technology and has published on these topics in places such as the Huffington Post and Strategy & Business.


Understanding the 6 Types of Response Bias (With Examples)

June 10, 2019

Cameron Johnson

In this guide, we’ll break down one of the biggest challenges researchers face when surveying an audience: response bias. You need to know exactly what response bias is, what causes it and, crucially, how to avoid it in your surveys. In this article, we cover it all:

- What is response bias?

- Why does response bias matter?

- What types of response bias are there?

- How do you get rid of response bias?

- Which survey tools should your company use?

What Is Response Bias?

Response bias refers to the various conditions and biases that can influence survey responses. The bias can be intentional or accidental, but either way it makes survey data less useful because the responses are inaccurate. This can become a particular issue with self-reported surveys. Response bias is a concern in any survey because it determines the quality of the data, so avoiding it is essential if you want meaningful responses.

Leading bias is one of the more common types. For example, suppose your question asks about customer satisfaction, and the options given are Very Satisfied, Satisfied and Dissatisfied. Because two options are positive and only one is negative, the scale nudges respondents toward a favorable answer. To avoid bias here, you could balance the options by including two positive and two negative choices, such as Very Satisfied, Satisfied, Dissatisfied and Very Dissatisfied.

Why Does Response Bias Matter?

A survey is a powerful tool for businesses because it gathers data and opinions from real members of the target audience, which can give a more accurate assessment of market position and performance than trial-and-error testing. Response bias undermines that advantage: if the answers are skewed, the data no longer reflects what the audience actually thinks. When the goal of the survey is data collection, the right sample size and survey methodology matter most.

What Types of Response Bias Are There?

The key to avoiding response bias is to fully understand how it happens. There are several types of response bias that can affect your surveys, and recognizing each one helps you avoid bias as you create a survey, rather than spotting it later. Even with this understanding, it is always wise to have several people review any survey design for possible causes of response bias before it is sent to respondents. This helps make the resulting data as accurate as possible. We cover the main types of response bias here, with examples that show just how easy it is to introduce bias into a survey.

1) Demand Characteristics

Demand bias, one of the more common types of response bias, arises when respondents are influenced simply by being part of the study: they change their behavior and opinions as a result of taking part. This can happen for several reasons, and it is important to understand each one to deal with this form of response bias.

Participants who look to understand the purpose of the survey

For instance, if the survey is looking into the user experience of a website, a participant may see that the aim is to gather data to support changes to layout or content. The participant may then answer in a way that supports those changes, rather than as they really think, resulting in untruthful or inaccurate responses.

The setting of the survey or study

This is more applicable to surveys carried out in person, where the researchers conducting the survey can influence respondents, but it can apply to digital surveys too.

Interaction between researcher and respondent

This can influence how respondents approach the survey. Note that researcher-to-respondent interaction is still possible in a digital survey: it occurs in the email or message used to invite the respondent to participate.

Wording bias can come into effect here as well

This type of bias can influence the entire range of responses, from individual or multiple participants. For instance, if the researcher knows the participant personally, even greeting them in a friendly manner can have a subconscious effect on the responses. This is as true in an email as it is in person, so keeping a formal approach with all participants, regardless of who they are, helps ensure that everyone receives the same treatment.

Prior knowledge of the survey

Whether it concerns the questions themselves, the general aims of the survey, or how it is being put together, prior knowledge of any aspect of the survey can introduce response bias. Participants can become preoccupied with the survey itself, second-guessing their own answers and providing inaccurate responses as a result.

2) Social Desirability Bias

Maintain survey integrity

Participants second-guessing the research motives, or learning them before taking the survey, can both introduce response bias. Avoid this by maintaining the integrity of the survey and ensuring participants do not receive additional information. To check whether participants have any understanding of the survey's motives, you can use a short post-survey questionnaire.

6) Avoid Emotionally Charged Terms

Your questions should be clear, precise, and easily understandable. That means simple, unbiased language that avoids words that evoke an emotional (rather than thought-based) response. This includes words such as:

And so on. You want answers that are thought through, and these so-called ‘lightning’ words elicit an emotional response instead, which is less valuable for your research. In addition, try to avoid using a lot of negative words, as these can affect how the participant perceives the survey and skew their responses. Finally, avoid word tricks: be transparent with the audience and allow them to develop their answers.

All of these are relatively simple steps toward better survey results. Reducing response bias will help you collect accurate, unbiased data that reflects the real views of your audience.

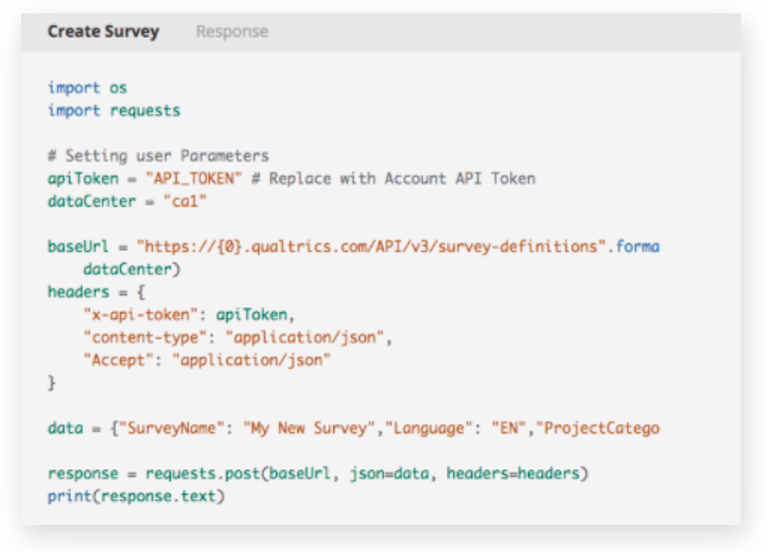

What Survey Tools Should Your Company Use?

Even if you know the various types of response bias, you still need to monitor the survey for problems and inaccurate data. Nextiva has two tools designed to do this for you, providing performance combined with ease of use for seamless integration into your workflow.

Nextiva Survey Analytics

Survey Analytics provides a comprehensive feature set that tracks survey response data throughout your research and presents the data you need in a simple, clear, visual form. Through this interface you can drill down to get a complete performance picture, analyzing results right down to the individual respondent if necessary. All data is easily accessible, saving time and frustration. With a visual representation of aggregate responses to any question, you can quickly identify trends and anomalies as they occur.

Nextiva Surveys

A complete software solution for all your surveys, Nextiva Surveys provides the perfect platform for all your research. With a simple, fast design solution, your surveys will look great. And full customization ensures they always reflect your brand image.

With no coding required, you can put together beautiful, rich surveys in just a few minutes, saving time and money without sacrificing quality or control. There are templates for all types of questions, complete security, and features including skip logic for a personalized experience. Nextiva Surveys has all the tools you need to create effective surveys that avoid response bias. Surveys are platform-independent, so you can deliver them in an email or via the web, and the responsive design provides an exceptional user experience even on mobile devices. When it comes to surveys free of response bias, Nextiva has your back: with our easy-to-use survey software, creating effective surveys that avoid response bias is just a few clicks away. Try them now and see how easy surveys can be!

ABOUT THE AUTHOR

Cameron Johnson was a market segment leader at Nextiva. Along with his well-researched contributions to the Nextiva Blog, Cameron has written for a variety of publications including Inc. and Business.com. Cameron was recently recognized as Utah's Marketer of the Year.

- Oct 18, 2023

Response Bias Project Makeover

Leigh Nataro teaches elementary statistics, math for business, and math for teaching at Moravian University in Bethlehem, PA. Leigh has been an AP Exam Reader and Table Leader and was on the AP Statistics Instructional Design Team, where she helped to tag items for the AP Classroom question bank. In addition to leading AP Statistics workshops, Leigh leads in-person and virtual Desmos workshops with Amplify. Leigh can be reached on Twitter at @mathteacher24.

A picture of a pizza is shown to a student and then the student is randomly assigned to answer one of two questions.

Question 1: Would you eat this pizza?

Question 2: Would you eat this vegan pizza?

Does telling a person that a food is vegan affect their response? This was the experiment a pair of my students created for one of the most engaging projects in AP Statistics: the Response Bias Project. Students design an experiment to see whether they can purposefully bias the responses to a question.

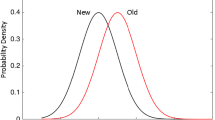

Does inserting the word “vegan” into this question while showing the exact same picture bias the results? Here are the results obtained by the students. What do you think?

In my original iteration of this project, students would create various colorful (and often glitter-laden) graphs to compare the results.

But how likely would it be to get these results by chance if no bias was present?

To answer this question, The Response Bias Project needed a makeover.

The Makeover: Include a Simulation

One of the most challenging AP Stats topics for students is estimating p-values from simulations. This is one of the reasons we do a simulation on the first day of class with playing cards called “Hiring Discrimination: It Just Won’t Fly”. (An online version of the scenario and simulation can be found here: Hiring Discrimination.) Several times during the first semester, we use playing cards to perform simulations and then interpret the results to determine if a given claim is convincing. Although we never formally call the results a p-value, examining the dotplots from simulated results sets the stage for tests of significance in the second half of the course. Making over this project with a simulation leads to more robust conclusions and reinforces the idea of a p-value based on simulations.

Originally my students used the free trial of Fathom to create their simulations. However, in recent years Fathom has not been supported on newer Mac operating systems. This led me to investigate the Common Online Data Analysis Platform, or CODAP. This is a free online tool for data analysis, and it is specifically designed for use with students in grades 6-14. Students can save their work in Google Drive, but an account is not required to access CODAP.

To help students understand what they need to do to create their simulation, I share an instructional video related to one of the projects. For the vegan pizza project, the video is CODAP for Response Bias Project and the accompanying CODAP file is Vegan Pizza Simulation.

Based on their results from the simulation, the students determined whether adding "vegan" created biased results or whether it was possible to get results like those in their experiment by chance alone. Students display their work on posters that are hung around the room. (A sample of posters is included at the end of the blog.) Then half the class stands by their posters and presents to the few students in front of them. This takes about five minutes. Then the students move on to another poster and pair of presenters. Each pair gives its presentation about three or four times to different small groups of peers. There are no PowerPoints and no notecards, which means less nervousness and stress for students, and the format gives them practice with the more informal presentations they might need to give in the future. Another benefit is that students see multiple instances of simulated results, which helps lay the foundation for the concept of a p-value in later units of the course.

Why Is This Makeover Helpful?

Although students learn about some common AP Statistics topics earlier in their math careers, simulation is new and often challenging for many students. Creating the simulation in CODAP helps students understand what each dot in the simulation represents and how the overall distribution shows more likely and less likely outcomes. Students also identify where the value of their statistic (the count of yes answers) falls on the dotplot. They are essentially answering the test of significance question: “Assuming there was no bias, how many times did the observed outcome or a more extreme outcome occur by chance alone?” Reading about the results on their classmates' posters and hearing about them in multiple presentations solidifies the concept of using probabilities from simulations to draw conclusions.
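For readers who want to see the same logic outside CODAP, here is a minimal Python sketch of one common way to run this kind of simulation: pool all the yes/no answers, assume the wording had no effect, re-deal the answers at random into a group the size of the "vegan" group, and count how often that group gets as few yes answers as observed, or fewer. The function names, the one-sided direction, and the counts are assumptions for illustration only; the students' actual results appear only in the post's graph, and the CODAP file may be set up differently.

```python
import random

def simulate_yes_counts(total_yes, total_n, group_n, trials=1000, seed=0):
    # Assumes no bias: each student's answer would be the same under either
    # wording, so which group an answer lands in depends only on random assignment.
    rng = random.Random(seed)
    answers = [1] * total_yes + [0] * (total_n - total_yes)  # 1 = "yes", 0 = "no"
    counts = []
    for _ in range(trials):
        rng.shuffle(answers)                   # re-randomize who got which question
        counts.append(sum(answers[:group_n]))  # yes count in the re-dealt group
    return counts

def estimate_p_value(counts, observed):
    # Proportion of simulated yes counts at or below the observed count
    # (one-sided: "vegan" is assumed to lower the number of yes answers).
    return sum(c <= observed for c in counts) / len(counts)

# HYPOTHETICAL counts, for illustration only (not the students' data).
plain_yes, plain_n = 22, 30
vegan_yes, vegan_n = 14, 30

counts = simulate_yes_counts(plain_yes + vegan_yes, plain_n + vegan_n, vegan_n,
                             trials=1000, seed=1)
p = estimate_p_value(counts, vegan_yes)
print(f"Estimated p-value: {p:.3f}")
```

Each entry in `counts` plays the role of one dot on the CODAP dotplot; a small estimated p-value suggests results this extreme would rarely occur by chance alone if the word "vegan" had no effect.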

The Concept of Simulations from the AP Statistics Course and Exam Description

Skill 3.A: Determine relative frequencies, proportions, or probabilities using simulation or calculations.

UNC.2.A.4 Simulation is a way to model random events, such that simulated outcomes closely match real-world outcomes. All possible outcomes are associated with a value to be determined by chance. Record the counts of simulated outcomes and the count total.

UNC.2.A.5 The relative frequency of an outcome or event in simulated or empirical data can be used to estimate the probability of that outcome or event.
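To illustrate UNC.2.A.4 and UNC.2.A.5 together, the Python sketch below uses a hypothetical chance model (ten fair coin flips, not part of the original post) whose exact probability is known, so the relative frequency from the simulation can be checked against it. The function names are hypothetical.

```python
import random
from math import comb

def relative_frequency(event, simulate_once, trials, seed=0):
    # UNC.2.A.4: run the chance model repeatedly and record how many simulated
    # outcomes satisfy the event, along with the total count of simulations.
    rng = random.Random(seed)
    hits = sum(event(simulate_once(rng)) for _ in range(trials))
    return hits, trials, hits / trials  # UNC.2.A.5: relative frequency as estimate

def ten_flips(rng):
    # Each flip is assigned a value by chance: 1 = heads, 0 = tails.
    return sum(rng.randint(0, 1) for _ in range(10))

hits, total, estimate = relative_frequency(lambda heads: heads >= 8, ten_flips,
                                           trials=10_000)
exact = sum(comb(10, k) for k in range(8, 11)) / 2**10  # 56/1024 = 0.0546875
print(f"{hits} of {total} simulations -> estimate {estimate:.4f}; exact {exact:.4f}")
```

With more trials, the relative frequency tends to settle closer to the exact probability of 56/1024.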

Note: On the 2023 AP Statistics Operational Exam, determining a probability based on data from a simulation was part of free-response question 6.