Attention Span

Elizabeth Levin & Jennifer Bernier

Sustained attention; Vigilance

Attention span refers to an individual’s ability to attend to a stimulus or object over a period of time. This ability is also known as sustained attention or vigilance.

Description

Attention comprises several components. One of them, attention span, is the ability to maintain focus and alertness over a period of time. Sustained attention requires persistence and motivation [2]. Thus, individuals with short attention spans may appear to give up or to put insufficient effort into tasks. Attention span increases with age and is related to, and plays a role in, other aspects of functioning, including learning, memory, academic performance, and the understanding and processing of large quantities of information [1, 3].

Research has shown that a child’s sustained attention develops in a linear fashion until the age of four, but then undergoes a dramatic increase between the ages of 4 and 6 years [ 1 ]. Between the ages of 7 and 8 years...


References

Barkley, R. A. (1997). Behavioral inhibition, sustained attention, and executive function: Constructing a unified theory of ADHD. Psychological Bulletin, 121(1), 65–94.

Bennett Murphy, L., Murphy, E. C., & Rose, C. L. (2001). Sustained attention and unintentional injury among preschool-aged children. Child Neuropsychology, 7(2), 72–83.

Betts, J., McKay, J., Maruff, P., & Anderson, V. (2006). The development of sustained attention in children: The effect of age and task load. Child Neuropsychology, 12, 205–221.

Christakis, D. A., Zimmerman, F. J., DiGiuseppe, D. L., & McCarty, C. A. (2004). Early television exposure and subsequent attention problems in children. Pediatrics, 113, 708–713.

DeShazo Barry, T., Grofer Klinger, L., Lyman, R. D., Bush, D., & Hawkins, L. (2001). Visual selective attention versus sustained attention in boys with Attention-Deficit/Hyperactivity Disorder. Journal of Attention Disorders, 4(4), 193–202.

Rose, C. L., Murphy, L. B., Schickedantz, B., & Tucci, J. (2001). The effects of event rate and signal probability on children’s vigilance. Journal of Clinical and Experimental Neuropsychology, 23(2), 215–224.

Ruff, H. A., & Capozzoli, M. C. (2003). Development of attention and distractibility in the first 4 years of life. Developmental Psychology, 39(5), 877–890.

Author information

Authors and affiliations.

Department of Psychology, Laurentian University, Ramsey Lake Road, Sudbury, ON, P3E2S9, Canada

Elizabeth Levin & Jennifer Bernier


Editor information

Editors and affiliations.

Neurology, Learning and Behavior Center, 230 South 500 East, Suite 100, Salt Lake City, Utah, 84102, USA

Sam Goldstein Ph.D.

Department of Psychology MS 2C6, George Mason University, Fairfax, VA, 22030, USA

Jack A. Naglieri Ph.D. (Professor of Psychology)


Copyright information

© 2011 Springer Science+Business Media, LLC

About this entry

Cite this entry.

Levin, E., Bernier, J. (2011). Attention Span. In: Goldstein, S., Naglieri, J.A. (eds) Encyclopedia of Child Behavior and Development. Springer, Boston, MA. https://doi.org/10.1007/978-0-387-79061-9_226


DOI : https://doi.org/10.1007/978-0-387-79061-9_226

Publisher Name : Springer, Boston, MA

Print ISBN : 978-0-387-77579-1

Online ISBN : 978-0-387-79061-9

eBook Packages : Behavioral Science


- Published: 03 March 2022

A brain-based general measure of attention

- Kwangsun Yoo ORCID: orcid.org/0000-0002-5213-4575 1 ,

- Monica D. Rosenberg ORCID: orcid.org/0000-0001-6179-4025 1 , 2 ,

- Young Hye Kwon ORCID: orcid.org/0000-0001-7754-4223 1 ,

- Qi Lin ORCID: orcid.org/0000-0001-9702-8584 1 ,

- Emily W. Avery ORCID: orcid.org/0000-0002-8481-3978 1 ,

- Dustin Scheinost ORCID: orcid.org/0000-0002-6301-1167 3 ,

- R. Todd Constable ORCID: orcid.org/0000-0001-5661-9521 3 , 4 , 5 &

- Marvin M. Chun ORCID: orcid.org/0000-0003-1070-7993 1 , 4 , 6 , 7

Nature Human Behaviour volume 6 , pages 782–795 ( 2022 ) Cite this article

4689 Accesses

11 Citations

75 Altmetric

Metrics details

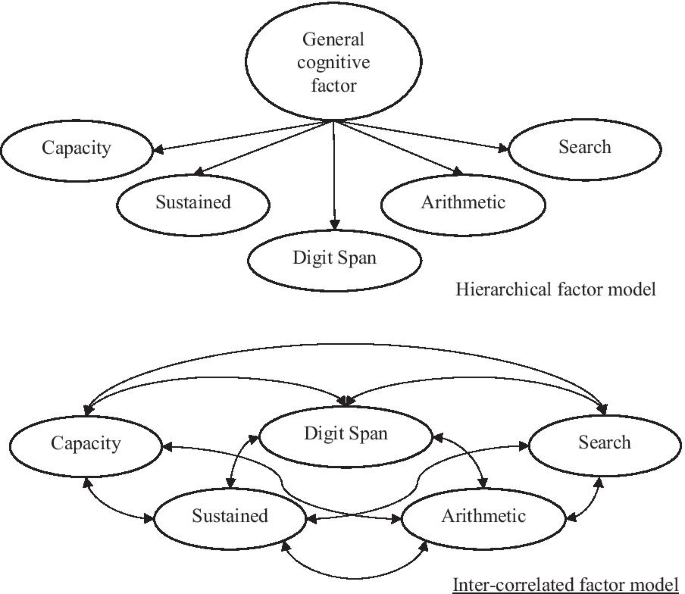

- Network models

Attention is central to many aspects of cognition, but there is no singular neural measure of a person’s overall attentional functioning across tasks. Here, using original data from 92 participants performing three different attention-demanding tasks during functional magnetic resonance imaging, we constructed a suite of whole-brain models that can predict a profile of multiple attentional components (sustained attention, divided attention and tracking, and working memory capacity) for novel individuals. Multiple brain regions across the salience, subcortical and frontoparietal networks drove accurate predictions, supporting a common (general) attention factor across tasks, distinguished from task-specific ones. Furthermore, connectome-to-connectome transformation modelling generated an individual’s task-related connectomes from rest functional magnetic resonance imaging, substantially improving predictive power. Finally, combining the connectome transformation and general attention factor, we built a standardized measure that shows superior generalization across four independent datasets (total N = 495) of various attentional measures, suggesting broad utility for research and clinical applications.
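The kind of whole-brain predictive model the abstract refers to can be illustrated with a generic connectome-based predictive modelling (CPM) loop. The following is a minimal sketch under stated assumptions, not the authors' pipeline: the function name `cpm_loocv`, the threshold `r_thresh`, the leave-one-out scheme and the synthetic data are all hypothetical choices made for illustration.

```python
import numpy as np

def cpm_loocv(connectomes, behavior, r_thresh=0.3):
    """Leave-one-out CPM sketch (illustrative, not the published code).

    connectomes: (n_subjects, n_edges) vectorized functional connectivity.
    behavior:    (n_subjects,) behavioural scores.
    r_thresh:    hypothetical edge-selection threshold on the correlation.
    """
    n = len(behavior)
    predicted = np.zeros(n)
    for test in range(n):
        train = np.arange(n) != test
        X, y = connectomes[train], behavior[train]
        # Correlate every edge with behaviour across the training subjects.
        Xc = X - X.mean(axis=0)
        yc = y - y.mean()
        denom = np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())
        r = (Xc * yc[:, None]).sum(axis=0) / denom
        pos, neg = r > r_thresh, r < -r_thresh
        # Summarize each subject as positive minus negative network strength.
        strength = X[:, pos].sum(axis=1) - X[:, neg].sum(axis=1)
        slope, intercept = np.polyfit(strength, y, 1)
        test_strength = connectomes[test, pos].sum() - connectomes[test, neg].sum()
        predicted[test] = slope * test_strength + intercept
    return predicted

# Synthetic example: behaviour depends on the first five edges plus noise.
rng = np.random.default_rng(0)
n_sub, n_edges = 60, 100
fc = rng.normal(size=(n_sub, n_edges))
score = fc[:, :5].sum(axis=1) + rng.normal(scale=0.5, size=n_sub)
pred = cpm_loocv(fc, score)
r_obs_pred = np.corrcoef(score, pred)[0, 1]
```

Edge selection is repeated inside every fold so that the held-out participant never influences which edges enter the model; this is what makes the prediction apply to a novel individual.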


Data availability

Raw task and rest fMRI data used in the primary analyses (n = 92) are available at https://doi.org/10.15154/1520622.

Code availability

Scripts for constructing the predictive models (the general attention model, the C2C model and CPM) are available for download at https://github.com/rayksyoo/General_Attention. Scripts for the other (statistical) analyses are available from the corresponding author upon request.

Chun, M. M., Golomb, J. D. & Turk-Browne, N. B. A taxonomy of external and internal attention. Annu. Rev. Psychol. 62 , 73–101 (2011).

Article PubMed Google Scholar

Weissman, D. H., Roberts, K. C., Visscher, K. M. & Woldorff, M. G. The neural bases of momentary lapses in attention. Nat. Neurosci. 9 , 971–978 (2006).

Article CAS PubMed Google Scholar

Heinrichs, R. W. & Zakzanis, K. K. Neurocognitive deficit in schizophrenia: a quantitative review of the evidence. Neuropsychology 12 , 426–445 (1998).

Biederman, J., Newcorn, J. & Sprich, S. Comorbidity of attention deficit hyperactivity disorder with conduct, depressive, anxiety, and other disorders. Am. J. Psychiatry 148 , 564–577 (1991).

Levin, H. S. et al. Neurobehavioral outcome following minor head injury: a three-center study. J. Neurosurg. 66 , 234–243 (1987).

Rosenberg, M. D. et al. Functional connectivity predicts changes in attention observed across minutes, days, and months. Proc. Natl Acad. Sci. U. S. A. 117 , 3797–3807 (2020).

Article CAS PubMed PubMed Central Google Scholar

Kucyi, A. et al. Prediction of stimulus-independent and task-unrelated thought from functional brain networks. Nat. Commun. 12 , 1793 (2021).

Deary, I. J., Penke, L. & Johnson, W. The neuroscience of human intelligence differences. Nat. Rev. Neurosci. 11 , 201–211 (2010).

Miyake, A. et al. The unity and diversity of executive functions and their contributions to complex ‘frontal lobe’ tasks: a latent variable analysis. Cogn. Psychol. 41 , 49–100 (2000).

Huang, L., Mo, L. & Li, Y. Measuring the interrelations among multiple paradigms of visual attention: an individual differences approach. J. Exp. Psychol. Hum. Percept. Perform. 38 , 414–428 (2012).

Corbetta, M. & Shulman, G. L. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3 , 215–229 (2002).

Article CAS Google Scholar

Kanwisher, N. & Wojciulik, E. Visual attention: insights from brain imaging. Nat. Rev. Neurosci. 1 , 91–100 (2000).

Rosenberg, M. D., Finn, E. S., Scheinost, D., Constable, R. T. & Chun, M. M. Characterizing attention with predictive network models. Trends Cogn. Sci. 21 , 290–302 (2017).

Rosenberg, M. D. et al. A neuromarker of sustained attention from whole-brain functional connectivity. Nat. Neurosci. 19 , 165–171 (2016).

Wu, E. X. W. et al. Overlapping attentional networks yield divergent behavioral predictions across tasks: neuromarkers for diffuse and focused attention? Neuroimage 209 , 116535 (2020).

Kucyi, A., Hove, M. J., Esterman, M., Hutchison, R. M. & Valera, E. M. Dynamic brain network correlates of spontaneous fluctuations in attention. Cereb. Cortex 27 , 1831–1840 (2017).

PubMed Google Scholar

Shen, X. et al. Using connectome-based predictive modeling to predict individual behavior from brain connectivity. Nat. Protoc. 12 , 506–518 (2017).

Finn, E. S. et al. Functional connectome fingerprinting: identifying individuals using patterns of brain connectivity. Nat. Neurosci. 18 , 1664–1671 (2015).

Woo, C. W., Chang, L. J., Lindquist, M. A. & Wager, T. D. Building better biomarkers: brain models in translational neuroimaging. Nat. Neurosci. 20 , 365–377 (2017).

Gratton, C. et al. Defining individual-specific functional neuroanatomy for precision psychiatry. Biol. Psychiatry 88 , 28–39 (2020).

Cohen, J. R. & D’Esposito, M. The segregation and integration of distinct brain networks and their relationship to cognition. J. Neurosci. 36 , 12083–12094 (2016).

Yoo, K. et al. Multivariate approaches improve the reliability and validity of functional connectivity and prediction of individual behaviors. Neuroimage 197 , 212–223 (2019).

Rosenberg, M. D. et al. Methylphenidate modulates functional network connectivity to enhance attention. J. Neurosci. 36 , 9547–9557 (2016).

Rosenberg, M. D., Hsu, W.-T., Scheinost, D., Todd Constable, R. & Chun, M. M. Connectome-based models predict separable components of attention in novel individuals. J. Cogn. Neurosci. 30 , 160–173 (2018).

Yoo, K. et al. Connectome-based predictive modeling of attention: comparing different functional connectivity features and prediction methods across datasets. Neuroimage 167 , 11–22 (2018).

Lin, Q. et al. Resting-state functional connectivity predicts cognitive impairment related to Alzheimer’s disease. Front. Aging Neurosci. 10 , 94 (2018).

Article PubMed PubMed Central Google Scholar

Avery, E. W. et al. Distributed patterns of functional connectivity predict working memory performance in novel healthy and memory-impaired individuals. J. Cogn. Neurosci. 32 , 241–255 (2019).

Zhang, H. et al . Do intrinsic brain functional networks predict working memory from childhood to adulthood? Hum. Brain Mapp . https://doi.org/10.1002/hbm.25143 (2020).

Tomasi, D. & Volkow, N. D. Network connectivity predicts language processing in healthy adults. Hum. Brain Mapp. 41 , 3696–3708 (2020).

Beaty, R. E. et al. Robust prediction of individual creative ability from brain functional connectivity. Proc. Natl Acad. Sci. U. S. A. 115 , 1087–1092 (2018).

Hsu, W.-T., Rosenberg, M. D., Scheinost, D., Constable, R. T. & Chun, M. M. Resting-state functional connectivity predicts neuroticism and extraversion in novel individuals. Soc. Cogn. Affect. Neurosci. 13 , 224–232 (2018).

Jiang, R. et al. Connectome-based individualized prediction of temperament trait scores. Neuroimage 183 , 366–374 (2018).

Cai, H., Chen, J., Liu, S., Zhu, J. & Yu, Y. Brain functional connectome-based prediction of individual decision impulsivity. Cortex 125 , 288–298 (2020).

Esterman, M., Noonan, S. K., Rosenberg, M. & Degutis, J. In the zone or zoning out? Tracking behavioral and neural fluctuations during sustained attention. Cereb. Cortex 23 , 2712–2723 (2013).

Fan, J., McCandliss, B. D., Fossella, J., Flombaum, J. I. & Posner, M. I. The activation of attentional networks. Neuroimage 26 , 471–479 (2005).

Kardan, O. et al . Adult neuromarkers of sustained attention and working memory predict inter- and intra-individual differences in these processes in youth. Preprint at bioRxiv https://doi.org/10.1101/2021.08.01.454530 (2021).

Engle, R. W. Working memory capacity as executive attention. Curr. Dir. Psychol. Sci. 11 , 19–23 (2002).

Article Google Scholar

Yoo, K. et al . A cognitive state transformation model for task-general and task-specific subsystems of the brain connectome. Preprint at bioRxiv https://doi.org/10.1101/2020.12.23.424176 (2020).

Noble, S. et al. Influences on the test-retest reliability of functional connectivity MRI and its relationship with behavioral utility. Cereb. Cortex 27 , 5415–5429 (2017).

Varoquaux, G. Cross-validation failure: small sample sizes lead to large error bars. NeuroImage 180 , 68–77 (2018).

Jangraw, D. C. et al. A functional connectivity-based neuromarker of sustained attention generalizes to predict recall in a reading task. Neuroimage 166 , 99–109 (2018).

Fountain-Zaragoza, S., Samimy, S., Rosenberg, M. D. & Prakash, R. S. Connectome-based models predict attentional control in aging adults. Neuroimage 186 , 1–13 (2019).

Van Essen, D. C. et al. The WU-Minn Human Connectome Project: an overview. Neuroimage 80 , 62–79 (2013).

DuPaul, G. J., Power, T. J., Anastopoulos, A. D. & Reid, R. ADHD Rating Scale—IV: Checklists, Norms, and Clinical Interpretation (Guilford, 1998).

Consortium, T. A.-200. The ADHD-200 Consortium: a model to advance the translational potential of neuroimaging in clinical neuroscience. Front. Syst. Neurosci. 6 , 62 (2012).

Google Scholar

Satterthwaite, T. D. et al. Neuroimaging of the Philadelphia neurodevelopmental cohort. NeuroImage 86 , 544–553 (2014).

Casey, B. J. et al. The adolescent brain cognitive development (ABCD) study: imaging acquisition across 21 sites. Dev. Cogn. Neurosci. 32 , 43–54 (2018).

Wojciulik, E. & Kanwisher, N. The generality of parietal involvement in visual attention. Neuron 23 , 747–764 (1999).

Duncan, J. & Owen, A. M. Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci. 23 , 475–483 (2000).

Ramnani, N. & Owen, A. M. Anterior prefrontal cortex: insights into function from anatomy and neuroimaging. Nat. Rev. Neurosci. 5 , 184–194 (2004).

Miller, E. K. & Cohen, J. D. An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 24 , 167–202 (2001).

Pardo, J. V., Fox, P. T. & Raichle, M. E. Localization of a human system for sustained attention by positron emission tomography. Nature 349 , 61–64 (1991).

Corbetta, M., Shulman, G. L., Miezin, F. M. & Petersen, S. E. Superior parietal cortex activation during spatial attention shifts and visual feature conjunction. Sci. (80-.) 270 , 802–805 (1995).

Hopfinger, J. B., Buonocore, M. H. & Mangun, G. R. The neural mechanisms of top-down attentional control. Nat. Neurosci. 3 , 284–291 (2000).

Sprague, T. C. & Serences, J. T. Attention modulates spatial priority maps in the human occipital, parietal and frontal cortices. Nat. Neurosci. 16 , 1879–1887 (2013).

Wimmer, R. D. et al. Thalamic control of sensory selection in divided attention. Nature 526 , 705–709 (2015).

Heinze, H. J. et al. Combined spatial and temporal imaging of brain activity during visual selective attention in humans. Nature 372 , 543–546 (1994).

Coull, J. T., Vidal, F., Nazarian, B. & Macar, F. Functional anatomy of the attentional modulation of time estimation. Sci. (80-.) 303 , 1506–1508 (2004).

Gao, J. H. et al. Cerebellum implicated in sensory acquisition and discrimination rather than motor control. Sci. (80-.). 272 , 545–547 (1996).

Leiner, H. C., Leiner, A. L. & Dow, R. S. Does the cerebellum contribute to mental skills? Behav. Neurosci. 100 , 443–454 (1986).

Petersen, S. E., Fox, P. T., Posner, M. I., Mintun, M. & Raichle, M. E. Positron emission tomographic studies of the processing of single words. J. Cogn. Neurosci. 1 , 153–170 (1989).

Stoodley, C. J. The cerebellum and cognition: evidence from functional imaging studies. Cerebellum 11 , 352–365 (2012).

Strick, P. L., Dum, R. P. & Fiez, J. A. Cerebellum and nonmotor function. Annu. Rev. Neurosci. 32 , 413–434 (2009).

Buckner, R. L. The cerebellum and cognitive function: 25 years of insight from anatomy and neuroimaging. Neuron 80 , 807–815 (2013).

Allen, G., Buxton, R. B., Wong, E. C. & Courchesne, E. Attentional activation of the cerebellum independent of motor involvement. Sci. (80-.) 275 , 1940–1943 (1997).

Rees, G., Frackowiak, R. & Frith, C. Two modulatory effects of attention that mediate object categorization in human cortex. Sci. (80-.) 275 , 835–838 (1997).

Gottwald, B., Mihajlovic, Z., Wilde, B. & Mehdorn, H. M. Does the cerebellum contribute to specific aspects of attention? Neuropsychologia 41 , 1452–1460 (2003).

Greene, A. S., Gao, S., Scheinost, D. & Constable, R. T. Task-induced brain state manipulation improves prediction of individual traits. Nat. Commun. 9 , 2807 (2018).

Article PubMed PubMed Central CAS Google Scholar

Jiang, R. et al. Task-induced brain connectivity promotes the detection of individual differences in brain–behavior relationships. Neuroimage 207 , 116370 (2020).

Sui, J., Jiang, R., Bustillo, J. & Calhoun, V. Neuroimaging-based individualized prediction of cognition and behavior for mental disorders and health: methods and promises. Biol. Psychiatry 88 , 818–828 (2020).

Gao, S., Greene, A. S., Constable, R. T. & Scheinost, D. Combining multiple connectomes improves predictive modeling of phenotypic measures. Neuroimage 201 , 116038 (2019).

Pujol, J. et al. Clinical application of functional magnetic resonance imaging in presurgical identification of the central sulcus. J. Neurosurg. 88 , 863–869 (1998).

Bullmore, E. The future of functional MRI in clinical medicine. Neuroimage 62 , 1267–1271 (2012).

Vanderwal, T., Kelly, C., Eilbott, J., Mayes, L. C. & Castellanos, F. X. Inscapes: a movie paradigm to improve compliance in functional magnetic resonance imaging. Neuroimage 122 , 222–232 (2015).

Rosenberg, M., Noonan, S., DeGutis, J. & Esterman, M. Sustaining visual attention in the face of distraction: a novel gradual-onset continuous performance task. Atten. Percept. Psychophys. 75 , 426–439 (2013).

Pylyshyn, Z. W. & Storm, R. W. Tracking multiple independent targets: evidence for a parallel tracking mechanism. Spat. Vis. 3 , 179–197 (1988).

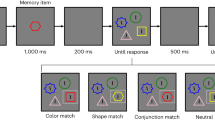

Luck, S. J. & Vogel, E. K. The capacity of visual working memory for features and conjunctions. Nature 390 , 279–284 (1997).

Pashler, H. Familiarity and visual change detection. Percept. Psychophys. 44 , 369–378 (1988).

Rouder, J. N., Morey, R. D., Morey, C. C. & Cowan, N. How to measure working memory capacity in the change detection paradigm. Psychon. Bull. Rev. 18 , 324–330 (2011).

Cox, R. W. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput. Biomed. Res. 29 , 162–173 (1996).

Shen, X., Tokoglu, F., Papademetris, X. & Constable, R. T. Groupwise whole-brain parcellation from resting-state fMRI data for network node identification. Neuroimage 82 , 403–415 (2013).

Scheinost, D. et al. Ten simple rules for predictive modeling of individual differences in neuroimaging. Neuroimage 193 , 35–45 (2019).

Nichols, T. E. & Holmes, A. P. Nonparametric permutation tests for functional neuroimaging: a primer with examples. Hum. Brain Mapp. 15 , 1–25 (2002).

Abbas, K. et al. GEFF: graph embedding for functional fingerprinting. Neuroimage 221 , 117181 (2020).

Amico, E. & Goñi, J. The quest for identifiability in human functional connectomes. Sci. Rep. 8 , 8254 (2018).

Barch, D. M. et al. NeuroImage function in the human connectome: task-fMRI and individual differences in behavior. Neuroimage 80 , 169–189 (2013).

Smith, S. M. et al. Resting-state fMRI in the Human Connectome Project. Neuroimage 80 , 144–168 (2013).

Download references

Acknowledgements

This project was supported by National Institutes of Health grant MH108591 to M.M.C. and by National Science Foundation grant BCS1558497 to M.M.C.

Author information

Authors and affiliations.

Department of Psychology, Yale University, New Haven, CT, USA

Kwangsun Yoo, Monica D. Rosenberg, Young Hye Kwon, Qi Lin, Emily W. Avery & Marvin M. Chun

Department of Psychology, University of Chicago, Chicago, IL, USA

Monica D. Rosenberg

Department of Radiology and Biomedical Imaging, Yale School of Medicine, New Haven, CT, USA

Dustin Scheinost & R. Todd Constable

Interdepartmental Neuroscience Program, Yale University, New Haven, CT, USA

R. Todd Constable & Marvin M. Chun

Department of Neurosurgery, Yale School of Medicine, New Haven, CT, USA

R. Todd Constable

Department of Neuroscience, Yale School of Medicine, New Haven, CT, USA

Marvin M. Chun

Wu Tsai Institute, Yale University, New Haven, CT, USA


Contributions

K.Y., M.D.R. and M.M.C. designed the study. Y.H.K. and E.W.A. performed fMRI experiments. K.Y. and M.D.R. analysed behavioural data. K.Y. and Y.H.K. analysed fMRI data. K.Y. conducted modelling and visualization. K.Y., M.M.C., M.D.R., Q.L., D.S. and R.T.C. discussed the results and implications. M.M.C. and R.T.C. supervised the project. K.Y., Y.H.K. and M.M.C. wrote the original draft; K.Y., M.M.C., M.D.R., Q.L., E.W.A., D.S. and R.T.C. reviewed the original draft and contributed to the final version of the paper.

Corresponding authors

Correspondence to Kwangsun Yoo or Marvin M. Chun .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Peer review

Peer review information.

Nature Human Behaviour thanks Jing Sui, Francisco Castellanos and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Predictive anatomy of three task-based CPMs.

a . The scale bar in gradCPT, MOT and VSTM represents the relative ratio of predictive functional connections to all possible number of functional connections between networks with a sign representing whether the connection is in a positive or negative network. The scale bar in overlap represents the actual number of predictive functional connections with a sign representing whether the connection is in a positive or negative network. GradCPT: gradual-onset continuous performance task, MOT: multiple object tracking, and VSTM: visual short-term memory. MF: medial-frontal network, FP: frontoparietal network, DM: default mode network, VI: visual I, VII: visual II, VAs: visual association, SA: salience network, Subc: subcortex, Cbl: cerebellum. b . The number of predictive connections of three task-based CPMs in positive and negative networks.

Extended Data Fig. 2 Cross-prediction results of five common attention factor CPMs.

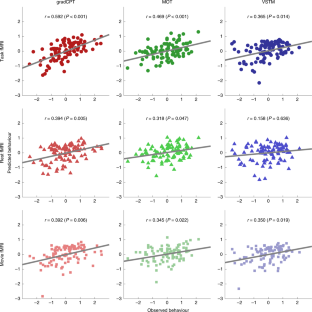

a. Cross-prediction results when models were applied to predict the common attention factor from different fMRI data. Models’ prediction accuracies were assessed by prediction q² and the correlation r between observed and predicted common factor measures. P values were obtained using 1,000 permutations and corrected for all 5×5 tests (***: p < 0.001, **: p < 0.01, *: p < 0.05, and ~: p < 0.1). Rows represent the fMRI data used to predict the common attention factor in model construction, and columns represent the fMRI data used in model validation. b. Cross-prediction results, taking into account shared variance (the common factor) between task behaviors. Models’ prediction accuracies were assessed by partial correlation between observed and predicted behavior scores while controlling for the shared variance. P values were obtained using 1,000 permutations and corrected for all 5×9 tests (***: p < 0.001, **: p < 0.01, *: p < 0.05, and ~: p < 0.1). Rows represent the fMRI data used to predict the common attention factor in model construction, and columns represent combinations of fMRI data and behavior scores used in model validation. GradCPT: gradual-onset continuous performance task, MOT: multiple object tracking, and VSTM: visual short-term memory.
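The two accuracy measures named in this caption respond differently to systematic prediction error, which a small sketch can make concrete. The caption does not define q², so the code assumes a common convention, q² = 1 − MSE / variance of the observed scores; the function names are hypothetical.

```python
import numpy as np

def prediction_q2(observed, predicted):
    """Assumed definition: 1 minus mean squared error over observed variance."""
    observed = np.asarray(observed, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    mse = np.mean((observed - predicted) ** 2)
    return float(1.0 - mse / np.var(observed))

def prediction_r(observed, predicted):
    """Pearson correlation between observed and predicted scores."""
    return float(np.corrcoef(observed, predicted)[0, 1])

obs = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
perfect = obs.copy()      # exact predictions
shifted = obs + 2.0       # right ordering, constant offset

q2_perfect = prediction_q2(obs, perfect)
q2_shifted = prediction_q2(obs, shifted)
r_shifted = prediction_r(obs, shifted)
```

Under this assumed definition, a constant offset leaves r untouched but lowers q², so reporting both separates "ranks individuals correctly" from "predicts the actual values".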

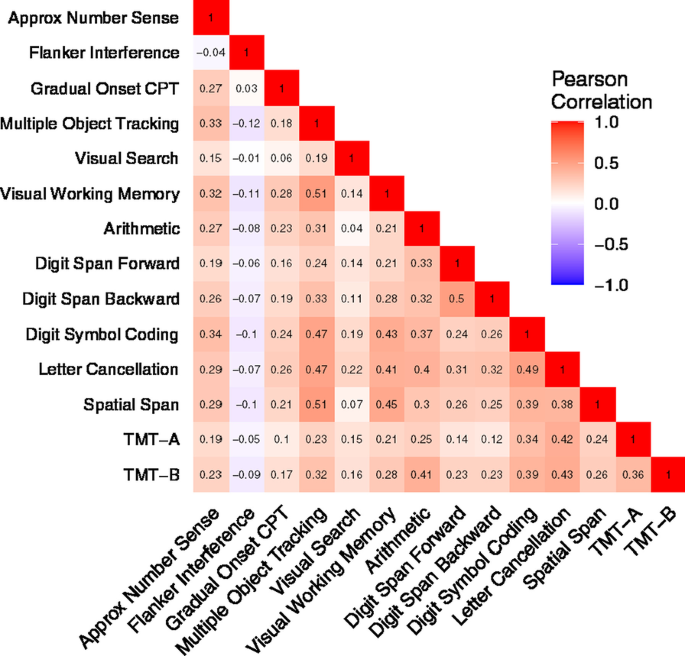

Extended Data Fig. 3 A similarity of individual behaviours between different tasks.

The similarity was assessed by Pearson’s correlation of individual performances between attention tasks. Individual behaviors were significantly correlated between every pair of tasks. GradCPT: gradual-onset continuous performance task, MOT: multiple object tracking, and VSTM: visual short-term memory.

Extended Data Fig. 4 Cross-prediction results of task-specific CPMs.

a . Cross-prediction results, taking into account shared variance between task behaviors. Models’ prediction accuracies were assessed by partial correlation between observed and predicted behavior scores while controlling for the shared variance. P value was obtained using 1,000 permutations and corrected for multiple tests (***: p < 0.001, **: p < 0.01, *: p < 0.05, and ~: p < 0.1). Rows represent combinations of fMRI data and behavior scores used in model construction, and columns represent combinations of fMRI data and behavior scores used in model validation. GradCPT: gradual-onset continuous performance task, MOT: multiple object tracking, and VSTM: visual short-term memory. b . Cross-prediction results when models were applied to predict the common attention factor from different fMRI data. Models’ prediction accuracies were assessed by correlation between observed and predicted common factor. P value was obtained using 1,000 permutations and corrected for all 9×5 tests (***: p < 0.001, **: p < 0.01, *: p < 0.05, and ~: p < 0.1). Rows represent combinations of fMRI data and behavior scores used in model construction, and columns represent different fMRI data used to predict a common attention factor used in model validation.
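Partial correlation "while controlling for the shared variance" can be sketched by regressing the control variable out of both score vectors and correlating the residuals. This is one standard way to compute a partial correlation; whether the authors computed it this way is an assumption, and the names and synthetic data below are hypothetical.

```python
import numpy as np

def partial_corr(x, y, covariate):
    """Correlation of x and y after removing a linear effect of covariate."""
    x, y, z = (np.asarray(a, dtype=float) for a in (x, y, covariate))
    design = np.column_stack([np.ones_like(z), z])
    # Residuals of a least-squares fit on intercept + covariate.
    res_x = x - design @ np.linalg.lstsq(design, x, rcond=None)[0]
    res_y = y - design @ np.linalg.lstsq(design, y, rcond=None)[0]
    return float(np.corrcoef(res_x, res_y)[0, 1])

# Synthetic example: both vectors are driven almost entirely by a common factor.
rng = np.random.default_rng(0)
common = rng.normal(size=200)
observed = common + 0.1 * rng.normal(size=200)
predicted = common + 0.1 * rng.normal(size=200)

raw_r = float(np.corrcoef(observed, predicted)[0, 1])
partial_r = partial_corr(observed, predicted, common)
```

In this toy case the raw correlation is high while the partial correlation is close to zero, which is the pattern expected when a model captures only the shared factor and nothing task-specific.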

Extended Data Fig. 5 Cross-prediction using connectivity between the frontoparietal (FP, 2), visual II (VII, 6), salience (SA, 8), subcortical (Subc, 9), cerebellar (Cbl, 10) networks.

Prediction of a model using connectivity between the medial-frontal (1), default mode (3), motor (4), visual I (5) and visual association (7) networks was also obtained as a control. A. Rows represent combinations of networks (indicated by numbers) used in each model. Models’ prediction accuracies were assessed by correlating model-predicted and observed behavioral scores. B. Prediction performance of each network obtained by averaging all models that used the network in A. C. The same result as A, but model accuracies were assessed by q². D. Prediction performance of each network obtained by averaging all models that used the network in C. GradCPT: gradual-onset continuous performance task, MOT: multiple object tracking, and VSTM: visual short-term memory.

Extended Data Fig. 6 Similarity between C2C model-generated task connectomes and empirical task connectomes.

Error bars represent the standard deviation from 1,000 iterations. A and C represent the spatial similarity between two connectomes, assessed by Pearson’s correlation. Darker bars represent the similarity between empirical task and generated task connectomes, and lighter bars represent the similarity between empirical task and empirical rest connectomes. The higher similarity of the generated connectome indicates that the C2C model accurately generates the target task connectome from the rest connectome. B and D represent the root mean square (RMS) difference between two connectomes. The smaller difference of the generated connectome indicates that the C2C model accurately generates the target task connectome from the rest connectome. In a box-whisker plot, a box covers the first to third quartile (q1 and q3, respectively) of the data, and a center line represents the median. A red dot represents the mean. Whiskers cover approximately 99.3% of the data (±2.7 × standard deviation), extended to the most extreme point that is not an outlier. A data point is considered an outlier if it is greater than q3 + 1.5 × (q3 − q1) or less than q1 − 1.5 × (q3 − q1). GradCPT: gradual-onset continuous performance task, MOT: multiple object tracking, and VSTM: visual short-term memory. *: p < 0.001 from 1,000 permutations.
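The three quantities in this caption (spatial similarity, RMS difference and the outlier rule) are simple enough to sketch directly. The code below assumes connectomes are symmetric node-by-node matrices compared over their unique off-diagonal edges; the function names and synthetic matrices are hypothetical, and quartiles use NumPy's default interpolation, which may differ slightly from the plotting software used for the figure.

```python
import numpy as np

def upper_triangle(mat):
    """Unique off-diagonal edges of a symmetric connectome."""
    i, j = np.triu_indices(mat.shape[0], k=1)
    return mat[i, j]

def spatial_similarity(conn_a, conn_b):
    """Pearson correlation between the edge vectors of two connectomes."""
    return float(np.corrcoef(upper_triangle(conn_a), upper_triangle(conn_b))[0, 1])

def rms_difference(conn_a, conn_b):
    """Root mean square difference between the edge vectors."""
    diff = upper_triangle(conn_a) - upper_triangle(conn_b)
    return float(np.sqrt(np.mean(diff ** 2)))

def is_outlier(values):
    """Outlier rule from the caption: beyond 1.5 x (q3 - q1) from the box."""
    values = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    return (values > q3 + 1.5 * iqr) | (values < q1 - 1.5 * iqr)

rng = np.random.default_rng(0)

def random_connectome(n_nodes):
    m = rng.normal(size=(n_nodes, n_nodes))
    return (m + m.T) / 2

task = random_connectome(20)
generated = task + 0.1 * random_connectome(20)  # stand-in for a generated task connectome
rest = task + 1.0 * random_connectome(20)       # stand-in for an empirical rest connectome

sim_generated = spatial_similarity(task, generated)
sim_rest = spatial_similarity(task, rest)
rms_generated = rms_difference(task, generated)
rms_rest = rms_difference(task, rest)
flags = is_outlier([1, 2, 3, 4, 100])
```

A generated connectome that sits closer to the empirical task connectome than the rest connectome does shows up as higher similarity and lower RMS difference, which is the comparison the darker and lighter bars encode.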

Extended Data Fig. 7 The general attention connectome lookup table.

Out of a total 30,135 edges, 10,885 (36.1%) edges were pulled from gradCPT, 12,542 (41.6%) edges were from MOT, and 6,708 (22.3%) were from VSTM. The Ratio map was obtained based on All map. In each within- or between-network element in Ratio, the number of edges in the element for each task was counted and normalized by the total number of edges of each task. A task with the highest normalized value was assigned.
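The edge shares quoted in this caption and the normalize-then-assign rule for the Ratio map can be checked with a short sketch. The edge counts come from the caption; the function `ratio_map`, its arguments and the toy edges are hypothetical, and the code assumes every task contributes at least one edge.

```python
import numpy as np

# Edge counts as stated in the caption.
edges = {"gradCPT": 10885, "MOT": 12542, "VSTM": 6708}
total = sum(edges.values())
shares = {task: round(100 * n / total, 1) for task, n in edges.items()}

def ratio_map(edge_task, edge_net_a, edge_net_b, n_tasks, n_networks):
    """Assign each network pair to the task with the largest normalized edge count.

    edge_task[e]: index of the task an edge was pulled from.
    edge_net_a[e], edge_net_b[e]: network indices of the edge's two nodes.
    Cells with no edges are marked -1.
    """
    counts = np.zeros((n_tasks, n_networks, n_networks))
    for t, a, b in zip(edge_task, edge_net_a, edge_net_b):
        lo, hi = min(a, b), max(a, b)  # store each pair in the upper triangle
        counts[t, lo, hi] += 1
    # Normalize by each task's total edge count (assumed to be non-zero).
    normalized = counts / counts.sum(axis=(1, 2), keepdims=True)
    winner = normalized.argmax(axis=0)
    winner[counts.sum(axis=0) == 0] = -1
    return winner, normalized

# Toy case: task 0 has three edges, task 1 has one.
winner, normalized = ratio_map(
    edge_task=[0, 0, 0, 1],
    edge_net_a=[0, 0, 0, 0],
    edge_net_b=[0, 0, 1, 0],
    n_tasks=2,
    n_networks=2,
)
```

In the toy case, task 0 contributes more raw edges to the within-network cell (0, 0), yet task 1 wins that cell after normalization because all of its edges fall there. Normalizing by each task's total keeps the task with the most edges overall from dominating every cell.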

Extended Data Fig. 8 Scatter plots of predicted and observed attention scores in four external datasets.

Three models, the general attention model and two single task models (model 1 and 4 in Table 1) were trained within the internal dataset and then applied to rest connectomes in the four datasets. If a fitted line closely passes the origin (0,0) with a positive slope (staying within white quadrants), the model could be considered successfully predicting actual attentional abilities. There was no constraint on intercepts in fitting a line. The general model best generalized to predict various attentional measures in four independent external datasets.

Extended Data Fig. 9 Prediction error, assessed by mean square error (MSE), of the general attention model in four independent datasets.

The general model significantly reduced prediction error (assessed by MSE) compared to null models in four datasets. In all datasets, the general attention model produced the lowest prediction error among all models tested. ***: p < 0.001, **: p < 0.01, *: p < 0.05, and ~: p < 0.1 from 1,000 permutations.
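A permutation test on MSE of the kind summarized here can be sketched as follows. The caption does not say how the null models were built, so this example assumes the simplest scheme, shuffling observed scores relative to predictions, and a one-sided p value with the common +1 correction; all names and data are hypothetical.

```python
import numpy as np

def mse(observed, predicted):
    observed = np.asarray(observed, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.mean((observed - predicted) ** 2))

def permutation_p_value(observed, predicted, n_perm=1000, seed=0):
    """Fraction of shuffled pairings whose MSE is at least as low as the true MSE."""
    rng = np.random.default_rng(seed)
    observed = np.asarray(observed, dtype=float)
    true_mse = mse(observed, predicted)
    null_mse = np.array(
        [mse(rng.permutation(observed), predicted) for _ in range(n_perm)]
    )
    # +1 in numerator and denominator so the p value is never exactly zero.
    return float((1 + np.sum(null_mse <= true_mse)) / (1 + n_perm))

# Synthetic example: predictions track the observed scores with modest noise.
rng = np.random.default_rng(1)
observed_scores = rng.normal(size=50)
predicted_scores = observed_scores + 0.3 * rng.normal(size=50)

p_value = permutation_p_value(observed_scores, predicted_scores)
```

With 1,000 permutations the smallest attainable p value under this convention is 1/1001, which is consistent with thresholds such as p < 0.001 being the strictest level reported.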

Supplementary information

Supplementary methods, results, discussion, references, Tables 1–7 and Figs. 1–11.

About this article

Cite this article.

Yoo, K., Rosenberg, M.D., Kwon, Y.H. et al. A brain-based general measure of attention. Nat Hum Behav 6 , 782–795 (2022). https://doi.org/10.1038/s41562-022-01301-1

Received : 17 June 2021

Accepted : 14 January 2022

Published : 03 March 2022

Issue Date : June 2022

DOI : https://doi.org/10.1038/s41562-022-01301-1

Review article: Attention in Psychology, Neuroscience, and Machine Learning

- Gatsby Computational Neuroscience Unit, Sainsbury Wellcome Centre, University College London, London, United Kingdom

Attention is the important ability to flexibly control limited computational resources. It has been studied in conjunction with many other topics in neuroscience and psychology including awareness, vigilance, saliency, executive control, and learning. It has also recently been applied in several domains in machine learning. The relationship between the study of biological attention and its use as a tool to enhance artificial neural networks is not always clear. This review starts by providing an overview of how attention is conceptualized in the neuroscience and psychology literature. It then covers several use cases of attention in machine learning, indicating their biological counterparts where they exist. Finally, the ways in which artificial attention can be further inspired by biology for the production of complex and integrative systems are explored.

1. Introduction

Attention is a topic widely discussed publicly and widely studied scientifically. It has many definitions within and across multiple fields including psychology, neuroscience, and, most recently, machine learning ( Chun et al., 2011 ; Cho et al., 2015 ). As William James wrote at the dawn of experimental psychology, “Everyone knows what attention is. It is the taking possession by the mind, in clear, and vivid form, of one out of what seems several simultaneously possible objects or trains of thought.” Since James wrote this, many attempts have been made to more precisely define and quantify this process while also identifying the underlying mental and neural architectures that give rise to it. The glut of different experimental approaches and conceptualizations to study what is spoken of as a single concept, however, has led to something of a backlash amongst researchers. As was claimed in the title of a recent article arguing for a more evolution-informed approach to the concept, “No one knows what attention is” ( Hommel et al., 2019 ).

Attention is certainly far from a clear or unified concept. Yet despite its many, vague, and sometimes conflicting definitions, there is a core quality of attention that is demonstrably of high importance to information processing in the brain and, increasingly, artificial systems. Attention is the flexible control of limited computational resources. Why those resources are limited and how they can best be controlled will vary across use cases, but the ability to dynamically alter and route the flow of information has clear benefits for the adaptiveness of any system.

The realization that attention plays many roles in the brain makes its addition to artificial neural networks unsurprising. Artificial neural networks are parallel processing systems comprised of individual units designed to mimic the basic input-output function of neurons. These models are currently dominating the machine learning and artificial intelligence (AI) literature. Initially constructed without attention, various mechanisms for dynamically re-configuring the representations or structures of these networks have now been added.

The following section, section 2, will cover broadly the different uses of the word attention in neuroscience and psychology, along with its connection to other common neuroscientific topics. Throughout, the conceptualization of attention as a way to control limited resources will be highlighted. Behavioral studies will be used to demonstrate the abilities and limits of attention while neural mechanisms point to the physical means through which these behavioral effects are manifested. In section 3, the state of attention research in machine learning will be summarized and relationships between artificial and biological attention will be indicated where they exist. And in section 4 additional ways in which findings from biological attention can influence its artificial counterpart will be presented.

The primary aim of this review is to give researchers in the field of AI or machine learning an understanding of how attention is conceptualized and studied in neuroscience and psychology in order to facilitate further inspiration where fruitful. A secondary aim is to inform those who study biological attention how these processes are being operationalized in artificial systems as it may influence thinking about the functional implications of biological findings.

2. Attention in Neuroscience and Psychology

The scientific study of attention began in psychology, where careful behavioral experimentation can give rise to precise demonstrations of the tendencies and abilities of attention in different circumstances. Cognitive science and cognitive psychology aim to turn these observations into models of how mental processes could create such behavioral patterns. Many word models and computational models have been created that posit different underlying mechanisms ( Driver, 2001 ; Borji and Itti, 2012 ).

The influence of single-cell neurophysiology in non-human primates along with non-invasive means of monitoring human brain activity such as EEG, fMRI, and MEG have made direct observation of the underlying neural processes possible. From this, computational models of neural circuits have been built that can replicate certain features of the neural responses that relate to attention ( Shipp, 2004 ).

In the following sub-sections, the behavioral and neural findings of several different broad classes of attention will be discussed.

2.1. Attention as Arousal, Alertness, or Vigilance

In its most generic form, attention could be described as merely an overall level of alertness or ability to engage with surroundings. In this way it interacts with arousal and the sleep-wake spectrum. Vigilance in psychology refers to the ability to sustain attention and is therefore related as well. Note, while the use of these words clusters around the same meaning, they are sometimes used more specifically in different niche literature ( Oken et al., 2006 ).

Studying subjects in different phases of the sleep-wake cycle, under sleep deprivation, or while on sedatives offers a view of how this form of attention can vary and what the behavioral consequences are. By giving subjects repetitive tasks that require a level of sustained attention—such as keeping a ball within a certain region on a screen—researchers have observed extended periods of poor performance in drowsy patients that correlate with changes in EEG signals ( Makeig et al., 2000 ). Yet, there are ways in which tasks can be made more engaging that can lead to higher performance even in drowsy or sedated states. This includes increasing the promise of reward for performing the task, adding novelty or irregularity, or introducing stress ( Oken et al., 2006 ). Therefore, general attention appears to have limited reserves that won't be deployed in the case of a mundane or insufficiently rewarding task but can be called upon for more promising or interesting work.

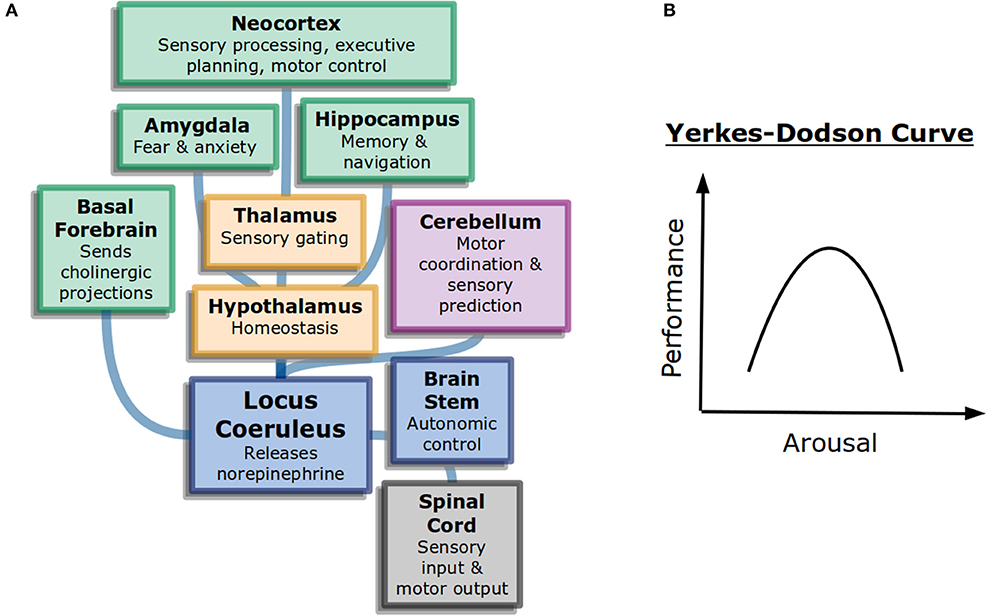

Interestingly, more arousal is not always beneficial. The Yerkes-Dodson curve ( Figure 1B ) is an inverted-U that represents performance as a function of alertness on sufficiently challenging tasks: at low levels of alertness performance is poor, at medium levels it is good, and at high levels it becomes poor again. The original study used electric shocks in mice to vary the level of alertness, but the finding has been repeated with other measures ( Diamond, 2005 ). It may explain why psychostimulants such as Adderall or caffeine can work to increase focus in some people at some doses but become detrimental for others ( Wood et al., 2014 ).

Figure 1 . General attention and alertness (A) Cells in the locus coeruleus release norepinephrine (also known as noradrenaline) onto many parts of the brain with different functions, including onto other neuromodulatory systems. This contributes to overall arousal ( Samuels and Szabadi, 2008 ). Colors here represent different divisions of the brain: forebrain (green), diencephalon (yellow), and brainstem (blue). (B) The Yerkes-Dodson curve describes the nonlinear relationship between arousal and performance on challenging tasks.

The neural circuits underlying the sleep-wake cycle are primarily in the brain stem ( Coenen, 1998 ). These circuits control the flow of information into the thalamus and then onto cortex. Additionally, neuromodulatory systems play a large role in the control of generalized attention. Norepinephrine, acetylcholine, and dopamine are believed to influence alertness, orienting to important information, and executive control of attention, respectively ( Posner, 2008 ). The anatomy of neuromodulators matches their function as well. Neurons that release norepinephrine, for example, have their cell bodies in the brain stem but project very broadly across the brain, allowing them to control information processing broadly ( Figure 1A ).

2.2. Sensory Attention

In addition to overall levels of arousal and alertness, attention can also be selectively deployed by an awake subject to specific sensory inputs. Studying attention within the context of a specific sensory system allows for tight control over both stimuli and the locus of attention. Generally, to look for this type of attention the task used needs to be quite challenging. For example, in a change detection task, the to-be-detected difference between two stimuli may be very slight. More generally, task difficulty can be achieved by presenting the stimulus for only a very short period of time or only very weakly.

A large portion of the study of attention in systems neuroscience and psychology centers on visual attention in particular ( Kanwisher and Wojciulik, 2000 ). This may reflect the general trend in these fields to emphasize the study of visual processing over other sensory systems ( Hutmacher, 2019 ), along with the dominant role vision plays in the primate brain. Furthermore, visual stimuli are frequently used in studies meant to address more general, cognitive aspects of attention as well.

Visual attention can be broken down broadly into spatial and feature-based attention.

2.2.1. Visual Spatial Attention

Saccades are small and rapid eye movements made several times each second. As the fovea offers the highest visual resolution on the retina, choosing where to place it is essentially a choice about where to deploy limited computational resources. In this way, eye movements indicate the locus of attention. As this shift of attention is outwardly visible it is known as overt visual attention.

By tracking eye movements as subjects are presented with different images, researchers have identified image patterns that automatically attract attention. Such patterns are defined by oriented edges, spatial frequency, color contrast, intensity, or motion ( Itti and Koch, 2001 ). Image regions that attract attention are considered “salient” and are computed in a “bottom-up” fashion. That is, they don't require conscious or effortful processing to identify and are likely the result of built-in feature detectors in the visual system. As such, saliency can be computed very quickly. Furthermore, different subjects tend to agree on which regions are salient, especially those identified in the first few saccades ( Tatler et al., 2005 ).

Salient regions can be studied in “free-viewing” situations, that is, when the subject is not given any specific instructions about how to view the image. When a particular task is assigned, the interplay between bottom-up and “top-down” attention becomes clear. For example, when instructed to saccade to a specific visual target out of an array, subjects may incorrectly saccade to a particularly salient distractor instead ( van Zoest and Donk, 2005 ). More generally, task instructions can have a significant effect on the pattern of saccades generated when subjects are viewing a complex natural image and given high-level tasks (e.g., asked to assess the age of a person or guess their socio-economic status). Furthermore, the natural pattern of eye movements when subjects perform real world tasks, like sandwich making, can provide insights to underlying cognitive processes ( Hayhoe and Ballard, 2005 ).

When subjects need to make multiple saccades in a row they tend not to return to locations they have recently attended and may be slow to respond if something relevant occurs there. This phenomenon is known as inhibition of return ( Itti and Koch, 2001 ). Such behavior pushes the visual system to not just exploit image regions originally deemed most salient but to explore other areas as well. It also means the saccade generating system needs to have a form of memory; this is believed to be implemented by short-term inhibition of the representation of recently-attended locations.
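Inhibition of return can be sketched as a repeated winner-take-all selection over a saliency map in which each attended location is suppressed afterwards. This is a toy illustration: the one-dimensional map, the saliency values, and the `suppression` parameter are assumptions, not a model from the cited work.

```python
def scan_with_inhibition_of_return(saliency, n_fixations, suppression=0.0):
    """Repeatedly pick the most salient location, damping each one after it is attended.

    suppression=0.0 fully silences a visited location; suppression=1.0 disables
    the inhibition altogether.
    """
    current = list(saliency)
    visited = []
    for _ in range(n_fixations):
        target = max(range(len(current)), key=current.__getitem__)
        visited.append(target)
        current[target] *= suppression  # short-term inhibition of the attended location
    return visited

saliency_map = [0.2, 0.9, 0.5, 0.7]  # hypothetical saliency values
with_ior = scan_with_inhibition_of_return(saliency_map, 3)                      # [1, 3, 2]
without_ior = scan_with_inhibition_of_return(saliency_map, 3, suppression=1.0)  # [1, 1, 1]
```

Without the inhibition term the scan stays on the single most salient location, which is the exploit-only behavior that inhibition of return is thought to prevent.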

While eye movements are an effective means of controlling visual attention, they are not the only option. “Covert” spatial attention is a way of emphasizing processing of different spatial locations without an overt shift in fovea location. Generally, in the study of covert spatial attention, subjects must fixate on a central point throughout the task. They are cued to covertly attend to a location in their peripheral vision where stimuli relevant for their visual task will likely appear. For example, in an orientation discrimination task, after the spatial cue is provided an oriented grating will flash in the cued location and the subject will need to indicate its orientation. On invalidly-cued trials (when the stimulus appears in an uncued location), subjects perform worse than on validly-cued (or uncued) trials ( Anton-Erxleben and Carrasco, 2013 ). This indicates that covert spatial attention is a limited resource that can be flexibly deployed and aids in the processing of visual information.

Covert spatial attention is selective in the sense that certain regions are selected for further processing at the expense of others. This has been referred to as the “spotlight” of attention. Importantly, for covert—as opposed to overt—attention the input to the visual system can be identical while the processing of that input is flexibly selective.

Covert spatial attention can be impacted by bottom-up saliency as well. If an irrelevant but salient object is flashed at a location that then goes on to have a task relevant stimulus, the exogenous spatial attention drawn by the irrelevant stimulus can get applied to the task relevant stimulus, possibly providing a performance benefit. If it is flashed at an irrelevant location, however, it will not help, and can harm performance ( Berger et al., 2005 ). Bottom-up/exogenous attention has a quick time course, impacting covert attention for 80–130 ms after the distractor appears ( Anton-Erxleben and Carrasco, 2013 ).

In some theories of attention, covert spatial attention exists to help guide overt attention. Particularly, the pre-motor theory of attention posits that the same neural circuits plan saccades and control covert spatial attention ( Rizzolatti et al., 1987 ). The frontal eye field (FEF) is known to be involved in the control of eye movements. Stimulating the neurons in FEF at levels too low to evoke eye movements has been shown to create effects similar to covert attention ( Moore et al., 2003 ). In this way, covert attention may be a means of deciding where to overtly look. The ability to covertly attend may additionally be helpful in social species, as eye movements convey information about knowledge and intent that may best be kept secret ( Klein et al., 2009 ).

To study the neural correlates of covert spatial attention, researchers identify which aspects of neural activity differ based only on differences in the attentional cue (and not on differences in bottom-up features of the stimuli). On trials where attention is cued toward the receptive field of a recorded neuron, many changes in the neural activity have been observed ( Noudoost et al., 2010 ; Maunsell, 2015 ). A commonly reported finding is an increase in firing rates, typically of 20–30% ( Mitchell et al., 2007 ). However, the exact magnitude of the change depends on the cortical area studied, with later areas showing stronger changes ( Luck et al., 1997 ; Noudoost et al., 2010 ). Attention is also known to impact the variability of neural firing. In particular, it decreases trial-to-trial variability as measured via the Fano Factor and decreases noise correlations between pairs of neurons. Attention has even been found to impact the electrophysiological properties of neurons in a way that reduces their likelihood of firing in bursts and also decreases the height of individual action potentials ( Anderson et al., 2013 ).
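Two of the variability measures mentioned above have simple definitions: the Fano factor is the variance of spike counts across trials divided by their mean, and a noise correlation is the Pearson correlation between two neurons' trial-by-trial counts for the same stimulus. The sketch below uses invented spike counts purely to show the arithmetic.

```python
import math
from statistics import mean, variance

def fano_factor(spike_counts):
    """Across-trial variance of spike counts divided by their mean."""
    return variance(spike_counts) / mean(spike_counts)

def noise_correlation(counts_a, counts_b):
    """Pearson correlation of two neurons' spike counts over repeated trials."""
    mean_a, mean_b = mean(counts_a), mean(counts_b)
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(counts_a, counts_b))
    norm = math.sqrt(
        sum((a - mean_a) ** 2 for a in counts_a)
        * sum((b - mean_b) ** 2 for b in counts_b)
    )
    return cov / norm

# Hypothetical spike counts over five trials of the same stimulus.
unattended = [4, 6, 5, 7, 3]
attended = [6, 6, 7, 6, 5]
```

With these made-up counts the attended condition has both a higher mean rate and a lower Fano factor, the direction of effect described in the text.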

In general, the changes associated with attention are believed to increase the signal-to-noise ratio of the neurons that represent the attended stimulus; however, they can also impact communication between brain areas. To this end, attention's effect on neural synchrony is important. Within a visual area, attention has been shown to increase spiking coherence in the gamma band, that is, at frequencies between 30 and 70 Hz ( Fries et al., 2008 ). When a group of neurons fires synchronously, their ability to influence shared downstream areas is enhanced. Furthermore, attention may also be working to directly coordinate communication across areas. Synchronous activity between two visual areas can be a sign of increased communication, and attention has been shown to increase synchrony between the neurons that represent the attended stimulus in areas V1 and V4, for example ( Bosman et al., 2012 ). Control of this cross-area synchronization appears to be carried out by the pulvinar ( Saalmann et al., 2012 ).

In addition to investigating how attention impacts neurons in the visual pathways, studies have also searched for the source of top-down attention ( Noudoost et al., 2010 ; Miller and Buschman, 2014 ). The processing of bottom-up attention appears to culminate with a saliency map produced in the lateral intraparietal area (LIP). The cells here respond when salient stimuli are in their receptive field, including task-irrelevant but salient distractors. Prefrontal areas such as FEF, on the other hand, appear to house the signals needed for top-down control of spatial attention and are less responsive to distractors.

While much of the work on the neural correlates of sensory attention focuses on the cortex, subcortical areas appear to play a strong role in the control and performance benefits of attention as well. In particular, the superior colliculus assists in both covert and overt spatial attention and inactivation of this region can impair attention ( Krauzlis et al., 2013 ). And, as mentioned above, the pulvinar plays a role in attention, particularly with respect to gating effects on cortex ( Zhou et al., 2016 ).

2.2.2. Visual Feature Attention

Feature attention is another form of covert selective attention. In the study of feature attention, instead of being cued to attend to a particular location, subjects are cued on each trial to attend to a particular visual feature such as a specific color, a particular shape, or a certain orientation. The goal of the task may be to detect if the cued feature is present on the screen or read out another one of its qualities (e.g., to answer “what color is the square?” should result in attention first deployed to squares). Valid cueing about the attended feature enhances performance. For example, when attention was directed toward a particular orientation, subjects were better able to detect faint gratings of that orientation than of any other orientation ( Rossi and Paradiso, 1995 ). While the overall task (e.g., detection of an oriented grating) remains the same, the specific instructions (detection of 90° grating vs. 60° vs. 30°) will be cued on each individual trial, or possibly blockwise. Successful trial-wise cueing indicates that this form of attention can be flexibly deployed on fast timescales.

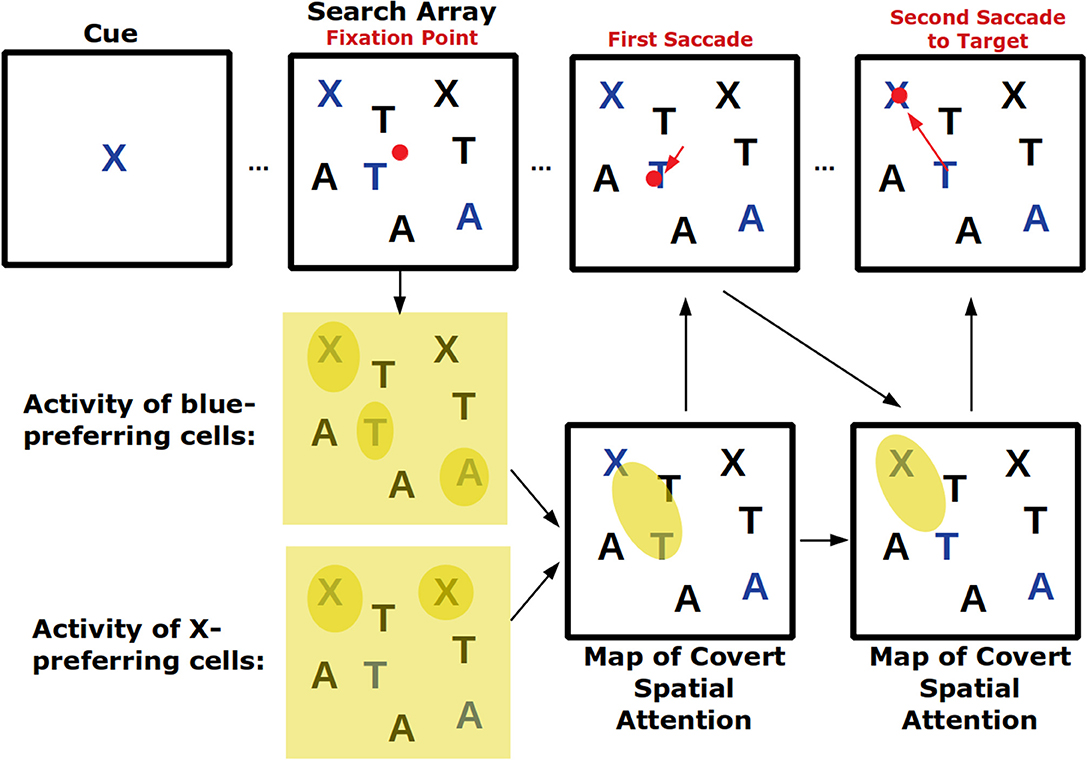

Visual search tasks are also believed to activate feature-based attention ( Figure 2 ). In these tasks, an array of stimuli appears on a screen and subjects need to indicate—frequently with an eye movement—the location of the cued stimulus. As subjects are usually allowed to make saccades throughout the task as they search for the cued stimulus, this task combines covert feature-based attention with overt attention. In fact, signals of top-down feature-based attention have been found in FEF, the area involved in saccade choice ( Zhou and Desimone, 2011 ). Because certain features can create a pop-out effect—for example, a single red shape amongst several black ones will immediately draw attention—visual search tasks also engage bottom-up attention which, depending on the task, may need to be suppressed ( Wolfe and Horowitz, 2004 ).

Figure 2 . Visual search tasks engage many forms of visual attention. Across the top row the progression of a visual search task is shown. First, a cue indicates the target of the visual search, in this case a blue X. Then a search array appears with many non-targets. Top-down feature attention to cells that represent the color blue and the shape X will increase their firing throughout the visual field but firing will be strongest where blue or Xs actually occur. These neural responses will play a role in generating a map of covert spatial attention which can be used to explore visual space before saccading. After the shift in overt attention with the first saccade, the covert attention map is remade. Finally, the target is located and successfully saccaded to. If the visual array contained a pop-out stimulus (for example a green O) it may have captured covert spatial attention in a bottom-up way and led to an additional incorrect saccade.

Neural effects of feature-based attention in the visual system are generally similar to those of spatial attention. Neurons that represent the attended feature, for example, have increased firing rates, and those that represent very different features have suppressed rates ( Treue and Trujillo, 1999 ). As opposed to spatial attention, however, feature-based attention is spatially-global. This means that when deploying attention to a particular feature the activity of the neurons that represent that feature anywhere in visual space is modulated ( Saenz et al., 2002 ). Another difference between spatial and feature attention is the question of how sources of top-down attention target the correct neurons in the visual system. The retinotopic map, wherein nearby cells represent nearby spatial locations, makes spatial targeting straightforward, but cells are not as neatly organized according to preferred visual features.

The effects of spatial and feature attention appear to be additive ( Hayden and Gallant, 2009 ). Furthermore, both feature and spatial attention are believed to create their effects by acting on the local neural circuits that implement divisive normalization in visual cortex ( Reynolds and Heeger, 2009 ). Modeling work has shown that many of the neural effects of selective attention can be captured by assuming that top-down connections provide targeted synaptic inputs to cells in these circuits ( Lindsay et al., 2019 ). However, models that rely on effects of the neuromodulator acetylcholine can also replicate neural correlates of attention ( Sajedin et al., 2019 ).
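The idea that attention acts on a divisive normalization circuit can be illustrated with a heavily simplified sketch. Here each neuron's stimulus drive is scaled by an attention gain and then divided by a constant plus the summed, gain-scaled drive of the whole pool. The published normalization model uses spatially and featurally extended attention and suppressive fields; the flat pooling, the constant `sigma`, and the gain values below are assumptions made for brevity.

```python
def normalized_responses(drives, attention_gains, sigma=0.5):
    """Divisive normalization with an attention gain applied before pooling.

    response_i = (gain_i * drive_i) / (sigma + sum_j gain_j * drive_j)
    """
    weighted = [g * d for g, d in zip(attention_gains, drives)]
    pool = sigma + sum(weighted)
    return [w / pool for w in weighted]

# Two neurons driven equally by two different stimuli (hypothetical values).
neutral = normalized_responses([1.0, 1.0], [1.0, 1.0])   # about [0.40, 0.40]
attend_0 = normalized_responses([1.0, 1.0], [2.0, 1.0])  # about [0.57, 0.29]
```

Because the attended drive also enters the normalization pool, boosting one neuron lowers the response of the other, so enhancement and suppression fall out of the same operation.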

Potential sources of top-down feature-based attention have been found in prefrontal cortex where sustained activity encodes the attended feature ( Bichot et al., 2015 ; Paneri and Gregoriou, 2017 ). Inactivating the ventral prearcuate area impairs performance on search tasks. From prefrontal areas, attention signals are believed to travel in a reverse hierarchical way wherein higher visual areas send inputs to those below them ( Ahissar and Hochstein, 2000 ).

A closely related topic to feature attention is object attention. Here, attention is not deployed to an abstract feature in advance of a visual stimulus, but rather it is applied to a particular object in the visual scene ( Chen, 2012 ). The initial feedforward pass of activity through the visual hierarchy is able to pre-attentively segregate objects from their backgrounds in parallel across the visual field, provided these objects have stark and salient differences from the background. In more crowded or complex visual scenes, recurrent and serial processing is needed in order to identify different objects ( Lamme and Roelfsema, 2000 ). Serial processing involves moving limited attentional resources from one location in the image to another; it can take the form of shifts in either covert or overt spatial attention ( Buschman and Miller, 2009 ). Recurrent connections in the visual system—that is, both horizontal connections from nearby neurons in the same visual area and feedback connections from those in higher visual areas—aid in figure-ground segregation and object identification. The question of how the brain performs perceptual grouping of low-level features into a coherent object identity has been studied for nearly a century. It is believed that attention may be required for grouping, particularly for novel or complex objects ( Roelfsema and Houtkamp, 2011 ). This may be especially important in visual search tasks that require locating an object that is defined by a conjunction of several features.

Neurally, the effects of object-based attention can spread slowly through space as parts of an object are mentally traced ( Roelfsema et al., 1998 ). Switching attention to a location outside an object appears to incur a greater cost than switching to the same distance away but within the object ( Brown and Denney, 2007 ). In addition, once attention is applied to a visual object, it is believed to activate feature-based attention for the different features of that object across the visual field ( O'Craven et al., 1999 ).

Another form of attention sometimes referred to as feature attention involves attending to an entire feature dimension. An example of this is the Stroop test, wherein the names of colors are written in different colored ink and subjects either need to read the word itself or say the color of the ink. Here attention cannot be deployed to a specific feature in advance, only to the dimensions word or color. Neurally, the switch between dimensions appears to impact sensory coding in the visual stream and is controlled by frontal areas ( Liu et al., 2003 ).

2.2.3. Computational Models of Visual Attention

Visual attention, being one of the most heavily-studied topics in the neuroscience of attention, has inspired many computational models of how attention works. In general, these models synthesize various neurophysiological findings in order to help explain how the behavioral impacts of attention arise ( Heinke and Humphreys, 2005 ).

Several computational models meant to calculate saliency have been devised ( Itti and Koch, 2001 ). These models use low-level visual feature detectors—usually designed to match those in the visual system—to create an image-specific saliency map that can predict the saccade patterns of humans in response to the same image. Another approach to calculating saliency based on information theoretic first principles has also been explored and was able to account for certain visual search behaviors ( Bruce and Tsotsos, 2009 ).
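As a rough illustration of how low-level feature contrast yields a saliency map, the sketch below scores each pixel of a small intensity image by how much it differs from the mean of its immediate neighbors. Published saliency models combine several feature channels across multiple spatial scales; this single-channel, single-scale version and its function names are simplifying assumptions.

```python
def center_surround_saliency(image):
    """Absolute difference between each pixel and the mean of its neighbors.

    Assumes a rectangular image with at least two pixels.
    """
    rows, cols = len(image), len(image[0])
    saliency = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            neighbors = [
                image[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c)
            ]
            saliency[r][c] = abs(image[r][c] - sum(neighbors) / len(neighbors))
    return saliency

def most_salient_location(saliency):
    """Row and column of the peak of the saliency map."""
    locations = (
        (r, c) for r in range(len(saliency)) for c in range(len(saliency[0]))
    )
    return max(locations, key=lambda rc: saliency[rc[0]][rc[1]])

# A single bright pixel on a dark background pops out.
image = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
peak = most_salient_location(center_surround_saliency(image))  # (1, 1)
```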

Some of the behavioral and neural correlates of attention are similar whether the attention is bottom-up or top-down. In the Biased Competition Model of attention, stimuli compete against each other to dominate the neural response ( Desimone, 1998 ). Attention (bottom-up or top-down) can thus work by biasing this competition toward the stimulus that is the target of attention. While the Biased Competition Model is sometimes used simply as a “word model” to guide intuition, explicit computational instantiations of it have also been built. A hierarchical model of the visual pathway that included top-down biasing as well as local competition mediated through horizontal connections was able to replicate multiple neural effects of attention ( Deco and Rolls, 2004 ). A model embodying similar principles but using spiking neurons was also implemented ( Deco and Rolls, 2005 ).
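One common way to make the biased competition idea concrete is to treat a neuron's response to two stimuli in its receptive field as a weighted average of its responses to each stimulus alone, with attention increasing the weight of the attended stimulus. The sketch below follows that simplification; it is not the hierarchical model of Deco and Rolls, and the firing rates and weights are invented.

```python
def pair_response(rate_1, rate_2, weight_1=1.0, weight_2=1.0):
    """Weighted average of the responses to two stimuli presented together."""
    return (weight_1 * rate_1 + weight_2 * rate_2) / (weight_1 + weight_2)

# Hypothetical rates (spikes/s) to a preferred and a poor stimulus shown alone.
preferred, poor = 40.0, 10.0

unbiased = pair_response(preferred, poor)                       # 25.0
attend_preferred = pair_response(preferred, poor, weight_1=3.0)  # 32.5
attend_poor = pair_response(preferred, poor, weight_2=3.0)       # 17.5
```

Attention shifts the pair response toward the response that the attended stimulus would evoke on its own, which is the signature of competition being biased rather than of a uniform gain increase.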

Similar models have been constructed explicitly to deal with attribute naming tasks such as the Stroop test described above. The Selective Attention Model (SLAM), for example, has local competition in both the sensory encoding and motor output modules and can mimic known properties of response times in easier and more challenging Stroop-like tests ( Phaf et al., 1990 ).

Visual perception has been framed and modeled as a problem of Bayesian inference ( Lee and Mumford, 2003 ). Within this context, attention can help resolve uncertainty under settings where inference is more challenging, typically by modulating priors ( Rao, 2005 ). For example, in Chikkerur et al. (2010) spatial attention functions to reduce uncertainty about object identity and feature attention reduces spatial uncertainty. These principles can capture both behavioral and neural features of attention and can be implemented in a biologically-inspired neural model.
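The role of a prior in this framing can be shown with a three-location toy example: the posterior over where a target is located is proportional to the sensory likelihood times the prior, and a spatial cue is modeled as a sharpening of that prior. This is generic Bayes' rule rather than the specific model of Chikkerur et al.; all numbers are hypothetical.

```python
def posterior(likelihood, prior):
    """Normalize likelihood * prior over a discrete set of locations."""
    unnormalized = [l * p for l, p in zip(likelihood, prior)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]

# Weak sensory evidence that barely favors the middle location.
likelihood = [0.3, 0.4, 0.3]

flat_prior = [1 / 3, 1 / 3, 1 / 3]
cued_prior = [0.6, 0.2, 0.2]  # cue points to the first location

no_cue = posterior(likelihood, flat_prior)  # about [0.30, 0.40, 0.30]
cued = posterior(likelihood, cued_prior)    # about [0.56, 0.25, 0.19]
```

When the evidence is ambiguous, the cue-shaped prior dominates the posterior, which is one way of reading the claim that attention resolves uncertainty where inference is hard.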

The feature similarity gain model of attention (FSGM) is a description of the neural effects of top-down attention that can be applied in both the feature and spatial domain ( Treue and Trujillo, 1999 ). It says that the way in which a neuron's response is modulated by attention depends on that neuron's tuning. Tuning is a description of how a neuron responds to different stimuli, so according to the FSGM a neuron that prefers (that is, responds strongly to), e.g., the color blue, will have its activity enhanced by top-down attention to blue. The FSGM also says attention to non-preferred stimuli will cause a decrease in firing and that, whether increased or decreased, activity is scaled multiplicatively by attention. Though not initially defined as a computational model, this form of neural modulation has since been shown through modeling to be effective at enhancing performance on challenging visual tasks ( Lindsay and Miller, 2018 ).
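The multiplicative scaling described by the FSGM can be sketched for orientation tuning. The gain is greater than one when a neuron's preferred orientation matches the attended one and less than one when it is orthogonal. The cosine similarity function and the strength parameter `beta` are assumptions chosen for illustration; the model itself does not prescribe them.

```python
import math

def fsgm_gain(preferred_deg, attended_deg, beta=0.3):
    """Multiplicative gain that depends on tuning similarity.

    Orientation repeats every 180 degrees, so similarity is cos(2 * difference):
    +1 for matching orientations, -1 for orthogonal ones.
    """
    similarity = math.cos(math.radians(2 * (preferred_deg - attended_deg)))
    return 1.0 + beta * similarity

def modulated_rate(baseline_rate, preferred_deg, attended_deg, beta=0.3):
    """Scale a neuron's stimulus-driven rate by its attention gain."""
    return baseline_rate * fsgm_gain(preferred_deg, attended_deg, beta)

# Attention to 90 degrees: a matching neuron is enhanced, an orthogonal one suppressed.
enhanced = modulated_rate(20.0, 90, 90)   # about 26 spikes/s
suppressed = modulated_rate(20.0, 0, 90)  # about 14 spikes/s
```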

Other models conceptualize attention as a dynamic routing of information through a network. An implementation of this form of attention can be found in the Selective Attention for Identification Model (SAIM) ( Heinke and Humphreys, 2003 ). Here, attention routes information from the retina to a representation deemed the “focus of attention”; depending on the current task, different parts of the retinal representation will be mapped to the focus of attention.

2.2.4. Attention in Other Sensory Modalities

A famous example of the need for selective attention in audition is the “cocktail party problem”: the difficulty of focusing on the speech from one speaker in a crowded room of multiple speakers and other noises ( Bronkhorst, 2015 ). Solving the problem is believed to involve “early” selection, wherein low-level features of a voice such as pitch are used to determine which auditory information is passed on for further linguistic processing. Interestingly, selective auditory attention has the ability to control neural activity at even the earliest level of auditory processing, the cochlea ( Fritz et al., 2007 ).

Spatial and feature attention have also been explored in the somatosensory system. Subjects cued to expect a tap at different parts on their body are better able to detect the sensation when that cue is valid. However, these effects seem weaker than they are in the visual system ( Johansen-Berg and Lloyd, 2000 ). Reaction times are faster in a detection task when subjects are cued about the orientation of a stimulus on their finger ( Schweisfurth et al., 2014 ).

In a study that tested subjects' ability to detect a taste they had been cued for, validly-cued tastes were detected at lower concentrations than invalidly-cued ones ( Marks and Wheeler, 1998 ). This mimics the behavioral effects found with feature-based visual attention. Attention to olfactory features has not been thoroughly explored, though visually-induced expectations about a scent can aid its detection ( Gottfried and Dolan, 2003 ; Keller, 2011 ).

Attention can also be spread across modalities to perform tasks that require integration of multiple sensory signals. In general, the use of multiple congruent sensory signals aids detection of objects when compared to relying only on a single modality. Interestingly, some studies suggest that humans may have a bias for the visual domain, even when the signal from another domain is equally valid ( Spence, 2009 ). Specifically, the visual domain appears to dominate most in tasks that require identifying the spatial location of a cue ( Bertelson and Aschersleben, 1998 ). This can be seen most readily in ventriloquism, where the visual cue of the dummy's mouth moving overrides auditory evidence about the true location of the vocal source. Visual evidence can also override tactile evidence, for example, in the context of the rubber hand illusion ( Botvinick and Cohen, 1998 ).

Another effect of the cross-modal nature of sensory processing is that an attentional cue in one modality can cause an orienting of attention in another modality ( Spence and Driver, 2004 ). Generally, the attention effects in the non-cued modality are weaker. This cross-modal interaction can occur in the context of both endogenous (“top-down”) and exogenous (“bottom-up”) attention.

2.3. Attention and Executive Control

With multiple simultaneous competing tasks, a central controller is needed to decide which to engage in and when. What's more, how to best execute tasks can depend on history and context. Combining sensory inputs with past knowledge in order to coordinate multiple systems for the job of efficient task selection and execution is the role of executive control, and this control is usually associated with the prefrontal cortex ( Miller and Buschman, 2014 ). As mentioned above, sources of top-down visual attention have also been located in prefrontal regions. Attention can reasonably be thought of as the output of executive control. The executive control system must thus select the targets of attention and communicate that selection to the systems responsible for implementing it. According to the reverse hierarchy theory described above, higher areas signal to those from which they get input, which in turn send the signal on to those below them, and so on ( Ahissar and Hochstein, 2000 ). This means that, at each point, the instructions for attention must be transformed into a representation that makes sense for the targeted region. Through this process, the high-level goals of the executive control region can lead to very specific changes, for example, in early sensory processing.

Executive control and working memory are also intertwined, as the ability to make use of past information as well as to keep a current goal in mind requires working memory. Furthermore, working memory is frequently identified as sustained activity in prefrontal areas. A consequence of the three-way relationship between executive control, working memory, and attention is that the contents of working memory can impact attention, even when not desirable for the task ( Soto et al., 2008 ). For example, if a subject has to keep an object in working memory while simultaneously performing a visual search for a separate object, the presence of the stored object in the search array can negatively interfere with the search ( Soto et al., 2005 ). This suggests that working memory can interfere with the executive control of attention. However, there still appear to be additional elements of that control that working memory alone does not disrupt. This can be seen in studies wherein visual search performance is even worse when subjects believe they will need to report the memorized item but are shown a search array for the attended item instead ( Olivers and Eimer, 2011 ). This suggests that, while all objects in working memory may have some influence over attention, the executive controller can choose which will have the most.

Beyond the flexible control of attention within a sensory modality, attention can also be shifted between modalities. Behavioral experiments indicate that switching attention either between two different tasks within a sensory modality (for example, going from locating a visual object to identifying it) or between sensory modalities (switching from an auditory task to a visual one) incurs a computational cost ( Pashler, 2000 ). This cost is usually measured as the extent to which performance is worse on trials just after the task has been switched vs. those where the same task is being repeated. Interestingly, task switching within a modality seems to incur a larger cost than switching between modalities ( Murray et al., 2009 ). A similar result is found when switching within or across modes of response (for example, pressing a button vs. giving a verbal report), suggesting this is not specific to sensory processing ( Arrington et al., 2003 ). Such findings are believed to stem from the fact that switching within a modality requires a reconfiguration of the same neural circuits, which is more difficult than merely engaging the circuitry of a different sensory system. An efficient executive controller would need to be aware of these costs when deciding to shift attention and ideally try to minimize them; it has been shown that switch costs can be reduced with training ( Gopher, 1996 ).
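
The switch-cost measure described above (performance on trials just after a switch vs. trials where the task repeats) can be computed as in the following sketch. It assumes reaction time as the performance measure and a simple list of (task label, reaction time) pairs; the function name `switch_cost` and the trial data are hypothetical and purely illustrative, not drawn from any cited study.

```python
from statistics import mean

def switch_cost(trials):
    """Mean reaction time on switch trials minus mean on repeat trials.

    trials: list of (task_label, reaction_time_ms) in presentation order.
    The first trial has no predecessor and is excluded. Raises
    statistics.StatisticsError if there are no switch or no repeat trials.
    """
    switch_rts, repeat_rts = [], []
    for (prev_task, _), (task, rt) in zip(trials, trials[1:]):
        if task != prev_task:
            switch_rts.append(rt)   # task changed relative to previous trial
        else:
            repeat_rts.append(rt)   # same task as previous trial
    return mean(switch_rts) - mean(repeat_rts)

# Made-up reaction times (ms) for a block alternating between two visual tasks.
trials = [
    ("locate", 500), ("locate", 480),
    ("identify", 620), ("identify", 510),
    ("locate", 600), ("locate", 490),
]
cost = switch_cost(trials)
print(f"switch cost: {cost:.1f} ms")
```

A positive value indicates slower responses immediately after a switch. Comparing this quantity across conditions (within-modality vs. between-modality switches, or before vs. after training) is one way the comparisons discussed above could be expressed.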

The final question regarding the executive control of attention is how it evolves with learning. Eye movement studies indicate that searched-for items can be detected more rapidly in familiar settings than in novel ones, suggesting that previously-learned associations guide overt attention ( Chun and Jiang, 1998 ). Such benefits are believed to rely on the hippocampus ( Aly and Turk-Browne, 2017 ). In general, however, learning how to direct attention is not as well studied as other aspects of the attention process. Some studies have shown that subjects can enhance their ability to suppress irrelevant task information, and the generality of that suppression depends on the training procedure ( Kelley and Yantis, 2009 ). Looking at the neural correlates of attention learning, imaging results suggest that the neural changes associated with learning do not occur in the sensory pathways themselves but rather in areas more associated with attentional control ( Kelley and Yantis, 2010 ). Though not always easy to study, the development of attentional systems in infancy and childhood may provide further clues as to how attention can be learned ( Reynolds and Romano, 2016 ).

2.4. Attention and Memory

Attention and memory have many possible forms of interaction. If memory has a limited capacity, for example, it makes sense for the brain to be selective about what is allowed to enter it. In this way, the ability of attention to dynamically select a subset of total information is well-matched to the needs of the memory system. In the other direction, deciding to recall a specific memory is a choice about how to deploy limited resources. Therefore, both memory encoding and retrieval can rely on attention.

The role of attention in memory encoding appears quite strong ( Aly and Turk-Browne, 2017 ). For information to be properly encoded into memory, it is best for it to be the target of attention. When subjects are asked to memorize a list of words while simultaneously engaging in a secondary task that divides their attention, their ability to consciously recall those words later is impaired (though their ability to recognize the words as familiar is not so affected) ( Gardiner and Parkin, 1990 ). Imaging studies have shown that increasing the difficulty of the secondary task weakens the pattern of activity related to memory encoding in the left ventral inferior frontal gyrus and anterior hippocampus and increases the representation of secondary task information in dorsolateral prefrontal and superior parietal regions ( Uncapher and Rugg, 2005 ). Therefore, when limited neural processing power is diverted away from the task of encoding, memory suffers. Attention has also been implicated in the encoding of spatially-defined memories and appears to stabilize the representations of place cells ( Muzzio et al., 2009 ).

Implicit statistical learning can also be biased by attention. For example, in Turk-Browne et al. (2005) subjects watched a stream of stimuli comprised of red and green shapes. The task was to detect when a shape of the attended color appeared twice in a row. Unbeknownst to the subjects, certain statistical regularities existed in the stream such that there were triplets of shapes likely to occur close together. When shown two sets of three shapes—one an actual co-occurring triplet and another a random selection of shapes of the same color—subjects recognized the real triplet as more familiar, but only if the triplets were from the attended color. The statistical regularities of the unattended shapes were not learned.

Yet some learning can occur even without conscious attention. For example, in Watanabe (2003), subjects engaged in a letter detection task located centrally in their visual field while random dot motion was shown in the background at sub-threshold contrast. The motion had 10% coherence in a direction that was correlated with the currently-presented letter. Before and after learning this task, subjects performed an above-threshold direction classification task. After learning the task, direction classification improved only for the direction associated with the targeted letters. This suggests a reward-related signal activated by the target led to learning about a non-attended component of the stimulus.

Many behavioral studies have explored the extent to which attention is needed for memory retrieval. For example, by asking subjects to simultaneously recall a list of previously-memorized words and engage in a secondary task like card sorting, researchers can determine if memory retrieval pulls from the same limited pool of attentional resources as the task. Some such studies have found that retrieval is impaired by the co-occurrence of an attention-demanding task, suggesting it is an attention-dependent process. The exact findings, however, depend on the details of the memory and non-memory tasks used ( Lozito and Mulligan, 2006 ).

Even if memory retrieval does not pull from shared attentional resources, it is still clear that some memories are selected for more vivid retrieval at any given moment than others. Therefore, a selection process must occur. An examination of neuroimaging results suggests that the same parietal brain regions responsible for the top-down allocation and bottom-up capture of attention may play analogous roles during memory retrieval ( Wagner et al., 2005 ; Ciaramelli et al., 2008 ).

Studies of memory retrieval usually look at medium- to long-term memory, but a mechanism for attention to items in working memory has also been proposed ( Manohar et al., 2019 ). It relies on two different mechanisms of working memory: synaptic traces for non-attended items and sustained activity for the attended one.

Some forms of memory occur automatically and within the sensory processing stream itself. Priming is a well-known phenomenon in psychology wherein the presence of a stimulus at one point in time impacts how later stimuli are processed or interpreted. For example, the word “doctor” may be recognized more quickly following the word “hospital” than the word “school.” In this way, priming requires a form of implicit memory to allow previous stimuli to impact current ones. Several studies on conceptual or semantic priming indicate that attention to the first stimulus is required for priming effects to occur ( Ballesteros and Mayas, 2015 ); this mirrors findings that attention is required for memory encoding more generally.

Most priming is positive, meaning that the presence of a stimulus at one time makes the detection and processing of it or a related stimulus more likely at a later time. In this way, priming can be thought of as biasing bottom-up attention. However, top-down attention can also create negative priming. In negative priming, when a stimulus that functioned as a distractor on the previous trial serves as the target of attention on the current trial, performance suffers ( Frings et al., 2015 ). This may stem from a holdover effect wherein the mechanisms of distractor suppression are still activated for the now-target stimulus.