Evaluation Research Design: Examples, Methods & Types

As you engage in tasks, you will need to take intermittent breaks to determine how much progress has been made and if any changes need to be effected along the way. This is very similar to what organizations do when they carry out evaluation research.

The evaluation research methodology has become one of the most important approaches for organizations as they strive to create products, services, and processes that speak to the needs of target users. In this article, we will show you how your organization can conduct successful evaluation research using Formplus.

What is Evaluation Research?

Also known as program evaluation, evaluation research is a common research design that entails carrying out a structured assessment of the value of resources committed to a project or specific goal. It often adopts social research methods to gather and analyze useful information about organizational processes and products.

As a type of applied research, evaluation research is typically associated with real-life scenarios within organizational contexts. This means that the researcher will need to leverage common workplace skills, including interpersonal skills and teamwork, to arrive at objective research findings that will be useful to stakeholders.

Characteristics of Evaluation Research

- Research Environment: Evaluation research is conducted in the real world; that is, within the context of an organization.

- Research Focus: Evaluation research is primarily concerned with measuring the outcomes of a process rather than the process itself.

- Research Outcome: Evaluation research is employed for strategic decision making in organizations.

- Research Goal: The goal of program evaluation is to determine whether a process has yielded the desired result(s).

- This type of research protects the interests of stakeholders in the organization.

- It often represents a middle-ground between pure and applied research.

- Evaluation research is both detailed and continuous. It pays attention to performative processes rather than descriptions.

- Research Process: This research design utilizes qualitative and quantitative research methods to gather relevant data about a product or action-based strategy. These methods include observation, tests, and surveys.

Types of Evaluation Research

The Encyclopedia of Evaluation (Mathison, 2004) treats forty-two different evaluation approaches and models ranging from “appreciative inquiry” to “connoisseurship” to “transformative evaluation”. Common types of evaluation research include the following:

- Formative Evaluation

Formative evaluation or baseline survey is a type of evaluation research that involves assessing the needs of the users or target market before embarking on a project. Formative evaluation is the starting point of evaluation research because it sets the tone of the organization’s project and provides useful insights for other types of evaluation.

- Mid-term Evaluation

Mid-term evaluation entails assessing how far a project has come and determining if it is in line with the set goals and objectives. Mid-term reviews allow the organization to determine if a change or modification of the implementation strategy is necessary, and it also serves for tracking the project.

- Summative Evaluation

This type of evaluation is also known as end-term evaluation or project-completion evaluation, and it is conducted immediately after the completion of a project. Here, the researcher examines the value and outputs of the program within the context of the projected results.

Summative evaluation allows the organization to measure the degree of success of a project. Such results can be shared with stakeholders, target markets, and prospective investors.

- Outcome Evaluation

Outcome evaluation is primarily target-audience oriented because it measures the effects of the project, program, or product on the users. This type of evaluation views the outcomes of the project through the lens of the target audience and it often measures changes such as knowledge-improvement, skill acquisition, and increased job efficiency.

- Appreciative Inquiry

Appreciative inquiry is a type of evaluation research that pays attention to result-producing approaches. It is predicated on the belief that an organization grows in whatever direction its stakeholders pay primary attention to, such that if all the attention is focused on problems, identifying them becomes easy.

In carrying out appreciative inquiry, the researcher identifies the factors directly responsible for the positive results realized in the course of a project, analyses the reasons for these results, and intensifies the utilization of these factors.

Evaluation Research Methodology

There are four major evaluation research methods, namely: output measurement, input measurement, impact assessment, and service quality.

- Output/Performance Measurement

Output measurement is a method employed in evaluative research that shows the results of an activity undertaken by an organization. In other words, performance measurement pays attention to the results achieved by the resources invested in a specific activity or organizational process.

More than investing resources in a project, organizations must be able to track the extent to which these resources have yielded results, and this is where performance measurement comes in. Output measurement allows organizations to pay attention to the effectiveness and impact of a process rather than just the process itself.

Other key indicators of performance measurement include user-satisfaction, organizational capacity, market penetration, and facility utilization. In carrying out performance measurement, organizations must identify the parameters that are relevant to the process in question, their industry, and the target markets.

5 Performance Evaluation Research Questions Examples

- What is the cost-effectiveness of this project?

- What is the overall reach of this project?

- How would you rate the market penetration of this project?

- How accessible is the project?

- Is this project time-efficient?

- Input Measurement

In evaluation research, input measurement entails assessing the amount of resources committed to a project or goal in any organization. This is one of the most common indicators in evaluation research because it allows organizations to track their investments.

The most common indicator of input measurement is the budget, which allows organizations to evaluate and limit expenditure for a project. It is also important to measure non-monetary investments such as human capital (the number of persons needed for successful project execution) and production capital.

5 Input Evaluation Research Questions Examples

- What is the budget for this project?

- What is the timeline of this process?

- How many employees have been assigned to this project?

- Do we need to purchase new machinery for this project?

- How many third-parties are collaborators in this project?

- Impact/Outcomes Assessment

In impact assessment, the evaluation researcher focuses on how the product or project affects target markets, both directly and indirectly. Outcomes assessment is somewhat challenging because many times, it is difficult to measure the real-time value and benefits of a project for the users.

In assessing the impact of a process, the evaluation researcher must pay attention to the improvement recorded by the users as a result of the process or project in question. Hence, it makes sense to focus on cognitive and affective changes, expectation-satisfaction, and similar accomplishments of the users.

5 Impact Evaluation Research Questions Examples

- How has this project affected you?

- Has this process affected you positively or negatively?

- What role did this project play in improving your earning power?

- On a scale of 1-10, how excited are you about this project?

- How has this project improved your mental health?

- Service Quality

Service quality is the evaluation research method that accounts for any differences between the expectations of the target markets and their impression of the undertaken project. Hence, it pays attention to the overall service quality assessment carried out by the users.

It is not uncommon for organizations to build the expectations of target markets as they embark on specific projects. Service quality evaluation allows these organizations to track the extent to which the actual product or service delivery fulfils the expectations.
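The difference between expectations and impressions described above can be expressed as a simple gap score. The following is a minimal sketch, assuming each respondent rated both their expectation and their actual impression on the same 1-10 scale; the ratings and the `quality_gaps` helper are hypothetical and only illustrate the idea.

```python
from statistics import mean

# Hypothetical 1-10 ratings from the same five respondents.
expected = [8, 9, 7, 8, 9]    # what each respondent expected beforehand
perceived = [7, 9, 6, 8, 7]   # how each respondent rated the actual delivery


def quality_gaps(expected, perceived):
    """Return one gap per respondent: perceived rating minus expected rating."""
    if len(expected) != len(perceived):
        raise ValueError("ratings must be paired per respondent")
    return [p - e for e, p in zip(expected, perceived)]


gaps = quality_gaps(expected, perceived)
mean_gap = mean(gaps)  # below zero means delivery fell short of expectations

print("Per-respondent gaps:", gaps)
print("Mean gap:", mean_gap)
```

A mean gap near zero suggests that delivery matched expectations, while a clearly negative value points to areas where the service fell short.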

5 Service Quality Evaluation Questions

- On a scale of 1-10, how satisfied are you with the product?

- How helpful was our customer service representative?

- How satisfied are you with the quality of service?

- How long did it take to resolve the issue at hand?

- How likely are you to recommend us to your network?
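One common way to summarize answers to the "how likely are you to recommend us" question is the Net Promoter Score. The sketch below assumes the question is asked on a 0-10 scale, which the list above does not specify, and uses made-up ratings; `net_promoter_score` is an illustrative helper, not part of any survey tool.

```python
def net_promoter_score(ratings):
    """Percentage of promoters (9-10) minus percentage of detractors (0-6)."""
    if not ratings:
        raise ValueError("no ratings supplied")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical answers from ten respondents on a 0-10 scale.
ratings = [10, 9, 8, 7, 6, 10, 3, 9, 8, 10]
nps = net_promoter_score(ratings)
print("Net Promoter Score:", nps)
```

Respondents who answer 7 or 8 count toward the total but are neither promoters nor detractors, so the score ranges from -100 to 100.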

Uses of Evaluation Research

- Evaluation research is used by organizations to measure the effectiveness of activities and identify areas needing improvement. Findings from evaluation research are key to project and product advancements and are very influential in helping organizations realize their goals efficiently.

- The findings arrived at from evaluation research serve as evidence of the impact of the project embarked on by an organization. This information can be presented to stakeholders, customers, and can also help your organization secure investments for future projects.

- Evaluation research helps organizations to justify their use of limited resources and choose the best alternatives.

- It is also useful in pragmatic goal setting and realization.

- Evaluation research provides detailed insights into projects embarked on by an organization. Essentially, it allows all stakeholders to understand multiple dimensions of a process, and to determine strengths and weaknesses.

- Evaluation research also plays a major role in helping organizations to improve their overall practice and service delivery. This research design allows organizations to weigh existing processes through feedback provided by stakeholders, and this informs better decision making.

- Evaluation research is also instrumental to sustainable capacity building. It helps you to analyze demand patterns and determine whether your organization requires more funds, upskilling or improved operations.

Data Collection Techniques Used in Evaluation Research

In gathering useful data for evaluation research, the researcher often combines quantitative and qualitative research methods. Qualitative research methods allow the researcher to gather information relating to intangible values such as market satisfaction and perception.

On the other hand, quantitative methods are used by the evaluation researcher to assess numerical patterns, that is, quantifiable data. These methods help you measure impact and results, although they may not help you understand the context of the process.

Quantitative Methods for Evaluation Research

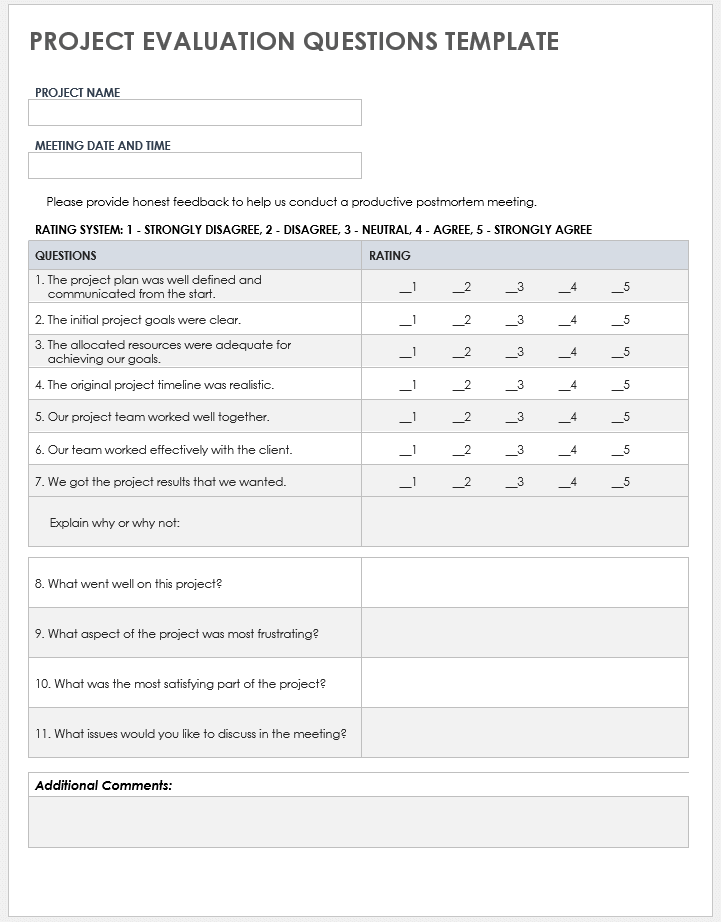

- Surveys

A survey is a quantitative method that allows you to gather information about a project from a specific group of people. Surveys are largely context-based and limited to target groups who are asked a set of structured questions in line with the predetermined context.

Surveys usually consist of close-ended questions that allow the evaluative researcher to gain insight into several variables including market coverage and customer preferences. Surveys can be carried out physically using paper forms or online through data-gathering platforms like Formplus.

- Questionnaires

A questionnaire is a common quantitative research instrument deployed in evaluation research. Typically, it is an aggregation of different types of questions or prompts which help the researcher to obtain valuable information from respondents.

- Polls

A poll is a common method of opinion-sampling that allows you to weigh the perception of the public about issues that affect them. One way to improve accuracy in polling is to conduct polls online using platforms like Formplus.

Polls are often structured as Likert questions and the options provided always account for neutrality or indecision. Conducting a poll allows the evaluation researcher to understand the extent to which the product or service satisfies the needs of the users.
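As an illustration of how Likert-style poll responses might be summarized, here is a minimal sketch. The five-point scale labels, the sample responses, and the `summarize` helper are assumptions made for this example; your own poll may use different wording or a different number of points.

```python
from collections import Counter

# Assumed five-point scale with a neutral midpoint.
SCALE = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]

# Hypothetical responses to a single poll statement.
responses = [
    "Agree", "Neutral", "Strongly agree", "Agree",
    "Disagree", "Agree", "Neutral", "Strongly agree",
]


def summarize(responses):
    """Return the share of each scale point and the combined favorable share."""
    unknown = set(responses) - set(SCALE)
    if unknown:
        raise ValueError(f"unexpected answers: {sorted(unknown)}")
    counts = Counter(responses)
    total = len(responses)
    shares = {label: counts.get(label, 0) / total for label in SCALE}
    favorable = shares["Agree"] + shares["Strongly agree"]
    return shares, favorable


shares, favorable = summarize(responses)
for label in SCALE:
    print(f"{label}: {shares[label]:.1%}")
print(f"Favorable (agree or strongly agree): {favorable:.1%}")
```

Reporting the neutral share separately keeps undecided respondents visible instead of folding them into either side.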

Qualitative Methods for Evaluation Research

- One-on-One Interview

An interview is a structured conversation involving two participants; usually the researcher and the user or a member of the target market. One-on-One interviews can be conducted physically, via the telephone and through video conferencing apps like Zoom and Google Meet.

- Focus Groups

A focus group is a research method that involves interacting with a limited number of persons within your target market, who can provide insights on market perceptions and new products.

- Qualitative Observation

Qualitative observation is a research method that allows the evaluation researcher to gather useful information from the target audience through a variety of subjective approaches. This method is more extensive than quantitative observation because it deals with a smaller sample size, and it also utilizes inductive analysis.

- Case Studies

A case study is a research method that helps the researcher to gain a better understanding of a subject or process. Case studies involve in-depth research into a given subject, to understand its functionalities and successes.

How to Use Formplus Online Form Builder for Evaluation Surveys

- Sign into Formplus

In the Formplus builder, you can easily create your evaluation survey by dragging and dropping preferred fields into your form. To access the Formplus builder, you will need to create an account on Formplus.

Once you do this, sign in to your account and click on “Create Form” to begin.

- Edit Form Title

Click on the field provided to input your form title, for example, “Evaluation Research Survey”.

Click on the edit button to edit the form.

Add Fields: Drag and drop preferred form fields into your form in the Formplus builder inputs column. There are several field input options for surveys in the Formplus builder.

Edit fields

Click on “Save”

Preview form.

- Form Customization

With the form customization options in the form builder, you can easily change the outlook of your form and make it more unique and personalized. Formplus allows you to change your form theme, add background images, and even change the font according to your needs.

- Multiple Sharing Options

Formplus offers multiple form sharing options which enable you to easily share your evaluation survey with survey respondents. You can use the direct social media sharing buttons to share your form link to your organization’s social media pages.

You can send out your survey form as email invitations to your research subjects too. If you wish, you can share your form’s QR code or embed it on your organization’s website for easy access.

Conclusion

Conducting evaluation research allows organizations to determine the effectiveness of their activities at different phases. This type of research can be carried out using qualitative and quantitative data collection methods including focus groups, observation, telephone and one-on-one interviews, and surveys.

Online surveys created and administered via data collection platforms like Formplus make it easier for you to gather and process information during evaluation research. With Formplus's multiple form sharing options, it is even easier for you to gather useful data from target markets.


- busayo.longe


Evaluation Research: Definition, Methods and Examples


What is evaluation research?

Evaluation research, also known as program evaluation, refers to a research purpose rather than a specific method. Evaluation research is the systematic assessment of the worth or merit of the time, money, effort, and resources spent in order to achieve a goal.

Evaluation research is closely related to but slightly different from more conventional social research. It uses many of the same methods used in traditional social research, but because it takes place within an organizational context, it requires team skills, interpersonal skills, management skills, political smartness, and other skills that conventional social research does not demand to the same degree. Evaluation research also requires one to keep in mind the interests of the stakeholders.

Evaluation research is a type of applied research, and so it is intended to have some real-world effect. Many methods, such as surveys and experiments, can be used to do evaluation research. The evaluation research process, which includes data analysis and reporting, is rigorous and systematic; it involves collecting data about organizations, processes, projects, services, and/or resources. Evaluation research enhances knowledge and decision-making, and leads to practical applications.


Why do evaluation research?

The common goal of most evaluations is to extract meaningful information from the audience and provide valuable insights to evaluators such as sponsors, donors, client-groups, administrators, staff, and other relevant constituencies. Most often, feedback is perceived as useful if it helps in decision-making. However, evaluation research does not always produce findings that can be applied elsewhere, and sometimes it fails to influence short-term decisions. It is equally true that an evaluation might initially seem to have no influence but can have a delayed impact when the situation is more favorable. In spite of this, there is general agreement that the major goal of evaluation research should be to improve decision-making through the systematic utilization of measurable feedback.

Below are some of the benefits of evaluation research:

- Gain insights about a project or program and its operations

Evaluation research lets you understand what works and what doesn’t, where you were, where you are, and where you are headed. You can find areas for improvement and identify strengths. This helps you figure out what you need to focus on more and whether there are any threats to your business. You can also find out whether there are hidden sectors in the market that are still untapped.

- Improve practice

It is essential to gauge your past performance and understand what went wrong in order to deliver better services to your customers. Unless it is a two-way communication, there is no way to improve on what you have to offer. Evaluation research gives an opportunity to your employees and customers to express how they feel and if there’s anything they would like to change. It also lets you modify or adopt a practice such that it increases the chances of success.

- Assess the effects

After evaluating the efforts, you can see how well you are meeting objectives and targets. Evaluations let you measure if the intended benefits are really reaching the targeted audience and if yes, then how effectively.

- Build capacity

Evaluations help you analyze demand patterns and predict whether you will need more funds, upgraded skills, or more efficient operations. They also let you find gaps in the production-to-delivery chain and possible ways to fill them.

Methods of evaluation research

All market research methods involve collecting and analyzing data, making decisions about the validity of the information, and deriving relevant inferences from it. Evaluation research comprises planning, conducting, and analyzing the results, which includes using data collection techniques and applying statistical methods.

Some of the evaluation methods which are quite popular are input measurement, output or performance measurement, impact or outcomes assessment, quality assessment, process evaluation, benchmarking, standards, cost analysis, organizational effectiveness, program evaluation methods, and LIS-centered methods. There are also a few types of evaluations that do not always result in a meaningful assessment such as descriptive studies, formative evaluations, and implementation analysis. Evaluation research is more about information-processing and feedback functions of evaluation.

These methods can be broadly classified as quantitative and qualitative methods.

Quantitative methods

Quantitative research methods are used to measure anything tangible, and their outcome is an answer to questions such as:

- Who was involved?

- What were the outcomes?

- What was the price?

The best way to collect quantitative data is through surveys, questionnaires, and polls. You can also create pre-tests and post-tests, review existing documents and databases, or gather clinical data.

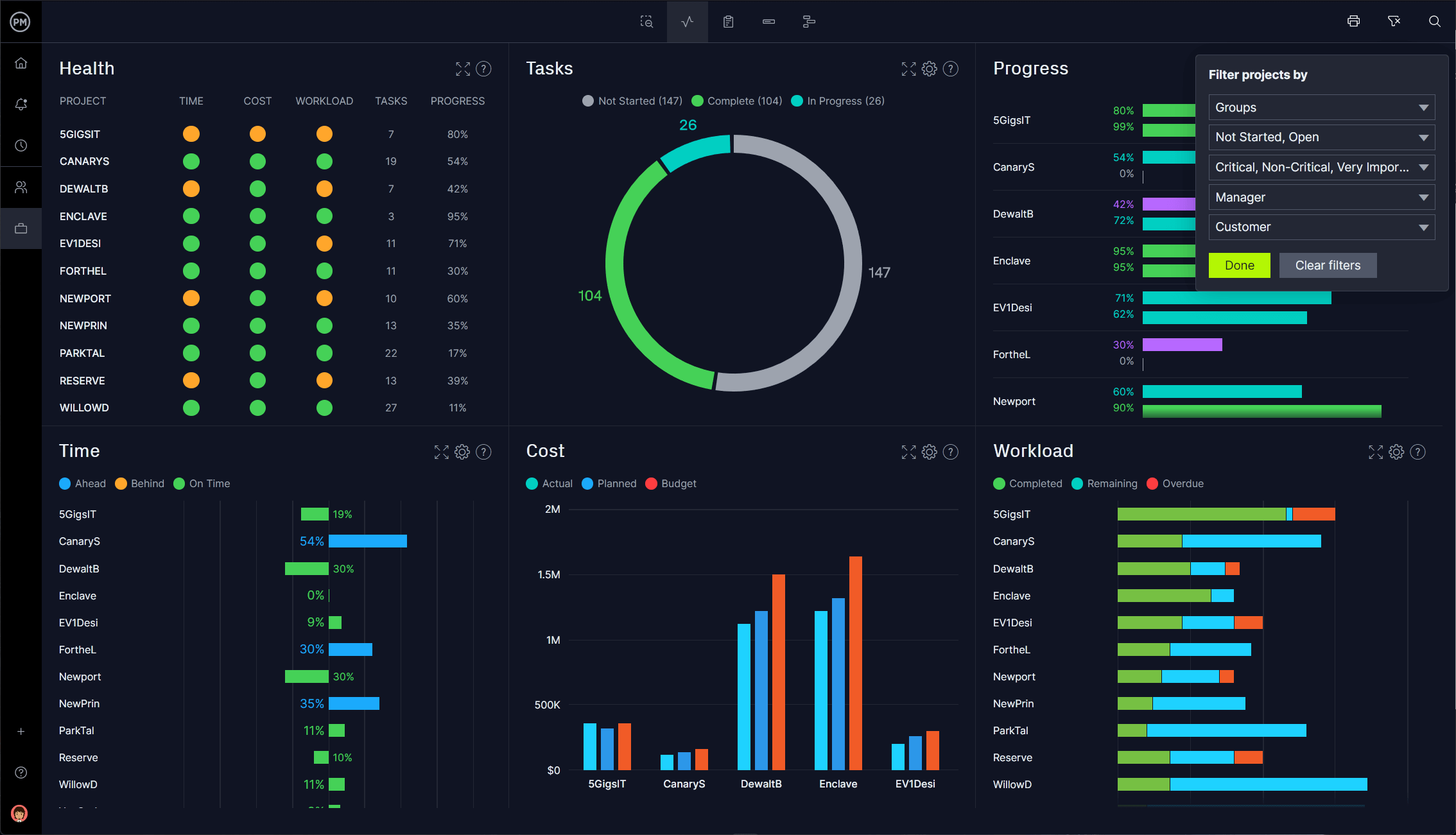

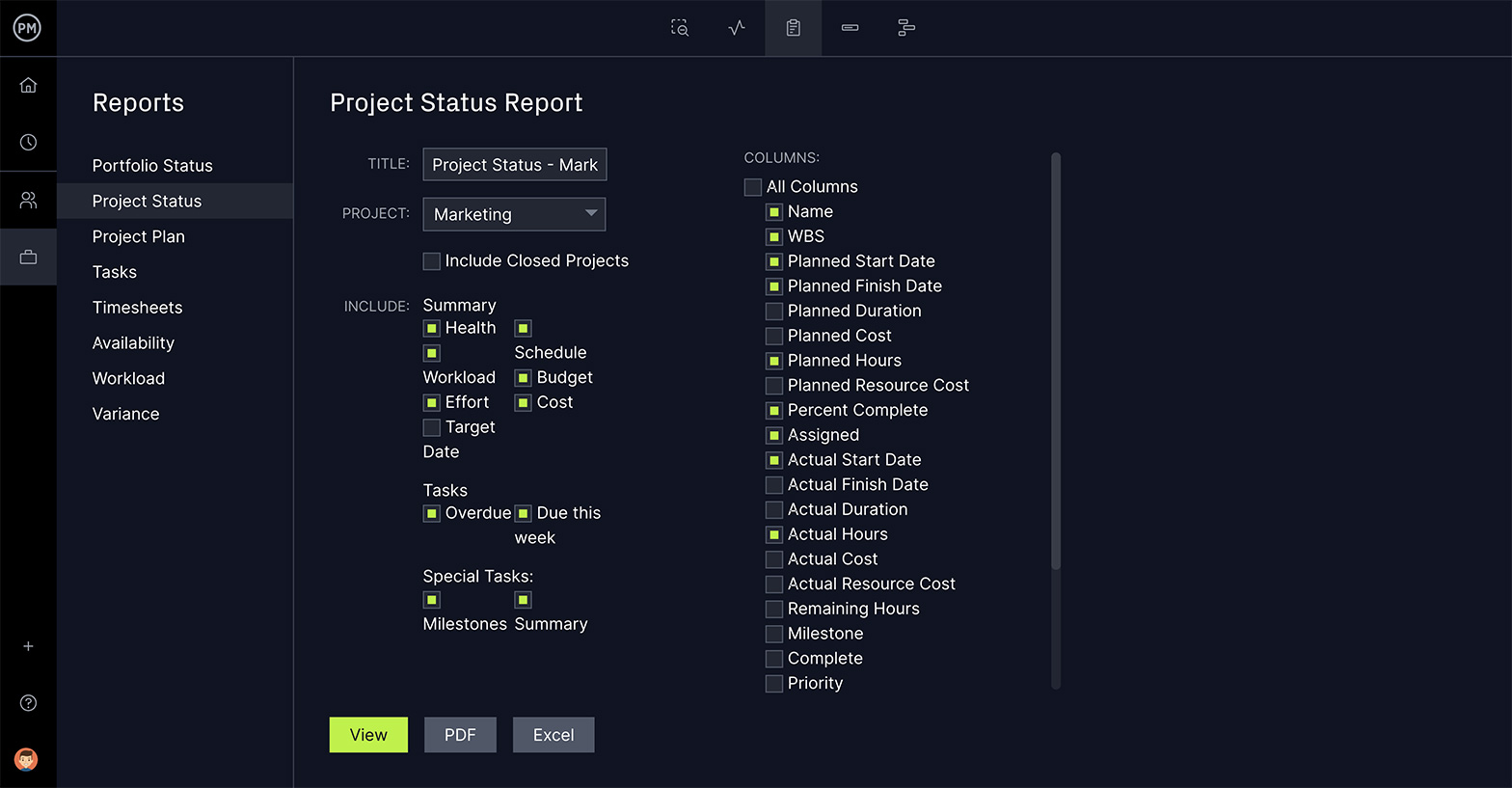

Surveys are used to gather the opinions, feedback, or ideas of your employees or customers and consist of various question types. They can be conducted face-to-face, by telephone, by mail, or online. Online surveys do not require an interviewer and are far more efficient and practical. You can view the survey results on the dashboard of a research tool and dig deeper using filter criteria such as age, gender, and location. You can also build survey logic such as branching, quotas, chain surveys, and looping into the survey questions to reduce the time needed both to create and to respond to the survey. You can also generate reports that apply statistical formulae and present the data in a form that is easy to absorb in meetings.


Quantitative data measure the depth and breadth of an initiative, for instance, the number of people who participated in a non-profit event or the number of people who enrolled in a new university course. Quantitative data collected before and after a program can show its results and impact.
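To make the before-and-after comparison concrete, here is a minimal sketch that computes the change in test scores for participants who completed both a pre-test and a post-test. The participant IDs, scores, and the `score_changes` helper are hypothetical.

```python
from statistics import mean

# Hypothetical test scores keyed by participant ID.
pre_scores = {"p1": 52, "p2": 61, "p3": 47, "p4": 70, "p5": 58}
post_scores = {"p1": 68, "p2": 66, "p3": 59, "p4": 71}  # p5 skipped the post-test


def score_changes(pre, post):
    """Return post minus pre for participants who took both tests."""
    shared = sorted(pre.keys() & post.keys())
    return {pid: post[pid] - pre[pid] for pid in shared}


changes = score_changes(pre_scores, post_scores)
average_change = mean(list(changes.values()))

print("Change per participant:", changes)
print("Average change:", average_change)
```

A positive average change is consistent with the program having an effect, but without a comparison group it does not show that the program caused the change.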

The accuracy of quantitative data to be used for evaluation research depends on how well the sample represents the population, the ease of analysis, and their consistency. Quantitative methods can fail if the questions are not framed correctly and not distributed to the right audience. Also, quantitative data do not provide an understanding of the context and may not be apt for complex issues.


Qualitative methods

Qualitative research methods are used where quantitative methods cannot solve the research problem, i.e., they are used to measure intangible values. They answer questions such as:

- What is the value added?

- How satisfied are you with our service?

- How likely are you to recommend us to your friends?

- What will improve your experience?


Qualitative data is collected through observation, interviews, case studies, and focus groups. The steps for creating a qualitative study involve examining, comparing and contrasting, and understanding patterns. Analysts conclude after identification of themes, clustering similar data, and finally reducing to points that make sense.

Observations may help explain behaviors as well as the social context that is generally not discovered by quantitative methods. Observations of behavior and body language can be done by watching a participant, recording audio or video. Structured interviews can be conducted with people alone or in a group under controlled conditions, or they may be asked open-ended qualitative research questions. Qualitative research methods are also used to understand a person’s perceptions and motivations.


The strength of group interviews such as focus groups is that group discussion can provide ideas and stimulate memories, with topics cascading as the discussion unfolds. The accuracy of qualitative data depends on how well contextual data explains complex issues and complements quantitative data. It helps answer “why” and “how” after “what” has been answered. The limitations of qualitative data for evaluation research are that they are subjective, time-consuming, costly, and difficult to analyze and interpret.


Survey software can be used for both quantitative and qualitative evaluation research methods. You can use the sample questions above for evaluation research and send a survey in minutes using research software. Using a research tool simplifies the process, from creating a survey and importing contacts to distributing the survey and generating reports that aid in research.

Examples of evaluation research

Evaluation research questions lay the foundation of a successful evaluation. They define the topics that will be evaluated. Keeping evaluation questions ready not only saves time and money, but also makes it easier to decide what data to collect, how to analyze it, and how to report it.

Evaluation research questions must be developed and agreed on in the planning stage; however, ready-made research templates can also be used.

Process evaluation research question examples:

- How often do you use our product in a day?

- Were approvals taken from all stakeholders?

- Can you report the issue from the system?

- Can you submit the feedback from the system?

- Was each task done as per the standard operating procedure?

- What were the barriers to the implementation of each task?

- Were any improvement areas discovered?

Outcome evaluation research question examples:

- How satisfied are you with our product?

- Did the program produce intended outcomes?

- What were the unintended outcomes?

- Has the program increased the knowledge of participants?

- Were the participants of the program employable before the course started?

- Do participants of the program have the skills to find a job after the course ended?

- Is the knowledge of participants better compared to those who did not participate in the program?


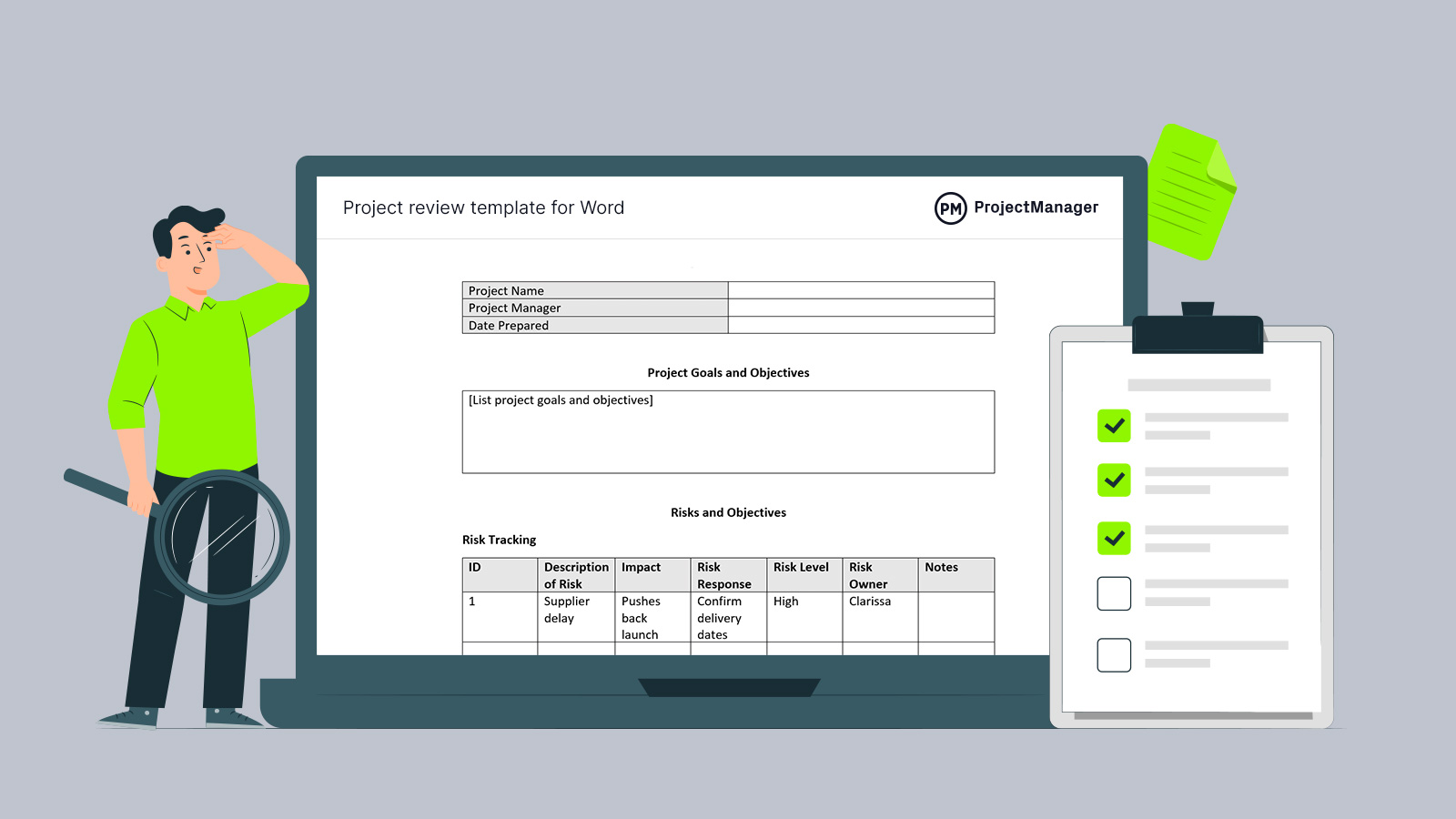

Project Evaluation Examples | 2024 Practical Guide with Templates For Beginners

Jane Ng • 03 May, 2024 • 13 min read

Whether you’re managing projects, running a business, or working as a freelancer, project evaluation plays a vital role in driving the growth of your business model. It offers a structured and systematic way to assess project performance, pinpoint areas that need improvement, and achieve optimal outcomes.

In this blog post, we’ll delve into project evaluation and cover its definition, benefits, key components, types, project evaluation examples, post-evaluation reporting, and how to create a project evaluation process.

Let’s explore how project evaluation can take your business toward new heights.

Table of Contents

- What Is Project Evaluation?

- Benefits of Project Evaluation

- Key Components of Project Evaluation

- Types of Project Evaluation

- Project Evaluation Examples

- Step-by-Step to Create Project Evaluation

- Post Evaluation (Report)

- Project Evaluation Templates

- Key Takeaways


What Is Project Evaluation?

Project evaluation is the assessment of a project’s performance, effectiveness, and outcomes. It involves analyzing data to see if the project met its goals and success criteria.

Project evaluation goes beyond simply measuring outputs and deliverables; it examines the overall impact and value generated by the project.

By learning from what worked and didn’t, organizations can improve their planning and make changes to get even better results next time. It’s like taking a step back to see the bigger picture and figure out how to make things even more successful.

Benefits of Project Evaluation

Project evaluation offers several key benefits that contribute to the success and growth of an organization, including:

- It improves decision-making: It helps organizations evaluate project performance, identify areas for improvement, and understand factors contributing to success or failure, so they can make more informed decisions about resource allocation, project prioritization, and strategic planning.

- It enhances project performance: Through project evaluation, organizations can identify strengths and weaknesses within their projects. This allows them to implement corrective measures to improve project outcomes.

- It helps to mitigate risks: By regularly assessing project progress, organizations can identify potential risks and take steps to reduce the possibility of project delays, budget overruns, and other unexpected issues.

- It promotes continuous improvement: By analyzing project failures, organizations can refine their project management practices. This iterative approach to improvement drives innovation, efficiency, and overall project success.

- It improves stakeholder engagement and satisfaction: Evaluating outcomes and gathering stakeholders’ feedback enables organizations to understand their needs, expectations, and satisfaction levels.

- It promotes transparency: Evaluation results can be communicated to stakeholders, demonstrating transparency and building trust. The results provide an objective project performance evaluation, ensuring that projects are aligned with strategic goals and resources are used efficiently.

1/ Clear Objectives and Criteria:

Project evaluation begins with establishing clear objectives and criteria for measuring success. These objectives and criteria provide a framework for evaluation and ensure alignment with the project’s goals.

Here are some project evaluation plan examples and questions that can help in defining clear objectives and criteria:

Questions to Define Clear Objectives:

- What specific goals do we want to achieve with this project?

- What measurable outcomes or results are we aiming for?

- How can we quantify success for this project?

- Are the objectives realistic and attainable within the given resources and timeframe?

- Are the objectives aligned with the organization’s strategic priorities?

Examples of Evaluation Criteria:

- Cost-effectiveness: Assessing if the project was completed within the allocated budget and delivered value for money.

- Timeline: Evaluating if the project was completed within the planned schedule and met milestones.

- Quality: Examining whether the project deliverables and outcomes meet the predetermined quality standards.

- Stakeholder satisfaction: Gathering feedback from stakeholders to gauge their satisfaction level with the project’s results.

- Impact: Measuring the project’s broader impact on the organization, customers, and community.

2/ Data Collection and Analysis:

Effective project evaluation relies on collecting relevant data to assess project performance. This includes gathering quantitative and qualitative data through various methods such as surveys, interviews, observations, and document analysis.

The collected data is then analyzed to gain insights into the project’s strengths, weaknesses, and overall performance. Here are some example questions when preparing to collect and analyze data:

- What specific data needs to be collected to evaluate the project’s performance?

- What methods and tools will be employed to collect the required data (e.g., surveys, interviews, observations, document analysis)?

- Who are the key stakeholders from whom data needs to be collected?

- How will the data collection process be structured and organized to ensure accuracy and completeness?

3/ Performance Measurement:

Performance measurement involves assessing the project’s progress, outputs, and outcomes against the established objectives and criteria. It includes tracking key performance indicators (KPIs) and evaluating the project’s adherence to schedules, budgets, quality standards, and stakeholder requirements.
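As a rough illustration of KPI tracking, the sketch below compares planned and actual figures for cost and schedule. The field names, the sample numbers, and the 10% tolerance are all assumptions made for this example, not values taken from any standard.

```python
from dataclasses import dataclass


@dataclass
class ProjectSnapshot:
    """Planned versus actual figures at the evaluation date (hypothetical fields)."""
    planned_cost: float
    actual_cost: float
    planned_days: int
    actual_days: int


def variance_pct(planned: float, actual: float) -> float:
    """Percentage by which the actual value exceeds (+) or undercuts (-) the plan."""
    return (actual - planned) * 100 / planned


def kpi_report(snapshot: ProjectSnapshot, tolerance_pct: float = 10.0) -> dict:
    """Compare cost and schedule variance against an assumed tolerance."""
    cost = variance_pct(snapshot.planned_cost, snapshot.actual_cost)
    schedule = variance_pct(snapshot.planned_days, snapshot.actual_days)

    def status(value: float) -> str:
        return "over tolerance" if value > tolerance_pct else "within tolerance"

    return {
        "cost_variance_pct": cost,
        "cost_status": status(cost),
        "schedule_variance_pct": schedule,
        "schedule_status": status(schedule),
    }


# Illustrative numbers only.
snapshot = ProjectSnapshot(planned_cost=100_000, actual_cost=112_000,
                           planned_days=90, actual_days=95)
print(kpi_report(snapshot))
```

In practice the tolerance would come from the success criteria agreed at the start of the project, and quality or stakeholder indicators would be tracked alongside these two.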

4/ Stakeholder Engagement:

Stakeholders are individuals or groups who are directly or indirectly affected by the project or have a significant interest in its outcomes. They can include project sponsors, team members, end-users, customers, community members, and other relevant parties.

Engaging stakeholders in the project evaluation process means involving them and seeking their perspectives, feedback, and insights. By engaging stakeholders, their diverse viewpoints and experiences are considered, ensuring a more comprehensive evaluation.

5/ Reporting and Communication:

The final key component of project evaluation is the reporting and communication of evaluation results. This involves preparing a comprehensive evaluation report that presents findings, conclusions, and recommendations.

Effective communication of evaluation results ensures that stakeholders are informed about the project’s performance, lessons learned, and potential areas for improvement.

There are generally four main types of project evaluation:

#1 – Performance Evaluation:

This type of evaluation focuses on assessing the performance of a project in terms of its adherence to project plans, schedules, budgets, and quality standards.

It examines whether the project is meeting its objectives, delivering the intended outputs, and effectively utilizing resources.

#2 – Outcomes Evaluation:

Outcomes evaluation assesses the broader impact and results of a project. It looks beyond the immediate outputs and examines the long-term outcomes and benefits generated by the project.

This evaluation type considers whether the project has achieved its desired goals, created positive changes, and contributed to the intended impacts.

#3 – Process Evaluation:

Process evaluation examines the effectiveness and efficiency of the project implementation process. It assesses the project management strategies, methodologies, and approaches used to execute the project.

This evaluation type focuses on identifying areas for improvement in project planning, execution, coordination, and communication.

#4 – Impact Evaluation:

Impact evaluation goes even further than outcomes evaluation and aims to determine the project’s causal relationship with the observed changes or impacts.

It seeks to understand the extent to which the achieved outcomes and impacts can be attributed to the project, taking into account external factors and potential alternative explanations.
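One common way to separate a project's effect from external factors is a difference-in-differences comparison: the change in a group that received the project is compared with the change in a similar group that did not. The sketch below is a minimal illustration with made-up numbers. It assumes a comparable control group exists and that both groups would have followed the same trend without the project.

```python
def difference_in_differences(treated_before: float, treated_after: float,
                              control_before: float, control_after: float) -> float:
    """Change in the treated group minus change in the control group."""
    treated_change = treated_after - treated_before
    control_change = control_after - control_before
    return treated_change - control_change


# Hypothetical outcome rates (per 100 people) before and after a project.
effect = difference_in_differences(
    treated_before=12.0, treated_after=8.0,
    control_before=11.0, control_after=10.0,
)
print(f"Estimated change attributable to the project: {effect:+.1f}")
```

The control group's change stands in for what would have happened anyway, so only the remaining difference is attributed to the project.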

*Note: These types of evaluation can be combined or tailored to suit the project’s specific needs and context.

Different project evaluation examples are as follows:

#1 – Performance Evaluation

A construction project aims to complete a building within a specific timeframe and budget. Performance evaluation would assess the project’s progress, adherence to the construction schedule, quality of workmanship, and utilization of resources.

#2 – Outcomes Evaluation

A non-profit organization implements a community development project aimed at improving literacy rates in disadvantaged neighborhoods. Outcomes evaluation would involve assessing literacy levels, school attendance, and community engagement.

#3 – Process Evaluation

An IT project involves the implementation of a new software system across a company’s departments. Process evaluation would examine the project’s implementation processes and activities.

#4 – Impact Evaluation

A public health initiative aims to reduce the prevalence of a specific disease in a targeted population. Impact evaluation would assess the project’s contribution to the reduction of disease rates and improvements in community health outcomes.

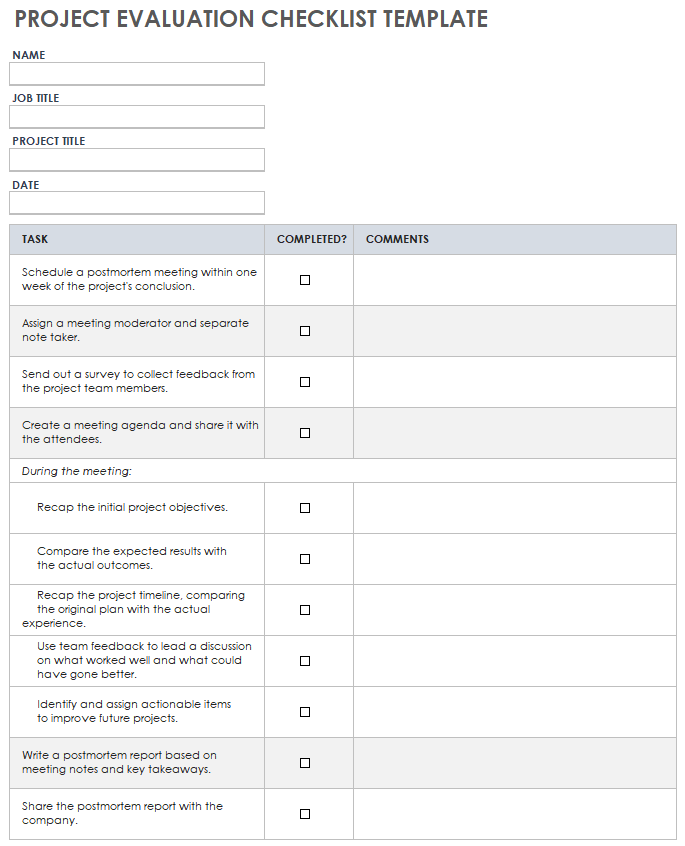

Here is a step-by-step guide to help you create a project evaluation:

1/ Define the Purpose and Objectives:

- Clearly state the purpose of the evaluation, such as assessing project performance or measuring outcomes.

- Establish specific objectives that align with the evaluation’s purpose, focusing on what you aim to achieve.

2/ Identify Evaluation Criteria and Indicators:

- Identify the evaluation criteria for the project. These can include performance, quality, cost, schedule adherence, and stakeholder satisfaction.

- Define measurable indicators for each criterion to facilitate data collection and analysis.

3/ Plan Data Collection Methods:

- Identify the methods and tools to collect data such as surveys, interviews, observations, document analysis, or existing data sources.

- Design questionnaires, interview guides, observation checklists, or other instruments to collect the necessary data. Ensure that they are clear, concise, and focused on gathering relevant information.

4/ Collect Data:

- Implement the planned data collection methods and gather the necessary information. Ensure that data collection is done consistently and accurately to obtain reliable results.

- Consider the appropriate sample size and target stakeholders for data collection.

5/ Analyze Data:

Once the data is collected, analyze it to derive meaningful insights. You can use tools and techniques to interpret the data and identify patterns, trends, and key findings. Ensure that the analysis aligns with the evaluation criteria and objectives.
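As a small example of this step, the sketch below averages survey satisfaction scores by stakeholder group. The group names, the 1 to 5 scale, and the responses themselves are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def mean_score_by_group(responses):
    """Average the scores for each stakeholder group.

    `responses` is a list of (group, score) pairs.
    """
    grouped = defaultdict(list)
    for group, score in responses:
        grouped[group].append(score)
    return {group: mean(scores) for group, scores in grouped.items()}


# Hypothetical survey responses on a 1-5 satisfaction scale.
responses = [
    ("sponsor", 4), ("end-user", 3), ("end-user", 5),
    ("team", 4), ("sponsor", 2),
]

for group, average in mean_score_by_group(responses).items():
    print(f"{group}: {average}")
```

A breakdown like this can show whether an acceptable overall average is hiding a dissatisfied group.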

6/ Draw Conclusions and Make Recommendations:

- Based on the evaluation outcomes, draw conclusions about the project’s performance.

- Make actionable recommendations for improvement, highlighting specific areas or strategies to enhance project effectiveness.

- Prepare a comprehensive report that presents the evaluation process, findings, conclusions, and recommendations.

7/ Communicate and Share Results:

- Share the evaluation results with relevant stakeholders and decision-makers.

- Use the findings and recommendations to inform future project planning, decision-making, and continuous improvement.

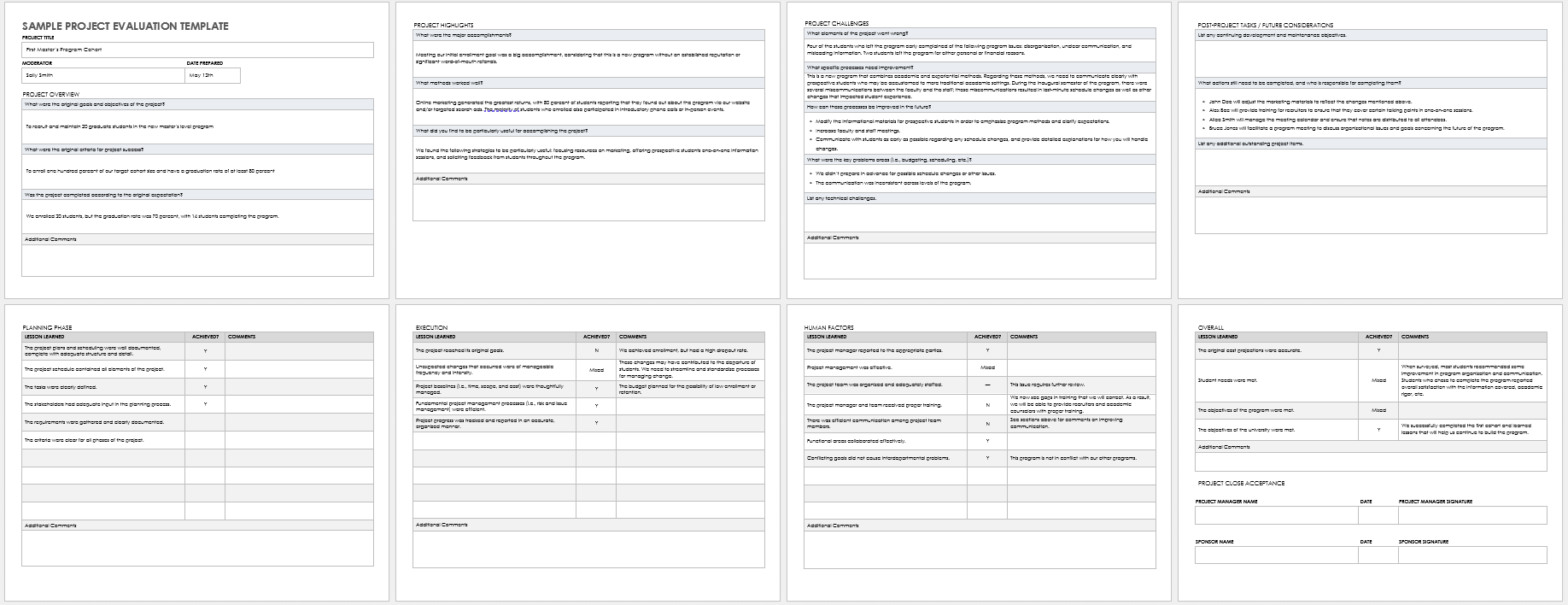

Once you have completed the project evaluation, it is time for a follow-up report that provides a comprehensive overview of the evaluation process, its results, and its implications for the project.

Here are the points you need to keep in mind for post-evaluation reporting:

- Provide a concise summary of the evaluation, including its purpose, key findings, and recommendations.

- Detail the evaluation approach, including data collection methods, tools, and techniques used.

- Present the main findings and results of the evaluation.

- Highlight significant achievements, successes, and areas for improvement.

- Discuss the implications of the evaluation findings and recommendations for project planning, decision-making, and resource allocation.

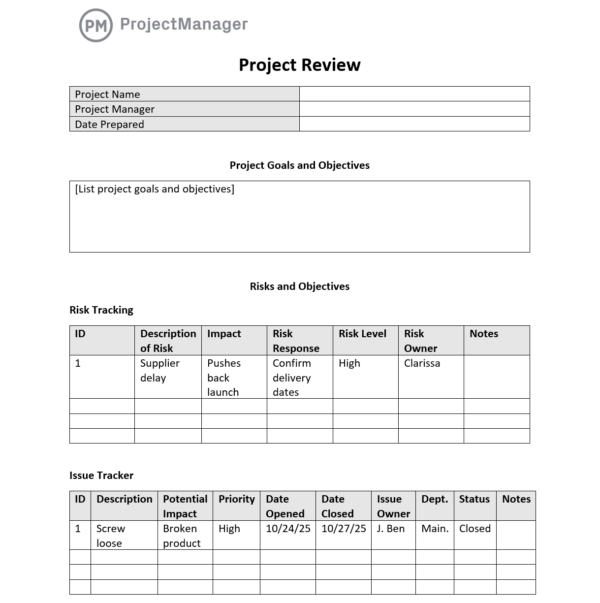

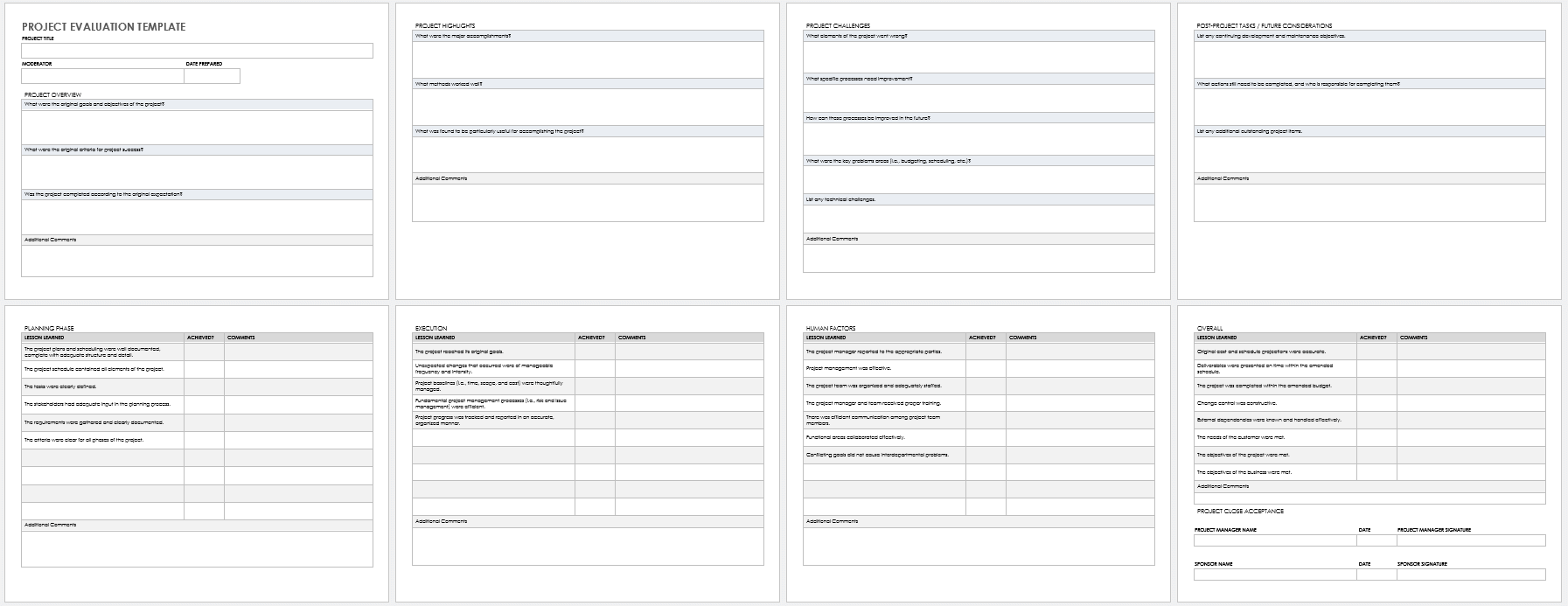

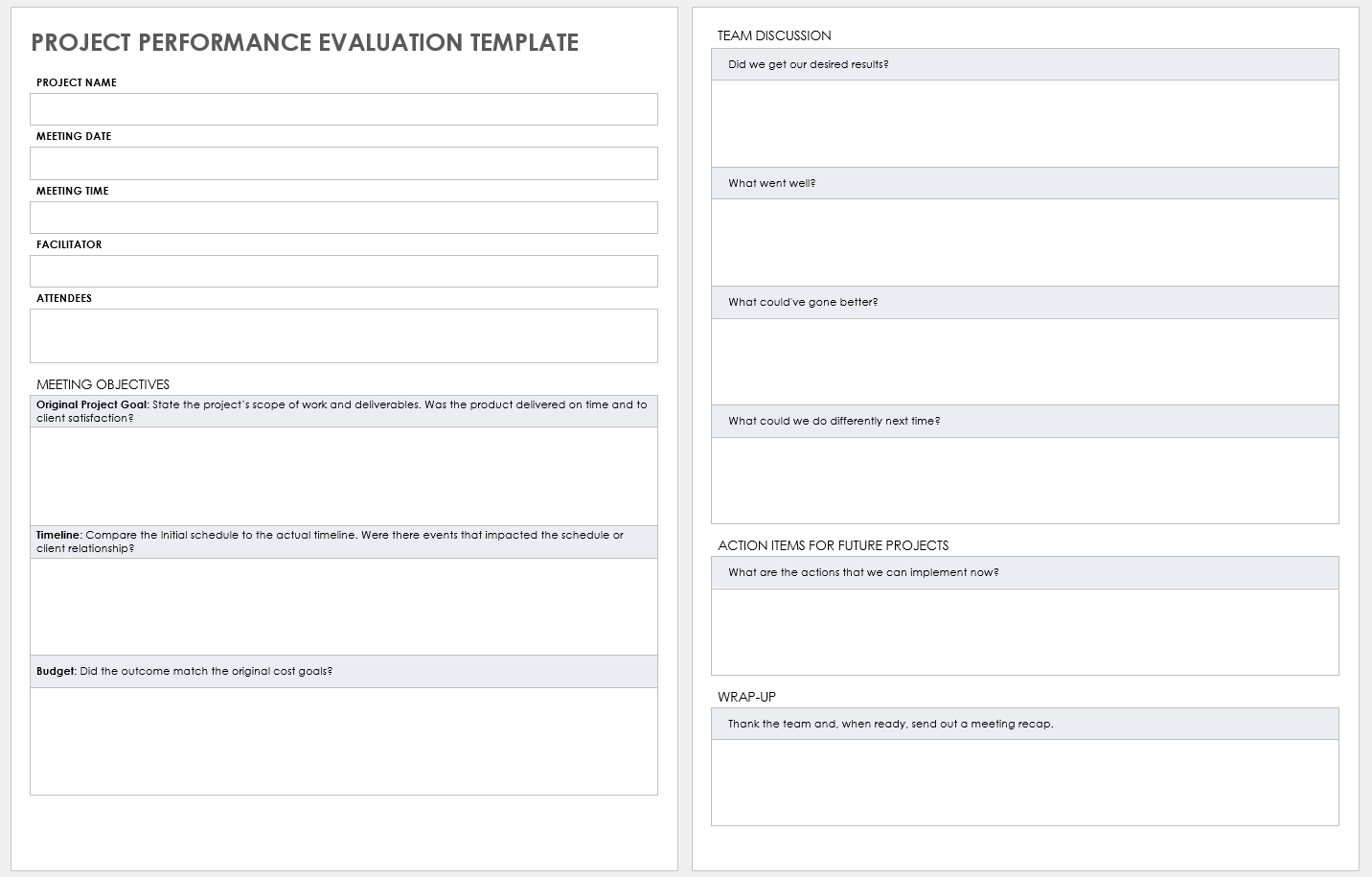

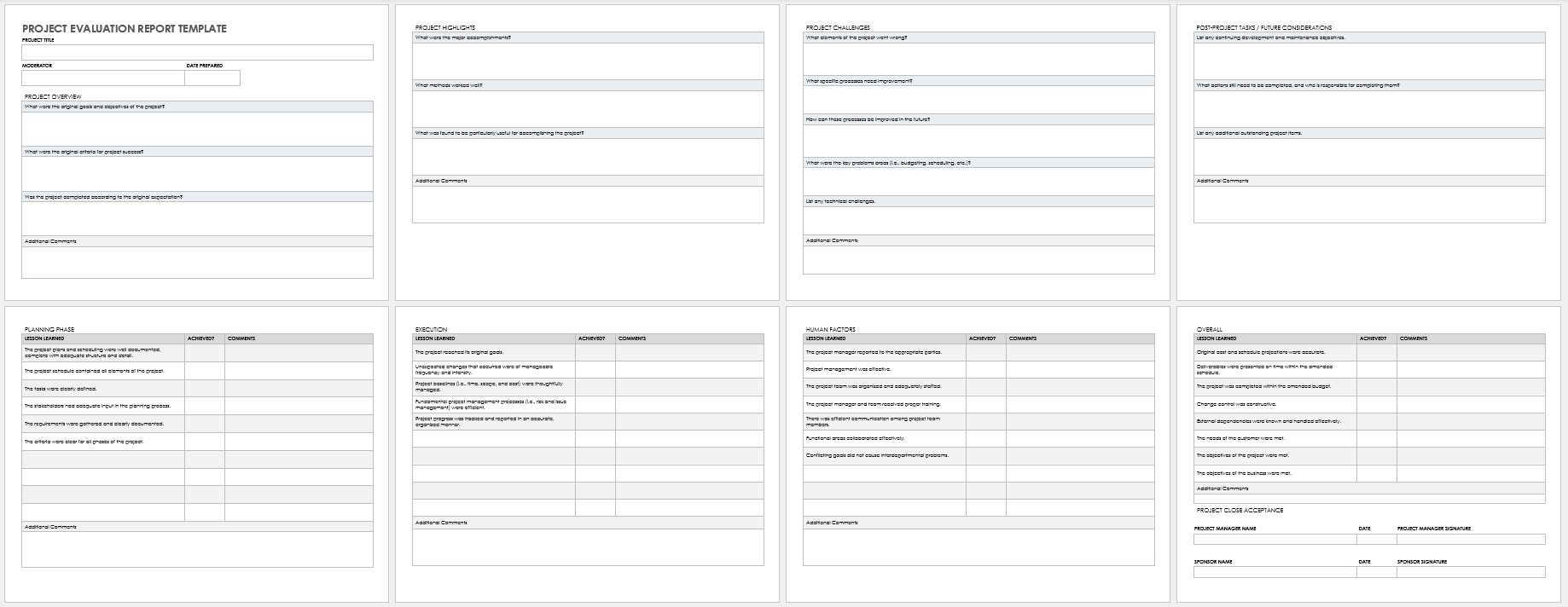

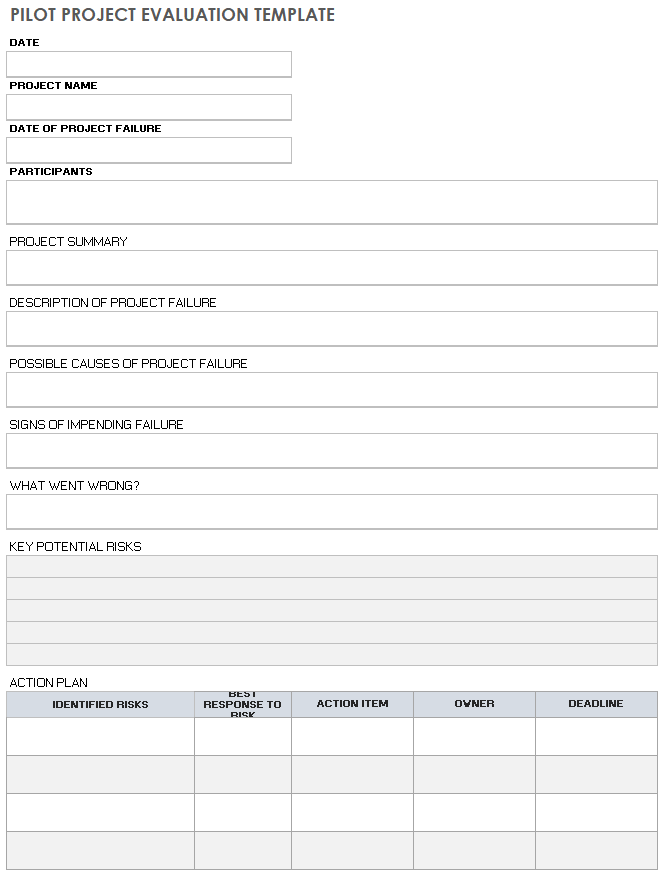

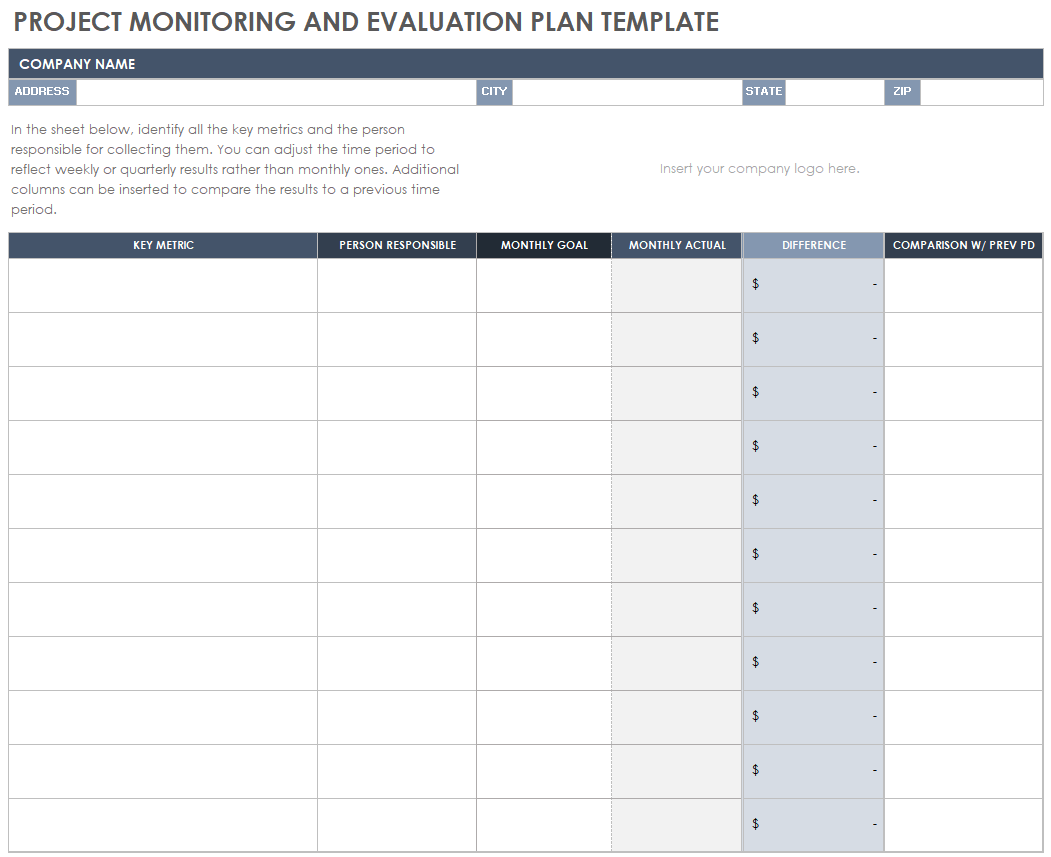

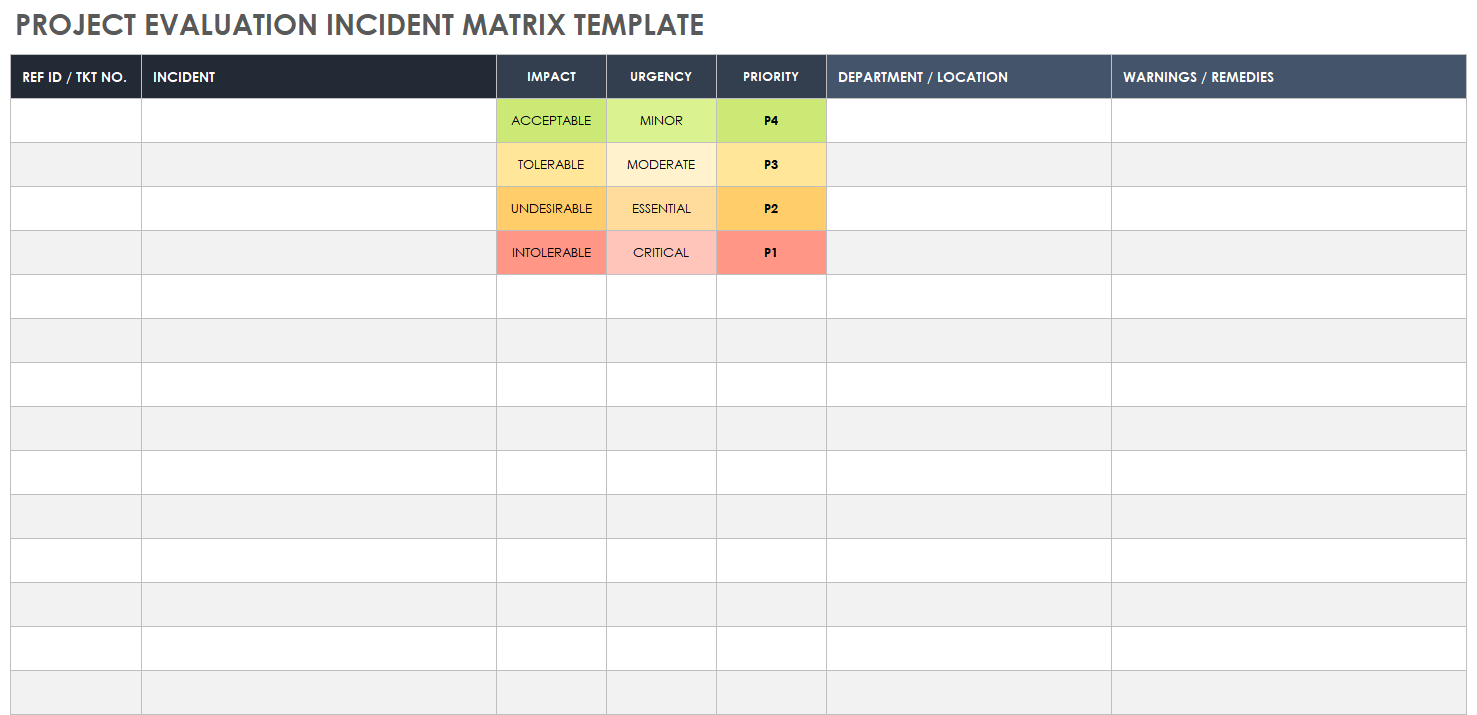

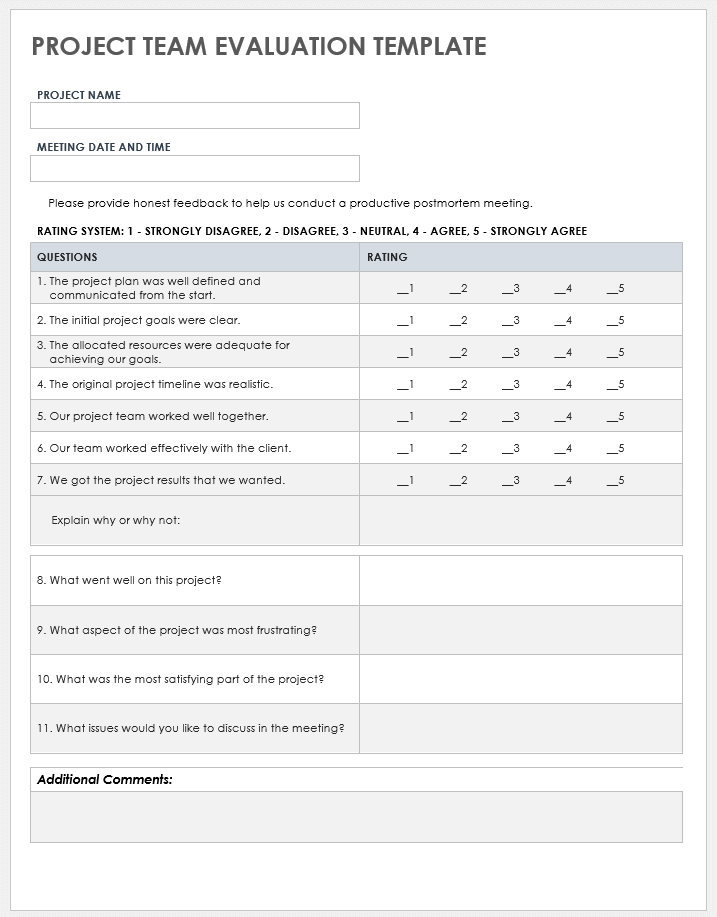

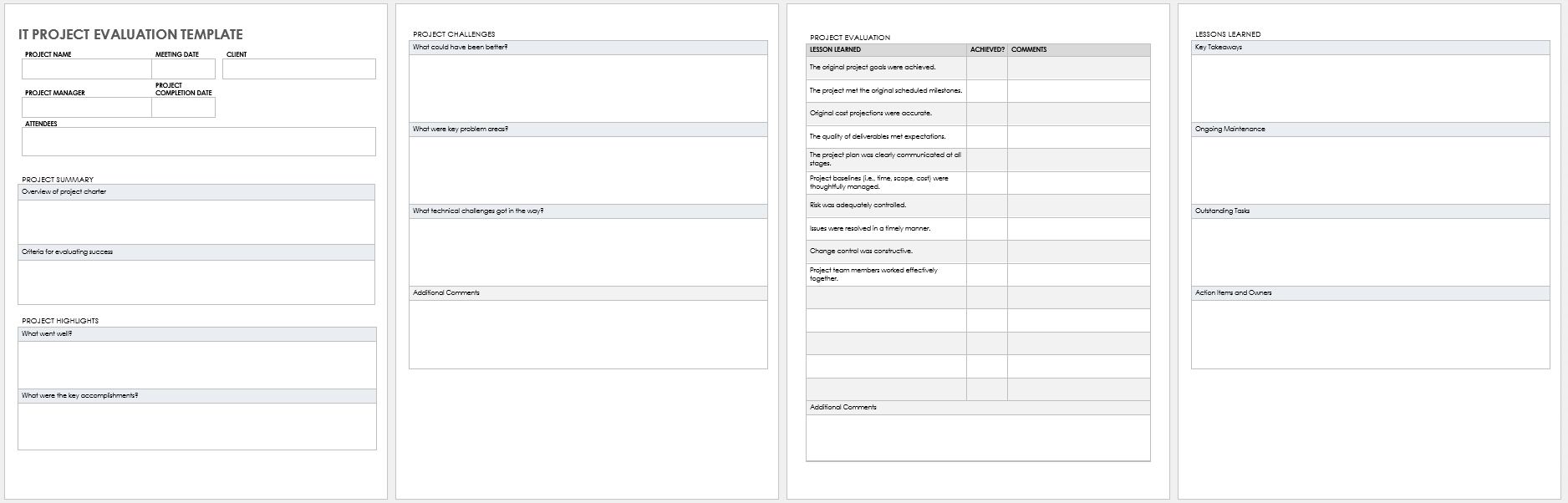

Here’s an overall project evaluation template. You can customize it based on your specific project and evaluation needs:

Key Takeaways

Project evaluation is a critical process that helps assess the performance, outcomes, and effectiveness of a project. It provides valuable information about what worked well, areas for improvement, and lessons learned.

And don’t forget that AhaSlides can play a significant role in the evaluation process. We provide pre-made templates with interactive features, which can be used to collect data and insights and to engage stakeholders.

What are the 4 types of project evaluation?

The four main types are performance evaluation, outcomes evaluation, process evaluation, and impact evaluation.

What are the steps in a project evaluation?

Here are the steps to help you create a project evaluation:

- Define the Purpose and Objectives

- Identify Evaluation Criteria and Indicators

- Plan Data Collection Methods

- Collect Data

- Analyze Data

- Draw Conclusions and Make Recommendations

- Communicate and Share Results

What are the 5 elements of evaluation in project management?

- Clear Objectives and Criteria

- Data Collection and Analysis

- Performance Measurement

- Stakeholder Engagement

- Reporting and Communication


Evaluating Research – Process, Examples and Methods


Evaluating Research

Definition:

Evaluating Research refers to the process of assessing the quality, credibility, and relevance of a research study or project. This involves examining the methods, data, and results of the research in order to determine its validity, reliability, and usefulness. Evaluating research can be done by both experts and non-experts in the field, and involves critical thinking, analysis, and interpretation of the research findings.

Research Evaluating Process

The process of evaluating research typically involves the following steps:

Identify the Research Question

The first step in evaluating research is to identify the research question or problem that the study is addressing. This will help you to determine whether the study is relevant to your needs.

Assess the Study Design

The study design refers to the methodology used to conduct the research. You should assess whether the study design is appropriate for the research question and whether it is likely to produce reliable and valid results.

Evaluate the Sample

The sample refers to the group of participants or subjects who are included in the study. You should evaluate whether the sample size is adequate and whether the participants are representative of the population under study.

Review the Data Collection Methods

You should review the data collection methods used in the study to ensure that they are valid and reliable. This includes assessing the measures used to collect data and the procedures used to collect data.

Examine the Statistical Analysis

Statistical analysis refers to the methods used to analyze the data. You should examine whether the statistical analysis is appropriate for the research question and whether it is likely to produce valid and reliable results.

Assess the Conclusions

You should evaluate whether the data support the conclusions drawn from the study and whether they are relevant to the research question.

Consider the Limitations

Finally, you should consider the limitations of the study, including any potential biases or confounding factors that may have influenced the results.

Evaluating Research Methods

Methods for evaluating research include the following:

- Peer review: Peer review is a process where experts in the field review a study before it is published. This helps ensure that the study is accurate, valid, and relevant to the field.

- Critical appraisal: Critical appraisal involves systematically evaluating a study based on specific criteria. This helps assess the quality of the study and the reliability of the findings.

- Replication: Replication involves repeating a study to test the validity and reliability of the findings. This can help identify any errors or biases in the original study.

- Meta-analysis: Meta-analysis is a statistical method that combines the results of multiple studies to provide a more comprehensive understanding of a particular topic. This can help identify patterns or inconsistencies across studies.

- Consultation with experts: Consulting with experts in the field can provide valuable insights into the quality and relevance of a study. Experts can also help identify potential limitations or biases in the study.

- Review of funding sources: Examining the funding sources of a study can help identify any potential conflicts of interest or biases that may have influenced the study design or interpretation of results.
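To make the idea of meta-analysis more concrete, the sketch below pools effect estimates from several studies with inverse-variance weights, which is the usual fixed-effect approach. The effect sizes and standard errors are invented for illustration. The fixed-effect model also assumes all studies estimate the same underlying effect, which may not hold in practice.

```python
import math


def fixed_effect_pooled(effects, std_errors):
    """Inverse-variance weighted mean of study effects and its standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical effect sizes and standard errors from three studies.
effects = [0.20, 0.35, 0.10]
std_errors = [0.10, 0.15, 0.08]

pooled, pooled_se = fixed_effect_pooled(effects, std_errors)
low, high = pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se
print(f"Pooled effect: {pooled:.3f} (95% CI {low:.3f} to {high:.3f})")
```

More precise studies (those with smaller standard errors) receive more weight, so a single small study cannot dominate the pooled estimate.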

Example of Evaluating Research

An example of evaluating a research sample, for students:

Title of the Study: The Effects of Social Media Use on Mental Health among College Students

Sample Size: 500 college students

Sampling Technique: Convenience sampling

- Sample Size: A sample of 500 college students is moderate in size. Size alone does not make a sample representative of the college student population, however; representativeness depends mainly on how participants were selected. A larger sample would give more precise estimates, and a random sampling technique would make the sample more representative.

- Sampling Technique: Convenience sampling is a non-probability sampling technique, which means that the sample may not be representative of the population. This technique may introduce bias into the study, since participants are included because they are easy to reach (and are often self-selected) and may not reflect the entire college student population. Therefore, the results of this study may not be generalizable to other populations.

- Participant Characteristics: The study does not provide any information about the demographic characteristics of the participants, such as age, gender, race, or socioeconomic status. This information is important because social media use and mental health may vary among different demographic groups.

- Data Collection Method: The study used a self-administered survey to collect data. Self-administered surveys may be subject to response bias and may not accurately reflect participants’ actual behaviors and experiences.

- Data Analysis: The study used descriptive statistics and regression analysis to analyze the data. Descriptive statistics provide a summary of the data, while regression analysis is used to examine the relationship between two or more variables. However, the study did not provide information about the statistical significance of the results or the effect sizes.

Overall, while the study provides some insights into the relationship between social media use and mental health among college students, the use of a convenience sampling technique and the lack of information about participant characteristics limit the generalizability of the findings. In addition, the use of self-administered surveys may introduce bias into the study, and the lack of information about the statistical significance of the results limits the interpretation of the findings.
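When judging whether a sample of 500 is large enough, a quick margin-of-error calculation can help. The sketch below uses the standard formula for a proportion at a 95% confidence level. It assumes simple random sampling, which the convenience sample in this example does not satisfy, so the result is at best a lower bound on the real uncertainty.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Margin of error for a proportion under simple random sampling.

    p = 0.5 is the most conservative choice; z = 1.96 corresponds to 95% confidence.
    """
    return z * math.sqrt(p * (1 - p) / n)


moe = margin_of_error(500)
print(f"Margin of error for n=500: about {moe * 100:.1f} percentage points")
```

A formula like this speaks only to random sampling error. It says nothing about the selection bias or response bias discussed above.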

Note: The example above is only a sample for students. Do not copy and paste it directly into your assignment; please do your own research for academic purposes.

Applications of Evaluating Research

Here are some of the applications of evaluating research:

- Identifying reliable sources: By evaluating research, researchers, students, and other professionals can identify the most reliable sources of information to use in their work. They can determine the quality of research studies, including the methodology, sample size, data analysis, and conclusions.

- Validating findings: Evaluating research can help to validate findings from previous studies. By examining the methodology and results of a study, researchers can determine if the findings are reliable and if they can be used to inform future research.

- Identifying knowledge gaps: Evaluating research can also help to identify gaps in current knowledge. By examining the existing literature on a topic, researchers can determine areas where more research is needed, and they can design studies to address these gaps.

- Improving research quality: Evaluating research can help to improve the quality of future research. By examining the strengths and weaknesses of previous studies, researchers can design better studies and avoid common pitfalls.

- Informing policy and decision-making: Evaluating research is crucial in informing policy and decision-making in many fields. By examining the evidence base for a particular issue, policymakers can make informed decisions that are supported by the best available evidence.

- Enhancing education: Evaluating research is essential in enhancing education. Educators can use research findings to improve teaching methods, curriculum development, and student outcomes.

Purpose of Evaluating Research

Here are some of the key purposes of evaluating research:

- Determine the reliability and validity of research findings: By evaluating research, researchers can determine the quality of the study design, data collection, and analysis. They can determine whether the findings are reliable, valid, and generalizable to other populations.

- Identify the strengths and weaknesses of research studies: Evaluating research helps to identify the strengths and weaknesses of research studies, including potential biases, confounding factors, and limitations. This information can help researchers to design better studies in the future.

- Inform evidence-based decision-making: Evaluating research is crucial in informing evidence-based decision-making in many fields, including healthcare, education, and public policy. Policymakers, educators, and clinicians rely on research evidence to make informed decisions.

- Identify research gaps: By evaluating research, researchers can identify gaps in the existing literature and design studies to address these gaps. This process can help to advance knowledge and improve the quality of research in a particular field.

- Ensure research ethics and integrity: Evaluating research helps to ensure that research studies are conducted ethically and with integrity. Researchers must adhere to ethical guidelines to protect the welfare and rights of study participants and to maintain the trust of the public.

Characteristics of Evaluating Research

The characteristics to look for when evaluating research are as follows:

- Research question/hypothesis: A good research question or hypothesis should be clear, concise, and well-defined. It should address a significant problem or issue in the field and be grounded in relevant theory or prior research.

- Study design: The research design should be appropriate for answering the research question and be clearly described in the study. The study design should also minimize bias and confounding variables.

- Sampling: The sample should be representative of the population of interest and the sampling method should be appropriate for the research question and study design.

- Data collection: The data collection methods should be reliable and valid, and the data should be accurately recorded and analyzed.

- Results: The results should be presented clearly and accurately, and the statistical analysis should be appropriate for the research question and study design.

- Interpretation of results: The interpretation of the results should be based on the data and not influenced by personal biases or preconceptions.

- Generalizability: The study findings should be generalizable to the population of interest and relevant to other settings or contexts.

- Contribution to the field: The study should make a significant contribution to the field and advance our understanding of the research question or issue.

Advantages of Evaluating Research

Evaluating research has several advantages, including:

- Ensuring accuracy and validity: By evaluating research, we can ensure that the research is accurate, valid, and reliable. This ensures that the findings are trustworthy and can be used to inform decision-making.

- Identifying gaps in knowledge: Evaluating research can help identify gaps in knowledge and areas where further research is needed. This can guide future research and help build a stronger evidence base.

- Promoting critical thinking: Evaluating research requires critical thinking skills, which can be applied in other areas of life. By evaluating research, individuals can develop their critical thinking skills and become more discerning consumers of information.

- Improving the quality of research: Evaluating research can help improve the quality of research by identifying areas where improvements can be made. This can lead to more rigorous research methods and better-quality research.

- Informing decision-making: By evaluating research, we can make informed decisions based on the evidence. This is particularly important in fields such as medicine and public health, where decisions can have significant consequences.

- Advancing the field: Evaluating research can help advance the field by identifying new research questions and areas of inquiry. This can lead to the development of new theories and the refinement of existing ones.

Limitations of Evaluating Research

The limitations of evaluating research are as follows:

- Time-consuming: Evaluating research can be time-consuming, particularly if the study is complex or requires specialized knowledge. This can be a barrier for individuals who are not experts in the field or who have limited time.

- Subjectivity: Evaluating research can be subjective, as different individuals may have different interpretations of the same study. This can lead to inconsistencies in the evaluation process and make it difficult to compare studies.

- Limited generalizability: The findings of a study may not be generalizable to other populations or contexts. This limits the usefulness of the study and may make it difficult to apply the findings to other settings.

- Publication bias: Research that does not find significant results may be less likely to be published, which can create a bias in the published literature. This can limit the amount of information available for evaluation.

- Lack of transparency: Some studies may not provide enough detail about their methods or results, making it difficult to evaluate their quality or validity.

- Funding bias: Research funded by particular organizations or industries may be biased towards the interests of the funder. This can influence the study design, methods, and interpretation of results.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Evaluating research projects.

These Guidelines are neither a handbook on evaluation, nor a manual on how to evaluate, but a guide for the development, adaptation, or assessment of evaluation methods. They are a reference and a guide of good practice about building a specific guide for evaluating a given situation.

This page's content is aimed at the authors of a specific guide: in the present case, a guide for evaluating research projects. The specific guide's authors will pick from this material what is relevant for their needs and situation.

Objectives of evaluating research projects

The two most common situations faced by evaluators of development research projects are ex ante evaluations and ex post evaluations. In a few cases an intermediate evaluation may be performed, also sometimes called a "mid-term" evaluation. The formulation of the objectives in the specific guide will obviously depend on the situation, on the needs of the stakeholders, but also on the researcher's environment and on ethical considerations.

Ex ante evaluation refers to the evaluation of a project proposal, for example for deciding whether or not to finance it, or to provide scientific support.

Ex post evaluation is conducted after a research project is completed, again for a variety of reasons such as deciding to publish or to apply the results, to grant an award or a fellowship to the author(s), or to build a new research project along similar lines.

An intermediate evaluation is aimed basically at helping to decide to go on, or to reorient the course of the research.

Such objectives are examined in detail below, in the pages on evaluation of research projects ex ante and on evaluation of projects ex post. A final section deals briefly with intermediate evaluation.

Importance of project evaluation

Evaluating research projects is a fundamental dimension in the evaluation of development research, for basically two reasons:

- many of our evaluation concepts and practices are derived from our experience with research projects,

- evaluation of projects is essential for achieving our long term goal of maintaining and improving the quality of development research - and particularly of strengthening research capacity.

Dimensions of the evaluation of development research projects

Scientific quality is a basic requirement for all scientific research projects, and publications play a decisive role here. This is obviously the case in ex post evaluation, but publications are also necessary in ex ante situations, where the evaluator needs to trust the proposal's authors to a certain extent and will largely take into account their past publications.

For more details see the page on evaluation of scientific publications and the annexes on scientific quality and on valorisation.

While scientific quality is a necessary dimension in each evaluation of a development research project, it is not sufficient. An equally indispensable dimension is relevance to development.

Other dimensions will be justified by the context, the evaluation's objectives, the evaluation sponsor's requirements, etc.

- Send a comment

Search form

- Project Management Methodologies

- Project Management Metrics

- Project Portfolio Management

- Proof of Concept Templates

- Punch List Templates

- Requirement Gathering Process

- Requirements Traceability Matrix

- Resource Scheduling

- Roles and Responsibilities Template

- Stakeholder Engagement Model

- Stakeholder Identification

- Stakeholder Mapping

- Stakeholder-theory

- Team Alignment Map

- Team Charter

- Templates for Managers

- What is Project Baseline

- Work Log Templates

- Workback Schedule

- Workload Management

- Work Breakdown Structures

- Agile Team Structure

- Avoding Scope Creep

- Cross-Functional Flowcharts

- Precision VS Accuracy

- Scrum-Spike

- User Story Guide

- Creating Project Charters

- Guide to Team Communication

- How to Prioritize Tasks

- Mastering RAID Logs

- Overcoming Analysis Paralysis

- Understanding RACI Model

- Critical Success Factors

- Deadline Management

- Eisenhower Matrix Guide

- Guide to Multi Project Management

- Procure-to-Pay Best Practices

- Procurement Management Plan Template to Boost Project Success

- Project Execution and Change Management

- Project Plan and Schedule Templates

- Resource Planning Templates for Smooth Project Execution

- Risk Management and Quality Management Plan Templates

- Risk Management in Software Engineering

- Stage Gate Process

- Stakeholder Management Planning

- Understanding the S-Curve

- Visualizing Your To-Do List

- 30-60-90 Day Plan

- Work Plan Template

- Weekly Planner Template

- Task Analysis Examples

- Cross-Functional Flowcharts for Planning

- Inventory Management Tecniques

- Inventory Templates

- Six Sigma DMAIC Method

- Visual Process Improvement

- Value Stream Mapping

- Creating a Workflow

- Fibonacci Scale Template

- Supply Chain Diagram

- Kaizen Method

- Procurement Process Flow Chart

- Guide to State Diagrams

- UML Activity Diagrams

- Class Diagrams & their Relationships

- Visualize flowcharts for software

- Wire-Frame Benefits

- Applications of UML

- Selecting UML Diagrams

- Create Sequence Diagrams Online

- Activity Diagram Tool

- Archimate Tool

- Class Diagram Tool

- Graphic Organizers

- Social Work Assessment Tools

- Using KWL Charts to Boost Learning

- Editable Timeline Templates

- Kinship Diagram Guide

- Power of Visual Documentation

- Graphic Organizers for Teachers & Students

- Visual Documentation Techniques

- Visual Tool for Visual Documentation

- Conducting a Thematic Analysis

- Visualizing a Dichotomous Key

- 5 W's Chart

- Circular Flow Diagram Maker

- Cladogram Maker

- Comic Strip Maker

- Course Design Template

- AI Buyer Persona

- AI Data Visualization

- AI Diagrams

- AI Project Management

- AI SWOT Analysis

- Best AI Templates

- Brainstorming AI

- Pros & Cons of AI

- AI for Business Strategy

- Using AI for Business Plan

- AI for HR Teams

- BPMN Symbols

- BPMN vs UML

- Business Process Analysis

- Business Process Modeling

- Capacity Planning Guide

- Case Management Process

- How to Avoid Bottlenecks in Processes

- Innovation Management Process

- Project vs Process

- Solve Customer Problems

- Spaghetti Diagram

- Startup Templates

- Streamline Purchase Order Process

- What is BPMN

- Approval Process

- Employee Exit Process

- Iterative Process

- Process Documentation

- Process Improvement Ideas

- Risk Assessment Process

- Tiger Teams

- Work Instruction Templates

- Workflow Vs. Process

- Process Mapping

- Business Process Reengineering

- Meddic Sales Process

- SIPOC Diagram

- What is Business Process Management

- Process Mapping Software

- Business Analysis Tool

- Business Capability Map

- Decision Making Tools and Techniques

- Operating Model Canvas

- Mobile App Planning

- Product Development Guide

- Product Roadmap

- Timeline Diagrams

- Visualize User Flow

- Sequence Diagrams

- Flowchart Maker

- Online Class Diagram Tool

- Organizational Chart Maker

- Mind Map Maker

- Retro Software

- Agile Project Charter

- Critical Path Software

- Brainstorming Guide

- Brainstorming Tools

- Concept Map Note Taking

- Visual Tools for Brainstorming

- Brainstorming Content Ideas

- Brainstorming in Business

- Brainstorming Questions

- Brainstorming Rules

- Brainstorming Techniques

- Brainstorming Workshop

- Design Thinking and Brainstorming

- Divergent vs Convergent Thinking

- Group Brainstorming Strategies

- Group Creativity

- How to Make Virtual Brainstorming Fun and Effective

- Ideation Techniques

- Improving Brainstorming

- Marketing Brainstorming

- Rapid Brainstorming

- Reverse Brainstorming Challenges

- Reverse vs. Traditional Brainstorming

- What Comes After Brainstorming

- Flowchart Guide

- Spider Diagram Guide

- 5 Whys Template

- Assumption Grid Template

- Brainstorming Templates

- Brainwriting Template

- Innovation Techniques

- 50 Business Diagrams

- Business Model Canvas

- Change Control Process

- Change Management Process

- Macro Environmental Analysis

- NOISE Analysis

- Profit & Loss Templates

- Scenario Planning

- What are Tree Diagrams

- Winning Brand Strategy

- Work Management Systems

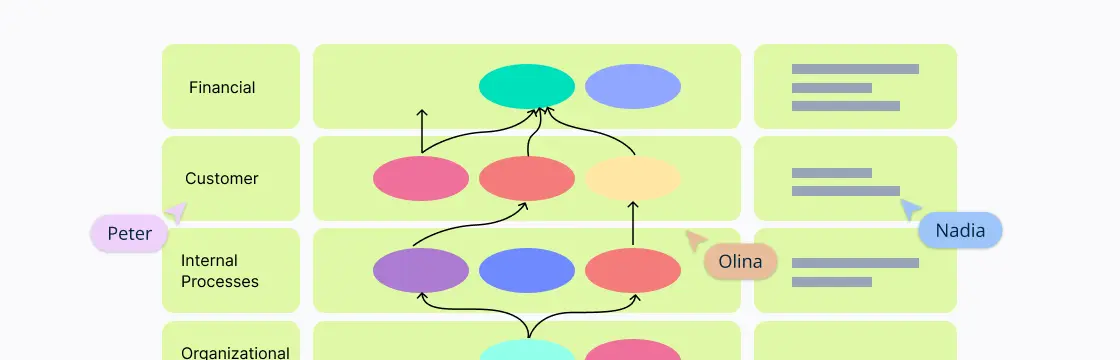

- Balanced Scorecard

- Developing Action Plans

- Guide to setting OKRS

- How to Write a Memo

- Improve Productivity & Efficiency

- Mastering Task Analysis

- Mastering Task Batching

- Monthly Budget Templates

- Program Planning

- Top Down Vs. Bottom Up

- Weekly Schedule Templates

- Kaizen Principles

- Opportunity Mapping

- Strategic-Goals

- Strategy Mapping

- T Chart Guide

- Business Continuity Plan

- Developing Your MVP

- Incident Management

- Needs Assessment Process

- Product Development From Ideation to Launch

- Value-Proposition-Canvas

- Visualizing Competitive Landscape

- Communication Plan

- Graphic Organizer Creator

- Fault Tree Software

- Bowman's Strategy Clock Template

- Decision Matrix Template

- Communities of Practice

- Goal Setting for 2024

- Meeting Templates

- Meetings Participation

- Microsoft Teams Brainstorming

- Retrospective Guide

- Skip Level Meetings

- Visual Documentation Guide

- Visual Note Taking

- Weekly Meetings

- Affinity Diagrams

- Business Plan Presentation

- Post-Mortem Meetings

- Team Building Activities

- WBS Templates

- Online Whiteboard Tool

- Communications Plan Template

- Idea Board Online

- Meeting Minutes Template

- Genograms in Social Work Practice

- Conceptual Framework

- How to Conduct a Genogram Interview

- How to Make a Genogram

- Genogram Questions

- Genograms in Client Counseling

- Understanding Ecomaps

- Visual Research Data Analysis Methods

- House of Quality Template

- Customer Problem Statement Template

- Competitive Analysis Template

- Creating Operations Manual

- Knowledge Base

- Folder Structure Diagram

- Online Checklist Maker

- Lean Canvas Template

- Instructional Design Examples

- Genogram Maker

- Work From Home Guide

- Strategic Planning

- Employee Engagement Action Plan

- Huddle Board

- One-on-One Meeting Template

- Story Map Graphic Organizers

- Introduction to Your Workspace

- Managing Workspaces and Folders

- Adding Text

- Collaborative Content Management

- Creating and Editing Tables

- Adding Notes

- Introduction to Diagramming

- Using Shapes

- Using Freehand Tool

- Adding Images to the Canvas

- Accessing the Contextual Toolbar

- Using Connectors

- Working with Tables

- Working with Templates

- Working with Frames

- Using Notes

- Access Controls

- Exporting a Workspace

- Real-Time Collaboration

- Notifications

- Meet Creately VIZ

- Unleashing the Power of Collaborative Brainstorming

- Uncovering the potential of Retros for all teams

- Collaborative Apps in Microsoft Teams

- Hiring a Great Fit for Your Team

- Project Management Made Easy

- Cross-Corporate Information Radiators

- Creately 4.0 - Product Walkthrough

- What's New

What is Project Evaluation? The Complete Guide with Templates

Project evaluation is an important part of determining the success or failure of a project. Properly evaluating a project helps you understand what worked well and what could be improved for future projects. This blog post will provide an overview of key components of project evaluation and how to conduct effective evaluations.

What is Project Evaluation?

Project evaluation is a key part of assessing the success, progress and areas for improvement of a project. It involves determining how well a project is meeting its goals and objectives. Evaluation helps determine if a project is worth continuing, needs adjustments, or should be discontinued.

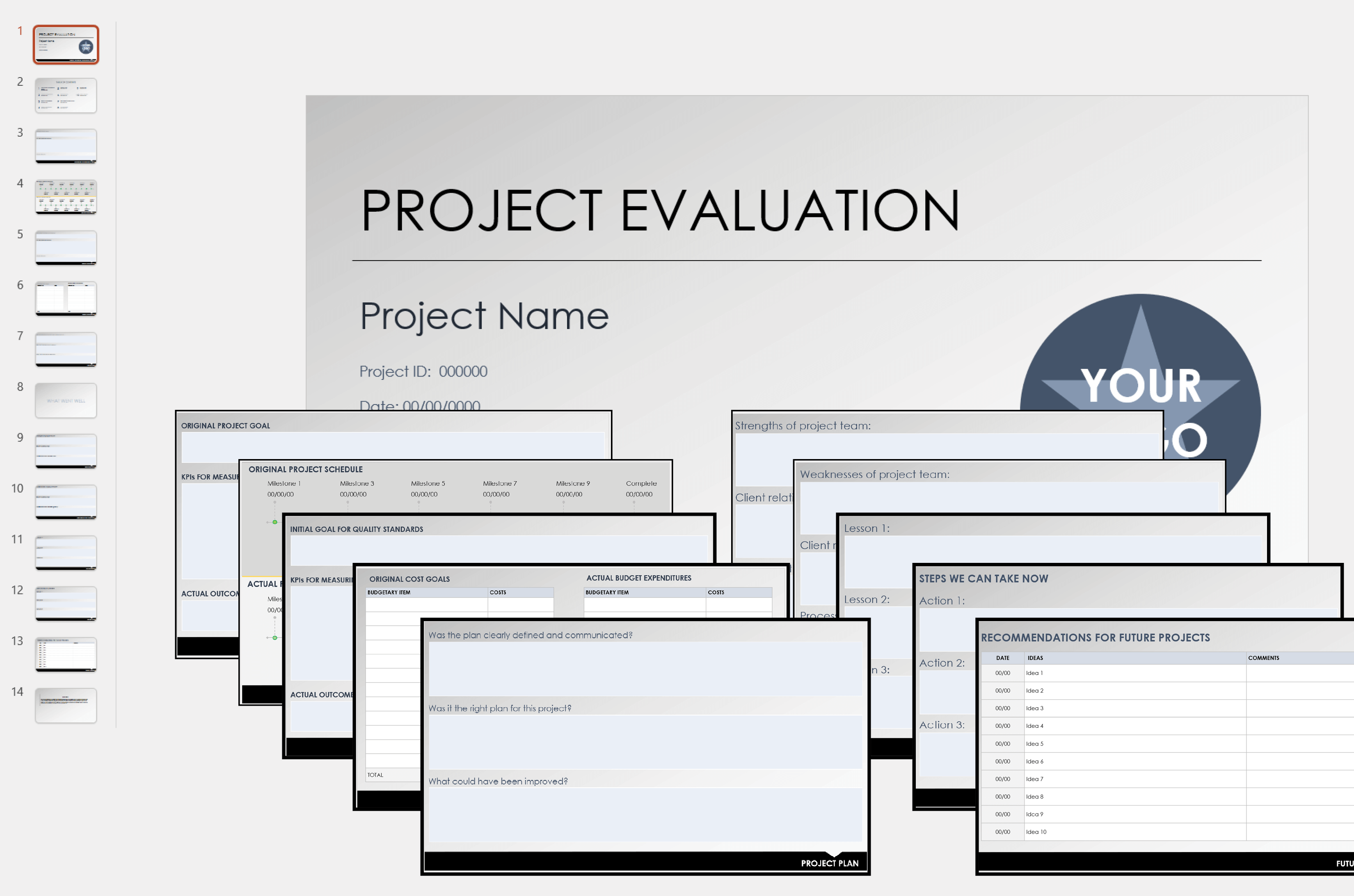

A good evaluation plan is developed at the start of a project. It outlines the criteria that will be used to judge the project’s performance and success. Evaluation criteria can include things like:

- Meeting timelines and budgets - Were milestones and deadlines met? Was the project completed within budget?

- Delivering expected outputs and outcomes - Were the intended products, results and benefits achieved?

- Satisfying stakeholder needs - Were customers, users and other stakeholders satisfied with the project results?

- Achieving quality standards - Were quality metrics and standards defined and met?

- Demonstrating effectiveness - Did the project accomplish its intended purpose?

Project evaluation provides valuable insights that can be applied to the current project and future projects. It helps organizations learn from their projects and continuously improve their processes and outcomes.

Project Evaluation Templates

These templates help you evaluate your project by providing a clear structure for assessing how it was planned, how it was carried out, and what it achieved. Whether you’re managing the project, part of the team, or a stakeholder, these templates help you gather information systematically for a thorough evaluation.

Project Evaluation Template 1

Project Evaluation Template 2

Project Evaluation Methods

Project evaluation involves using various methods to assess the performance and impact of a project. The choice of methods depends on the nature of the project, its objectives, and the available resources. Here are some common project evaluation methods:

Pre-project evaluation

Pre-project evaluations are done before a project begins. This involves evaluating the project plan, scope, objectives, resources, and budget. This helps determine if the project is feasible and identifies any potential issues or risks upfront. It establishes a baseline for later evaluations.

Ongoing evaluation

Ongoing evaluations happen during the project lifecycle. Regular status reports track progress against the project plan, budget, and deadlines. Any deviations or issues are identified and corrective actions can be taken promptly. This allows projects to stay on track and make adjustments as needed.
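As a rough illustration of how a regular status report can surface deviations, the sketch below compares planned and actual figures for each reporting period and flags anything beyond a tolerance. The `StatusReport` fields, the 10% tolerance, and the sample numbers are all assumptions made for this example, not part of any specific tool or method described here.

```python
from dataclasses import dataclass


@dataclass
class StatusReport:
    """One periodic snapshot of a project (all fields are hypothetical)."""
    period: str
    planned_cost: float      # budget planned to be spent by this date
    actual_cost: float       # amount actually spent by this date
    planned_progress: float  # fraction of work planned to be done (0.0-1.0)
    actual_progress: float   # fraction of work actually done (0.0-1.0)


def find_deviations(report: StatusReport, tolerance: float = 0.10) -> list[str]:
    """Return readable flags for cost or progress deviations beyond the tolerance."""
    flags = []
    # Relative cost overrun compared with the planned spend to date.
    cost_overrun = (report.actual_cost - report.planned_cost) / report.planned_cost
    if cost_overrun > tolerance:
        flags.append(f"{report.period}: cost is {cost_overrun:.0%} over plan")
    # Shortfall in completed work, as a fraction of the total scope.
    progress_gap = report.planned_progress - report.actual_progress
    if progress_gap > tolerance:
        flags.append(f"{report.period}: progress is {progress_gap:.0%} of scope behind plan")
    return flags


# Illustrative sample data only.
reports = [
    StatusReport("Week 4", 20_000, 21_000, 0.25, 0.25),
    StatusReport("Week 8", 40_000, 50_000, 0.50, 0.35),
]

for report in reports:
    for flag in find_deviations(report):
        print(flag)
```

A tolerance keeps small, expected fluctuations from triggering corrective action; the right threshold depends on the project and is a judgment call.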

Post-project evaluation

Post-project evaluations occur after a project is complete. This final assessment determines if the project objectives were achieved and customer requirements were met. Key metrics like timeliness, budget, and quality are examined. Lessons learned are documented to improve processes for future projects. Stakeholder feedback is gathered through surveys, interviews, or focus groups.

Project Evaluation Steps

When evaluating a project, there are several key steps you should follow. These steps will help you determine if the project was successful and identify areas for improvement in future initiatives.

Step 1: Set clear goals

The first step is establishing clear goals and objectives for the project before it begins. Make sure these objectives are SMART: specific, measurable, achievable, relevant and time-bound. Having clear goals from the outset provides a benchmark for measuring success later on.

Step 2: Monitor progress

Once the project is underway, the next step is monitoring progress. Check in regularly with your team to see if you’re on track to meet your objectives and deadlines. Identify and address any issues as early as possible before they become major roadblocks. Monitoring progress also allows you to course correct if needed.

Step 3: Collect data

After the project is complete, collect all relevant data and metrics. This includes quantitative data such as budget information, timelines and deliverables, as well as customer feedback and other qualitative data from surveys or interviews. Analyzing this data will show you how well the project performed against your original objectives.
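To make the comparison against original objectives concrete, here is a minimal sketch that puts collected figures next to their targets. The metric names, target values, and survey ratings are hypothetical and chosen only for illustration; a real evaluation would use the criteria defined in your own evaluation plan.

```python
from statistics import mean

# Hypothetical targets set at the start of the project.
targets = {"budget": 100_000, "duration_days": 90, "satisfaction": 4.0}

# Hypothetical data collected after completion.
actuals = {"budget": 108_000, "duration_days": 85}
survey_ratings = [5, 4, 3, 4, 5]  # stakeholder satisfaction on a 1-5 scale
actuals["satisfaction"] = mean(survey_ratings)

# For these metrics a lower actual value is better; for the rest, higher is better.
lower_is_better = {"budget", "duration_days"}


def evaluate(targets, actuals, lower_is_better):
    """Compare each actual value with its target and record whether it was met."""
    results = {}
    for name, target in targets.items():
        actual = actuals[name]
        met = actual <= target if name in lower_is_better else actual >= target
        results[name] = {"target": target, "actual": actual, "met": met}
    return results


summary = evaluate(targets, actuals, lower_is_better)
for name, row in summary.items():
    status = "met" if row["met"] else "missed"
    print(f"{name}: target {row['target']}, actual {row['actual']} ({status})")
```

A table like this only shows whether each target was hit. The qualitative feedback from interviews and surveys is still needed to explain why.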

Step 4: Analyze and interpret

Review the data you collected to identify what worked well and what didn’t during the project. Highlight best practices to replicate and lessons learned to improve future initiatives. Get feedback from all stakeholders involved, including project team members, customers and management.

Step 5: Develop an action plan

Develop an action plan to apply what you’ve learned for the next project. Update processes, procedures and resource allocations based on your evaluation. Communicate changes across your organization and train employees on any new best practices. Implementing these changes will help you avoid similar issues the next time around.

Benefits of Project Evaluation

Project evaluation is a valuable tool for organizations, helping them learn, adapt, and improve their project outcomes over time. Here are some benefits of project evaluation.

- Helps in making informed decisions by providing a clear understanding of the project’s strengths, weaknesses, and areas for improvement.

- Holds the project team accountable for meeting goals and using resources effectively, fostering a sense of responsibility.

- Facilitates organizational learning by capturing valuable insights and lessons from both successful and challenging aspects of the project.

- Allows for the efficient allocation of resources by identifying areas where adjustments or reallocations may be needed.

- Provides evidence of the project’s value by assessing its impact, cost-effectiveness, and alignment with organizational objectives.

- Involves stakeholders in the evaluation process, fostering collaboration, and ensuring that diverse perspectives are considered.

Project Evaluation Best Practices

Follow these best practices to conduct a more effective and meaningful project evaluation, leading to better project outcomes and organizational learning.

- Clear objectives: Clearly define the goals and questions you want the evaluation to answer.

- Involve stakeholders: Include the perspectives of key stakeholders to ensure a comprehensive evaluation.

- Use appropriate methods: Choose evaluation methods that suit your objectives and available resources.

- Timely data collection: Collect data at relevant points in the project timeline to ensure accuracy and relevance.

- Thorough analysis: Analyze the collected data thoroughly to draw meaningful conclusions and insights.

- Actionable recommendations: Provide practical recommendations that can lead to tangible improvements in future projects.

- Learn and adapt: Use evaluation findings to learn from both successes and challenges, adapting practices for continuous improvement.

- Document lessons: Document lessons learned from the evaluation process for organizational knowledge and future reference.

How to Use Creately to Evaluate Your Projects

Use Creately’s visual collaboration platform to evaluate your projects. It helps you improve communication, streamline collaboration, and present project data visually.

Task tracking and assignment

Use the built-in project management tools to create, assign, and track tasks right on the canvas. Assign responsibilities, set due dates, and monitor progress with Agile Kanban boards, Gantt charts, timelines and more. Create task cards containing detailed information, descriptions, due dates, and assigned responsibilities.

Notes and attachments