Machine learning articles from across Nature Portfolio

Machine learning is the ability of a machine to improve its performance based on previous results. Machine learning methods enable computers to learn without being explicitly programmed and have multiple applications, for example, in the improvement of data mining algorithms.

Meta’s AI translation model embraces overlooked languages

More than 7,000 languages are in use throughout the world, but popular translation tools cannot deal with most of them. A translation model that was tested on under-represented languages takes a key step towards a solution.

- David I. Adelani

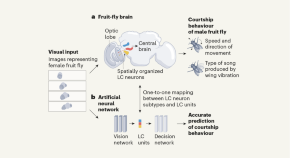

AI networks reveal how flies find a mate

Artificial neural networks that model the visual system of a male fruit fly can accurately predict the insect’s behaviour in response to seeing a potential mate — paving the way for the building of more complex models of brain circuits.

- Pavan Ramdya

Predicting tumour origin with cytology-based deep learning: hype or hope?

The majority of patients with cancers of unknown primary have unfavourable outcomes when they receive empirical chemotherapy. The shift towards using precision medicine-based treatment strategies involves two options: tissue-agnostic or site-specific approaches. Here, we reflect on how cytology-based deep learning tools can be leveraged in these approaches.

- Nicholas Pavlidis

Latest Research and Reviews

Deep learning restores speech intelligibility in multi-talker interference for cochlear implant users

- Agudemu Borjigin

- Kostas Kokkinakis

- Joshua S. Stohl

Machine learning for the identification of neoantigen-reactive CD8+ T cells in gastrointestinal cancer using single-cell sequencing

- Hongwei Sun

Automated assessment of cardiac dynamics in aging and dilated cardiomyopathy Drosophila models using machine learning

A novel deep learning method is developed to analyse Drosophila hearts; it can predict ageing and be used to study cardiac dysfunction, offering potential for modelling human ailments in Drosophila.

- Yash Melkani

- Aniket Pant

- Girish C. Melkani

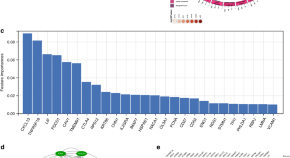

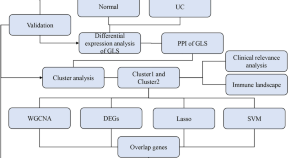

Identification of subclusters and prognostic genes based on GLS-associated molecular signature in ulcerative colitis

- Chunyan Zeng

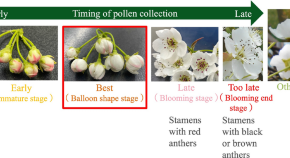

Estimation of the amount of pear pollen based on flowering stage detection using deep learning

- Takefumi Hiraguri

- Yoshihiro Takemura

Generic protein–ligand interaction scoring by integrating physical prior knowledge and data augmentation modelling

Machine learning can improve scoring methods to evaluate protein–ligand interactions, but achieving good generalization is an outstanding challenge. Cao et al. introduce EquiScore, which is based on a graph neural network that integrates physical knowledge and is shown to have robust capabilities when applied to unseen protein targets.

- Duanhua Cao

- Mingyue Zheng

News and Comment

Meta’s AI system is a boost to endangered languages — as long as humans aren’t forgotten

Automated approaches to translation could provide a lifeline to under-resourced languages, but only if companies engage with the people who speak them.

Need a policy for using ChatGPT in the classroom? Try asking students

Students are the key users of AI chatbots in university settings, but their opinions are rarely solicited when crafting policies. That needs to change, says Maja Zonjić.

- Maja Zonjić

Superfast Microsoft AI is first to predict air pollution for the whole world

The model, called Aurora, also forecasts global weather for ten days — all in less than a minute.

- Carissa Wong

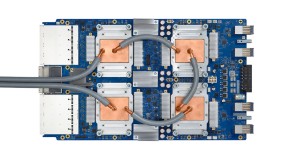

Accelerating AI: the cutting-edge chips powering the computing revolution

Engineers are harnessing the powers of graphics processing units (GPUs) and more, with a bevy of tricks to meet the computational demands of artificial intelligence.

- Dan Garisto

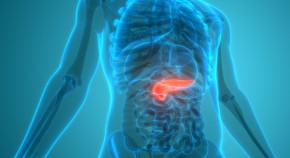

A surprising abundance of pancreatic pre-cancers

AI-based three-dimensional genomic mapping reveals a large abundance of cancer precursors in normal pancreatic tissue — prompting new insights and research directions.

- Karen O’Leary

JMLR Papers

Select a volume number to see its table of contents with links to the papers.

Volume 25 (January 2024 - Present)

Volume 24 (January 2023 - December 2023)

Volume 23 (January 2022 - December 2022)

Volume 22 (January 2021 - December 2021)

Volume 21 (January 2020 - December 2020)

Volume 20 (January 2019 - December 2019)

Volume 19 (August 2018 - December 2018)

Volume 18 (February 2017 - August 2018)

Volume 17 (January 2016 - January 2017)

Volume 16 (January 2015 - December 2015)

Volume 15 (January 2014 - December 2014)

Volume 14 (January 2013 - December 2013)

Volume 13 (January 2012 - December 2012)

Volume 12 (January 2011 - December 2011)

Volume 11 (January 2010 - December 2010)

Volume 10 (January 2009 - December 2009)

Volume 9 (January 2008 - December 2008)

Volume 8 (January 2007 - December 2007)

Volume 7 (January 2006 - December 2006)

Volume 6 (January 2005 - December 2005)

Volume 5 (December 2003 - December 2004)

Volume 4 (Apr 2003 - December 2003)

Volume 3 (Jul 2002 - Mar 2003)

Volume 2 (Oct 2001 - Mar 2002)

Volume 1 (Oct 2000 - Sep 2001)

Special Topics

Bayesian Optimization

Learning from Electronic Health Data (December 2016)

Gesture Recognition (May 2012 - present)

Large Scale Learning (Jul 2009 - present)

Mining and Learning with Graphs and Relations (February 2009 - present)

Grammar Induction, Representation of Language and Language Learning (Nov 2010 - Apr 2011)

Causality (Sep 2007 - May 2010)

Model Selection (Apr 2007 - Jul 2010)

Conference on Learning Theory 2005 (February 2007 - Jul 2007)

Machine Learning for Computer Security (December 2006)

Machine Learning and Large Scale Optimization (Jul 2006 - Oct 2006)

Approaches and Applications of Inductive Programming (February 2006 - Mar 2006)

Learning Theory (Jun 2004 - Aug 2004)

Special Issues

In Memory of Alexey Chervonenkis (Sep 2015)

Independent Components Analysis (December 2003)

Learning Theory (Oct 2003)

Inductive Logic Programming (Aug 2003)

Fusion of Domain Knowledge with Data for Decision Support (Jul 2003)

Variable and Feature Selection (Mar 2003)

Machine Learning Methods for Text and Images (February 2003)

Eighteenth International Conference on Machine Learning (ICML2001) (December 2002)

Computational Learning Theory (Nov 2002)

Shallow Parsing (Mar 2002)

Kernel Methods (December 2001)

Volume 139: International Conference on Machine Learning, 18-24 July 2021, Virtual

Editors: Marina Meila, Tong Zhang

A New Representation of Successor Features for Transfer across Dissimilar Environments

Majid Abdolshah, Hung Le, Thommen Karimpanal George, Sunil Gupta, Santu Rana, Svetha Venkatesh ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1-9

Massively Parallel and Asynchronous Tsetlin Machine Architecture Supporting Almost Constant-Time Scaling

Kuruge Darshana Abeyrathna, Bimal Bhattarai, Morten Goodwin, Saeed Rahimi Gorji, Ole-Christoffer Granmo, Lei Jiao, Rupsa Saha, Rohan K. Yadav ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:10-20

Debiasing Model Updates for Improving Personalized Federated Training

Durmus Alp Emre Acar, Yue Zhao, Ruizhao Zhu, Ramon Matas, Matthew Mattina, Paul Whatmough, Venkatesh Saligrama ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:21-31

Memory Efficient Online Meta Learning

Durmus Alp Emre Acar, Ruizhao Zhu, Venkatesh Saligrama ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:32-42

Robust Testing and Estimation under Manipulation Attacks

Jayadev Acharya, Ziteng Sun, Huanyu Zhang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:43-53

GP-Tree: A Gaussian Process Classifier for Few-Shot Incremental Learning

Idan Achituve, Aviv Navon, Yochai Yemini, Gal Chechik, Ethan Fetaya ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:54-65

f-Domain Adversarial Learning: Theory and Algorithms

David Acuna, Guojun Zhang, Marc T. Law, Sanja Fidler ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:66-75

Towards Rigorous Interpretations: a Formalisation of Feature Attribution

Darius Afchar, Vincent Guigue, Romain Hennequin ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:76-86

Acceleration via Fractal Learning Rate Schedules

Naman Agarwal, Surbhi Goel, Cyril Zhang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:87-99

A Regret Minimization Approach to Iterative Learning Control

Naman Agarwal, Elad Hazan, Anirudha Majumdar, Karan Singh ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:100-109

Towards the Unification and Robustness of Perturbation and Gradient Based Explanations

Sushant Agarwal, Shahin Jabbari, Chirag Agarwal, Sohini Upadhyay, Steven Wu, Himabindu Lakkaraju ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:110-119

Label Inference Attacks from Log-loss Scores

Abhinav Aggarwal, Shiva Kasiviswanathan, Zekun Xu, Oluwaseyi Feyisetan, Nathanael Teissier ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:120-129

Deep kernel processes

Laurence Aitchison, Adam Yang, Sebastian W. Ober ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:130-140

How Does Loss Function Affect Generalization Performance of Deep Learning? Application to Human Age Estimation

Ali Akbari, Muhammad Awais, Manijeh Bashar, Josef Kittler ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:141-151

On Learnability via Gradient Method for Two-Layer ReLU Neural Networks in Teacher-Student Setting

Shunta Akiyama, Taiji Suzuki ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:152-162

Slot Machines: Discovering Winning Combinations of Random Weights in Neural Networks

Maxwell M Aladago, Lorenzo Torresani ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:163-174

A large-scale benchmark for few-shot program induction and synthesis

Ferran Alet, Javier Lopez-Contreras, James Koppel, Maxwell Nye, Armando Solar-Lezama, Tomas Lozano-Perez, Leslie Kaelbling, Joshua Tenenbaum ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:175-186

Robust Pure Exploration in Linear Bandits with Limited Budget

Ayya Alieva, Ashok Cutkosky, Abhimanyu Das ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:187-195

Communication-Efficient Distributed Optimization with Quantized Preconditioners

Foivos Alimisis, Peter Davies, Dan Alistarh ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:196-206

Non-Exponentially Weighted Aggregation: Regret Bounds for Unbounded Loss Functions

Pierre Alquier ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:207-218

Dataset Dynamics via Gradient Flows in Probability Space

David Alvarez-Melis, Nicolò Fusi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:219-230

Submodular Maximization subject to a Knapsack Constraint: Combinatorial Algorithms with Near-optimal Adaptive Complexity

Georgios Amanatidis, Federico Fusco, Philip Lazos, Stefano Leonardi, Alberto Marchetti-Spaccamela, Rebecca Reiffenhäuser ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:231-242

Safe Reinforcement Learning with Linear Function Approximation

Sanae Amani, Christos Thrampoulidis, Lin Yang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:243-253

Automatic variational inference with cascading flows

Luca Ambrogioni, Gianluigi Silvestri, Marcel van Gerven ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:254-263

Sparse Bayesian Learning via Stepwise Regression

Sebastian E. Ament, Carla P. Gomes ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:264-274

Locally Persistent Exploration in Continuous Control Tasks with Sparse Rewards

Susan Amin, Maziar Gomrokchi, Hossein Aboutalebi, Harsh Satija, Doina Precup ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:275-285

Preferential Temporal Difference Learning

Nishanth Anand, Doina Precup ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:286-296

Unitary Branching Programs: Learnability and Lower Bounds

Fidel Ernesto Diaz Andino, Maria Kokkou, Mateus De Oliveira Oliveira, Farhad Vadiee ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:297-306

The Logical Options Framework

Brandon Araki, Xiao Li, Kiran Vodrahalli, Jonathan Decastro, Micah Fry, Daniela Rus ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:307-317

Annealed Flow Transport Monte Carlo

Michael Arbel, Alex Matthews, Arnaud Doucet ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:318-330

Permutation Weighting

David Arbour, Drew Dimmery, Arjun Sondhi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:331-341

Analyzing the tree-layer structure of Deep Forests

Ludovic Arnould, Claire Boyer, Erwan Scornet ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:342-350

Dropout: Explicit Forms and Capacity Control

Raman Arora, Peter Bartlett, Poorya Mianjy, Nathan Srebro ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:351-361

Tighter Bounds on the Log Marginal Likelihood of Gaussian Process Regression Using Conjugate Gradients

Artem Artemev, David R. Burt, Mark van der Wilk ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:362-372

Deciding What to Learn: A Rate-Distortion Approach

Dilip Arumugam, Benjamin Van Roy ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:373-382

Private Adaptive Gradient Methods for Convex Optimization

Hilal Asi, John Duchi, Alireza Fallah, Omid Javidbakht, Kunal Talwar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:383-392

Private Stochastic Convex Optimization: Optimal Rates in L1 Geometry

Hilal Asi, Vitaly Feldman, Tomer Koren, Kunal Talwar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:393-403

Combinatorial Blocking Bandits with Stochastic Delays

Alexia Atsidakou, Orestis Papadigenopoulos, Soumya Basu, Constantine Caramanis, Sanjay Shakkottai ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:404-413

Dichotomous Optimistic Search to Quantify Human Perception

Julien Audiffren ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:414-424

Federated Learning under Arbitrary Communication Patterns

Dmitrii Avdiukhin, Shiva Kasiviswanathan ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:425-435

Asynchronous Distributed Learning: Adapting to Gradient Delays without Prior Knowledge

Rotem Zamir Aviv, Ido Hakimi, Assaf Schuster, Kfir Yehuda Levy ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:436-445

Decomposable Submodular Function Minimization via Maximum Flow

Kyriakos Axiotis, Adam Karczmarz, Anish Mukherjee, Piotr Sankowski, Adrian Vladu ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:446-456

Differentially Private Query Release Through Adaptive Projection

Sergul Aydore, William Brown, Michael Kearns, Krishnaram Kenthapadi, Luca Melis, Aaron Roth, Ankit A. Siva ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:457-467

On the Implicit Bias of Initialization Shape: Beyond Infinitesimal Mirror Descent

Shahar Azulay, Edward Moroshko, Mor Shpigel Nacson, Blake E Woodworth, Nathan Srebro, Amir Globerson, Daniel Soudry ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:468-477

On-Off Center-Surround Receptive Fields for Accurate and Robust Image Classification

Zahra Babaiee, Ramin Hasani, Mathias Lechner, Daniela Rus, Radu Grosu ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:478-489

Uniform Convergence, Adversarial Spheres and a Simple Remedy

Gregor Bachmann, Seyed-Mohsen Moosavi-Dezfooli, Thomas Hofmann ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:490-499

Faster Kernel Matrix Algebra via Density Estimation

Arturs Backurs, Piotr Indyk, Cameron Musco, Tal Wagner ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:500-510

Robust Reinforcement Learning using Least Squares Policy Iteration with Provable Performance Guarantees

Kishan Panaganti Badrinath, Dileep Kalathil ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:511-520

Skill Discovery for Exploration and Planning using Deep Skill Graphs

Akhil Bagaria, Jason K Senthil, George Konidaris ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:521-531

Locally Adaptive Label Smoothing Improves Predictive Churn

Dara Bahri, Heinrich Jiang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:532-542

How Important is the Train-Validation Split in Meta-Learning?

Yu Bai, Minshuo Chen, Pan Zhou, Tuo Zhao, Jason Lee, Sham Kakade, Huan Wang, Caiming Xiong ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:543-553

Stabilizing Equilibrium Models by Jacobian Regularization

Shaojie Bai, Vladlen Koltun, Zico Kolter ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:554-565

Don’t Just Blame Over-parametrization for Over-confidence: Theoretical Analysis of Calibration in Binary Classification

Yu Bai, Song Mei, Huan Wang, Caiming Xiong ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:566-576

Principled Exploration via Optimistic Bootstrapping and Backward Induction

Chenjia Bai, Lingxiao Wang, Lei Han, Jianye Hao, Animesh Garg, Peng Liu, Zhaoran Wang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:577-587

GLSearch: Maximum Common Subgraph Detection via Learning to Search

Yunsheng Bai, Derek Xu, Yizhou Sun, Wei Wang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:588-598

Breaking the Limits of Message Passing Graph Neural Networks

Muhammet Balcilar, Pierre Heroux, Benoit Gauzere, Pascal Vasseur, Sebastien Adam, Paul Honeine ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:599-608

Instance Specific Approximations for Submodular Maximization

Eric Balkanski, Sharon Qian, Yaron Singer ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:609-618

Augmented World Models Facilitate Zero-Shot Dynamics Generalization From a Single Offline Environment

Philip J Ball, Cong Lu, Jack Parker-Holder, Stephen Roberts ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:619-629

Regularized Online Allocation Problems: Fairness and Beyond

Santiago Balseiro, Haihao Lu, Vahab Mirrokni ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:630-639

Predict then Interpolate: A Simple Algorithm to Learn Stable Classifiers

Yujia Bao, Shiyu Chang, Regina Barzilay ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:640-650

Variational (Gradient) Estimate of the Score Function in Energy-based Latent Variable Models

Fan Bao, Kun Xu, Chongxuan Li, Lanqing Hong, Jun Zhu, Bo Zhang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:651-661

Compositional Video Synthesis with Action Graphs

Amir Bar, Roei Herzig, Xiaolong Wang, Anna Rohrbach, Gal Chechik, Trevor Darrell, Amir Globerson ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:662-673

Approximating a Distribution Using Weight Queries

Nadav Barak, Sivan Sabato ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:674-683

Graph Convolution for Semi-Supervised Classification: Improved Linear Separability and Out-of-Distribution Generalization

Aseem Baranwal, Kimon Fountoulakis, Aukosh Jagannath ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:684-693

Training Quantized Neural Networks to Global Optimality via Semidefinite Programming

Burak Bartan, Mert Pilanci ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:694-704

Beyond $\log^2(T)$ regret for decentralized bandits in matching markets

Soumya Basu, Karthik Abinav Sankararaman, Abishek Sankararaman ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:705-715

Optimal Thompson Sampling strategies for support-aware CVaR bandits

Dorian Baudry, Romain Gautron, Emilie Kaufmann, Odalric Maillard ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:716-726

On Limited-Memory Subsampling Strategies for Bandits

Dorian Baudry, Yoan Russac, Olivier Cappé ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:727-737

Generalized Doubly Reparameterized Gradient Estimators

Matthias Bauer, Andriy Mnih ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:738-747

Directional Graph Networks

Dominique Beaini, Saro Passaro, Vincent Létourneau, Will Hamilton, Gabriele Corso, Pietro Lió ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:748-758

Policy Analysis using Synthetic Controls in Continuous-Time

Alexis Bellot, Mihaela van der Schaar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:759-768

Loss Surface Simplexes for Mode Connecting Volumes and Fast Ensembling

Gregory Benton, Wesley Maddox, Sanae Lotfi, Andrew Gordon Wilson ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:769-779

TFix: Learning to Fix Coding Errors with a Text-to-Text Transformer

Berkay Berabi, Jingxuan He, Veselin Raychev, Martin Vechev ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:780-791

Learning Queueing Policies for Organ Transplantation Allocation using Interpretable Counterfactual Survival Analysis

Jeroen Berrevoets, Ahmed Alaa, Zhaozhi Qian, James Jordon, Alexander E. S. Gimson, Mihaela van der Schaar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:792-802

Learning from Biased Data: A Semi-Parametric Approach

Patrice Bertail, Stephan Clémençon, Yannick Guyonvarch, Nathan Noiry ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:803-812

Is Space-Time Attention All You Need for Video Understanding?

Gedas Bertasius, Heng Wang, Lorenzo Torresani ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:813-824

Confidence Scores Make Instance-dependent Label-noise Learning Possible

Antonin Berthon, Bo Han, Gang Niu, Tongliang Liu, Masashi Sugiyama ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:825-836

Size-Invariant Graph Representations for Graph Classification Extrapolations

Beatrice Bevilacqua, Yangze Zhou, Bruno Ribeiro ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:837-851

Principal Bit Analysis: Autoencoding with Schur-Concave Loss

Sourbh Bhadane, Aaron B Wagner, Jayadev Acharya ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:852-862

Lower Bounds on Cross-Entropy Loss in the Presence of Test-time Adversaries

Arjun Nitin Bhagoji, Daniel Cullina, Vikash Sehwag, Prateek Mittal ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:863-873

Additive Error Guarantees for Weighted Low Rank Approximation

Aditya Bhaskara, Aravinda Kanchana Ruwanpathirana, Maheshakya Wijewardena ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:874-883

Sample Complexity of Robust Linear Classification on Separated Data

Robi Bhattacharjee, Somesh Jha, Kamalika Chaudhuri ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:884-893

Finding $k$ in Latent $k$-polytope

Chiranjib Bhattacharyya, Ravindran Kannan, Amit Kumar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:894-903

Non-Autoregressive Electron Redistribution Modeling for Reaction Prediction

Hangrui Bi, Hengyi Wang, Chence Shi, Connor Coley, Jian Tang, Hongyu Guo ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:904-913

TempoRL: Learning When to Act

André Biedenkapp, Raghu Rajan, Frank Hutter, Marius Lindauer ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:914-924

Follow-the-Regularized-Leader Routes to Chaos in Routing Games

Jakub Bielawski, Thiparat Chotibut, Fryderyk Falniowski, Grzegorz Kosiorowski, Michał Misiurewicz, Georgios Piliouras ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:925-935

Neural Symbolic Regression that scales

Luca Biggio, Tommaso Bendinelli, Alexander Neitz, Aurelien Lucchi, Giambattista Parascandolo ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:936-945

Model Distillation for Revenue Optimization: Interpretable Personalized Pricing

Max Biggs, Wei Sun, Markus Ettl ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:946-956

Scalable Normalizing Flows for Permutation Invariant Densities

Marin Biloš, Stephan Günnemann ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:957-967

Online Learning for Load Balancing of Unknown Monotone Resource Allocation Games

Ilai Bistritz, Nicholas Bambos ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:968-979

Low-Precision Reinforcement Learning: Running Soft Actor-Critic in Half Precision

Johan Björck, Xiangyu Chen, Christopher De Sa, Carla P Gomes, Kilian Weinberger ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:980-991

Multiplying Matrices Without Multiplying

Davis Blalock, John Guttag ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:992-1004

One for One, or All for All: Equilibria and Optimality of Collaboration in Federated Learning

Avrim Blum, Nika Haghtalab, Richard Lanas Phillips, Han Shao ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1005-1014

Black-box density function estimation using recursive partitioning

Erik Bodin, Zhenwen Dai, Neill Campbell, Carl Henrik Ek ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1015-1025

Weisfeiler and Lehman Go Topological: Message Passing Simplicial Networks

Cristian Bodnar, Fabrizio Frasca, Yuguang Wang, Nina Otter, Guido F Montufar, Pietro Lió, Michael Bronstein ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1026-1037

The Hintons in your Neural Network: a Quantum Field Theory View of Deep Learning

Roberto Bondesan, Max Welling ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1038-1048

Offline Contextual Bandits with Overparameterized Models

David Brandfonbrener, William Whitney, Rajesh Ranganath, Joan Bruna ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1049-1058

High-Performance Large-Scale Image Recognition Without Normalization

Andy Brock, Soham De, Samuel L Smith, Karen Simonyan ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1059-1071

Evaluating the Implicit Midpoint Integrator for Riemannian Hamiltonian Monte Carlo

James Brofos, Roy R Lederman ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1072-1081

Reinforcement Learning of Implicit and Explicit Control Flow Instructions

Ethan Brooks, Janarthanan Rajendran, Richard L Lewis, Satinder Singh ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1082-1091

Machine Unlearning for Random Forests

Jonathan Brophy, Daniel Lowd ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1092-1104

Value Alignment Verification

Daniel S Brown, Jordan Schneider, Anca Dragan, Scott Niekum ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1105-1115

Model-Free and Model-Based Policy Evaluation when Causality is Uncertain

David A Bruns-Smith ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1116-1126

Narrow Margins: Classification, Margins and Fat Tails

Francois Buet-Golfouse ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1127-1135

Differentially Private Correlation Clustering

Mark Bun, Marek Elias, Janardhan Kulkarni ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1136-1146

Disambiguation of Weak Supervision leading to Exponential Convergence rates

Vivien A Cabannes, Francis Bach, Alessandro Rudi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1147-1157

Finite mixture models do not reliably learn the number of components

Diana Cai, Trevor Campbell, Tamara Broderick ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1158-1169

A Theory of Label Propagation for Subpopulation Shift

Tianle Cai, Ruiqi Gao, Jason Lee, Qi Lei ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1170-1182

Lenient Regret and Good-Action Identification in Gaussian Process Bandits

Xu Cai, Selwyn Gomes, Jonathan Scarlett ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1183-1192

A Zeroth-Order Block Coordinate Descent Algorithm for Huge-Scale Black-Box Optimization

Hanqin Cai, Yuchen Lou, Daniel Mckenzie, Wotao Yin ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1193-1203

GraphNorm: A Principled Approach to Accelerating Graph Neural Network Training

Tianle Cai, Shengjie Luo, Keyulu Xu, Di He, Tie-Yan Liu, Liwei Wang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1204-1215

On Lower Bounds for Standard and Robust Gaussian Process Bandit Optimization

Xu Cai, Jonathan Scarlett ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1216-1226

High-dimensional Experimental Design and Kernel Bandits

Romain Camilleri, Kevin Jamieson, Julian Katz-Samuels ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1227-1237

A Gradient Based Strategy for Hamiltonian Monte Carlo Hyperparameter Optimization

Andrew Campbell, Wenlong Chen, Vincent Stimper, Jose Miguel Hernandez-Lobato, Yichuan Zhang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1238-1248

Asymmetric Heavy Tails and Implicit Bias in Gaussian Noise Injections

Alexander Camuto, Xiaoyu Wang, Lingjiong Zhu, Chris Holmes, Mert Gurbuzbalaban, Umut Simsekli ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1249-1260

Fold2Seq: A Joint Sequence(1D)-Fold(3D) Embedding-based Generative Model for Protein Design

Yue Cao, Payel Das, Vijil Chenthamarakshan, Pin-Yu Chen, Igor Melnyk, Yang Shen ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1261-1271

Learning from Similarity-Confidence Data

Yuzhou Cao, Lei Feng, Yitian Xu, Bo An, Gang Niu, Masashi Sugiyama ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1272-1282

Parameter-free Locally Accelerated Conditional Gradients

Alejandro Carderera, Jelena Diakonikolas, Cheuk Yin Lin, Sebastian Pokutta ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1283-1293

Optimizing persistent homology based functions

Mathieu Carriere, Frederic Chazal, Marc Glisse, Yuichi Ike, Hariprasad Kannan, Yuhei Umeda ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1294-1303

Online Policy Gradient for Model Free Learning of Linear Quadratic Regulators with $\sqrt{T}$ Regret

Asaf B Cassel, Tomer Koren ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1304-1313

Multi-Receiver Online Bayesian Persuasion

Matteo Castiglioni, Alberto Marchesi, Andrea Celli, Nicola Gatti ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1314-1323

Marginal Contribution Feature Importance - an Axiomatic Approach for Explaining Data

Amnon Catav, Boyang Fu, Yazeed Zoabi, Ahuva Libi Weiss Meilik, Noam Shomron, Jason Ernst, Sriram Sankararaman, Ran Gilad-Bachrach ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1324-1335

Disentangling syntax and semantics in the brain with deep networks

Charlotte Caucheteux, Alexandre Gramfort, Jean-Remi King ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1336-1348

Fair Classification with Noisy Protected Attributes: A Framework with Provable Guarantees

L. Elisa Celis, Lingxiao Huang, Vijay Keswani, Nisheeth K. Vishnoi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1349-1361

Best Model Identification: A Rested Bandit Formulation

Leonardo Cella, Massimiliano Pontil, Claudio Gentile ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1362-1372

Revisiting Rainbow: Promoting more insightful and inclusive deep reinforcement learning research

Johan Samir Obando Ceron, Pablo Samuel Castro ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1373-1383

Learning Routines for Effective Off-Policy Reinforcement Learning

Edoardo Cetin, Oya Celiktutan ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1384-1394

Learning Node Representations Using Stationary Flow Prediction on Large Payment and Cash Transaction Networks

Ciwan Ceylan, Salla Franzén, Florian T. Pokorny ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1395-1406

GRAND: Graph Neural Diffusion

Ben Chamberlain, James Rowbottom, Maria I Gorinova, Michael Bronstein, Stefan Webb, Emanuele Rossi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1407-1418

HoroPCA: Hyperbolic Dimensionality Reduction via Horospherical Projections

Ines Chami, Albert Gu, Dat P Nguyen, Christopher Re ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1419-1429

Goal-Conditioned Reinforcement Learning with Imagined Subgoals

Elliot Chane-Sane, Cordelia Schmid, Ivan Laptev ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1430-1440

Locally Private k-Means in One Round

Alisa Chang, Badih Ghazi, Ravi Kumar, Pasin Manurangsi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1441-1451

Modularity in Reinforcement Learning via Algorithmic Independence in Credit Assignment

Michael Chang, Sid Kaushik, Sergey Levine, Tom Griffiths ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1452-1462

Image-Level or Object-Level? A Tale of Two Resampling Strategies for Long-Tailed Detection

Nadine Chang, Zhiding Yu, Yu-Xiong Wang, Animashree Anandkumar, Sanja Fidler, Jose M Alvarez ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1463-1472

DeepWalking Backwards: From Embeddings Back to Graphs

Sudhanshu Chanpuriya, Cameron Musco, Konstantinos Sotiropoulos, Charalampos Tsourakakis ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1473-1483

Differentiable Spatial Planning using Transformers

Devendra Singh Chaplot, Deepak Pathak, Jitendra Malik ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1484-1495

Solving Challenging Dexterous Manipulation Tasks With Trajectory Optimisation and Reinforcement Learning

Henry J Charlesworth, Giovanni Montana ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1496-1506

Classification with Rejection Based on Cost-sensitive Classification

Nontawat Charoenphakdee, Zhenghang Cui, Yivan Zhang, Masashi Sugiyama ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1507-1517

Actionable Models: Unsupervised Offline Reinforcement Learning of Robotic Skills

Yevgen Chebotar, Karol Hausman, Yao Lu, Ted Xiao, Dmitry Kalashnikov, Jacob Varley, Alex Irpan, Benjamin Eysenbach, Ryan C Julian, Chelsea Finn, Sergey Levine ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1518-1528

Unified Robust Semi-Supervised Variational Autoencoder

Xu Chen ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1529-1538

Unsupervised Learning of Visual 3D Keypoints for Control

Boyuan Chen, Pieter Abbeel, Deepak Pathak ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1539-1549

Integer Programming for Causal Structure Learning in the Presence of Latent Variables

Rui Chen, Sanjeeb Dash, Tian Gao ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1550-1560

Improved Corruption Robust Algorithms for Episodic Reinforcement Learning

Yifang Chen, Simon Du, Kevin Jamieson ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1561-1570

Scalable Computations of Wasserstein Barycenter via Input Convex Neural Networks

Jiaojiao Fan, Amirhossein Taghvaei, Yongxin Chen ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1571-1581

Neural Feature Matching in Implicit 3D Representations

Yunlu Chen, Basura Fernando, Hakan Bilen, Thomas Mensink, Efstratios Gavves ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1582-1593

Decentralized Riemannian Gradient Descent on the Stiefel Manifold

Shixiang Chen, Alfredo Garcia, Mingyi Hong, Shahin Shahrampour ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1594-1605

Learning Self-Modulating Attention in Continuous Time Space with Applications to Sequential Recommendation

Chao Chen, Haoyu Geng, Nianzu Yang, Junchi Yan, Daiyue Xue, Jianping Yu, Xiaokang Yang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1606-1616

Mandoline: Model Evaluation under Distribution Shift

Mayee Chen, Karan Goel, Nimit S Sohoni, Fait Poms, Kayvon Fatahalian, Christopher Re ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1617-1629

Order Matters: Probabilistic Modeling of Node Sequence for Graph Generation

Xiaohui Chen, Xu Han, Jiajing Hu, Francisco Ruiz, Liping Liu ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1630-1639

CARTL: Cooperative Adversarially-Robust Transfer Learning

Dian Chen, Hongxin Hu, Qian Wang, Li Yinli, Cong Wang, Chao Shen, Qi Li ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1640-1650

Finding the Stochastic Shortest Path with Low Regret: the Adversarial Cost and Unknown Transition Case

Liyu Chen, Haipeng Luo ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1651-1660

SpreadsheetCoder: Formula Prediction from Semi-structured Context

Xinyun Chen, Petros Maniatis, Rishabh Singh, Charles Sutton, Hanjun Dai, Max Lin, Denny Zhou ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1661-1672

Large-Margin Contrastive Learning with Distance Polarization Regularizer

Shuo Chen, Gang Niu, Chen Gong, Jun Li, Jian Yang, Masashi Sugiyama ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1673-1683

Z-GCNETs: Time Zigzags at Graph Convolutional Networks for Time Series Forecasting

Yuzhou Chen, Ignacio Segovia, Yulia R. Gel ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1684-1694

A Unified Lottery Ticket Hypothesis for Graph Neural Networks

Tianlong Chen, Yongduo Sui, Xuxi Chen, Aston Zhang, Zhangyang Wang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1695-1706

Network Inference and Influence Maximization from Samples

Wei Chen, Xiaoming Sun, Jialin Zhang, Zhijie Zhang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1707-1716

Data-driven Prediction of General Hamiltonian Dynamics via Learning Exactly-Symplectic Maps

Renyi Chen, Molei Tao ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1717-1727

Analysis of stochastic Lanczos quadrature for spectrum approximation

Tyler Chen, Thomas Trogdon, Shashanka Ubaru ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1728-1739

Large-Scale Multi-Agent Deep FBSDEs

Tianrong Chen, Ziyi O Wang, Ioannis Exarchos, Evangelos Theodorou ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1740-1748

Representation Subspace Distance for Domain Adaptation Regression

Xinyang Chen, Sinan Wang, Jianmin Wang, Mingsheng Long ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1749-1759

Overcoming Catastrophic Forgetting by Bayesian Generative Regularization

Pei-Hung Chen, Wei Wei, Cho-Jui Hsieh, Bo Dai ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1760-1770

Cyclically Equivariant Neural Decoders for Cyclic Codes

Xiangyu Chen, Min Ye ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1771-1780

A Receptor Skeleton for Capsule Neural Networks

Jintai Chen, Hongyun Yu, Chengde Qian, Danny Z Chen, Jian Wu ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1781-1790

Accelerating Gossip SGD with Periodic Global Averaging

Yiming Chen, Kun Yuan, Yingya Zhang, Pan Pan, Yinghui Xu, Wotao Yin ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1791-1802

ActNN: Reducing Training Memory Footprint via 2-Bit Activation Compressed Training

Jianfei Chen, Lianmin Zheng, Zhewei Yao, Dequan Wang, Ion Stoica, Michael Mahoney, Joseph Gonzalez ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1803-1813

SPADE: A Spectral Method for Black-Box Adversarial Robustness Evaluation

Wuxinlin Cheng, Chenhui Deng, Zhiqiang Zhao, Yaohui Cai, Zhiru Zhang, Zhuo Feng ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1814-1824

Self-supervised and Supervised Joint Training for Resource-rich Machine Translation

Yong Cheng, Wei Wang, Lu Jiang, Wolfgang Macherey ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1825-1835

Exact Optimization of Conformal Predictors via Incremental and Decremental Learning

Giovanni Cherubin, Konstantinos Chatzikokolakis, Martin Jaggi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1836-1845

Problem Dependent View on Structured Thresholding Bandit Problems

James Cheshire, Pierre Menard, Alexandra Carpentier ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1846-1854

Online Optimization in Games via Control Theory: Connecting Regret, Passivity and Poincaré Recurrence

Yun Kuen Cheung, Georgios Piliouras ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1855-1865

Understanding and Mitigating Accuracy Disparity in Regression

Jianfeng Chi, Yuan Tian, Geoffrey J. Gordon, Han Zhao ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1866-1876

Private Alternating Least Squares: Practical Private Matrix Completion with Tighter Rates

Steve Chien, Prateek Jain, Walid Krichene, Steffen Rendle, Shuang Song, Abhradeep Thakurta, Li Zhang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1877-1887

Light RUMs

Flavio Chierichetti, Ravi Kumar, Andrew Tomkins ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1888-1897

Parallelizing Legendre Memory Unit Training

Narsimha Reddy Chilkuri, Chris Eliasmith ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1898-1907

Quantifying and Reducing Bias in Maximum Likelihood Estimation of Structured Anomalies

Uthsav Chitra, Kimberly Ding, Jasper C.H. Lee, Benjamin J Raphael ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1908-1919

Robust Learning-Augmented Caching: An Experimental Study

Jakub Chłędowski, Adam Polak, Bartosz Szabucki, Konrad Tomasz Żołna ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1920-1930

Unifying Vision-and-Language Tasks via Text Generation

Jaemin Cho, Jie Lei, Hao Tan, Mohit Bansal ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1931-1942

Learning from Nested Data with Ornstein Auto-Encoders

Youngwon Choi, Sungdong Lee, Joong-Ho Won ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1943-1952

Variational Empowerment as Representation Learning for Goal-Conditioned Reinforcement Learning

Jongwook Choi, Archit Sharma, Honglak Lee, Sergey Levine, Shixiang Shane Gu ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1953-1963

Label-Only Membership Inference Attacks

Christopher A. Choquette-Choo, Florian Tramer, Nicholas Carlini, Nicolas Papernot ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1964-1974

Modeling Hierarchical Structures with Continuous Recursive Neural Networks

Jishnu Ray Chowdhury, Cornelia Caragea ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1975-1988

Scaling Multi-Agent Reinforcement Learning with Selective Parameter Sharing

Filippos Christianos, Georgios Papoudakis, Muhammad A Rahman, Stefano V Albrecht ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1989-1998

Beyond Variance Reduction: Understanding the True Impact of Baselines on Policy Optimization

Wesley Chung, Valentin Thomas, Marlos C. Machado, Nicolas Le Roux ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:1999-2009

First-Order Methods for Wasserstein Distributionally Robust MDP

Julien Grand Clement, Christian Kroer ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2010-2019

Phasic Policy Gradient

Karl W Cobbe, Jacob Hilton, Oleg Klimov, John Schulman ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2020-2027

Riemannian Convex Potential Maps

Samuel Cohen, Brandon Amos, Yaron Lipman ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2028-2038

Scaling Properties of Deep Residual Networks

Alain-Sam Cohen, Rama Cont, Alain Rossier, Renyuan Xu ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2039-2048

Differentially-Private Clustering of Easy Instances

Edith Cohen, Haim Kaplan, Yishay Mansour, Uri Stemmer, Eliad Tsfadia ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2049-2059

Improving Ultrametrics Embeddings Through Coresets

Vincent Cohen-Addad, Rémi De Joannis De Verclos, Guillaume Lagarde ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2060-2068

Correlation Clustering in Constant Many Parallel Rounds

Vincent Cohen-Addad, Silvio Lattanzi, Slobodan Mitrović, Ashkan Norouzi-Fard, Nikos Parotsidis, Jakub Tarnawski ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2069-2078

Concentric mixtures of Mallows models for top-$k$ rankings: sampling and identifiability

Fabien Collas, Ekhine Irurozki ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2079-2088

Exploiting Shared Representations for Personalized Federated Learning

Liam Collins, Hamed Hassani, Aryan Mokhtari, Sanjay Shakkottai ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2089-2099

Differentiable Particle Filtering via Entropy-Regularized Optimal Transport

Adrien Corenflos, James Thornton, George Deligiannidis, Arnaud Doucet ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2100-2111

Fairness and Bias in Online Selection

Jose Correa, Andres Cristi, Paul Duetting, Ashkan Norouzi-Fard ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2112-2121

Relative Deviation Margin Bounds

Corinna Cortes, Mehryar Mohri, Ananda Theertha Suresh ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2122-2131

A Discriminative Technique for Multiple-Source Adaptation

Corinna Cortes, Mehryar Mohri, Ananda Theertha Suresh, Ningshan Zhang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2132-2143

Characterizing Fairness Over the Set of Good Models Under Selective Labels

Amanda Coston, Ashesh Rambachan, Alexandra Chouldechova ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2144-2155

Two-way kernel matrix puncturing: towards resource-efficient PCA and spectral clustering

Romain Couillet, Florent Chatelain, Nicolas Le Bihan ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2156-2165

Explaining Time Series Predictions with Dynamic Masks

Jonathan Crabbé, Mihaela Van Der Schaar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2166-2177

Generalised Lipschitz Regularisation Equals Distributional Robustness

Zac Cranko, Zhan Shi, Xinhua Zhang, Richard Nock, Simon Kornblith ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2178-2188

Environment Inference for Invariant Learning

Elliot Creager, Joern-Henrik Jacobsen, Richard Zemel ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2189-2200

Mind the Box: $l_1$-APGD for Sparse Adversarial Attacks on Image Classifiers

Francesco Croce, Matthias Hein ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2201-2211

Parameterless Transductive Feature Re-representation for Few-Shot Learning

Wentao Cui, Yuhong Guo ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2212-2221

Randomized Algorithms for Submodular Function Maximization with a $k$-System Constraint

Shuang Cui, Kai Han, Tianshuai Zhu, Jing Tang, Benwei Wu, He Huang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2222-2232

GBHT: Gradient Boosting Histogram Transform for Density Estimation

Jingyi Cui, Hanyuan Hang, Yisen Wang, Zhouchen Lin ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2233-2243

ProGraML: A Graph-based Program Representation for Data Flow Analysis and Compiler Optimizations

Chris Cummins, Zacharias V. Fisches, Tal Ben-Nun, Torsten Hoefler, Michael F P O’Boyle, Hugh Leather ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2244-2253

Combining Pessimism with Optimism for Robust and Efficient Model-Based Deep Reinforcement Learning

Sebastian Curi, Ilija Bogunovic, Andreas Krause ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2254-2264

Quantifying Availability and Discovery in Recommender Systems via Stochastic Reachability

Mihaela Curmei, Sarah Dean, Benjamin Recht ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2265-2275

Dynamic Balancing for Model Selection in Bandits and RL

Ashok Cutkosky, Christoph Dann, Abhimanyu Das, Claudio Gentile, Aldo Pacchiano, Manish Purohit ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2276-2285

ConViT: Improving Vision Transformers with Soft Convolutional Inductive Biases

Stéphane D’Ascoli, Hugo Touvron, Matthew L Leavitt, Ari S Morcos, Giulio Biroli, Levent Sagun ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2286-2296

Consistent regression when oblivious outliers overwhelm

Tommaso D’Orsi, Gleb Novikov, David Steurer ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2297-2306

Offline Reinforcement Learning with Pseudometric Learning

Robert Dadashi, Shideh Rezaeifar, Nino Vieillard, Léonard Hussenot, Olivier Pietquin, Matthieu Geist ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2307-2318

A Tale of Two Efficient and Informative Negative Sampling Distributions

Shabnam Daghaghi, Tharun Medini, Nicholas Meisburger, Beidi Chen, Mengnan Zhao, Anshumali Shrivastava ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2319-2329

SiameseXML: Siamese Networks meet Extreme Classifiers with 100M Labels

Kunal Dahiya, Ananye Agarwal, Deepak Saini, Gururaj K, Jian Jiao, Amit Singh, Sumeet Agarwal, Purushottam Kar, Manik Varma ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2330-2340

Fixed-Parameter and Approximation Algorithms for PCA with Outliers

Yogesh Dahiya, Fedor Fomin, Fahad Panolan, Kirill Simonov ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2341-2351

Sliced Iterative Normalizing Flows

Biwei Dai, Uros Seljak ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2352-2364

Convex Regularization in Monte-Carlo Tree Search

Tuan Q Dam, Carlo D’Eramo, Jan Peters, Joni Pajarinen ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2365-2375

Demonstration-Conditioned Reinforcement Learning for Few-Shot Imitation

Christopher R. Dance, Julien Perez, Théo Cachet ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2376-2387

Re-understanding Finite-State Representations of Recurrent Policy Networks

Mohamad H Danesh, Anurag Koul, Alan Fern, Saeed Khorram ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2388-2397

Newton Method over Networks is Fast up to the Statistical Precision

Amir Daneshmand, Gesualdo Scutari, Pavel Dvurechensky, Alexander Gasnikov ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2398-2409

BasisDeVAE: Interpretable Simultaneous Dimensionality Reduction and Feature-Level Clustering with Derivative-Based Variational Autoencoders

Dominic Danks, Christopher Yau ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2410-2420

Intermediate Layer Optimization for Inverse Problems using Deep Generative Models

Giannis Daras, Joseph Dean, Ajil Jalal, Alex Dimakis ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2421-2432

Measuring Robustness in Deep Learning Based Compressive Sensing

Mohammad Zalbagi Darestani, Akshay S Chaudhari, Reinhard Heckel ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2433-2444

SAINT-ACC: Safety-Aware Intelligent Adaptive Cruise Control for Autonomous Vehicles Using Deep Reinforcement Learning

Lokesh Chandra Das, Myounggyu Won ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2445-2455

Lipschitz normalization for self-attention layers with application to graph neural networks

George Dasoulas, Kevin Scaman, Aladin Virmaux ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2456-2466

Householder Sketch for Accurate and Accelerated Least-Mean-Squares Solvers

Jyotikrishna Dass, Rabi Mahapatra ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2467-2477

Byzantine-Resilient High-Dimensional SGD with Local Iterations on Heterogeneous Data

Deepesh Data, Suhas Diggavi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2478-2488

Catformer: Designing Stable Transformers via Sensitivity Analysis

Jared Q Davis, Albert Gu, Krzysztof Choromanski, Tri Dao, Christopher Re, Chelsea Finn, Percy Liang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2489-2499

Diffusion Source Identification on Networks with Statistical Confidence

Quinlan E Dawkins, Tianxi Li, Haifeng Xu ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2500-2509

Bayesian Deep Learning via Subnetwork Inference

Erik Daxberger, Eric Nalisnick, James U Allingham, Javier Antoran, Jose Miguel Hernandez-Lobato ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2510-2521

Adversarial Robustness Guarantees for Random Deep Neural Networks

Giacomo De Palma, Bobak Kiani, Seth Lloyd ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2522-2534

High-Dimensional Gaussian Process Inference with Derivatives

Filip de Roos, Alexandra Gessner, Philipp Hennig ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2535-2545

Transfer-Based Semantic Anomaly Detection

Lucas Deecke, Lukas Ruff, Robert A. Vandermeulen, Hakan Bilen ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2546-2558

Grid-Functioned Neural Networks

Javier Dehesa, Andrew Vidler, Julian Padget, Christof Lutteroth ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2559-2567

Multidimensional Scaling: Approximation and Complexity

Erik Demaine, Adam Hesterberg, Frederic Koehler, Jayson Lynch, John Urschel ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2568-2578

What Does Rotation Prediction Tell Us about Classifier Accuracy under Varying Testing Environments?

Weijian Deng, Stephen Gould, Liang Zheng ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2579-2589

Toward Better Generalization Bounds with Locally Elastic Stability

Zhun Deng, Hangfeng He, Weijie Su ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2590-2600

Revenue-Incentive Tradeoffs in Dynamic Reserve Pricing

Yuan Deng, Sebastien Lahaie, Vahab Mirrokni, Song Zuo ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2601-2610

Heterogeneity for the Win: One-Shot Federated Clustering

Don Kurian Dennis, Tian Li, Virginia Smith ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2611-2620

Kernel Continual Learning

Mohammad Mahdi Derakhshani, Xiantong Zhen, Ling Shao, Cees Snoek ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2621-2631

Bayesian Optimization over Hybrid Spaces

Aryan Deshwal, Syrine Belakaria, Janardhan Rao Doppa ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2632-2643

Navigation Turing Test (NTT): Learning to Evaluate Human-Like Navigation

Sam Devlin, Raluca Georgescu, Ida Momennejad, Jaroslaw Rzepecki, Evelyn Zuniga, Gavin Costello, Guy Leroy, Ali Shaw, Katja Hofmann ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2644-2653

Versatile Verification of Tree Ensembles

Laurens Devos, Wannes Meert, Jesse Davis ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2654-2664

On the Inherent Regularization Effects of Noise Injection During Training

Oussama Dhifallah, Yue Lu ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2665-2675

Hierarchical Agglomerative Graph Clustering in Nearly-Linear Time

Laxman Dhulipala, David Eisenstat, Jakub Łącki, Vahab Mirrokni, Jessica Shi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2676-2686

Learning Online Algorithms with Distributional Advice

Ilias Diakonikolas, Vasilis Kontonis, Christos Tzamos, Ali Vakilian, Nikos Zarifis ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2687-2696

A Wasserstein Minimax Framework for Mixed Linear Regression

Theo Diamandis, Yonina Eldar, Alireza Fallah, Farzan Farnia, Asuman Ozdaglar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2697-2706

Context-Aware Online Collective Inference for Templated Graphical Models

Charles Dickens, Connor Pryor, Eriq Augustine, Alexander Miller, Lise Getoor ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2707-2716

ARMS: Antithetic-REINFORCE-Multi-Sample Gradient for Binary Variables

Aleksandar Dimitriev, Mingyuan Zhou ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2717-2727

XOR-CD: Linearly Convergent Constrained Structure Generation

Fan Ding, Jianzhu Ma, Jinbo Xu, Yexiang Xue ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2728-2738

Dual Principal Component Pursuit for Robust Subspace Learning: Theory and Algorithms for a Holistic Approach

Tianyu Ding, Zhihui Zhu, Rene Vidal, Daniel P Robinson ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2739-2748

Coded-InvNet for Resilient Prediction Serving Systems

Tuan Dinh, Kangwook Lee ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2749-2759

Estimation and Quantization of Expected Persistence Diagrams

Vincent Divol, Theo Lacombe ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2760-2770

On Energy-Based Models with Overparametrized Shallow Neural Networks

Carles Domingo-Enrich, Alberto Bietti, Eric Vanden-Eijnden, Joan Bruna ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2771-2782

Kernel-Based Reinforcement Learning: A Finite-Time Analysis

Omar Darwiche Domingues, Pierre Menard, Matteo Pirotta, Emilie Kaufmann, Michal Valko ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2783-2792

Attention is not all you need: pure attention loses rank doubly exponentially with depth

Yihe Dong, Jean-Baptiste Cordonnier, Andreas Loukas ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2793-2803

How rotational invariance of common kernels prevents generalization in high dimensions

Konstantin Donhauser, Mingqi Wu, Fanny Yang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2804-2814

Fast Stochastic Bregman Gradient Methods: Sharp Analysis and Variance Reduction

Radu Alexandru Dragomir, Mathieu Even, Hadrien Hendrikx ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2815-2825

Bilinear Classes: A Structural Framework for Provable Generalization in RL

Simon Du, Sham Kakade, Jason Lee, Shachar Lovett, Gaurav Mahajan, Wen Sun, Ruosong Wang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2826-2836

Improved Contrastive Divergence Training of Energy-Based Models

Yilun Du, Shuang Li, Joshua Tenenbaum, Igor Mordatch ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2837-2848

Order-Agnostic Cross Entropy for Non-Autoregressive Machine Translation

Cunxiao Du, Zhaopeng Tu, Jing Jiang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2849-2859

Putting the “Learning” into Learning-Augmented Algorithms for Frequency Estimation

Elbert Du, Franklyn Wang, Michael Mitzenmacher ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2860-2869

Estimating $\alpha$-Rank from A Few Entries with Low Rank Matrix Completion

Yali Du, Xue Yan, Xu Chen, Jun Wang, Haifeng Zhang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2870-2879

Learning Diverse-Structured Networks for Adversarial Robustness

Xuefeng Du, Jingfeng Zhang, Bo Han, Tongliang Liu, Yu Rong, Gang Niu, Junzhou Huang, Masashi Sugiyama ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2880-2891

Risk Bounds and Rademacher Complexity in Batch Reinforcement Learning

Yaqi Duan, Chi Jin, Zhiyuan Li ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2892-2902

Sawtooth Factorial Topic Embeddings Guided Gamma Belief Network

Zhibin Duan, Dongsheng Wang, Bo Chen, Chaojie Wang, Wenchao Chen, Yewen Li, Jie Ren, Mingyuan Zhou ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2903-2913

Exponential Reduction in Sample Complexity with Learning of Ising Model Dynamics

Arkopal Dutt, Andrey Lokhov, Marc D Vuffray, Sidhant Misra ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2914-2925

Reinforcement Learning Under Moral Uncertainty

Adrien Ecoffet, Joel Lehman ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2926-2936

Confidence-Budget Matching for Sequential Budgeted Learning

Yonathan Efroni, Nadav Merlis, Aadirupa Saha, Shie Mannor ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2937-2947

Self-Paced Context Evaluation for Contextual Reinforcement Learning

Theresa Eimer, André Biedenkapp, Frank Hutter, Marius Lindauer ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2948-2958

Provably Strict Generalisation Benefit for Equivariant Models

Bryn Elesedy, Sheheryar Zaidi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2959-2969

Efficient Iterative Amortized Inference for Learning Symmetric and Disentangled Multi-Object Representations

Patrick Emami, Pan He, Sanjay Ranka, Anand Rangarajan ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2970-2981

Implicit Bias of Linear RNNs

Melikasadat Emami, Mojtaba Sahraee-Ardakan, Parthe Pandit, Sundeep Rangan, Alyson K Fletcher ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2982-2992

Global Optimality Beyond Two Layers: Training Deep ReLU Networks via Convex Programs

Tolga Ergen, Mert Pilanci ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:2993-3003

Revealing the Structure of Deep Neural Networks via Convex Duality

Tolga Ergen, Mert Pilanci ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3004-3014

Whitening for Self-Supervised Representation Learning

Aleksandr Ermolov, Aliaksandr Siarohin, Enver Sangineto, Nicu Sebe ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3015-3024

Graph Mixture Density Networks

Federico Errica, Davide Bacciu, Alessio Micheli ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3025-3035

Cross-Gradient Aggregation for Decentralized Learning from Non-IID Data

Yasaman Esfandiari, Sin Yong Tan, Zhanhong Jiang, Aditya Balu, Ethan Herron, Chinmay Hegde, Soumik Sarkar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3036-3046

Weight-covariance alignment for adversarially robust neural networks

Panagiotis Eustratiadis, Henry Gouk, Da Li, Timothy Hospedales ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3047-3056

Data augmentation for deep learning based accelerated MRI reconstruction with limited data

Zalan Fabian, Reinhard Heckel, Mahdi Soltanolkotabi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3057-3067

Poisson-Randomised DirBN: Large Mutation is Needed in Dirichlet Belief Networks

Xuhui Fan, Bin Li, Yaqiong Li, Scott A. Sisson ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3068-3077

Model-based Reinforcement Learning for Continuous Control with Posterior Sampling

Ying Fan, Yifei Ming ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3078-3087

SECANT: Self-Expert Cloning for Zero-Shot Generalization of Visual Policies

Linxi Fan, Guanzhi Wang, De-An Huang, Zhiding Yu, Li Fei-Fei, Yuke Zhu, Animashree Anandkumar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3088-3099

On Estimation in Latent Variable Models

Guanhua Fang, Ping Li ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3100-3110

On Variational Inference in Biclustering Models

Guanhua Fang, Ping Li ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3111-3121

Learning Bounds for Open-Set Learning

Zhen Fang, Jie Lu, Anjin Liu, Feng Liu, Guangquan Zhang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3122-3132

Streaming Bayesian Deep Tensor Factorization

Shikai Fang, Zheng Wang, Zhimeng Pan, Ji Liu, Shandian Zhe ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3133-3142

PID Accelerated Value Iteration Algorithm

Amir-Massoud Farahmand, Mohammad Ghavamzadeh ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3143-3153

Near-Optimal Entrywise Anomaly Detection for Low-Rank Matrices with Sub-Exponential Noise

Vivek Farias, Andrew A Li, Tianyi Peng ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3154-3163

Connecting Optimal Ex-Ante Collusion in Teams to Extensive-Form Correlation: Faster Algorithms and Positive Complexity Results

Gabriele Farina, Andrea Celli, Nicola Gatti, Tuomas Sandholm ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3164-3173

Train simultaneously, generalize better: Stability of gradient-based minimax learners

Farzan Farnia, Asuman Ozdaglar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3174-3185

Unbalanced minibatch Optimal Transport; applications to Domain Adaptation

Kilian Fatras, Thibault Sejourne, Rémi Flamary, Nicolas Courty ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3186-3197

Risk-Sensitive Reinforcement Learning with Function Approximation: A Debiasing Approach

Yingjie Fei, Zhuoran Yang, Zhaoran Wang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3198-3207

Lossless Compression of Efficient Private Local Randomizers

Vitaly Feldman, Kunal Talwar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3208-3219

Dimensionality Reduction for the Sum-of-Distances Metric

Zhili Feng, Praneeth Kacham, David Woodruff ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3220-3229

Reserve Price Optimization for First Price Auctions in Display Advertising

Zhe Feng, Sebastien Lahaie, Jon Schneider, Jinchao Ye ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3230-3239

Uncertainty Principles of Encoding GANs

Ruili Feng, Zhouchen Lin, Jiapeng Zhu, Deli Zhao, Jingren Zhou, Zheng-Jun Zha ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3240-3251

Pointwise Binary Classification with Pairwise Confidence Comparisons

Lei Feng, Senlin Shu, Nan Lu, Bo Han, Miao Xu, Gang Niu, Bo An, Masashi Sugiyama ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3252-3262

Provably Correct Optimization and Exploration with Non-linear Policies

Fei Feng, Wotao Yin, Alekh Agarwal, Lin Yang ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3263-3273

KD3A: Unsupervised Multi-Source Decentralized Domain Adaptation via Knowledge Distillation

Haozhe Feng, Zhaoyang You, Minghao Chen, Tianye Zhang, Minfeng Zhu, Fei Wu, Chao Wu, Wei Chen ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3274-3283

Understanding Noise Injection in GANs

Ruili Feng, Deli Zhao, Zheng-Jun Zha ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3284-3293

GNNAutoScale: Scalable and Expressive Graph Neural Networks via Historical Embeddings

Matthias Fey, Jan E. Lenssen, Frank Weichert, Jure Leskovec ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3294-3304

PsiPhi-Learning: Reinforcement Learning with Demonstrations using Successor Features and Inverse Temporal Difference Learning

Angelos Filos, Clare Lyle, Yarin Gal, Sergey Levine, Natasha Jaques, Gregory Farquhar ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3305-3317

A Practical Method for Constructing Equivariant Multilayer Perceptrons for Arbitrary Matrix Groups

Marc Finzi, Max Welling, Andrew Gordon Wilson ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3318-3328

Few-Shot Conformal Prediction with Auxiliary Tasks

Adam Fisch, Tal Schuster, Tommi Jaakkola, Regina Barzilay ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3329-3339

Scalable Certified Segmentation via Randomized Smoothing

Marc Fischer, Maximilian Baader, Martin Vechev ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3340-3351

What’s in the Box? Exploring the Inner Life of Neural Networks with Robust Rules

Jonas Fischer, Anna Olah, Jilles Vreeken ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3352-3362

Online Learning with Optimism and Delay

Genevieve E Flaspohler, Francesco Orabona, Judah Cohen, Soukayna Mouatadid, Miruna Oprescu, Paulo Orenstein, Lester Mackey ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3363-3373

Online A-Optimal Design and Active Linear Regression

Xavier Fontaine, Pierre Perrault, Michal Valko, Vianney Perchet ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3374-3383

Deep Adaptive Design: Amortizing Sequential Bayesian Experimental Design

Adam Foster, Desi R Ivanova, Ilyas Malik, Tom Rainforth ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3384-3395

Efficient Online Learning for Dynamic k-Clustering

Dimitris Fotakis, Georgios Piliouras, Stratis Skoulakis ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3396-3406

Clustered Sampling: Low-Variance and Improved Representativity for Clients Selection in Federated Learning

Yann Fraboni, Richard Vidal, Laetitia Kameni, Marco Lorenzi ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3407-3416

Agnostic Learning of Halfspaces with Gradient Descent via Soft Margins

Spencer Frei, Yuan Cao, Quanquan Gu ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3417-3426

Provable Generalization of SGD-trained Neural Networks of Any Width in the Presence of Adversarial Label Noise

Spencer Frei, Yuan Cao, Quanquan Gu ; Proceedings of the 38th International Conference on Machine Learning , PMLR 139:3427-3438

Post-selection inference with HSIC-Lasso