Data-driven hypothesis generation in clinical research: what we learned from a human subject study

Submit your own article

Register as an author to reserve your spot in the next issue of the Medical Research Archives.

Join the Society

The European Society of Medicine is more than a professional association. We are a community. Our members work in countries across the globe, yet are united by a common goal: to promote health and health equity, around the world.

Join Europe’s leading medical society and discover the many advantages of membership, including free article publication.

Main Article Content

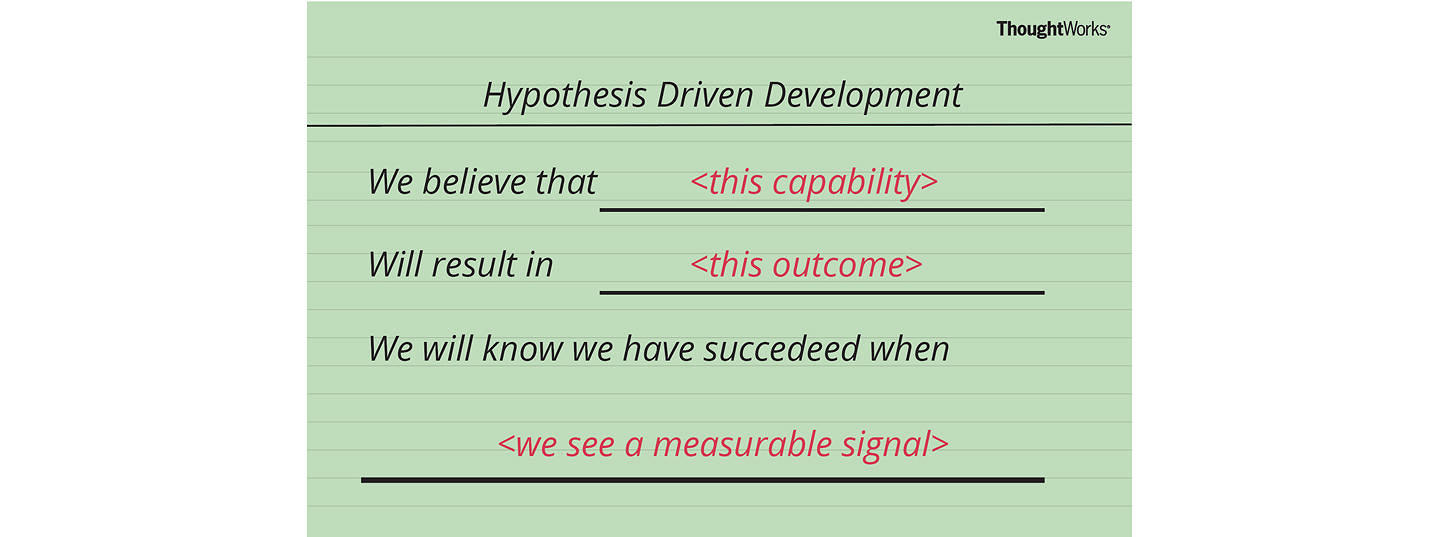

Hypothesis generation is an early and critical step in any hypothesis-driven clinical research project. Because it is not yet a well-understood cognitive process, the need to improve it goes unrecognized. Without an impactful hypothesis, the significance of any research project can be questionable, regardless of the rigor or diligence applied in other steps of the study, e.g., study design, data collection, and result analysis. In this perspective article, the authors first review the literature on the following topics: scientific thinking, reasoning, medical reasoning, literature-based discovery, and a field study to explore scientific thinking and discovery. Over the years, research on scientific thinking has made excellent progress in cognitive science and its applied areas: education, medicine, and biomedical research. However, a review of the literature reveals a lack of original studies on hypothesis generation in clinical research. The authors then summarize their first human participant study exploring data-driven hypothesis generation by clinical researchers in a simulated setting. The results indicate that a secondary data analytical tool, VIADS (a visual interactive analytic tool for filtering, summarizing, and visualizing large health data sets coded with hierarchical terminologies), can shorten the average time participants need to generate a hypothesis and reduce the number of cognitive events needed to generate each hypothesis. As a counterpoint, the study also indicates that hypotheses generated with VIADS received significantly lower ratings for feasibility. Despite its small scale, the study confirmed the feasibility of conducting a human participant study to directly explore the hypothesis generation process in clinical research.
This study provides supporting evidence to conduct a larger-scale study with a specifically designed tool to facilitate the hypothesis-generation process among inexperienced clinical researchers. A larger study could provide generalizable evidence, which in turn can potentially improve clinical research productivity and the overall clinical research enterprise.

Article Details

The Medical Research Archives grants authors the right to publish and reproduce the unrevised contribution in whole or in part at any time and in any form for any scholarly non-commercial purpose with the condition that all publications of the contribution include a full citation to the journal as published by the Medical Research Archives.

NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

Walker HK, Hall WD, Hurst JW, editors. Clinical Methods: The History, Physical, and Laboratory Examinations. 3rd edition. Boston: Butterworths; 1990.

Chapter 2. Collecting and Analyzing Data: Doing and Thinking

David A. Nardone.

Clinicians embrace problem solving as one of their primary goals in patient care and value this skill as the major determinant of clinical competence. Despite these tenets, there is little conscious utilization of diagnostic reasoning strategies in clinical medicine. The focus is usually placed on pathophysiological knowledge base and not on collecting and analyzing data. When the latter are discussed, it is almost always in reference to algorithms, decision analysis, Bayes's theorem, and the clinicopathological conference exercise.

The physician, as decision maker, must possess a propensity for taking risks, a willingness to be dogmatic at times, and a dogged determination to make adequate decisions based on inadequate information. It is necessary to recognize patterns and to conceptualize, correlate, and compare data analytically. Even the experienced problem solver, however, is limited by cognitive strain. Only a few bits and chunks of data can be processed consciously through operative channels simultaneously. The clinician is limited also by the natural history of the disease. For instance, a symptom or sign may not have been manifested as yet; or certain manifestations may occur only in a small percentage of patients, that is, the sensitivity is low. Finally, the success or failure in the diagnostic process is dependent upon the quality of the patient–physician relationship. The physician must be caring and command sufficient competence in the psychosocial aspects of clinical medicine to facilitate the development of a trusting bond and structure an environment that is conducive to interchange. On the other hand, the patient must be cooperative and capable of relating problems, priorities, and expectations.

Reduction of cognitive strain is dependent primarily on implementing certain strategies of doing and thinking. Figure 2.1 and Table 2.1 represent a compilation of these general concepts. Whereas it might be assumed that problem solving begins after the pertinent manifestations from the history and physical examination have been gleaned and collated, it actually begins at the moment the patient and physician make initial contact. At this point, the diagnostic possibilities are infinite. The strategies then enable the clinician to sense the existence of a symptom or a sign (problem identification); formulate potential causes (hypothesis generation); collect data methodically (hypothesis evaluation and hypothesis analysis); and finally, to organize, synthesize, and prioritize the significant clinical findings (hypothesis assembling) for subsequent steps in diagnostic reasoning.

Figure 2.1. Collecting and analyzing data (adapted from Feinstein, 1973).

Table 2.1. Collecting and Analyzing Data.

This series of methods directs the evaluation and interpretation of disease manifestations and the handling of rival hypotheses and discordant data. They determine the content and sequence of questions posed to the patient, of maneuvers performed during the physical examination, and of laboratory procedures utilized. The physician obviously does not proceed rigidly in the manner outlined. There is constant movement back and forth from one modality to another. The positive outcome of such a process is that the clinician can effectively proceed from infinity, the diagnostic unknown so to speak, to a point quite proximal to the diagnosis utilizing the doing and thinking strategies in the history and physical alone. The diagnosis is reached ultimately, in most circumstances, by implementing the same techniques as they pertain to the laboratory.

It is important to note the differences in doing and thinking when considering the issue of collecting and analyzing data. Doing refers to asking questions during the history, performing both general and specific maneuvers in the physical examination, and performing appropriate laboratory procedures. Thinking strategies reflect the intellectual tasks required throughout the encounter. The clinician continually generates and reformulates hypotheses, grapples with concepts of choosing appropriate labels or manifestations, and assembles each symptom and sign elicited in the history, physical, and laboratory into problem lists and diagnostic impressions. Thus, thinking forms the basis for all the action-oriented (doing) strategies.

- Problem Identification

One cannot solve a problem without first determining that it exists. In the earliest stages of the history, the clinician elicits the chief complaint and other health concerns. This technique of symptom and problem listing provides the interviewer with diagnostic leads to generate hypotheses and assists in prioritizing the patient's concerns so the problem solver can grasp the big picture and appreciate any potential interrelationship between the various symptoms and problems identified. Another advantage of symptom listing is that it may avoid the dilemma posed by the patient with a positive review of systems.

As symptoms are evaluated and analyzed, other problems are frequently uncovered. Consider a patient who presents with joint pains. During review of this problem, the physician learns that salicylate therapy alleviated the symptoms but was discontinued. Querying the patient discloses that there was an episode of black stools. Thus, the additional problem of melena is identified.

Obviously, there is no correlate of symptom listing in the physical and laboratory areas. However, problems are identified in a fashion similar to the patient with melena above. As an example, a clinician has interviewed a patient with chest pain and has a strong suspicion that it represents angina pectoris. During routine cardiac examination, the patient is found to have a systolic crescendo–decrescendo murmur heard best at the second right intercostal space and radiating to the carotid vessels. Heretofore, aortic stenosis had not been a problem. As for the laboratory, consider a woman who presents with peripheral edema. Neither the history nor the physical examination supports a cardiac, hepatic, venous, or renal cause. Preliminary laboratory investigation includes obtaining a serum albumin level to test the hypothesis of decreased colloid oncotic pressure from liver disease and a urinalysis to confirm or eliminate the possibility of nephrotic syndrome. Both are negative, but the urine does contain moderate amounts of glucose. The possibility of diabetes mellitus now enters this patient's medical profile.

- Hypothesis Generation

Formulation and revision of hypotheses are constant features of diagnostic reasoning and pervade the entire encounter (Fig. 2.1). This is somewhat contrary to what is commonly believed, since hypothesis generation is usually ascribed to the history. Hypotheses may be general and refer to topographic parts of the anatomy such as domains (organ, system, region, channel) and foci (a subset of domain). When domains are diseased, certain symptoms and signs emanate. Hypotheses may be specific as well and refer to certain explicatory sets (Table 2.1). These sets may be further categorized into disorders (congestive heart failure), derangements (myocardial infarction), pathoanatomic entities (coronary thrombosis), and pathophysiologic entities (hyperlipoproteinemia). The more specific the symptom or sign elicited, the better the chance of activating specific hypotheses. For instance, nausea, inspiratory rales, and an elevated sedimentation rate are nonspecific, whereas syncope, S3 gallop, and heavy proteinuria are specific. The latter three examples evoke a narrower differential diagnosis. Some clinical manifestations may even be pathognomonic, that is, only one hypothesis fits. Consider the significance of paroxysmal nocturnal dyspnea, Cheyne-Stokes respirations, an arterial plaque in the fundus, etc. The seasoned practitioner more reliably generates specific hypotheses.

Hypothesis generation is predicated on informed intuition. It is imaginative and to a great extent subconscious. Frequently, armed with the mere knowledge of age, sex, and chief complaint, the clinician can entertain general and specific hypotheses that implicate common, reversible, and even exotic disease states. In fact, early hypothesis generation is the rule. Nevertheless, this is more readily achievable if the case is familiar. Conversely, with an unfamiliar case, effective hypothesis generation is often delayed until a higher percentage of the complete data base is collected. In this latter situation, it is best to concentrate on topographic and not explicatory sets.

Typically only a few hypotheses can be entertained at any time. Clinical findings elicited during the medical interview and physical examination generate the most hypotheses, but positive laboratory data contribute very little to generation of new hypotheses. Usually laboratory procedures are utilized to confirm or reject hypotheses. The number of hypotheses generated depends on the experience of the clinician.

- Hypothesis Evaluation

This is the strategy in which the clinician obtains the patient's story and performs the core physical and laboratory examinations in order to clarify and refine hypotheses generated to date. The major elements of hypothesis evaluation are characterization (doing) and choosing manifestations (thinking). Ultimately, these contribute in a meaningful way to the reformulation of hypotheses for appropriate analysis later. To this point, there has been identification of problems and generation of hypotheses. In order to resolve a problem of unknown cause, as is often the case at the bedside, the physician is confronted with the decision either to search in a nonbiased manner for information through hypothesis evaluation or proceed directly with hypothesis analysis. The option of evaluation grants the potential to convert an open-ended problem into one that is more defined, and in the history dramatically increases the probability of eliciting affirmative responses that are of significantly greater value than when directly testing hypotheses. If one resorts to hypothesis analysis immediately, there is a certain risk to assume. On the one hand, the problem in question may be solved promptly. If the result is negative or not particularly helpful, however, then premature closure is likely and very little has been accomplished.

The following two cases are illustrative: A 72-year-old man presents with progressive dyspnea. No doubt the topographic hypotheses of cardiac and pulmonary causes of dyspnea come to mind immediately. Perhaps such explicatory set hypotheses as chronic obstructive pulmonary disease and congestive heart failure are entertained. Hypothesis evaluation dictates that the physician obtain a clearer picture of dyspnea by determining the circumstances and characteristics of dyspnea (What? How? When? Where?), whereas hypothesis analysis would cause the clinician to query the patient immediately about tobacco usage and a prior history of myocardial infarction, etc.

In the second case, a 47-year-old man consults his physician for anorexia, weight gain, and increased abdominal girth. There is a strong suspicion of heavy alcohol intake. The examining physician may choose to evaluate the patient thoroughly by a careful and methodical examination of all core systems or resort to a hypothesis-driven examination where only the supraclavicular fossae are palpated (neoplastic nodes), the abdomen is inspected for distention (ascites) and palpated for masses (hepatosplenomegaly) and fluid wave (ascites), the skin is checked for spider angiomas, the breasts are examined for gynecomastia, and the testicles are palpated for atrophy. The latter three represent direct testing for potential complications from alcoholism.

Characterization

The hallmarks of characterization are chronology, severity, influential factors, and expert witness. Chronology is applicable to the interview only and is the crux of any present illness. Just as virtually every pathophysiologic process has a beginning, an intermediate stage, and current status, so does each clinical manifestation of disease. Frequently just determining the chronology of a symptom carries clinical significance for diagnostic purposes. Consider the implications of the 45-year-old woman with intermittent disabling headaches for 22 years versus the patient who has suffered similar headaches but only for the last 2 weeks.

Severity is an index of the magnitude, progression, and impact of the disease on the patient's lifestyle. This technique assists the clarification process immeasurably. Reflect on the significance of the following: (1) a patient with exertional and nonexertional chest pain who consumes 5 to 10 sublingual nitroglycerin tablets per day and is unable to work; (2) a patient with longstanding peptic ulcer disease who presents with an exacerbation and on physical examination is found to have marked epigastric involuntary guarding; and (3) the patient with progressive dyspnea whose pulmonary function testing reveals marked airflow obstruction on all parameters.

Precipitating events, alleviating elements, exacerbating stimuli, and associated symptoms or signs form the components of influential factors. These are well-known aspects of symptom characterization in the present illness but perhaps not appreciated when performing physical examination maneuvers and laboratory procedures. For instance, when examining an elderly woman who injured her hip in a fall, palpation and observation reveal that the pain is partially alleviated with hip flexion, exacerbated with other movements, and that there are associated signs of adductor muscle spasm and external rotation of the hip. As for the laboratory, a 41-year-old man presents with substernal pressure-like chest pain occurring more commonly at rest than after exertion. Both the physical examination and resting electrocardiogram are normal. During a treadmill electrocardiogram, 2 mm of ST depression developed in the inferior leads (precipitated), one episode of six-beat atrial tachycardia was observed (associated), and all changes reverted to baseline 6 minutes after completion of the procedure (alleviated).

The expert witness technique is a method to validate, to collect additional information of diagnostic importance, and to assist in the determination of severity. The physician implements this strategy during the interview with the patient, when communicating with family, friends, ambulance technicians, nurses, etc., and during the physical and laboratory examinations by requesting informal and formal second-opinion consultations from colleagues. There are distinct advantages in the diagnostic and therapeutic framework when a critical finding is confirmed by a trusted associate. Is there asymmetry of the supraclavicular fossae? Is this nevus suspicious for neoplasm? Do you see an infiltrate in the lingula? Are these atypical lymphocytes? As a result of such exchange, clinical certainty is increased and the probability of premature closure is lessened.

Choosing Manifestations

There is a constant interplay between characterization and choosing manifestations. The latter is an intermediate step of hypothesis evaluation in which symptoms, signs, and problems are translated into medically meaningful terms with appropriate pathophysiological significance. Two categories exist under choosing manifestations: labeling and deviance.

Labeling permits the matching of symptoms and signs with accepted medical terminology. Accuracy depends on the clinician's and patient's ability to perceive, interact, and respond to the other individual's verbal comments. When examining the patient, the physician's psychomotor skills, perception, and interpretation of findings are necessary to label correctly. Frequently, when describing or inscribing physical findings, they are not expressed literally and only the interpretative statement is made. The term "spider angiomas" quite adequately and completely accounts for the description: "there are multiple erythematous dot-like lesions with serpiginous processes radiating in several different directions; they blanch on pressure and refill centrally when the pressure is released." The exception is the situation when findings cannot be labeled because the clinician is not knowledgeable enough to do so. In a laboratory study, the process is the same as in the physical examination despite the fact that labeling may be the province of a consultant. As an example, a radiologic procedure would probably be interpreted more expertly by a radiologist. Obviously, there are many pitfalls and errors in labeling because the process is complex, subjective, and dynamic.

The technique of deviance applies to understanding the ranges of normalcy and abnormality in assessing the significance of symptoms and signs being evaluated. Discussion of laboratory normal ranges is contained elsewhere in this book. There is considerable difficulty in assigning normalcy and abnormality to clinical manifestations collected during the history and physical. The physician's challenge in the history depends not only on his or her clinical skills, zeal, and biases but also on the patient's level of cooperation and memory. Thus, without attention to details, misperceptions and verbal misstatements occur. Likewise, signs elicited during the physical examination are at best semiquantitative. The danger, of course, is either overinterpretation or underinterpretation of manifestations. The consequences of the former are needless worry, unnecessary investigations, operations, treatments, and excessive costs. In the opposite situation, an underlying disease is not detected.

There is rarely difficulty in determining the abnormal state when marked deviations from normal exist. It is when the manifestation is less than severe that the clinician has a dilemma. It is no wonder that items in the history and physical are reviewed and repeated, that the same laboratory procedure is reordered, and that an advanced level test of a more invasive and costly nature is requested. Furthermore, these situations typically result in soliciting second opinions from other experts.

- Hypothesis Analysis

Whether it be soliciting a response during the history, performing a physical examination maneuver, or utilizing a laboratory test, the physician proceeds from an open-ended data collection mode in characterization to direct and specific ones in hypothesis analysis. Implicit in this definition is either a yes–no response in the history or a positive–negative result in the physical and laboratory examinations. Although clinical experience and knowledge of pathophysiology are central to any aspect of the patient–physician encounter, they are infinitely more essential when testing hypotheses. Frequently clinicians may characterize with only topographic-based hypotheses in mind, but it is impossible to analyze without explicatory sets relating to explicit entities, etiologies, and complications at hand. The intellectual preparatory mechanisms embodied in analyzing result in questions, maneuvers, and procedures that reflect more synthesis, development, and creativity. While employing this strategy, the clinician focuses on solidifying or refuting hypotheses entertained. The reward for the yes–no response and the positive–negative result justifies any inherent risk assumed by thwarting spontaneous symptom-related statements from the patient and by sacrificing detailed evaluation of every aspect of the physical and laboratory examinations. The danger of premature closure is no longer a factor.

Pertinent Systems Review

The presence of a symptom, problem, or physical sign, already localized to a domain and focus, requires the search for other symptoms and signs that, if present, may be manifestations of disease in the same domain. This is explorative direct questioning and examining in a nondirective manner. The clinician assumes that pursuing symptoms and signs within the same system is more likely to yield positive results than embarking on a questioning and examining process in an unrelated system area. It also compensates for the physician's fallibility in remembering and recognizing all disease patterns, provides additional thinking time, and permits one to rule out more remote, or even more common, possibilities.

Pathophysiology

This strategy depends on that basic fund of knowledge related to etiologies and complications of disease processes. In this context, as one applies the principles of the history, physical, and laboratory medicine to resolve a patient's problems, the pervasive and primary concern is to affix causality. This is the essence of the physician's expectations as a problem solver. Inherent in utilizing the technique of pathophysiology is that no question, maneuver, or procedure can be effected without a specific hypothetical explicatory set in mind (Figure 2.1, Table 2.1). Searching for etiological clues assists the clinician in isolating the problem according to the pathoanatomic and pathophysiologic entities, whereas seeking complications facilitates focusing on derangements and disorders. Note that the latter two, as explicatory sets, do explain symptoms and signs, but rarely, if ever, account for ultimate causation. For instance, when determining that a patient has paroxysmal nocturnal dyspnea, the theory is that congestive heart failure is responsible for the symptom. As a disorder, however, congestive heart failure is a complication of a more basic disease mechanism, perhaps myocardial infarction secondary to atherosclerosis, which in itself may be caused by acquired hyperlipidemia, etc.

Clinician Priority

This particular subsection of hypothesis analysis addresses techniques that transcend purity in diagnostic reasoning. In all preceding sections the goal was strictly the pursuit of a diagnosis. The concept of clinician priority attempts to focus the diagnostic process into the practical perspective of clinical medicine. These techniques are utilized subconsciously throughout the encounter, but are rarely appreciated as such by the clinician. They represent the "art of medicine" and "picking up the game" strategies that one assimilates, perhaps by osmosis, during clinical training apprenticeships and through continuing professional experience. There are five such categories: urgency, uncertainty, threshold, reversibility, and commonness.

When a clinician acts out of urgency, it is because the presence of a particular symptom or sign implies that immediate diagnostic or therapeutic intervention is indicated. It is action oriented. Attention is directed toward the acutely ill and potentially acutely ill, who may have serious life-threatening or fatal diseases. Accordingly, uncommon entities may be ranked higher than those with greater frequency of occurrence. With this in mind, it is easy to comprehend why the physician chooses to elicit the presence of rigors in a patient with fever, dysuria, and flank pain ("Is the patient bacteremic?") and dedicates more than a few moments to observe the same patient carefully for pilo-erection and decreased skin perfusion. There are even occasions in which the search for a particular complication (hypovolemia from diarrhea) provides a much stronger stimulus for the clinician than the cause of the diarrhea itself.

Coping with uncertainty at the bedside is universal. It plagues the physician, but protects the patient. Basically this strategy forces one to collect more historical, physical examination, and laboratory data than may be necessary because the consequences of diagnostic error without doing so are too great. A decision must be made at what level uncertainty is tolerable. The challenge is to be conservative and to avoid errors of omission. It is as if the fear of clinical consequences and their attendant penalties enables clinicians to be more effective. Unfortunately, there is excessive reliance on laboratory medicine. It is infinitely more acceptable to implement the strategy of uncertainty to its fullest during history taking and the physical examination, when patient risk is virtually nonexistent, but it is not necessarily more judicious when utilizing laboratory procedures.

Threshold is the converse of uncertainty. It is that point at which further data could be collected but neither a positive nor a negative response would contribute to the analytic process or change the predictive value significantly. Uncertainty prevails until that critical point when the remaining doubt can be tolerated. The dilemma is whether to continue being driven by uncertainty or to invoke threshold. If the threshold is set too high, then redundant, and often needless, information is sought. When set too low, the physician may negate an opportunity to make a diagnosis or institute therapy.
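The idea of threshold can be made concrete with Bayes's theorem, which the chapter names as a common frame for analyzing data. The following is a minimal Python sketch, not a method given in this chapter. The function names, the single `treat_above` cutoff, and every probability are hypothetical values chosen only for illustration.

```python
def posterior(prior, sensitivity, specificity, positive):
    """Probability of disease after one finding, by Bayes's theorem."""
    if positive:
        true_hits = sensitivity * prior
        false_hits = (1 - specificity) * (1 - prior)
    else:
        true_hits = (1 - sensitivity) * prior
        false_hits = specificity * (1 - prior)
    return true_hits / (true_hits + false_hits)


def finding_can_change_decision(prior, sensitivity, specificity, treat_above):
    """Return True if either result would move the clinician across the cutoff.

    treat_above is an assumed probability at or above which the clinician acts.
    """
    acts_now = prior >= treat_above
    for positive in (True, False):
        acts_after = posterior(prior, sensitivity, specificity, positive) >= treat_above
        if acts_after != acts_now:
            return True
    return False


# Hypothetical finding with 70% sensitivity and 70% specificity.
confident = finding_can_change_decision(0.95, 0.70, 0.70, treat_above=0.50)
uncertain = finding_can_change_decision(0.40, 0.70, 0.70, treat_above=0.50)
print(confident, uncertain)
```

Under these assumed numbers, the same finding cannot alter the decision when the prior probability is already 0.95, which corresponds to invoking threshold, but it can when the prior is 0.40, where uncertainty still drives data collection.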

The emphasis on reversibility or treatability embodies the essence of medicine, that is, cure the patient. Relevant data must be collected to aid therapeutic decision making. If presented with two competing hypotheses, the one with the greatest potential for treatment will be ranked higher. The payoff for doing so is greater. The following case exemplifies this: A 59-year-old man has had progressively worsening dyspnea for 2½ years without associated wheezing. Physical examination reveals findings compatible with chronic obstructive pulmonary disease. Pulmonary function spirometry with and without bronchodilators is ordered despite the fact the patient has clinical evidence of irreversible disease. It is hoped that a reactive obstructive component, responsive to bronchodilator therapy, will be uncovered.

The adage "common things are common" aptly describes the technique of commonness. Of those techniques discussed previously, it is most likely to be in the clinician's awareness. The issue is one of good sense. It is not helpful to entertain an uncommon hypothesis unless there is good reason, as when invoking urgency and uncertainty. Thus, in the patient with abdominal pain in whom pancreatitis is under consideration, why collect data about symptoms relevant to renal disease, emboli, vasculitis, etc., when it is more appropriate to investigate the presence of symptoms of cholelithiasis and alcohol intake, both of which account for 95% of the cases of acute pancreatitis? Similarly, in a patient with an enlarging abdomen and swollen ankles, it will be a much higher priority to check for signs of cardiac and hepatic diseases as opposed to those implicating inferior vena cava obstruction.

Case Building

This process involves both consolidation of clinical data and refinement and modification of diagnostic possibilities to assist in solidifying hypotheses, refuting them, and distinguishing between two likely candidates. Elimination enables one to disprove a hypothesis in a convincing manner by seeking negative responses and results to questions and maneuvers of high sensitivity (true positive rate) for a given hypothesis. Thus it is difficult to entertain seriously the diagnosis of infectious mononucleosis without sore throat, reactive airway disease without prolonged expiration, and nephrotic syndrome in the patient without proteinuria. Discriminating between two closely related hypotheses is a frequent challenge. In the patient with several episodes of hematochezia, determining whether the blood is on the outside of the stool or mixed in with the stool helps to distinguish between anal disease and luminal pathology more proximal to the anus. A comparable example in the physical examination is attempting to transilluminate a scrotal mass, and in the laboratory arena when ordering a serum gamma-glutamyltransferase in a patient with elevated alkaline phosphatase. Finally, with confirmation one attempts to clinch a diagnosis by seeking clinical manifestations of high specificity despite the fact that one or more bits of data already support such. Thus, discerning that a patient has low back pain that radiates to the thigh and lateral calf is suggestive of radiculopathy. But determining that this pain is associated with numbness and that it is exacerbated by coughing, sneezing, and straining is even more convincing. Similarly in the physical examination of a patient with dyspnea on exertion, the findings of peripheral edema, hepatomegaly, inspiratory rales, and distended neck veins support the contention that left-sided heart failure is the cause, but the finding of an S3 gallop is definitive and confirms the suspicion.
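Elimination and confirmation can be illustrated numerically with likelihood ratios, a standard way of expressing how sensitivity and specificity shift the probability of a diagnosis. The sketch below is an illustration under stated assumptions, not part of the chapter. The function names are hypothetical, and the sensitivity, specificity, and pre-test probability values are invented and do not describe any real finding.

```python
def likelihood_ratios(sensitivity, specificity):
    """Return (LR+, LR-) for a finding with the given test characteristics."""
    lr_positive = sensitivity / (1 - specificity)
    lr_negative = (1 - sensitivity) / specificity
    return lr_positive, lr_negative


def post_test_probability(pre_test, likelihood_ratio):
    """Convert probability to odds, apply the likelihood ratio, convert back."""
    pre_odds = pre_test / (1 - pre_test)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


pre_test = 0.30  # assumed probability of the hypothesis before the finding

# Elimination: a highly sensitive finding (assumed 98%) that is absent.
_, lr_negative = likelihood_ratios(sensitivity=0.98, specificity=0.50)
after_absent = post_test_probability(pre_test, lr_negative)

# Confirmation: a highly specific finding (assumed 98%) that is present.
lr_positive, _ = likelihood_ratios(sensitivity=0.40, specificity=0.98)
after_present = post_test_probability(pre_test, lr_positive)

print(f"after absent sensitive finding:  {after_absent:.3f}")
print(f"after present specific finding: {after_present:.3f}")
```

With these assumed values, the absence of the sensitive finding drives the probability close to zero, while the presence of the specific finding raises it well above the starting point, which mirrors the roles the text assigns to elimination and confirmation.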

Hypothesis Assembling

This element in the sequential strategic process of diagnostic reasoning encompasses the synthesis and integration of multiple clinical clues from the vast amount of data collected. Assembling is governed by the principle that a hierarchical organizational structure of facts exists in the scheme of diagnosis. The stimuli are to reduce the scope of the problem and sort out the complexities encountered to date. Ultimately, a working problem list will be developed to guide any further investigative pursuits and therapeutic management. To be functional, the problem list must be both coherent and adequate in the context of the patient being evaluated. In history taking, hypothesis assembling encompasses the formulation of a narrowed set of hypotheses to permit further characterization and analysis during the physical examination and laboratory testing. At the conclusion of the physical examination, all clues and elicited manifestations from the history and physical undergo the same process to direct laboratory data collection. Finally, after all appropriate laboratory tests are completed, the problem list is transformed into the refined product of impressions and diagnoses.

Many positive and negative items elicited at the bedside are not relevant and must be filtered out. Pertinence determines which normal and abnormal manifestations will be retained or disregarded and which will require further attention. In general, findings with pathophysiological significance, especially those with a high clinician priority, will be kept. Likewise, symptoms and signs will be retained if there is a potential for inclusion in a particular explicatory set. For example, an 85-year-old man has a 7-day history of nausea, vomiting (once daily), diarrhea (five to ten times daily), headache, nasal stuffiness, and dizziness. He was treated with antibiotics for a carbuncle 4 weeks ago and has a past history of left inguinal herniorrhaphy and cholecystectomy. On physical examination, he had a 25 mm Hg orthostatic drop in systolic blood pressure, poor skin turgor, scarred tympanic membranes, anisocoria, S4 gallop, right upper quadrant and left inguinal scars, active bowel sounds, diffuse abdominal tenderness, perianal erythema, perianal skin tag, and a mildly enlarged prostate. Are headache, nasal stuffiness, left inguinal herniorrhaphy, scarred tympanic membranes, anisocoria, etc., pertinent? Are they truly clinical problems worthy of note at this time?

Clustering or lumping is the aggregation of several symptoms and signs into recognizable patterns that fit under the sets of disorders, derangements, pathoanatomic entities, and pathophysiologic entities. They may be related to one another by cause and effect (dependent clustering) or by virtue of their clinical significance (independent clustering). In the former category polyuria, polydipsia, and polyphagia are classic symptoms for diabetes mellitus. The osmotic effect of glucose is responsible for polyuria and calorie loss, which cause both polydipsia and polyphagia. In the independent category, one can cluster orthopnea, paroxysmal nocturnal dyspnea, distended neck veins, positive hepatojugular reflux, S3 gallop, and peripheral edema under the umbrella of congestive heart failure. Lumping then is consistent with the law of parsimony, which dictates that clinicians should make as few diagnoses as possible. This is monopathic reasoning.

Frequently patients have many positive symptoms and signs. Whereas clustering supports economy in diagnosis, splitting promotes polypathic reasoning with the retention of certain clinical manifestations as separate problem entities because inappropriate aggregation may jeopardize the diagnostic and therapeutic processes. The dilemma posed to physicians by splitting is similar to uncertainty in some respects. There is a fear of missing a diagnosis. In the 85-year-old patient described above under pertinence, orthostatic hypotension was found on physical examination. There is a high probability that this objective sign is caused by vomiting and diarrhea. Thus, they could be lumped together as cause and effect. In so doing, however, the clinician is at risk of excluding a completely separate and important problem, namely, extracellular volume depletion. Splitting prevents this from happening.

Problem listing is the identification of a formalized working set of symptoms and signs, aggregated symptoms and signs, as well as hypothesized derangements and disorders. Either by lumping or splitting, the clinician must account for all positive findings elicited in the history, physical, and laboratory sections of the patient's evaluation. In order to qualify for listing, each must have an importance diagnostically, therapeutically, or both.

Referring once again to the patient described earlier, original laboratory work-up revealed azotemia (blood urea nitrogen, 38 mg/dl; creatinine, 2.4 mg/dl), hypoalbuminemia (albumin, 3.1 g/dl), elevated alkaline phosphatase (alkaline phosphatase, 212 U/L), hyperuricemia (uric acid, 9.2 mg/dl), and moderate stool leukocytes. Thus an appropriate problem list for this patient at the end of the history might include (1) diarrhea, (2) headache, (3) dizziness, and (4) prior surgeries. After the physical examination, the list might be revised to state (1) diarrhea, (2) dizziness, (3) prior surgeries, (4) extracellular volume depletion, (5) perianal erythema, and (6) mildly enlarged prostate. With the knowledge of the laboratory data the refined version might be listed accordingly: (1) inflammatory diarrhea, (2) extracellular volume depletion, (3) dizziness, (4) perianal erythema, (5) mildly enlarged prostate, (6) azotemia, (7) hypoalbuminemia, (8) hyperuricemia, and (9) elevated alkaline phosphatase. Obviously, the patient's problems are not totally resolved, but they are certainly narrowed to the point that focused supportive therapy can be administered and second-line hypothesis-driven laboratory testing can be planned ultimately to confirm definitive diagnoses.

Collecting and analyzing data involve problem identification, generation of general and specific hypotheses, methodical information gathering during evaluation and analysis, and assembling of pertinent clinical clues as problems to direct further investigation and treatment. This process is a continuum and quite dynamic. It never seems to end because the "patient" host and disease are changing variables. The clinician must proceed in a flexible manner throughout the entire framework, based on judgment, learned behavior, and knowledge of pathophysiologic principles.


- Cite this Page Nardone DA. Collecting and Analyzing Data: Doing and Thinking. In: Walker HK, Hall WD, Hurst JW, editors. Clinical Methods: The History, Physical, and Laboratory Examinations. 3rd edition. Boston: Butterworths; 1990. Chapter 2.


Scientific hypothesis generation process in clinical research: a secondary data analytic tool versus experience study protocol


- ORCID record for Xia Jing


Background: Scientific hypothesis generation is a critical step in scientific research that determines the direction and impact of any investigation. Despite its vital role, we have limited knowledge of the process itself, hindering our ability to address some critical questions.

Objective: To what extent can secondary data analytic tools facilitate scientific hypothesis generation during clinical research? Are the processes similar in developing clinical diagnoses during clinical practice and developing scientific hypotheses for clinical research projects? We explore the process of scientific hypothesis generation in the context of clinical research. The study is designed to compare the role of VIADS, our web-based interactive secondary data analysis tool, and the experience levels of study participants during their scientific hypothesis generation processes.

Methods: Inexperienced and experienced clinical researchers are recruited. In this 2×2 study design, all participants use the same data sets during scientific hypothesis-generation sessions, following pre-determined scripts. The inexperienced and experienced clinical researchers are randomly assigned into groups with and without using VIADS. The study sessions, screen activities, and audio recordings of participants are captured. Participants use the think-aloud protocol during the study sessions. After each study session, every participant is given a follow-up survey, with participants using VIADS completing an additional modified System Usability Scale (SUS) survey. A panel of clinical research experts will assess the scientific hypotheses generated based on pre-developed metrics. All data will be anonymized, transcribed, aggregated, and analyzed.

Results: This study is currently underway. Recruitment is ongoing via a brief online survey. The preliminary results show that study participants can generate a few to over a dozen scientific hypotheses during a 2-hour study session, regardless of whether they use VIADS or other analytic tools. A metric to assess scientific hypotheses within a clinical research context more accurately, comprehensively, and consistently has also been developed.

Conclusion: The scientific hypothesis-generation process is an advanced cognitive activity and a complex process. Based on our current results, clinical researchers can quickly generate initial scientific hypotheses from data sets and prior experience. However, refining these scientific hypotheses is much more time-consuming. To uncover the fundamental mechanisms of generating scientific hypotheses, we need breakthroughs that capture thinking processes more precisely.

Competing Interest Statement

The authors have declared no competing interest.

Clinical Trial

This study is not a clinical trial per NIH definition.

Funding Statement

The project is supported by a grant from the National Library of Medicine of the United States National Institutes of Health (R15LM012941) and partially supported by the National Institute of General Medical Sciences of the National Institutes of Health (P20 GM121342). The content is solely the author's responsibility and does not necessarily represent the official views of the National Institutes of Health.

Author Declarations

I confirm all relevant ethical guidelines have been followed, and any necessary IRB and/or ethics committee approvals have been obtained.

The details of the IRB/oversight body that provided approval or exemption for the research described are given below:

The study has been approved by the Institutional Review Board (IRB) at Clemson University (IRB2020-056).

I confirm that all necessary patient/participant consent has been obtained and the appropriate institutional forms have been archived, and that any patient/participant/sample identifiers included were not known to anyone (e.g., hospital staff, patients or participants themselves) outside the research group so cannot be used to identify individuals.

I understand that all clinical trials and any other prospective interventional studies must be registered with an ICMJE-approved registry, such as ClinicalTrials.gov. I confirm that any such study reported in the manuscript has been registered and the trial registration ID is provided (note: if posting a prospective study registered retrospectively, please provide a statement in the trial ID field explaining why the study was not registered in advance).

I have followed all appropriate research reporting guidelines and uploaded the relevant EQUATOR Network research reporting checklist(s) and other pertinent material as supplementary files, if applicable.

Data Availability

This manuscript is the study protocol. After we analyze and publish the results, transcribed, aggregated, de-identified data can be requested from the authors.


Subject Area

- Health Informatics



Hypothesis Testing – A Deep Dive into Hypothesis Testing, The Backbone of Statistical Inference

- September 21, 2023

Explore the intricacies of hypothesis testing, a cornerstone of statistical analysis. Dive into methods, interpretations, and applications for making data-driven decisions.

In this blog post we will learn:

1. What is Hypothesis Testing?
2. Steps in Hypothesis Testing
   - 2.1. Set up Hypotheses: Null and Alternative
   - 2.2. Choose a Significance Level (α)
   - 2.3. Calculate a test statistic and P-Value
   - 2.4. Make a Decision
3. Example: Testing a new drug
4. Example in Python

1. What is Hypothesis Testing?

In simple terms, hypothesis testing is a method used to make decisions or inferences about population parameters based on sample data. Imagine being handed a die and asked if it’s biased. By rolling it a few times and analyzing the outcomes, you’d be engaging in the essence of hypothesis testing.

Think of hypothesis testing as the scientific method of the statistics world. Suppose you hear claims like “This new drug works wonders!” or “Our new website design boosts sales.” How do you know if these statements hold water? Enter hypothesis testing.

2. Steps in Hypothesis Testing

- Set up Hypotheses: Begin with a null hypothesis (H0) and an alternative hypothesis (H1, sometimes written Ha).

- Choose a Significance Level (α): Typically 0.05, this is the probability of rejecting the null hypothesis when it’s actually true. Think of it as the chance of accusing an innocent person.

- Calculate Test Statistic and P-Value: Gather evidence (data) and calculate a test statistic.

- P-value: This is the probability of observing data at least as extreme as yours, given that the null hypothesis is true. A small p-value (typically ≤ 0.05) suggests the data are inconsistent with the null hypothesis.

- Decision Rule: If the p-value is less than or equal to α, you reject the null hypothesis in favor of the alternative.

2.1. Set up Hypotheses: Null and Alternative

Before diving into testing, we must formulate hypotheses. The null hypothesis (H0) represents the default assumption, while the alternative hypothesis (H1) challenges it.

For instance, in drug testing, H0: “The new drug is no better than the existing one,” H1: “The new drug is superior.”

2.2. Choose a Significance Level (α)

You collect and analyze data to test H0 against H1. Based on your analysis, you decide whether to reject the null hypothesis in favor of the alternative or fail to reject it. Note that failing to reject the null hypothesis is not the same as accepting it: the data may simply be too weak to show an effect.

The significance level, often denoted by $α$, represents the probability of rejecting the null hypothesis when it is actually true.

In other words, it’s the risk you’re willing to take of making a Type I error (false positive).

Type I Error (False Positive):

- Its probability is symbolized by the Greek letter alpha (α).

- Occurs when you incorrectly reject a true null hypothesis. In other words, you conclude that there is an effect or difference when, in reality, there isn’t.

- The probability of making a Type I error is set by the significance level of a test. Commonly, tests are conducted at the 0.05 significance level, which means there’s a 5% chance of making a Type I error when the null hypothesis is true.

- Commonly used significance levels are 0.01, 0.05, and 0.10, but the choice depends on the context of the study and the level of risk one is willing to accept.

Example: If a drug is not effective (truth), but a clinical trial incorrectly concludes that it is effective (based on the sample data), then a Type I error has occurred.

Type II Error (False Negative):

- Its probability is symbolized by the Greek letter beta (β).

- Occurs when you fail to reject a false null hypothesis. This means you conclude there is no evidence of an effect or difference when, in reality, there is one.

- The power of a test (1 – β) represents the probability of correctly rejecting a false null hypothesis.

Example: If a drug is effective (truth), but a clinical trial incorrectly concludes that it is not effective (based on the sample data), then a Type II error has occurred.

Balancing the Errors :

In practice, there’s a trade-off between Type I and Type II errors. Reducing the risk of one typically increases the risk of the other. For example, if you want to decrease the probability of a Type I error (by setting a lower significance level), you might increase the probability of a Type II error unless you compensate by collecting more data or making other adjustments.

It’s essential to understand the consequences of both types of errors in any given context. In some situations, a Type I error might be more severe, while in others, a Type II error might be of greater concern. This understanding guides researchers in designing their experiments and choosing appropriate significance levels.
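To see the trade-off in numbers, here is a small simulation sketch using only the Python standard library. Everything in it is an assumption made for illustration: the helper names, a simple z-test with known standard deviation, a sample size of 30, and a true effect of 0.4 when the null hypothesis is false. It estimates how often each type of error occurs at three significance levels.

```python
import random
from statistics import NormalDist, mean


def z_test_p_value(sample, mu0, sigma):
    """Two-sided p-value for H0: population mean == mu0 (sigma known)."""
    z = (mean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def rejection_rate(true_mean, alpha, n=30, trials=2000, seed=0):
    """Fraction of simulated experiments in which H0: mean == 0 is rejected."""
    rng = random.Random(seed)  # fixed seed: every alpha sees the same samples
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
        if z_test_p_value(sample, mu0=0.0, sigma=1.0) <= alpha:
            rejections += 1
    return rejections / trials


for alpha in (0.10, 0.05, 0.01):
    type_1 = rejection_rate(true_mean=0.0, alpha=alpha)      # H0 is true
    type_2 = 1 - rejection_rate(true_mean=0.4, alpha=alpha)  # H0 is false
    print(f"alpha={alpha:.2f}  Type I rate={type_1:.3f}  Type II rate={type_2:.3f}")
```

As α shrinks, the estimated Type I rate falls with it, while the Type II rate climbs. Raising `n` in the sketch is the "collect more data" lever mentioned above.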

2.3. Calculate a test statistic and P-Value

Test statistic: A test statistic is a single number that helps us understand how far our sample data is from what we’d expect under a null hypothesis (a basic assumption we’re trying to test against). Generally, the larger the test statistic in absolute value, the more evidence we have against our null hypothesis. It helps us decide whether the differences we observe in our data are due to random chance or if there’s an actual effect.

P-value: The P-value tells us how likely we would be to get our observed results (or something more extreme) if the null hypothesis were true. It’s a value between 0 and 1.

- A smaller P-value (typically below 0.05) means that the observation is rare under the null hypothesis, so we might reject the null hypothesis.

- A larger P-value suggests that what we observed could easily happen by random chance, so we might not reject the null hypothesis.

2.4. Make a Decision

Relationship between $α$ and P-Value

When conducting a hypothesis test:

We first choose a significance level $\alpha$, before looking at the data.

We then calculate the test statistic from our sample data and, from it, the p-value.

Finally, we compare the p-value to our chosen $\alpha$:

- If $p\text{-value} \le \alpha$: We reject the null hypothesis in favor of the alternative hypothesis. The result is said to be statistically significant.

- If $p\text{-value} > \alpha$: We fail to reject the null hypothesis. There isn’t enough statistical evidence to support the alternative hypothesis.
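The comparison above is simple enough to write down directly. The following is a minimal sketch, not a full testing library: the function names `two_sided_p_value` and `decide` are made up for this illustration, and the p-value helper assumes the test statistic follows a standard normal distribution under the null hypothesis.

```python
from statistics import NormalDist


def two_sided_p_value(z):
    """P-value for a z statistic, assuming a standard normal under H0."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


def decide(p_value, alpha=0.05):
    """Apply the decision rule: reject H0 when p-value <= alpha."""
    return "reject H0" if p_value <= alpha else "fail to reject H0"


for z in (0.5, 1.5, 2.5):
    p = two_sided_p_value(z)
    print(f"z = {z:.1f}  p = {p:.3f}  ->  {decide(p)}")
```

Note that α is an argument with a default, which mirrors the idea that you pick it up front and only then look at the p-value.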

3. Example : Testing a new drug.

Imagine we are investigating whether a new drug relieves headaches faster than a placebo.

Setting Up the Experiment: You gather 100 people who suffer from headaches. Half of them (50 people) are given the new drug (let’s call this the ‘Drug Group’), and the other half are given a sugar pill, which doesn’t contain any medication (the ‘Placebo Group’).

- Set up Hypotheses : Before starting, you make a prediction:

- Null Hypothesis (H0): The new drug has no effect. Any difference in healing time between the two groups is just due to random chance.

- Alternative Hypothesis (H1): The new drug does have an effect. The difference in healing time between the two groups is significant and not just by chance.

Calculate Test statistic and P-Value : After the experiment, you analyze the data. The “test statistic” is a number that helps you understand the difference between the two groups in terms of standard units.

For instance, let’s say:

- The average healing time in the Drug Group is 2 hours.

- The average healing time in the Placebo Group is 3 hours.

The test statistic helps you understand how significant this 1-hour difference is. If the groups are large and the spread of healing times in each group is small, then this difference might be significant. But if there’s a huge variation in healing times, the 1-hour difference might not be so special.
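To make that concrete, here is a rough sketch of a two-sample t statistic computed from summary numbers. The 2-hour and 3-hour means and the group size of 50 come from the example above; the standard deviations (0.5 and 5 hours) and the function name `two_sample_t` are assumptions chosen purely for illustration.

```python
import math


def two_sample_t(mean_a, mean_b, sd_a, sd_b, n_a, n_b):
    """Welch-style t statistic from group means, spreads, and sizes."""
    standard_error = math.sqrt(sd_a**2 / n_a + sd_b**2 / n_b)
    return (mean_a - mean_b) / standard_error


# Same 1-hour difference and group sizes, two hypothetical spreads.
tight = two_sample_t(2.0, 3.0, sd_a=0.5, sd_b=0.5, n_a=50, n_b=50)
noisy = two_sample_t(2.0, 3.0, sd_a=5.0, sd_b=5.0, n_a=50, n_b=50)

print(f"small spread: t = {tight:.1f}")
print(f"large spread: t = {noisy:.1f}")
```

With a small spread, the 1-hour gap is about ten standard errors wide. With a large spread, it is only about one standard error wide, which is well within what chance alone could produce.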

Imagine the P-value as answering this question: “If the new drug had NO real effect, what’s the probability that I’d see a difference as extreme (or more extreme) as the one I found, just by random chance?”

For instance:

- P-value of 0.01 means there’s a 1% chance that the observed difference (or a more extreme difference) would occur if the drug had no effect. That’s pretty rare, so we might consider the drug effective.

- P-value of 0.5 means there’s a 50% chance you’d see this difference just by chance. That’s pretty high, so we might not be convinced the drug is doing much.

- If the P-value is less than or equal to $α$ = 0.05: the results are “statistically significant,” and we reject the null hypothesis, concluding that the new drug has an effect.

- If the P-value is greater than $α$ = 0.05: the results are not statistically significant, and we don’t reject the null hypothesis, remaining unsure if the drug has a genuine effect.

4. Example in python

For simplicity, let’s say we’re using a t-test (common for comparing means). Let’s dive into Python:
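Here is a minimal sketch of such a test using only the standard library. The data are simulated, so the healing times, the 0.6-hour spread, and the random seed are assumptions for illustration, not results from a real trial. The helper `welch_t_test` is a name made up for this sketch, and its p-value uses a large-sample normal approximation rather than the exact t distribution.

```python
import math
import random
from statistics import mean, variance


def welch_t_test(a, b):
    """Return (t statistic, approximate two-sided p-value) for two samples."""
    # Standard error of the difference in means (unequal variances allowed).
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    t = (mean(a) - mean(b)) / se
    # Large-sample shortcut: treat t as standard normal under H0.
    p = math.erfc(abs(t) / math.sqrt(2))
    return t, p


# Simulated healing times in hours (assumed values, not real trial data).
rng = random.Random(42)
drug_group = [rng.gauss(2.0, 0.6) for _ in range(50)]
placebo_group = [rng.gauss(3.0, 0.6) for _ in range(50)]

alpha = 0.05
t_stat, p_value = welch_t_test(drug_group, placebo_group)
print(f"t = {t_stat:.2f}, p = {p_value:.2e}")

if p_value <= alpha:
    print("Reject H0: the drug seems to have an effect.")
else:
    print("Fail to reject H0: no convincing evidence of an effect.")
```

If SciPy is installed, `scipy.stats.ttest_ind(drug_group, placebo_group, equal_var=False)` runs the same Welch test and returns a p-value based on the t distribution, which is the better choice for small samples.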

Making a Decision: If the p-value is below 0.05, we’d say, “The results are statistically significant! The drug seems to have an effect!” If not, we’d say, “Looks like the drug isn’t as miraculous as we thought.”

5. Conclusion

Hypothesis testing is an indispensable tool in data science, allowing us to make data-driven decisions with confidence. By understanding its principles, conducting tests properly, and considering real-world applications, you can harness the power of hypothesis testing to unlock valuable insights from your data.



- Open access

- Published: 09 July 2024

Automating psychological hypothesis generation with AI: when large language models meet causal graph

Song Tong (ORCID: orcid.org/0000-0002-4183-8454), Kai Mao, Zhen Huang, Yukun Zhao & Kaiping Peng

Humanities and Social Sciences Communications, volume 11, Article number: 896 (2024)


- Science, technology and society

Leveraging the synergy between causal knowledge graphs and a large language model (LLM), our study introduces a groundbreaking approach for computational hypothesis generation in psychology. We analyzed 43,312 psychology articles using an LLM to extract causal relation pairs. This analysis produced a specialized causal graph for psychology. Applying link prediction algorithms, we generated 130 potential psychological hypotheses focusing on “well-being”, then compared them against research ideas conceived by doctoral scholars and those produced solely by the LLM. Interestingly, our combined approach of an LLM and causal graphs mirrored the expert-level insights in terms of novelty, clearly surpassing the LLM-only hypotheses (t(59) = 3.34, p = 0.007 and t(59) = 4.32, p < 0.001, respectively). This alignment was further corroborated using deep semantic analysis. Our results show that combining an LLM with machine learning techniques such as causal knowledge graphs can revolutionize automated discovery in psychology, extracting novel insights from the extensive literature. This work stands at the crossroads of psychology and artificial intelligence, championing a new enriched paradigm for data-driven hypothesis generation in psychological research.

Introduction

In an age in which the confluence of artificial intelligence (AI) with various subjects profoundly shapes sectors ranging from academic research to commercial enterprises, dissecting the interplay of these disciplines becomes paramount (Williams et al., 2023). In particular, psychology, which serves as a nexus between the humanities and natural sciences, consistently endeavors to demystify the complex web of human behaviors and cognition (Hergenhahn and Henley, 2013). Its profound insights have significantly enriched academia, inspiring innovative applications in AI design. For example, AI models have been molded on hierarchical brain structures (Cichy et al., 2016) and human attention systems (Vaswani et al., 2017). Additionally, these AI models reciprocally offer a rejuvenated perspective, deepening our understanding from the foundational cognitive taxonomy to nuanced esthetic perceptions (Battleday et al., 2020; Tong et al., 2021). Nevertheless, the multifaceted domain of psychology, particularly social psychology, has exhibited a measured evolution compared to its tech-centric counterparts. This can be attributed to its enduring reliance on conventional theory-driven methodologies (Henrich et al., 2010; Shah et al., 2015), a characteristic that stands in stark contrast to the burgeoning paradigms of AI and data-centric research (Bechmann and Bowker, 2019; Wang et al., 2023).

In the journey of psychological research, each exploration originates from a spark of innovative thought. These research trajectories may arise from established theoretical frameworks, daily event insights, anomalies within data, or intersections of interdisciplinary discoveries (Jaccard and Jacoby, 2019). Hypothesis generation is pivotal in psychology (Koehler, 1994; McGuire, 1973), as it facilitates the exploration of multifaceted influencers of human attitudes, actions, and beliefs. The HyGene model (Thomas et al., 2008) elucidated the intricacies of hypothesis generation, encompassing the constraints of working memory and the interplay between ambient and semantic memories. Recently, causal graphs have provided psychology with a systematic framework that enables researchers to construct and simulate intricate systems for a holistic view of “bio-psycho-social” interactions (Borsboom et al., 2021; Crielaard et al., 2022). Yet, the labor-intensive nature of the methodology poses challenges, which requires multidisciplinary expertise in algorithmic development, exacerbating the complexities (Crielaard et al., 2022). Meanwhile, advancements in AI, exemplified by models such as the generative pretrained transformer (GPT), present new avenues for creativity and hypothesis generation (Wang et al., 2023).

Building on this, large language models (LLMs) such as GPT-3, GPT-4, and Claude-2 demonstrate profound capabilities to comprehend and infer causality from natural language texts, opening a promising path to extract causal knowledge from vast textual data (Binz and Schulz, 2023; Gu et al., 2023). Exciting possibilities are seen in specific scenarios in which LLMs and causal graphs manifest complementary strengths (Pan et al., 2023). Their synergistic combination brings together human analytical and systemic thinking, echoing the holistic versus analytic cognition delineated in social psychology (Nisbett et al., 2001). This amalgamation enables fine-grained semantic analysis and conceptual understanding via LLMs, while causal graphs offer a global perspective on causality, alleviating the interpretability challenges of AI (Pan et al., 2023). This integrated methodology efficiently counters the inherent limitations of working and semantic memories in hypothesis generation and, as previous academic endeavors indicate, has proven efficacious across disciplines. For example, a groundbreaking study in physics synthesized 750,000 physics publications, utilizing cutting-edge natural language processing to extract 6368 pivotal quantum physics concepts, culminating in a semantic network forecasting research trajectories (Krenn and Zeilinger, 2020). Additionally, by integrating knowledge-based causal graphs into the foundation of the LLM, the LLM’s capability for causative inference significantly improves (Kıcıman et al., 2023).

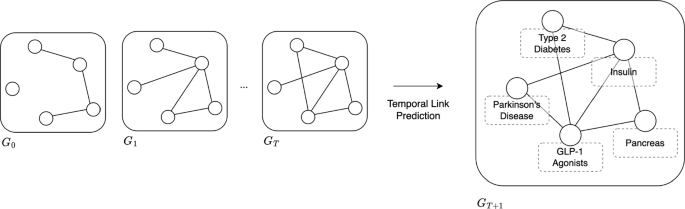

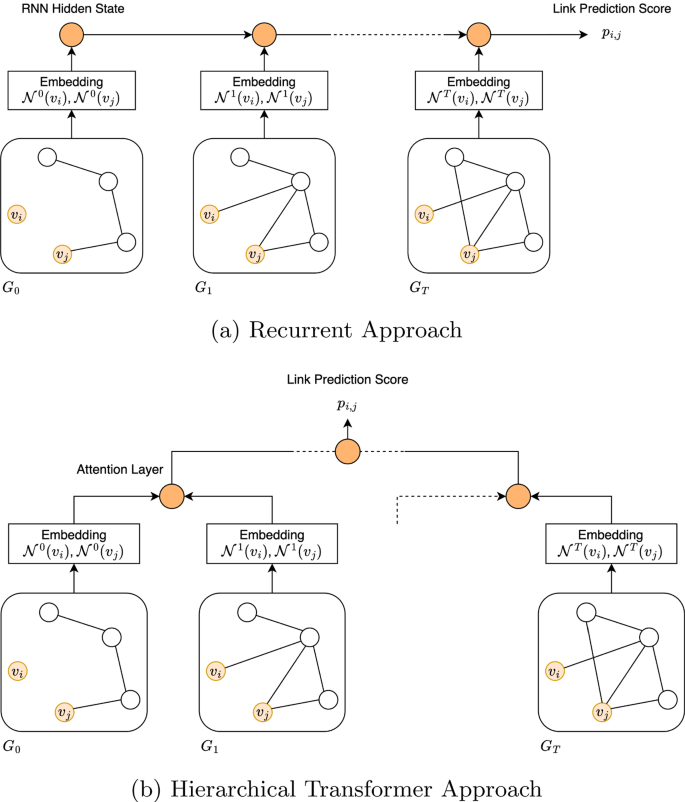

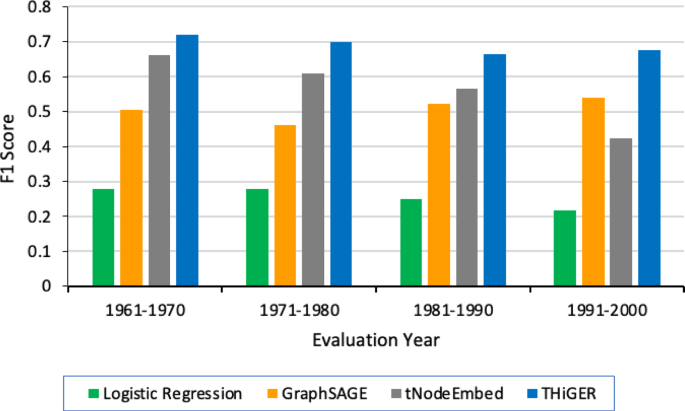

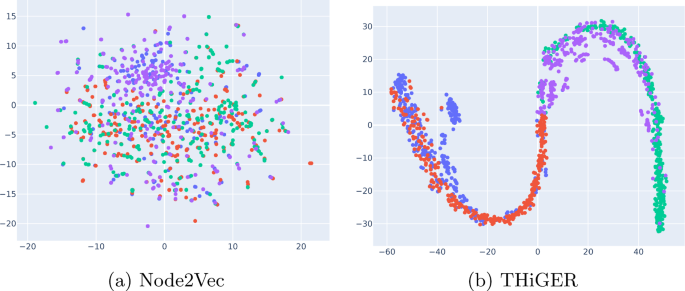

To this end, our study seeks to build a pioneering analytical framework, combining the semantic and conceptual extraction proficiency of LLMs with the systemic thinking of the causal graph, with the aim of crafting a comprehensive causal network of semantic concepts within psychology. We meticulously analyzed 43,312 psychological articles, devising an automated method to construct a causal graph and systematically mine causative concepts and their interconnections. Specifically, the initial sifting and preparation of the data ensures a high-quality corpus, and is followed by advanced extraction techniques that identify standardized causal concepts. This results in a graph database that serves as a reservoir of causal knowledge. Finally, using node embedding and similarity-based link prediction, we unearthed potential causal relationships and generated the corresponding hypotheses.

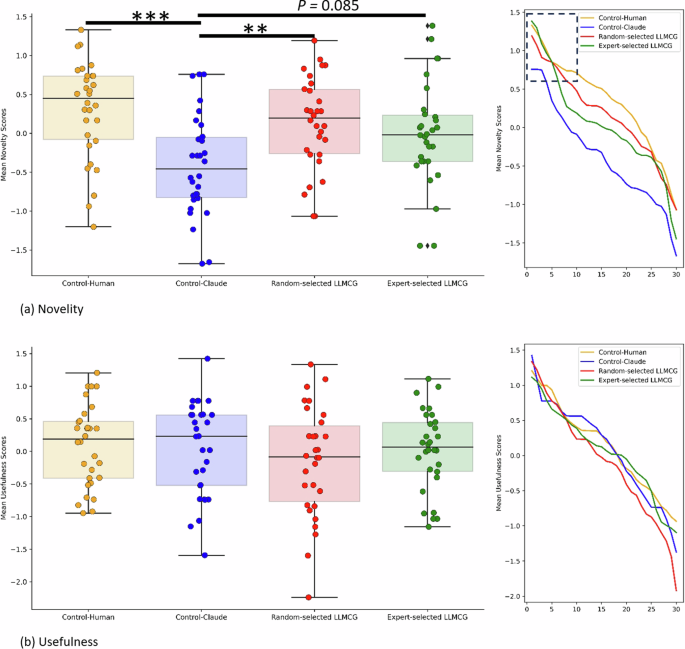

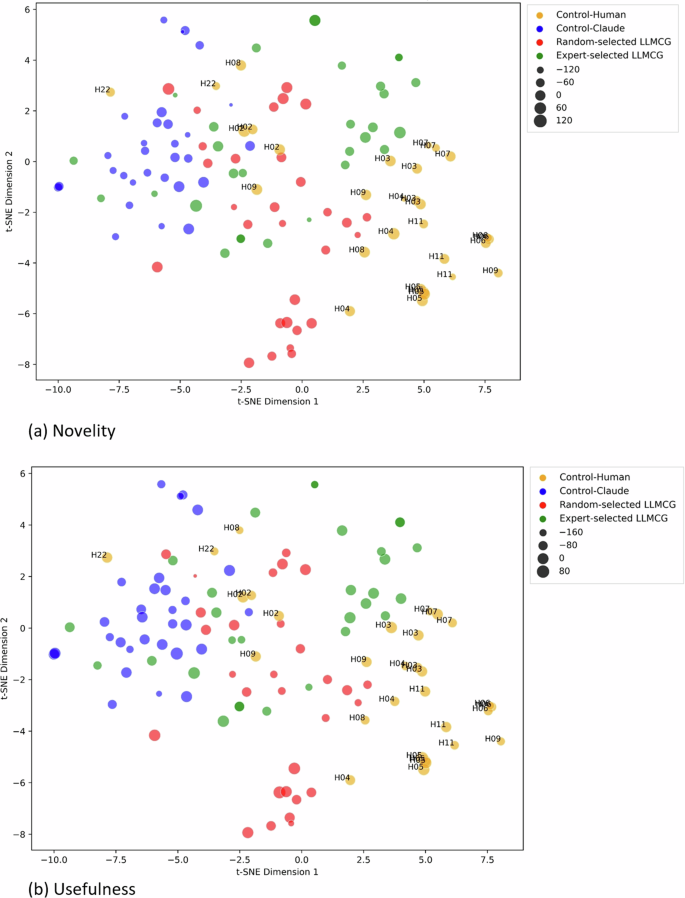

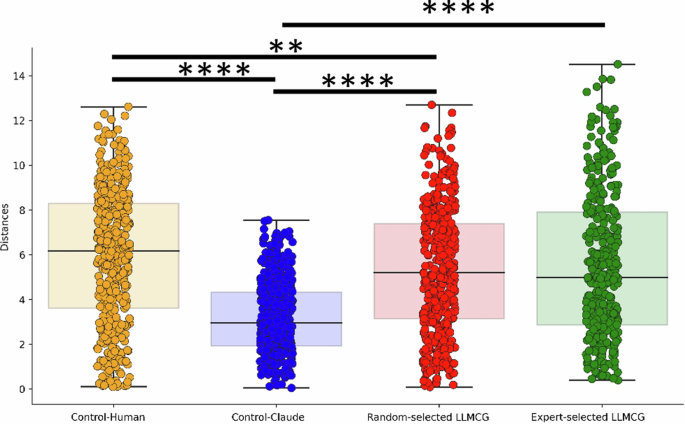

To gauge the pragmatic value of our network, we selected 130 hypotheses on “well-being” generated by our framework, comparing them with hypotheses crafted by novice experts (doctoral students in psychology) and the LLM models. The results are encouraging: Our algorithm matches the caliber of novice experts, outshining the hypotheses generated solely by the LLM models in novelty. Additionally, through deep semantic analysis, we demonstrated that our algorithm contains more profound conceptual incorporations and a broader semantic spectrum.

Our study advances the field of psychology in two significant ways. Firstly, it extracts invaluable causal knowledge from the literature and converts it to visual graphics. These aids can feed algorithms to help deduce more latent causal relations and guide models in generating a plethora of novel causal hypotheses. Secondly, our study furnishes novel tools and methodologies for causal analysis and scientific knowledge discovery, representing the seamless fusion of modern AI with traditional research methodologies. This integration serves as a bridge between conventional theory-driven methodologies in psychology and the emerging paradigms of data-centric research, thereby enriching our understanding of the factors influencing psychology, especially within the realm of social psychology.

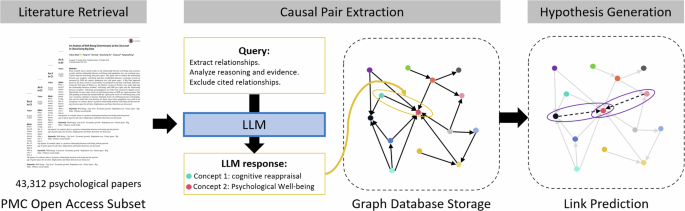

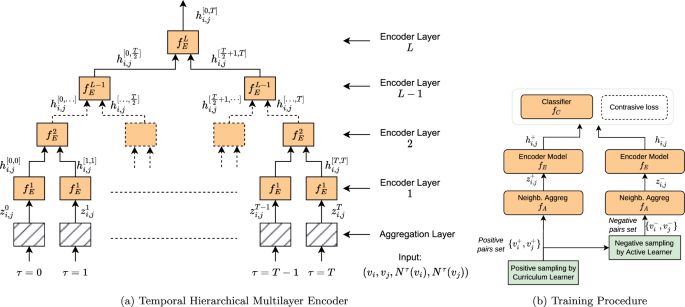

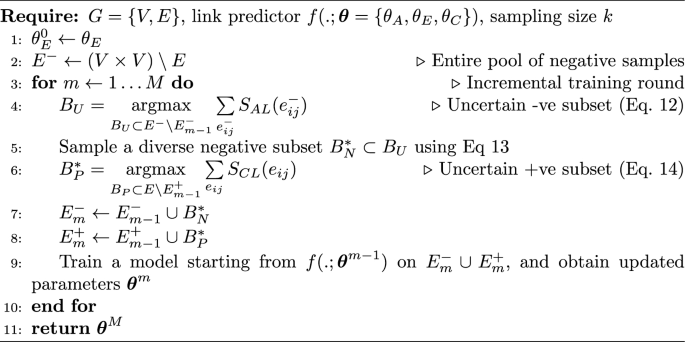

Methodological framework for hypothesis generation

The proposed LLM-based causal graph (LLMCG) framework encompasses three steps: literature retrieval, causal pair extraction, and hypothesis generation, as illustrated in Fig. 1. In the literature retrieval step, ~140k psychology-related articles were downloaded from public databases. In step two, GPT-4 was used to distil causal relationships from these articles, culminating in the creation of a causal relationship network based on 43,312 selected articles. In the third step, these data were examined in depth, applying link prediction algorithms to the causal relationship network to search for concept pairs with a high potential for a causal relationship.

Note: LLM stands for large language model; LLMCG algorithm stands for LLM-based causal graph algorithm, which includes the processes of literature retrieval, causal pair extraction, and hypothesis generation.
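
As a rough illustration of the third step, the sketch below scores unlinked concept pairs in a toy graph by the Jaccard overlap of their neighbors, one simple form of similarity-based link prediction. The study itself refers to node embedding and similarity-based link prediction without these details, so the scoring rule, the function names, and the example edges are all assumptions made for illustration.

```python
from itertools import combinations

# Toy causal edges (cause, effect); illustrative only, not taken from the study's graph.
edges = [
    ("gratitude", "well-being"),
    ("gratitude", "social support"),
    ("exercise", "well-being"),
    ("exercise", "sleep quality"),
    ("social support", "well-being"),
    ("sleep quality", "mood"),
]


def neighbor_sets(edge_list):
    """Map each concept to the concepts it is linked to, ignoring edge direction."""
    neighbors = {}
    for cause, effect in edge_list:
        neighbors.setdefault(cause, set()).add(effect)
        neighbors.setdefault(effect, set()).add(cause)
    return neighbors


def jaccard_candidates(edge_list):
    """Score every unlinked concept pair by the Jaccard overlap of their neighbors."""
    neighbors = neighbor_sets(edge_list)
    scored = []
    for a, b in combinations(sorted(neighbors), 2):
        if b in neighbors[a]:
            continue  # the pair is already linked, so it is not a new hypothesis
        shared = neighbors[a] & neighbors[b]
        union = neighbors[a] | neighbors[b]
        score = len(shared) / len(union) if union else 0.0
        if score > 0:
            scored.append((a, b, score))
    # Highest-scoring pairs first: these are the candidate links.
    return sorted(scored, key=lambda item: item[2], reverse=True)


candidates = jaccard_candidates(edges)
```

Each returned tuple names two concepts that share neighbors but are not yet connected; a high score marks the pair as a candidate for closer inspection, not as an established causal claim.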

Step 1: Literature retrieval

The primary data source for this study was a public repository of scientific articles, the PMC Open Access Subset. Our decision to utilize this repository was informed by several key attributes that it possesses. The PMC Open Access Subset boasts an expansive collection of over 2 million full-text XML science and medical articles, providing a substantial and diverse base from which to derive insights for our research. Furthermore, the open-access nature of the articles not only enhances the transparency and reproducibility of our methodology, but also ensures that the results and processes can be independently accessed and verified by other researchers. Notably, the content within this subset originates from recognized journals, all of which have undergone rigorous peer review, lending credence to the quality and reliability of the data we leveraged. Finally, an added advantage was the rich metadata accompanying each article. These metadata were instrumental in refining our article selection process, ensuring coherent thematic alignment with our research objectives in the domains of psychology.

To identify articles relevant to our study, we applied a series of filtering criteria. First, the presence of certain keywords within article titles or abstracts was mandatory. Some examples of these keywords include “psychol”, “clin psychol”, and “biol psychol”. Second, we exploited the metadata accompanying each article. The classification of articles based on these metadata ensured alignment with recognized thematic standards in the domains of psychology and neuroscience. Upon the application of these criteria, we managed to curate a subset of approximately 140K articles that most likely discuss causal concepts in both psychology and neuroscience.
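
The two filtering criteria can be sketched as a simple predicate over article records. This is a minimal sketch, assuming each article is available as a dictionary with `title`, `abstract`, and `subjects` fields; that record layout, the function names, and the subject terms are hypothetical and do not reflect the actual PMC metadata schema. Only the three example keywords come from the text above.

```python
# Example keywords quoted in the text; the full keyword list is not given there.
KEYWORDS = ("psychol", "clin psychol", "biol psychol")

# Assumed subject labels used for the metadata check (hypothetical).
SUBJECT_TERMS = ("psychology", "neuroscience")


def has_keyword(record):
    """Criterion 1: a keyword appears in the title or abstract (case-insensitive)."""
    text = f"{record.get('title', '')} {record.get('abstract', '')}".lower()
    return any(keyword in text for keyword in KEYWORDS)


def has_subject(record):
    """Criterion 2: the metadata classifies the article under a target subject."""
    subjects = [subject.lower() for subject in record.get("subjects", [])]
    return any(term in subject for subject in subjects for term in SUBJECT_TERMS)


def filter_articles(records):
    """Keep only the records that satisfy both criteria."""
    return [record for record in records if has_keyword(record) and has_subject(record)]
```

Requiring both checks to pass mirrors the description above, in which the keyword match is mandatory and the metadata classification is then used to confirm thematic alignment.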

Step 2: Causal pair extraction

The process of extracting causal knowledge from vast troves of scientific literature is intricate and multifaceted. Our methodology distils this complex process into four coherent steps, each serving a distinct purpose. (1) Article selection and cost analysis: Determines the feasibility of processing a specific volume of articles, ensuring optimal resource allocation. (2) Text extraction and analysis: Ensures the purity of the data that enter our causal extraction phase by filtering out nonrelevant content. (3) Causal knowledge extraction: Uses advanced language models to detect, classify, and standardize the causal factors and their relationships present in texts. (4) Graph database storage: Facilitates structured storage, easy retrieval, and the possibility of advanced relational analyses for future research. This streamlined approach ensures accuracy, consistency, and scalability in our endeavor to understand the interplay of causal concepts in psychology and neuroscience.
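
To make the output of steps (3) and (4) concrete, the sketch below represents extracted causal pairs as records and collects them into an in-memory directed graph. It is only an illustration: the study stores its pairs in a graph database, and the `CausalPair` fields, the `normalize` rule, and the nested-dictionary layout used here are assumptions standing in for that storage layer.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CausalPair:
    """One extracted cause-effect relation (hypothetical record layout)."""
    cause: str
    effect: str
    source_id: str  # assumed identifier of the article the pair came from


def normalize(concept):
    """Crude standardization: lowercase and collapse whitespace."""
    return " ".join(concept.lower().split())


def build_graph(pairs):
    """Return graph[cause][effect] = set of article ids supporting that edge."""
    graph = defaultdict(lambda: defaultdict(set))
    for pair in pairs:
        graph[normalize(pair.cause)][normalize(pair.effect)].add(pair.source_id)
    return graph
```

Keeping the supporting article identifiers on each edge means an edge can later be traced back to its sources or weighted by how many articles report it.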

Text extraction and cleaning

After a meticulous cost analysis detailed in Appendix A, our selection process identified 43,312 articles. This selection was strategically based on the criterion that the journal titles must incorporate the term “Psychol”, signifying their direct relevance to the field of psychology. The distributions of publication sources and years can be found in Table 1. Extracting the full texts of the articles from their PDF sources was an essential initial step, and, for this purpose, the PyPDF2 Python library was used. This library allowed us to seamlessly extract and concatenate titles, abstracts, and main content from each PDF article. However, a challenge arose with the presence of extraneous sections, such as references or tables, in the extracted texts. The implemented procedure, employing regular expressions in Python, not only identified variations of the term “references” but also ascertained whether this section appeared as an isolated segment. This check was critical to ensure that the identified “references” section was indeed distinct, marking the start of a reference list without continuation into other text. Once the section was identified as a standalone entity, the next step was to remove the reference section and its subsequent content.
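
The reference-removal check described above can be sketched with Python's standard `re` module. This is a minimal sketch, not the authors' procedure: the heading variants in the pattern, the function name `strip_references`, and the choice to cut at the last standalone heading are assumptions. The input is assumed to be plain text already extracted from the PDF.

```python
import re

# Matches a line consisting only of a reference-style heading, optionally
# followed by a colon. The list of heading variants is an assumption.
REFERENCES_HEADING = re.compile(
    r"^[ \t]*(?:references|reference list|bibliography|works cited)[ \t]*:?[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def strip_references(text):
    """Remove the reference section and everything after it.

    Only a heading that stands alone on its line counts, so the word
    "references" inside a sentence is left untouched.
    """
    matches = list(REFERENCES_HEADING.finditer(text))
    if not matches:
        return text  # no standalone heading found; keep the text as-is
    # Cut at the last standalone heading to avoid truncating the body early.
    return text[: matches[-1].start()].rstrip()
```

Cutting at the last match is the conservative choice: if an earlier line happens to consist of the single word "References", the main text is still preserved.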

Causal knowledge extraction method

In our effort to extract causal knowledge, the choice of GPT-4 was not arbitrary. While several models were available for such tasks, GPT-4 emerged as a frontrunner due to its advanced capabilities (Wu et al., 2023), its extensive training on diverse data, and its proven proficiency in understanding context, especially in complex scientific texts (Cheng et al., 2023; Sanderson, 2023). Other models were indeed considered; however, the capacity of GPT-4 to generate coherent, contextually relevant responses gave it an edge for the specific requirements of our project.