An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

The PMC website is updating on October 15, 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- J Korean Med Sci

- v.37(16); 2022 Apr 25

A Practical Guide to Writing Quantitative and Qualitative Research Questions and Hypotheses in Scholarly Articles

Edward barroga.

1 Department of General Education, Graduate School of Nursing Science, St. Luke’s International University, Tokyo, Japan.

Glafera Janet Matanguihan

2 Department of Biological Sciences, Messiah University, Mechanicsburg, PA, USA.

The development of research questions and the subsequent hypotheses are prerequisites to defining the main research purpose and specific objectives of a study. Consequently, these objectives determine the study design and research outcome. The development of research questions is a process based on knowledge of current trends, cutting-edge studies, and technological advances in the research field. Excellent research questions are focused and require a comprehensive literature search and in-depth understanding of the problem being investigated. Initially, research questions may be written as descriptive questions which could be developed into inferential questions. These questions must be specific and concise to provide a clear foundation for developing hypotheses. Hypotheses are more formal predictions about the research outcomes. These specify the possible results that may or may not be expected regarding the relationship between groups. Thus, research questions and hypotheses clarify the main purpose and specific objectives of the study, which in turn dictate the design of the study, its direction, and outcome. Studies developed from good research questions and hypotheses will have trustworthy outcomes with wide-ranging social and health implications.

INTRODUCTION

Scientific research is usually initiated by posing evidenced-based research questions which are then explicitly restated as hypotheses. 1 , 2 The hypotheses provide directions to guide the study, solutions, explanations, and expected results. 3 , 4 Both research questions and hypotheses are essentially formulated based on conventional theories and real-world processes, which allow the inception of novel studies and the ethical testing of ideas. 5 , 6

It is crucial to have knowledge of both quantitative and qualitative research 2 as both types of research involve writing research questions and hypotheses. 7 However, these crucial elements of research are sometimes overlooked; if not overlooked, then framed without the forethought and meticulous attention it needs. Planning and careful consideration are needed when developing quantitative or qualitative research, particularly when conceptualizing research questions and hypotheses. 4

There is a continuing need to support researchers in the creation of innovative research questions and hypotheses, as well as for journal articles that carefully review these elements. 1 When research questions and hypotheses are not carefully thought of, unethical studies and poor outcomes usually ensue. Carefully formulated research questions and hypotheses define well-founded objectives, which in turn determine the appropriate design, course, and outcome of the study. This article then aims to discuss in detail the various aspects of crafting research questions and hypotheses, with the goal of guiding researchers as they develop their own. Examples from the authors and peer-reviewed scientific articles in the healthcare field are provided to illustrate key points.

DEFINITIONS AND RELATIONSHIP OF RESEARCH QUESTIONS AND HYPOTHESES

A research question is what a study aims to answer after data analysis and interpretation. The answer is written in length in the discussion section of the paper. Thus, the research question gives a preview of the different parts and variables of the study meant to address the problem posed in the research question. 1 An excellent research question clarifies the research writing while facilitating understanding of the research topic, objective, scope, and limitations of the study. 5

On the other hand, a research hypothesis is an educated statement of an expected outcome. This statement is based on background research and current knowledge. 8 , 9 The research hypothesis makes a specific prediction about a new phenomenon 10 or a formal statement on the expected relationship between an independent variable and a dependent variable. 3 , 11 It provides a tentative answer to the research question to be tested or explored. 4

Hypotheses employ reasoning to predict a theory-based outcome. 10 These can also be developed from theories by focusing on components of theories that have not yet been observed. 10 The validity of hypotheses is often based on the testability of the prediction made in a reproducible experiment. 8

Conversely, hypotheses can also be rephrased as research questions. Several hypotheses based on existing theories and knowledge may be needed to answer a research question. Developing ethical research questions and hypotheses creates a research design that has logical relationships among variables. These relationships serve as a solid foundation for the conduct of the study. 4 , 11 Haphazardly constructed research questions can result in poorly formulated hypotheses and improper study designs, leading to unreliable results. Thus, the formulations of relevant research questions and verifiable hypotheses are crucial when beginning research. 12

CHARACTERISTICS OF GOOD RESEARCH QUESTIONS AND HYPOTHESES

Excellent research questions are specific and focused. These integrate collective data and observations to confirm or refute the subsequent hypotheses. Well-constructed hypotheses are based on previous reports and verify the research context. These are realistic, in-depth, sufficiently complex, and reproducible. More importantly, these hypotheses can be addressed and tested. 13

There are several characteristics of well-developed hypotheses. Good hypotheses are 1) empirically testable 7 , 10 , 11 , 13 ; 2) backed by preliminary evidence 9 ; 3) testable by ethical research 7 , 9 ; 4) based on original ideas 9 ; 5) have evidenced-based logical reasoning 10 ; and 6) can be predicted. 11 Good hypotheses can infer ethical and positive implications, indicating the presence of a relationship or effect relevant to the research theme. 7 , 11 These are initially developed from a general theory and branch into specific hypotheses by deductive reasoning. In the absence of a theory to base the hypotheses, inductive reasoning based on specific observations or findings form more general hypotheses. 10

TYPES OF RESEARCH QUESTIONS AND HYPOTHESES

Research questions and hypotheses are developed according to the type of research, which can be broadly classified into quantitative and qualitative research. We provide a summary of the types of research questions and hypotheses under quantitative and qualitative research categories in Table 1 .

| Quantitative research questions | Quantitative research hypotheses |

|---|---|

| Descriptive research questions | Simple hypothesis |

| Comparative research questions | Complex hypothesis |

| Relationship research questions | Directional hypothesis |

| Non-directional hypothesis | |

| Associative hypothesis | |

| Causal hypothesis | |

| Null hypothesis | |

| Alternative hypothesis | |

| Working hypothesis | |

| Statistical hypothesis | |

| Logical hypothesis | |

| Hypothesis-testing | |

| Qualitative research questions | Qualitative research hypotheses |

| Contextual research questions | Hypothesis-generating |

| Descriptive research questions | |

| Evaluation research questions | |

| Explanatory research questions | |

| Exploratory research questions | |

| Generative research questions | |

| Ideological research questions | |

| Ethnographic research questions | |

| Phenomenological research questions | |

| Grounded theory questions | |

| Qualitative case study questions |

Research questions in quantitative research

In quantitative research, research questions inquire about the relationships among variables being investigated and are usually framed at the start of the study. These are precise and typically linked to the subject population, dependent and independent variables, and research design. 1 Research questions may also attempt to describe the behavior of a population in relation to one or more variables, or describe the characteristics of variables to be measured ( descriptive research questions ). 1 , 5 , 14 These questions may also aim to discover differences between groups within the context of an outcome variable ( comparative research questions ), 1 , 5 , 14 or elucidate trends and interactions among variables ( relationship research questions ). 1 , 5 We provide examples of descriptive, comparative, and relationship research questions in quantitative research in Table 2 .

| Quantitative research questions | |

|---|---|

| Descriptive research question | |

| - Measures responses of subjects to variables | |

| - Presents variables to measure, analyze, or assess | |

| What is the proportion of resident doctors in the hospital who have mastered ultrasonography (response of subjects to a variable) as a diagnostic technique in their clinical training? | |

| Comparative research question | |

| - Clarifies difference between one group with outcome variable and another group without outcome variable | |

| Is there a difference in the reduction of lung metastasis in osteosarcoma patients who received the vitamin D adjunctive therapy (group with outcome variable) compared with osteosarcoma patients who did not receive the vitamin D adjunctive therapy (group without outcome variable)? | |

| - Compares the effects of variables | |

| How does the vitamin D analogue 22-Oxacalcitriol (variable 1) mimic the antiproliferative activity of 1,25-Dihydroxyvitamin D (variable 2) in osteosarcoma cells? | |

| Relationship research question | |

| - Defines trends, association, relationships, or interactions between dependent variable and independent variable | |

| Is there a relationship between the number of medical student suicide (dependent variable) and the level of medical student stress (independent variable) in Japan during the first wave of the COVID-19 pandemic? | |

Hypotheses in quantitative research

In quantitative research, hypotheses predict the expected relationships among variables. 15 Relationships among variables that can be predicted include 1) between a single dependent variable and a single independent variable ( simple hypothesis ) or 2) between two or more independent and dependent variables ( complex hypothesis ). 4 , 11 Hypotheses may also specify the expected direction to be followed and imply an intellectual commitment to a particular outcome ( directional hypothesis ) 4 . On the other hand, hypotheses may not predict the exact direction and are used in the absence of a theory, or when findings contradict previous studies ( non-directional hypothesis ). 4 In addition, hypotheses can 1) define interdependency between variables ( associative hypothesis ), 4 2) propose an effect on the dependent variable from manipulation of the independent variable ( causal hypothesis ), 4 3) state a negative relationship between two variables ( null hypothesis ), 4 , 11 , 15 4) replace the working hypothesis if rejected ( alternative hypothesis ), 15 explain the relationship of phenomena to possibly generate a theory ( working hypothesis ), 11 5) involve quantifiable variables that can be tested statistically ( statistical hypothesis ), 11 6) or express a relationship whose interlinks can be verified logically ( logical hypothesis ). 11 We provide examples of simple, complex, directional, non-directional, associative, causal, null, alternative, working, statistical, and logical hypotheses in quantitative research, as well as the definition of quantitative hypothesis-testing research in Table 3 .

| Quantitative research hypotheses | |

|---|---|

| Simple hypothesis | |

| - Predicts relationship between single dependent variable and single independent variable | |

| If the dose of the new medication (single independent variable) is high, blood pressure (single dependent variable) is lowered. | |

| Complex hypothesis | |

| - Foretells relationship between two or more independent and dependent variables | |

| The higher the use of anticancer drugs, radiation therapy, and adjunctive agents (3 independent variables), the higher would be the survival rate (1 dependent variable). | |

| Directional hypothesis | |

| - Identifies study direction based on theory towards particular outcome to clarify relationship between variables | |

| Privately funded research projects will have a larger international scope (study direction) than publicly funded research projects. | |

| Non-directional hypothesis | |

| - Nature of relationship between two variables or exact study direction is not identified | |

| - Does not involve a theory | |

| Women and men are different in terms of helpfulness. (Exact study direction is not identified) | |

| Associative hypothesis | |

| - Describes variable interdependency | |

| - Change in one variable causes change in another variable | |

| A larger number of people vaccinated against COVID-19 in the region (change in independent variable) will reduce the region’s incidence of COVID-19 infection (change in dependent variable). | |

| Causal hypothesis | |

| - An effect on dependent variable is predicted from manipulation of independent variable | |

| A change into a high-fiber diet (independent variable) will reduce the blood sugar level (dependent variable) of the patient. | |

| Null hypothesis | |

| - A negative statement indicating no relationship or difference between 2 variables | |

| There is no significant difference in the severity of pulmonary metastases between the new drug (variable 1) and the current drug (variable 2). | |

| Alternative hypothesis | |

| - Following a null hypothesis, an alternative hypothesis predicts a relationship between 2 study variables | |

| The new drug (variable 1) is better on average in reducing the level of pain from pulmonary metastasis than the current drug (variable 2). | |

| Working hypothesis | |

| - A hypothesis that is initially accepted for further research to produce a feasible theory | |

| Dairy cows fed with concentrates of different formulations will produce different amounts of milk. | |

| Statistical hypothesis | |

| - Assumption about the value of population parameter or relationship among several population characteristics | |

| - Validity tested by a statistical experiment or analysis | |

| The mean recovery rate from COVID-19 infection (value of population parameter) is not significantly different between population 1 and population 2. | |

| There is a positive correlation between the level of stress at the workplace and the number of suicides (population characteristics) among working people in Japan. | |

| Logical hypothesis | |

| - Offers or proposes an explanation with limited or no extensive evidence | |

| If healthcare workers provide more educational programs about contraception methods, the number of adolescent pregnancies will be less. | |

| Hypothesis-testing (Quantitative hypothesis-testing research) | |

| - Quantitative research uses deductive reasoning. | |

| - This involves the formation of a hypothesis, collection of data in the investigation of the problem, analysis and use of the data from the investigation, and drawing of conclusions to validate or nullify the hypotheses. | |

Research questions in qualitative research

Unlike research questions in quantitative research, research questions in qualitative research are usually continuously reviewed and reformulated. The central question and associated subquestions are stated more than the hypotheses. 15 The central question broadly explores a complex set of factors surrounding the central phenomenon, aiming to present the varied perspectives of participants. 15

There are varied goals for which qualitative research questions are developed. These questions can function in several ways, such as to 1) identify and describe existing conditions ( contextual research question s); 2) describe a phenomenon ( descriptive research questions ); 3) assess the effectiveness of existing methods, protocols, theories, or procedures ( evaluation research questions ); 4) examine a phenomenon or analyze the reasons or relationships between subjects or phenomena ( explanatory research questions ); or 5) focus on unknown aspects of a particular topic ( exploratory research questions ). 5 In addition, some qualitative research questions provide new ideas for the development of theories and actions ( generative research questions ) or advance specific ideologies of a position ( ideological research questions ). 1 Other qualitative research questions may build on a body of existing literature and become working guidelines ( ethnographic research questions ). Research questions may also be broadly stated without specific reference to the existing literature or a typology of questions ( phenomenological research questions ), may be directed towards generating a theory of some process ( grounded theory questions ), or may address a description of the case and the emerging themes ( qualitative case study questions ). 15 We provide examples of contextual, descriptive, evaluation, explanatory, exploratory, generative, ideological, ethnographic, phenomenological, grounded theory, and qualitative case study research questions in qualitative research in Table 4 , and the definition of qualitative hypothesis-generating research in Table 5 .

| Qualitative research questions | |

|---|---|

| Contextual research question | |

| - Ask the nature of what already exists | |

| - Individuals or groups function to further clarify and understand the natural context of real-world problems | |

| What are the experiences of nurses working night shifts in healthcare during the COVID-19 pandemic? (natural context of real-world problems) | |

| Descriptive research question | |

| - Aims to describe a phenomenon | |

| What are the different forms of disrespect and abuse (phenomenon) experienced by Tanzanian women when giving birth in healthcare facilities? | |

| Evaluation research question | |

| - Examines the effectiveness of existing practice or accepted frameworks | |

| How effective are decision aids (effectiveness of existing practice) in helping decide whether to give birth at home or in a healthcare facility? | |

| Explanatory research question | |

| - Clarifies a previously studied phenomenon and explains why it occurs | |

| Why is there an increase in teenage pregnancy (phenomenon) in Tanzania? | |

| Exploratory research question | |

| - Explores areas that have not been fully investigated to have a deeper understanding of the research problem | |

| What factors affect the mental health of medical students (areas that have not yet been fully investigated) during the COVID-19 pandemic? | |

| Generative research question | |

| - Develops an in-depth understanding of people’s behavior by asking ‘how would’ or ‘what if’ to identify problems and find solutions | |

| How would the extensive research experience of the behavior of new staff impact the success of the novel drug initiative? | |

| Ideological research question | |

| - Aims to advance specific ideas or ideologies of a position | |

| Are Japanese nurses who volunteer in remote African hospitals able to promote humanized care of patients (specific ideas or ideologies) in the areas of safe patient environment, respect of patient privacy, and provision of accurate information related to health and care? | |

| Ethnographic research question | |

| - Clarifies peoples’ nature, activities, their interactions, and the outcomes of their actions in specific settings | |

| What are the demographic characteristics, rehabilitative treatments, community interactions, and disease outcomes (nature, activities, their interactions, and the outcomes) of people in China who are suffering from pneumoconiosis? | |

| Phenomenological research question | |

| - Knows more about the phenomena that have impacted an individual | |

| What are the lived experiences of parents who have been living with and caring for children with a diagnosis of autism? (phenomena that have impacted an individual) | |

| Grounded theory question | |

| - Focuses on social processes asking about what happens and how people interact, or uncovering social relationships and behaviors of groups | |

| What are the problems that pregnant adolescents face in terms of social and cultural norms (social processes), and how can these be addressed? | |

| Qualitative case study question | |

| - Assesses a phenomenon using different sources of data to answer “why” and “how” questions | |

| - Considers how the phenomenon is influenced by its contextual situation. | |

| How does quitting work and assuming the role of a full-time mother (phenomenon assessed) change the lives of women in Japan? | |

| Qualitative research hypotheses | |

|---|---|

| Hypothesis-generating (Qualitative hypothesis-generating research) | |

| - Qualitative research uses inductive reasoning. | |

| - This involves data collection from study participants or the literature regarding a phenomenon of interest, using the collected data to develop a formal hypothesis, and using the formal hypothesis as a framework for testing the hypothesis. | |

| - Qualitative exploratory studies explore areas deeper, clarifying subjective experience and allowing formulation of a formal hypothesis potentially testable in a future quantitative approach. | |

Qualitative studies usually pose at least one central research question and several subquestions starting with How or What . These research questions use exploratory verbs such as explore or describe . These also focus on one central phenomenon of interest, and may mention the participants and research site. 15

Hypotheses in qualitative research

Hypotheses in qualitative research are stated in the form of a clear statement concerning the problem to be investigated. Unlike in quantitative research where hypotheses are usually developed to be tested, qualitative research can lead to both hypothesis-testing and hypothesis-generating outcomes. 2 When studies require both quantitative and qualitative research questions, this suggests an integrative process between both research methods wherein a single mixed-methods research question can be developed. 1

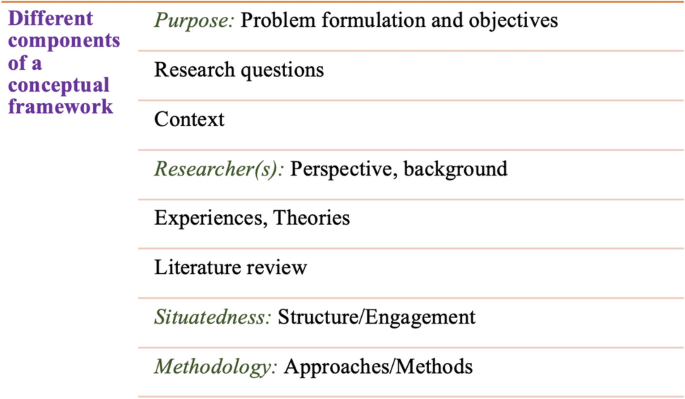

FRAMEWORKS FOR DEVELOPING RESEARCH QUESTIONS AND HYPOTHESES

Research questions followed by hypotheses should be developed before the start of the study. 1 , 12 , 14 It is crucial to develop feasible research questions on a topic that is interesting to both the researcher and the scientific community. This can be achieved by a meticulous review of previous and current studies to establish a novel topic. Specific areas are subsequently focused on to generate ethical research questions. The relevance of the research questions is evaluated in terms of clarity of the resulting data, specificity of the methodology, objectivity of the outcome, depth of the research, and impact of the study. 1 , 5 These aspects constitute the FINER criteria (i.e., Feasible, Interesting, Novel, Ethical, and Relevant). 1 Clarity and effectiveness are achieved if research questions meet the FINER criteria. In addition to the FINER criteria, Ratan et al. described focus, complexity, novelty, feasibility, and measurability for evaluating the effectiveness of research questions. 14

The PICOT and PEO frameworks are also used when developing research questions. 1 The following elements are addressed in these frameworks, PICOT: P-population/patients/problem, I-intervention or indicator being studied, C-comparison group, O-outcome of interest, and T-timeframe of the study; PEO: P-population being studied, E-exposure to preexisting conditions, and O-outcome of interest. 1 Research questions are also considered good if these meet the “FINERMAPS” framework: Feasible, Interesting, Novel, Ethical, Relevant, Manageable, Appropriate, Potential value/publishable, and Systematic. 14

As we indicated earlier, research questions and hypotheses that are not carefully formulated result in unethical studies or poor outcomes. To illustrate this, we provide some examples of ambiguous research question and hypotheses that result in unclear and weak research objectives in quantitative research ( Table 6 ) 16 and qualitative research ( Table 7 ) 17 , and how to transform these ambiguous research question(s) and hypothesis(es) into clear and good statements.

| Variables | Unclear and weak statement (Statement 1) | Clear and good statement (Statement 2) | Points to avoid |

|---|---|---|---|

| Research question | Which is more effective between smoke moxibustion and smokeless moxibustion? | “Moreover, regarding smoke moxibustion versus smokeless moxibustion, it remains unclear which is more effective, safe, and acceptable to pregnant women, and whether there is any difference in the amount of heat generated.” | 1) Vague and unfocused questions |

| 2) Closed questions simply answerable by yes or no | |||

| 3) Questions requiring a simple choice | |||

| Hypothesis | The smoke moxibustion group will have higher cephalic presentation. | “Hypothesis 1. The smoke moxibustion stick group (SM group) and smokeless moxibustion stick group (-SLM group) will have higher rates of cephalic presentation after treatment than the control group. | 1) Unverifiable hypotheses |

| Hypothesis 2. The SM group and SLM group will have higher rates of cephalic presentation at birth than the control group. | 2) Incompletely stated groups of comparison | ||

| Hypothesis 3. There will be no significant differences in the well-being of the mother and child among the three groups in terms of the following outcomes: premature birth, premature rupture of membranes (PROM) at < 37 weeks, Apgar score < 7 at 5 min, umbilical cord blood pH < 7.1, admission to neonatal intensive care unit (NICU), and intrauterine fetal death.” | 3) Insufficiently described variables or outcomes | ||

| Research objective | To determine which is more effective between smoke moxibustion and smokeless moxibustion. | “The specific aims of this pilot study were (a) to compare the effects of smoke moxibustion and smokeless moxibustion treatments with the control group as a possible supplement to ECV for converting breech presentation to cephalic presentation and increasing adherence to the newly obtained cephalic position, and (b) to assess the effects of these treatments on the well-being of the mother and child.” | 1) Poor understanding of the research question and hypotheses |

| 2) Insufficient description of population, variables, or study outcomes |

a These statements were composed for comparison and illustrative purposes only.

b These statements are direct quotes from Higashihara and Horiuchi. 16

| Variables | Unclear and weak statement (Statement 1) | Clear and good statement (Statement 2) | Points to avoid |

|---|---|---|---|

| Research question | Does disrespect and abuse (D&A) occur in childbirth in Tanzania? | How does disrespect and abuse (D&A) occur and what are the types of physical and psychological abuses observed in midwives’ actual care during facility-based childbirth in urban Tanzania? | 1) Ambiguous or oversimplistic questions |

| 2) Questions unverifiable by data collection and analysis | |||

| Hypothesis | Disrespect and abuse (D&A) occur in childbirth in Tanzania. | Hypothesis 1: Several types of physical and psychological abuse by midwives in actual care occur during facility-based childbirth in urban Tanzania. | 1) Statements simply expressing facts |

| Hypothesis 2: Weak nursing and midwifery management contribute to the D&A of women during facility-based childbirth in urban Tanzania. | 2) Insufficiently described concepts or variables | ||

| Research objective | To describe disrespect and abuse (D&A) in childbirth in Tanzania. | “This study aimed to describe from actual observations the respectful and disrespectful care received by women from midwives during their labor period in two hospitals in urban Tanzania.” | 1) Statements unrelated to the research question and hypotheses |

| 2) Unattainable or unexplorable objectives |

a This statement is a direct quote from Shimoda et al. 17

The other statements were composed for comparison and illustrative purposes only.

CONSTRUCTING RESEARCH QUESTIONS AND HYPOTHESES

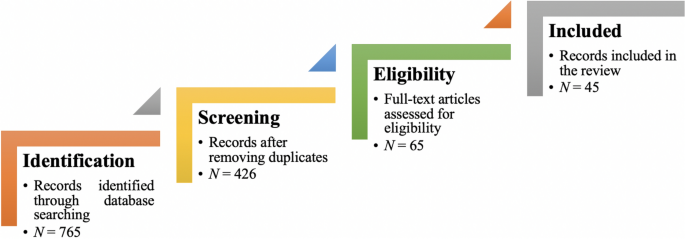

To construct effective research questions and hypotheses, it is very important to 1) clarify the background and 2) identify the research problem at the outset of the research, within a specific timeframe. 9 Then, 3) review or conduct preliminary research to collect all available knowledge about the possible research questions by studying theories and previous studies. 18 Afterwards, 4) construct research questions to investigate the research problem. Identify variables to be accessed from the research questions 4 and make operational definitions of constructs from the research problem and questions. Thereafter, 5) construct specific deductive or inductive predictions in the form of hypotheses. 4 Finally, 6) state the study aims . This general flow for constructing effective research questions and hypotheses prior to conducting research is shown in Fig. 1 .

Research questions are used more frequently in qualitative research than objectives or hypotheses. 3 These questions seek to discover, understand, explore or describe experiences by asking “What” or “How.” The questions are open-ended to elicit a description rather than to relate variables or compare groups. The questions are continually reviewed, reformulated, and changed during the qualitative study. 3 Research questions are also used more frequently in survey projects than hypotheses in experiments in quantitative research to compare variables and their relationships.

Hypotheses are constructed based on the variables identified and as an if-then statement, following the template, ‘If a specific action is taken, then a certain outcome is expected.’ At this stage, some ideas regarding expectations from the research to be conducted must be drawn. 18 Then, the variables to be manipulated (independent) and influenced (dependent) are defined. 4 Thereafter, the hypothesis is stated and refined, and reproducible data tailored to the hypothesis are identified, collected, and analyzed. 4 The hypotheses must be testable and specific, 18 and should describe the variables and their relationships, the specific group being studied, and the predicted research outcome. 18 Hypotheses construction involves a testable proposition to be deduced from theory, and independent and dependent variables to be separated and measured separately. 3 Therefore, good hypotheses must be based on good research questions constructed at the start of a study or trial. 12

In summary, research questions are constructed after establishing the background of the study. Hypotheses are then developed based on the research questions. Thus, it is crucial to have excellent research questions to generate superior hypotheses. In turn, these would determine the research objectives and the design of the study, and ultimately, the outcome of the research. 12 Algorithms for building research questions and hypotheses are shown in Fig. 2 for quantitative research and in Fig. 3 for qualitative research.

EXAMPLES OF RESEARCH QUESTIONS FROM PUBLISHED ARTICLES

- EXAMPLE 1. Descriptive research question (quantitative research)

- - Presents research variables to be assessed (distinct phenotypes and subphenotypes)

- “BACKGROUND: Since COVID-19 was identified, its clinical and biological heterogeneity has been recognized. Identifying COVID-19 phenotypes might help guide basic, clinical, and translational research efforts.

- RESEARCH QUESTION: Does the clinical spectrum of patients with COVID-19 contain distinct phenotypes and subphenotypes? ” 19

- EXAMPLE 2. Relationship research question (quantitative research)

- - Shows interactions between dependent variable (static postural control) and independent variable (peripheral visual field loss)

- “Background: Integration of visual, vestibular, and proprioceptive sensations contributes to postural control. People with peripheral visual field loss have serious postural instability. However, the directional specificity of postural stability and sensory reweighting caused by gradual peripheral visual field loss remain unclear.

- Research question: What are the effects of peripheral visual field loss on static postural control ?” 20

- EXAMPLE 3. Comparative research question (quantitative research)

- - Clarifies the difference among groups with an outcome variable (patients enrolled in COMPERA with moderate PH or severe PH in COPD) and another group without the outcome variable (patients with idiopathic pulmonary arterial hypertension (IPAH))

- “BACKGROUND: Pulmonary hypertension (PH) in COPD is a poorly investigated clinical condition.

- RESEARCH QUESTION: Which factors determine the outcome of PH in COPD?

- STUDY DESIGN AND METHODS: We analyzed the characteristics and outcome of patients enrolled in the Comparative, Prospective Registry of Newly Initiated Therapies for Pulmonary Hypertension (COMPERA) with moderate or severe PH in COPD as defined during the 6th PH World Symposium who received medical therapy for PH and compared them with patients with idiopathic pulmonary arterial hypertension (IPAH) .” 21

- EXAMPLE 4. Exploratory research question (qualitative research)

- - Explores areas that have not been fully investigated (perspectives of families and children who receive care in clinic-based child obesity treatment) to have a deeper understanding of the research problem

- “Problem: Interventions for children with obesity lead to only modest improvements in BMI and long-term outcomes, and data are limited on the perspectives of families of children with obesity in clinic-based treatment. This scoping review seeks to answer the question: What is known about the perspectives of families and children who receive care in clinic-based child obesity treatment? This review aims to explore the scope of perspectives reported by families of children with obesity who have received individualized outpatient clinic-based obesity treatment.” 22

- EXAMPLE 5. Relationship research question (quantitative research)

- - Defines interactions between dependent variable (use of ankle strategies) and independent variable (changes in muscle tone)

- “Background: To maintain an upright standing posture against external disturbances, the human body mainly employs two types of postural control strategies: “ankle strategy” and “hip strategy.” While it has been reported that the magnitude of the disturbance alters the use of postural control strategies, it has not been elucidated how the level of muscle tone, one of the crucial parameters of bodily function, determines the use of each strategy. We have previously confirmed using forward dynamics simulations of human musculoskeletal models that an increased muscle tone promotes the use of ankle strategies. The objective of the present study was to experimentally evaluate a hypothesis: an increased muscle tone promotes the use of ankle strategies. Research question: Do changes in the muscle tone affect the use of ankle strategies ?” 23

EXAMPLES OF HYPOTHESES IN PUBLISHED ARTICLES

- EXAMPLE 1. Working hypothesis (quantitative research)

- - A hypothesis that is initially accepted for further research to produce a feasible theory

- “As fever may have benefit in shortening the duration of viral illness, it is plausible to hypothesize that the antipyretic efficacy of ibuprofen may be hindering the benefits of a fever response when taken during the early stages of COVID-19 illness .” 24

- “In conclusion, it is plausible to hypothesize that the antipyretic efficacy of ibuprofen may be hindering the benefits of a fever response . The difference in perceived safety of these agents in COVID-19 illness could be related to the more potent efficacy to reduce fever with ibuprofen compared to acetaminophen. Compelling data on the benefit of fever warrant further research and review to determine when to treat or withhold ibuprofen for early stage fever for COVID-19 and other related viral illnesses .” 24

- EXAMPLE 2. Exploratory hypothesis (qualitative research)

- - Explores particular areas deeper to clarify subjective experience and develop a formal hypothesis potentially testable in a future quantitative approach

- “We hypothesized that when thinking about a past experience of help-seeking, a self distancing prompt would cause increased help-seeking intentions and more favorable help-seeking outcome expectations .” 25

- “Conclusion

- Although a priori hypotheses were not supported, further research is warranted as results indicate the potential for using self-distancing approaches to increasing help-seeking among some people with depressive symptomatology.” 25

- EXAMPLE 3. Hypothesis-generating research to establish a framework for hypothesis testing (qualitative research)

- “We hypothesize that compassionate care is beneficial for patients (better outcomes), healthcare systems and payers (lower costs), and healthcare providers (lower burnout). ” 26

- Compassionomics is the branch of knowledge and scientific study of the effects of compassionate healthcare. Our main hypotheses are that compassionate healthcare is beneficial for (1) patients, by improving clinical outcomes, (2) healthcare systems and payers, by supporting financial sustainability, and (3) HCPs, by lowering burnout and promoting resilience and well-being. The purpose of this paper is to establish a scientific framework for testing the hypotheses above . If these hypotheses are confirmed through rigorous research, compassionomics will belong in the science of evidence-based medicine, with major implications for all healthcare domains.” 26

- EXAMPLE 4. Statistical hypothesis (quantitative research)

- - An assumption is made about the relationship among several population characteristics ( gender differences in sociodemographic and clinical characteristics of adults with ADHD ). Validity is tested by statistical experiment or analysis ( chi-square test, Students t-test, and logistic regression analysis)

- “Our research investigated gender differences in sociodemographic and clinical characteristics of adults with ADHD in a Japanese clinical sample. Due to unique Japanese cultural ideals and expectations of women's behavior that are in opposition to ADHD symptoms, we hypothesized that women with ADHD experience more difficulties and present more dysfunctions than men . We tested the following hypotheses: first, women with ADHD have more comorbidities than men with ADHD; second, women with ADHD experience more social hardships than men, such as having less full-time employment and being more likely to be divorced.” 27

- “Statistical Analysis

- ( text omitted ) Between-gender comparisons were made using the chi-squared test for categorical variables and Students t-test for continuous variables…( text omitted ). A logistic regression analysis was performed for employment status, marital status, and comorbidity to evaluate the independent effects of gender on these dependent variables.” 27

EXAMPLES OF HYPOTHESIS AS WRITTEN IN PUBLISHED ARTICLES IN RELATION TO OTHER PARTS

- EXAMPLE 1. Background, hypotheses, and aims are provided

- “Pregnant women need skilled care during pregnancy and childbirth, but that skilled care is often delayed in some countries …( text omitted ). The focused antenatal care (FANC) model of WHO recommends that nurses provide information or counseling to all pregnant women …( text omitted ). Job aids are visual support materials that provide the right kind of information using graphics and words in a simple and yet effective manner. When nurses are not highly trained or have many work details to attend to, these job aids can serve as a content reminder for the nurses and can be used for educating their patients (Jennings, Yebadokpo, Affo, & Agbogbe, 2010) ( text omitted ). Importantly, additional evidence is needed to confirm how job aids can further improve the quality of ANC counseling by health workers in maternal care …( text omitted )” 28

- “ This has led us to hypothesize that the quality of ANC counseling would be better if supported by job aids. Consequently, a better quality of ANC counseling is expected to produce higher levels of awareness concerning the danger signs of pregnancy and a more favorable impression of the caring behavior of nurses .” 28

- “This study aimed to examine the differences in the responses of pregnant women to a job aid-supported intervention during ANC visit in terms of 1) their understanding of the danger signs of pregnancy and 2) their impression of the caring behaviors of nurses to pregnant women in rural Tanzania.” 28

- EXAMPLE 2. Background, hypotheses, and aims are provided

- “We conducted a two-arm randomized controlled trial (RCT) to evaluate and compare changes in salivary cortisol and oxytocin levels of first-time pregnant women between experimental and control groups. The women in the experimental group touched and held an infant for 30 min (experimental intervention protocol), whereas those in the control group watched a DVD movie of an infant (control intervention protocol). The primary outcome was salivary cortisol level and the secondary outcome was salivary oxytocin level.” 29

- “ We hypothesize that at 30 min after touching and holding an infant, the salivary cortisol level will significantly decrease and the salivary oxytocin level will increase in the experimental group compared with the control group .” 29

- EXAMPLE 3. Background, aim, and hypothesis are provided

- “In countries where the maternal mortality ratio remains high, antenatal education to increase Birth Preparedness and Complication Readiness (BPCR) is considered one of the top priorities [1]. BPCR includes birth plans during the antenatal period, such as the birthplace, birth attendant, transportation, health facility for complications, expenses, and birth materials, as well as family coordination to achieve such birth plans. In Tanzania, although increasing, only about half of all pregnant women attend an antenatal clinic more than four times [4]. Moreover, the information provided during antenatal care (ANC) is insufficient. In the resource-poor settings, antenatal group education is a potential approach because of the limited time for individual counseling at antenatal clinics.” 30

- “This study aimed to evaluate an antenatal group education program among pregnant women and their families with respect to birth-preparedness and maternal and infant outcomes in rural villages of Tanzania.” 30

- “ The study hypothesis was if Tanzanian pregnant women and their families received a family-oriented antenatal group education, they would (1) have a higher level of BPCR, (2) attend antenatal clinic four or more times, (3) give birth in a health facility, (4) have less complications of women at birth, and (5) have less complications and deaths of infants than those who did not receive the education .” 30

Research questions and hypotheses are crucial components to any type of research, whether quantitative or qualitative. These questions should be developed at the very beginning of the study. Excellent research questions lead to superior hypotheses, which, like a compass, set the direction of research, and can often determine the successful conduct of the study. Many research studies have floundered because the development of research questions and subsequent hypotheses was not given the thought and meticulous attention needed. The development of research questions and hypotheses is an iterative process based on extensive knowledge of the literature and insightful grasp of the knowledge gap. Focused, concise, and specific research questions provide a strong foundation for constructing hypotheses which serve as formal predictions about the research outcomes. Research questions and hypotheses are crucial elements of research that should not be overlooked. They should be carefully thought of and constructed when planning research. This avoids unethical studies and poor outcomes by defining well-founded objectives that determine the design, course, and outcome of the study.

Disclosure: The authors have no potential conflicts of interest to disclose.

Author Contributions:

- Conceptualization: Barroga E, Matanguihan GJ.

- Methodology: Barroga E, Matanguihan GJ.

- Writing - original draft: Barroga E, Matanguihan GJ.

- Writing - review & editing: Barroga E, Matanguihan GJ.

An official website of the United States government

Here’s how you know

Official websites use .gov A .gov website belongs to an official government organization in the United States.

Secure .gov websites use HTTPS A lock ( A locked padlock ) or https:// means you’ve safely connected to the .gov website. Share sensitive information only on official, secure websites.

- Heart-Healthy Living

- High Blood Pressure

- Sickle Cell Disease

- Sleep Apnea

- Information & Resources on COVID-19

- The Heart Truth®

- Learn More Breathe Better®

- Blood Diseases & Disorders Education Program

- Publications and Resources

- Clinical Trials

- Blood Disorders and Blood Safety

- Sleep Science and Sleep Disorders

- Lung Diseases

- Health Disparities and Inequities

- Heart and Vascular Diseases

- Precision Medicine Activities

- Obesity, Nutrition, and Physical Activity

- Population and Epidemiology Studies

- Women’s Health

- Research Topics

- All Science A-Z

- Grants and Training Home

- Policies and Guidelines

- Funding Opportunities and Contacts

- Training and Career Development

- Email Alerts

- NHLBI in the Press

- Research Features

- Ask a Scientist

- Past Events

- Upcoming Events

- Mission and Strategic Vision

- Divisions, Offices and Centers

- Advisory Committees

- Budget and Legislative Information

- Jobs and Working at the NHLBI

- Contact and FAQs

- NIH Sleep Research Plan

- < Back To Health Topics

Study Quality Assessment Tools

In 2013, NHLBI developed a set of tailored quality assessment tools to assist reviewers in focusing on concepts that are key to a study’s internal validity. The tools were specific to certain study designs and tested for potential flaws in study methods or implementation. Experts used the tools during the systematic evidence review process to update existing clinical guidelines, such as those on cholesterol, blood pressure, and obesity. Their findings are outlined in the following reports:

- Assessing Cardiovascular Risk: Systematic Evidence Review from the Risk Assessment Work Group

- Management of Blood Cholesterol in Adults: Systematic Evidence Review from the Cholesterol Expert Panel

- Management of Blood Pressure in Adults: Systematic Evidence Review from the Blood Pressure Expert Panel

- Managing Overweight and Obesity in Adults: Systematic Evidence Review from the Obesity Expert Panel

While these tools have not been independently published and would not be considered standardized, they may be useful to the research community. These reports describe how experts used the tools for the project. Researchers may want to use the tools for their own projects; however, they would need to determine their own parameters for making judgements. Details about the design and application of the tools are included in Appendix A of the reports.

Quality Assessment of Controlled Intervention Studies - Study Quality Assessment Tools

| Criteria | Yes | No | Other (CD, NR, NA)* |

|---|---|---|---|

| 1. Was the study described as randomized, a randomized trial, a randomized clinical trial, or an RCT? | |||

| 2. Was the method of randomization adequate (i.e., use of randomly generated assignment)? | |||

| 3. Was the treatment allocation concealed (so that assignments could not be predicted)? | |||

| 4. Were study participants and providers blinded to treatment group assignment? | |||

| 5. Were the people assessing the outcomes blinded to the participants' group assignments? | |||

| 6. Were the groups similar at baseline on important characteristics that could affect outcomes (e.g., demographics, risk factors, co-morbid conditions)? | |||

| 7. Was the overall drop-out rate from the study at endpoint 20% or lower of the number allocated to treatment? | |||

| 8. Was the differential drop-out rate (between treatment groups) at endpoint 15 percentage points or lower? | |||

| 9. Was there high adherence to the intervention protocols for each treatment group? | |||

| 10. Were other interventions avoided or similar in the groups (e.g., similar background treatments)? | |||

| 11. Were outcomes assessed using valid and reliable measures, implemented consistently across all study participants? | |||

| 12. Did the authors report that the sample size was sufficiently large to be able to detect a difference in the main outcome between groups with at least 80% power? | |||

| 13. Were outcomes reported or subgroups analyzed prespecified (i.e., identified before analyses were conducted)? | |||

| 14. Were all randomized participants analyzed in the group to which they were originally assigned, i.e., did they use an intention-to-treat analysis? |

| Quality Rating (Good, Fair, or Poor) |

|---|

| Rater #1 initials: |

| Rater #2 initials: |

| Additional Comments (If POOR, please state why): |

*CD, cannot determine; NA, not applicable; NR, not reported

Guidance for Assessing the Quality of Controlled Intervention Studies

The guidance document below is organized by question number from the tool for quality assessment of controlled intervention studies.

Question 1. Described as randomized

Was the study described as randomized? A study does not satisfy quality criteria as randomized simply because the authors call it randomized; however, it is a first step in determining if a study is randomized

Questions 2 and 3. Treatment allocation–two interrelated pieces

Adequate randomization: Randomization is adequate if it occurred according to the play of chance (e.g., computer generated sequence in more recent studies, or random number table in older studies). Inadequate randomization: Randomization is inadequate if there is a preset plan (e.g., alternation where every other subject is assigned to treatment arm or another method of allocation is used, such as time or day of hospital admission or clinic visit, ZIP Code, phone number, etc.). In fact, this is not randomization at all–it is another method of assignment to groups. If assignment is not by the play of chance, then the answer to this question is no. There may be some tricky scenarios that will need to be read carefully and considered for the role of chance in assignment. For example, randomization may occur at the site level, where all individuals at a particular site are assigned to receive treatment or no treatment. This scenario is used for group-randomized trials, which can be truly randomized, but often are "quasi-experimental" studies with comparison groups rather than true control groups. (Few, if any, group-randomized trials are anticipated for this evidence review.)

Allocation concealment: This means that one does not know in advance, or cannot guess accurately, to what group the next person eligible for randomization will be assigned. Methods include sequentially numbered opaque sealed envelopes, numbered or coded containers, central randomization by a coordinating center, computer-generated randomization that is not revealed ahead of time, etc. Questions 4 and 5. Blinding

Blinding means that one does not know to which group–intervention or control–the participant is assigned. It is also sometimes called "masking." The reviewer assessed whether each of the following was blinded to knowledge of treatment assignment: (1) the person assessing the primary outcome(s) for the study (e.g., taking the measurements such as blood pressure, examining health records for events such as myocardial infarction, reviewing and interpreting test results such as x ray or cardiac catheterization findings); (2) the person receiving the intervention (e.g., the patient or other study participant); and (3) the person providing the intervention (e.g., the physician, nurse, pharmacist, dietitian, or behavioral interventionist).

Generally placebo-controlled medication studies are blinded to patient, provider, and outcome assessors; behavioral, lifestyle, and surgical studies are examples of studies that are frequently blinded only to the outcome assessors because blinding of the persons providing and receiving the interventions is difficult in these situations. Sometimes the individual providing the intervention is the same person performing the outcome assessment. This was noted when it occurred.

Question 6. Similarity of groups at baseline

This question relates to whether the intervention and control groups have similar baseline characteristics on average especially those characteristics that may affect the intervention or outcomes. The point of randomized trials is to create groups that are as similar as possible except for the intervention(s) being studied in order to compare the effects of the interventions between groups. When reviewers abstracted baseline characteristics, they noted when there was a significant difference between groups. Baseline characteristics for intervention groups are usually presented in a table in the article (often Table 1).

Groups can differ at baseline without raising red flags if: (1) the differences would not be expected to have any bearing on the interventions and outcomes; or (2) the differences are not statistically significant. When concerned about baseline difference in groups, reviewers recorded them in the comments section and considered them in their overall determination of the study quality.

Questions 7 and 8. Dropout

"Dropouts" in a clinical trial are individuals for whom there are no end point measurements, often because they dropped out of the study and were lost to followup.

Generally, an acceptable overall dropout rate is considered 20 percent or less of participants who were randomized or allocated into each group. An acceptable differential dropout rate is an absolute difference between groups of 15 percentage points at most (calculated by subtracting the dropout rate of one group minus the dropout rate of the other group). However, these are general rates. Lower overall dropout rates are expected in shorter studies, whereas higher overall dropout rates may be acceptable for studies of longer duration. For example, a 6-month study of weight loss interventions should be expected to have nearly 100 percent followup (almost no dropouts–nearly everybody gets their weight measured regardless of whether or not they actually received the intervention), whereas a 10-year study testing the effects of intensive blood pressure lowering on heart attacks may be acceptable if there is a 20-25 percent dropout rate, especially if the dropout rate between groups was similar. The panels for the NHLBI systematic reviews may set different levels of dropout caps.

Conversely, differential dropout rates are not flexible; there should be a 15 percent cap. If there is a differential dropout rate of 15 percent or higher between arms, then there is a serious potential for bias. This constitutes a fatal flaw, resulting in a poor quality rating for the study.

Question 9. Adherence

Did participants in each treatment group adhere to the protocols for assigned interventions? For example, if Group 1 was assigned to 10 mg/day of Drug A, did most of them take 10 mg/day of Drug A? Another example is a study evaluating the difference between a 30-pound weight loss and a 10-pound weight loss on specific clinical outcomes (e.g., heart attacks), but the 30-pound weight loss group did not achieve its intended weight loss target (e.g., the group only lost 14 pounds on average). A third example is whether a large percentage of participants assigned to one group "crossed over" and got the intervention provided to the other group. A final example is when one group that was assigned to receive a particular drug at a particular dose had a large percentage of participants who did not end up taking the drug or the dose as designed in the protocol.

Question 10. Avoid other interventions

Changes that occur in the study outcomes being assessed should be attributable to the interventions being compared in the study. If study participants receive interventions that are not part of the study protocol and could affect the outcomes being assessed, and they receive these interventions differentially, then there is cause for concern because these interventions could bias results. The following scenario is another example of how bias can occur. In a study comparing two different dietary interventions on serum cholesterol, one group had a significantly higher percentage of participants taking statin drugs than the other group. In this situation, it would be impossible to know if a difference in outcome was due to the dietary intervention or the drugs.

Question 11. Outcome measures assessment

What tools or methods were used to measure the outcomes in the study? Were the tools and methods accurate and reliable–for example, have they been validated, or are they objective? This is important as it indicates the confidence you can have in the reported outcomes. Perhaps even more important is ascertaining that outcomes were assessed in the same manner within and between groups. One example of differing methods is self-report of dietary salt intake versus urine testing for sodium content (a more reliable and valid assessment method). Another example is using BP measurements taken by practitioners who use their usual methods versus using BP measurements done by individuals trained in a standard approach. Such an approach may include using the same instrument each time and taking an individual's BP multiple times. In each of these cases, the answer to this assessment question would be "no" for the former scenario and "yes" for the latter. In addition, a study in which an intervention group was seen more frequently than the control group, enabling more opportunities to report clinical events, would not be considered reliable and valid.

Question 12. Power calculation

Generally, a study's methods section will address the sample size needed to detect differences in primary outcomes. The current standard is at least 80 percent power to detect a clinically relevant difference in an outcome using a two-sided alpha of 0.05. Often, however, older studies will not report on power.

Question 13. Prespecified outcomes

Investigators should prespecify outcomes reported in a study for hypothesis testing–which is the reason for conducting an RCT. Without prespecified outcomes, the study may be reporting ad hoc analyses, simply looking for differences supporting desired findings. Investigators also should prespecify subgroups being examined. Most RCTs conduct numerous post hoc analyses as a way of exploring findings and generating additional hypotheses. The intent of this question is to give more weight to reports that are not simply exploratory in nature.

Question 14. Intention-to-treat analysis

Intention-to-treat (ITT) means everybody who was randomized is analyzed according to the original group to which they are assigned. This is an extremely important concept because conducting an ITT analysis preserves the whole reason for doing a randomized trial; that is, to compare groups that differ only in the intervention being tested. When the ITT philosophy is not followed, groups being compared may no longer be the same. In this situation, the study would likely be rated poor. However, if an investigator used another type of analysis that could be viewed as valid, this would be explained in the "other" box on the quality assessment form. Some researchers use a completers analysis (an analysis of only the participants who completed the intervention and the study), which introduces significant potential for bias. Characteristics of participants who do not complete the study are unlikely to be the same as those who do. The likely impact of participants withdrawing from a study treatment must be considered carefully. ITT analysis provides a more conservative (potentially less biased) estimate of effectiveness.

General Guidance for Determining the Overall Quality Rating of Controlled Intervention Studies

The questions on the assessment tool were designed to help reviewers focus on the key concepts for evaluating a study's internal validity. They are not intended to create a list that is simply tallied up to arrive at a summary judgment of quality.

Internal validity is the extent to which the results (effects) reported in a study can truly be attributed to the intervention being evaluated and not to flaws in the design or conduct of the study–in other words, the ability for the study to make causal conclusions about the effects of the intervention being tested. Such flaws can increase the risk of bias. Critical appraisal involves considering the risk of potential for allocation bias, measurement bias, or confounding (the mixture of exposures that one cannot tease out from each other). Examples of confounding include co-interventions, differences at baseline in patient characteristics, and other issues addressed in the questions above. High risk of bias translates to a rating of poor quality. Low risk of bias translates to a rating of good quality.

Fatal flaws: If a study has a "fatal flaw," then risk of bias is significant, and the study is of poor quality. Examples of fatal flaws in RCTs include high dropout rates, high differential dropout rates, no ITT analysis or other unsuitable statistical analysis (e.g., completers-only analysis).

Generally, when evaluating a study, one will not see a "fatal flaw;" however, one will find some risk of bias. During training, reviewers were instructed to look for the potential for bias in studies by focusing on the concepts underlying the questions in the tool. For any box checked "no," reviewers were told to ask: "What is the potential risk of bias that may be introduced by this flaw?" That is, does this factor cause one to doubt the results that were reported in the study?

NHLBI staff provided reviewers with background reading on critical appraisal, while emphasizing that the best approach to use is to think about the questions in the tool in determining the potential for bias in a study. The staff also emphasized that each study has specific nuances; therefore, reviewers should familiarize themselves with the key concepts.

Quality Assessment of Systematic Reviews and Meta-Analyses - Study Quality Assessment Tools

| Criteria | Yes | No | Other (CD, NR, NA)* |

|---|---|---|---|

| 1. Is the review based on a focused question that is adequately formulated and described? | |||

| 2. Were eligibility criteria for included and excluded studies predefined and specified? | |||

| 3. Did the literature search strategy use a comprehensive, systematic approach? | |||

| 4. Were titles, abstracts, and full-text articles dually and independently reviewed for inclusion and exclusion to minimize bias? | |||

| 5. Was the quality of each included study rated independently by two or more reviewers using a standard method to appraise its internal validity? | |||

| 6. Were the included studies listed along with important characteristics and results of each study? | |||

| 7. Was publication bias assessed? | |||

| 8. Was heterogeneity assessed? (This question applies only to meta-analyses.) |

Guidance for Quality Assessment Tool for Systematic Reviews and Meta-Analyses

A systematic review is a study that attempts to answer a question by synthesizing the results of primary studies while using strategies to limit bias and random error.424 These strategies include a comprehensive search of all potentially relevant articles and the use of explicit, reproducible criteria in the selection of articles included in the review. Research designs and study characteristics are appraised, data are synthesized, and results are interpreted using a predefined systematic approach that adheres to evidence-based methodological principles.

Systematic reviews can be qualitative or quantitative. A qualitative systematic review summarizes the results of the primary studies but does not combine the results statistically. A quantitative systematic review, or meta-analysis, is a type of systematic review that employs statistical techniques to combine the results of the different studies into a single pooled estimate of effect, often given as an odds ratio. The guidance document below is organized by question number from the tool for quality assessment of systematic reviews and meta-analyses.

Question 1. Focused question

The review should be based on a question that is clearly stated and well-formulated. An example would be a question that uses the PICO (population, intervention, comparator, outcome) format, with all components clearly described.

Question 2. Eligibility criteria

The eligibility criteria used to determine whether studies were included or excluded should be clearly specified and predefined. It should be clear to the reader why studies were included or excluded.

Question 3. Literature search

The search strategy should employ a comprehensive, systematic approach in order to capture all of the evidence possible that pertains to the question of interest. At a minimum, a comprehensive review has the following attributes:

- Electronic searches were conducted using multiple scientific literature databases, such as MEDLINE, EMBASE, Cochrane Central Register of Controlled Trials, PsychLit, and others as appropriate for the subject matter.

- Manual searches of references found in articles and textbooks should supplement the electronic searches.

Additional search strategies that may be used to improve the yield include the following:

- Studies published in other countries

- Studies published in languages other than English

- Identification by experts in the field of studies and articles that may have been missed

- Search of grey literature, including technical reports and other papers from government agencies or scientific groups or committees; presentations and posters from scientific meetings, conference proceedings, unpublished manuscripts; and others. Searching the grey literature is important (whenever feasible) because sometimes only positive studies with significant findings are published in the peer-reviewed literature, which can bias the results of a review.

In their reviews, researchers described the literature search strategy clearly, and ascertained it could be reproducible by others with similar results.

Question 4. Dual review for determining which studies to include and exclude

Titles, abstracts, and full-text articles (when indicated) should be reviewed by two independent reviewers to determine which studies to include and exclude in the review. Reviewers resolved disagreements through discussion and consensus or with third parties. They clearly stated the review process, including methods for settling disagreements.

Question 5. Quality appraisal for internal validity

Each included study should be appraised for internal validity (study quality assessment) using a standardized approach for rating the quality of the individual studies. Ideally, this should be done by at least two independent reviewers appraised each study for internal validity. However, there is not one commonly accepted, standardized tool for rating the quality of studies. So, in the research papers, reviewers looked for an assessment of the quality of each study and a clear description of the process used.

Question 6. List and describe included studies

All included studies were listed in the review, along with descriptions of their key characteristics. This was presented either in narrative or table format.

Question 7. Publication bias

Publication bias is a term used when studies with positive results have a higher likelihood of being published, being published rapidly, being published in higher impact journals, being published in English, being published more than once, or being cited by others.425,426 Publication bias can be linked to favorable or unfavorable treatment of research findings due to investigators, editors, industry, commercial interests, or peer reviewers. To minimize the potential for publication bias, researchers can conduct a comprehensive literature search that includes the strategies discussed in Question 3.

A funnel plot–a scatter plot of component studies in a meta-analysis–is a commonly used graphical method for detecting publication bias. If there is no significant publication bias, the graph looks like a symmetrical inverted funnel.

Reviewers assessed and clearly described the likelihood of publication bias.

Question 8. Heterogeneity

Heterogeneity is used to describe important differences in studies included in a meta-analysis that may make it inappropriate to combine the studies.427 Heterogeneity can be clinical (e.g., important differences between study participants, baseline disease severity, and interventions); methodological (e.g., important differences in the design and conduct of the study); or statistical (e.g., important differences in the quantitative results or reported effects).

Researchers usually assess clinical or methodological heterogeneity qualitatively by determining whether it makes sense to combine studies. For example:

- Should a study evaluating the effects of an intervention on CVD risk that involves elderly male smokers with hypertension be combined with a study that involves healthy adults ages 18 to 40? (Clinical Heterogeneity)

- Should a study that uses a randomized controlled trial (RCT) design be combined with a study that uses a case-control study design? (Methodological Heterogeneity)

Statistical heterogeneity describes the degree of variation in the effect estimates from a set of studies; it is assessed quantitatively. The two most common methods used to assess statistical heterogeneity are the Q test (also known as the X2 or chi-square test) or I2 test.

Reviewers examined studies to determine if an assessment for heterogeneity was conducted and clearly described. If the studies are found to be heterogeneous, the investigators should explore and explain the causes of the heterogeneity, and determine what influence, if any, the study differences had on overall study results.

Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies - Study Quality Assessment Tools

| Criteria | Yes | No | Other (CD, NR, NA)* |

|---|---|---|---|

| 1. Was the research question or objective in this paper clearly stated? | |||

| 2. Was the study population clearly specified and defined? | |||

| 3. Was the participation rate of eligible persons at least 50%? | |||

| 4. Were all the subjects selected or recruited from the same or similar populations (including the same time period)? Were inclusion and exclusion criteria for being in the study prespecified and applied uniformly to all participants? | |||

| 5. Was a sample size justification, power description, or variance and effect estimates provided? | |||

| 6. For the analyses in this paper, were the exposure(s) of interest measured prior to the outcome(s) being measured? | |||

| 7. Was the timeframe sufficient so that one could reasonably expect to see an association between exposure and outcome if it existed? | |||

| 8. For exposures that can vary in amount or level, did the study examine different levels of the exposure as related to the outcome (e.g., categories of exposure, or exposure measured as continuous variable)? | |||

| 9. Were the exposure measures (independent variables) clearly defined, valid, reliable, and implemented consistently across all study participants? | |||

| 10. Was the exposure(s) assessed more than once over time? | |||

| 11. Were the outcome measures (dependent variables) clearly defined, valid, reliable, and implemented consistently across all study participants? | |||

| 12. Were the outcome assessors blinded to the exposure status of participants? | |||

| 13. Was loss to follow-up after baseline 20% or less? | |||

| 14. Were key potential confounding variables measured and adjusted statistically for their impact on the relationship between exposure(s) and outcome(s)? |

Guidance for Assessing the Quality of Observational Cohort and Cross-Sectional Studies

The guidance document below is organized by question number from the tool for quality assessment of observational cohort and cross-sectional studies.

Question 1. Research question

Did the authors describe their goal in conducting this research? Is it easy to understand what they were looking to find? This issue is important for any scientific paper of any type. Higher quality scientific research explicitly defines a research question.

Questions 2 and 3. Study population

Did the authors describe the group of people from which the study participants were selected or recruited, using demographics, location, and time period? If you were to conduct this study again, would you know who to recruit, from where, and from what time period? Is the cohort population free of the outcomes of interest at the time they were recruited?

An example would be men over 40 years old with type 2 diabetes who began seeking medical care at Phoenix Good Samaritan Hospital between January 1, 1990 and December 31, 1994. In this example, the population is clearly described as: (1) who (men over 40 years old with type 2 diabetes); (2) where (Phoenix Good Samaritan Hospital); and (3) when (between January 1, 1990 and December 31, 1994). Another example is women ages 34 to 59 years of age in 1980 who were in the nursing profession and had no known coronary disease, stroke, cancer, hypercholesterolemia, or diabetes, and were recruited from the 11 most populous States, with contact information obtained from State nursing boards.

In cohort studies, it is crucial that the population at baseline is free of the outcome of interest. For example, the nurses' population above would be an appropriate group in which to study incident coronary disease. This information is usually found either in descriptions of population recruitment, definitions of variables, or inclusion/exclusion criteria.

You may need to look at prior papers on methods in order to make the assessment for this question. Those papers are usually in the reference list.

If fewer than 50% of eligible persons participated in the study, then there is concern that the study population does not adequately represent the target population. This increases the risk of bias.

Question 4. Groups recruited from the same population and uniform eligibility criteria

Were the inclusion and exclusion criteria developed prior to recruitment or selection of the study population? Were the same underlying criteria used for all of the subjects involved? This issue is related to the description of the study population, above, and you may find the information for both of these questions in the same section of the paper.

Most cohort studies begin with the selection of the cohort; participants in this cohort are then measured or evaluated to determine their exposure status. However, some cohort studies may recruit or select exposed participants in a different time or place than unexposed participants, especially retrospective cohort studies–which is when data are obtained from the past (retrospectively), but the analysis examines exposures prior to outcomes. For example, one research question could be whether diabetic men with clinical depression are at higher risk for cardiovascular disease than those without clinical depression. So, diabetic men with depression might be selected from a mental health clinic, while diabetic men without depression might be selected from an internal medicine or endocrinology clinic. This study recruits groups from different clinic populations, so this example would get a "no."

However, the women nurses described in the question above were selected based on the same inclusion/exclusion criteria, so that example would get a "yes."

Question 5. Sample size justification

Did the authors present their reasons for selecting or recruiting the number of people included or analyzed? Do they note or discuss the statistical power of the study? This question is about whether or not the study had enough participants to detect an association if one truly existed.