Portland Community College | Portland, Oregon

Core outcomes.

- Core Outcomes: Critical Thinking and Problem Solving

Think Critically and Imaginatively

- Engage the imagination to explore new possibilities.

- Formulate and articulate ideas.

- Recognize explicit and tacit assumptions and their consequences.

- Weigh connections and relationships.

- Distinguish relevant from non-relevant data, fact from opinion.

- Identify, evaluate and synthesize information (obtained through library, world-wide web, and other sources as appropriate) in a collaborative environment.

- Reason toward a conclusion or application.

- Understand the contributions and applications of associative, intuitive and metaphoric modes of reasoning to argument and analysis.

- Analyze and draw inferences from numerical models.

- Determine the extent of information needed.

- Access the needed information effectively and efficiently.

- Evaluate information and its sources critically.

- Incorporate selected information into one’s knowledge base.

- Understand the economic, legal, and social issues surrounding the use of information, and access and use information ethically and legally.

Problem-Solve

- Identify and define central and secondary problems.

- Research and analyze data relevant to issues from a variety of media.

- Select and use appropriate concepts and methods from a variety of disciplines to solve problems effectively and creatively.

- Form associations between disparate facts and methods, which may be cross-disciplinary.

- Identify and use appropriate technology to research, solve, and present solutions to problems.

- Understand the roles of collaboration, risk-taking, multi-disciplinary awareness, and the imagination in achieving creative responses to problems.

- Make a decision and take actions based on analysis.

- Interpret and express quantitative ideas effectively in written, visual, aural, and oral form.

- Interpret and use written, quantitative, and visual text effectively in presentation of solutions to problems.

| Performance Level | Sample Indicators |
|---|---|
| Limited demonstration or application of knowledge and skills. | Identifies the main problem, question at issue, or the source’s position. Identifies implicit aspects of the problem and addresses their relationship to each other. |
| Basic demonstration and application of knowledge and skills. | Identifies one’s own position on the issue, drawing support from experience and from information not available in assigned sources. Addresses more than one perspective, including perspectives drawn from outside information. Clearly distinguishes between fact and opinion and acknowledges value judgments. |
| Demonstrates comprehension and is able to apply essential knowledge and skill. | Identifies and addresses the validity of key assumptions that underlie the issue. Examines the evidence and the source of evidence. Relates cause and effect. Illustrates existing or potential consequences. Analyzes the scope and context of the issue, including an assessment of the audience of the analysis. |
| Demonstrates thorough, effective, and/or sophisticated application of knowledge and skills. | Identifies and discusses conclusions, implications, and consequences of issues, considering context, assumptions, data, and evidence. Objectively reflects upon own assertions. |


Classroom Q&A

With Larry Ferlazzo

In this EdWeek blog, an experiment in knowledge-gathering, Ferlazzo will address readers’ questions on classroom management, ELL instruction, lesson planning, and other issues facing teachers. Send your questions to [email protected].

Eight Instructional Strategies for Promoting Critical Thinking


(This is the first post in a three-part series.)

The new question-of-the-week is:

What is critical thinking and how can we integrate it into the classroom?

This three-part series will explore what critical thinking is, whether it can be specifically taught, and, if so, how teachers can do so in their classrooms.

Today’s guests are Dara Laws Savage, Patrick Brown, Meg Riordan, Ph.D., and Dr. PJ Caposey. Dara, Patrick, and Meg were also guests on my 10-minute BAM! Radio Show. You can also find a list of, and links to, previous shows here.

You might also be interested in The Best Resources On Teaching & Learning Critical Thinking In The Classroom.

Current Events

Dara Laws Savage is an English teacher at the Early College High School at Delaware State University, where she serves as a teacher, instructional coach, and lead mentor. Dara has been teaching for 25 years (career preparation, English, photography, yearbook, newspaper, and graphic design) and has presented nationally on project-based learning and technology integration:

There is so much going on right now and there is an overload of information for us to process. Did you ever stop to think how our students are processing current events? They see news feeds, hear news reports, and scan photos and posts, but are they truly thinking about what they are hearing and seeing?

I tell my students that my job is not to give them answers but to teach them how to think about what they read and hear. So what is critical thinking and how can we integrate it into the classroom? There are just as many definitions of critical thinking as there are people trying to define it. However, The Critical Thinking Consortium focuses on the tools to create a thinking-based classroom rather than on a definition: “Shape the climate to support thinking, create opportunities for thinking, build capacity to think, provide guidance to inform thinking.” By pairing these four criteria with current events, teachers can readily create learning spaces that thrive on thinking and keep students engaged.

One successful technique I use is the FIRE Write. Students are given a quote, a paragraph, an excerpt, or a photo from the headlines. Students are asked to Focus and respond to the selection for three minutes. Next, students are asked to Identify a phrase or section of the photo and write for two minutes. Third, students are asked to Reframe their response around a specific word, phrase, or section within their previous selection. Finally, students Exchange their thoughts with a classmate. Within the exchange, students also talk about how the selection connects to what we are covering in class.

There was a controversial Pepsi ad in 2017 involving Kendall Jenner and a protest with a police presence. The imagery in the ad was strikingly similar to a viral photo of a young woman standing opposite a police line. Using that image from a current event engaged my students and gave them the opportunity to think critically about events of the time.

Here are the two photos and a student response:

F - Focus on both photos and respond for three minutes

In the first picture, you see a strong and courageous black female, bravely standing in front of two officers in protest. She is risking her life to do so. Iesha Evans is simply proving to the world she does NOT mean less because she is black … and yet officers are there to stop her. She did not step down. In the picture below, you see Kendall Jenner handing a police officer a Pepsi. Maybe this wouldn’t be a big deal, except this was Pepsi’s weak, pathetic, and outrageous excuse of a commercial that belittles the whole movement of people fighting for their lives.

I - Identify a word or phrase, underline it, then write about it for two minutes

A white, privileged female in place of a fighting black woman was asking for trouble. A struggle we are continuously fighting every day, and they make a mockery of it. “I know what will work! Here Mr. Police Officer! Drink some Pepsi!” As if. Pepsi made a fool of themselves, and now their already dwindling fan base continues to ever shrink smaller.

R - Reframe your thoughts by choosing a different word, then write about that for one minute

You don’t know privilege until it’s gone. You don’t know privilege while it’s there—but you can and will be made accountable and aware. Don’t use it for evil. You are not stupid. Use it to do something. Kendall could’ve NOT done the commercial. Kendall could’ve released another commercial standing behind a black woman. Anything!

Exchange - Remember to discuss how this connects to our school song project and our previous discussions.

This connects two ways - 1) We want to convey a strong message. Be powerful. Show who we are. And Pepsi definitely tried. … Which leads to the second connection. 2) Not mess up and offend anyone, as had the one alma mater had been linked to black minstrels. We want to be amazing, but we have to be smart and careful and make sure we include everyone who goes to our school and everyone who may go to our school.

As a final step, students read and annotate the full article and compare it to their initial response.

Using current events and critical-thinking strategies like FIRE writing helps create a learning space where thinking is the goal rather than a score on a multiple-choice assessment. Critical-thinking skills can cross over to any of students’ other courses and into life outside the classroom. After all, we as teachers want to help the whole student be successful, and critical thinking is an important part of navigating life after they leave our classrooms.

‘Explore-Before-Explain’

Patrick Brown is the executive director of STEM and CTE for the Fort Zumwalt school district in Missouri and an experienced educator and author:

Planning for critical thinking focuses on teaching the most crucial science concepts, practices, and logical-thinking skills as well as the best use of instructional time. One way to ensure that lessons maintain a focus on critical thinking is to focus on the instructional sequence used to teach.

Explore-before-explain teaching is all about promoting critical thinking to better prepare students for the reality of their world. Having an explore-before-explain mindset means that, in our planning, we prioritize giving students firsthand experiences with data, allow students to construct evidence-based claims that focus on conceptual understanding, and challenge students to discuss and think about the why behind phenomena.

Just think of the critical thinking that has to occur for students to construct a scientific claim. 1) They need the opportunity to collect data, analyze it, and determine how to make sense of what the data may mean. 2) With data in hand, students can begin thinking about the validity and reliability of their experience and the information collected. 3) They can consider what differences, if any, they might see if they completed the investigation again. 4) They can scrutinize outlying data points, which may reflect either a true difference that merits further exploration or a misstep in the procedure, measuring device, or measurement. All of these intellectual activities help them form a more robust understanding and are evidence of their critical thinking.

In explore-before-explain teaching, all of these hard critical-thinking tasks come before teacher explanations of content. Whether we use discovery experiences, problem-based learning, or inquiry-based activities, strategies that are geared toward helping students construct understanding promote critical thinking because students learn content by doing the practices valued in the field to generate knowledge.

An Issue of Equity

Meg Riordan, Ph.D., is the chief learning officer at The Possible Project, an out-of-school program that collaborates with youth to build entrepreneurial skills and mindsets and provides pathways to careers and long-term economic prosperity. She has been in the field of education for over 25 years as a middle and high school teacher, school coach, college professor, regional director of N.Y.C. Outward Bound Schools, and director of external research with EL Education:

Although critical thinking often defies straightforward definition, most in the education field agree it consists of several components: reasoning, problem-solving, and decisionmaking, plus analysis and evaluation of information, such that multiple sides of an issue can be explored. It also includes dispositions and “the willingness to apply critical-thinking principles, rather than fall back on existing unexamined beliefs, or simply believe what you’re told by authority figures.”

Despite variation in definitions, critical thinking is nonetheless promoted as an essential outcome of students’ learning—we want to see students and adults demonstrate it across all fields, professions, and in their personal lives. Yet there is simultaneously a rationing of opportunities in schools for students of color, students from under-resourced communities, and other historically marginalized groups to deeply learn and practice critical thinking.

For example, many of our most underserved students spend class time filling out worksheets, which promotes high compliance but little engagement, inquiry, critical thinking, or creation of new ideas. At a time in our world when college and careers are critical for participation in society and the global, knowledge-based economy, far too many students struggle within classrooms and schools that reinforce low expectations and inequity.

If educators aim to prepare all students for an ever-evolving marketplace and develop skills that will be valued no matter what tomorrow’s jobs are, then we must move critical thinking to the forefront of classroom experiences. And educators must design learning to cultivate it.

So, what does that really look like?

Unpack and define critical thinking

To understand critical thinking, educators need to first unpack and define its components. What exactly are we looking for when we speak about reasoning or exploring multiple perspectives on an issue? How does problem-solving show up in English, math, science, art, or other disciplines—and how is it assessed? At Two Rivers, an EL Education school, the faculty identified five constructs of critical thinking, defined each, and created rubrics to generate a shared picture of quality for teachers and students. The rubrics were then adapted across grade levels to indicate students’ learning progressions.

At Avenues World School, critical thinking is one of the Avenues World Elements and is an enduring outcome embedded in students’ early experiences through 12th grade. For instance, a kindergarten student may be expected to “identify cause and effect in familiar contexts,” while an 8th grader should demonstrate the ability to “seek out sufficient evidence before accepting a claim as true,” “identify bias in claims and evidence,” and “reconsider strongly held points of view in light of new evidence.”

When faculty and students embrace a common vision of what critical thinking looks and sounds like and how it is assessed, educators can then explicitly design learning experiences that call for students to employ critical-thinking skills. This kind of work must occur across all schools and programs, especially those serving large numbers of students of color. As Linda Darling-Hammond asserts, “Schools that serve large numbers of students of color are least likely to offer the kind of curriculum needed to ... help students attain the [critical-thinking] skills needed in a knowledge work economy.”

So, what can it look like to create those kinds of learning experiences?

Designing experiences for critical thinking

After defining a shared understanding of “what” critical thinking is and “how” it shows up across multiple disciplines and grade levels, it is essential to create learning experiences that impel students to cultivate, practice, and apply these skills. There are several levers that offer pathways for teachers to promote critical thinking in lessons:

1. Choose Compelling Topics: Keep it relevant

A key Common Core State Standard asks students to “write arguments to support claims in an analysis of substantive topics or texts using valid reasoning and relevant and sufficient evidence.” That might not sound exciting or culturally relevant. But a learning experience designed for a 12th grade humanities class engaged learners in a compelling topic—policing in America—to analyze and evaluate multiple texts (including primary sources) and share the reasoning for their perspectives through discussion and writing. Students grappled with ideas and their beliefs and employed deep critical-thinking skills to develop arguments for their claims. Embedding critical-thinking skills in curriculum that students care about and connect with can ignite powerful learning experiences.

2. Make Local Connections: Keep it real

At The Possible Project, an out-of-school-time program designed to promote entrepreneurial skills and mindsets, students in a recent summer online program (modified from in-person due to COVID-19) explored the impact of COVID-19 on their communities and local BIPOC-owned businesses. They learned interviewing skills through a partnership with Everyday Boston, conducted virtual interviews with entrepreneurs, evaluated information from their interviews and local data, and examined their previously held beliefs. They created blog posts and videos to reflect on their learning and consider how their mindsets had changed as a result of the experience. In this way, we can design powerful community-based learning and invite students into productive struggle with multiple perspectives.

3. Create Authentic Projects: Keep it rigorous

At Big Picture Learning schools, students engage in internship-based learning experiences as a central part of their schooling. Their school-based adviser and internship-based mentor support them in developing real-world projects that promote deeper learning and critical-thinking skills. Such authentic experiences teach “young people to be thinkers, to be curious, to get from curiosity to creation … and it helps students design a learning experience that answers their questions, [providing an] opportunity to communicate it to a larger audience—a major indicator of postsecondary success.” Even in a remote environment, we can design projects that ask more of students than rote memorization and that spark critical thinking.

Our call to action is this: As educators, we need to make opportunities for critical thinking available not only to the affluent or those fortunate enough to be placed in advanced courses. The tools are available; let’s use them. Let’s interrogate our current curriculum and design learning experiences that engage all students in real, relevant, and rigorous experiences that require critical thinking and prepare them for promising postsecondary pathways.

Critical Thinking & Student Engagement

Dr. PJ Caposey is an award-winning educator, keynote speaker, consultant, and author of seven books who currently serves as the superintendent of schools for the award-winning Meridian CUSD 223 in northwest Illinois. You can find PJ on most social-media platforms as MCUSDSupe:

When I start my keynote on student engagement, I invite two people up on stage and give them each five paper balls to shoot at a garbage can also conveniently placed on stage. Contestant One shoots their shot, and the audience gives approval. Four out of five is a heckuva score. Then just before Contestant Two shoots, I blindfold them and start moving the garbage can back and forth. I usually try to ensure that they can at least make one of their shots. Nobody is successful in this unfair environment.

I thank them and send them back to their seats and then explain that this little activity was akin to student engagement. While we all know we want student engagement, we are shooting at different targets. More importantly, for teachers, it is nearly impossible to hit a target that is moving and that they cannot see.

Within the world of education and particularly as educational leaders, we have failed to simplify what student engagement looks like, and it is impossible to define or articulate what student engagement looks like if we cannot clearly articulate what critical thinking is and looks like in a classroom. Because, simply, without critical thought, there is no engagement.

The good news here is that critical thought has been defined and placed into taxonomies for decades already. This is not something new and not something that needs to be redefined. I am a Bloom’s person, but there is nothing wrong with DOK or some of the other taxonomies, either. To be precise, I am a huge fan of Daggett’s Rigor and Relevance Framework. I have used that as a core element of my practice for years, and it has shaped who I am as an instructional leader.

So, in order to explain critical thought, a teacher or a leader must become familiar with these tried-and-true taxonomies. Easy, right? Yes, sort of. The issue is not understanding what critical thought is; it is the ability to integrate it into the classroom. In order to do so, there are four key steps every educator must take.

PLANNING

- Integrating critical thought/rigor into a lesson does not happen by chance; it happens by design. Planning for critical thought and engagement is much different from planning for a traditional lesson. In order to plan for kids to think critically, you have to provide a base of knowledge and excellent prompts that allow them to explore their own thinking in order to analyze, evaluate, or synthesize information.

- SIDE NOTE – Bloom’s verbs are a great way to start when writing objectives, but true planning will take you deeper than this.

QUESTIONING

- If the questions and prompts given in a classroom have correct answers or if the teacher ends up answering their own questions, the lesson will lack critical thought and rigor.

- Script five questions forcing higher-order thought prior to every lesson. Experienced teachers may not feel they need this, but it helps to create an effective habit.

ASSESSMENT

- If lessons are rigorous and assessments are not, students will do well on their assessments, and that may not be an accurate representation of the knowledge and skills they have mastered. If lessons are easy and assessments are rigorous, the exact opposite will happen. When deciding to increase critical thought, it must happen in all three phases of the game: planning, instruction, and assessment.

TALK TIME / CONTROL

- To increase rigor, the teacher must DO LESS. This feels counterintuitive but is accurate. Rigorous lessons involving tons of critical thought must allow students to work on their own, collaborate with peers, and connect their ideas. This cannot happen in a room that is silent except for the teacher talking. In order to increase rigor, decrease talk time and become comfortable with less control. Asking questions and giving prompts that have no single correct answer also means less control. This is a tough ask for some teachers. Put differently, if you assign one assignment and get 30 very similar products, you have most likely assigned a low-rigor recipe. If you assign one assignment and get multiple varied products, then the students have had a chance to think deeply, and you have successfully integrated critical thought into your classroom.

Thanks to Dara, Patrick, Meg, and PJ for their contributions!

Please feel free to leave a comment with your reactions to the topic or directly to anything that has been said in this post.

Consider contributing a question to be answered in a future post. You can send one to me at [email protected]. When you send it in, let me know if I can use your real name if it’s selected or if you’d prefer remaining anonymous and have a pseudonym in mind.

You can also contact me on Twitter at @Larryferlazzo.

Education Week has published a collection of posts from this blog, along with new material, in an e-book form. It’s titled Classroom Management Q&As: Expert Strategies for Teaching.

Just a reminder: you can subscribe and receive updates from this blog via email (the RSS feed for this blog, and for all Ed Week articles, has been changed by the new redesign; new ones won’t be available until February). And if you missed any of the highlights from the first nine years of this blog, you can see a categorized list below.

- This Year’s Most Popular Q&A Posts

- Race & Racism in Schools

- School Closures & the Coronavirus Crisis

- Classroom-Management Advice

- Best Ways to Begin the School Year

- Best Ways to End the School Year

- Student Motivation & Social-Emotional Learning

- Implementing the Common Core

- Facing Gender Challenges in Education

- Teaching Social Studies

- Cooperative & Collaborative Learning

- Using Tech in the Classroom

- Student Voices

- Parent Engagement in Schools

- Teaching English-Language Learners

- Reading Instruction

- Writing Instruction

- Education Policy Issues

- Differentiating Instruction

- Math Instruction

- Science Instruction

- Advice for New Teachers

- Author Interviews

- Entering the Teaching Profession

- The Inclusive Classroom

- Learning & the Brain

- Administrator Leadership

- Teacher Leadership

- Relationships in Schools

- Professional Development

- Instructional Strategies

- Best of Classroom Q&A

- Professional Collaboration

- Classroom Organization

- Mistakes in Education

- Project-Based Learning

I am also creating a Twitter list including all contributors to this column.

The opinions expressed in Classroom Q&A With Larry Ferlazzo are strictly those of the author(s) and do not reflect the opinions or endorsement of Editorial Projects in Education, or any of its publications.


Essential Learning Outcomes: Critical/Creative Thinking

- Civic Responsibility

- Critical/Creative Thinking

- Cultural Sensitivity

- Information Literacy

- Oral Communication

- Quantitative Reasoning

- Written Communication

- Diversity, Equity & Inclusion

Description

Guide to Critical/Creative Thinking

Intended Learning Outcome:

Analyze, evaluate, and synthesize information in order to consider problems/ideas and transform them in innovative or imaginative ways (see below for definitions).

Assessment may include but is not limited to the following criteria and intended outcomes:

Analyze problems/ideas critically and/or creatively

- Formulates appropriate questions to consider problems/issues

- Evaluates costs and benefits of a solution

- Identifies possible solutions to problems or resolution to issues

- Applies innovative and imaginative approaches to problems/ideas

Synthesize information/ideas into a coherent whole

- Seeks and compares information that leads to informed decisions/opinions

- Applies fact and opinion appropriately

- Expands upon ideas to foster new lines of inquiry

- Synthesizes ideas into a coherent whole

Evaluate synthesized information in order to transform problems/ideas in innovative or imaginative ways

- Applies synthesized information to inform effective decisions

- Experiments with creating a novel idea, question, or product

- Uses new approaches and takes appropriate risks without going beyond the guidelines of the assignment

- Evaluates and reflects on the decision through a process that takes into account the complexities of an issue

From the Association of American Colleges & Universities (AAC&U) LEAP outcomes and VALUE rubrics: Critical thinking is a habit of mind characterized by the comprehensive exploration of issues, ideas, artifacts, and events before accepting or formulating an opinion or conclusion.

Creative thinking is both the capacity to combine or synthesize existing ideas, images, or expertise in original ways and the experience of thinking, reacting, and working in an imaginative way characterized by a high degree of innovation, divergent thinking, and risk taking.

Elements, excerpts, and ideas borrowed with permission from Assessing Outcomes and Improving Achievement: Tips and Tools for Using Rubrics, edited by Terrel L. Rhodes. Copyright 2010 by the Association of American Colleges and Universities.

How to Align - Critical/Creative Thinking

- Critical/Creative Thinking ELO Tutorial

Critical/Creative Thinking Rubric

Analyze, evaluate, and synthesize information in order to consider problems/ideas and transform them in innovative or imaginative ways.

| Criteria | Inadequate | Developing | Competent | Proficient |
|---|---|---|---|---|
| Analyze problems/ideas critically and/or creatively | Does not analyze problems/ideas | Analyzes problems/ideas but not critically and/or creatively | Begins to analyze the problems/ideas critically and/or creatively | Analyzes the problems/ideas critically and/or creatively |
| Synthesize information/ideas into a coherent whole | Does not synthesize information/ideas | Begins to synthesize information/ideas but not into a coherent whole | Synthesizes information/ideas but not into a coherent whole | Synthesizes information/ideas into a coherent whole |
| Evaluate synthesized information in order to transform problems/ideas in innovative or imaginative ways | Does not evaluate synthesized information in order to transform problems/ideas | Evaluates synthesized information and begins to transform problems/ideas | Evaluates synthesized information and transforms problems/ideas | Evaluates synthesized information and transforms problems/ideas, accounting for their complexities or nuances |

Elements, excerpts, and ideas borrowed with permission from Assessing Outcomes and Improving Achievement: Tips and Tools for Using Rubrics, edited by Terrel L. Rhodes. Copyright 2010 by the Association of American Colleges and Universities.

Sample Assignments

- Cleveland Museum of Art tour (Just Mercy) Assignment contributed by Chris Wolken, Matt Lafferty, Luke Schuleter and Sara Clark.

- Disaster Analysis: This assignment was created by faculty at Durham College in Canada. Its purpose is to evaluate students’ ability to think critically about how natural disasters are portrayed in the media.

- Laboratory Report-Critical Thinking Assignment contributed by Anne Distler.

- (Re)Imaginings assignment ENG 1020 Assignment contributed by Sara Fuller.

- Sustainability Project-Part 1 Waste Journal Assignment contributed by Anne Distler.

- Sustainability Project-Part 2 Research Assignment contributed by Anne Distler.

- Sustainability Project-Part 3 Waste Journal Continuation Assignment contributed by Anne Distler.

- Sustainability Project-Part 4 Reflection Assignment contributed by Anne Distler.

- Reconstructed Landscapes (VCPH) Assignment contributed by Jonathan Wayne

- Book Cover Design (VCIL) Assignment contributed by George Kopec


- Last Updated: Jan 8, 2024 12:20 PM

- URL: https://libguides.tri-c.edu/Essential

Learning outcomes and critical thinking – good intentions in conflict

- Studies in Higher Education 44(2):1-11

- CC BY-NC-ND 4.0

- Högskolan i Borås


- DOI: 10.1080/03075079.2018.1486813

- Corpus ID: 51996395


- Martin G. Erikson , Malgorzata Erikson

- Published in Studies in Higher Education 19 June 2018

- Education, Philosophy



What is Critical Thinking?

Critical thinking is the ability to think clearly and rationally, understanding the logical connection between ideas. Critical thinking has been the subject of much debate and thought since the time of early Greek philosophers such as Plato and Socrates and has continued to be a subject of discussion into the modern age, for example, the ability to recognise fake news.

Critical thinking might be described as the ability to engage in reflective and independent thinking.

In essence, critical thinking requires you to use your ability to reason. It is about being an active learner rather than a passive recipient of information.

Critical thinkers rigorously question ideas and assumptions rather than accepting them at face value. They will always seek to determine whether the ideas, arguments and findings represent the entire picture and are open to finding that they do not.

Critical thinkers will identify, analyse and solve problems systematically rather than by intuition or instinct.

Someone with critical thinking skills can:

- Understand the links between ideas.

- Determine the importance and relevance of arguments and ideas.

- Recognise, build and appraise arguments.

- Identify inconsistencies and errors in reasoning.

- Approach problems in a consistent and systematic way.

- Reflect on the justification of their own assumptions, beliefs and values.

Critical thinking is thinking about things in certain ways so as to arrive at the best possible solution in the circumstances that the thinker is aware of. In more everyday language, it is a way of thinking about whatever is presently occupying your mind so that you come to the best possible conclusion.

Critical thinking is a way of thinking about particular things at a particular time. It is not the accumulation of facts and knowledge, or something that you learn once and then use in that form forever, such as the nine times table you learn and use in school.

The Skills We Need for Critical Thinking

The skills that we need in order to be able to think critically are varied and include observation, analysis, interpretation, reflection, evaluation, inference, explanation, problem solving, and decision making.

Specifically, we need to be able to:

- Think about a topic or issue in an objective and critical way.

- Identify the different arguments that exist on a particular issue.

- Evaluate a point of view to determine how strong or valid it is.

- Recognise any weaknesses or negative points in the evidence or argument.

- Notice what implications there might be behind a statement or argument.

- Provide structured reasoning and support for an argument that we wish to make.

The Critical Thinking Process

You should be aware that none of us think critically all the time.

Sometimes we think in almost any way but critically, for example when our self-control is affected by anger, grief or joy or when we are feeling just plain ‘bloody minded’.

On the other hand, the good news is that, because our critical thinking ability varies with our current mindset, most of the time we can improve it by developing certain routine habits and applying them to every problem we face.

Once you understand the theory of critical thinking, improving your critical thinking skills takes persistence and practice.

Try this simple exercise to help you to start thinking critically.

Think of something that someone has recently told you. Then ask yourself the following questions:

Who said it?

Someone you know? Someone in a position of authority or power? Does it matter who told you this?

What did they say?

Did they give facts or opinions? Did they provide all the facts? Did they leave anything out?

Where did they say it?

Was it in public or in private? Did other people have a chance to respond and provide an alternative account?

When did they say it?

Was it before, during or after an important event? Is timing important?

Why did they say it?

Did they explain the reasoning behind their opinion? Were they trying to make someone look good or bad?

How did they say it?

Were they happy or sad, angry or indifferent? Did they write it or say it? Could you understand what was said?

What are you Aiming to Achieve?

One of the most important aspects of critical thinking is to decide what you are aiming to achieve and then make a decision based on a range of possibilities.

Once you have clarified that aim for yourself, you should use it as the starting point in all future situations requiring thought and, possibly, further decision making. Where needed, make your workmates, family or those around you aware of your intention to pursue this goal. You must then discipline yourself to keep on track until changing circumstances mean you have to revisit the start of the decision making process.

However, there are things that get in the way of simple decision making. We all carry with us a range of likes and dislikes, learnt behaviours and personal preferences developed throughout our lives; they are the hallmarks of being human. A major contribution to ensuring we think critically is to be aware of these personal characteristics, preferences and biases and make allowance for them when considering possible next steps, whether they are at the pre-action consideration stage or as part of a rethink caused by unexpected or unforeseen impediments to continued progress.

The more clearly we are aware of ourselves, our strengths and weaknesses, the more likely our critical thinking will be productive.

The Benefit of Foresight

Perhaps the most important element of thinking critically is foresight.

Few of the decisions we make and implement prove disastrous if we later find reasons to abandon them. However, our decision making will be far better, and more likely to lead to success, if, when we reach a tentative conclusion, we pause and consider its impact on the people and activities around us.

The elements needing consideration are generally numerous and varied. In many cases, consideration of one element from a different perspective will reveal potential dangers in pursuing our decision.

For instance, moving a business activity to a new location may improve potential output considerably but it may also lead to the loss of skilled workers if the distance moved is too great. Which of these is the more important consideration? Is there some way of lessening the conflict?

These are the sorts of problems that may arise from incomplete critical thinking, a demonstration perhaps of the critical importance of good critical thinking.


In Summary:

Critical thinking is aimed at achieving the best possible outcomes in any situation. To achieve this, it must involve gathering and evaluating information from as many different sources as possible.

Critical thinking requires a clear, often uncomfortable, assessment of your personal strengths, weaknesses and preferences and their possible impact on decisions you may make.

Critical thinking requires the development and use of foresight as far as this is possible. As Doris Day sang, “the future’s not ours to see”.

Implementing decisions that arise from critical thinking must take into account an assessment of possible outcomes and ways of avoiding potentially negative outcomes, or at least lessening their impact.

Critical thinking involves reviewing the results of the application of decisions made and implementing change where possible.

It might be thought that we are overextending our demands on critical thinking in expecting that it can help to construct focused meaning rather than examining the information given and the knowledge we have acquired to see if we can, if necessary, construct a meaning that will be acceptable and useful.

After all, almost no information we have available to us, either externally or internally, carries any guarantee of its life or appropriateness. Neat step-by-step instructions may provide some sort of trellis on which our basic understanding of critical thinking can blossom, but they do not and cannot provide any assurance of certainty, utility or longevity.


Essential Learning Outcomes Resources


Critical Thinking


The ability to formulate an effective, balanced perspective on an issue or topic.

- Bezanilla, María José, et al. (2019). Methodologies for teaching-learning critical thinking in higher education: The teacher’s view

- Bjerkvik, Liv; Hilli, Yvonne. (2019). Reflective writing in undergraduate clinical nursing education: A literature review

- Cooke, Lori, Stroup, Harrington. (2019). Operationalizing the concept of critical thinking for student learning outcome development

- D’alessio, Fernando A. et al. (2019). Studying the impact of critical thinking on the academic performance of executive MBA students

- Janssen, Eva M., et al. (2019). Training higher education teachers’ critical thinking and attitudes towards teaching it

- Morris, Richard, et al. (2019). Effectiveness of two methods for teaching critical thinking to communication sciences and disorders undergraduates

- Plotnikova, N. F.; Strukov, E. N. (2019). Integration of teamwork and critical thinking skills in the process of teaching students

- Stephenson, Norda, et al. (2019). Impact of peer-led team learning and the science writing and workshop template on the critical thinking skills of first-year chemistry students

- Venugopalan, Murali. (2019). Building critical thinking skills through literature

- Yusuf, Nur Muthmainnah. (2019). Optimizing critical thinking skill through peer editing technique in teaching writing

- Zucker, Andrew. (2019). Using critical thinking to counter misinformation

- Center for Teaching Thinking (CTT)

- Foundation for Critical Thinking

- Critical Thinking Value Rubrics (AAC&U)

- Stockton Institute for Faculty Development


- Last Updated: Sep 13, 2023 8:31 AM

- 101 Vera King Farris Drive

- Galloway, NJ 08205-9411

- (609) 652-4346


Writing Student Learning Outcomes

Student learning outcomes state what students are expected to know or be able to do upon completion of a course or program. Course learning outcomes may contribute to, or map to, program learning outcomes, and are required in group instruction course syllabi.

At both the course and program level, student learning outcomes should be clear, observable and measurable, and reflect what will be included in the course or program requirements (assignments, exams, projects, etc.). Typically, there are 3 to 7 course learning outcomes and 3 to 7 program learning outcomes.

When submitting learning outcomes for course or program approvals, or assessment planning and reporting, please:

- Begin with a verb (exclude any introductory text and the phrase “Students will…”, as this is assumed)

- Limit the length of each learning outcome to 400 characters

- Exclude special characters (e.g., accents, umlauts, ampersands, etc.)

- Exclude special formatting (e.g., bullets, dashes, numbering, etc.)
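Several of the submission rules above are mechanical enough to check before uploading outcomes to a campus system. The Python sketch below is hypothetical and not the validation any campus system actually performs: the function name `check_outcome`, the short list of vague verbs (taken from the terms this guide calls not specific enough to be measurable), and the decision to treat every non-ASCII character and every ampersand as a "special character" are all assumptions. It cannot confirm that an outcome begins with a verb; it only flags the "Students will" lead-in and a few vague opening verbs.

```python
import re

# Assumed cap, taken from the guide's 400-character limit.
MAX_CHARS = 400

# Terms the guide calls "generally not specific enough to be measurable".
# This short list is illustrative, not exhaustive.
VAGUE_VERBS = {"know", "understand", "learn", "appreciate"}

# Assumed pattern for leftover list formatting: a dash, asterisk, bullet,
# or a number such as "1." or "2)" followed by whitespace at the start.
LIST_MARKER = re.compile(r"([-*\u2022]|\d+[.)])\s")


def check_outcome(text: str) -> list[str]:
    """Return the problems found in one learning outcome (empty list if none)."""
    problems = []
    outcome = text.strip()

    if len(outcome) > MAX_CHARS:
        problems.append(f"longer than {MAX_CHARS} characters")

    # The "Students will..." lead-in is assumed, so it should be dropped.
    if outcome.lower().startswith("students will"):
        problems.append("drop the 'Students will...' lead-in")

    # Only the first word is inspected; this is a rough heuristic.
    words = outcome.split()
    first_word = words[0].lower() if words else ""
    if first_word in VAGUE_VERBS:
        problems.append(f"'{first_word}' is not specific enough to be measurable")

    # Assumption: accents, umlauts and any other non-ASCII character,
    # plus "&", count as special characters.
    if not outcome.isascii() or "&" in outcome:
        problems.append("contains special characters")

    if LIST_MARKER.match(outcome):
        problems.append("contains list formatting")

    return problems


if __name__ == "__main__":
    samples = [
        "Apply important chemical concepts and principles to draw conclusions about chemical reactions",
        "Understand the history of western culture",
        "- Analyze art & design",
    ]
    for sample in samples:
        print(sample[:40], "->", check_outcome(sample) or "ok")
```

Because the sketch inspects only the first word, it misses a vague verb that follows "Students will"; a fuller check would strip that lead-in before looking at the verb.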


Steps for Writing Outcomes

The following are recommended steps for writing clear, observable and measurable student learning outcomes. In general, use student-focused language, begin with action verbs and ensure that the learning outcomes demonstrate actionable attributes.

1. Begin with an Action Verb

Begin with an action verb that denotes the level of learning expected. Terms such as know, understand, learn and appreciate are generally not specific enough to be measurable. Levels of learning and associated verbs may include the following:

- Remembering and understanding: recall, identify, label, illustrate, summarize.

- Applying and analyzing: use, differentiate, organize, integrate, apply, solve, analyze.

- Evaluating and creating: monitor, test, judge, produce, revise, compose.

Consult Bloom’s Revised Taxonomy (below) for more details. For additional sample action verbs, consult this list from The Centre for Learning, Innovation & Simulation at The Michener Institute of Education at UHN.

2. Follow with a Statement

- Identify and summarize the important features of major periods in the history of western culture

- Apply important chemical concepts and principles to draw conclusions about chemical reactions

- Demonstrate knowledge about the significance of current research in the field of psychology by writing a research paper

- Length – Should be no more than 400 characters.

*Note: Any special characters (e.g., accents, umlauts, ampersands, etc.) and formatting (e.g., bullets, dashes, numbering, etc.) will need to be removed when submitting learning outcomes through HelioCampus Assessment and Credentialing (formerly AEFIS) and other digital campus systems.

Revised Bloom’s Taxonomy of Learning: The “Cognitive” Domain

To the right: find a sampling of verbs that represent learning at each level. Find additional action verbs.

*Text adapted from: Bloom, B.S. (Ed.) 1956. Taxonomy of Educational Objectives: The classification of educational goals. Handbook 1, Cognitive Domain. New York.

Anderson, L.W. (Ed.), Krathwohl, D.R. (Ed.), Airasian, P.W., Cruikshank, K.A., Mayer, R.E., Pintrich, P.R., Raths, J., & Wittrock, M.C. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s Taxonomy of Educational Objectives (Complete edition). New York: Longman.

Examples of Learning Outcomes

Academic Program Learning Outcomes

The following examples of academic program student learning outcomes come from a variety of academic programs across campus, and are organized in four broad areas: 1) contextualization of knowledge; 2) praxis and technique; 3) critical thinking; and, 4) research and communication.

Student learning outcomes for each UW-Madison undergraduate and graduate academic program can be found in Guide. Click on the program of your choosing to find its designated learning outcomes.


Contextualization of Knowledge

Students will…

- identify, formulate and solve problems using appropriate information and approaches.

- demonstrate their understanding of major theories, approaches, concepts, and current and classical research findings in the area of concentration.

- apply knowledge of mathematics, chemistry, physics, and materials science and engineering principles to materials and materials systems.

- demonstrate an understanding of the basic biology of microorganisms.

Praxis and Technique

- utilize the techniques, skills and modern tools necessary for practice.

- demonstrate professional and ethical responsibility.

- appropriately apply laws, codes, regulations, architectural and interiors standards that protect the health and safety of the public.

Critical Thinking

- recognize, describe, predict, and analyze systems behavior.

- evaluate evidence to determine and implement best practice.

- examine technical literature, resolve ambiguity and develop conclusions.

- synthesize knowledge and use insight and creativity to better understand and improve systems.

Research and Communication

- retrieve, analyze, and interpret the professional and lay literature providing information to both professionals and the public.

- propose original research: outlining a plan, assembling the necessary protocol, and performing the original research.

- design and conduct experiments, and analyze and interpret data.

- write clear and concise technical reports and research articles.

- communicate effectively through written reports, oral presentations and discussion.

- guide, mentor and support peers to achieve excellence in practice of the discipline.

- work in multi-disciplinary teams and provide leadership on materials-related problems that arise in multi-disciplinary work.

Course Learning Outcomes

- identify, formulate and solve integrative chemistry problems. (Chemistry)

- build probability models to quantify risks of an insurance system, and use data and technology to make appropriate statistical inferences. (Actuarial Science)

- use basic vector, raster, 3D design, video and web technologies in the creation of works of art. (Art)

- apply differential calculus to model rates of change in time of physical and biological phenomena. (Math)

- identify characteristics of certain structures of the body and explain how structure governs function. (Human Anatomy lab)

- calculate the magnitude and direction of magnetic fields created by moving electric charges. (Physics)

Additional Resources

- Bloom’s Taxonomy

- The Six Facets of Understanding – Wiggins, G. & McTighe, J. (2005). Understanding by Design (2nd ed.). ASCD

- Taxonomy of Significant Learning – Fink, L.D. (2003). A Self-Directed Guide to Designing Courses for Significant Learning. Jossey-Bass

- College of Agricultural & Life Sciences Undergraduate Learning Outcomes

- College of Letters & Science Undergraduate Learning Outcomes


- J Athl Train

- v.38(3); Jul-Sep 2003

Active Learning Strategies to Promote Critical Thinking

Stacy E. Walker, PhD, ATC, provided conception and design; acquisition and analysis and interpretation of the data; and drafting, critical revision, and final approval of the article.

Objective:

To provide a brief introduction to the definition and disposition to think critically along with active learning strategies to promote critical thinking.

Data Sources:

I searched MEDLINE and Educational Resources Information Center (ERIC) from 1933 to 2002 for literature related to critical thinking, the disposition to think critically, questioning, and various critical-thinking pedagogic techniques.

Data Synthesis:

The development of critical thinking has been the topic of many educational articles recently. Numerous instructional methods exist to promote thought and active learning in the classroom, including case studies, discussion methods, written exercises, questioning techniques, and debates. Three methods—questioning, written exercises, and discussion and debates—are highlighted.

Conclusions/Recommendations:

The definition of critical thinking, the disposition to think critically, and different teaching strategies are featured. Although not appropriate for all subject matter and classes, these learning strategies can be used and adapted to facilitate critical thinking and active participation.

The development of critical thinking (CT) has been a focus of educators at every level of education for years. Imagine a certified athletic trainer (ATC) who does not consider all of the injury options when performing an assessment or an ATC who fails to consider using any new rehabilitation techniques because the ones used for years have worked. Envision ATCs who are unable to react calmly during an emergency because, although they designed the emergency action plan, they never practiced it or mentally prepared for an emergency. These are all examples of situations in which ATCs must think critically.

Presently, athletic training educators are teaching many competencies and proficiencies to entry-level athletic training students. As Davies 1 pointed out, CT is needed in clinical decision making because of the many changes occurring in education, technology, and health care reform. Yet little information exists in the athletic training literature regarding CT and methods to promote thought. Fuller, 2 using the Bloom taxonomy, classified learning objectives, written assignments, and examinations as CT and non-CT. Athletic training educators fostered more CT in their learning objectives and written assignments than in examinations. The disposition of athletic training students to think critically exists but is weak. Leaver-Dunn et al 3 concluded that teaching methods that promote the various components of CT should be used. My purpose is to provide a brief introduction to the definition and disposition to think critically along with active learning strategies to promote CT.

DEFINITION OF CRITICAL THINKING

Four commonly referenced definitions of critical thinking are provided in Table 1. All of these definitions describe an individual who is actively engaged in the thought process. Not only is this person evaluating, analyzing, and interpreting the information, he or she is also analyzing inferences and assumptions made regarding that information. The use of CT skills such as analysis of inferences and assumptions shows involvement in the CT process. These cognitive skills are employed to form a judgment. Reflective thinking, defined by Dewey 8 as the type of thinking that consists of turning a subject over in the mind and giving it serious and consecutive consideration, can be used to evaluate the quality of judgment(s) made. 9 Unfortunately, not everyone uses CT when solving problems. Therefore, in order to think critically, there must be a certain amount of self-awareness and other characteristics present to enable a person to explain the analysis and interpretation and to evaluate any inferences made.

Table 1. Various Definitions of Critical Thinking

DISPOSITION TO THINK CRITICALLY

Recently, researchers have begun to investigate the relationship between the disposition to think critically and CT skills. Many believe that in order to develop CT skills, the disposition to think critically must be nurtured as well. 4 , 10 – 12 Although research related to the disposition to think critically has recently increased, as far back as 1933 Dewey 8 argued that possession of knowledge is no guarantee of the ability to think well but that an individual must desire to think. Open-mindedness, wholeheartedness, and responsibility were 3 of the attitudes he felt were important traits of character to develop the habit of thinking. 8

More recently, the American Philosophical Association Delphi report on critical thinking 7 was released in 1990. This report resulted from a questionnaire regarding CT completed by a cross-disciplinary panel of experts from the United States and Canada. Findings included continued support for the theory that to develop CT, an individual must possess and use certain dispositional characteristics. Based upon the dispositional phrases, the California Critical Thinking Dispositional Inventory 13 was developed. Seven dispositions (Table 2) were derived from the original 19 published in the Delphi report. 12 It is important to note that these are attitudes or affects, which are sought after in an individual, and not thinking skills. Facione et al 9 proposed that a person who thinks critically uses these 7 dispositions to form and make judgments. For example, if an individual is not truth seeking, he or she may not consider other opinions or theories regarding an issue or problem before forming an opinion. A student may possess the knowledge to think critically about an issue, but if these dispositional affects do not work in concert, the student may fail to analyze, evaluate, and synthesize the information to think critically. More research is needed to determine the relationship between CT and the disposition to think critically.

Table 2. Dispositions to Think Critically 12

METHODS TO PROMOTE CRITICAL THOUGHT

Educators can use various instructional methods to promote CT and problem solving. Although educators value students who think critically about concepts, the disposition to think critically is, unfortunately, not present in every student. Many college faculty expect their students to think critically. 14 Because no similar research exists in athletic training, Table 3 lists some common assumptions made by university nursing faculty. 15 Espeland and Shanta 16 argued that faculty who rely heavily on lecture formats may be enabling students. When lecturing, the instructor organizes and presents essential information without student input. This practice removes the opportunity for students to decide for themselves what information is important to know. For example, instead of lecturing on which medications could be given to athletes with an upper respiratory infection, the instructor could assign students to investigate the medications and decide which one is appropriate.

Table 3. Common Assumptions of Nursing Faculty 15

Students need exposure to diverse teaching methods that promote CT in order to nurture the CT process. 14,17–19 As Kloss 20 pointed out, students are sometimes stuck and unable to understand that one problem can have various answers. ATCs differ in how they tape a sprained ankle, perform special tests, and obtain medical information. Kloss 20 stated that students must be exposed to ambiguity and to multiple interpretations and perspectives of a situation or problem in order to stimulate growth. As students move through their clinical experiences, they witness these various methods for taping ankles, performing special tests, and obtaining a thorough history from an injured athlete. Paul and Elder 21 stated that many professors try to help students learn a body of knowledge by presenting it in a sequence of lectures and then asking students to internalize it outside of class on their own time. Not all students possess the thinking skills to analyze and synthesize information without practice. The following 3 sections present information and examples of different teaching techniques to promote CT.

Questioning

An assortment of questioning tactics exists to promote CT. Depending on how a question is asked, the student may use various CT skills, such as interpretation, analysis, and recognition of assumptions, to form a conclusion. Mills 22 suggested that the thoughtful use of questions may be the quintessential activity of an effective teacher. Questions are only as good as the thought put into them and should go beyond knowledge-level recall. 22 Researchers 23,24 have found that clinical teachers often ask significantly more lower-level cognitive questions than higher-level questions. Questions should be designed to promote evaluation and synthesis of facts and concepts. Asking a student to evaluate when proprioception exercises should be included in a rehabilitation program is more challenging than asking the student to define proprioception. Higher-level thinking questions should start or end with words or phrases such as “explain,” “compare,” “why,” “which is a solution to the problem,” “what is the best and why,” and “do you agree or disagree with this statement?” For example, a student could be asked to compare the use of parachlorophenylalanine versus serotonin for control of posttreatment soreness. The Bloom Taxonomy 25 is a hierarchy of thinking skills that ranges from simple skills, such as knowledge, to complex thinking, such as evaluation. Table 4 gives examples of words that can be used to begin questions that challenge students at the different levels of the taxonomy. Depending on the initial words used in the question, students can be challenged at different levels of cognition.

Table 4. Examples of Questions 23

Another questioning technique is Socratic questioning, defined as questioning that deeply probes or explores the meaning, justification, or logical strength of a claim, position, or line of reasoning. 4,26 The questions investigate assumptions, viewpoints, consequences, and evidence. Questioning methods such as calling on students who do not have their hands up can enhance learning by engaging students in thinking. The Socratic method focuses on clarification. A student's answer to a question can be followed by asking a fellow student to summarize it. Summarizing the information allows the student to demonstrate whether he or she was listening, digested the information, and understood it well enough to put it into his or her own words. Avoiding questions with one set answer allows for different viewpoints and encourages students to compare problems and approaches. For example, students can be asked to explain how high school and collegiate or university field experiences are similar and different. There is no right or wrong answer because the answers depend on each student's experiences. 19 Regardless of the answer, the student must think critically about the topic to reach a conclusion about how the field experiences are similar and different.

In addition to using these questioning techniques, it is equally important to orient students to this type of classroom interaction. Mills 22 suggested that provocative questions should be brief and contain only one or two issues at a time for class reflection. It is also important to provide deliberate silence, or “wait” time, after asking a question. 22,27 Waiting at least 5 seconds gives students time to think. Elliot 18 argued that waiting as long as 10 seconds allows students time to consider possibilities. If a thought question is asked, students must be given time to think about the answer.

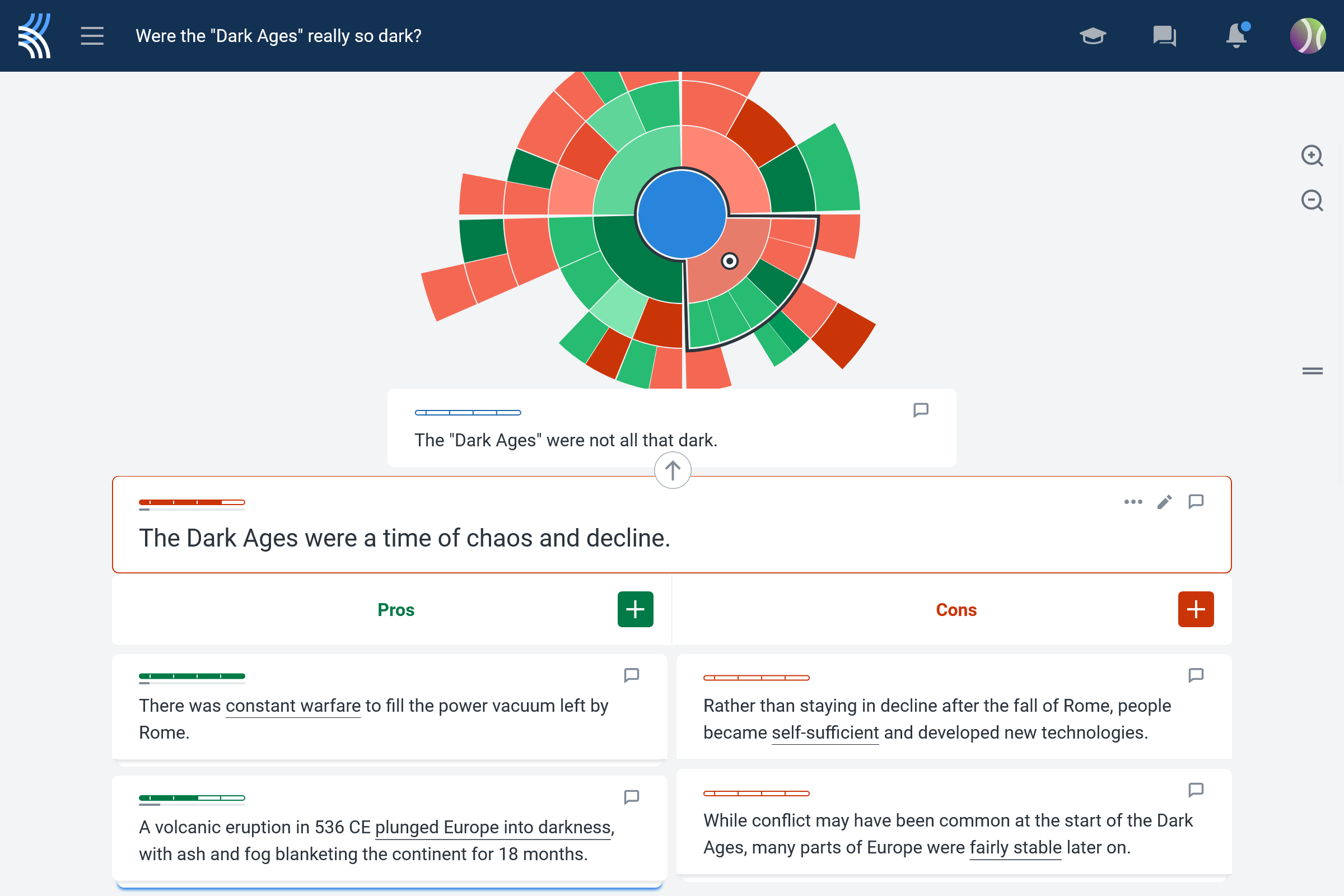

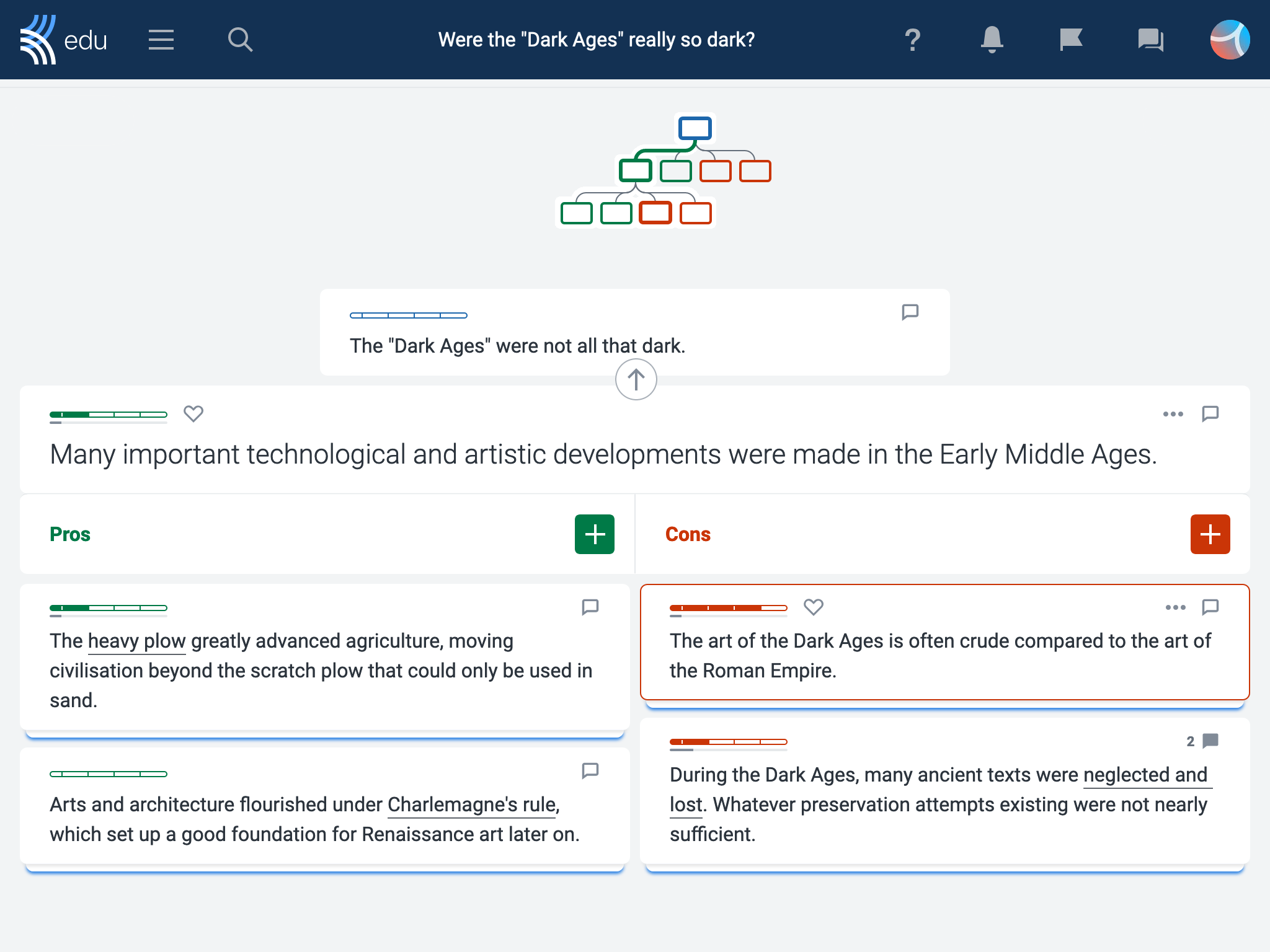

Classroom Discussion and Debates

Classroom discussion and debates can promote critical thinking, and various techniques are available. Bernstein 28 developed a negotiation model in which students were confronted with credible but antagonistic arguments and challenged to deal with the tension between them. This tension is believed to be one component driving critical thought. Controversial issues in psychology, such as animal rights and pornography, were presented and discussed. Students responded favorably and, as the class progressed, reported being more comfortable arguing both sides of an issue. In athletic training education, a negotiation model could be used to discuss topics such as heat versus ice or ultrasound versus electric stimulation in the treatment of an injury, with students assigned to defend a particular treatment. Pro and con grids are another strategy to encourage students to consider both sides of an issue. 29 Students create grids listing the pros and cons, or advantages and disadvantages, of an issue or treatment. Debate has also been used to promote CT in second-year medical students. 30 After debating, students reported improvements in literature searching, weighing the risks and benefits of treatments, and making evidence-based decisions. Regardless of the teaching methods used, students should practice analyzing the costs and benefits of issues, problems, and treatments to help prepare them for real-life decision making.

Galotti 31 used observation of another person's reasoning to promote CT. Students were paired, and 4 reasoning tasks were administered. As the tasks were administered, students were told to talk aloud through the reasoning behind their decisions, while the observing students wrote down key phrases and statements. The same process can be used in an injury-evaluation class: one student performs an evaluation while the rest of the class observes, and classroom discussion follows. Alternatively, students can be divided into pairs. One student performs an evaluation while the other observes, and after the evaluation is completed, the 2 students discuss it (Table 5 presents examples). Another option is to have athletic training students observe a student peer or ATC during a field evaluation of an athlete. While observing, the student can write down any questions or topics to discuss afterward, which gives the student an opportunity to ask why certain evaluation methods were or were not used.

Table 5. Postevaluation Questions

Daily newspaper clippings directly related to current classroom content also allow an instructor to incorporate discussion into the classroom. 32 For example, a report of an athlete who died of heat illness could provide subject matter for classroom discussion or various written assignments. Such news also gives the instructor an opportunity to discuss the affective components involved. Students could be asked to step into the role of the ATC and consider the reported implications of the death from different perspectives. They could also list any assumptions made in the article or follow-up questions they would ask if they could interview the people involved. This provides a forum that encourages students to think for themselves and to recognize that not everyone in the room perceives the article the same way. Whatever the approach taken, investigators and educators agree that assignments and arguments are useful to promote thought among students.

Written Assignments

In-class and out-of-class assignments can also serve as powerful vehicles for expanding students' thinking processes. Emig 33 believed that writing serves learning uniquely because writing, as process and product, possesses a cluster of attributes that correspond uniquely to certain powerful learning strategies. As a general rule, assignments intended to promote thought should be short (not long term papers) and focus on thinking. 19 Research or 1-topic papers may or may not reflect a student's own thoughts, and Meyers 32 argued that term papers often prove to be exercises in recapitulating the thoughts of others.

Allegretti and Frederick 34 used a variety of cases from a book to promote CT regarding different ethical issues. Countless case-study situations can be created to let students practice managing situations and to assess clinical decision making. For example, after reading the National Athletic Trainers' Association position statement on lightning, a student can be asked to address the following scenario: “Explain how you would handle a situation in which a coach has kept athletes outside practicing unsafely. What information would you use from this statement to explain your concerns? Explain why you picked the specific concerns.” These questions can be answered individually or in small groups and then discussed in class. Students will pick different concerns based on their thinking. This variety of answers not only shows that no single answer is right or wrong but also allows students to defend their answers to peers. Questions posed on listservs are also excellent avenues to enrich a student's education. Through these real-life questions, students read about the actual issues and concerns of ATCs. These topics present excellent opportunities to ask senior-level athletic training students how they would handle the situation. This gives students a safe place to analyze the problem and make a decision. Once the students make a decision, additional factors, assumptions, and inferences can be discussed by having all students share the solution they chose.

Lantz and Meyers 35 used personification, assigning students to assume the character of a drug. Students were to relate themselves to the drug, on the premise that drugs exhibit many unique characteristics, such as belonging to a family, interaction problems, and adverse reactions. The development of analogies comes from experience and from comparing one theory or scenario to another with strong similarities.