S.3.2 Hypothesis Testing (P-Value Approach)

The P-value approach involves determining "likely" or "unlikely" by determining the probability, assuming the null hypothesis were true, of observing a more extreme test statistic in the direction of the alternative hypothesis than the one observed. If the P-value is small, say less than (or equal to) \(\alpha\), then it is "unlikely." And, if the P-value is large, say more than \(\alpha\), then it is "likely."

If the P-value is less than (or equal to) \(\alpha\), then the null hypothesis is rejected in favor of the alternative hypothesis. And, if the P-value is greater than \(\alpha\), then the null hypothesis is not rejected.

Specifically, the four steps involved in using the P-value approach to conducting any hypothesis test are:

- Specify the null and alternative hypotheses.

- Using the sample data and assuming the null hypothesis is true, calculate the value of the test statistic. Again, to conduct the hypothesis test for the population mean \(\mu\), we use the t-statistic \(t^*=\frac{\bar{x}-\mu}{s/\sqrt{n}}\), which follows a t-distribution with n - 1 degrees of freedom.

- Using the known distribution of the test statistic, calculate the P-value: "If the null hypothesis is true, what is the probability that we'd observe a more extreme test statistic in the direction of the alternative hypothesis than we did?" (Note how this question is equivalent to the question answered in criminal trials: "If the defendant is innocent, what is the chance that we'd observe such extreme criminal evidence?")

- Set the significance level, \(\alpha\), the probability of making a Type I error, to be small (0.01, 0.05, or 0.10). Compare the P-value to \(\alpha\). If the P-value is less than (or equal to) \(\alpha\), reject the null hypothesis in favor of the alternative hypothesis. If the P-value is greater than \(\alpha\), do not reject the null hypothesis.
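The four steps above can be sketched in code. This is a minimal, dependency-free Python sketch under stated assumptions, not the software workflow used in the course: the helper names (`t_pdf`, `t_upper_tail`, `one_sample_t_test`) and the sample GPAs are hypothetical, and the tail area is approximated with Simpson's rule. In practice a library routine such as SciPy's `scipy.stats.t.sf` would normally supply the tail probability.

```python
import math
from statistics import mean, stdev

def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

def t_upper_tail(t, df, steps=2000):
    """P(T > t), using Simpson's rule on [0, |t|] plus symmetry."""
    a = abs(t)
    h = a / steps
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    upper = 0.5 - total * h / 3          # P(T > |t|)
    return upper if t >= 0 else 1.0 - upper

def one_sample_t_test(sample, mu0, alpha=0.05, tail="right"):
    """Return (t*, P-value, reject?) for H0: mu = mu0."""
    n = len(sample)
    # Step 2: test statistic, assuming H0 is true.
    t_star = (mean(sample) - mu0) / (stdev(sample) / math.sqrt(n))
    df = n - 1
    # Step 3: P-value in the direction of the alternative hypothesis.
    if tail == "right":
        p = t_upper_tail(t_star, df)
    elif tail == "left":
        p = t_upper_tail(-t_star, df)    # P(T < t*) by symmetry
    else:
        p = 2 * t_upper_tail(abs(t_star), df)
    # Step 4: compare the P-value with alpha.
    return t_star, p, p <= alpha

# Hypothetical GPAs, for illustration only.
gpas = [3.4, 2.9, 3.6, 3.1, 3.8, 3.3, 3.0, 3.5]
t_star, p_value, reject = one_sample_t_test(gpas, mu0=3.0, tail="right")
print(f"t* = {t_star:.3f}, P-value = {p_value:.4f}, reject H0: {reject}")
```

Step 1 (stating the hypotheses) is captured by the `mu0` and `tail` arguments; everything else follows mechanically from the data.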

Example S.3.2.1

Mean GPA

In our example concerning the mean grade point average, suppose that our random sample of n = 15 students majoring in mathematics yields a test statistic t* equaling 2.5. Since n = 15, our test statistic t* has n - 1 = 14 degrees of freedom. Also, suppose we set our significance level \(\alpha\) at 0.05 so that we have only a 5% chance of making a Type I error.

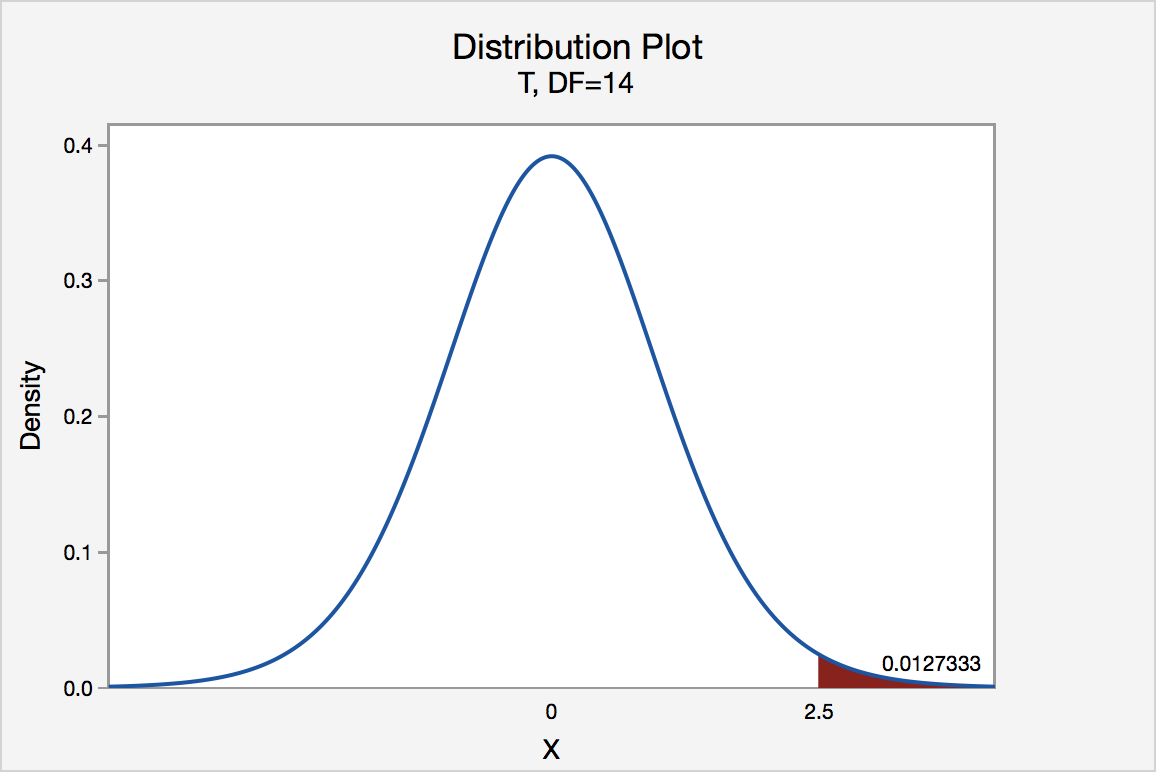

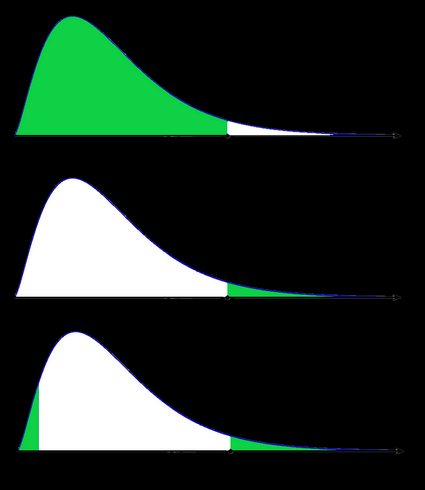

Right Tailed

The P-value for conducting the right-tailed test \(H_0: \mu = 3\) versus \(H_A: \mu > 3\) is the probability that we would observe a test statistic greater than t* = 2.5 if the population mean \(\mu\) really were 3. Recall that probability equals the area under the probability curve. The P-value is therefore the area under a \(t_{n-1} = t_{14}\) curve and to the right of the test statistic t* = 2.5. It can be shown using statistical software that the P-value is 0.0127. The graph depicts this visually.

The P-value, 0.0127, tells us it is "unlikely" that we would observe such an extreme test statistic t* in the direction of \(H_A\) if the null hypothesis were true. Therefore, our initial assumption that the null hypothesis is true must be incorrect. That is, since the P-value, 0.0127, is less than \(\alpha\) = 0.05, we reject the null hypothesis \(H_0: \mu = 3\) in favor of the alternative hypothesis \(H_A: \mu > 3\).

Note that we would not reject \(H_0: \mu = 3\) in favor of \(H_A: \mu > 3\) if we lowered our willingness to make a Type I error to \(\alpha\) = 0.01 instead, as the P-value, 0.0127, is then greater than \(\alpha\) = 0.01.

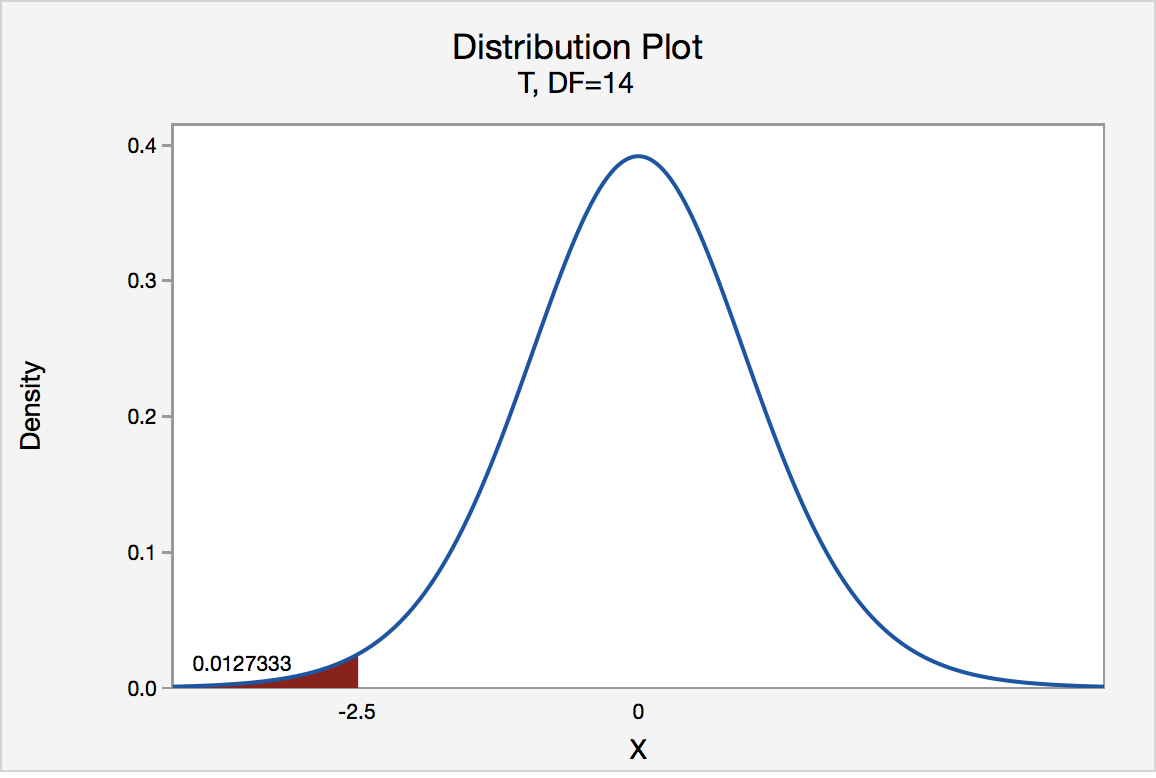

Left Tailed

In our example concerning the mean grade point average, suppose that our random sample of n = 15 students majoring in mathematics yields a test statistic t* instead equaling -2.5. The P-value for conducting the left-tailed test \(H_0: \mu = 3\) versus \(H_A: \mu < 3\) is the probability that we would observe a test statistic less than t* = -2.5 if the population mean \(\mu\) really were 3. The P-value is therefore the area under a \(t_{n-1} = t_{14}\) curve and to the left of the test statistic t* = -2.5. It can be shown using statistical software that the P-value is 0.0127. The graph depicts this visually.

The P-value, 0.0127, tells us it is "unlikely" that we would observe such an extreme test statistic t* in the direction of \(H_A\) if the null hypothesis were true. Therefore, our initial assumption that the null hypothesis is true must be incorrect. That is, since the P-value, 0.0127, is less than \(\alpha\) = 0.05, we reject the null hypothesis \(H_0: \mu = 3\) in favor of the alternative hypothesis \(H_A: \mu < 3\).

Note that we would not reject \(H_0: \mu = 3\) in favor of \(H_A: \mu < 3\) if we lowered our willingness to make a Type I error to \(\alpha\) = 0.01 instead, as the P-value, 0.0127, is then greater than \(\alpha\) = 0.01.

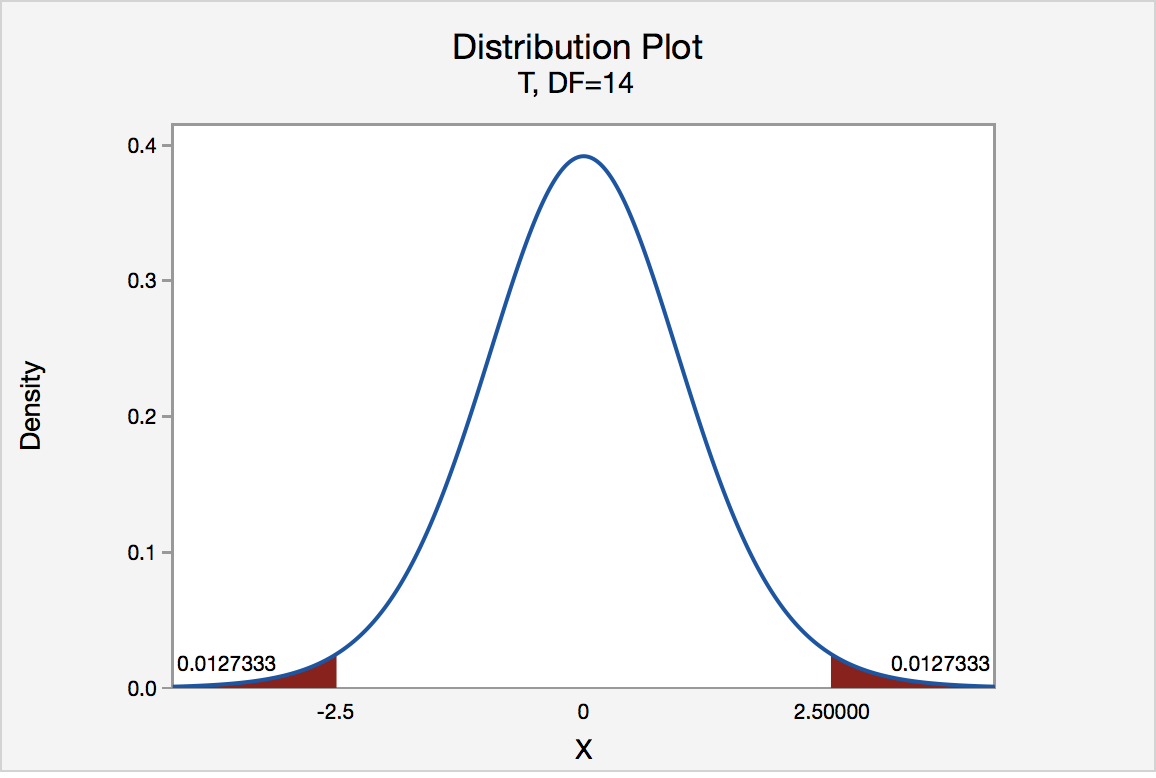

Two Tailed

In our example concerning the mean grade point average, suppose again that our random sample of n = 15 students majoring in mathematics yields a test statistic t* instead equaling -2.5. The P-value for conducting the two-tailed test \(H_0: \mu = 3\) versus \(H_A: \mu \ne 3\) is the probability that we would observe a test statistic less than -2.5 or greater than 2.5 if the population mean \(\mu\) really were 3. That is, the two-tailed test requires taking into account the possibility that the test statistic could fall into either tail (hence the name "two-tailed" test). The P-value is, therefore, the area under a \(t_{n-1} = t_{14}\) curve to the left of -2.5 and to the right of 2.5. It can be shown using statistical software that the P-value is 0.0127 + 0.0127, or 0.0254. The graph depicts this visually.

Note that, because the t-distribution is symmetric, the P-value for a two-tailed test is always two times the P-value for either of the one-tailed tests. The P-value, 0.0254, tells us it is "unlikely" that we would observe such an extreme test statistic t* in the direction of \(H_A\) if the null hypothesis were true. Therefore, our initial assumption that the null hypothesis is true must be incorrect. That is, since the P-value, 0.0254, is less than \(\alpha\) = 0.05, we reject the null hypothesis \(H_0: \mu = 3\) in favor of the alternative hypothesis \(H_A: \mu \ne 3\).

Note that we would not reject \(H_0: \mu = 3\) in favor of \(H_A: \mu \ne 3\) if we lowered our willingness to make a Type I error to \(\alpha\) = 0.01 instead, as the P-value, 0.0254, is then greater than \(\alpha\) = 0.01.
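The three tail areas in this example can be checked numerically. The sketch below is one possible way to do so in plain Python, assuming only the values given in the text (a test statistic of magnitude 2.5 and 14 degrees of freedom); the helper names are hypothetical and the areas are approximated with Simpson's rule, so the results should agree with the software-reported values only up to rounding.

```python
import math

def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

def t_upper_tail(t, df, steps=2000):
    """P(T > t), using Simpson's rule on [0, |t|] plus symmetry."""
    a = abs(t)
    h = a / steps
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    upper = 0.5 - total * h / 3          # P(T > |t|)
    return upper if t >= 0 else 1.0 - upper

df = 14                                   # n - 1 with n = 15
p_right = t_upper_tail(2.5, df)           # area to the right of t* = 2.5
p_left = 1.0 - t_upper_tail(-2.5, df)     # area to the left of t* = -2.5
p_two = p_left + p_right                  # both tails together

for name, p in [("right", p_right), ("left", p_left), ("two", p_two)]:
    for alpha in (0.05, 0.01):
        decision = "reject H0" if p <= alpha else "do not reject H0"
        print(f"{name}-tailed: P = {p:.4f}, alpha = {alpha}: {decision}")
```

Each P-value falls below 0.05 but above 0.01, which matches the decisions described in the three cases above.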

Now that we have reviewed the critical value and P-value approach procedures for each of the three possible hypotheses, let's look at three new examples: one of a right-tailed test, one of a left-tailed test, and one of a two-tailed test.

The good news is that, whenever possible, we will take advantage of the test statistics and P-values reported in statistical software, such as Minitab, to conduct our hypothesis tests in this course.

P-Value in Statistical Hypothesis Tests: What is it?

P value definition.

A p value is used in hypothesis testing to help you decide whether to reject the null hypothesis. The p value measures the evidence against the null hypothesis: the smaller the p-value, the stronger the evidence that you should reject the null hypothesis.

P values are expressed as decimals, although it may be easier to understand what they are if you convert them to a percentage. For example, a p value of 0.0254 is 2.54%. This means that, if the null hypothesis were true, there would be a 2.54% chance of getting results at least as extreme as yours by chance alone. That’s pretty small. On the other hand, a large p-value of 0.9 (90%) means that results at least as extreme as yours would turn up 90% of the time under the null hypothesis, so they give no reason to doubt it. Therefore, the smaller the p-value, the stronger the evidence against the null hypothesis and the more statistically “significant” your results.

When you run a hypothesis test, you compare the p value from your test to the alpha level you selected when you ran the test. Alpha levels can also be written as percentages.

P Value vs Alpha level

Alpha levels are controlled by the researcher and are related to confidence levels. You get an alpha level by subtracting your confidence level from 100%. For example, if you want to be 98 percent confident in your research, the alpha level would be 2% (100% – 98%). When you run the hypothesis test, the test will give you a value for p. Compare that value to your chosen alpha level. For example, let’s say you chose an alpha level of 5% (0.05). If the results from the test give you:

- A small p (≤ 0.05), reject the null hypothesis. This is strong evidence that the null hypothesis is invalid.

- A large p (> 0.05), the evidence against the null hypothesis is weak, so you do not reject the null.

P Values and Critical Values

What if I Don’t Have an Alpha Level?

In an ideal world, you’ll have an alpha level. But if you do not, you can still use the following rough guidelines in deciding whether to support or reject the null hypothesis:

- If p > .10 → “not significant”

- If p ≤ .10 → “marginally significant”

- If p ≤ .05 → “significant”

- If p ≤ .01 → “highly significant.”
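The alpha-level rule and the rough guidelines above are simple enough to write down as code. The following is only an illustrative sketch; the function names are hypothetical and the thresholds are exactly the ones listed in the text.

```python
def alpha_from_confidence(confidence_pct):
    """Alpha as a proportion, e.g. 98 (% confidence) gives 0.02."""
    return (100 - confidence_pct) / 100

def decide(p_value, alpha=0.05):
    """Decision rule when an alpha level has been chosen."""
    return "reject the null" if p_value <= alpha else "do not reject the null"

def rough_label(p_value):
    """Rough guideline labels for when no alpha level is available."""
    if p_value <= 0.01:
        return "highly significant"
    if p_value <= 0.05:
        return "significant"
    if p_value <= 0.10:
        return "marginally significant"
    return "not significant"

alpha = alpha_from_confidence(95)
print(alpha, decide(0.0254, alpha), rough_label(0.0254))
```

The checks in `rough_label` run from the strictest threshold to the loosest, so each p value gets the strongest label it qualifies for.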

How to Calculate a P Value on the TI 83

Example question: The average wait time to see an E.R. doctor is said to be 150 minutes. You think the wait time is actually less. You take a random sample of 30 people and find their average wait is 148 minutes with a standard deviation of 5 minutes. Assume the distribution is normal. Find the p value for this test.

- Press STAT then arrow over to TESTS.

- Press ENTER for Z-Test.

- Arrow over to Stats. Press ENTER.

- Arrow down to μ0 and type 150. This is our null hypothesis mean.

- Arrow down to σ. Type in your std dev: 5.

- Arrow down to xbar. Type in your sample mean: 148.

- Arrow down to n. Type in your sample size: 30.

- Arrow to <μ0 for a left tail test. Press ENTER.

- Arrow down to Calculate. Press ENTER. P is given as .014, or about 1.4%.

The probability that you would get a sample mean of 148 minutes or less, if the true mean wait time really were 150 minutes, is small (about 1.4%), so you should reject the null hypothesis at the usual 5% alpha level.

Note: If you don’t want to run a test, you could also use the TI 83 NormCDF function to get the area (which is the same thing as the probability value).
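For readers without a TI 83, the same left-tailed Z-test can be reproduced in a few lines of Python. This sketch mirrors the calculator entries above (it treats the standard deviation of 5 as σ, just as the Z-Test menu does) and uses `statistics.NormalDist` from the standard library in place of NormCDF; the variable names are illustrative.

```python
import math
from statistics import NormalDist

mu0 = 150      # null hypothesis mean (minutes)
sigma = 5      # standard deviation entered for the Z-Test
xbar = 148     # sample mean
n = 30         # sample size

# Test statistic: how many standard errors the sample mean lies from mu0.
z = (xbar - mu0) / (sigma / math.sqrt(n))

# Left-tailed p value: area under the standard normal curve to the left of z.
p_value = NormalDist().cdf(z)

print(f"z = {z:.2f}, p = {p_value:.3f}")
```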

StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

Hypothesis testing, p values, confidence intervals, and significance.

Jacob Shreffler; Martin R. Huecker.

Last Update: March 13, 2023.

- Definition/Introduction

Medical providers often rely on evidence-based medicine to guide decision-making in practice. Often a research hypothesis is tested with results provided, typically with p values, confidence intervals, or both. Additionally, statistical or research significance is estimated or determined by the investigators. Unfortunately, healthcare providers may have different comfort levels in interpreting these findings, which may affect the adequate application of the data.

- Issues of Concern

A lack of foundational understanding of hypothesis testing, p values, confidence intervals, and the difference between statistical and clinical significance may affect healthcare providers' ability to make clinical decisions without relying purely on the level of significance deemed by the research investigators. Therefore, an overview of these concepts is provided to allow medical professionals to use their expertise to determine if results are reported sufficiently and if the study outcomes are clinically appropriate to be applied in healthcare practice.

Hypothesis Testing

Investigators conducting studies need research questions and hypotheses to guide analyses. Starting with broad research questions (RQs), investigators then identify a gap in current clinical practice or research. Any research problem or statement is grounded in a better understanding of relationships between two or more variables. For this article, we will use the following research question example:

Research Question: Is Drug 23 an effective treatment for Disease A?

Research questions do not directly imply specific guesses or predictions; we must formulate research hypotheses. A hypothesis is a predetermined declaration regarding the research question in which the investigator(s) makes a precise, educated guess about a study outcome. This is sometimes called the alternative hypothesis and ultimately allows the researcher to take a stance based on experience or insight from medical literature. An example of a hypothesis is below.

Research Hypothesis: Drug 23 will significantly reduce symptoms associated with Disease A compared to Drug 22.

The null hypothesis states that there is no statistical difference between groups based on the stated research hypothesis.

Researchers should be aware of journal recommendations when considering how to report p values, and manuscripts should remain internally consistent.

Regarding p values, as the number of individuals enrolled in a study (the sample size) increases, the likelihood of finding a statistically significant effect increases. With very large sample sizes, the p-value can be very low.

Null Hypothesis: There are no significant differences in the reduction of symptoms for Disease A between Drug 23 and Drug 22.

The null hypothesis is deemed true until a study presents significant data to support rejecting the null hypothesis. Based on the results, the investigators will either reject the null hypothesis (if they found significant differences or associations) or fail to reject the null hypothesis (they could not provide proof that there were significant differences or associations).

To test a hypothesis, researchers obtain data on a representative sample to determine whether to reject or fail to reject a null hypothesis. In most research studies, it is not feasible to obtain data for an entire population. Using a sampling procedure allows for statistical inference, though this involves a certain possibility of error. [1] When determining whether to reject or fail to reject the null hypothesis, mistakes can be made: Type I and Type II errors. Though it is impossible to ensure that these errors have not occurred, researchers should limit the possibilities of these faults. [2]

Significance

Significance is a term to describe the substantive importance of medical research. Statistical significance concerns how likely results at least as extreme as those observed would be if chance alone were at work. [3] Healthcare providers should always delineate statistical significance from clinical significance; conflating the two is a common error when reviewing biomedical research. [4] When conceptualizing findings reported as either significant or not significant, healthcare providers should not simply accept researchers' results or conclusions without considering the clinical significance. Healthcare professionals should consider the clinical importance of findings and understand both p values and confidence intervals so they do not have to rely on the researchers to determine the level of significance. [5] One criterion often used to determine statistical significance is the utilization of p values.

P values are used in research to determine whether the sample estimate is significantly different from a hypothesized value. The p-value is the probability that the observed effect within the study would have occurred by chance if, in reality, there was no true effect. Conventionally, data yielding p<0.05 or p<0.01 are considered statistically significant. While some have debated that the 0.05 level should be lowered, it is still widely practiced. [6] Hypothesis testing allows us to judge whether an effect is present, but it does not by itself determine the size of the effect.

Examples of findings reported with p values are below:

Statement: Drug 23 reduced patients' symptoms compared to Drug 22. Patients who received Drug 23 (n = 100) were 2.1 times less likely than patients who received Drug 22 (n = 100) to experience symptoms of Disease A, p<0.05.

Statement: Individuals who were prescribed Drug 23 experienced fewer symptoms (M = 1.3, SD = 0.7) compared to individuals who were prescribed Drug 22 (M = 5.3, SD = 1.9). This finding was statistically significant, p = 0.02.

For either statement, if the threshold had been set at 0.05, the null hypothesis (that there was no relationship) should be rejected, and we should conclude significant differences. Noticeably, as can be seen in the two statements above, some researchers will report findings with < or > and others will provide an exact p-value (0.000001) but never zero. [6] When examining research, readers should understand how p values are reported. The best practice is to report all p values for all variables within a study design, rather than only providing p values for variables with significant findings. [7] The inclusion of all p values provides evidence for study validity and limits suspicion for selective reporting/data mining.

While researchers have historically used p values, experts who find p values problematic encourage the use of confidence intervals. [8] P-values alone do not allow us to understand the size or the extent of the differences or associations. [3] In March 2016, the American Statistical Association (ASA) released a statement on p values, noting that scientific decision-making and conclusions should not be based on a fixed p-value threshold (e.g., 0.05). They recommend focusing on the significance of results in the context of study design, quality of measurements, and validity of data. Ultimately, the ASA statement noted that in isolation, a p-value does not provide strong evidence. [9]

When conceptualizing clinical work, healthcare professionals should consider p values with a concurrent appraisal of study design validity. For example, a p-value from a double-blinded randomized clinical trial (designed to minimize bias) should be weighted higher than one from a retrospective observational study. [7] The p-value debate has smoldered since the 1950s [10], and replacement with confidence intervals has been suggested since the 1980s. [11]

Confidence Intervals

A confidence interval provides a range of values that, with a given level of confidence (e.g., 95%), includes the true value of the statistical parameter within a targeted population. [12] Most research uses a 95% CI, but investigators can set any level (e.g., 90% CI, 99% CI). [13] A CI provides a range with the lower bound and upper bound limits of a difference or association that would be plausible for a population. [14] Therefore, a CI of 95% indicates that if a study were to be carried out 100 times, the range would contain the true value in about 95 of them. [15] Confidence intervals provide more evidence regarding the precision of an estimate compared to p-values. [6]

In consideration of the similar research example provided above, one could make the following statement with 95% CI:

Statement: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22; the mean difference in days to recovery between the two groups was 4.2 days (95% CI: 1.9 – 7.8).

It is important to note that the width of the CI is affected by the standard error and the sample size; reducing the study sample size will result in less precision of the CI (an increased width). [14] A larger width indicates a smaller sample size or a larger variability. [16] A researcher would want to increase the precision of the CI. For example, a 95% CI of 1.43 – 1.47 is much more precise than the one provided in the example above. In research and clinical practice, CIs provide valuable information on whether the interval includes or excludes any clinically significant values. [14]
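The link between sample size and CI width can be made concrete with a small calculation. The sketch below is illustrative only: it assumes a normal-approximation interval for a mean (estimate ± z × standard error), and the summary statistics (a mean of 4.2 and a standard deviation of 3.0) are hypothetical numbers, not data from any study discussed here.

```python
import math
from statistics import NormalDist

def mean_ci(mean, sd, n, confidence=0.95):
    """Normal-approximation confidence interval for a mean."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)   # about 1.96 for 95%
    half_width = z * sd / math.sqrt(n)               # z times the standard error
    return mean - half_width, mean + half_width

# Hypothetical summary statistics, evaluated at three sample sizes.
for n in (25, 100, 400):
    lower, upper = mean_ci(4.2, 3.0, n)
    print(f"n = {n:3d}: 95% CI {lower:.2f} to {upper:.2f}, width {upper - lower:.2f}")
```

Because the standard error shrinks with the square root of the sample size, quadrupling the sample size halves the width of the interval.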

Null values are sometimes used for differences with CI (zero for differential comparisons and 1 for ratios). However, CIs provide more information than that. [15] Consider this example: A hospital implements a new protocol that reduced wait time for patients in the emergency department by an average of 25 minutes (95% CI: -2.5 – 41 minutes). Because the range crosses zero, implementing this protocol in different populations could result in longer wait times; however, the range is much higher on the positive side. Thus, while the p-value used to detect statistical significance for this may result in "not significant" findings, individuals should examine this range, consider the study design, and weigh whether or not it is still worth piloting in their workplace.

Similarly to p-values, 95% CIs cannot control for researchers' errors (e.g., study bias or improper data analysis). [14] In consideration of whether to report p-values or CIs, researchers should examine journal preferences. When in doubt, reporting both may be beneficial. [13] An example is below:

Reporting both: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22, p = 0.009. The mean difference in days to recovery between the two groups was 4.2 days (95% CI: 1.9 – 7.8).

- Clinical Significance

Recall that clinical significance and statistical significance are two different concepts. Healthcare providers should remember that a study with statistically significant differences and large sample size may be of no interest to clinicians, whereas a study with smaller sample size and statistically non-significant results could impact clinical practice. [14] Additionally, as previously mentioned, a non-significant finding may reflect the study design itself rather than relationships between variables.

Healthcare providers using evidence-based medicine to inform practice should use clinical judgment to determine the practical importance of studies through careful evaluation of the design, sample size, power, likelihood of type I and type II errors, data analysis, and reporting of statistical findings (p values, 95% CI or both). [4] Interestingly, some experts have called for "statistically significant" or "not significant" to be excluded from work as statistical significance never has and will never be equivalent to clinical significance. [17]

The decision on what is clinically significant can be challenging, depending on the providers' experience and especially the severity of the disease. Providers should use their knowledge and experiences to determine the meaningfulness of study results and make inferences based not only on significant or insignificant results by researchers but through their understanding of study limitations and practical implications.

- Nursing, Allied Health, and Interprofessional Team Interventions

All physicians, nurses, pharmacists, and other healthcare professionals should strive to understand the concepts in this chapter. These individuals should maintain the ability to review and incorporate new literature for evidence-based and safe care.

Disclosure: Jacob Shreffler declares no relevant financial relationships with ineligible companies.

Disclosure: Martin Huecker declares no relevant financial relationships with ineligible companies.

This book is distributed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) ( http://creativecommons.org/licenses/by-nc-nd/4.0/ ), which permits others to distribute the work, provided that the article is not altered or used commercially. You are not required to obtain permission to distribute this article, provided that you credit the author and journal.

- Cite this Page Shreffler J, Huecker MR. Hypothesis Testing, P Values, Confidence Intervals, and Significance. [Updated 2023 Mar 13]. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

P-Value: Comprehensive Guide to Understand, Apply, and Interpret

A p-value is a statistical metric used to assess a hypothesis by comparing it with observed data.

This article delves into the concept of p-value, its calculation, interpretation, and significance. It also explores the factors that influence p-value and highlights its limitations.

What is the P-value?

The p-value, or probability value, is a statistical measure used in hypothesis testing to assess the strength of evidence against a null hypothesis. It represents the probability of obtaining results as extreme as, or more extreme than, the observed results under the assumption that the null hypothesis is true.

In simpler words, it is used to reject or support the null hypothesis during hypothesis testing. In data science, it gives valuable insights on the statistical significance of an independent variable in predicting the dependent variable.

Calculating the p-value typically involves the following steps:

- Formulate the Null Hypothesis (H0): Clearly state the null hypothesis, which typically states that there is no significant relationship or effect between the variables.

- Choose an Alternative Hypothesis (H1): Define the alternative hypothesis, which proposes the existence of a significant relationship or effect between the variables.

- Determine the Test Statistic: Calculate the test statistic, which is a measure of the discrepancy between the observed data and the expected values under the null hypothesis. The choice of test statistic depends on the type of data and the specific research question.

- Identify the Distribution of the Test Statistic: Determine the appropriate sampling distribution for the test statistic under the null hypothesis. This distribution represents the expected values of the test statistic if the null hypothesis is true.

- Calculate the P-value: Based on the observed test statistic and the sampling distribution, find the probability of obtaining the observed test statistic or a more extreme one, assuming the null hypothesis is true.

- Interpret the results: Compare the p-value with the chosen significance level. If the p-value is less than or equal to the significance level, it provides evidence to reject the null hypothesis. Equivalently, compare the test statistic with the critical value: if the test statistic is more extreme than the critical value, reject the null hypothesis, and vice-versa.

Its interpretation depends on the specific test and the context of the analysis. Several popular tests are used to calculate the test statistics that feed into p-value calculations.

| Test | Scenario | Interpretation |
|---|---|---|
| Z-Test | Used when dealing with large sample sizes or when the population standard deviation is known. | A small p-value (smaller than 0.05) indicates strong evidence against the null hypothesis, leading to its rejection. |
| T-Test | Appropriate for small sample sizes or when the population standard deviation is unknown. | Similar to the Z-test. |
| Chi-Square Test | Used for tests of independence or goodness-of-fit. | A small p-value indicates that there is a significant association between the categorical variables, leading to the rejection of the null hypothesis. |
| F-Test | Commonly used in Analysis of Variance (ANOVA) to compare variances between groups. | A small p-value suggests that at least one group mean is different from the others, leading to the rejection of the null hypothesis. |
| Correlation Test | Measures the strength and direction of a linear relationship between two continuous variables. | A small p-value indicates that there is a significant linear relationship between the variables, leading to rejection of the null hypothesis that there is no correlation. |

In general, a small p-value indicates that the observed data is unlikely to have occurred by random chance alone, which leads to the rejection of the null hypothesis. However, it’s crucial to choose the appropriate test based on the nature of the data and the research question, as well as to interpret the p-value in the context of the specific test being used.

The table given below shows the importance of p-value and shows the various kinds of errors that occur during hypothesis testing.

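| | Fail to reject the null hypothesis | Reject the null hypothesis |
|---|---|---|
| Null hypothesis is true | Correct decision | Type I error |
| Null hypothesis is false | Type II error | Correct decision |

Type I error: Incorrect rejection of the null hypothesis. Its probability is denoted by α (the significance level).

Type II error: Incorrect acceptance of the null hypothesis. Its probability is denoted by β; the power of the test is 1 − β.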

Let’s consider an example to illustrate the process of calculating a p-value for Two Sample T-Test:

A researcher wants to investigate whether there is a significant difference in mean height between males and females in a population of university students.

Suppose we have the following data:

Starting with interpreting the process of calculating p-value

Step 1: Formulate the Null Hypothesis (H0):

H0: There is no significant difference in mean height between males and females.

Step 2: Choose an Alternative Hypothesis (H1):

H1: There is a significant difference in mean height between males and females.

Step 3 : Determine the Test Statistic:

The appropriate test statistic for this scenario is the two-sample t-test, which compares the means of two independent groups.

The t-statistic is a measure of the difference between the means of two groups relative to the variability within each group. It is calculated as the difference between the sample means divided by the standard error of the difference. It is also known as the t-value or t-score.

- s1 = First sample’s standard deviation

- s2 = Second sample’s standard deviation

- n1 = First sample’s sample size

- n2 = Second sample’s sample size
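Written out, the verbal definition above (the difference between the sample means divided by the standard error of the difference) takes the form below. The symbols \(\bar{x}_1\) and \(\bar{x}_2\) for the two sample means are assumed notation, since the list only defines the standard deviations and sample sizes. This is the unpooled form of the standard error; a pooled-variance version would first combine \(s_1\) and \(s_2\) into a single estimate.

\[
t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}}
\]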

So, the calculated two-sample t-test statistic (t) is approximately 5.13.

Step 4 : Identify the Distribution of the Test Statistic:

The t-distribution is used for the two-sample t-test . The degrees of freedom for the t-distribution are determined by the sample sizes of the two groups.

The t-distribution is a probability distribution with tails that are thicker than those of the normal distribution.

- where, n1 is total number of values for 1st category.

- n2 is total number of values for 2nd category.

The degrees of freedom (63) represent the variability available in the data to estimate the population parameters. In the context of the two-sample t-test, higher degrees of freedom provide a more precise estimate of the population variance, influencing the shape and characteristics of the t-distribution.

[Figure: T-Statistic]

The t-distribution is symmetric and bell-shaped, similar to the normal distribution. As the degrees of freedom increase, the t-distribution approaches the shape of the standard normal distribution. Practically, it affects the critical values used to determine statistical significance and confidence intervals.

Step 5 : Calculate Critical Value.

To find the critical t-value with a t-statistic of 5.13 and 63 degrees of freedom, we can either consult a t-table or use statistical software.

We can use scipy.stats module in Python to find the critical t-value using below code.
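A minimal sketch of that lookup, assuming a two-tailed test at a significance level of 0.05 (the level is an assumption here; the step itself does not state one). It uses `scipy.stats.t.ppf`, the inverse CDF of the t-distribution, with the t-statistic and degrees of freedom from the steps above.

```python
from scipy import stats

t_statistic = 5.13  # two-sample t-statistic from Step 3
df = 63             # degrees of freedom from Step 4
alpha = 0.05        # assumed significance level

# Two-tailed test: alpha is split evenly between the two tails,
# so the critical value is the (1 - alpha/2) quantile.
t_critical = stats.t.ppf(1 - alpha / 2, df)

print(f"critical t-value: {t_critical:.3f}")
if abs(t_statistic) > t_critical:
    print("t-statistic exceeds the critical value: reject H0")
else:
    print("t-statistic does not exceed the critical value: fail to reject H0")
```

For a one-tailed test the quantile would be `1 - alpha` instead of `1 - alpha / 2`.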

Comparing with T-Statistic:

Because the t-statistic (5.13) is larger than the critical value, the observed difference between the sample means is unlikely to have occurred by random chance alone. Therefore, we reject the null hypothesis.

- p ≤ (α = 0.05) : Reject the null hypothesis. There is sufficient evidence to conclude that the observed effect or relationship is statistically significant, meaning it is unlikely to have occurred by chance alone.

- p > (α = 0.05) : Fail to reject the null hypothesis. The observed effect or relationship does not provide enough evidence to reject the null hypothesis. This does not necessarily mean there is no effect; it simply means the sample data does not provide strong enough evidence to rule out the possibility that the effect is due to chance.

In case the significance level is not specified, consider the below general inferences while interpreting your results.

- If p > .10: not significant

- If p ≤ .10: slightly significant

- If p ≤ .05: significant

- If p ≤ .001: highly significant

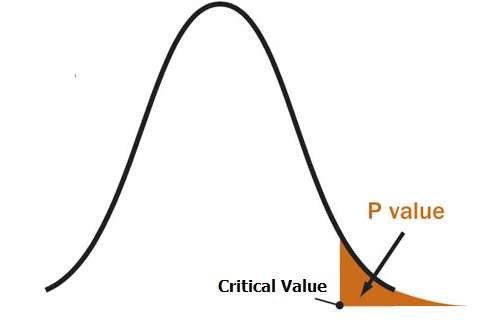

Graphically, the p-value is the area in the tails of the sampling distribution beyond the observed test statistic. [As shown in fig 1]

Fig 1: Graphical Representation

What influences p-value?

The p-value in hypothesis testing is influenced by several factors:

- Sample Size : Larger sample sizes tend to yield smaller p-values, increasing the likelihood of detecting significant effects.

- Effect Size: A larger effect size results in smaller p-values, making it easier to detect a significant relationship.

- Variability in the Data : Greater variability often leads to larger p-values, making it harder to identify significant effects.

- Significance Level : The chosen significance level does not change the p-value itself, but a lower level sets a stricter threshold that the p-value must fall below to count as significant.

- Choice of Test: Different statistical tests may yield different p-values for the same data.

- Assumptions of the Test : Violations of test assumptions can impact p-values.

Understanding these factors is crucial for interpreting p-values accurately and making informed decisions in hypothesis testing.
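The sample-size effect can be seen in a small sketch. It assumes a two-sided z-test with a known population standard deviation, and all numbers (sample mean 101, null mean 100, standard deviation 10) are hypothetical values chosen for illustration. The observed difference is held fixed while only `n` grows.

```python
from math import sqrt
from statistics import NormalDist


def two_sided_z_p_value(sample_mean, null_mean, sigma, n):
    """Two-sided p-value of a z-test for the mean with known sigma."""
    z = (sample_mean - null_mean) / (sigma / sqrt(n))
    return 2.0 * NormalDist().cdf(-abs(z))


sample_sizes = (25, 100, 400, 1600)
p_values = [two_sided_z_p_value(101.0, 100.0, 10.0, n) for n in sample_sizes]

for n, p in zip(sample_sizes, p_values):
    print(f"n = {n:5d}  p-value = {p:.5f}")
```

The same one-point difference goes from clearly non-significant to significant as the sample grows, which is why a small p-value alone says little about the size of an effect.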

Significance of P-value

- The p-value provides a quantitative measure of the strength of the evidence against the null hypothesis.

- Decision-Making in Hypothesis Testing

- P-value serves as a guide for interpreting the results of a statistical test. A small p-value suggests that the observed effect or relationship is statistically significant, but it does not necessarily mean that it is practically or clinically meaningful.

Limitations of P-value

- The p-value is not a direct measure of the effect size, which represents the magnitude of the observed relationship or difference between variables. A small p-value does not necessarily mean that the effect size is large or practically meaningful.

- Influenced by Various Factors

The p-value is a crucial concept in statistical hypothesis testing, serving as a guide for making decisions about the significance of the observed relationship or effect between variables.

Let’s consider a scenario where a tutor believes that the average exam score of their students is equal to the national average (85). The tutor collects a sample of exam scores from their students and performs a one-sample t-test to compare it to the population mean (85).

- The code performs a one-sample t-test to compare the mean of a sample data set to a hypothesized population mean.

- It utilizes the scipy.stats library to calculate the t-statistic and p-value. SciPy is a Python library that provides efficient numerical routines for scientific computing.

- The p-value is compared to a significance level (alpha) to determine whether to reject the null hypothesis.
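A sketch of what such code might look like, using `scipy.stats.ttest_1samp`. The exam scores below are hypothetical, because the tutor's actual sample is not shown here, so this sketch will not reproduce the p-value quoted in the text.

```python
from scipy import stats

# Hypothetical sample of exam scores (the original data is not available).
sample_scores = [78, 92, 85, 88, 81, 90, 84, 89, 79, 86]
population_mean = 85  # national average from the scenario
alpha = 0.05          # significance level

# One-sample t-test: H0 says the mean of the sample's population is 85.
result = stats.ttest_1samp(sample_scores, popmean=population_mean)
t_statistic, p_value = result.statistic, result.pvalue

print(f"t-statistic: {t_statistic:.4f}")
print(f"p-value: {p_value:.4f}")
if p_value <= alpha:
    print("Reject the null hypothesis")
else:
    print("Fail to reject the null hypothesis")
```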

Since 0.7059 > 0.05, we fail to reject the null hypothesis. This means that, based on the sample data, there isn’t enough evidence to claim a significant difference between the exam scores of the tutor’s students and the national average. The tutor would retain the null hypothesis: the average exam score of their students is statistically consistent with the national average.

- During forward and backward selection: When fitting a model (say, a multiple linear regression model), we use the p-value to find the variables that contribute most significantly to predicting the output.

- Effects of various drug medicines: The p-value is widely used in medical research to determine whether the constituents of a drug have the desired effect on humans. It is a strong statistical tool in hypothesis testing, informing important decisions such as drawing a business intelligence inference or determining whether a drug should be used on humans.

The p-value is a crucial concept in statistical hypothesis testing, providing a quantitative measure of the strength of evidence against the null hypothesis. It guides decision-making by comparing the p-value to a chosen significance level, typically 0.05. A small p-value indicates strong evidence against the null hypothesis, suggesting a statistically significant relationship or effect. However, the p-value is influenced by various factors and should be interpreted alongside other considerations, such as effect size and context.

Frequently Asked Questions (FAQs)

Can a p-value be greater than 1?

A p-value is a probability, and probabilities must be between 0 and 1. Therefore, a p-value greater than 1 is not possible.

What does p = 0.01 mean?

It means that the observed test statistic is unlikely to occur by chance if the null hypothesis is true. It represents a 1% chance of observing the test statistic or a more extreme one under the null hypothesis.

Is 0.9 a good p-value?

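No, not if you are looking for evidence of an effect. A p-value is typically considered significant when it is less than or equal to 0.05, which indicates that the observed data would be unlikely if the null hypothesis were true. A p-value of 0.9 means the data are highly compatible with the null hypothesis.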

What is p-value in a model?

It is a measure of the statistical significance of a parameter in the model. It represents the probability of obtaining the observed value of the parameter or a more extreme one, assuming the null hypothesis is true.

Why is p-value so low?

A low p-value means that the observed test statistic is unlikely to occur by chance if the null hypothesis is true. It suggests that the observed relationship or effect is statistically significant and unlikely to be explained by random sampling variation alone.

How Can You Use P-value to Compare Two Different Results of a Hypothesis Test?

Compare the p-values: a lower p-value indicates stronger evidence against the null hypothesis, so the result with the smaller p-value provides the stronger evidence.


What is The Null Hypothesis & When Do You Reject The Null Hypothesis

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.


Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


A null hypothesis is a statistical concept suggesting no significant difference or relationship between measured variables. It’s the default assumption unless empirical evidence proves otherwise.

The null hypothesis states no relationship exists between the two variables being studied (i.e., one variable does not affect the other).

The null hypothesis is the statement that a researcher or an investigator wants to disprove.

Testing the null hypothesis can tell you whether your results are due to the effects of manipulating the independent variable or due to random chance.

How to Write a Null Hypothesis

Null hypotheses (H0) start as research questions that the investigator rephrases as statements indicating no effect or relationship between the independent and dependent variables.

It is a default position that your research aims to challenge or confirm.

For example, if studying the impact of exercise on weight loss, your null hypothesis might be:

There is no significant difference in weight loss between individuals who exercise daily and those who do not.

Examples of Null Hypotheses

| Research Question | Null Hypothesis |

|---|---|

| Do teenagers use cell phones more than adults? | Teenagers and adults use cell phones the same amount. |

| Do tomato plants exhibit a higher rate of growth when planted in compost rather than in soil? | Tomato plants show no difference in growth rates when planted in compost rather than soil. |

| Does daily meditation decrease the incidence of depression? | Daily meditation does not decrease the incidence of depression. |

| Does daily exercise increase test performance? | There is no relationship between daily exercise time and test performance. |

| Does the new vaccine prevent infections? | The vaccine does not affect the infection rate. |

| Does flossing your teeth affect the number of cavities? | Flossing your teeth has no effect on the number of cavities. |

When Do We Reject The Null Hypothesis?

We reject the null hypothesis when the data provide strong enough evidence to conclude that it is likely incorrect. This often occurs when the p-value (the probability of observing data at least as extreme as the sample, given that the null hypothesis is true) is below a predetermined significance level.

If the collected data does not meet the expectation of the null hypothesis, a researcher can conclude that the data lacks sufficient evidence to back up the null hypothesis, and thus the null hypothesis is rejected.

Rejecting the null hypothesis means that the data provide evidence of a relationship between the variables and that the effect is statistically significant ( p < 0.05).

If the data collected from the random sample are not statistically significant, we fail to reject the null hypothesis, and the researchers cannot conclude that a relationship exists between the variables.

You need to perform a statistical test on your data in order to evaluate how consistent it is with the null hypothesis. A p-value is one statistical measurement used to validate a hypothesis against observed data.

Calculating the p-value is a critical part of null-hypothesis significance testing because it quantifies how strongly the sample data contradicts the null hypothesis.

The level of statistical significance is often expressed as a p -value between 0 and 1. The smaller the p-value, the stronger the evidence that you should reject the null hypothesis.

Usually, a researcher uses a confidence level of 95% or 99% (a significance level of 0.05 or 0.01) as a general guideline to decide whether to reject or keep the null.

When your p-value is less than or equal to your significance level, you reject the null hypothesis.

In other words, smaller p-values are taken as stronger evidence against the null hypothesis. Conversely, when the p-value is greater than your significance level, you fail to reject the null hypothesis.

In this case, the sample data provides insufficient evidence to conclude that the effect exists in the population.

Because you can never know with complete certainty whether there is an effect in the population, your inferences about a population will sometimes be incorrect.

When you incorrectly reject the null hypothesis, it’s called a type I error. When you incorrectly fail to reject it, it’s called a type II error.

Why Do We Never Accept The Null Hypothesis?

The reason we do not say “accept the null” is because we are always assuming the null hypothesis is true and then conducting a study to see if there is evidence against it. And, even if we don’t find evidence against it, a null hypothesis is not accepted.

A lack of evidence only means that you haven’t proven that something exists. It does not prove that something doesn’t exist.

It is risky to conclude that the null hypothesis is true merely because we did not find evidence to reject it. It is always possible that researchers elsewhere have disproved the null hypothesis, so we cannot accept it as true, but instead, we state that we failed to reject the null.

One can either reject the null hypothesis, or fail to reject it, but can never accept it.

Why Do We Use The Null Hypothesis?

We can never prove with 100% certainty that a hypothesis is true; we can only collect evidence that supports a theory. However, testing a hypothesis can set the stage for rejecting or failing to reject this hypothesis within a certain confidence level.

The null hypothesis is useful because it can tell us whether the results of our study are due to random chance or the manipulation of a variable (with a certain level of confidence).

A null hypothesis is rejected if the measured data would be significantly unlikely to occur were it true, and it is retained (not rejected) if the observed outcome is consistent with the position it holds.

Rejecting the null hypothesis sets the stage for further experimentation to see if a relationship between two variables exists.

Hypothesis testing is a critical part of the scientific method as it helps decide whether the results of a research study support a particular theory about a given population. Hypothesis testing is a systematic way of backing up researchers’ predictions with statistical analysis.

It helps provide sufficient statistical evidence that either favors or rejects a certain hypothesis about the population parameter.

Purpose of a Null Hypothesis

- The primary purpose of the null hypothesis is to disprove an assumption.

- Whether rejected or accepted, the null hypothesis can help further progress a theory in many scientific cases.

- A null hypothesis can be used to ascertain how consistent the outcomes of multiple studies are.

Do you always need both a Null Hypothesis and an Alternative Hypothesis?

The null (H0) and alternative (Ha or H1) hypotheses are two competing claims that describe the effect of the independent variable on the dependent variable. They are mutually exclusive, which means that only one of the two hypotheses can be true.

While the null hypothesis states that there is no effect in the population, an alternative hypothesis states that there is an effect or relationship between two variables.

The goal of hypothesis testing is to make inferences about a population based on a sample. In order to undertake hypothesis testing, you must express your research hypothesis as a null and alternative hypothesis. Both hypotheses are required to cover every possible outcome of the study.

What is the difference between a null hypothesis and an alternative hypothesis?

The alternative hypothesis is the complement to the null hypothesis. The null hypothesis states that there is no effect or no relationship between variables, while the alternative hypothesis claims that there is an effect or relationship in the population.

It is the claim that you expect or hope will be true. The null hypothesis and the alternative hypothesis are always mutually exclusive, meaning that only one can be true at a time.

What are some problems with the null hypothesis?

One major problem with the null hypothesis is that researchers typically assume that failing to reject the null is a failure of the experiment. However, rejecting or failing to reject a hypothesis is an informative result either way. Even if the null is not refuted, the researchers will still learn something new.

Why can a null hypothesis not be accepted?

We can either reject or fail to reject a null hypothesis, but never accept it. If your test fails to detect an effect, this is not proof that the effect doesn’t exist. It just means that your sample did not have enough evidence to conclude that it exists.

We can’t accept a null hypothesis because a lack of evidence for an effect does not prove that the effect does not exist. Instead, we fail to reject it.

Failing to reject the null indicates that the sample did not provide sufficient evidence to conclude that an effect exists.

If the p-value is greater than the significance level, then you fail to reject the null hypothesis.

Is a null hypothesis directional or non-directional?

A hypothesis test can contain either a directional or a non-directional alternative hypothesis. A directional hypothesis is one that contains the less than (“<”) or greater than (“>”) sign.

A nondirectional hypothesis contains the not equal sign (“≠”). However, a null hypothesis is neither directional nor non-directional.

A null hypothesis is a prediction that there will be no change, relationship, or difference between two variables.

The directional hypothesis or nondirectional hypothesis would then be considered alternative hypotheses to the null hypothesis.


p-value Calculator


Welcome to our p-value calculator! You will never again have to wonder how to find the p-value, as here you can determine the one-sided and two-sided p-values from test statistics, following all the most popular distributions: normal, t-Student, chi-squared, and Snedecor's F.

P-values appear all over science, yet many people find the concept a bit intimidating. Don't worry – in this article, we will explain not only what the p-value is but also how to interpret p-values correctly . Have you ever been curious about how to calculate the p-value by hand? We provide you with all the necessary formulae as well!

🙋 If you want to revise some basics from statistics, our normal distribution calculator is an excellent place to start.

What is p-value?

Formally, the p-value is the probability that the test statistic will produce values at least as extreme as the value it produced for your sample . It is crucial to remember that this probability is calculated under the assumption that the null hypothesis H 0 is true !

More intuitively, p-value answers the question:

Assuming that I live in a world where the null hypothesis holds, how probable is it that, for another sample, the test I'm performing will generate a value at least as extreme as the one I observed for the sample I already have?

It is the alternative hypothesis that determines what "extreme" actually means , so the p-value depends on the alternative hypothesis that you state: left-tailed, right-tailed, or two-tailed. In the formulas below, S stands for a test statistic, x for the value it produced for a given sample, and Pr(event | H 0 ) is the probability of an event, calculated under the assumption that H 0 is true:

Left-tailed test: p-value = Pr(S ≤ x | H 0 )

Right-tailed test: p-value = Pr(S ≥ x | H 0 )

Two-tailed test:

p-value = 2 × min{Pr(S ≤ x | H 0 ), Pr(S ≥ x | H 0 )}

(By min{a,b} , we denote the smaller number out of a and b .)

If the distribution of the test statistic under H 0 is symmetric about 0 , then: p-value = 2 × Pr(S ≥ |x| | H 0 )

or, equivalently: p-value = 2 × Pr(S ≤ -|x| | H 0 )

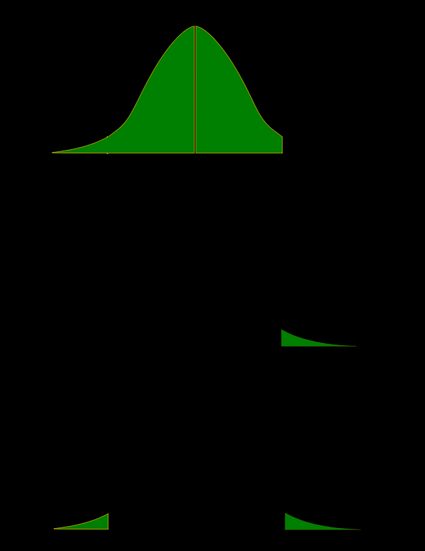

As a picture is worth a thousand words, let us illustrate these definitions. Here, we use the fact that the probability can be neatly depicted as the area under the density curve for a given distribution. We give two sets of pictures: one for a symmetric distribution and the other for a skewed (non-symmetric) distribution.

- Symmetric case: normal distribution:

- Non-symmetric case: chi-squared distribution:

In the last picture (two-tailed p-value for skewed distribution), the area of the left-hand side is equal to the area of the right-hand side.

How do I calculate p-value from test statistic?

To determine the p-value, you need to know the distribution of your test statistic under the assumption that the null hypothesis is true . Then, with the help of the cumulative distribution function ( cdf ) of this distribution, we can express the probability of the test statistics being at least as extreme as its value x for the sample:

Left-tailed test:

p-value = cdf(x) .

Right-tailed test:

p-value = 1 - cdf(x) .

Two-tailed test:

p-value = 2 × min{cdf(x) , 1 - cdf(x)} .

If the distribution of the test statistic under H 0 is symmetric about 0 , then a two-sided p-value can be simplified to p-value = 2 × cdf(-|x|) , or, equivalently, as p-value = 2 - 2 × cdf(|x|) .
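The three cdf-based formulas can be sketched as one small helper. The function name `p_value_from_cdf` and the test-statistic value `1.5` are hypothetical; the standard normal cdf from Python's `statistics.NormalDist` stands in for whatever distribution the test statistic follows under the null hypothesis.

```python
from statistics import NormalDist


def p_value_from_cdf(cdf, x, tail="two-tailed"):
    """p-value for test-statistic value x, given the cdf under H0."""
    lower = cdf(x)        # Pr(S <= x | H0)
    upper = 1.0 - lower   # Pr(S >= x | H0) for a continuous distribution
    if tail == "left":
        return lower
    if tail == "right":
        return upper
    if tail == "two-tailed":
        return 2.0 * min(lower, upper)
    raise ValueError(f"unknown tail: {tail!r}")


standard_normal_cdf = NormalDist().cdf
x = 1.5  # hypothetical value of the test statistic

p_left = p_value_from_cdf(standard_normal_cdf, x, "left")
p_right = p_value_from_cdf(standard_normal_cdf, x, "right")
p_two = p_value_from_cdf(standard_normal_cdf, x, "two-tailed")

print(f"left-tailed:  {p_left:.4f}")
print(f"right-tailed: {p_right:.4f}")
print(f"two-tailed:   {p_two:.4f}")
```

Because the standard normal distribution is symmetric about 0, the two-tailed result here agrees with the simplified form 2 × cdf(-|x|).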

The probability distributions that are most widespread in hypothesis testing tend to have complicated cdf formulae, and finding the p-value by hand may not be possible. You'll likely need to resort to a computer or to a statistical table, where people have gathered approximate cdf values.

Well, you now know how to calculate the p-value, but… why do you need to calculate this number in the first place? In hypothesis testing, the p-value approach is an alternative to the critical value approach . Recall that the latter requires researchers to pre-set the significance level, α, which is the probability of rejecting the null hypothesis when it is true (so of type I error ). Once you have your p-value, you just need to compare it with any given α to quickly decide whether or not to reject the null hypothesis at that significance level, α. For details, check the next section, where we explain how to interpret p-values.

How to interpret p-value

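As we have mentioned above, the p-value is the answer to the following question:

Assuming that I live in a world where the null hypothesis holds, how probable is it that, for another sample, the test I'm performing will generate a value at least as extreme as the one I observed for the sample I already have?

What does that mean for you? Well, you've got two options: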

- A high p-value means that your data is highly compatible with the null hypothesis; and

- A small p-value provides evidence against the null hypothesis , as it means that your result would be very improbable if the null hypothesis were true.

However, it may happen that the null hypothesis is true, but your sample is highly unusual! For example, imagine we studied the effect of a new drug and got a p-value of 0.03 . This means that in 3% of similar studies, random chance alone would still be able to produce the value of the test statistic that we obtained, or a value even more extreme, even if the drug had no effect at all!

The question "what is p-value" can also be answered as follows: p-value is the smallest level of significance at which the null hypothesis would be rejected. So, if you now want to make a decision on the null hypothesis at some significance level α , just compare your p-value with α :

- If p-value ≤ α , then you reject the null hypothesis and accept the alternative hypothesis; and

- If p-value > α , then you don't have enough evidence to reject the null hypothesis.

Obviously, the fate of the null hypothesis depends on α . For instance, if the p-value was 0.03 , we would reject the null hypothesis at a significance level of 0.05 , but not at a level of 0.01 . That's why the significance level should be stated in advance and not adapted conveniently after the p-value has been established! A significance level of 0.05 is the most common value, but there's nothing magical about it. It's always best to report the p-value, and allow the reader to make their own conclusions.
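The dependence on α is easy to check with a tiny sketch. The helper name `decide` is hypothetical; it applies the comparison rule described above to the p-value of 0.03 from the example.

```python
def decide(p_value, alpha):
    """Decision about the null hypothesis at significance level alpha."""
    return "reject H0" if p_value <= alpha else "fail to reject H0"


p_value = 0.03
for alpha in (0.10, 0.05, 0.01):
    print(f"alpha = {alpha:.2f}: {decide(p_value, alpha)}")
```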

Also, bear in mind that subject area expertise (and common reason) is crucial. Otherwise, by mindlessly applying statistical principles, you can easily arrive at statistically significant results despite the conclusion being 100% untrue.

How to use the p-value calculator to find p-value from test statistic

As our p-value calculator is here at your service, you no longer need to wonder how to find p-value from all those complicated test statistics! Here are the steps you need to follow:

Pick the alternative hypothesis : two-tailed, right-tailed, or left-tailed.

Tell us the distribution of your test statistic under the null hypothesis: is it N(0,1), t-Student, chi-squared, or Snedecor's F? If you are unsure, check the sections below, as they are devoted to these distributions.

If needed, specify the degrees of freedom of the test statistic's distribution.

Enter the value of test statistic computed for your data sample.

By default, the calculator uses the significance level of 0.05.

Our calculator determines the p-value from the test statistic and provides the decision to be made about the null hypothesis.

How do I find p-value from z-score?

In terms of the cumulative distribution function (cdf) of the standard normal distribution, which is traditionally denoted by Φ , the p-value is given by the following formulae:

Left-tailed z-test:

p-value = Φ(Z score )

Right-tailed z-test:

p-value = 1 - Φ(Z score )

Two-tailed z-test:

p-value = 2 × Φ(−|Z score |)

or, equivalently:

p-value = 2 - 2 × Φ(|Z score |)

🙋 To learn more about Z-tests, head to Omni's Z-test calculator .

We use the Z-score if the test statistic approximately follows the standard normal distribution N(0,1) . Thanks to the central limit theorem, you can count on the approximation if you have a large sample (say at least 50 data points) and treat your distribution as normal.

A Z-test most often refers to testing the population mean , or the difference between two population means, in particular between two proportions. You can also find Z-tests in maximum likelihood estimations.

How do I find p-value from t?

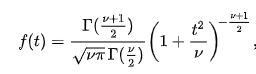

The p-value from the t-score is given by the following formulae, in which cdf t,d stands for the cumulative distribution function of the t-Student distribution with d degrees of freedom:

Left-tailed t-test:

p-value = cdf t,d (t score )

Right-tailed t-test:

p-value = 1 - cdf t,d (t score )

Two-tailed t-test:

p-value = 2 × cdf t,d (−|t score |)

or, equivalently:

p-value = 2 - 2 × cdf t,d (|t score |)
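As a rough illustration, these formulas map directly onto SciPy's t-distribution functions `scipy.stats.t.cdf` and `scipy.stats.t.sf` (the survival function, equal to 1 - cdf). The helper name `t_p_value` and the example inputs (a t-score of 2.0 with 10 degrees of freedom) are hypothetical.

```python
from scipy import stats


def t_p_value(t_score, df, tail="two-tailed"):
    """p-value for a t-score with df degrees of freedom."""
    if tail == "left":
        return stats.t.cdf(t_score, df)
    if tail == "right":
        return stats.t.sf(t_score, df)  # sf(x) = 1 - cdf(x)
    if tail == "two-tailed":
        # 2 * sf(|t|) equals 2 - 2 * cdf(|t|) by symmetry about 0.
        return 2.0 * stats.t.sf(abs(t_score), df)
    raise ValueError(f"unknown tail: {tail!r}")


t_score, df = 2.0, 10  # hypothetical inputs
p_left = t_p_value(t_score, df, "left")
p_right = t_p_value(t_score, df, "right")
p_two = t_p_value(t_score, df, "two-tailed")

print(f"left-tailed:  {p_left:.4f}")
print(f"right-tailed: {p_right:.4f}")
print(f"two-tailed:   {p_two:.4f}")
```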

Use the t-score option if your test statistic follows the t-Student distribution . This distribution has a shape similar to N(0,1) (bell-shaped and symmetric) but has heavier tails – the exact shape depends on the parameter called the degrees of freedom . If the number of degrees of freedom is large (>30), which generically happens for large samples, the t-Student distribution is practically indistinguishable from the normal distribution N(0,1).

The most common t-tests are those for population means with an unknown population standard deviation, or for the difference between means of two populations , with either equal or unequal yet unknown population standard deviations. There's also a t-test for paired (dependent) samples .

🙋 To get more insights into t-statistics, we recommend using our t-test calculator .

p-value from chi-square score (χ² score)

Use the χ²-score option when performing a test in which the test statistic follows the χ²-distribution .

This distribution arises if, for example, you take the sum of squared variables, each following the normal distribution N(0,1). Remember to check the number of degrees of freedom of the χ²-distribution of your test statistic!

How to find the p-value from chi-square-score ? You can do it with the help of the following formulae, in which cdf χ²,d denotes the cumulative distribution function of the χ²-distribution with d degrees of freedom:

Left-tailed χ²-test:

p-value = cdf χ²,d (χ² score )

Right-tailed χ²-test:

p-value = 1 - cdf χ²,d (χ² score )

Remember that χ²-tests for goodness-of-fit and independence are right-tailed tests! (see below)

Two-tailed χ²-test:

p-value = 2 × min{cdf χ²,d (χ² score ), 1 - cdf χ²,d (χ² score )}

(By min{a,b} , we denote the smaller of the numbers a and b .)

The most popular tests which lead to a χ²-score are the following:

Testing whether the variance of normally distributed data has some pre-determined value. In this case, the test statistic has the χ²-distribution with n - 1 degrees of freedom, where n is the sample size. This can be a one-tailed or two-tailed test .

Goodness-of-fit test checks whether the empirical (sample) distribution agrees with some expected probability distribution. In this case, the test statistic follows the χ²-distribution with k - 1 degrees of freedom, where k is the number of classes into which the sample is divided. This is a right-tailed test .

Independence test is used to determine if there is a statistically significant relationship between two variables. In this case, its test statistic is based on the contingency table and follows the χ²-distribution with (r - 1)(c - 1) degrees of freedom, where r is the number of rows, and c is the number of columns in this contingency table. This also is a right-tailed test .
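To make the independence test concrete, here is a sketch that computes the χ² score from a contingency table and then the right-tailed p-value with `scipy.stats.chi2.sf`. The 2×3 table of counts is hypothetical; the expected counts use the usual (row total × column total) / grand total rule.

```python
from scipy import stats

# Hypothetical 2x3 contingency table of observed counts.
observed = [
    [20, 30, 50],
    [30, 30, 40],
]

row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
grand_total = sum(row_totals)

# Chi-squared score: sum over cells of (observed - expected)^2 / expected.
chi2_score = 0.0
for i, row in enumerate(observed):
    for j, obs in enumerate(row):
        expected = row_totals[i] * col_totals[j] / grand_total
        chi2_score += (obs - expected) ** 2 / expected

# Degrees of freedom: (rows - 1) * (columns - 1).
df = (len(observed) - 1) * (len(observed[0]) - 1)

# Right-tailed p-value: 1 - cdf, computed via the survival function.
p_value = stats.chi2.sf(chi2_score, df)

print(f"chi-squared score: {chi2_score:.4f}")
print(f"degrees of freedom: {df}")
print(f"p-value: {p_value:.4f}")
```

SciPy also offers `scipy.stats.chi2_contingency`, which performs these steps in one call; the manual version is shown only to mirror the formulas above.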

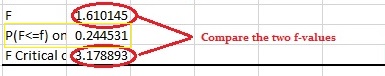

p-value from F-score

Finally, the F-score option should be used when you perform a test in which the test statistic follows the F-distribution, also known as the Fisher–Snedecor distribution. The exact shape of an F-distribution depends on two parameters, both called degrees of freedom.

To see where those degrees of freedom come from, consider the independent random variables X and Y, which follow χ²-distributions with \(d_1\) and \(d_2\) degrees of freedom, respectively. In that case, the ratio \((X/d_1)/(Y/d_2)\) follows the F-distribution with \((d_1, d_2)\) degrees of freedom. For this reason, the two parameters \(d_1\) and \(d_2\) are also called the numerator and denominator degrees of freedom.
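The ratio construction can be simulated directly. In this sketch (function names, seed, and sample size are arbitrary), χ² variables are drawn as Gamma variables with shape d/2 and scale 2, which is a standard equivalence. The sample mean of the simulated ratios is compared with the known mean of the F-distribution, which is d₂/(d₂ - 2) for d₂ > 2.

```python
import random

def chi_square_draw(d, rng):
    """Chi-square draw with d degrees of freedom, as Gamma(shape=d/2, scale=2)."""
    return rng.gammavariate(d / 2.0, 2.0)

def f_draw(d1, d2, rng):
    """One draw of (X/d1)/(Y/d2) with independent chi-square X and Y."""
    x = chi_square_draw(d1, rng)
    y = chi_square_draw(d2, rng)
    return (x / d1) / (y / d2)

rng = random.Random(1)
d1, d2 = 5, 10
draws = [f_draw(d1, d2, rng) for _ in range(100000)]
sample_mean = sum(draws) / len(draws)
print(f"sample mean {sample_mean:.3f} (theory: {d2 / (d2 - 2):.3f})")
```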

The p-value from an F-score is given by the following formulae, where we let \(\mathrm{cdf}_{F,d_1,d_2}\) denote the cumulative distribution function of the F-distribution with \((d_1, d_2)\) degrees of freedom:

Left-tailed F-test:

\(\text{p-value} = \mathrm{cdf}_{F,d_1,d_2}(F_{\text{score}})\)

Right-tailed F-test:

\(\text{p-value} = 1 - \mathrm{cdf}_{F,d_1,d_2}(F_{\text{score}})\)

Two-tailed F-test:

\(\text{p-value} = 2 \times \min\{\mathrm{cdf}_{F,d_1,d_2}(F_{\text{score}}),\ 1 - \mathrm{cdf}_{F,d_1,d_2}(F_{\text{score}})\}\)
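A plain-Python sketch of these formulae follows. The helper names are hypothetical. The cdf uses the standard identity that the F cdf at x equals the regularized incomplete beta function I_z(d₁/2, d₂/2) with z = d₁x/(d₁x + d₂), evaluated here with a continued fraction. A library routine such as `scipy.stats.f.cdf` would normally be preferred in practice.

```python
import math

def _beta_continued_fraction(a, b, x, tol=1e-12, max_iter=500):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    if abs(d) < tiny:
        d = tiny
    d = 1.0 / d
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the recurrence.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        if abs(d) < tiny:
            d = tiny
        c = 1.0 + aa / c
        if abs(c) < tiny:
            c = tiny
        d = 1.0 / d
        h *= d * c
        # Odd step of the recurrence.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        if abs(d) < tiny:
            d = tiny
        c = 1.0 + aa / c
        if abs(c) < tiny:
            c = tiny
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < tol:
            break
    return h

def regularized_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_continued_fraction(a, b, x) / a
    # Use the symmetry I_x(a, b) = 1 - I_{1-x}(b, a) for faster convergence.
    return 1.0 - front * _beta_continued_fraction(b, a, 1.0 - x) / b

def f_cdf(score, d1, d2):
    """cdf of the F-distribution with (d1, d2) degrees of freedom."""
    if score <= 0.0:
        return 0.0
    z = d1 * score / (d1 * score + d2)
    return regularized_beta(d1 / 2.0, d2 / 2.0, z)

def f_p_value(score, d1, d2, tail="right"):
    """p-value for a left-, right-, or two-tailed F-test."""
    cdf = f_cdf(score, d1, d2)
    if tail == "left":
        return cdf
    if tail == "right":
        return 1.0 - cdf
    if tail == "two":
        return 2.0 * min(cdf, 1.0 - cdf)
    raise ValueError("tail must be 'left', 'right', or 'two'")

print(f_p_value(3.0, d1=2, d2=10, tail="right"))
```

When d₁ = 2, the right-tailed p-value has the closed form (1 + 2F/d₂)^(-d₂/2), which can be used to check the numerical result.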

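Below we list the most important tests that produce F-scores. They are usually carried out as right-tailed tests, although the test for equality of variances can also be two-tailed when the alternative hypothesis is simply that the two variances differ.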

- A test for the equality of variances in two normally distributed populations. Its test statistic follows the F-distribution with (n - 1, m - 1) degrees of freedom, where n and m are the respective sample sizes.

- ANOVA is used to test the equality of means in three or more groups that come from normally distributed populations with equal variances. We arrive at the F-distribution with (k - 1, n - k) degrees of freedom, where k is the number of groups, and n is the total sample size (in all groups together).

- A test for the overall significance of regression analysis. The test statistic has an F-distribution with (k - 1, n - k) degrees of freedom, where n is the sample size, and k is the number of variables (including the intercept).

Once the above test has established the presence of a linear relationship in your data sample, you can calculate the coefficient of determination, R², which indicates the strength of this relationship. You can do it by hand or use our coefficient of determination calculator.

- A test to compare two nested regression models. The test statistic follows the F-distribution with \((k_2 - k_1, n - k_2)\) degrees of freedom, where \(k_1\) and \(k_2\) are the numbers of variables in the smaller and bigger models, respectively, and n is the sample size.

You may notice that the F-test of overall significance is a particular form of the F-test for comparing two nested models: it tests whether our model does significantly better than the model with no predictors (i.e., the intercept-only model).
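As one worked instance of the tests listed above, the sketch below computes a one-way ANOVA F-score and its (k - 1, n - k) degrees of freedom from raw data. The data and function names are invented for illustration. The F-score is computed in the usual way, as the ratio of the between-group mean square to the within-group mean square. Because the example has k = 3 groups, the numerator degrees of freedom equal 2, and the right-tailed p-value then has the closed form (1 + 2F/d₂)^(-d₂/2); for other values of k a general F cdf is required.

```python
def one_way_anova(groups):
    """Return the F-score and the (k - 1, n - k) degrees of freedom."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    ss_between = 0.0
    ss_within = 0.0
    for g in groups:
        group_mean = sum(g) / len(g)
        ss_between += len(g) * (group_mean - grand_mean) ** 2
        ss_within += sum((x - group_mean) ** 2 for x in g)
    d1, d2 = k - 1, n - k
    f_score = (ss_between / d1) / (ss_within / d2)
    return f_score, d1, d2

def right_tail_p_numerator_df2(f_score, d2):
    """Right-tailed p-value; valid ONLY when the numerator df equals 2."""
    return (1.0 + 2.0 * f_score / d2) ** (-d2 / 2.0)

# Made-up measurements for three groups of three observations each.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_score, d1, d2 = one_way_anova(groups)
p_value = right_tail_p_numerator_df2(f_score, d2)
print(f"F = {f_score:.2f} with ({d1}, {d2}) degrees of freedom, p = {p_value:.4f}")
```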

Can a p-value be negative?

No, the p-value cannot be negative. This is because probabilities cannot be negative, and the p-value is the probability of the test statistic satisfying certain conditions.

What does a high p-value mean?

A high p-value means that under the null hypothesis, there is a high probability that the test statistic for another sample would be at least as extreme as the one observed in the sample you already have. A high p-value doesn't allow you to reject the null hypothesis.

What does a low p-value mean?

A low p-value means that under the null hypothesis, there is little probability that the test statistic for another sample would be at least as extreme as the one observed in the sample you already have. A low p-value is evidence in favor of the alternative hypothesis: if it is less than or equal to the chosen significance level α, you can reject the null hypothesis.
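A short simulation can make the "another sample" reading concrete. In this sketch, many samples are drawn from a population where the null hypothesis (mean 0, known standard deviation 1) really is true, and a two-tailed Z-test p-value is computed for each one using the standard formula 2 × (1 - Φ(|z|)). The function names, sample size, and seed are arbitrary choices. Low p-values should then be rare: about a fraction α of the samples should give p ≤ α.

```python
import math
import random

def two_tailed_z_p_value(z):
    """Two-tailed p-value for a Z-score: 2 * (1 - Phi(|z|))."""
    phi = 0.5 * (1.0 + math.erf(abs(z) / math.sqrt(2.0)))
    return 2.0 * (1.0 - phi)

def rejection_rate_under_null(alpha=0.05, n=25, trials=5000, seed=42):
    """Fraction of samples with p-value <= alpha when the null is true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        sample_mean = sum(sample) / n
        # Z-score for H0: mean = 0 with known standard deviation 1.
        z = sample_mean * math.sqrt(n)
        if two_tailed_z_p_value(z) <= alpha:
            rejections += 1
    return rejections / trials

rate = rejection_rate_under_null()
print(f"share of samples with p <= 0.05 under the null: {rate:.3f}")
```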
