Research Methodology

40 MCQ on Research Methodology

Q1. Which of the following statements is correct? (A) Reliability ensures validity (B) Validity ensures reliability (C) Reliability and validity are independent of each other (D) Reliability does not depend on objectivity

Answer: (C)

Q2. Which of the following statements is correct? (A) Objectives of research are stated in the first chapter of the thesis (B) A researcher must possess analytical ability (C) Variability is the source of the problem (D) All the above

Answer: (D)

Q3. The first step of research is: (A) Selecting a problem (B) Searching a problem (C) Finding a problem (D) Identifying a problem

Q4. Research can be conducted by a person who: (A) holds a postgraduate degree (B) has studied research methodology (C) possesses thinking and reasoning ability (D) is a hard worker

Answer: (B)

Q5. Research can be classified as: (A) Basic, Applied and Action Research (B) Philosophical, Historical, Survey and Experimental Research (C) Quantitative and Qualitative Research (D) All the above

Q6. To test a null hypothesis, a researcher uses: (A) t test (B) ANOVA (C) χ² (D) factorial analysis

Answer: (B)

Q7. Bibliography given in a research report: (A) shows vast knowledge of the researcher (B) helps those interested in further research (C) has no relevance to research (D) all the above

Q8. A research problem is feasible only when: (A) it has utility and relevance (B) it is researchable (C) it is new and adds something to knowledge (D) all the above

Q9. The study in which the investigators attempt to trace an effect is known as: (A) Survey Research (B) Summative Research (C) Historical Research (D) ‘Ex-post Facto’ Research

Answer: (D)

Q10. Generalized conclusion on the basis of a sample is technically known as: (A) Data analysis and interpretation (B) Parameter inference (C) Statistical inference (D) All of the above

Answer: (C)

Q11. Fundamental research reflects the ability to: (A) Synthesize new ideals (B) Expound new principles (C) Evaluate the existing material concerning research (D) Study the existing literature regarding various topics

Q12. The main characteristic of scientific research is: (A) empirical (B) theoretical (C) experimental (D) all of the above

Q13. Authenticity of a research finding is its: (A) Originality (B) Validity (C) Objectivity (D) All of the above

Q14. Which technique is generally followed when the population is finite? (A) Area Sampling Technique (B) Purposive Sampling Technique (C) Systematic Sampling Technique (D) None of the above

Q15. A research problem is selected from the standpoint of: (A) Researcher’s interest (B) Financial support (C) Social relevance (D) Availability of relevant literature

Q16. Research is always: (A) verifying the old knowledge (B) exploring new knowledge (C) filling the gap between knowledge (D) all of these

Q17. Research is (A) Searching again and again (B) Finding a solution to any problem (C) Working in a scientific way to search for the truth of any problem (D) None of the above

Q20. A common test in research demands much priority on (A) Reliability (B) Usability (C) Objectivity (D) All of the above

Q21. Which of the following is the first step in starting the research process? (A) Searching sources of information to locate the problem. (B) Survey of related literature (C) Identification of the problem (D) Searching for solutions to the problem

Answer: (C)

Q22. Which correlation coefficient best explains the relationship between creativity and intelligence? (A) 1.00 (B) 0.6 (C) 0.5 (D) 0.3

Q23. Manipulation is always a part of (A) Historical research (B) Fundamental research (C) Descriptive research (D) Experimental research

Explanation: In experimental research, researchers deliberately manipulate one or more independent variables to observe their effects on dependent variables. The goal is to establish cause-and-effect relationships and test hypotheses. This type of research often involves control groups and random assignment to ensure the validity of the findings. Manipulation is an essential aspect of experimental research to assess the impact of specific variables and draw conclusions about their influence on the outcome.
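The random assignment mentioned in the explanation above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `randomly_assign`, the group labels, and the participant IDs are hypothetical and do not come from the quiz.

```python
import random


def randomly_assign(participants, groups=("treatment", "control"), seed=None):
    """Shuffle participants and deal them round-robin into the given groups.

    Hypothetical helper for illustration; `seed` only makes the shuffle repeatable.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)  # chance alone decides the order
    assignment = {name: [] for name in groups}
    for position, person in enumerate(shuffled):
        # Round-robin dealing keeps group sizes within one of each other.
        assignment[groups[position % len(groups)]].append(person)
    return assignment


# Twenty hypothetical participant IDs split into two groups of ten.
example = randomly_assign(range(1, 21), seed=42)
for name, members in example.items():
    print(name, sorted(members))
```

Because chance decides group membership, the researcher can manipulate the independent variable in the treatment group only and attribute systematic differences in the outcome to that manipulation.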

Q24. The research which is exploring new facts through the study of the past is called (A) Philosophical research (B) Historical research (C) Mythological research (D) Content analysis

Q25. A null hypothesis is (A) when there is no difference between the variables (B) the same as research hypothesis (C) subjective in nature (D) when there is difference between the variables

Q26. We use Factorial Analysis: (A) To know the relationship between two variables (B) To test the Hypothesis (C) To know the difference between two variables (D) To know the difference among the many variables

Explanation: Factorial analysis, specifically factorial analysis of variance (ANOVA), is used to investigate the effects of two or more independent variables on a dependent variable. It helps to determine whether there are significant differences or interactions among the independent variables and their combined effects on the dependent variable.
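To make the idea of main effects and interactions concrete, here is a minimal sketch for a 2x2 factorial design. It only computes descriptive effects from cell means; a full factorial ANOVA would additionally partition the variance and compute F ratios. The function name `factorial_2x2_effects` and the scores are made up for illustration.

```python
from statistics import mean


def factorial_2x2_effects(cells):
    """Return (main effect of A, main effect of B, interaction) for a 2x2 design.

    `cells` maps (a_level, b_level) -> list of scores, with levels coded 0 or 1.
    Hypothetical helper; it describes the effects and does not test significance.
    """
    m = {key: mean(scores) for key, scores in cells.items()}
    # Main effect of A: average of the A=1 cells minus average of the A=0 cells.
    main_a = mean([m[(1, 0)], m[(1, 1)]]) - mean([m[(0, 0)], m[(0, 1)]])
    # Main effect of B: average of the B=1 cells minus average of the B=0 cells.
    main_b = mean([m[(0, 1)], m[(1, 1)]]) - mean([m[(0, 0)], m[(1, 0)]])
    # Interaction: does the effect of A differ between the two levels of B?
    interaction = (m[(1, 1)] - m[(0, 1)]) - (m[(1, 0)] - m[(0, 0)])
    return main_a, main_b, interaction


# Made-up scores for the four cells of the design.
scores = {
    (0, 0): [10, 12],
    (0, 1): [14, 16],
    (1, 0): [20, 22],
    (1, 1): [34, 36],
}
print(factorial_2x2_effects(scores))
```

With these made-up scores, the main effect of A is 15 points, the main effect of B is 9 points, and the interaction contrast is 10 points, meaning the effect of A is larger when B is at its second level.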

Q27. Which of the following is classified in the category of the developmental research? (A) Philosophical research (B) Action research (C) Descriptive research (D) All the above

Q28. Action-research is: (A) An applied research (B) A research carried out to solve immediate problems (C) A longitudinal research (D) All the above

Explanation: Action research is an approach to research that encompasses all the options mentioned. It is an applied research method where researchers work collaboratively with practitioners or stakeholders to address immediate problems or issues in a real-world context. It is often conducted over a period of time, making it a longitudinal research approach. So, all the options (A) An applied research, (B) A research carried out to solve immediate problems, and (C) A longitudinal research are correct when describing action research.

Q29. The basis on which assumptions are formulated: (A) Cultural background of the country (B) Universities (C) Specific characteristics of the castes (D) All of these

Q30. How can the objectivity of the research be enhanced? (A) Through its impartiality (B) Through its reliability (C) Through its validity (D) All of these

Q31. A research problem is not feasible only when: (A) it is researchable (B) it is new and adds something to the knowledge (C) it consists of independent and dependent variables (D) it has utility and relevance

Explanation: A research problem is considered feasible when it can be studied and investigated using appropriate research methods and resources. The presence of independent and dependent variables is not a factor that determines the feasibility of a research problem. Instead, it is an essential component of a well-defined research problem that helps in formulating research questions or hypotheses. Feasibility depends on whether the research problem can be addressed and answered within the constraints of available time, resources, and methods. Options (A), (B), and (D) are more relevant to the feasibility of a research problem.

Q32. The process not needed in experimental research is: (A) Observation (B) Manipulation and replication (C) Controlling (D) Reference collection

Explanation: In experimental research, reference collection is not a part of the process.

Q33. When a research problem is related to heterogeneous population, the most suitable sampling method is: (A) Cluster Sampling (B) Stratified Sampling (C) Convenient Sampling (D) Lottery Method

Explanation: When a research problem involves a heterogeneous population, stratified sampling is the most suitable sampling method. Stratified sampling involves dividing the population into subgroups or strata based on certain characteristics or variables. Each stratum represents a relatively homogeneous subset of the population. Then, a random sample is taken from each stratum in proportion to its size or importance in the population. This method ensures that the sample is representative of the diversity present in the population and allows for more precise estimates of population parameters for each subgroup.
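The proportional stratified sampling described above can be sketched as follows. This is a minimal illustration, assuming the population fits in a list and that a caller-supplied function `stratum_of` (a hypothetical name) returns each unit's stratum.

```python
import random
from collections import defaultdict


def stratified_sample(population, stratum_of, sample_size, seed=None):
    """Draw a random sample from each stratum in proportion to its size.

    Hypothetical helper: rounding means the total can differ slightly from
    `sample_size` when the proportions are not whole numbers.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)  # group units by stratum

    total = len(population)
    sample = []
    for units in strata.values():
        # Proportional allocation, with at least one unit per stratum.
        k = max(1, round(sample_size * len(units) / total))
        k = min(k, len(units))
        sample.extend(rng.sample(units, k))  # simple random sample within the stratum
    return sample


# Made-up population: 60 urban and 40 rural respondents.
people = [("urban", i) for i in range(60)] + [("rural", i) for i in range(40)]
chosen = stratified_sample(people, lambda person: person[0], sample_size=10, seed=0)
print(chosen)
```

In this made-up example the sample of 10 contains 6 urban and 4 rural respondents, mirroring the 60/40 split of the population.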

Q34. Generalised conclusion on the basis of a sample is technically known as: (A) Data analysis and interpretation (B) Parameter inference (C) Statistical inference (D) All of the above

Explanation: Generalized conclusions based on a sample are achieved through statistical inference. It involves using sample data to make inferences or predictions about a larger population. Statistical inference helps researchers draw conclusions, estimate parameters, and test hypotheses about the population from which the sample was taken. It is a fundamental concept in statistics and plays a crucial role in various fields, including research, data analysis, and decision-making.
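One common form of statistical inference is estimating a population mean from a sample together with a confidence interval. The sketch below uses a normal approximation, which is an assumption made here for brevity; for small samples a t-distribution would usually be more appropriate. The function name and the data are illustrative only.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Approximate confidence interval for the population mean.

    Hypothetical helper using the normal approximation (an assumption).
    """
    n = len(sample)
    sample_mean = mean(sample)
    standard_error = stdev(sample) / sqrt(n)  # sample std dev over sqrt(n)
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return sample_mean - z * standard_error, sample_mean + z * standard_error


# Made-up measurements from a sample of eight units.
low, high = mean_confidence_interval([4, 5, 6, 5, 4, 6, 5, 5])
print(f"Estimated population mean lies between {low:.2f} and {high:.2f}")
```

The interval is centred on the sample mean and widens as the requested confidence level increases or the sample becomes smaller or more variable.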

Q35. The experimental study is based on: (A) The manipulation of variables (B) Conceptual parameters (C) Replication of research (D) Survey of literature

Q36. Which one is called non-probability sampling? (A) Cluster sampling (B) Quota sampling (C) Systematic sampling (D) Stratified random sampling

Q37. Formulation of hypothesis may NOT be required in: (A) Survey method (B) Historical studies (C) Experimental studies (D) Normative studies

Q38. Field-work-based research is classified as: (A) Empirical (B) Historical (C) Experimental (D) Biographical

Q39. Which of the following sampling methods is appropriate to study the prevalence of AIDS among males and females in India in 1976, 1986, 1996 and 2006? (A) Cluster sampling (B) Systematic sampling (C) Quota sampling (D) Stratified random sampling

Q40. The research that applies the laws at the time of field study to draw more and more clear ideas about the problem is: (A) Applied research (B) Action research (C) Experimental research (D) None of these

Answer: (A)

Research Methodology Quiz | MCQ (Multiple Choice Questions)

To enhance your understanding of research methodology, we have put together a thought-provoking quiz featuring multiple-choice questions.

The quiz aims to sharpen your critical thinking skills and reinforce your grasp of essential concepts in research. By actively participating in this exercise, you can deepen your appreciation for the significance of selecting the right research methods to achieve reliable and meaningful results.

- 1) Who was the author of the book named "Methods in Social Research"? c) Goode and Hatt. Explanation: The book "Methods in Social Research" was authored by Goode and Hatt and published on Dec 01, 1952; it was specifically aimed at improving students' knowledge as well as response skills.

- a) Association among variables. Explanation: Correlational analysis mainly focuses on finding the association between one or more quantitative independent variables and one or more quantitative dependent variables.

- d) Research design. Explanation: A conceptual framework can be understood as a research design that you require before research.

- d) To help an applicant in becoming a renowned educationalist. Explanation: Educational research can be defined as an assurance for reviewing and improving educational practice, which will result in becoming a renowned educationalist.

- c) Collecting data with bottom-up empirical evidence. Explanation: In qualitative research, we use an inductive methodology that moves from the particular to the general. In other words, we study society from the bottom, then move upward to build theories.

- d) All of the above. Explanation: In random sampling, every element of the set has a chance of being selected.

- c) Ex-post facto method. Explanation: In the ex-post facto method, existing groups with particular qualities are compared on some dependent variable. It is also known as quasi-experimental because, instead of randomly assigning the subjects, they are grouped on the basis of a particular characteristic or trait.

- d) All of the above. Explanation: The Tippett table was first published by L.H.C. Tippett in 1927.

- b) Formulating a research question. Explanation: Before starting research, it is necessary to have a research question or a topic, because once the problem is identified, we can decide the research design.

- c) A research dissertation. Explanation: The format of thesis writing is similar to that of a research dissertation, or we can simply say that dissertation is another word for a thesis.

- d) Its sole purpose is the production of knowledge. Explanation: Participatory action research is a kind of research that stresses participation and action.

- b) It is only the null hypothesis that can be tested. Explanation: Hypothesis testing evaluates the plausibility of a hypothesis by using sample data.

- b) The null hypothesis gets rejected even if it is true. Explanation: The Type-I error can be defined as the first kind of error.

- d) All of the above. No explanation.

- a) Long-term research. Explanation: In general, the longitudinal approach is long-term research in which the researchers keep examining the same individuals to detect whether any change has occurred over time.

- b) Following an aim. No explanation.

- a) How well are we doing? Explanation: Instead of focusing on the process, evaluation research measures the consequences of the process, for example, whether the objectives are met or not.

- d) Research is not a process. Explanation: Research is an inspired and systematic work that is undertaken by researchers to intensify expertise.

- d) All of the above. Explanation: Research is an inspired and systematic work that is undertaken by researchers to intensify expertise.

- b) To bring out the holistic approach to research. Explanation: Interdisciplinary research combines two or more hypothetical disciplines into one activity.

- d) Eliminate spurious relations. Explanation: Scientific research aims to build knowledge by hypothesizing new theories and discovering laws.

- c) Questionnaire. Explanation: Since it is an urban area, a greater number of people are likely to be literate. Also, there would be numerous questions about the ruling period of a political party that cannot simply be answered by rating. Rating can only be considered if a political party has done some work, which is why the questionnaire is used.

- b) Historical Research. Explanation: One cannot generalize to the USA historical research that has been done in India.

- c) By research objectives. Explanation: Research objectives concisely demonstrate what we are trying to achieve through the research.

- c) Has studied research methodology. Explanation: Anyone who has studied research methodology can undertake research.

- c) Observation. Explanation: A research method comprises the strategies, processes or techniques that are used to collect data or evidence so as to reveal new information or create a better understanding of a topic.

- d) All of the above. Explanation: A research problem can be defined as a statement about the area of interest, a condition that is required to be improved, a difficulty that has to be eradicated, or any disquieting question existing in scholarly literature, in theory, or in practice that needs to be solved.

- d) How are various parts related to the whole? Explanation: A circle graph helps in visualizing information as well as data.

- b) Objectivity. No explanation.

- a) Quota sampling. Explanation: In non-probability sampling, not all members get an equal opportunity to participate in the study.

- a) Reducing punctuation as well as grammatical errors to a minimum. Select the answers from the codes given below: B. a), b), c) and d). Explanation: All of the above.

- a) Research refers to a series of systematic activity or activities undertaken to find out the solution to a problem. Select the correct answer from the codes given below: A. a), b), c) and d). Explanation: All of the above.

- b) Fundamental Research. Explanation: Jean Piaget, in his cognitive-developmental theory, proposed the idea that children can actively construct knowledge simply by exploring and manipulating the world around them.

- d) Introduction; Literature Review; Research Methodology; Results; Discussions and Conclusions. Explanation: The core elements of the dissertation are as follows: Introduction; Literature Review; Research Methodology; Results; Discussions and Conclusions.

- d) A sampling of people, newspapers, television programs etc. Explanation: In general, sampling in case study research involves decisions made by the researchers regarding the strategies of sampling, the number of case studies, and the definition of the unit of analysis.

- a) Systematic Sampling Technique. Explanation: Systematic sampling can be understood as a probability sampling method in which the members of the population are selected by the researchers at a regular interval.

- a) Social relevance. No explanation.

- c) Can be one-tailed as well as two-tailed depending on the hypotheses. Explanation: An F-test is a statistical test in which the test statistic has an F-distribution under the null hypothesis.

- a) Census. Explanation: A census is an official survey that keeps track of population data.

- b) Observation. No explanation.

- d) It contains dependent and independent variables. Explanation: A research problem can be defined as a statement about the concerned area, a condition that needs to be improved, a difficulty that has to be eliminated, or a troubling question existing in scholarly literature, in theory, or in practice that points towards the need for a deliberate investigation.

- d) All of the above. Explanation: The research objectives must be concisely described before starting the research, as they illustrate what we are going to achieve as an end result.

- c) A kind of research being carried out to solve a specific problem. Explanation: In general, action research is termed a philosophy or a research methodology that is implemented in the social sciences.

- a) The cultural background of the country. Explanation: An assumption can be identified as an unexamined belief, which we hold without even comprehending it. Also, the conclusions that we draw are often based on assumptions.

- d) All of the above. No explanation.

- b) To understand the difference between two variables. Explanation: Factor analysis can be understood as a statistical method that describes the variability between two variables in terms of factors, which are unobserved variables.

- a) Manipulation. Explanation: In an experimental research design, whenever the independent variables (i.e., treatment variables or factors) are deliberately altered by researchers, that process is termed an experimental manipulation.

- d) Professional Attitude. Explanation: A professional attitude is an ability that inclines you to manage your time, portray leadership qualities, and be self-determined and persistent.

- b) Human Relations. Explanation: A sociogram can be defined as a graphical representation of human relations that portrays the social links formed by one particular person.

- c) Objective Observation. Explanation: The research process comprises classifying, locating, evaluating, and investigating the data required to support your research question, followed by developing and expressing your ideas.
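Systematic sampling, described in the answers above as selecting members of the population at a regular interval, can be sketched like this. The function name `systematic_sample` and the integer-division interval are assumptions made for illustration; other conventions for choosing the interval exist.

```python
import random


def systematic_sample(population, sample_size, seed=None):
    """Pick every k-th member after a random start within the first interval.

    Hypothetical helper: k is the population size divided by the sample size,
    rounded down.
    """
    n = len(population)
    if not 0 < sample_size <= n:
        raise ValueError("sample_size must be between 1 and the population size")
    interval = n // sample_size
    start = random.Random(seed).randrange(interval)  # random start in [0, interval)
    return [population[start + i * interval] for i in range(sample_size)]


# Made-up sampling frame of 100 numbered units; every 10th unit is selected.
frame = list(range(1, 101))
print(systematic_sample(frame, sample_size=10, seed=3))
```

The random start is what keeps this a probability sampling method: before the start is drawn, every unit in the frame has a known chance of selection.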

Research Hypothesis MCQs: Questions and Answers PDF Download

The theoretical ideas that form the basis of a research hypothesis are:

- Research hypothesis

- Research analysis

- Conceptual variables

- Composed data

An attribute that assumes different values among different people at different times or places is known as:

- Symbol

- Attribute

- Scientific

- Variable

The concepts that form the basis of a research hypothesis are known as:

- Research Method

- Theory of organisms

A concrete statement predicting what may happen in a study is termed:

- Research Tools

Variables consisting of numbers that represent the conceptual variables are known as:

- Measured variables

- Non vulnerable variables

430+ Research Methodology (RM) Solved MCQs

| 1. | |

| A. | Wilkinson |

| B. | CR Kothari |

| C. | Kerlinger |

| D. | Goode and Hatt |

| Answer» D. Goode and Hatt | |

| 2. | |

| A. | Marshall |

| B. | P.V. Young |

| C. | Emory |

| D. | Kerlinger |

| Answer» C. Emory | |

| 3. | |

| A. | Young |

| B. | Kerlinger |

| C. | Kothari |

| D. | Emory |

| Answer» A. Young | |

| 4. | |

| A. | Experiment |

| B. | Observation |

| C. | Deduction |

| D. | Scientific method |

| Answer» D. Scientific method | |

| 5. | |

| A. | Deduction |

| B. | Scientific method |

| C. | Observation |

| D. | experience |

| Answer» B. Scientific method | |

| 6. | |

| A. | Objectivity |

| B. | Ethics |

| C. | Proposition |

| D. | Neutrality |

| Answer» A. Objectivity | |

| 7. | |

| A. | Induction |

| B. | Deduction |

| C. | Research |

| D. | Experiment |

| Answer» A. Induction | |

| 8. | |

| A. | Belief |

| B. | Value |

| C. | Objectivity |

| D. | Subjectivity |

| Answer» C. Objectivity | |

| 9. | |

| A. | Induction |

| B. | deduction |

| C. | Observation |

| D. | experience |

| Answer» B. deduction | |

| 10. | |

| A. | Caroline |

| B. | P.V.Young |

| C. | Dewey John |

| D. | Emory |

| Answer» B. P.V.Young | |

| 11. | |

| A. | Facts |

| B. | Values |

| C. | Theory |

| D. | Generalization |

| Answer» C. Theory | |

| 12. | |

| A. | Jack Gibbs |

| B. | PV Young |

| C. | Black |

| D. | Rose Arnold |

| Answer» B. PV Young | |

| 13. | |

| A. | Black James and Champion |

| B. | P.V. Young |

| C. | Emory |

| D. | Gibbes |

| Answer» A. Black James and Champion | |

| 14. | |

| A. | Theory |

| B. | Value |

| C. | Fact |

| D. | Statement |

| Answer» C. Fact | |

| 15. | |

| A. | Goode and Hatt |

| B. | Emory |

| C. | P.V. Young |

| D. | Claver |

| Answer» A. Goode and Hatt | |

| 16. | |

| A. | Concept |

| B. | Variable |

| C. | Model |

| D. | Facts |

| Answer» C. Model | |

| 17. | |

| A. | Objects |

| B. | Human beings |

| C. | Living things |

| D. | Non living things |

| Answer» B. Human beings | |

| 18. | |

| A. | Natural and Social |

| B. | Natural and Physical |

| C. | Physical and Mental |

| D. | Social and Physical |

| Answer» A. Natural and Social | |

| 19. | |

| A. | Causal Connection |

| B. | reason |

| C. | Interaction |

| D. | Objectives |

| Answer» A. Causal Connection | |

| 20. | |

| A. | Explain |

| B. | diagnosis |

| C. | Recommend |

| D. | Formulate |

| Answer» B. diagnosis | |

| 21. | |

| A. | Integration |

| B. | Social Harmony |

| C. | National Integration |

| D. | Social Equality |

| Answer» A. Integration | |

| 22. | |

| A. | Unit |

| B. | design |

| C. | Random |

| D. | Census |

| Answer» B. design | |

| 23. | |

| A. | Objectivity |

| B. | Specificity |

| C. | Values |

| D. | Facts |

| Answer» A. Objectivity | |

| 24. | |

| A. | Purpose |

| B. | Intent |

| C. | Methodology |

| D. | Techniques |

| Answer» B. Intent | |

| 25. | |

| A. | Pure Research |

| B. | Action Research |

| C. | Pilot study |

| D. | Survey |

| Answer» A. Pure Research | |

| 26. | |

| A. | Pure Research |

| B. | Survey |

| C. | Action Research |

| D. | Long term Research |

| Answer» B. Survey | |

| 27. | |

| A. | Survey |

| B. | Action research |

| C. | Analytical research |

| D. | Pilot study |

| Answer» C. Analytical research | |

| 28. | |

| A. | Fundamental Research |

| B. | Analytical Research |

| C. | Survey |

| D. | Action Research |

| Answer» D. Action Research | |

| 29. | |

| A. | Action Research |

| B. | Survey |

| C. | Pilot study |

| D. | Pure Research |

| Answer» D. Pure Research | |

| 30. | |

| A. | Quantitative |

| B. | Qualitative |

| C. | Pure |

| D. | applied |

| Answer» B. Qualitative | |

| 31. | |

| A. | Empirical research |

| B. | Conceptual Research |

| C. | Quantitative research |

| D. | Qualitative research |

| Answer» B. Conceptual Research | |

| 32. | |

| A. | Clinical or diagnostic |

| B. | Causal |

| C. | Analytical |

| D. | Qualitative |

| Answer» A. Clinical or diagnostic | |

| 33. | |

| A. | Field study |

| B. | Survey |

| C. | Laboratory Research |

| D. | Empirical Research |

| Answer» C. Laboratory Research | |

| 34. | |

| A. | Clinical Research |

| B. | Experimental Research |

| C. | Laboratory Research |

| D. | Empirical Research |

| Answer» D. Empirical Research | |

| 35. | |

| A. | Survey |

| B. | Empirical |

| C. | Clinical |

| D. | Diagnostic |

| Answer» A. Survey | |

| 36. | |

| A. | Ostle |

| B. | Richard |

| C. | Karl Pearson |

| D. | Kerlinger |

| Answer» C. Karl Pearson | |

| 37. | |

| A. | Redman and Mory |

| B. | P.V.Young |

| C. | Robert C meir |

| D. | Harold Dazier |

| Answer» A. Redman and Mory | |

| 38. | |

| A. | Technique |

| B. | Operations |

| C. | Research methodology |

| D. | Research Process |

| Answer» C. Research methodology | |

| 39. | |

| A. | Slow |

| B. | Fast |

| C. | Narrow |

| D. | Systematic |

| Answer» D. Systematic | |

| 40. | |

| A. | Logical |

| B. | Non logical |

| C. | Narrow |

| D. | Systematic |

| Answer» A. Logical | |

| 41. | |

| A. | Delta Kappan |

| B. | James Harold Fox |

| C. | P.V.Young |

| D. | Karl Popper |

| Answer» B. James Harold Fox | |

| 42. | |

| A. | Problem |

| B. | Experiment |

| C. | Research Techniques |

| D. | Research methodology |

| Answer» D. Research methodology | |

| 43. | |

| A. | Field Study |

| B. | diagnostic study |

| C. | Action study |

| D. | Pilot study |

| Answer» B. diagnostic study | |

| 44. | |

| A. | Social Science Research |

| B. | Experience Survey |

| C. | Problem formulation |

| D. | diagnostic study |

| Answer» A. Social Science Research | |

| 45. | |

| A. | P.V. Young |

| B. | Kerlinger |

| C. | Emory |

| D. | Clover Vernon |

| Answer» B. Kerlinger | |

| 46. | |

| A. | Black James and Champions |

| B. | P.V. Young |

| C. | Morton Kaplan |

| D. | William Emory |

| Answer» A. Black James and Champions | |

| 47. | |

| A. | Best John |

| B. | Emory |

| C. | Clover |

| D. | P.V. Young |

| Answer» D. P.V. Young | |

| 48. | |

| A. | Belief |

| B. | Value |

| C. | Confidence |

| D. | Overconfidence |

| Answer» D. Overconfidence | |

| 49. | |

| A. | Velocity |

| B. | Momentum |

| C. | Frequency |

| D. | gravity |

| Answer» C. Frequency | |

| 50. | |

| A. | Research degree |

| B. | Research Academy |

| C. | Research Labs |

| D. | Research Problems |

| Answer» A. Research degree | |

| 51. | |

| A. | Book |

| B. | Journal |

| C. | Newspaper |

| D. | Census Report |

| Answer» C. Newspaper | |

| 52. | |

| A. | Lack of sufficient number of Universities |

| B. | Lack of sufficient research guides |

| C. | Lack of sufficient Fund |

| D. | Lack of scientific training in research |

| Answer» D. Lack of scientific training in research | |

| 53. | |

| A. | Indian Council for Survey and Research |

| B. | Indian Council for strategic Research |

| C. | Indian Council for Social Science Research |

| D. | Inter National Council for Social Science Research |

| Answer» C. Indian Council for Social Science Research | |

| 54. | |

| A. | University Grants Commission |

| B. | Union Government Commission |

| C. | University Governance Council |

| D. | Union government Council |

| Answer» A. University Grants Commission | |

| 55. | |

| A. | Junior Research Functions |

| B. | Junior Research Fellowship |

| C. | Junior Fellowship |

| D. | None of the above |

| Answer» B. Junior Research Fellowship | |

| 56. | |

| A. | Formulation of a problem |

| B. | Collection of Data |

| C. | Editing and Coding |

| D. | Selection of a problem |

| Answer» D. Selection of a problem | |

| 57. | |

| A. | Fully solved |

| B. | Not solved |

| C. | Cannot be solved |

| D. | half-solved |

| Answer» D. half-solved | |

| 58. | |

| A. | Schools and Colleges |

| B. | Class Room Lectures |

| C. | Play grounds |

| D. | Infra structures |

| Answer» B. Class Room Lectures | |

| 59. | |

| A. | Observation |

| B. | Problem |

| C. | Data |

| D. | Experiment |

| Answer» B. Problem | |

| 60. | |

| A. | Solution |

| B. | Examination |

| C. | Problem formulation |

| D. | Problem Solving |

| Answer» C. Problem formulation | |

| 61. | |

| A. | Very Common |

| B. | Overdone |

| C. | Easy one |

| D. | rare |

| Answer» B. Overdone | |

| 62. | |

| A. | Statement of the problem |

| B. | Gathering of Data |

| C. | Measurement |

| D. | Survey |

| Answer» A. Statement of the problem | |

| 63. | |

| A. | Professor |

| B. | Tutor |

| C. | HOD |

| D. | Guide |

| Answer» D. Guide | |

| 64. | |

| A. | Statement of the problem |

| B. | Understanding the nature of the problem |

| C. | Survey |

| D. | Discussions |

| Answer» B. Understanding the nature of the problem | |

| 65. | |

| A. | Statement of the problem |

| B. | Understanding the nature of the problem |

| C. | Survey the available literature |

| D. | Discussion |

| Answer» C. Survey the available literature | |

| 66. | |

| A. | Survey |

| B. | Discussion |

| C. | Literature survey |

| D. | Rephrasing the Research problem |

| Answer» D. Rephrasing the Research problem | |

| 67. | |

| A. | Title |

| B. | Index |

| C. | Bibliography |

| D. | Concepts |

| Answer» A. Title | |

| 68. | |

| A. | Questions to be answered |

| B. | methods |

| C. | Techniques |

| D. | methodology |

| Answer» A. Questions to be answered | |

| 69. | |

| A. | Speed |

| B. | Facts |

| C. | Values |

| D. | Novelty |

| Answer» D. Novelty | |

| 70. | |

| A. | Originality |

| B. | Values |

| C. | Coherence |

| D. | Facts |

| Answer» A. Originality | |

| 71. | |

| A. | Academic and Non academic |

| B. | Cultivation |

| C. | Academic |

| D. | Utilitarian |

| Answer» B. Cultivation | |

| 72. | |

| A. | Information |

| B. | firsthand knowledge |

| C. | Knowledge and information |

| D. | models |

| Answer» C. Knowledge and information | |

| 73. | |

| A. | Alienation |

| B. | Cohesion |

| C. | mobility |

| D. | Integration |

| Answer» B. Cohesion | |

| 74. | |

| A. | Scientific temper |

| B. | Age |

| C. | Money |

| D. | time |

| Answer» A. Scientific temper | |

| 75. | |

| A. | Secular |

| B. | Totalitarian |

| C. | democratic |

| D. | welfare |

| Answer» D. welfare | |

| 76. | |

| A. | Hypothesis |

| B. | Variable |

| C. | Concept |

| D. | facts |

| Answer» C. Concept | |

| 77. | |

| A. | Abstract and Coherent |

| B. | Concrete and Coherent |

| C. | Abstract and concrete |

| D. | None of the above |

| Answer» C. Abstract and concrete | |

| 78. | |

| A. | 4 |

| B. | 6 |

| C. | 10 |

| D. | 2 |

| Answer» D. 2 | |

| 79. | |

| A. | Observation |

| B. | formulation |

| C. | Theory |

| D. | Postulation |

| Answer» D. Postulation | |

| 80. | |

| A. | Formulation |

| B. | Postulation |

| C. | Intuition |

| D. | Observation |

| Answer» C. Intuition | |

| 81. | |

| A. | guide |

| B. | tools |

| C. | methods |

| D. | Variables |

| Answer» B. tools | |

| 82. | |

| A. | Metaphor |

| B. | Simile |

| C. | Symbols |

| D. | Models |

| Answer» C. Symbols | |

| 83. | |

| A. | Formulation |

| B. | Calculation |

| C. | Abstraction |

| D. | Specification |

| Answer» C. Abstraction | |

| 84. | |

| A. | Verbal |

| B. | Oral |

| C. | Hypothetical |

| D. | Operational |

| Answer» C. Hypothetical | |

| 85. | |

| A. | Kerlinger |

| B. | P.V. Young |

| C. | Arthur |

| D. | Kaplan |

| Answer» B. P.V. Young | |

| 86. | |

| A. | Same and different |

| B. | Same |

| C. | different |

| D. | None of the above |

| Answer» C. different | |

| 87. | |

| A. | Greek |

| B. | English |

| C. | Latin |

| D. | Many languages |

| Answer» D. Many languages | |

| 88. | |

| A. | Variable |

| B. | Hypothesis |

| C. | Data |

| D. | Concept |

| Answer» B. Hypothesis | |

| 89. | |

| A. | Data |

| B. | Concept |

| C. | Research |

| D. | Hypothesis |

| Answer» D. Hypothesis | |

| 90. | |

| A. | Lundberg |

| B. | Emory |

| C. | Johnson |

| D. | Good and Hatt |

| Answer» D. Good and Hatt | |

| 91. | |

| A. | Good and Hatt |

| B. | Lundberg |

| C. | Emory |

| D. | Orwell |

| Answer» B. Lundberg | |

| 92. | |

| A. | Descriptive |

| B. | Imaginative |

| C. | Relational |

| D. | Variable |

| Answer» A. Descriptive | |

| 93. | |

| A. | Null Hypothesis |

| B. | Working Hypothesis |

| C. | Relational Hypothesis |

| D. | Descriptive Hypothesis |

| Answer» B. Working Hypothesis | |

| 94. | |

| A. | Relational Hypothesis |

| B. | Situational Hypothesis |

| C. | Null Hypothesis |

| D. | Causal Hypothesis |

| Answer» C. Null Hypothesis | |

| 95. | |

| A. | Abstract |

| B. | Dependent |

| C. | Independent |

| D. | Separate |

| Answer» C. Independent | |

| 96. | |

| A. | Independent |

| B. | Dependent |

| C. | Separate |

| D. | Abstract |

| Answer» B. Dependent | |

| 97. | |

| A. | Causal |

| B. | Relational |

| C. | Descriptive |

| D. | Tentative |

| Answer» B. Relational | |

| 98. | |

| A. | One |

| B. | Many |

| C. | Zero |

| D. | None of these |

| Answer» C. Zero | |

| 99. | |

| A. | Statistical Hypothesis |

| B. | Complex Hypothesis |

| C. | Common sense Hypothesis |

| D. | Analytical Hypothesis |

| Answer» C. Common sense Hypothesis | |

| 100. | |

| A. | Null Hypothesis |

| B. | Causal Hypothesis |

| C. | Barren Hypothesis |

| D. | Analytical Hypothesis |

| Answer» D. Analytical Hypothesis | |


Multiple Choice Questions on Research Methodology


Research Hypothesis In Psychology: Types, & Examples

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


A research hypothesis (plural: hypotheses) is a specific, testable prediction about the anticipated results of a study, established at its outset. It is a key component of the scientific method.

Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

Some key points about hypotheses:

- A hypothesis expresses an expected pattern or relationship. It connects the variables under investigation.

- It is stated in clear, precise terms before any data collection or analysis occurs. This makes the hypothesis testable.

- A hypothesis must be falsifiable. It should be possible, even if unlikely in practice, to collect data that disconfirms rather than supports the hypothesis.

- Hypotheses guide research. Scientists design studies to explicitly evaluate hypotheses about how nature works.

- For a hypothesis to be valid, it must be testable against empirical evidence. The evidence can then confirm or disprove the testable predictions.

- Hypotheses are informed by background knowledge and observation, but go beyond what is already known to propose an explanation of how or why something occurs.

Predictions typically arise from a thorough knowledge of the research literature, curiosity about real-world problems or implications, and integrating this to advance theory. They build on existing literature while providing new insight.

Types of Research Hypotheses

Alternative Hypothesis

The research hypothesis is often called the alternative or experimental hypothesis in experimental research.

It typically suggests a potential relationship between two key variables: the independent variable, which the researcher manipulates, and the dependent variable, which is measured based on those changes.

The alternative hypothesis states a relationship exists between the two variables being studied (one variable affects the other).


An experimental hypothesis predicts what change(s) will occur in the dependent variable when the independent variable is manipulated.

It states that the results are not due to chance and are significant in supporting the theory being investigated.

The alternative hypothesis can be directional, indicating a specific direction of the effect, or non-directional, suggesting a difference without specifying its nature. It’s what researchers aim to support or demonstrate through their study.

Null Hypothesis

The null hypothesis states no relationship exists between the two variables being studied (one variable does not affect the other). There will be no changes in the dependent variable due to manipulating the independent variable.

It states results are due to chance and are not significant in supporting the idea being investigated.

The null hypothesis, positing no effect or relationship, is a foundational contrast to the research hypothesis in scientific inquiry. It establishes a baseline for statistical testing, promoting objectivity by initiating research from a neutral stance.

Many statistical methods are tailored to test the null hypothesis, determining the likelihood of observed results if no true effect exists.

This dual-hypothesis approach provides clarity, ensuring that research intentions are explicit, and fosters consistency across scientific studies, enhancing the standardization and interpretability of research outcomes.
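One way to make "the likelihood of observed results if no true effect exists" concrete is a permutation test. The sketch below is illustrative only: the function name `permutation_p_value` and the scores are hypothetical, and a permutation test is just one of many methods that test a null hypothesis. It repeatedly shuffles the group labels, which is what the data would look like if group membership had no effect, and counts how often the shuffled difference in means is at least as large as the observed one.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns an estimate of the probability of seeing a difference at least
    as large as the observed one if the null hypothesis (no effect) is true.
    """
    rng = random.Random(seed)
    n_a = len(group_a)
    n_b = len(group_b)
    observed = abs(sum(group_a) / n_a - sum(group_b) / n_b)

    pooled = list(group_a) + list(group_b)
    extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the group labels are interchangeable.
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed:
            extreme += 1

    # Add-one correction so the estimate is never exactly zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical scores, invented purely for illustration.
treatment = [10, 11, 12, 13, 14]
control = [1, 2, 3, 4, 5]
print(permutation_p_value(treatment, control))
```

A small p-value means the observed difference would be unusual if the null hypothesis were true, which is grounds for rejecting the null, not proof of the alternative.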

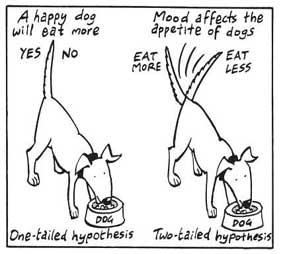

Non-directional Hypothesis

A non-directional hypothesis, also known as a two-tailed hypothesis, predicts that there is a difference or relationship between two variables but does not specify the direction of this relationship.

It merely indicates that a change or effect will occur without predicting which group will have higher or lower values.

For example, “There is a difference in performance between Group A and Group B” is a non-directional hypothesis.

Directional Hypothesis

A directional (one-tailed) hypothesis predicts the nature of the effect of the independent variable on the dependent variable. It predicts in which direction the change will take place (e.g., greater, smaller, less, more).

It specifies whether one variable will be greater or lesser than another, rather than just indicating that there’s a difference without specifying its nature.

For example, “Exercise increases weight loss” is a directional hypothesis.
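The practical consequence of choosing a directional or non-directional hypothesis shows up in how the p-value is computed. The following is a minimal sketch, assuming the test statistic is approximately standard normal when the null hypothesis is true; the function name `p_values_from_z` and the value z = 1.8 are hypothetical.

```python
from statistics import NormalDist


def p_values_from_z(z):
    """Return (one_tailed, two_tailed) p-values for a z statistic.

    one_tailed: directional hypothesis predicting a positive effect.
    two_tailed: non-directional hypothesis predicting any difference.
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    one_tailed = 1.0 - std_normal.cdf(z)
    two_tailed = 2.0 * (1.0 - std_normal.cdf(abs(z)))
    return one_tailed, two_tailed


# Hypothetical test statistic, chosen only to illustrate the contrast.
one_tailed, two_tailed = p_values_from_z(1.8)
print(f"one-tailed p = {one_tailed:.4f}, two-tailed p = {two_tailed:.4f}")
```

With this made-up statistic the one-tailed p-value falls below 0.05 while the two-tailed one does not, which is why the direction should be chosen from prior literature before the data are seen and not afterwards.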

Falsifiability

The Falsification Principle, proposed by Karl Popper, is a way of demarcating science from non-science. It suggests that for a theory or hypothesis to be considered scientific, it must be testable and refutable.

Falsifiability emphasizes that scientific claims shouldn’t just be confirmable but should also have the potential to be proven wrong.

It means that there should exist some potential evidence or experiment that could prove the proposition false.

However many confirming instances exist for a theory, it only takes one counter observation to falsify it. For example, the hypothesis that “all swans are white,” can be falsified by observing a black swan.

For Popper, science should attempt to disprove a theory rather than attempt to continually provide evidence to support a research hypothesis.

Can a Hypothesis be Proven?

Hypotheses make probabilistic predictions. They state the expected outcome if a particular relationship exists. However, a study result supporting a hypothesis does not definitively prove it is true.

All studies have limitations. There may be unknown confounding factors or issues that limit the certainty of conclusions. Additional studies may yield different results.

In science, hypotheses can realistically only be supported with some degree of confidence, not proven. The process of science is to incrementally accumulate evidence for and against hypothesized relationships in an ongoing pursuit of better models and explanations that best fit the empirical data. But hypotheses remain open to revision and rejection if that is where the evidence leads.

- Disproving a hypothesis is definitive. Solid disconfirmatory evidence will falsify a hypothesis and require altering or discarding it based on the evidence.

- However, confirming evidence is always open to revision. Other explanations may account for the same results, and additional or contradictory evidence may emerge over time.

We can never 100% prove the alternative hypothesis. Instead, we see if we can disprove, or reject the null hypothesis.

If we reject the null hypothesis, this doesn’t mean that our alternative hypothesis is correct but does support the alternative/experimental hypothesis.

Upon analysis of the results, an alternative hypothesis can be rejected or supported, but it can never be proven to be correct. We must avoid any reference to results proving a theory as this implies 100% certainty, and there is always a chance that evidence may exist which could refute a theory.

How to Write a Hypothesis

- Identify variables. The researcher manipulates the independent variable, and the dependent variable is the measured outcome.

- Operationalize the variables being investigated. Operationalization refers to the process of making the variables physically measurable or testable, e.g., if you are about to study aggression, you might count the number of punches given by participants.

- Decide on a direction for your prediction. If there is evidence in the literature to support a specific effect of the independent variable on the dependent variable, write a directional (one-tailed) hypothesis. If there are limited or ambiguous findings in the literature regarding the effect of the independent variable on the dependent variable, write a non-directional (two-tailed) hypothesis.

- Make it testable. Ensure your hypothesis can be tested through experimentation or observation. It should be possible to prove it false (principle of falsifiability).

- Use clear and concise language. A strong hypothesis is concise (typically one to two sentences long) and formulated using clear and straightforward language, ensuring it’s easily understood and testable.

Consider a hypothesis many teachers might subscribe to: students work better on Monday morning than on Friday afternoon (IV=Day, DV= Standard of work).

Now, if we decide to study this by giving the same group of students a lesson on a Monday morning and a Friday afternoon and then measuring their immediate recall of the material covered in each session, we would end up with the following:

- The alternative hypothesis states that students will recall significantly more information on a Monday morning than on a Friday afternoon.

- The null hypothesis states that there will be no significant difference in the amount recalled on a Monday morning compared to a Friday afternoon. Any difference will be due to chance or confounding factors.
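Because the same students are measured on both days, the Monday and Friday scores form pairs. As a rough illustration (not the analysis the article prescribes), the sketch below runs an exact sign-flip test on the paired differences: if the null hypothesis is true, each student's difference is equally likely to be positive or negative. The recall scores and the function name `paired_sign_flip_p_value` are hypothetical.

```python
from itertools import product


def paired_sign_flip_p_value(monday, friday):
    """One-tailed exact sign-flip test for paired scores.

    Alternative hypothesis: Monday recall is higher than Friday recall.
    Under the null hypothesis the sign of each paired difference is
    arbitrary, so every combination of signs is equally likely.
    """
    diffs = [m - f for m, f in zip(monday, friday)]
    observed = sum(diffs)

    as_extreme = 0
    total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        if sum(s * d for s, d in zip(signs, diffs)) >= observed:
            as_extreme += 1
    return as_extreme / total


# Hypothetical recall scores for eight students (items remembered).
monday_scores = [14, 12, 15, 11, 13, 16, 12, 14]
friday_scores = [11, 10, 14, 12, 10, 13, 11, 12]
print(paired_sign_flip_p_value(monday_scores, friday_scores))
```

The exact enumeration is only practical for small groups, since the number of sign combinations doubles with every additional student.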

More Examples

- Memory : Participants exposed to classical music during study sessions will recall more items from a list than those who studied in silence.

- Social Psychology : Individuals who frequently engage in social media use will report higher levels of perceived social isolation compared to those who use it infrequently.

- Developmental Psychology : Children who engage in regular imaginative play have better problem-solving skills than those who don’t.

- Clinical Psychology : Cognitive-behavioral therapy will be more effective in reducing symptoms of anxiety over a 6-month period compared to traditional talk therapy.

- Cognitive Psychology : Individuals who multitask between various electronic devices will have shorter attention spans on focused tasks than those who single-task.

- Health Psychology : Patients who practice mindfulness meditation will experience lower levels of chronic pain compared to those who don’t meditate.

- Organizational Psychology : Employees in open-plan offices will report higher levels of stress than those in private offices.

- Behavioral Psychology : Rats rewarded with food after pressing a lever will press it more frequently than rats who receive no reward.


What is a scientific hypothesis?

It's the initial building block in the scientific method.


A scientific hypothesis is a tentative, testable explanation for a phenomenon in the natural world. It's the initial building block in the scientific method. Many describe it as an "educated guess" based on prior knowledge and observation. While this is true, a hypothesis is more informed than a guess. While an "educated guess" suggests a random prediction based on a person's expertise, developing a hypothesis requires active observation and background research.

The basic idea of a hypothesis is that there is no predetermined outcome. For a solution to be termed a scientific hypothesis, it has to be an idea that can be supported or refuted through carefully crafted experimentation or observation. This concept, called falsifiability and testability, was advanced in the mid-20th century by Austrian-British philosopher Karl Popper in his famous book "The Logic of Scientific Discovery" (Routledge, 1959).

A key function of a hypothesis is to derive predictions about the results of future experiments and then perform those experiments to see whether they support the predictions.

A hypothesis is usually written in the form of an if-then statement, which gives a possibility (if) and explains what may happen because of the possibility (then). The statement could also include "may," according to California State University, Bakersfield.

Here are some examples of hypothesis statements:

- If garlic repels fleas, then a dog that is given garlic every day will not get fleas.

- If sugar causes cavities, then people who eat a lot of candy may be more prone to cavities.

- If ultraviolet light can damage the eyes, then maybe this light can cause blindness.

A useful hypothesis should be testable and falsifiable. That means that it should be possible to prove it wrong. A theory that can't be proved wrong is nonscientific, according to Karl Popper's 1963 book "Conjectures and Refutations."

An example of an untestable statement is, "Dogs are better than cats." That's because the definition of "better" is vague and subjective. However, an untestable statement can be reworded to make it testable. For example, the previous statement could be changed to this: "Owning a dog is associated with higher levels of physical fitness than owning a cat." With this statement, the researcher can take measures of physical fitness from dog and cat owners and compare the two.

Types of scientific hypotheses

In an experiment, researchers generally state their hypotheses in two ways. The null hypothesis predicts that there will be no relationship between the variables tested, or no difference between the experimental groups. The alternative hypothesis predicts the opposite: that there will be a difference between the experimental groups. This is usually the hypothesis scientists are most interested in, according to the University of Miami.

For example, a null hypothesis might state, "There will be no difference in the rate of muscle growth between people who take a protein supplement and people who don't." The alternative hypothesis would state, "There will be a difference in the rate of muscle growth between people who take a protein supplement and people who don't."

If the results of the experiment show a relationship between the variables, then the null hypothesis has been rejected in favor of the alternative hypothesis, according to the book "Research Methods in Psychology" (BCcampus, 2015).

There are other ways to describe an alternative hypothesis. The alternative hypothesis above does not specify a direction of the effect, only that there will be a difference between the two groups. That type of prediction is called a two-tailed hypothesis. If a hypothesis specifies a certain direction (for example, that people who take a protein supplement will gain more muscle than people who don't), it is called a one-tailed hypothesis, according to William M. K. Trochim, a professor of Policy Analysis and Management at Cornell University.

Sometimes, errors take place during an experiment. These errors can happen in one of two ways. A type I error is when the null hypothesis is rejected when it is true. This is also known as a false positive. A type II error occurs when the null hypothesis is not rejected when it is false. This is also known as a false negative, according to the University of California, Berkeley.
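Both error types can be estimated by simulation. The sketch below is an illustration under stated assumptions, not a method from the cited sources: each simulated experiment draws normally distributed measurements with a known standard deviation of 1 and applies a two-sided z-test at a 0.05 significance level. The function name `rejection_rate`, the sample size, and the effect sizes are all hypothetical.

```python
import random
from statistics import NormalDist


def rejection_rate(true_effect, n=30, alpha=0.05, n_experiments=2000, seed=1):
    """Fraction of simulated experiments in which the null hypothesis
    (mean effect = 0) is rejected by a two-sided z-test.

    Assumes measurements are normal with a known standard deviation of 1.
    """
    rng = random.Random(seed)
    critical = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha=0.05
    rejections = 0
    for _ in range(n_experiments):
        sample = [rng.gauss(true_effect, 1.0) for _ in range(n)]
        z = (sum(sample) / n) * n ** 0.5  # sample mean divided by 1/sqrt(n)
        if abs(z) > critical:
            rejections += 1
    return rejections / n_experiments


# Null hypothesis is true: every rejection is a type I error (false positive).
type_i_rate = rejection_rate(true_effect=0.0)

# Null hypothesis is false: every non-rejection is a type II error (false negative).
type_ii_rate = 1.0 - rejection_rate(true_effect=0.5)

print(f"type I rate ~ {type_i_rate:.3f}, type II rate ~ {type_ii_rate:.3f}")
```

The type I rate should hover near the chosen significance level, while the type II rate depends on the size of the true effect and the sample size.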

A hypothesis can be rejected or modified, but it can never be proved correct 100% of the time. For example, a scientist can form a hypothesis stating that if a certain type of tomato has a gene for red pigment, that type of tomato will be red. During research, the scientist then finds that each tomato of this type is red. Though the findings support the hypothesis, there may be a tomato of that type somewhere in the world that isn't red. Thus, the hypothesis is supported, but it cannot be shown to hold 100% of the time.

Scientific theory vs. scientific hypothesis

The best hypotheses are simple. They deal with a relatively narrow set of phenomena. But theories are broader; they generally combine multiple hypotheses into a general explanation for a wide range of phenomena, according to the University of California, Berkeley. For example, a hypothesis might state, "If animals adapt to suit their environments, then birds that live on islands with lots of seeds to eat will have differently shaped beaks than birds that live on islands with lots of insects to eat." After testing many hypotheses like these, Charles Darwin formulated an overarching theory: the theory of evolution by natural selection.

"Theories are the ways that we make sense of what we observe in the natural world," Tanner said. "Theories are structures of ideas that explain and interpret facts."

- Read more about writing a hypothesis, from the American Medical Writers Association.

- Find out why a hypothesis isn't always necessary in science, from The American Biology Teacher.

- Learn about null and alternative hypotheses, from Prof. Essa on YouTube.

Encyclopedia Britannica. Scientific Hypothesis. Jan. 13, 2022. https://www.britannica.com/science/scientific-hypothesis

Karl Popper, "The Logic of Scientific Discovery," Routledge, 1959.

California State University, Bakersfield, "Formatting a testable hypothesis." https://www.csub.edu/~ddodenhoff/Bio100/Bio100sp04/formattingahypothesis.htm

Karl Popper, "Conjectures and Refutations," Routledge, 1963.

Price, P., Jhangiani, R., & Chiang, I., "Research Methods in Psychology, 2nd Canadian Edition," BCcampus, 2015.

University of Miami, "The Scientific Method" http://www.bio.miami.edu/dana/161/evolution/161app1_scimethod.pdf

William M.K. Trochim, "Research Methods Knowledge Base," https://conjointly.com/kb/hypotheses-explained/

University of California, Berkeley, "Multiple Hypothesis Testing and False Discovery Rate" https://www.stat.berkeley.edu/~hhuang/STAT141/Lecture-FDR.pdf

University of California, Berkeley, "Science at multiple levels" https://undsci.berkeley.edu/article/0_0_0/howscienceworks_19


How does Services Development Affect Manufacturing Export Competitiveness?

KENNESAW, Ga. | Jun 11, 2024

Xuepeng Liu

The Research Brief

Most manufacturing activities use service inputs such as financial and business services. Dr. Xuepeng Liu’s paper [1] examines the implications of services development for the export performance of manufacturing sectors. Dr. Liu and his coauthors develop a methodology to quantify the indirect role of services in international trade in goods and construct new measures of revealed comparative advantage based on value-added exports. They show that the development of financial and business services enhances the revealed comparative advantage of manufacturing sectors that use these services intensively but not of other manufacturing sectors. They also find that a country can partially overcome the handicap of an underdeveloped domestic services sector by relying more on imported services inputs. Thus, lower services trade barriers in developing countries can help to promote their manufacturing exports.

The Main Hypothesis

On the face of it, services play a relatively small role in international trade. Conventional trade statistics show that services trade currently accounts for only one-fifth of cross-border trade. However, a significant part of goods trade includes trade in embodied services. In the United States, for example, more than a quarter of intermediate inputs purchased by manufacturers were from the services sector. For certain manufacturing sectors, such as computers and electronic products, this percentage — a measure of “services intensity” — is as high as 47.6 percent. The development of the domestic services sector, as well as access to imported services inputs, can, therefore, be expected to influence comparative advantage in manufacturing trade. Dr. Liu seeks to understand this indirect role of services development drawing upon new measures based on newly available data.

The impact of services development is not straightforward. On the one hand, as services are used as inputs in the production of manufactured goods, services development can help to increase manufacturing production. On the other hand, since services and manufacturing compete for resources, the development of the former can be at the expense of the latter. For example, it is evident that the development of the services sector has drawn resources away from manufacturing not just in developed countries like the United States and the United Kingdom, but also in developing countries like India and the Philippines, provoking “deindustrialization” concerns.

The first hypothesis is that, while the overall effect of services development on the performance of manufacturing sectors is ambiguous, the effect is more likely to be positive for manufacturing sectors that use the services inputs more intensively.

This paper focuses on two services sectors that are crucial for modern economic development: financial services and business services. Both have emerged as skill-intensive, dynamic, internationally traded services, and they are often regarded as the pillars of modern economies. Services development is mainly measured by the share of a country’s services value-added in GDP. Dr. Liu and his coauthors develop a methodology to quantify the indirect role of services in international trade in goods. They use a suitably modified version of revealed comparative advantage (RCA) to measure the competitiveness of manufacturing sectors. They improve on the traditional Balassa (1965) [2] RCA and construct new measures of RCA based on value-added exports rather than gross exports. Their econometric analysis provides strong support for the hypothesis.
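As an illustration of the traditional Balassa index mentioned above, the following minimal sketch computes RCA as a country's export share in a sector divided by the world's export share in that sector. The function name `balassa_rca` and the toy figures are hypothetical and are not taken from the paper. The paper's value-added measures are not specified here; the sketch only assumes that passing value-added exports instead of gross exports into the same ratio gives a rough analogue.

```python
from collections import defaultdict


def balassa_rca(exports):
    """Compute the Balassa (1965) RCA index.

    exports: dict mapping (country, sector) -> export value.
    Returns a dict mapping (country, sector) -> RCA, where
    RCA = (X_cs / X_c) / (X_ws / X_w).
    """
    country_total = defaultdict(float)  # X_c: all exports of country c
    sector_total = defaultdict(float)   # X_ws: world exports of sector s
    world_total = 0.0                   # X_w: all world exports

    for (country, sector), value in exports.items():
        country_total[country] += value
        sector_total[sector] += value
        world_total += value

    rca = {}
    for (country, sector), value in exports.items():
        country_share = value / country_total[country]
        world_share = sector_total[sector] / world_total
        rca[(country, sector)] = country_share / world_share
    return rca


# Hypothetical toy data: two countries, two sectors (illustrative only).
toy_exports = {
    ("A", "electronics"): 60.0,
    ("A", "textiles"): 40.0,
    ("B", "electronics"): 20.0,
    ("B", "textiles"): 80.0,
}

rca = balassa_rca(toy_exports)
for key in sorted(rca):
    print(key, round(rca[key], 3))
```

A value above 1 indicates that the country exports relatively more of that sector's goods than the world as a whole does, which is the usual reading of a revealed comparative advantage.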

Policy Implications

Industrial countries have been strong in exporting services, both directly and indirectly. For example, the U.S. is not only the largest direct exporter of business services in the world, but also the largest indirect exporter of business services (its indirect exports are actually twice as large), suggesting an important role of business services in U.S. manufacturing activities. However, developing and emerging economies have lagged significantly behind, with India being the only exception as a significant direct exporter of business services. Services development in these latter countries would not only strengthen their service sectors but also promote their manufacturing sectors.

Countries such as China that may be concerned with the durability of their manufacturing export success may consider building stronger service sectors as a way to upgrade their manufacturing sectors to an even higher level of sophistication. China’s business services exports in value-added terms, relative to its exports in gross terms, are less impressive. Miroudot and Cadestin (2017) [3] show that China is the only country in their sample in which a majority of manufacturing firms (77 percent in 2013) sell only goods, with little of the bundling of goods and services seen, for example, with Apple iPhones/iPads and Apple Stores. To strengthen the manufacturing sector, countries may need a favorable business environment that facilitates services upgrading, including but not limited to R&D, marketing, advertising, inventory management, quality control, production scheduling, and after-sale customer services.

With significant improvements in transportation and communication technologies and increasing services outsourcing activities, some developing countries such as India have developed competitive services sectors. For developed countries that have the same strength in service sectors as India, this paper suggests that the manufacturing sectors that use these services intensively tend to have a strong revealed comparative advantage. However, unlike in most other countries, India's gross exports of business services are actually larger than its total value-added exports, suggesting relatively little embodied business services in other sectors. There is plenty of room left for India, the Philippines and other similar countries to take advantage of their competitive services sectors during their industrialization process.

The Second Hypothesis

This paper also provides evidence for a bypass effect, that is, countries may bypass their inefficient domestic services sectors by making use of imported services inputs. This suggests that nations with under-developed services may take advantage of globalization in services. Countries that hesitate to liberalize their services sectors in the hope of protecting their inefficient domestic services sectors may hurt the competitiveness of their manufacturing sectors.

[1] Liu, Xuepeng, Aaditya Mattoo, Zhi Wang, and Shang-Jin Wei, 2020. “Services Development and Comparative Advantage in Manufacturing.” Journal of Development Economics 144(C). https://doi.org/10.1016/j.jdeveco.2019.102438

[2] Balassa, Bela, 1965. “Trade Liberalization and ‘Revealed’ Comparative Advantage.” Manchester School of Economic and Social Studies 33: 99-123.

[3] Miroudot, Sébastien, and Charles Cadestin, 2017. “Services In Global Value Chains: From Inputs to Value-Creating Activities.” OECD Trade Policy Papers, No. 197.

- Open access

- Published: 13 June 2024

Dyport: dynamic importance-based biomedical hypothesis generation benchmarking technique

- Ilya Tyagin 1 &

- Ilya Safro 2

BMC Bioinformatics volume 25, Article number: 213 (2024)

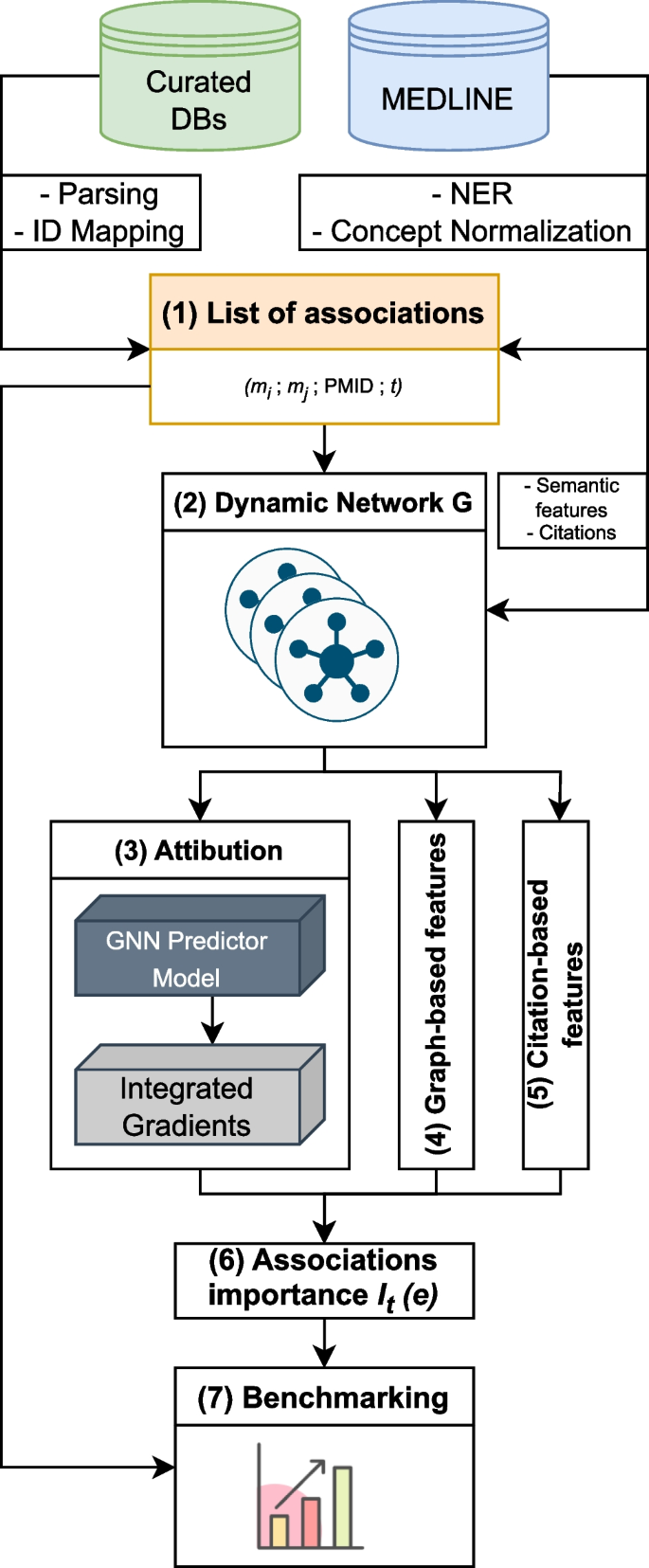

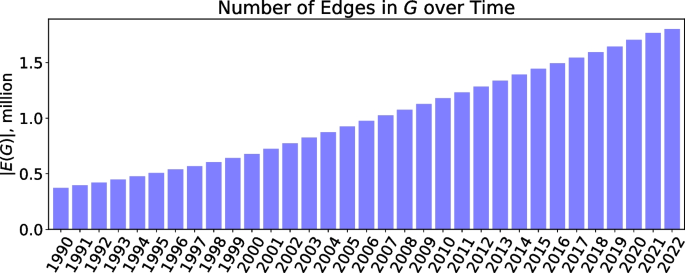

Automated hypothesis generation (HG) focuses on uncovering hidden connections within the extensive information that is publicly available. This domain has become increasingly popular, thanks to modern machine learning algorithms. However, the automated evaluation of HG systems is still an open problem, especially on a larger scale.

This paper presents a novel benchmarking framework, Dyport, for evaluating biomedical hypothesis generation systems. Utilizing curated datasets, our approach tests these systems under realistic conditions, enhancing the relevance of our evaluations. We integrate knowledge from the curated databases into a dynamic graph, accompanied by a method to quantify discovery importance. This assesses not only the accuracy of hypotheses but also their potential impact in biomedical research, which significantly extends traditional link prediction benchmarks. The applicability of our benchmarking process is demonstrated on several link prediction systems applied to biomedical semantic knowledge graphs. Being flexible, our benchmarking system is designed for broad application in hypothesis generation quality verification, aiming to expand the scope of scientific discovery within the biomedical research community.

Conclusions

Dyport is an open-source benchmarking framework for evaluating biomedical hypothesis generation systems, which takes into account knowledge dynamics, semantics and impact. All code and datasets are available at: https://github.com/IlyaTyagin/Dyport

Introduction

Automated hypothesis generation (HG, also known as Literature Based Discovery, LBD) has come a long way since its establishment in 1986, when Swanson introduced the concept of “Undiscovered Public Knowledge” [1]. It pertains to the idea that the public domain contains such an abundance of information that implicit connections among separate pieces of it can be uncovered. Many systems have been developed over the years, incorporating different reasoning methods: from concept co-occurrence in scientific literature [2, 3] to advanced deep learning-based algorithms and generative models (such as BioGPT [4] and CBAG [5]). Examples include, but are not limited to, probabilistic topic modeling over relevant papers [6], semantic inference [7], association rule discovery [8], latent semantic indexing [9], semantic knowledge network completion [10] and human-aware artificial intelligence [11], to mention just a few. The common thread running through these lines of research is that they are all meant to fill in the gaps between pieces of existing knowledge.

The evaluation of HG is still one of the major problems of these systems, especially when it comes to fully automated, large-scale, general-purpose systems (such as IBM Watson Drug Discovery [12], AGATHA [10] or BioGPT [4]). For these, a massive assessment performed manually by domain experts (of the kind that is normal in the machine learning and general AI domains) is usually not feasible, and other methods are required.

One traditional evaluation approach is to make a system “rediscover” some of the landmark findings, similar to what was done in numerous works replicating well-known connections, such as: Fish Oil \(\leftrightarrow\) Raynaud’s Syndrome [13], Migraine \(\leftrightarrow\) Magnesium [13] or Alzheimer \(\leftrightarrow\) Estrogen [14]. This technique is still used in a majority of recently published papers, despite its obvious drawbacks, such as the very limited number of validation samples and their general obsolescence (some of these connections are over 30 years old). Furthermore, in some of these works, the training set is not carefully chosen to include only the information published prior to the discovery of interest, which turns the HG goal into an information retrieval task.

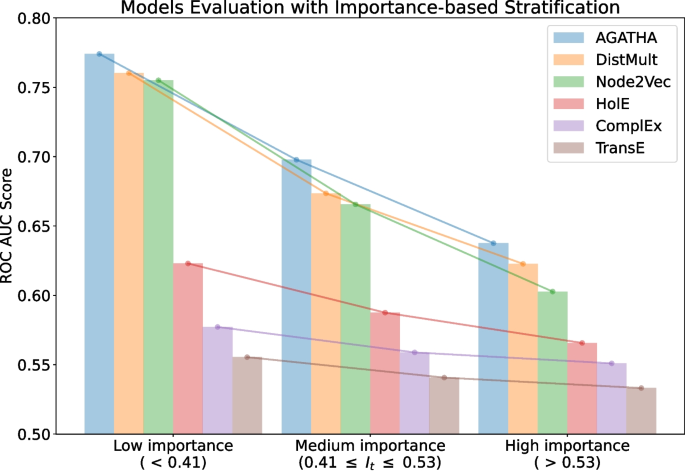

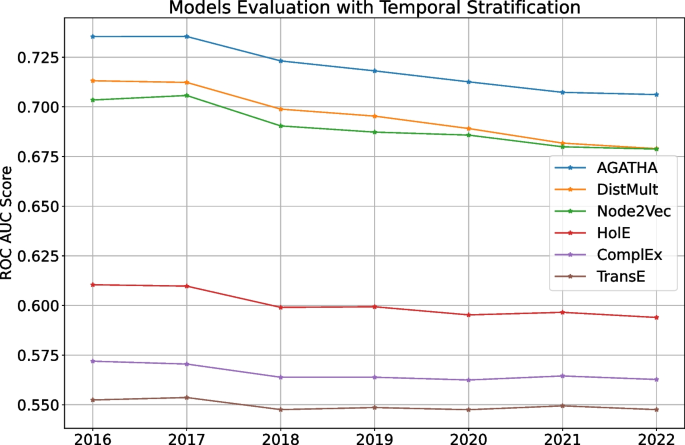

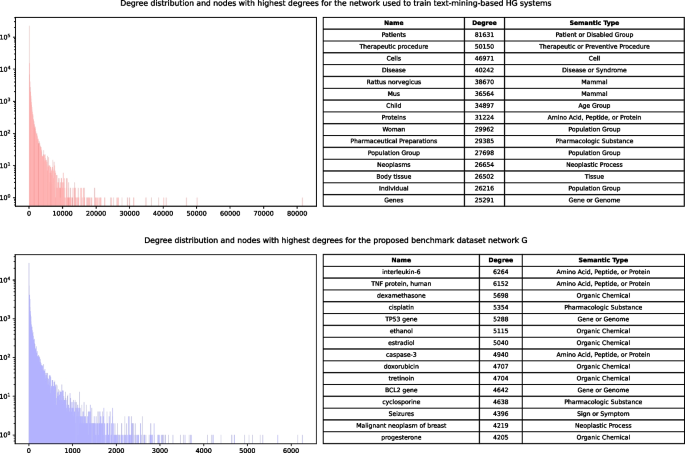

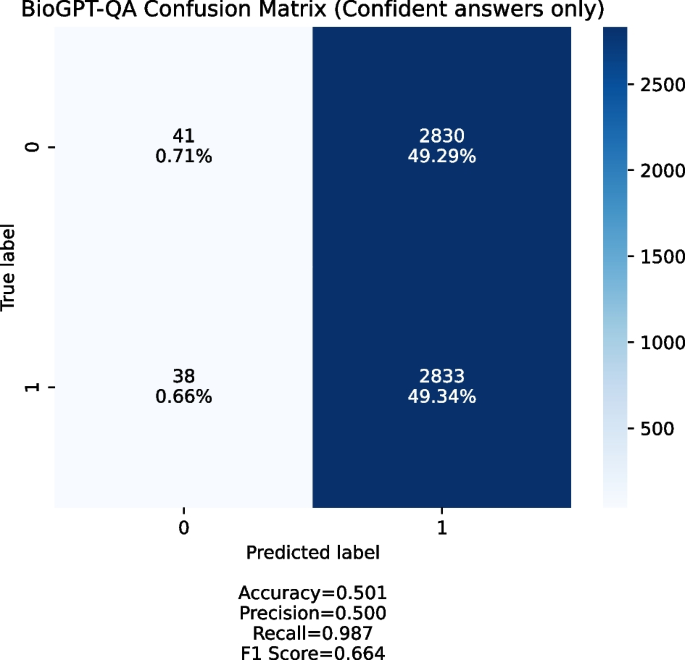

Another commonly used technique is based on time-slicing [10, 15], in which a system is trained on a subset of data prior to a specified cut-off date and then evaluated on data from the future. This method addresses the weaknesses of the previous approach and can be automated, but it does not immediately answer the question of how significant or impactful the connections are. The lack of this information may lead to misleading results: many connections, even recently published ones, are trivial (especially if they are found by text mining methods) and do not advance the scientific field in a meaningful way.
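The time-slicing idea can be sketched as follows. This is a minimal illustration under stated assumptions, not the Dyport implementation: the helper names `time_slice` and `recall_at_k`, the concept labels, the dates and the ranked candidate list are all hypothetical. Note that the sketch treats every future connection as equally valuable, which is exactly the limitation described above.

```python
from datetime import date


def time_slice(edges, cutoff):
    """Split dated connections into training pairs and future targets.

    edges: list of (concept_a, concept_b, first_published) tuples.
    Returns (train_pairs, future_pairs); future_pairs holds only
    connections first seen on or after the cutoff date.
    """
    train = [(a, b) for a, b, d in edges if d < cutoff]
    known = {frozenset(pair) for pair in train}
    future = {frozenset((a, b)) for a, b, d in edges if d >= cutoff} - known
    return train, future


def recall_at_k(ranked_candidates, future, k):
    """Fraction of future connections found among the top-k candidates."""
    if not future:
        return 0.0
    top = {frozenset(pair) for pair in ranked_candidates[:k]}
    return len(top & future) / len(future)


# Hypothetical toy graph: connections with first-publication dates.
toy_edges = [
    ("A", "B", date(1995, 1, 1)),
    ("B", "C", date(1998, 6, 1)),
    ("A", "C", date(2005, 3, 1)),
    ("C", "D", date(2010, 9, 1)),
]

train_pairs, future_pairs = time_slice(toy_edges, cutoff=date(2000, 1, 1))

# Hypothetical ranked output of some HG system trained on train_pairs only.
ranked = [("A", "C"), ("A", "D"), ("C", "D")]
score = recall_at_k(ranked, future_pairs, k=2)
print(score)
```

Because only pairs dated before the cut-off reach the training set, the system cannot simply retrieve a connection it has already seen, which is the leakage problem noted for the rediscovery approach.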