- Original article

- Open access

- Published: 13 July 2021

Assisting you to advance with ethics in research: an introduction to ethical governance and application procedures

- Shivadas Sivasubramaniam 1 ,

- Dita Henek Dlabolová 2 ,

- Veronika Kralikova 3 &

- Zeenath Reza Khan 3

International Journal for Educational Integrity volume 17, Article number: 14 (2021)


Ethics and ethical behaviour are the fundamental pillars of a civilised society. The focus on ethical behaviour is indispensable in certain fields such as medicine, finance or law. In fact, ethics takes precedence in anything that would involve, affect, transform or influence individuals, communities or any living creatures. Many institutions within Europe have set up their own committees to focus on or approve activities that have an ethical impact. In contrast, less developed countries worldwide are trying to set up such committees to govern their academia and research. As the European Network for Academic Integrity (ENAI), the first European consortium established to support academic integrity, we recognised the importance of guiding institutions and communities that are trying to conduct research according to ethical principles. We have established an ethical advisory working group within ENAI with the aim of promoting ethics within curricula, research and institutional policies. We are continually researching available data on this subject and are committed to helping academia convey and conduct ethical behaviour. Upon preliminary review and discussion, the group found a disparity in understanding, practice and teaching approaches to ethical applications of research projects among peers. Therefore, this short paper aims to provide a preliminary critical review of the available information on ethics, the history behind establishing ethical principles, and the international guidelines that govern research.

The paper is based on the workshop conducted at the 5th International Conference Plagiarism across Europe and Beyond, held at Mykolas Romeris University, Lithuania, in 2019. During the workshop, we detailed a) the basic needs of an ethical committee within an institution; b) a typical ethical approval process (with examples from three different universities); and c) ways to obtain informed consent, with some examples. These are summarised in this paper, together with some example comparisons of ethical approval processes from different universities. We believe this paper will provide guidelines for preparing and training both researchers and research students to uphold ethical practices appropriately through ethical approval processes.

Introduction

Ethics and ethical behaviour (often linked to “responsible practice”) are the fundamental pillars of a civilised society. Ethical behaviour with integrity is important for maintaining academic and research activities. It affects everything we do, and takes precedence in anything that would involve, affect, transform or impact upon individuals, communities or any living creatures. In other words, ethics can help us improve our living standards (LaFollette, 2007). The focus on ethical behaviour is indispensable in certain fields such as medicine, finance or law, but it is also gaining recognition in all disciplines engaged in research. Therefore, institutions are expected to develop ethical guidelines for research in order to maintain quality, take ownership of integrity and, above all, be transparent, thereby limiting allegations of misconduct (Flite and Harman, 2013). This is especially true for higher education organisations that promote research and scholarly activities. Many European institutions have developed their own regulations for ethics by incorporating international codes (Getz, 1990), while less developed countries are trying to set up similar committees to govern their academia and research. The World Health Organization has stated that adhering to “ethical principles … [is central and important]... in order to protect the dignity, rights and welfare of research participants” (WHO, 2021). Ethical guidelines taught to students can help develop ethical researchers and members of society who uphold ethical principles in practice.

As the first European-wide consortium established to support academic integrity (European Network for Academic Integrity – ENAI), we recognised the importance of guiding institutions and communities that are trying to teach, research and include ethical principles, by providing an overarching understanding of ethical guidelines that may influence policy. We therefore set up an advisory working group within ENAI in 2018 to support matters related to ethics and ethical committees, and to assist with ethics-related teaching activities.

Upon preliminary review and discussion, the group found a disparity in understanding, practice and teaching approaches to ethical applications among peers. This became the premise for this research paper. We first carried out a literature survey to review and summarise existing ethical governance (with historical perspectives) and procedures that are already in place to guide researchers in different discipline areas. By doing so, we attempted to consolidate, document and provide important steps in a typical ethical application process with example procedures from different universities. Finally, we attempted to provide insights and findings from practical workshops carried out at the 5th International Conference Plagiarism across Europe and Beyond, in Mykolas Romeris University, Lithuania in 2019, focussing on:

• highlighting the basic needs of an ethical committee within an institution,

• discussing and sharing examples of a typical ethical approval process,

• providing guidelines on the ways to teach research ethics with some examples.

We believe this paper provides guidelines on preparing and training both researchers and research students in appropriately upholding ethical practices through ethical approval processes.

Background literature survey

Responsible research practice (RRP) is assessed against ethical principles and professional standards (WHO’s Code of Conduct for responsible Research, 2017). The Singapore Statement on Research Integrity (The Singapore Statement on Research integrity, 2010) provides internationally accepted guidance for RRP. The statement is based on maintaining honesty, accountability and professional courtesy in all aspects of research, and on maintaining fairness during collaborations. In other words, it does not focus only on the procedural part of research but also covers wider aspects of “integrity” beyond the operational ones (Israel and Drenth, 2016).

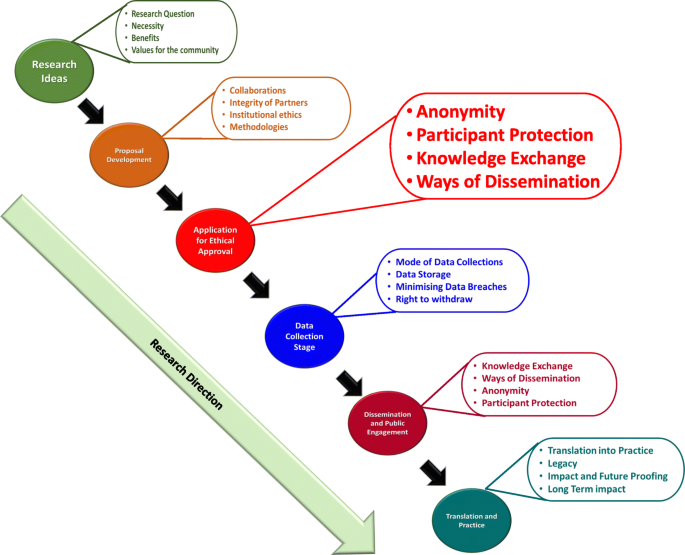

Institutions should provide ethical guidance based on principles and values that reflect all aspects and stages of research (from the funding application/project development stage up to or beyond the project closing stage). Figure 1 summarises the different aspects/stages of a typical research project and highlights the RRP needs, in compliance with ethical governance, at each stage with examples (the figure is based on Resnik, 2020; Žukauskas et al., 2018; Anderson, 2011; Fouka and Mantzorou, 2011).

Summary of the enabling ethical governance at different stages of research. Note that it is imperative for researchers to proactively consider the ethical implications before, during and after the actual research process. The summary shows that RRP should be in line with ethical considerations even long before the ethical approval stage

Individual responsibilities to enhance RRP

As explained in Fig. 1, successfully governed research should consider ethics at the planning stage, before the research itself begins. Many international guidelines consistently enforce or recommend 14 different “responsibilities”, first highlighted in the Singapore Statement (2010), for researchers to follow in order to achieve competency in RRP. To understand the purpose and expectations of these ethical guidelines, we carried out an initial literature survey on expected individual responsibilities. These are summarised in Table 1.

By following these directives, researchers can carry out accountable research by maximising ethical self-governance whilst minimising misconduct. In our experience of working with many researchers, their focus usually revolves around ethical “clearance” rather than behaviour. In other words, they perceive ethics as a paper exercise rather than trying to “own” ethical behaviour in everything they do. The ethical principles and responsibilities are explicitly highlighted in the majority of international guidelines [such as the UK’s Research Governance Policy (NICE, 2018), the Australian Government’s National Statement on Ethical Conduct in Human Research (Difn website a - National Statement on Ethical Conduct in Human Research (NSECHR), 2018) and the Singapore Statement (2010)], and the importance of a holistic approach to ethical decision-making has been argued. Nevertheless, many researchers and/or institutions focus only on ethics linked to procedural aspects.

Past studies have also highlighted inconsistencies in institutional guidelines, suggesting that these inconsistencies may hinder expected research progress (Desmond & Dierickx, 2021; Alba et al., 2020; Dellaportas et al., 2014; Speight, 2016). These inconsistencies may also have been, and may still be, linked to institutional perceptions/expectations or to contextual conditions imposed by individual countries. Indeed, it is interesting to note that many research organisations and HE institutions establish their own policies based on these directives.

Research governance - origins, expectations and practices

Ethical governance in clinical medicine helps by providing a structure for analysis and decision-making. By providing workable definitions of benefits and risks, as well as guidance for evaluating and balancing benefits against risks, it supports researchers in protecting participants and the general population.

According to the definition given by the National Institute for Health and Care Excellence, UK (NICE, 2018), “research governance can be defined as the broad range of regulations, principles and standards of good practice that ensure high quality research”. As stated above, our literature survey showed that most ethical definitions evolved from the medical field, and other disciplines have used these principles to develop their own ethical guidance. Interestingly, historical data show that medical research was “self-governed”, in other words dependent on the moral behaviour of individual researchers (Fox, 2017; Shaw et al., 2005; Getz, 1990). For example, early human vaccination trials conducted in the 1700s used immediate family members as test subjects (Fox, 2017). The moral justification here may have been that those at risk were the scientists themselves or their immediate families, while those who would reap the benefits of vaccination were the general public and wider communities. However, this reasoning is not acceptable under current ethical principles.

Historically, ambiguous decision-making and the resulting incidences of research misconduct created the need for ethical research governance as early as the 1940s. For instance, the importance of international governance was realised only after World War II, when people were shocked by the unethical research practices carried out by Nazi scientists. As a result, the Nuremberg Code was published in 1947. The code mainly focussed on the following:

Informed consent, with the further requirement that research involving humans should be based on prior animal work,

The anticipated benefits should outweigh the risk,

Research should be carried out only by qualified scientists,

Avoiding physical and mental suffering, and

Avoiding human research that would result in death or disability

(Weindling, 2001).

Unfortunately, it was reported that many researchers in the USA and elsewhere regarded the Nuremberg Code as a document condemning Nazi atrocities rather than as a code for ethical governance, and therefore ignored its directives (Ghooi, 2011). It was only in 1964 that the World Medical Association published the Helsinki Declaration, which set the stage for ethical governance and the implementation of the Institutional Review Board (IRB) process (Shamoo and Irving, 1993). This declaration was based on the Nuremberg Code, and it also paved the way for enforcing the conduct of research in accordance with these guidelines.

The focus on research and ethical governance gained momentum in 1974, which led to the publication in 1979 of a report on ethical principles and guidelines for the protection of human subjects of research (The Belmont Report, 1979). This report paved the way for the current forms of ethical governance in biomedical and behavioural research.

Since 1994, the WHO itself has issued guidance for health care policy-makers, researchers and other stakeholders detailing the key concepts in medical ethics, specifically on applying ethical principles in global public health.

Likewise, the World Organization for Animal Health (WOAH) and the International Convention for the Protection of Animals (ICPA) provide guidance on animal welfare in research. As a result of this continuous guidance, together with accepted practices, there are internationally established ethical guidelines for carrying out medical research. Our literature survey further identified freely available guidance from independent organisations such as COPE (Committee on Publication Ethics) and ALLEA (All European Academies), which support research ethics in other fields such as education, sociology and psychology. In reality, ethical governance is practised differently in different countries. In the UK, clinical research governance oversees all NHS-related medical research (Mulholland and Bell, 2005). Although governance in other disciplines is not entirely centralised, many research funding councils and organisations [such as UKRI (UK Research and Innovation), BBSRC (Biotechnology and Biological Sciences Research Council), MRC (Medical Research Council) and ESRC (Economic and Social Research Council)] provide ethical governance and expect institutional adherence and monitoring. They expect local (i.e. university/institutional) research governance to carry out day-to-day monitoring of the research conducted within the organisation and to report back to the funding bodies monthly or annually (Department of Health, 2005). Likewise, there are nationally coordinated or regulated ethics governing bodies, such as the US Office for Human Research Protections (US-OHRP) and the National Institutes of Health (NIH) in the USA, and the Canadian Institutes of Health Research (CIHR) in Canada (Mulholland and Bell, 2005). The OHRP in the USA oversees research activities involving human subjects.

In Canada, the CIHR works with the Natural Sciences and Engineering Research Council (NSERC) and the Social Sciences and Humanities Research Council (SSHRC). Together they have produced the Tri-Council Policy Statement (TCPS) (Stephenson et al., 2020), which provides ethical governance. All Canadian institutions are expected to adhere to this policy when conducting research. In Australia, research is governed by the Australian Code for the Responsible Conduct of Research (2008), which identifies the responsibilities of institutions and researchers in all areas of research. The code was jointly developed by the National Health and Medical Research Council (NHMRC), the Australian Research Council (ARC) and Universities Australia (UA). This information is summarised in Table 2.

Basic structure of an institutional ethical advisory committee (EAC)

The WHO published an article defining the basic concepts of an ethical advisory committee in 2009 (WHO, 2009 - see above). According to this article, many countries have established research governance and monitor ethical practice in research via national and/or regional review committees. The main aims of research ethics committees include reviewing study proposals, understanding the justifications for human/animal use, weighing the merits and demerits of that use (i.e. risks vs. potential benefits) and ensuring that local ethical guidelines are followed (Difn website b - Enago academy Importance of Ethics Committees in Scholarly Research, 2020; Guide for Research Ethics - Council of Europe, 2014). Once the research has started, the committee needs to carry out periodic monitoring to ensure that institutional ethical norms are followed during and beyond the study. Committees may also be involved in setting up and/or reviewing institutional policies.

For these purposes, an IRB (or institutional ethical advisory committee - IEAC) is essential for local governance and for promoting best practice. The advantage of an IRB/IEAC is that it understands the institutional conditions and can closely monitor ongoing research, including any changes in research direction. On the other hand, an IRB may be overly inclined to accept applications, influenced by the local agenda for achieving research excellence and disregarding ethical issues (Kotecha et al., 2011; Kayser-Jones, 2003), or it may be influenced by financial interests in attracting external funding. In this respect, regional and national ethics committees are advantageous for ensuring ethical practice: owing to their impartiality, they provide greater consistency and legitimacy to the research (WHO, 2009). However, the ethical approval process of regional and national ethics committees can be time-consuming, as they lack local knowledge.

As for membership of IRBs, most guidelines [WHO, NICE, Council of Europe (2012), European Commission - Facilitating Research Excellence in FP7 (2013) and OHRP] insist on a diverse membership, including experts in different fields of research and non-experts who understand local, national and international conflicts of interest. The former can understand and clarify the procedural elements of research in different fields, whilst the latter help the committee make neutral and impartial decisions. These non-experts are usually not affiliated with the institution and represent the broader community (particularly in relation to social, legal or cultural considerations). IRBs with this variety of representation are not only in a position to understand the study procedures and their potential direct or indirect consequences for participants, but are also able to identify any community, cultural or religious implications of the study.

Understanding the subtle differences between ethics and morals

Interestingly, many ethical guidelines are based on society’s moral “beliefs”, to the extent that the words “ethics” and “morals” are used to define each other. However, there are several subtle differences between them, which we attempt to compare and contrast here. In the past, many authors have used the words “morals” and “ethics” interchangeably (Warwick, 2003; Kant, 2018; Hazard, GC (Jr)., 1994; Larry, 1982). However, ethics is linked to rules governed by an external source, such as codes of conduct in workplaces (Kuyare et al., 2014). In contrast, morals refer to an individual’s own principles regarding right and wrong. Quinn (2011) defines morality as “rules of conduct describing what people ought and ought not to do in various situations …”, while ethics is “... the philosophical study of morality, a rational examination into people’s moral beliefs and behaviours”. For instance, in a case where parents demanded that schools overturn a ban on the use of corporal punishment by schools and teachers (Children’s Rights Alliance for England, 2005), the parents believed that teachers should assume the role of parent in school and use corporal or physical punishment on children who misbehaved. This position stemmed from their own beliefs, that is, from the “beliefs of individuals or groups”. However, the right of children to be protected from “inhuman and degrading” treatment is based on the ethical principles of society and governed by the law of the land.

A similar example is recent media coverage of some parents opposing LGBT (Lesbian, Gay, Bisexual, and Transgender) education for their children (BBC News, 2019). One parent argued, “Teaching young children about LGBT at a very early stage is ‘morally’ wrong”, adding, “let them learn by themselves as they grow”. This behaviour is linked to and governed by the morals of an ethnic community. Thus, morals are linked to the “beliefs of individuals or group”. LGBT rights, however, are based on the ethical principles of that society and governed by the law of the land.

Individuals, especially those working in medical or judicial professions, have to follow an ethical code laid down by their profession, regardless of their own feelings, time or preferences. For instance, a lawyer is expected to follow professional ethics and represent a defendant, even if the lawyer’s own morals indicate that the defendant is guilty.

We could not find many scholarly articles that clearly compare or contrast ethics with morals. However, a table presented by Surbhi (2015) (Difn website c) attempts to differentiate the two terms (see Table 3).

Although Table 3 gives some insight into the differences between these two terms, in practice many people use them loosely, mainly because of their ambiguity. As a group focussed on the application of these principles, we recommend using the term “ethics” and avoiding “morals” in research and academia.

Based on the literature survey carried out, we were able to identify the following gaps:

there is some disparity in existing literature on the importance of ethical guidelines in research

there is a lack of consensus on what code of conduct should be followed, where it should be derived from and how it should be implemented

The mission of ENAI’s ethical advisory working group

The Ethical Advisory Working Group of ENAI was established in 2018 to promote ethical codes of conduct and practice among higher education organisations within Europe and beyond (European Network for Academic Integrity, 2018). We aim to provide unbiased advice and consultancy on embedding ethical principles within all types of academic, research and public engagement activities. Our main objective is to promote ethical principles and share good practice in this field. The advisory group aims to standardise ethical norms and to offer strategic support to activities including (but not limited to):

● rendering advice and assistance to develop institutional ethical committees and their regulations in member institutions,

● sharing good practice in research and academic ethics,

● acting as a critical guide to institutional review processes, assisting them to maintain/achieve ethical standards,

● collaborating with similar bodies in establishing collegiate partnerships to enhance awareness and practice in this field,

● providing support within and outside ENAI to develop materials to enhance teaching activities in this field,

● organising training for students and early-career researchers about ethical behaviours in the form of lectures, seminars, debates and webinars,

● enhancing research on, and disseminating findings in, matters and topics related to ethics.

The following sections focus on our suggestions, based on our collective experience, the literature reviewed in earlier sections and the workshop feedback collected:

a) basic needs of an ethical committee within an institution;

b) a typical ethical approval process (with examples from three different universities); and

c) the ways to obtain informed consent, with some examples. These sections give advice on preparing and training both researchers and research students to uphold ethical practices appropriately through ethical approval processes.

Setting up an institutional ethical committee (EC)

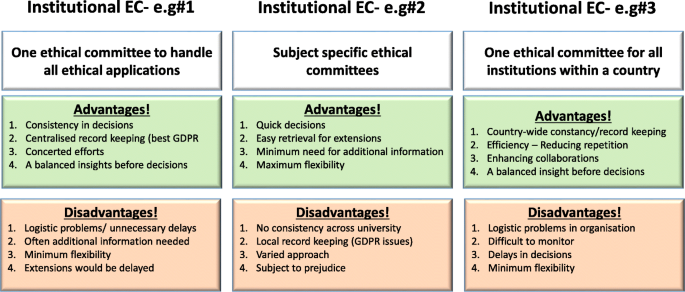

Institutional Ethical Committees (ECs) are essential for governing every aspect of the activities undertaken by an institution. For higher education organisations, this is vital to establish ethical behaviour among students and staff in the research, education and scholarly activities (indeed, everything) they undertake. These committees should be knowledgeable about international laws relating to different fields of study (such as science, medicine, business, finance, law and social sciences). The advantages and disadvantages of institutional, subject-specific and common (statutory) ECs are summarised in Fig. 2. Some institutions have developed individual ECs linked to specific fields (or subject areas), whilst others have one institutional committee that oversees all ethical behaviour and the entire approval process. There is no clear preference between the two, as both have their own advantages and disadvantages (see Fig. 2). Subject-specific ECs are attractive to medical, law and business disciplines, as it is perceived that the members of these committees understand the subject and can therefore appreciate the need for the proposed research/activity (Kadam, 2012; Schnyder et al., 2018). However, others argue that, owing to this “specificity”, the committee may fail to foresee the wider implications of an application. University-wide ECs, on the other hand, consider the wider implications, yet may find it difficult to understand the purpose and specific applications of the research. Not everyone understands the dynamics of all types of research methodology, data collection, etc., and a proposal might therefore be rejected merely because the EC could not understand the research applications (Getz, 1990).

Summary of advantages and disadvantages of three different forms of ethical committees

[N/B for Fig. 2 : Examples of different types of ethical application procedures and forms used were discussed with the workshop attendees to enhance their understanding of the differences. GDPR = General Data Protection Regulation].

Although we recommend a designated EC with relevant professional, academic and ethical expertise to deal with particular types of application, the membership of any EC should include some non-experts who represent the wider community (see above). Having non-experts on an EC not only encourages researchers to explain their research in layperson’s terms (by thinking outside the box) but also helps ensure efficiency without compromising participant or animal safety. Non-experts may even help to address common ethical issues outside the research culture. Some UK universities offer this membership to a member of the clergy, a councillor or a parliamentarian with no links to the institution. Most importantly, it is vital for all EC members to undertake further training in addition to their previous experience in the relevant field of research ethics.

Another issue that raises concerns is multi-centre research involving several institutions, where ethical approval is needed from each partner institution. In some cases, such as clinical research within the UK, a common statutory EC, the National Health Service (NHS) Research Ethics Committee (NREC), covers research ethics for all partner institutions (NHS, 2018). Obtaining approval from this type of EC takes time, so advance planning is needed.

Ethics approval forms and process

During the workshop, we discussed, as examples, some anonymised application forms for qualitative and quantitative research obtained from open-access sources. For the purpose of understanding research ethics, we arbitrarily divided research into two categories: research based on (a) quantitative and (b) qualitative methodologies. As their names suggest, their research approaches differ considerably. The discussion elicited how ECs devise different types of ethical application forms and questions. Qualitative research is often conducted through “face-to-face” interviews, which has implications for volunteer anonymity.

The discussions further suggested that when interviews are replaced by online surveys, the surveys have to be administered through registered university staff to maintain confidentiality. This becomes difficult when the research is a multi-centre study. Similar issues are common in medical research regarding participants’ anonymity, confidentiality and, above all, their right to withdraw consent to be involved in research.

Storing and protecting data collected in the process of the study is also a point of consideration when applying for approval.

Finally, the ethical processes for invasive (involving humans/animals) and non-invasive (questionnaire-based) research may differ slightly from one another. The following research areas are considered investigations that need ethical approval:

research that involves human participants (see below)

use of the ‘products’ of human participants (see below)

work that potentially impacts on humans (see below)

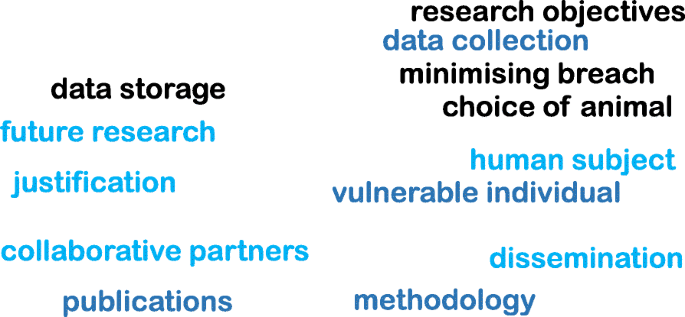

research that involves animals

In addition, it is important to provide a disclaimer even if ethical approval is deemed unnecessary. The word cloud in Fig. 3 shows the important variables that need to be considered at the brainstorming stage, before an ethical application is made. It is worth noting the importance of proactive planning that anticipates the “unexpected” during the different phases of a research project (such as planning, execution, publication and future directions). Some applications (such as working with vulnerable individuals or children) will require safety protection clearance (such as DBS - Disclosure and Barring Service, commonly obtained from the local police). See the section Research involving humans - informed consents for further discussion.

Examples of important variables that need to be considered for an ethical approval
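The screening rule described above (the four categories that trigger an ethical application, a disclaimer otherwise, and extra safety clearance for vulnerable groups) can be expressed as a small checklist. The following Python sketch is purely illustrative: the `ProposalScreen` fields and the wording of the returned steps are hypothetical and do not reproduce any particular institution's form.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ProposalScreen:
    """Hypothetical yes/no answers from a pre-application screening checklist."""
    involves_human_participants: bool = False
    uses_human_products: bool = False        # 'products' of human participants
    potentially_impacts_humans: bool = False
    involves_animals: bool = False
    involves_vulnerable_people: bool = False  # e.g. children; may need DBS clearance


def screening_outcome(p: ProposalScreen) -> list[str]:
    """Return illustrative next steps based on the four categories listed in the text."""
    needs_approval = any([
        p.involves_human_participants,
        p.uses_human_products,
        p.potentially_impacts_humans,
        p.involves_animals,
    ])
    if not needs_approval:
        # Even when approval is deemed unnecessary, a disclaimer is still provided.
        return ["submit disclaimer"]
    steps = ["submit ethical application"]
    if p.involves_vulnerable_people:
        steps.append("obtain safety protection clearance (e.g. DBS)")
    return steps
```

For example, a questionnaire study with children would return both the application step and the clearance step, whereas a purely theoretical project would return only the disclaimer.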

It is also imperative to report, or re-apply for ethical approval for, any minor or major changes made to the original proposal after approval. In the case of methodological changes, evidence of risk assessments for the changes and/or COSHH (Control of Substances Hazardous to Health Regulations) assessments should also be provided. Likewise, any new collaborative partners or the removal of researchers should be notified to the IEAC.

Other findings include:

in the case of a complete change to the project, the research must be stopped and new approval sought,

if any adverse effects on project participants (human or non-human) are noticed, these should also be notified to the committee for appropriate clearance to continue the work, and

the completion of the project must also be notified, indicating whether the researchers may restart the project at a later stage.
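The post-approval obligations listed above amount to a simple lookup from the type of change to the action required. A minimal Python sketch, assuming a hypothetical `Change` classification of our own; real committees define their own categories, forms and wording.

```python
from __future__ import annotations

from enum import Enum, auto


class Change(Enum):
    """Hypothetical categories of post-approval events."""
    MINOR_AMENDMENT = auto()
    MAJOR_AMENDMENT = auto()
    METHODOLOGY = auto()
    TEAM_OR_PARTNER = auto()  # new collaborative partner or researcher removed
    COMPLETE_CHANGE = auto()
    ADVERSE_EFFECT = auto()
    COMPLETION = auto()


def required_actions(change: Change) -> list[str]:
    """Map a post-approval event to the actions described in the text."""
    if change is Change.COMPLETE_CHANGE:
        return ["stop the research", "seek new ethical approval"]
    if change is Change.ADVERSE_EFFECT:
        return ["notify the committee", "await clearance before continuing"]
    if change is Change.COMPLETION:
        return ["notify the committee", "state whether the project may restart later"]
    # Minor/major amendments, methodology and team changes are all reported.
    actions = ["report the change to the committee or re-apply"]
    if change is Change.METHODOLOGY:
        actions.append("attach risk assessment and/or COSHH evidence")
    return actions
```

Keeping the rules in one function makes it easy for a research office to check that every event type has a defined response, which mirrors the intent of the list above.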

Research involving humans - informed consents

Discussions of research involving humans, together with the literature review, highlight that human subjects/volunteers must participate in research willingly, after being adequately informed about the project. Therefore, research involving humans and animals takes precedence in obtaining ethical clearance and requires strict adherence to its conditions, one of which is providing a participant information sheet/leaflet. This sheet should contain a full explanation of the research being carried out and be given in writing, in layperson’s terms (Manti and Licari, 2018; Hardicre, 2014). Measures should also be in place to explain and clarify any doubts the participants may have. In addition, there should be a clear statement of how the participants’ anonymity is protected. Below are some example questions to help researchers write this participant information sheet:

- What is the purpose of the study?

- Why have they been chosen?

- What will happen if they take part?

- What do they have to do?

- What happens when the research stops?

- What if something goes wrong?

- What will happen to the results of the research study?

- Will taking part be kept confidential?

- How to handle “vulnerable” participants?

- How to mitigate risks to participants?

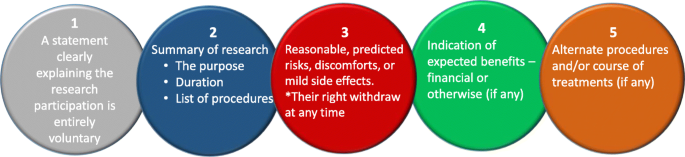

Many institutional ethics committees expect researchers to produce an FAQ (frequently asked questions) document in addition to the information about the research. Most importantly, researchers also need to provide an informed consent form, to be signed by each human participant. The five elements to consider in an informed consent statement are summarised in Fig. 4 below (slightly modified from the Federal Policy for the Protection of Human Subjects (2018); Difn website c).

Five basic elements to consider for informed consent [figure adapted from Difn website c]

The informed consent form should always contain a clause allowing participants to withdraw their consent at any time. If a participant withdraws, all of their data should be removed from the study without compromising their anonymity.

Typical research ethics approval process

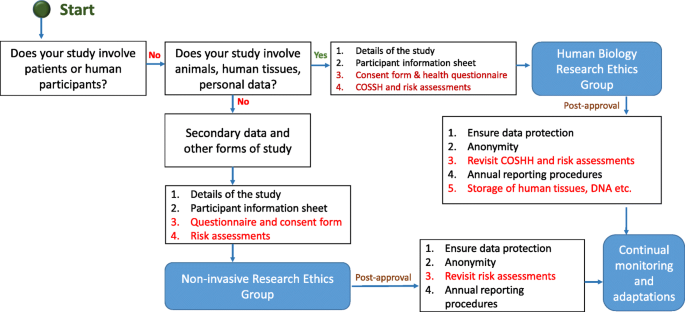

In this section, we provide an example flow chart explaining how researchers may choose the appropriate application and process, as shown in Fig. 5. However, it is important to note that these are examples only; some institutions may have a single unified application with separate sections that distinguish qualitative and quantitative research criteria.

Typical ethical approval processes for quantitative and qualitative research. [Note for Fig. 5: this simplified flow chart shows that the fundamental process for invasive and non-invasive EC applications is the same; only the routes and the requirements for additional information differ slightly]

Once the ethical application is submitted, the EC should ensure a clear approval procedure with a clearly defined timeline. An example flow chart of the ethical approval procedure, obtained as open access from the University of Leicester, is presented in Fig. 6. Further examples of ethical approval processes and governance were discussed in the workshop.

An example ethical approval procedure at the University of Leicester (figure obtained from the University of Leicester research pages, Difn website d; open access)

Strategies for ethics education for students

Educating students on the importance of ethics and ethical behaviour in research and scholarly activities is essential. The literature on medical research suggests that many universities incorporate ethics into postgraduate degrees, but there is less appetite to deliver modules or even lectures on research ethics in undergraduate degrees (Seymour et al., 2004; Willison and O’Regan, 2007). This may be because undergraduate degree structures do not focus strongly on research (DePasse et al., 2016). However, as Orr (2018) suggested, institutions should focus more on educating all students about ethics and ethical behaviour, and their importance in research, than on enforcing punitive measures for unethical behaviour. Therefore, as an advisory committee, and based on our preliminary literature survey and workshop results, we strongly recommend incorporating ethics education into the undergraduate curriculum. Institutions that provide ethics education in both undergraduate and postgraduate courses use one of three approaches: (a) lecture-based delivery, (b) a case-study-based approach, or (c) a combined delivery that starts with a lecture on the basic principles of ethics, followed by a debate-based discussion of interesting case studies. Our findings suggest that the combined method is much more effective than the other two, for the reasons explained next.

As many academics who have taught ethics and/or research ethics agree, the underlying principles of ethics are often perceived as boring. Lecture-based delivery alone may therefore not be suitable. On the other hand, a debate-based approach, though attractive and quick to generate student interest, cannot be effective unless students understand the underlying basic principles. In addition, when selecting case studies, it is advisable to choose cases that cover a range of different types of ethical dilemma. As an advisory group within ENAI, we are collating supporting materials to help institutions develop policies, creating advisory documents to help researchers obtain ethical approval, and developing teaching materials for debate-based lesson plans that can be used by member and other institutions.

Concluding remarks

In summary, our literature survey and workshop findings highlight that researchers should accept that ethics underpins everything we do, especially in research. Although obtaining ethical approval can be tedious, it is an essential process in which proactive thinking is needed to identify ethical issues that might affect the project. Our findings also show that the ethical approval process differs from institution to institution, and we strongly recommend that researchers follow their institutional guidelines and the underlying ethical principles. The ENAI workshop in Vilnius highlighted the importance of ethical governance through establishing ECs, discussed different types of ECs and procedures with some examples, and highlighted the importance of student education in fostering an ethical culture within research communities, an area that needs further study.

Declarations

The manuscript was entirely written by the corresponding author with contributions from co-authors who have also taken part in the delivery of the workshop. Authors confirm that the data supporting the findings of this study are available within the article. We can also confirm that there are no potential competing interests with other organisations.

Availability of data and materials

Authors confirm that the data supporting the findings of this study are available within the article.

Abbreviations

All European academies

Australian research council

Biotechnology and biological sciences research council

Canadian institutes of health research

Committee on publication ethics

Ethical committee

European network for academic integrity

Economic and social research council

International convention for the protection of animals

institutional ethical advisory committee

Institutional review board

Immaculata university of Pennsylvania

Lesbian, gay, bisexual, and transgender

Medical research council

National health services

National institutes of health

National institute for health and care excellence

National health and medical research council

Natural sciences and engineering research council

National research ethics committee

National statement on ethical conduct in human research

Responsible research practice

Social sciences and humanities research council

Tri-council policy statement

World Organization for animal health

Universities Australia

UK research and innovation

US office for human research protections

Alba S, Lenglet A, Verdonck K, Roth J, Patil R, Mendoza W, Juvekar S, Rumisha SF (2020) Bridging research integrity and global health epidemiology (BRIDGE) guidelines: explanation and elaboration. BMJ Glob Health 5(10):e003237. https://doi.org/10.1136/bmjgh-2020-003237

Anderson MS (2011) Research misconduct and misbehaviour. In: Bertram Gallant T (ed) Creating the ethical academy: a systems approach to understanding misconduct and empowering change in higher education. Routledge, pp 83–96

BBC News (2019) Birmingham school LGBT lessons protest investigated. 8 March 2019. Retrieved February 14, 2021. https://www.bbc.com/news/uk-england-birmingham-47498446

Children’s Rights Alliance for England (2005) R (Williamson and others) v Secretary of State for Education and Employment. Session 2004–05. [2005] UKHL 15. http://www.crae.org.uk/media/33624/R-Williamson-and-others-v-Secretary-of-State-for-Education-and-Employment.pdf

Council of Europe (2014) Texts of the Council of Europe on bioethical matters. https://www.coe.int/t/dg3/healthbioethic/Texts_and_documents/INF_2014_5_vol_II_textes_%20CoE_%20bio%C3%A9thique_E%20(2).pdf

Dellaportas S, Kanapathippillai S, Khan A, Leung P (2014) Ethics education in the Australian accounting curriculum: a longitudinal study examining barriers and enablers. Account Educ 23(4):362–382. https://doi.org/10.1080/09639284.2014.930694

DePasse JM, Palumbo MA, Eberson CP, Daniels AH (2016) Academic characteristics of orthopaedic surgery residency applicants from 2007 to 2014. JBJS 98(9):788–795. https://doi.org/10.2106/JBJS.15.00222

Desmond H, Dierickx K (2021) Research integrity codes of conduct in Europe: understanding the divergences. Bioethics. https://doi.org/10.1111/bioe.12851

Difn website a - National Statement on Ethical Conduct in Human Research (NSECHR) (2018). https://www.nhmrc.gov.au/about-us/publications/australian-code-responsible-conduct-research-2018

Difn website b - Enago Academy (2020) Importance of ethics committees in scholarly research. 26 October 2020. https://www.enago.com/academy/importance-of-ethics-committees-in-scholarly-research/

Difn website c - Ethics vs morals: difference and comparison. Retrieved July 14, 2020. https://www.diffen.com/difference/Ethics_vs_Morals

Difn website d - University of Leicester (2015) Staff ethics approval flowchart. 1 May 2015. Retrieved July 14, 2020. https://www2.le.ac.uk/institution/ethics/images/ethics-approval-flowchart/view

European Commission (2013) Ethics for researchers: facilitating research excellence in FP7. https://ec.europa.eu/research/participants/data/ref/fp7/89888/ethics-for-researchers_en.pdf

European Network for Academic Integrity (2018) Ethical advisory group. Retrieved February 14, 2021. http://www.academicintegrity.eu/wp/wg-ethical/

Federal Policy for the Protection of Human Subjects (2018). Retrieved February 14, 2021. https://www.federalregister.gov/documents/2017/01/19/2017-01058/federal-policy-for-the-protection-of-human-subjects#p-855

Flite CA, Harman LB (2013) Code of ethics: principles for ethical leadership. Perspect Health Inf Manag 10(Winter):1d. PMID: 23346028

Fouka G, Mantzorou M (2011) What are the major ethical issues in conducting research? Is there a conflict between the research ethics and the nature of nursing? Health Sci J 5(1). https://www.hsj.gr/medicine/what-are-the-major-ethical-issues-in-conducting-research-is-there-a-conflict-between-the-research-ethics-and-the-nature-of-nursing.php?aid=3485

Fox G (2017) History and ethical principles. The University of Miami and the Collaborative Institutional Training Initiative (CITI) Program. https://silo.tips/download/chapter-1-history-and-ethical-principles

Getz KA (1990) International codes of conduct: An analysis of ethical reasoning. J Bus Ethics 9(7):567–577

Ghooi RB (2011) The Nuremberg Code: a critique. Perspect Clin Res 2(2):72–76. https://doi.org/10.4103/2229-3485.80371

Hardicre J (2014) Valid informed consent in research: an introduction. Br J Nurs 23(11):564–567. https://doi.org/10.12968/bjon.2014.23.11.564

Hazard GC Jr (1994) Law, morals, and ethics. Faculty Scholarship Series, Yale Law School Legal Scholarship Repository, Yale University. https://digitalcommons.law.yale.edu/cgi/viewcontent.cgi?referer=https://www.google.com/&httpsredir=1&article=3322&context=fss_papers

Israel M, Drenth P (2016) Research integrity: perspectives from Australia and Netherlands. In: Bretag T (ed) Handbook of academic integrity. Springer, Singapore, pp 789–808. https://doi.org/10.1007/978-981-287-098-8_64

Kadam R (2012) Proactive role for ethics committees. Indian J Med Ethics 9(3):216. https://doi.org/10.20529/IJME.2012.072

Kant I (2018) The metaphysics of morals. Cambridge University Press, UK https://doi.org/10.1017/9781316091388

Kayser-Jones J (2003) Continuing to conduct research in nursing homes despite controversial findings: reflections by a research scientist. Qual Health Res 13(1):114–128. https://doi.org/10.1177/1049732302239414

Kotecha JA, Manca D, Lambert-Lanning A, Keshavjee K, Drummond N, Godwin M, Greiver M, Putnam W, Lussier M-T, Birtwhistle R (2011) Ethics and privacy issues of a practice-based surveillance system: need for a national-level institutional research ethics board and consent standards. Can Fam physician 57(10):1165–1173. https://europepmc.org/article/pmc/pmc3192088

Kuyare MS, Taur SR, Thatte U (2014) Establishing institutional ethics committees: challenges and solutions–a review of the literature. Indian J Med Ethics. https://doi.org/10.20529/IJME.2014.047

LaFollette H (2007) Ethics in practice, 3rd edn. Blackwell

Larry RC (1982) The teaching of ethics and moral values in teaching. J High Educ 53(3):296–306. https://doi.org/10.1080/00221546.1982.11780455

Manti S, Licari A (2018) How to obtain informed consent for research. Breathe (Sheff) 14(2):145–152. https://doi.org/10.1183/20734735.001918

Mulholland MW, Bell J (2005) Research Governance and Research Funding in the USA: What the academic surgeon needs to know. J R Soc Med 98(11):496–502. https://doi.org/10.1258/jrsm.98.11.496

National Institutes of Health (NIH) (n.d.) Ethics in clinical research. https://clinicalcenter.nih.gov/recruit/ethics.html

NHS (2018) Flagged research ethics committees. Retrieved February 14, 2021. https://www.hra.nhs.uk/about-us/committees-and-services/res-and-recs/flagged-research-ethics-committees/

NICE (2018) Research governance policy. Retrieved February 14, 2021. https://www.nice.org.uk/Media/Default/About/what-we-do/science-policy-and-research/research-governance-policy.pdf

Orr J (2018) Developing a campus academic integrity education seminar. J Acad Ethics 16(3):195–209. https://doi.org/10.1007/s10805-018-9304-7

Quinn M (2011) Introduction to ethics. In: Ethics for the information age, 4th edn. Pearson, UK, ch 2, pp 53–108

Resnik DB (2020) What is ethics in research & why is it important? https://www.niehs.nih.gov/research/resources/bioethics/whatis/index.cfm

Schnyder S, Starring H, Fury M, Mora A, Leonardi C, Dasa V (2018) The formation of a medical student research committee and its impact on involvement in departmental research. Med Educ Online 23(1):1. https://doi.org/10.1080/10872981.2018.1424449

Seymour E, Hunter AB, Laursen SL, DeAntoni T (2004) Establishing the benefits of research experiences for undergraduates in the sciences: first findings from a three-year study. Sci Educ 88(4):493–534. https://doi.org/10.1002/sce.10131

Shamoo AE, Irving DN (1993) Accountability in research using persons with mental illness. Account Res 3(1):1–17. https://doi.org/10.1080/08989629308573826

Shaw S, Boynton PM, Greenhalgh T (2005) Research governance: where did it come from, what does it mean? J R Soc Med 98(11):496–502. https://doi.org/10.1258/jrsm.98.11.496

Speight JG (2016) Ethics in the university. Scrivener Publishing LLC. https://doi.org/10.1002/9781119346449

Stephenson GK, Jones GA, Fick E, Begin-Caouette O, Taiyeb A, Metcalfe A (2020) What’s the protocol? Canadian university research ethics boards and variations in implementing Tri-Council policy. Can J High Educ 50(1):68–81

Surbhi S (2015) Difference between morals and ethics [weblog]. 25 March 2015. Retrieved February 14, 2021. http://keydifferences.com/difference-between-morals-and-ethics.html

The Belmont Report (1979) Ethical principles and guidelines for the protection of human subjects of research. The National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research. Retrieved February 14, 2021. https://www.hhs.gov/ohrp/sites/default/files/the-belmont-report-508c_FINAL.pdf

The Singapore Statement on Research Integrity (2020) Nicholas Steneck and Tony Mayer, Co-chairs, 2nd World Conference on Research Integrity; Melissa Anderson, Chair, Organizing Committee, 3rd World Conference on Research Integrity. Retrieved February 14, 2021. https://wcrif.org/documents/327-singapore-statement-a4size/file

Warwick K (2003) Cyborg morals, cyborg values, cyborg ethics. Ethics Inf Technol 5(3):131–137. https://doi.org/10.1023/B:ETIN.0000006870.65865.cf

Weindling P (2001) The origins of informed consent: the international scientific commission on medical war crimes, and the Nuremberg code. Bull Hist Med 75(1):37–71. https://doi.org/10.1353/bhm.2001.0049

WHO (2009) Research ethics committees: basic concepts for capacity-building. Retrieved February 14, 2021. https://www.who.int/ethics/Ethics_basic_concepts_ENG.pdf

WHO (2021) Chronological list of publications. Retrieved February 14, 2021. https://www.who.int/ethics/publications/year/en/

Willison J, O’Regan K (2007) Commonly known, commonly not known, totally unknown: a framework for students becoming researchers. High Educ Res Dev 26(4):393–409. https://doi.org/10.1080/07294360701658609

Žukauskas P, Vveinhardt J, Andriukaitienė R (2018) Research ethics. In: Vveinhardt J (ed) Management culture and corporate social responsibility. IntechOpen. https://doi.org/10.5772/intechopen.70629

Acknowledgements

The authors wish to thank the organising committee of the 5th International Conference Plagiarism across Europe and Beyond, in Vilnius, Lithuania, for accepting this paper for presentation at the conference.

Funding

Not applicable, as this is an independent study that was not funded by any internal or external body.

Author information

Authors and affiliations.

School of Human Sciences, University of Derby, DE22 1, Derby, GB, UK

Shivadas Sivasubramaniam

Department of Informatics, Mendel University in Brno, Zemědělská, 1665, Brno, Czechia

Dita Henek Dlabolová

Centre for Academic Integrity in the UAE, Faculty of Engineering & Information Sciences, University of Wollongong in Dubai, Dubai, UAE

Veronika Kralikova & Zeenath Reza Khan

Contributions

The manuscript was entirely written by the corresponding author with contributions from co-authors, who contributed equally to the presentation of this paper at the 5th International Conference Plagiarism across Europe and Beyond, in Vilnius, Lithuania. All authors contributed equally to the information collection; the information was then summarised as narrative explanations by the corresponding author and Dr. Zeenath Reza Khan, and checked and verified by Dr. Dlabolová and Ms. Králíková. The author(s) read and approved the final manuscript.

Corresponding author

Correspondence to Shivadas Sivasubramaniam .

Ethics declarations

Competing interests

The authors confirm that there are no potential competing interests with other organisations.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article.

Sivasubramaniam, S., Dlabolová, D.H., Kralikova, V. et al. Assisting you to advance with ethics in research: an introduction to ethical governance and application procedures. Int J Educ Integr 17 , 14 (2021). https://doi.org/10.1007/s40979-021-00078-6

Received : 17 July 2020

Accepted : 25 April 2021

Published : 13 July 2021

DOI : https://doi.org/10.1007/s40979-021-00078-6

- Higher education

- Ethical codes

- Ethics committee

- Post-secondary education

- Institutional policies

- Research ethics

International Journal for Educational Integrity

ISSN: 1833-2595

Ethical Considerations in Research | Types & Examples

Published on 7 May 2022 by Pritha Bhandari .

Ethical considerations in research are a set of principles that guide your research designs and practices. Scientists and researchers must always adhere to a certain code of conduct when collecting data from people.

The goals of human research often include understanding real-life phenomena, studying effective treatments, investigating behaviours, and improving lives in other ways. What you decide to research and how you conduct that research involve key ethical considerations.

These considerations work to:

- Protect the rights of research participants

- Enhance research validity

- Maintain scientific integrity

Table of contents

- Why do research ethics matter?

- Getting ethical approval for your study

- Types of ethical issues

- Voluntary participation

- Informed consent

- Anonymity

- Confidentiality

- Potential for harm

- Results communication

- Examples of ethical failures

- Frequently asked questions about research ethics

Why do research ethics matter?

Research ethics matter for scientific integrity, human rights and dignity, and collaboration between science and society. These principles make sure that participation in studies is voluntary, informed, and safe for research subjects.

You’ll balance pursuing important research aims with using ethical research methods and procedures. It’s always necessary to prevent permanent or excessive harm to participants, whether inadvertent or not.

Defying research ethics will also lower the credibility of your research because it’s hard for others to trust your data if your methods are morally questionable.

Even if a research idea is valuable to society, it doesn’t justify violating the human rights or dignity of your study participants.

Getting ethical approval for your study

Before you start any study involving data collection with people, you’ll submit your research proposal to an institutional review board (IRB) .

An IRB is a committee that checks whether your research aims and research design are ethically acceptable and follow your institution’s code of conduct. It also checks that your research materials and procedures meet these standards.

If successful, you’ll receive IRB approval, and you can begin collecting data according to the approved procedures. If you want to make any changes to your procedures or materials, you’ll need to submit a modification application to the IRB for approval.

If unsuccessful, you may be asked to re-submit with modifications or your research proposal may receive a rejection. To get IRB approval, it’s important to explicitly note how you’ll tackle each of the ethical issues that may arise in your study.

Types of ethical issues

There are several ethical issues you should always pay attention to in your research design, and these issues can overlap with each other.

You’ll usually outline ways you’ll deal with each issue in your research proposal if you plan to collect data from participants.

Voluntary participation

Voluntary participation means that all research subjects are free to choose to participate without any pressure or coercion.

All participants are able to withdraw from, or leave, the study at any point without feeling an obligation to continue. Your participants don’t need to provide a reason for leaving the study.

It’s important to make it clear to participants that there are no negative consequences or repercussions to their refusal to participate. After all, they’re taking the time to help you in the research process, so you should respect their decisions without trying to change their minds.

Voluntary participation is an ethical principle protected by international law and many scientific codes of conduct.

Take special care to ensure there’s no pressure on participants when you’re working with vulnerable groups of people who may find it hard to stop the study even when they want to.

Informed consent

Informed consent refers to a situation in which all potential participants receive and understand all the information they need to decide whether they want to participate. This includes information about the study’s benefits, risks, funding, and institutional approval. For example, a consent form typically explains:

- What the study is about

- The risks and benefits of taking part

- How long the study will take

- Your supervisor’s contact information and the institution’s approval number

Usually, you’ll provide participants with a text for them to read and ask them if they have any questions. If they agree to participate, they can sign or initial the consent form. Note that this may not be sufficient for informed consent when you work with particularly vulnerable groups of people.

If you’re collecting data from people with low literacy, make sure to verbally explain the consent form to them before they agree to participate.

For participants with very limited English proficiency, you should always translate the study materials or work with an interpreter so they have all the information in their first language.

In research with children, you’ll often need informed permission for their participation from their parents or guardians. Although children cannot give informed consent, it’s best to also ask for their assent (agreement) to participate, depending on their age and maturity level.

Anonymity

Anonymity means that you don’t know who the participants are and you can’t link any individual participant to their data.

You can only guarantee anonymity by not collecting any personally identifying information – for example, names, phone numbers, email addresses, IP addresses, physical characteristics, photos, and videos.

In many cases, it may be impossible to truly anonymise data collection. For example, data collected in person or by phone cannot be considered fully anonymous because some personal identifiers (demographic information or phone numbers) are impossible to hide.

You’ll also need to collect some identifying information if you give your participants the option to withdraw their data at a later stage.

Data pseudonymisation is an alternative method where you replace identifying information about participants with pseudonymous, or fake, identifiers. The data can still be linked to participants, but it’s harder to do so because you separate personal information from the study data.
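To make the pseudonymisation idea concrete, here is a minimal Python sketch under simplifying assumptions: everything is held in memory, participants are identified by an email address, and the class and method names (`PseudonymisedStudy`, `enrol`, `record`, `withdraw`, `destroy_key`) are hypothetical rather than taken from any particular tool. The key table that links real identifiers to pseudonyms is kept apart from the study data, which is what still allows a participant to withdraw their data later.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class PseudonymisedStudy:
    """Hypothetical in-memory sketch of pseudonymised data collection.

    The key table (real identifier -> pseudonym) and the study data
    (pseudonym -> responses) are separate stores; only the study data
    would be analysed or shared.
    """

    key_table: dict[str, str] = field(default_factory=dict)
    study_data: dict[str, list[dict]] = field(default_factory=dict)

    def enrol(self, identifier: str) -> str:
        """Return the participant's pseudonym, creating a random one if needed."""
        if identifier not in self.key_table:
            pseudonym = "P-" + secrets.token_hex(4)
            while pseudonym in self.study_data:  # regenerate on the rare collision
                pseudonym = "P-" + secrets.token_hex(4)
            self.key_table[identifier] = pseudonym
            self.study_data[pseudonym] = []
        return self.key_table[identifier]

    def record(self, identifier: str, response: dict) -> None:
        """Store a response under the pseudonym only; the identifier is not stored with it."""
        self.study_data[self.enrol(identifier)].append(response)

    def withdraw(self, identifier: str) -> int:
        """Delete all data for a withdrawing participant; return how many records were removed."""
        pseudonym = self.key_table.pop(identifier, None)
        if pseudonym is None:
            return 0  # unknown participant, or the key has already been destroyed
        return len(self.study_data.pop(pseudonym, []))

    def destroy_key(self) -> None:
        """Delete the key table so pseudonyms can no longer be linked back to people."""
        self.key_table.clear()


if __name__ == "__main__":
    study = PseudonymisedStudy()
    study.record("alice@example.org", {"q1": 4})
    study.record("alice@example.org", {"q2": 2})
    study.record("bob@example.org", {"q1": 5})
    print(len(study.study_data))                # 2
    print(study.withdraw("alice@example.org"))  # 2
    print(len(study.study_data))                # 1
```

Random pseudonyms are used here rather than hashes of the identifiers, because an unsalted hash of a guessable value such as an email address can often be reversed by hashing candidate addresses. In a real project the key table would be stored separately, with restricted access, following your institution’s data protection policy. Deleting it (as `destroy_key` sketches) breaks the link between participants and their data, after which withdrawal is no longer possible.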

Confidentiality

Confidentiality means that you know who the participants are, but you remove all identifying information from your report.

All participants have a right to privacy, so you should protect their personal data for as long as you store or use it. Even when you can’t collect data anonymously, you should secure confidentiality whenever you can.

Some research designs aren’t conducive to confidentiality, but it’s important to make all attempts and inform participants of the risks involved.

Potential for harm

As a researcher, you have to consider all possible sources of harm to participants. Harm can come in many different forms.

- Psychological harm: Sensitive questions or tasks may trigger negative emotions such as shame or anxiety.

- Social harm: Participation can involve social risks, public embarrassment, or stigma.

- Physical harm: Pain or injury can result from the study procedures.

- Legal harm: Reporting sensitive data could lead to legal risks or a breach of privacy.

It’s best to consider every possible source of harm in your study, as well as concrete ways to mitigate them. Involve your supervisor to discuss steps for harm reduction.

Make sure to disclose all possible risks of harm to participants before the study to get informed consent. If there is a risk of harm, prepare to provide participants with resources, counselling, or medical services if needed.

For example, if a survey asks about sensitive topics, some questions may bring up negative emotions, so you should inform participants about the sensitive nature of the survey and assure them that their responses will be confidential.

Results communication

The way you communicate your research results can sometimes involve ethical issues. Good science communication is honest, reliable, and credible. It’s best to make your results as transparent as possible.

Take steps to actively avoid plagiarism and research misconduct wherever possible.

Plagiarism

Plagiarism means submitting others’ works as your own. Although it can be unintentional, copying someone else’s work without proper credit amounts to stealing. It’s an ethical problem in research communication because you may benefit by harming other researchers.

Self-plagiarism is when you republish or re-submit parts of your own papers or reports without properly citing your original work.

This is problematic because you may benefit from presenting your ideas as new and original even though they’ve already been published elsewhere in the past. You may also be infringing on your previous publisher’s copyright, violating an ethical code, or wasting time and resources by doing so.

In extreme cases of self-plagiarism, entire datasets or papers are sometimes duplicated. These are major ethical violations because they can skew research findings if taken as original data.

For example, you might notice that two published studies have similar characteristics even though they are from different years: their sample sizes, locations, treatments, and results are highly similar, and the studies share one author in common. This pattern may indicate that the same dataset has been published twice.

Research misconduct

Research misconduct means making up or falsifying data, manipulating data analyses, or misrepresenting results in research reports. It’s a form of academic fraud.

These actions are committed intentionally and can have serious consequences; research misconduct is not a simple mistake or a point of disagreement about data analyses.

Research misconduct is a serious ethical issue because it can undermine scientific integrity and institutional credibility. It leads to a waste of funding and resources that could have been used for alternative research.

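A well-known example is a 1998 study by Andrew Wakefield and colleagues, published in The Lancet, that claimed a link between the MMR vaccine and autism. Later investigations revealed that the authors fabricated and manipulated their data to show this nonexistent link. Wakefield also failed to disclose important conflicts of interest, and his medical licence was taken away.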

This fraudulent work sparked vaccine hesitancy among parents and caregivers. The rate of MMR vaccinations in children fell sharply, and measles outbreaks became more common due to a lack of herd immunity.

Research scandals with ethical failures are littered throughout history, but some took place not that long ago.

Some scientists in positions of power have historically mistreated or even abused research participants in order to investigate research problems at any cost. These participants were often prisoners, people under the researchers’ care, or others who trusted the researchers to treat them with dignity.

To demonstrate the importance of research ethics, we’ll briefly review two research studies that violated human rights in modern history.

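During World War II, Nazi doctors performed experiments on concentration camp prisoners without their consent. These experiments were inhumane and in many cases resulted in trauma, permanent disability, or death.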

After several Nazi doctors were put on trial for their crimes, the Nuremberg Code was developed in 1947 to establish a new ethical standard for human experimentation in medical research.

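In the Tuskegee syphilis study, run by the US Public Health Service from 1932 to 1972, about 600 African American men were recruited with the promise of free medical care. In reality, the goal was to study the effects of syphilis when left untreated, and the researchers never informed participants of their diagnoses or the research aims.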

Although participants experienced severe health problems, including blindness and other complications, the researchers only pretended to provide medical care.

When treatment became possible in 1943, 11 years after the study began, none of the participants were offered it, despite their health conditions and high risk of death.

Ethical failures like these resulted in severe harm to participants, wasted resources, and lower trust in science and scientists. This is why all research institutions have strict ethical guidelines for performing research.

Ethical considerations in research are a set of principles that guide your research designs and practices. These principles include voluntary participation, informed consent, anonymity, confidentiality, potential for harm, and results communication.

Scientists and researchers must always adhere to a code of conduct when collecting data from others.

These considerations protect the rights of research participants, enhance research validity, and maintain scientific integrity.

Research ethics matter for scientific integrity, human rights and dignity, and collaboration between science and society. These principles make sure that participation in studies is voluntary, informed, and safe.

Anonymity means you don’t know who the participants are, while confidentiality means you know who they are but remove identifying information from your research report. Both are important ethical considerations.

You can only guarantee anonymity by not collecting any personally identifying information – for example, names, phone numbers, email addresses, IP addresses, physical characteristics, photos, or videos.

You can keep data confidential by using aggregate information in your research report, so that you only refer to groups of participants rather than individuals.
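The two practices above can be illustrated with a short Python sketch, assuming participant records are stored as dictionaries. The identifier field names, the `drop_identifiers` and `aggregate_by_group` helpers, and the minimum group size of 5 are hypothetical choices, not a standard. The first function removes direct identifiers; the second reports only group-level results and withholds any group so small that its members might be identified. Note that removing direct identifiers alone does not make data anonymous, because combinations of remaining details such as age, location, and occupation can still point to an individual.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical list of direct identifiers; a real study would take this from
# its own data management plan or ethics approval.
IDENTIFYING_FIELDS = {"name", "email", "phone", "ip_address"}


def drop_identifiers(record: dict) -> dict:
    """Return a copy of a participant record without direct identifiers."""
    return {key: value for key, value in record.items() if key not in IDENTIFYING_FIELDS}


def aggregate_by_group(records: list[dict], group_key: str, value_key: str,
                       min_group_size: int = 5) -> dict:
    """Summarise a value per group, suppressing groups smaller than min_group_size."""
    groups = defaultdict(list)
    for record in records:
        groups[record[group_key]].append(record[value_key])
    summary = {}
    for group, values in groups.items():
        if len(values) >= min_group_size:
            summary[group] = {"n": len(values), "mean": round(mean(values), 2)}
        else:
            # Too few people to report without risking re-identification.
            summary[group] = f"suppressed (n < {min_group_size})"
    return summary


# Made-up example: five participants aged 18-29 and two aged 30-44.
raw = [
    {"name": f"Participant {i}", "email": f"p{i}@example.org",
     "age_group": "18-29" if i < 5 else "30-44", "score": i + 1}
    for i in range(7)
]
clean = [drop_identifiers(record) for record in raw]
summary = aggregate_by_group(clean, "age_group", "score")
print(summary)
```

The threshold is a design choice: a higher `min_group_size` lowers the risk of identifying someone but hides more results, so the appropriate value depends on the study and its approval conditions.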


Cite this Scribbr article


Bhandari, P. (2022, May 07). Ethical Considerations in Research | Types & Examples. Scribbr. Retrieved 31 May 2024, from https://www.scribbr.co.uk/research-methods/ethical-considerations/


Pritha Bhandari


Ethical Considerations – Types, Examples and Writing Guide


Ethical Considerations

Ethical considerations in research refer to the principles and guidelines that researchers must follow to ensure that their studies are conducted in an ethical and responsible manner. These considerations are designed to protect the rights, safety, and well-being of research participants, as well as the integrity and credibility of the research itself.

Some of the key ethical considerations in research include:

- Informed consent: Researchers must obtain informed consent from study participants, which means they must inform participants about the study’s purpose, procedures, risks, benefits, and their right to withdraw at any time.

- Privacy and confidentiality: Researchers must ensure that participants’ privacy and confidentiality are protected. This means that personal information should be kept confidential and not shared without the participant’s consent.

- Harm reduction: Researchers must ensure that the study does not harm the participants physically or psychologically. They must take steps to minimize the risks associated with the study.

- Fairness and equity: Researchers must ensure that the study does not discriminate against any particular group or individual. They should treat all participants equally and fairly.

- Use of deception: Researchers must use deception only if it is necessary to achieve the study’s objectives. They must inform participants of the deception as soon as possible.

- Use of vulnerable populations: Researchers must be especially cautious when working with vulnerable populations, such as children, pregnant women, prisoners, and individuals with cognitive or intellectual disabilities.

- Conflict of interest: Researchers must disclose any potential conflicts of interest that may affect the study’s integrity. This includes financial or personal relationships that could influence the study’s results.

- Data manipulation: Researchers must not manipulate data to support a particular hypothesis or agenda. They should report the results of the study objectively, even if the findings are not consistent with their expectations.

- Intellectual property: Researchers must respect intellectual property rights and give credit to previous studies and research.

- Cultural sensitivity: Researchers must be sensitive to the cultural norms and beliefs of the participants. They should avoid imposing their values and beliefs on the participants and should be respectful of their cultural practices.
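The informed-consent principle, including the right to withdraw at any time, has a practical consequence for data handling: analyses should draw only on participants whose consent is currently valid. The Python sketch below shows one way to enforce that, assuming consent status is tracked per participant ID. The `ConsentRecord` class and `usable_responses` function are hypothetical. The sketch takes the strict approach of excluding all data from anyone who has withdrawn; whether data collected before withdrawal may be retained depends on what participants were told and on the study’s ethics approval.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ConsentRecord:
    """Hypothetical record of one participant's consent status."""
    participant_id: str
    consented_on: Optional[date]          # None means consent was never given
    withdrawn_on: Optional[date] = None   # set when the participant withdraws

    def may_use_data(self) -> bool:
        """Data may be used only if consent was given and has not been withdrawn."""
        return self.consented_on is not None and self.withdrawn_on is None


def usable_responses(responses: dict[str, dict],
                     consents: list[ConsentRecord]) -> dict[str, dict]:
    """Keep responses only from participants whose consent is currently valid."""
    allowed = {c.participant_id for c in consents if c.may_use_data()}
    return {pid: answers for pid, answers in responses.items() if pid in allowed}


# Made-up example: P02 later withdraws and P03 never consented.
consents = [
    ConsentRecord("P01", date(2024, 3, 1)),
    ConsentRecord("P02", date(2024, 3, 2), withdrawn_on=date(2024, 4, 10)),
    ConsentRecord("P03", None),
]
responses = {"P01": {"q1": 4}, "P02": {"q1": 2}, "P03": {"q1": 5}}
kept = usable_responses(responses, consents)
print(sorted(kept))
```

Filtering on consent status at analysis time, rather than relying on someone remembering to delete rows, means a withdrawal recorded in one place is respected everywhere the data are used.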

Types of Ethical Considerations

The main types of ethical considerations are as follows:

Research Ethics

This includes ethical principles and guidelines that govern research involving human or animal subjects, ensuring that the research is conducted in an ethical and responsible manner.

Business Ethics

This refers to ethical principles and standards that guide business practices and decision-making, such as transparency, honesty, fairness, and social responsibility.

Medical Ethics

This refers to ethical principles and standards that govern the practice of medicine, including the duty to protect patient autonomy, informed consent, confidentiality, and non-maleficence.

Environmental Ethics

This involves ethical principles and values that guide our interactions with the natural world, including the obligation to protect the environment, minimize harm, and promote sustainability.

Legal Ethics

This involves ethical principles and standards that guide the conduct of legal professionals, including issues such as confidentiality, conflicts of interest, and professional competence.

Social Ethics

This involves ethical principles and values that guide our interactions with other individuals and society as a whole, including issues such as justice, fairness, and human rights.

Information Ethics

This involves ethical principles and values that govern the use and dissemination of information, including issues such as privacy, accuracy, and intellectual property.

Cultural Ethics

This involves ethical principles and values that govern the relationship between different cultures and communities, including issues such as respect for diversity, cultural sensitivity, and inclusivity.

Technological Ethics

This refers to ethical principles and guidelines that govern the development, use, and impact of technology, including issues such as privacy, security, and social responsibility.

Journalism Ethics

This involves ethical principles and standards that guide the practice of journalism, including issues such as accuracy, fairness, and the public interest.

Educational Ethics

This refers to ethical principles and standards that guide the practice of education, including issues such as academic integrity, fairness, and respect for diversity.

Political Ethics

This involves ethical principles and values that guide political decision-making and behavior, including issues such as accountability, transparency, and the protection of civil liberties.

Professional Ethics

This refers to ethical principles and standards that guide the conduct of professionals in various fields, including issues such as honesty, integrity, and competence.

Personal Ethics

This involves ethical principles and values that guide individual behavior and decision-making, including issues such as personal responsibility, honesty, and respect for others.

Global Ethics

This involves ethical principles and values that guide our interactions with other nations and the global community, including issues such as human rights, environmental protection, and social justice.

Applications of Ethical Considerations

Ethical considerations are important in many areas of society, including medicine, business, law, and technology. Here are some specific applications of ethical considerations:

- Medical research: Ethical considerations are crucial in medical research, particularly when human subjects are involved. Researchers must ensure that their studies are conducted in a way that does not harm participants and that participants give informed consent before participating.

- Business practices: Ethical considerations are also important in business, where companies must make decisions that are socially responsible and avoid activities that are harmful to society. For example, companies must ensure that their products are safe for consumers and that they do not engage in exploitative labor practices.

- Environmental protection: Ethical considerations play a crucial role in environmental protection, as companies and governments must weigh the benefits of economic development against the potential harm to the environment. Decisions about land use, resource allocation, and pollution must be made in an ethical manner that takes into account the long-term consequences for the planet and future generations.

- Technology development: As technology continues to advance rapidly, ethical considerations become increasingly important in areas such as artificial intelligence, robotics, and genetic engineering. Developers must ensure that their creations do not harm humans or the environment and that they are developed in a way that is fair and equitable.

- Legal system: The legal system relies on ethical considerations to ensure that justice is served and that individuals are treated fairly. Lawyers and judges must abide by ethical standards to maintain the integrity of the legal system and to protect the rights of all individuals involved.

Examples of Ethical Considerations

Here are a few examples of ethical considerations in different contexts:

- In healthcare: A doctor must ensure that they provide the best possible care to their patients and avoid causing them harm. They must respect the autonomy of their patients, and obtain informed consent before administering any treatment or procedure. They must also ensure that they maintain patient confidentiality and avoid any conflicts of interest.

- In the workplace: An employer must ensure that they treat their employees fairly and with respect, provide them with a safe working environment, and pay them a fair wage. They must also avoid any discrimination based on race, gender, religion, or any other characteristic protected by law.

- In the media: Journalists must ensure that they report the news accurately and without bias. They must respect the privacy of individuals and avoid causing harm or distress. They must also be transparent about their sources and avoid any conflicts of interest.

- In research: Researchers must ensure that they conduct their studies ethically and with integrity. They must obtain informed consent from participants, protect their privacy, and avoid any harm or discomfort. They must also ensure that their findings are reported accurately and without bias.

- In personal relationships: People must ensure that they treat others with respect and kindness, and avoid causing harm or distress. They must respect the autonomy of others and avoid any actions that would be considered unethical, such as lying or cheating. They must also respect the confidentiality of others and maintain their privacy.

How to Write Ethical Considerations