Have a language expert improve your writing

Run a free plagiarism check in 10 minutes, generate accurate citations for free.

- Knowledge Base

Methodology

- Quasi-Experimental Design | Definition, Types & Examples

Quasi-Experimental Design | Definition, Types & Examples

Published on July 31, 2020 by Lauren Thomas . Revised on January 22, 2024.

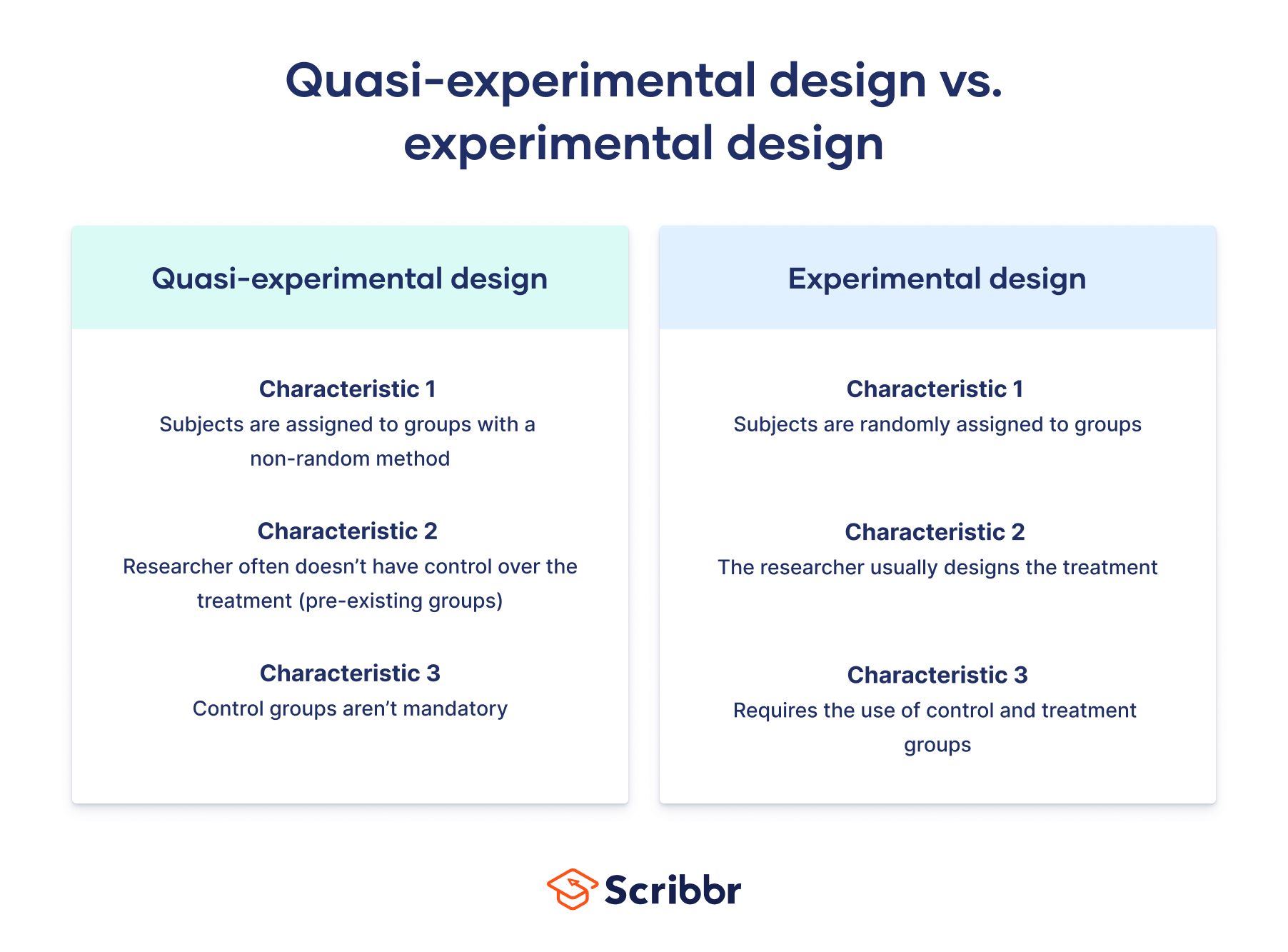

Like a true experiment , a quasi-experimental design aims to establish a cause-and-effect relationship between an independent and dependent variable .

However, unlike a true experiment, a quasi-experiment does not rely on random assignment . Instead, subjects are assigned to groups based on non-random criteria.

Quasi-experimental design is a useful tool in situations where true experiments cannot be used for ethical or practical reasons.

Table of contents

Differences between quasi-experiments and true experiments, types of quasi-experimental designs, when to use quasi-experimental design, advantages and disadvantages, other interesting articles, frequently asked questions about quasi-experimental designs.

There are several common differences between true and quasi-experimental designs.

| True experimental design | Quasi-experimental design | |

|---|---|---|

| Assignment to treatment | The researcher subjects to control and treatment groups. | Some other, method is used to assign subjects to groups. |

| Control over treatment | The researcher usually . | The researcher often , but instead studies pre-existing groups that received different treatments after the fact. |

| Use of | Requires the use of . | Control groups are not required (although they are commonly used). |

Example of a true experiment vs a quasi-experiment

However, for ethical reasons, the directors of the mental health clinic may not give you permission to randomly assign their patients to treatments. In this case, you cannot run a true experiment.

Instead, you can use a quasi-experimental design.

You can use these pre-existing groups to study the symptom progression of the patients treated with the new therapy versus those receiving the standard course of treatment.

Receive feedback on language, structure, and formatting

Professional editors proofread and edit your paper by focusing on:

- Academic style

- Vague sentences

- Style consistency

See an example

Many types of quasi-experimental designs exist. Here we explain three of the most common types: nonequivalent groups design, regression discontinuity, and natural experiments.

Nonequivalent groups design

In nonequivalent group design, the researcher chooses existing groups that appear similar, but where only one of the groups experiences the treatment.

In a true experiment with random assignment , the control and treatment groups are considered equivalent in every way other than the treatment. But in a quasi-experiment where the groups are not random, they may differ in other ways—they are nonequivalent groups .

When using this kind of design, researchers try to account for any confounding variables by controlling for them in their analysis or by choosing groups that are as similar as possible.

This is the most common type of quasi-experimental design.

Regression discontinuity

Many potential treatments that researchers wish to study are designed around an essentially arbitrary cutoff, where those above the threshold receive the treatment and those below it do not.

Near this threshold, the differences between the two groups are often so minimal as to be nearly nonexistent. Therefore, researchers can use individuals just below the threshold as a control group and those just above as a treatment group.

However, since the exact cutoff score is arbitrary, the students near the threshold—those who just barely pass the exam and those who fail by a very small margin—tend to be very similar, with the small differences in their scores mostly due to random chance. You can therefore conclude that any outcome differences must come from the school they attended.

Natural experiments

In both laboratory and field experiments, researchers normally control which group the subjects are assigned to. In a natural experiment, an external event or situation (“nature”) results in the random or random-like assignment of subjects to the treatment group.

Even though some use random assignments, natural experiments are not considered to be true experiments because they are observational in nature.

Although the researchers have no control over the independent variable , they can exploit this event after the fact to study the effect of the treatment.

However, as they could not afford to cover everyone who they deemed eligible for the program, they instead allocated spots in the program based on a random lottery.

Although true experiments have higher internal validity , you might choose to use a quasi-experimental design for ethical or practical reasons.

Sometimes it would be unethical to provide or withhold a treatment on a random basis, so a true experiment is not feasible. In this case, a quasi-experiment can allow you to study the same causal relationship without the ethical issues.

The Oregon Health Study is a good example. It would be unethical to randomly provide some people with health insurance but purposely prevent others from receiving it solely for the purposes of research.

However, since the Oregon government faced financial constraints and decided to provide health insurance via lottery, studying this event after the fact is a much more ethical approach to studying the same problem.

True experimental design may be infeasible to implement or simply too expensive, particularly for researchers without access to large funding streams.

At other times, too much work is involved in recruiting and properly designing an experimental intervention for an adequate number of subjects to justify a true experiment.

In either case, quasi-experimental designs allow you to study the question by taking advantage of data that has previously been paid for or collected by others (often the government).

Quasi-experimental designs have various pros and cons compared to other types of studies.

- Higher external validity than most true experiments, because they often involve real-world interventions instead of artificial laboratory settings.

- Higher internal validity than other non-experimental types of research, because they allow you to better control for confounding variables than other types of studies do.

- Lower internal validity than true experiments—without randomization, it can be difficult to verify that all confounding variables have been accounted for.

- The use of retrospective data that has already been collected for other purposes can be inaccurate, incomplete or difficult to access.

Here's why students love Scribbr's proofreading services

Discover proofreading & editing

If you want to know more about statistics , methodology , or research bias , make sure to check out some of our other articles with explanations and examples.

- Normal distribution

- Degrees of freedom

- Null hypothesis

- Discourse analysis

- Control groups

- Mixed methods research

- Non-probability sampling

- Quantitative research

- Ecological validity

Research bias

- Rosenthal effect

- Implicit bias

- Cognitive bias

- Selection bias

- Negativity bias

- Status quo bias

A quasi-experiment is a type of research design that attempts to establish a cause-and-effect relationship. The main difference with a true experiment is that the groups are not randomly assigned.

In experimental research, random assignment is a way of placing participants from your sample into different groups using randomization. With this method, every member of the sample has a known or equal chance of being placed in a control group or an experimental group.

Quasi-experimental design is most useful in situations where it would be unethical or impractical to run a true experiment .

Quasi-experiments have lower internal validity than true experiments, but they often have higher external validity as they can use real-world interventions instead of artificial laboratory settings.

Cite this Scribbr article

If you want to cite this source, you can copy and paste the citation or click the “Cite this Scribbr article” button to automatically add the citation to our free Citation Generator.

Thomas, L. (2024, January 22). Quasi-Experimental Design | Definition, Types & Examples. Scribbr. Retrieved September 9, 2024, from https://www.scribbr.com/methodology/quasi-experimental-design/

Is this article helpful?

Lauren Thomas

Other students also liked, guide to experimental design | overview, steps, & examples, random assignment in experiments | introduction & examples, control variables | what are they & why do they matter, "i thought ai proofreading was useless but..".

I've been using Scribbr for years now and I know it's a service that won't disappoint. It does a good job spotting mistakes”

- Skip to secondary menu

- Skip to main content

- Skip to primary sidebar

Statistics By Jim

Making statistics intuitive

Quasi Experimental Design Overview & Examples

By Jim Frost Leave a Comment

What is a Quasi Experimental Design?

A quasi experimental design is a method for identifying causal relationships that does not randomly assign participants to the experimental groups. Instead, researchers use a non-random process. For example, they might use an eligibility cutoff score or preexisting groups to determine who receives the treatment.

Quasi-experimental research is a design that closely resembles experimental research but is different. The term “quasi” means “resembling,” so you can think of it as a cousin to actual experiments. In these studies, researchers can manipulate an independent variable — that is, they change one factor to see what effect it has. However, unlike true experimental research, participants are not randomly assigned to different groups.

Learn more about Experimental Designs: Definition & Types .

When to Use Quasi-Experimental Design

Researchers typically use a quasi-experimental design because they can’t randomize due to practical or ethical concerns. For example:

- Practical Constraints : A school interested in testing a new teaching method can only implement it in preexisting classes and cannot randomly assign students.

- Ethical Concerns : A medical study might not be able to randomly assign participants to a treatment group for an experimental medication when they are already taking a proven drug.

Quasi-experimental designs also come in handy when researchers want to study the effects of naturally occurring events, like policy changes or environmental shifts, where they can’t control who is exposed to the treatment.

Quasi-experimental designs occupy a unique position in the spectrum of research methodologies, sitting between observational studies and true experiments. This middle ground offers a blend of both worlds, addressing some limitations of purely observational studies while navigating the constraints often accompanying true experiments.

A significant advantage of quasi-experimental research over purely observational studies and correlational research is that it addresses the issue of directionality, determining which variable is the cause and which is the effect. In quasi-experiments, an intervention typically occurs during the investigation, and the researchers record outcomes before and after it, increasing the confidence that it causes the observed changes.

However, it’s crucial to recognize its limitations as well. Controlling confounding variables is a larger concern for a quasi-experimental design than a true experiment because it lacks random assignment.

In sum, quasi-experimental designs offer a valuable research approach when random assignment is not feasible, providing a more structured and controlled framework than observational studies while acknowledging and attempting to address potential confounders.

Types of Quasi-Experimental Designs and Examples

Quasi-experimental studies use various methods, depending on the scenario.

Natural Experiments

This design uses naturally occurring events or changes to create the treatment and control groups. Researchers compare outcomes between those whom the event affected and those it did not affect. Analysts use statistical controls to account for confounders that the researchers must also measure.

Natural experiments are related to observational studies, but they allow for a clearer causality inference because the external event or policy change provides both a form of quasi-random group assignment and a definite start date for the intervention.

For example, in a natural experiment utilizing a quasi-experimental design, researchers study the impact of a significant economic policy change on small business growth. The policy is implemented in one state but not in neighboring states. This scenario creates an unplanned experimental setup, where the state with the new policy serves as the treatment group, and the neighboring states act as the control group.

Researchers are primarily interested in small business growth rates but need to record various confounders that can impact growth rates. Hence, they record state economic indicators, investment levels, and employment figures. By recording these metrics across the states, they can include them in the model as covariates and control them statistically. This method allows researchers to estimate differences in small business growth due to the policy itself, separate from the various confounders.

Nonequivalent Groups Design

This method involves matching existing groups that are similar but not identical. Researchers attempt to find groups that are as equivalent as possible, particularly for factors likely to affect the outcome.

For instance, researchers use a nonequivalent groups quasi-experimental design to evaluate the effectiveness of a new teaching method in improving students’ mathematics performance. A school district considering the teaching method is planning the study. Students are already divided into schools, preventing random assignment.

The researchers matched two schools with similar demographics, baseline academic performance, and resources. The school using the traditional methodology is the control, while the other uses the new approach. Researchers are evaluating differences in educational outcomes between the two methods.

They perform a pretest to identify differences between the schools that might affect the outcome and include them as covariates to control for confounding. They also record outcomes before and after the intervention to have a larger context for the changes they observe.

Regression Discontinuity

This process assigns subjects to a treatment or control group based on a predetermined cutoff point (e.g., a test score). The analysis primarily focuses on participants near the cutoff point, as they are likely similar except for the treatment received. By comparing participants just above and below the cutoff, the design controls for confounders that vary smoothly around the cutoff.

For example, in a regression discontinuity quasi-experimental design focusing on a new medical treatment for depression, researchers use depression scores as the cutoff point. Individuals with depression scores just above a certain threshold are assigned to receive the latest treatment, while those just below the threshold do not receive it. This method creates two closely matched groups: one that barely qualifies for treatment and one that barely misses out.

By comparing the mental health outcomes of these two groups over time, researchers can assess the effectiveness of the new treatment. The assumption is that the only significant difference between the groups is whether they received the treatment, thereby isolating its impact on depression outcomes.

Controlling Confounders in a Quasi-Experimental Design

Accounting for confounding variables is a challenging but essential task for a quasi-experimental design.

In a true experiment, the random assignment process equalizes confounders across the groups to nullify their overall effect. It’s the gold standard because it works on all confounders, known and unknown.

Unfortunately, the lack of random assignment can allow differences between the groups to exist before the intervention. These confounding factors might ultimately explain the results rather than the intervention.

Consequently, researchers must use other methods to equalize the groups roughly using matching and cutoff values or statistically adjust for preexisting differences they measure to reduce the impact of confounders.

A key strength of quasi-experiments is their frequent use of “pre-post testing.” This approach involves conducting initial tests before collecting data to check for preexisting differences between groups that could impact the study’s outcome. By identifying these variables early on and including them as covariates, researchers can more effectively control potential confounders in their statistical analysis.

Additionally, researchers frequently track outcomes before and after the intervention to better understand the context for changes they observe.

Statisticians consider these methods to be less effective than randomization. Hence, quasi-experiments fall somewhere in the middle when it comes to internal validity , or how well the study can identify causal relationships versus mere correlation . They’re more conclusive than correlational studies but not as solid as true experiments.

In conclusion, quasi-experimental designs offer researchers a versatile and practical approach when random assignment is not feasible. This methodology bridges the gap between controlled experiments and observational studies, providing a valuable tool for investigating cause-and-effect relationships in real-world settings. Researchers can address ethical and logistical constraints by understanding and leveraging the different types of quasi-experimental designs while still obtaining insightful and meaningful results.

Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design & analysis issues in field settings . Boston, MA: Houghton Mifflin

Share this:

Reader Interactions

Comments and questions cancel reply.

- Privacy Policy

Home » Quasi-Experimental Research Design – Types, Methods

Quasi-Experimental Research Design – Types, Methods

Table of Contents

Quasi-Experimental Design

Quasi-experimental design is a research method that seeks to evaluate the causal relationships between variables, but without the full control over the independent variable(s) that is available in a true experimental design.

In a quasi-experimental design, the researcher uses an existing group of participants that is not randomly assigned to the experimental and control groups. Instead, the groups are selected based on pre-existing characteristics or conditions, such as age, gender, or the presence of a certain medical condition.

Types of Quasi-Experimental Design

There are several types of quasi-experimental designs that researchers use to study causal relationships between variables. Here are some of the most common types:

Non-Equivalent Control Group Design

This design involves selecting two groups of participants that are similar in every way except for the independent variable(s) that the researcher is testing. One group receives the treatment or intervention being studied, while the other group does not. The two groups are then compared to see if there are any significant differences in the outcomes.

Interrupted Time-Series Design

This design involves collecting data on the dependent variable(s) over a period of time, both before and after an intervention or event. The researcher can then determine whether there was a significant change in the dependent variable(s) following the intervention or event.

Pretest-Posttest Design

This design involves measuring the dependent variable(s) before and after an intervention or event, but without a control group. This design can be useful for determining whether the intervention or event had an effect, but it does not allow for control over other factors that may have influenced the outcomes.

Regression Discontinuity Design

This design involves selecting participants based on a specific cutoff point on a continuous variable, such as a test score. Participants on either side of the cutoff point are then compared to determine whether the intervention or event had an effect.

Natural Experiments

This design involves studying the effects of an intervention or event that occurs naturally, without the researcher’s intervention. For example, a researcher might study the effects of a new law or policy that affects certain groups of people. This design is useful when true experiments are not feasible or ethical.

Data Analysis Methods

Here are some data analysis methods that are commonly used in quasi-experimental designs:

Descriptive Statistics

This method involves summarizing the data collected during a study using measures such as mean, median, mode, range, and standard deviation. Descriptive statistics can help researchers identify trends or patterns in the data, and can also be useful for identifying outliers or anomalies.

Inferential Statistics

This method involves using statistical tests to determine whether the results of a study are statistically significant. Inferential statistics can help researchers make generalizations about a population based on the sample data collected during the study. Common statistical tests used in quasi-experimental designs include t-tests, ANOVA, and regression analysis.

Propensity Score Matching

This method is used to reduce bias in quasi-experimental designs by matching participants in the intervention group with participants in the control group who have similar characteristics. This can help to reduce the impact of confounding variables that may affect the study’s results.

Difference-in-differences Analysis

This method is used to compare the difference in outcomes between two groups over time. Researchers can use this method to determine whether a particular intervention has had an impact on the target population over time.

Interrupted Time Series Analysis

This method is used to examine the impact of an intervention or treatment over time by comparing data collected before and after the intervention or treatment. This method can help researchers determine whether an intervention had a significant impact on the target population.

Regression Discontinuity Analysis

This method is used to compare the outcomes of participants who fall on either side of a predetermined cutoff point. This method can help researchers determine whether an intervention had a significant impact on the target population.

Steps in Quasi-Experimental Design

Here are the general steps involved in conducting a quasi-experimental design:

- Identify the research question: Determine the research question and the variables that will be investigated.

- Choose the design: Choose the appropriate quasi-experimental design to address the research question. Examples include the pretest-posttest design, non-equivalent control group design, regression discontinuity design, and interrupted time series design.

- Select the participants: Select the participants who will be included in the study. Participants should be selected based on specific criteria relevant to the research question.

- Measure the variables: Measure the variables that are relevant to the research question. This may involve using surveys, questionnaires, tests, or other measures.

- Implement the intervention or treatment: Implement the intervention or treatment to the participants in the intervention group. This may involve training, education, counseling, or other interventions.

- Collect data: Collect data on the dependent variable(s) before and after the intervention. Data collection may also include collecting data on other variables that may impact the dependent variable(s).

- Analyze the data: Analyze the data collected to determine whether the intervention had a significant impact on the dependent variable(s).

- Draw conclusions: Draw conclusions about the relationship between the independent and dependent variables. If the results suggest a causal relationship, then appropriate recommendations may be made based on the findings.

Quasi-Experimental Design Examples

Here are some examples of real-time quasi-experimental designs:

- Evaluating the impact of a new teaching method: In this study, a group of students are taught using a new teaching method, while another group is taught using the traditional method. The test scores of both groups are compared before and after the intervention to determine whether the new teaching method had a significant impact on student performance.

- Assessing the effectiveness of a public health campaign: In this study, a public health campaign is launched to promote healthy eating habits among a targeted population. The behavior of the population is compared before and after the campaign to determine whether the intervention had a significant impact on the target behavior.

- Examining the impact of a new medication: In this study, a group of patients is given a new medication, while another group is given a placebo. The outcomes of both groups are compared to determine whether the new medication had a significant impact on the targeted health condition.

- Evaluating the effectiveness of a job training program : In this study, a group of unemployed individuals is enrolled in a job training program, while another group is not enrolled in any program. The employment rates of both groups are compared before and after the intervention to determine whether the training program had a significant impact on the employment rates of the participants.

- Assessing the impact of a new policy : In this study, a new policy is implemented in a particular area, while another area does not have the new policy. The outcomes of both areas are compared before and after the intervention to determine whether the new policy had a significant impact on the targeted behavior or outcome.

Applications of Quasi-Experimental Design

Here are some applications of quasi-experimental design:

- Educational research: Quasi-experimental designs are used to evaluate the effectiveness of educational interventions, such as new teaching methods, technology-based learning, or educational policies.

- Health research: Quasi-experimental designs are used to evaluate the effectiveness of health interventions, such as new medications, public health campaigns, or health policies.

- Social science research: Quasi-experimental designs are used to investigate the impact of social interventions, such as job training programs, welfare policies, or criminal justice programs.

- Business research: Quasi-experimental designs are used to evaluate the impact of business interventions, such as marketing campaigns, new products, or pricing strategies.

- Environmental research: Quasi-experimental designs are used to evaluate the impact of environmental interventions, such as conservation programs, pollution control policies, or renewable energy initiatives.

When to use Quasi-Experimental Design

Here are some situations where quasi-experimental designs may be appropriate:

- When the research question involves investigating the effectiveness of an intervention, policy, or program : In situations where it is not feasible or ethical to randomly assign participants to intervention and control groups, quasi-experimental designs can be used to evaluate the impact of the intervention on the targeted outcome.

- When the sample size is small: In situations where the sample size is small, it may be difficult to randomly assign participants to intervention and control groups. Quasi-experimental designs can be used to investigate the impact of an intervention without requiring a large sample size.

- When the research question involves investigating a naturally occurring event : In some situations, researchers may be interested in investigating the impact of a naturally occurring event, such as a natural disaster or a major policy change. Quasi-experimental designs can be used to evaluate the impact of the event on the targeted outcome.

- When the research question involves investigating a long-term intervention: In situations where the intervention or program is long-term, it may be difficult to randomly assign participants to intervention and control groups for the entire duration of the intervention. Quasi-experimental designs can be used to evaluate the impact of the intervention over time.

- When the research question involves investigating the impact of a variable that cannot be manipulated : In some situations, it may not be possible or ethical to manipulate a variable of interest. Quasi-experimental designs can be used to investigate the relationship between the variable and the targeted outcome.

Purpose of Quasi-Experimental Design

The purpose of quasi-experimental design is to investigate the causal relationship between two or more variables when it is not feasible or ethical to conduct a randomized controlled trial (RCT). Quasi-experimental designs attempt to emulate the randomized control trial by mimicking the control group and the intervention group as much as possible.

The key purpose of quasi-experimental design is to evaluate the impact of an intervention, policy, or program on a targeted outcome while controlling for potential confounding factors that may affect the outcome. Quasi-experimental designs aim to answer questions such as: Did the intervention cause the change in the outcome? Would the outcome have changed without the intervention? And was the intervention effective in achieving its intended goals?

Quasi-experimental designs are useful in situations where randomized controlled trials are not feasible or ethical. They provide researchers with an alternative method to evaluate the effectiveness of interventions, policies, and programs in real-life settings. Quasi-experimental designs can also help inform policy and practice by providing valuable insights into the causal relationships between variables.

Overall, the purpose of quasi-experimental design is to provide a rigorous method for evaluating the impact of interventions, policies, and programs while controlling for potential confounding factors that may affect the outcome.

Advantages of Quasi-Experimental Design

Quasi-experimental designs have several advantages over other research designs, such as:

- Greater external validity : Quasi-experimental designs are more likely to have greater external validity than laboratory experiments because they are conducted in naturalistic settings. This means that the results are more likely to generalize to real-world situations.

- Ethical considerations: Quasi-experimental designs often involve naturally occurring events, such as natural disasters or policy changes. This means that researchers do not need to manipulate variables, which can raise ethical concerns.

- More practical: Quasi-experimental designs are often more practical than experimental designs because they are less expensive and easier to conduct. They can also be used to evaluate programs or policies that have already been implemented, which can save time and resources.

- No random assignment: Quasi-experimental designs do not require random assignment, which can be difficult or impossible in some cases, such as when studying the effects of a natural disaster. This means that researchers can still make causal inferences, although they must use statistical techniques to control for potential confounding variables.

- Greater generalizability : Quasi-experimental designs are often more generalizable than experimental designs because they include a wider range of participants and conditions. This can make the results more applicable to different populations and settings.

Limitations of Quasi-Experimental Design

There are several limitations associated with quasi-experimental designs, which include:

- Lack of Randomization: Quasi-experimental designs do not involve randomization of participants into groups, which means that the groups being studied may differ in important ways that could affect the outcome of the study. This can lead to problems with internal validity and limit the ability to make causal inferences.

- Selection Bias: Quasi-experimental designs may suffer from selection bias because participants are not randomly assigned to groups. Participants may self-select into groups or be assigned based on pre-existing characteristics, which may introduce bias into the study.

- History and Maturation: Quasi-experimental designs are susceptible to history and maturation effects, where the passage of time or other events may influence the outcome of the study.

- Lack of Control: Quasi-experimental designs may lack control over extraneous variables that could influence the outcome of the study. This can limit the ability to draw causal inferences from the study.

- Limited Generalizability: Quasi-experimental designs may have limited generalizability because the results may only apply to the specific population and context being studied.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

You may also like

Exploratory Research – Types, Methods and...

Focus Groups – Steps, Examples and Guide

Research Methods – Types, Examples and Guide

Observational Research – Methods and Guide

Experimental Design – Types, Methods, Guide

Correlational Research – Methods, Types and...

Our systems are now restored following recent technical disruption, and we’re working hard to catch up on publishing. We apologise for the inconvenience caused. Find out more: https://www.cambridge.org/universitypress/about-us/news-and-blogs/cambridge-university-press-publishing-update-following-technical-disruption

We use cookies to distinguish you from other users and to provide you with a better experience on our websites. Close this message to accept cookies or find out how to manage your cookie settings .

Login Alert

- > The Cambridge Handbook of Research Methods and Statistics for the Social and Behavioral Sciences

- > Quasi-Experimental Research

Book contents

- The Cambridge Handbook of Research Methods and Statistics for the Social and Behavioral Sciences

- Cambridge Handbooks in Psychology

- Copyright page

- Contributors

- Part I From Idea to Reality: The Basics of Research

- Part II The Building Blocks of a Study

- Part III Data Collection

- 13 Cross-Sectional Studies

- 14 Quasi-Experimental Research

- 15 Non-equivalent Control Group Pretest–Posttest Design in Social and Behavioral Research

- 16 Experimental Methods

- 17 Longitudinal Research: A World to Explore

- 18 Online Research Methods

- 19 Archival Data

- 20 Qualitative Research Design

- Part IV Statistical Approaches

- Part V Tips for a Successful Research Career

14 - Quasi-Experimental Research

from Part III - Data Collection

Published online by Cambridge University Press: 25 May 2023

In this chapter, we discuss the logic and practice of quasi-experimentation. Specifically, we describe four quasi-experimental designs – one-group pretest–posttest designs, non-equivalent group designs, regression discontinuity designs, and interrupted time-series designs – and their statistical analyses in detail. Both simple quasi-experimental designs and embellishments of these simple designs are presented. Potential threats to internal validity are illustrated along with means of addressing their potentially biasing effects so that these effects can be minimized. In contrast to quasi-experiments, randomized experiments are often thought to be the gold standard when estimating the effects of treatment interventions. However, circumstances frequently arise where quasi-experiments can usefully supplement randomized experiments or when quasi-experiments can fruitfully be used in place of randomized experiments. Researchers need to appreciate the relative strengths and weaknesses of the various quasi-experiments so they can choose among pre-specified designs or craft their own unique quasi-experiments.

Access options

Save book to kindle.

To save this book to your Kindle, first ensure [email protected] is added to your Approved Personal Document E-mail List under your Personal Document Settings on the Manage Your Content and Devices page of your Amazon account. Then enter the ‘name’ part of your Kindle email address below. Find out more about saving to your Kindle .

Note you can select to save to either the @free.kindle.com or @kindle.com variations. ‘@free.kindle.com’ emails are free but can only be saved to your device when it is connected to wi-fi. ‘@kindle.com’ emails can be delivered even when you are not connected to wi-fi, but note that service fees apply.

Find out more about the Kindle Personal Document Service .

- Quasi-Experimental Research

- By Charles S. Reichardt , Daniel Storage , Damon Abraham

- Edited by Austin Lee Nichols , Central European University, Vienna , John Edlund , Rochester Institute of Technology, New York

- Book: The Cambridge Handbook of Research Methods and Statistics for the Social and Behavioral Sciences

- Online publication: 25 May 2023

- Chapter DOI: https://doi.org/10.1017/9781009010054.015

Save book to Dropbox

To save content items to your account, please confirm that you agree to abide by our usage policies. If this is the first time you use this feature, you will be asked to authorise Cambridge Core to connect with your account. Find out more about saving content to Dropbox .

Save book to Google Drive

To save content items to your account, please confirm that you agree to abide by our usage policies. If this is the first time you use this feature, you will be asked to authorise Cambridge Core to connect with your account. Find out more about saving content to Google Drive .

Research Methodologies Guide

- Action Research

- Bibliometrics

- Case Studies

- Content Analysis

- Digital Scholarship This link opens in a new window

- Documentary

- Ethnography

- Focus Groups

- Grounded Theory

- Life Histories/Autobiographies

- Longitudinal

- Participant Observation

- Qualitative Research (General)

Quasi-Experimental Design

- Usability Studies

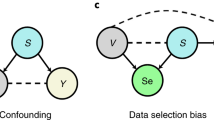

Quasi-Experimental Design is a unique research methodology because it is characterized by what is lacks. For example, Abraham & MacDonald (2011) state:

" Quasi-experimental research is similar to experimental research in that there is manipulation of an independent variable. It differs from experimental research because either there is no control group, no random selection, no random assignment, and/or no active manipulation. "

This type of research is often performed in cases where a control group cannot be created or random selection cannot be performed. This is often the case in certain medical and psychological studies.

For more information on quasi-experimental design, review the resources below:

Where to Start

Below are listed a few tools and online guides that can help you start your Quasi-experimental research. These include free online resources and resources available only through ISU Library.

- Quasi-Experimental Research Designs by Bruce A. Thyer This pocket guide describes the logic, design, and conduct of the range of quasi-experimental designs, encompassing pre-experiments, quasi-experiments making use of a control or comparison group, and time-series designs. An introductory chapter describes the valuable role these types of studies have played in social work, from the 1930s to the present. Subsequent chapters delve into each design type's major features, the kinds of questions it is capable of answering, and its strengths and limitations.

- Experimental and Quasi-Experimental Designs for Research by Donald T. Campbell; Julian C. Stanley. Call Number: Q175 C152e Written 1967 but still used heavily today, this book examines research designs for experimental and quasi-experimental research, with examples and judgments about each design's validity.

Online Resources

- Quasi-Experimental Design From the Web Center for Social Research Methods, this is a very good overview of quasi-experimental design.

- Experimental and Quasi-Experimental Research From Colorado State University.

- Quasi-experimental design--Wikipedia, the free encyclopedia Wikipedia can be a useful place to start your research- check the citations at the bottom of the article for more information.

- << Previous: Qualitative Research (General)

- Next: Sampling >>

- Last Updated: Sep 11, 2024 11:05 AM

- URL: https://instr.iastate.libguides.com/researchmethods

- - Google Chrome

Intended for healthcare professionals

- My email alerts

- BMA member login

- Username * Password * Forgot your log in details? Need to activate BMA Member Log In Log in via OpenAthens Log in via your institution

Search form

- Advanced search

- Search responses

- Search blogs

- Regression based quasi...

Regression based quasi-experimental approach when randomisation is not an option: interrupted time series analysis

- Related content

- Peer review

- Evangelos Kontopantelis , senior research fellow in biostatistics and health services research 1 2 ,

- Tim Doran , professor of public health 3 ,

- David A Springate , research fellow in health informatics 2 4 ,

- Iain Buchan , professor of health informatics 1 ,

- David Reeves , reader in statistics 2 4

- 1 Centre for Health Informatics, Institute of Population Health, University of Manchester, Manchester M13 9GB, UK

- 2 NIHR School for Primary Care Research, Centre for Primary Care, Institute of Population Health, University of Manchester, UK

- 3 Department of Health Sciences, University of York, UK

- 4 Centre for Biostatistics, Institute of Population Health, University of Manchester

- Correspondence to: E Kontopantelis e.kontopantelis{at}manchester.ac.uk

- Accepted 10 March 2015

Interrupted time series analysis is a quasi-experimental design that can evaluate an intervention effect, using longitudinal data. The advantages, disadvantages, and underlying assumptions of various modelling approaches are discussed using published examples

Summary points

Interrupted time series analysis is arguably the “next best” approach for dealing with interventions when randomisation is not possible or clinical trial data are not available

Although several assumptions need to be satisfied first, this quasi-experimental design can be useful in providing answers about population level interventions and effects

However, their implementation can be challenging, particularly for non-statisticians

Introduction

Randomised controlled trials (RCTs) are considered the ideal approach for assessing the effectiveness of interventions. However, not all interventions can be assessed with an RCT, whereas for many interventions trials can be prohibitively expensive. In addition, even well designed RCTs can be susceptible to systematic errors leading to biased estimates, particularly when generalising results to “real world” settings. For example, the external validity of clinical trials in diabetes seems to be poor; the proportion of the Scottish population that met eligibility criteria for seven major clinical trials ranged from 3.5% to 50.7%. 1 One of the greatest concerns is patients with multimorbidity, who are commonly excluded from RCTs. 2

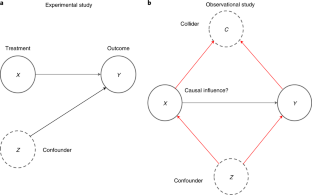

Observational studies can address some of these shortcomings, but the lack of researcher control over confounding variables and the difficulty in establishing causation mean that conclusions from studies using observational approaches are generally considered to be weaker. However, with quasi-experimental study designs researchers are able to estimate causal effects using observational approaches. Interrupted time series (ITS) analysis is a useful quasi-experimental design with which to evaluate the longitudinal effects of interventions, through regression modelling. 3 The term quasi-experimental refers to an absence of randomisation, and ITS analysis is principally a tool for analysing observational data where full randomisation, or a case-control design, is not affordable or possible. Its main advantage over alternative approaches is that it can make full use of the longitudinal nature of the data and account for pre-intervention trends (fig 1 ⇓ ). This design is particularly useful when “natural experiments” in real word settings occur—for example, when a health policy change comes into effect. However, it is not appropriate when trends are not (or cannot be transformed to be) linear, the intervention is introduced gradually or at more than one time point, there are external time varying effects or autocorrelation (for example, seasonality), or the characteristics of the population change over time—although all these can be potentially dealt with through modelling if the relevant information is known.

Fig 1 Interrupted time series analysis components in relation to the Quality and Outcomes Framework intervention

- Download figure

- Open in new tab

- Download powerpoint

Variations on this design are also known as segmented regression or regression discontinuity analysis and have been described elsewhere, 4 but we will focus on longitudinal data and practical modelling. ITS encompasses a wide range of modelling approaches and we describe the steps required to perform simple or more advanced analyses, using previously published analyses from our research group as examples.

The question

We demonstrate a range of ITS models using the “natural experiment” of the introduction of the Quality and Outcomes Framework (QOF) pay for performance scheme in UK primary care. The QOF was introduced in the 2004-05 financial year by the UK government to reward general practices for achieving clinical targets across a range of chronic conditions, as well as other more generic non-clinical targets. This large scale intervention was introduced nationally, without previous assessment in an experimental setting. Because of the great financial rewards it offered, it was adopted almost universally by general practitioners, despite its voluntary nature.

A fundamental research question concerned the effect of this national intervention on quality of care, as measured by the evidence based clinical indicators included in the incentivisation scheme. In operational form, did performance on the incentivised activities improve by the third year of the scheme (2006-07), compared with two years before its introduction (2002-03)? For our analyses we considered the year immediately before the scheme’s introduction (2003-04) to be a preparatory year, as information about the proposed targets was available to practices and this might have affected performance. A basic pre-post analysis would involve an unadjusted or adjusted comparison of mean levels of quality of care across the two comparator years—for example, with a t test or a linear regression controlling for covariates. However, such analyses would fail to account for any trends in performance before the intervention—that is, changes in levels of care from 2000-01 to 2002-03. Importantly, in the context of the QOF, previous performance trends cannot be assumed to be negligible, since quality standards for certain chronic conditions included in the scheme (for example, diabetes) were published in 2001 or earlier. This is where the strength of the ITS approach lies; to evaluate the effect of the intervention accounting for the all important pre-intervention trends (table ⇓ ).

Introduction of the Quality and Outcomes Framework, summary of examples

- View inline

We describe the processes, assumptions, and limitations across four ITS modelling approaches, starting with the simplest and concluding with the most complex. Code scripts in Stata are provided for all examples (web appendices 1-4).

In its simplest form, an ITS is modelled using a regression model (such as linear, logistic, or Poisson) that includes only three time based covariates, whose regression coefficients estimate the pre-intervention slope, the change in level at the intervention point, and the change in slope from pre-intervention to post-intervention. The pre-intervention slope quantifies the trend for the outcome before the intervention. The level change is an estimate of the change in level that can be attributed to the intervention, between the time points immediately before and immediately after the intervention, and accounting for the pre-intervention trend. The change in slope quantifies the difference between the pre-intervention and post-intervention slopes (fig 1 ⇑ ). The key assumption we have to make is that without the intervention we set out to quantify, the pre-intervention trend would continue unchanged into the post-intervention period and there are no external factors systematically affecting the trends (that is, other “interventions”).

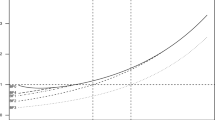

We collected performance data on asthma, diabetes, and coronary heart disease from 42 general practices for four time points: 1998 and 2003 (pre-intervention) and 2005 and 2007 (post-intervention). This was the setup for the 2009 analysis of the Quality in Practice (QuIP) study. 5 We generated the three ITS specific variables and used linear regression modelling. The analysis allowed us to quantify the effect of the intervention on recorded quality of care in the three conditions of interest, on top of what would be expected from the observed pre-intervention trend. We found that the intervention had an effect on quality of care for diabetes and asthma but not for heart disease (fig 2A ⇓ ). Since observations over time within each general practice can be treated as correlated, we used a multilevel regression model to account for clustering of observations within practices. 6 Bootstrap techniques can also be used to obtain more robust standard errors for the estimates. 7

Fig 2 Quality and Outcomes Framework (QOF) performance graphs for four presented examples. (A) Care for asthma, diabetes, and heart disease. Aggregate practice level performance across three clinical domains of interest. 5 (B) Diabetes care by number of comorbidities. Aggregate patient level performance for patients in the diabetes domain, by number of additional conditions. 8 (C) Incentivised and non-incentivised aspects of care. Aggregate practice level performance by incentivisation category and indicator type. 9 (D) Blood pressure measurement indicators. Aggregate practice level performance on blood pressure measurement indicator. 10 FI=fully incentivised, PI=partially incentivised, UI=unincentivised, PM/R=process measurement recording, PT=process treatment, I=intermediate outcome. The number of indicators in each group are in parentheses. CHD, DM, Stroke, and BP relate to the coronary heart disease, diabetes mellitus, stroke, and hypertension QOF clinical domains, respectively

Three important assumptions accompany this form of ITS analysis. Firstly, pre-intervention trends are assumed to be linear. Linearity of trends over time needs to be evaluated and confirmed firstly through visualisation and secondly with appropriate statistical tools for the ITS analysis results to have any credence. However, validating linearity can be a problem when there are only a few pre-intervention time points and is impossible with only two. Secondly, the ITS model estimates have not been controlled for covariates. The models assume that the characteristics of the populations remain unchanged throughout the study period and changes in the population base that might explain changes in the outcome are not accounted for. Thirdly, there is no comparator against which to adjust the results for changes that should not be attributed to the intervention itself.

With some modelling changes one can evaluate whether the intervention varies in relation to population characteristics (practices or patients, in the QOF context). For example, we can assess whether the impact of the QOF on performance of incentivised activities (HbA 1c control ≤7.4% or HbA 1c control ≤10% and retinal screening for patients with diabetes) varies by age group or other patient or practice characteristics. 8 To accomplish this we included “interaction terms” between the covariate (characteristics) of interest and the three ITS components relating to the pre-intervention slope, level change, and change in slope. A separate model needs to be executed for each covariate of interest.

In addition, the estimated pre-intervention slope can be used to compute predictions of what the value of the outcome would have been at post-intervention time points if the intervention had not taken place. These estimates can then be compared against observations for a specific time point, and an overall difference, or “uplift” (fig 1 ⇑ ), attributed to the intervention obtained. This comparison between predictions and observations not only applies to the advanced models, where both main and interaction effects estimates need to be considered, but to simple models as well. Using this approach we found that composite quality for patients with diabetes improved over and above the pre-incentive trend in the first post-intervention year, but by the third year improvement was smaller. The effect of the intervention did not vary by age, sex, or multimorbidity (fig 2B ⇑ ) but did for number of years living with the condition, with the smallest gains observed for newly diagnosed cases. 8 However, the linearity assumption, the lack of adjustment for changes in the population characteristics over time, and the absence of a comparator still apply.

More flexible modelling options are possible in which we can overcome some of the limitations in the basic and advanced designs. Let us assume a patient level analysis of incentivised and non-incentivised aspects of quality of care across a range of clinical indicators, with our aim being to evaluate whether the effect of the QOF on performance varies across fully incentivised and non-incentivised indicators. 9 Using regression modelling we can evaluate the relations between the outcome and covariates of interest (for example, patient age and sex), to obtain estimates that are adjusted for population changes, at specific time points. For example, to calculate the adjusted increase in the outcome above the projected trend, in the first post-intervention year. However, the modelling complexities are formidable and involve numerous steps. Using this approach we found that improvements attributed to financial incentives were achieved at the expense of small detrimental effects on non-incentivised aspects of care (fig 2C ⇑ ). 9

An alternative modelling approach can additionally incorporate “control” factors into the analyses. Let us assume we want to investigate the effect of withdrawing a financial incentive on practice performance. 10 In 2012-13, the QOF underwent a major revision and six clinical indicators were removed from the incentivisation scheme: blood pressure monitoring for coronary heart disease, diabetes, and stroke; cholesterol concentration monitoring for coronary heart disease and diabetes; blood glucose monitoring for diabetes. We used a regression based ITS to quantify the effect of the intervention, in this case the withdrawal of the incentive. We grouped the indicators by process and analysed these as separate groups, including indicators with similar characteristics that remained in the scheme and could act as “controls.” A multilevel mixed effects regression was used to model performance on all these indicators over time, controlled for covariates of interest and including an interaction term between time and indicators, but excluding post-intervention observations for the withdrawn indicators. Predictions and their standard errors were then obtained from the model, for the withdrawn indicators post-intervention and for each practice. These were compared with actual post-intervention observations using advanced meta-analysis methods, 11 to account for variability in the predictions, and obtain estimates of the differences. We found that the withdrawal of the incentive had little or no effect on quality of recorded care (fig 2D ⇑ ). 10

Although randomised controlled trials (RCTs) are considered the ideal approach for assessing the effectiveness of many interventions, we argue that observational data still need to be harnessed and utilised though robust alternative designs, even where trial evidence exists. Large scale population studies, using primary care databases, for example, can be valuable complements to well designed RCT evidence. 12 Sometimes evaluation through randomisation is not possible at all, as was the case with the UK’s primary care pay for performance scheme, which was implemented simultaneously across all UK practices. In either case, well designed observational studies can contribute greatly to the knowledge base, albeit with careful attention required to assess potential confounding and other threats to validity.

To better describe the methods, we drew on examples from our QOF research experiences. This approach allowed us to describe designs of increasing complexity, as well as present their technical details in the appendix code. However, we should also clarify that the ITS design is much more than a tool for QOF analyses, and it can investigate the effect of any policy change or intervention in a longitudinal dataset, provided the underlying assumptions are met. For example, it can investigate the decline in pneumonia admissions after routine childhood immunisation with pneumococcal conjugate vaccine in the United States, 13 the effect of 20 mph traffic zones on road injuries in London, 14 or the impact of infection control interventions and antibiotic use on hospital meticillin resistant Staphylococcus aureus (MRSA) in Scotland. 15

Quasi-experimental designs, and ITS analyses in particular, can help us unlock the potential of “real world” data, the volume and availability of which is increasing at an unprecedented rate. The limitations of quasi-experimental studies are generally well understood by the scientific community, whereas the same might not be true of the shortcomings of RCTs. Although the limitations can be daunting, including autocorrelation, time varying external effects, non-linearity, and unmeasured confounding, quasi-experimental designs are much cheaper and have the capacity, when carefully conducted, to complement trial evidence or even to map uncharted territory.

Sources and selection criteria

We chose to present examples that we ourselves have presented in major clinical journals, including The BMJ

EK and DR are experienced statisticians and health services researchers who have published numerous clinical papers using the described methods. TD is a professor of public health with considerable experience in these methods, who has co-authored most of these publications. DAS is research fellow in health informatics, a more recent addition to the research group, who co-authored our latest interrupted time series analysis. IB is professor of health informatics with wide experience in statistical methodology and its practical implementation

Cite this as: BMJ 2015;350:h2750

Contributors: EK wrote the manuscript. DR, DAS, TD, and IB critically edited the manuscript. EK is the guarantor of this work and, as such, had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

Funding: MRC Health eResearch Centre grant MR/K006665/1 supported the time and facilities of EK and IB. DAS was funded by the National Institute for Health Research (NIHR) School for Primary Care Research (SPCR). The views expressed are those of the authors and not necessarily those of the NHS, the National Institute for Health Research or the Department of Health.

Competing interests: All authors have completed the ICMJE uniform disclosure form at www.icmje.org/coi_disclosure.pdf (available on request from the corresponding author) and declare: No relationships or activities not discussed in the funding statement that could appear to have influenced the submitted work.

Provenance and peer review: Not commissioned; externally peer reviewed.

This is an Open Access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, which permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: http://creativecommons.org/licenses/by/4.0/ .

- ↵ Saunders C, Byrne CD, Guthrie B, et al. External validity of randomized controlled trials of glycaemic control and vascular disease: how representative are participants? Diabetic Med 2013 ; 30 : 300 -8. OpenUrl CrossRef PubMed

- ↵ Guthrie B, Payne K, Alderson P, et al. Adapting clinical guidelines to take account of multimorbidity. BMJ 2012 ; 345 : e6341 . OpenUrl FREE Full Text

- ↵ Wagner AK, Soumerai SB, Zhang F, et al. Segmented regression analysis of interrupted time series studies in medication use research. J Clin Pharm Ther 2002 ; 27 : 299 -309. OpenUrl CrossRef PubMed Web of Science

- ↵ O’Keeffe AG, Geneletti S, Baio G, et al. Regression discontinuity designs: an approach to the evaluation of treatment efficacy in primary care using observational data. BMJ 2014 ; 349 : g5293 . OpenUrl FREE Full Text

- ↵ Campbell SM, Reeves D, Kontopantelis E, et al. Effects of pay for performance on the quality of primary care in England. N Engl J Med 2009 ; 361 : 368 -78. OpenUrl CrossRef PubMed Web of Science

- ↵ Rabe-Hesketh S, Skrondal A. Multilevel and longitudinal modeling using Stata. 3rd ed. Stata Press, 2012.

- ↵ Efron B. The bootstrap and modern statistics. J Am Stat Assoc 2000 ; 95 : 1293 -6. OpenUrl CrossRef Web of Science

- ↵ Kontopantelis E, Reeves D, Valderas JM, et al. Recorded quality of primary care for patients with diabetes in England before and after the introduction of a financial incentive scheme: a longitudinal observational study. BMJ Qual Saf 2013 ; 22 : 53 -64. OpenUrl Abstract / FREE Full Text

- ↵ Doran T, Kontopantelis E, Valderas JM, et al. Effect of financial incentives on incentivised and non-incentivised clinical activities: longitudinal analysis of data from the UK Quality and Outcomes Framework. BMJ 2011 ; 342 : d3590 . OpenUrl Abstract / FREE Full Text

- ↵ Kontopantelis E, Springate D, Reeves D, et al. Withdrawing performance indicators: retrospective analysis of general practice performance under UK Quality and Outcomes Framework. BMJ 2014 ; 348 : g330 . OpenUrl Abstract / FREE Full Text

- ↵ Kontopantelis E, Springate DA, Reeves D. A re-analysis of the Cochrane Library data: the dangers of unobserved heterogeneity in meta-analyses. Plos One 2013 ; 8 : e69930 . OpenUrl CrossRef PubMed

- ↵ Silverman SL. From randomized controlled trials to observational studies. Am J Med 2009 ; 122 : 114 -20. OpenUrl CrossRef PubMed Web of Science

- ↵ Grijalva CG, Nuorti JP, Arbogast PG, et al. Decline in pneumonia admissions after routine childhood immunisation with pneumococcal conjugate vaccine in the USA: a time-series analysis. Lancet 2007 ; 369 : 1179 -86. OpenUrl CrossRef PubMed Web of Science

- ↵ Grundy C, Steinbach R, Edwards P, et al. Effect of 20 mph traffic speed zones on road injuries in London, 1986-2006: controlled interrupted time series analysis. BMJ 2009 ; 339 : b4469 . OpenUrl Abstract / FREE Full Text

- ↵ Mahamat A, MacKenzie FM, Brooker K, Monnet DL, Daures JP, Gould IM. Impact of infection control interventions and antibiotic use on hospital MRSA: a multivariate interrupted time-series analysis. Int J Antimicrob Agents 2007 ; 30 : 169 -76. OpenUrl CrossRef PubMed Web of Science

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

- My Bibliography

- Collections

- Citation manager

Save citation to file

Email citation, add to collections.

- Create a new collection

- Add to an existing collection

Add to My Bibliography

Your saved search, create a file for external citation management software, your rss feed.

- Search in PubMed

- Search in NLM Catalog

- Add to Search

The Limitations of Quasi-Experimental Studies, and Methods for Data Analysis When a Quasi-Experimental Research Design Is Unavoidable

Affiliation.

- 1 Dept. of Clinical Psychopharmacology and Neurotoxicology, National Institute of Mental Health and Neurosciences, Bengaluru, Karnataka, India.

- PMID: 34584313

- PMCID: PMC8450731

- DOI: 10.1177/02537176211034707

A quasi-experimental (QE) study is one that compares outcomes between intervention groups where, for reasons related to ethics or feasibility, participants are not randomized to their respective interventions; an example is the historical comparison of pregnancy outcomes in women who did versus did not receive antidepressant medication during pregnancy. QE designs are sometimes used in noninterventional research, as well; an example is the comparison of neuropsychological test performance between first degree relatives of schizophrenia patients and healthy controls. In QE studies, groups may differ systematically in several ways at baseline, itself; when these differences influence the outcome of interest, comparing outcomes between groups using univariable methods can generate misleading results. Multivariable regression is therefore suggested as a better approach to data analysis; because the effects of confounding variables can be adjusted for in multivariable regression, the unique effect of the grouping variable can be better understood. However, although multivariable regression is better than univariable analyses, there are inevitably inadequately measured, unmeasured, and unknown confounds that may limit the validity of the conclusions drawn. Investigators should therefore employ QE designs sparingly, and only if no other option is available to answer an important research question.

Keywords: Quasi-experimental study; confounding variables; multivariable regression; research design; univariable analysis.

© 2021 Indian Psychiatric Society - South Zonal Branch.

PubMed Disclaimer

Conflict of interest statement

Declaration of Conflicting Interests: The author declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Similar articles

- Quasi-experimental study designs series-paper 9: collecting data from quasi-experimental studies. Aloe AM, Becker BJ, Duvendack M, Valentine JC, Shemilt I, Waddington H. Aloe AM, et al. J Clin Epidemiol. 2017 Sep;89:77-83. doi: 10.1016/j.jclinepi.2017.02.013. Epub 2017 Mar 29. J Clin Epidemiol. 2017. PMID: 28365305

- Quasi-experimental study designs series-paper 10: synthesizing evidence for effects collected from quasi-experimental studies presents surmountable challenges. Becker BJ, Aloe AM, Duvendack M, Stanley TD, Valentine JC, Fretheim A, Tugwell P. Becker BJ, et al. J Clin Epidemiol. 2017 Sep;89:84-91. doi: 10.1016/j.jclinepi.2017.02.014. Epub 2017 Mar 30. J Clin Epidemiol. 2017. PMID: 28365308

- A systematic review of comparisons of effect sizes derived from randomised and non-randomised studies. MacLehose RR, Reeves BC, Harvey IM, Sheldon TA, Russell IT, Black AM. MacLehose RR, et al. Health Technol Assess. 2000;4(34):1-154. Health Technol Assess. 2000. PMID: 11134917 Review.

- Quasi-experimental study designs series-paper 8: identifying quasi-experimental studies to inform systematic reviews. Glanville J, Eyers J, Jones AM, Shemilt I, Wang G, Johansen M, Fiander M, Rothstein H. Glanville J, et al. J Clin Epidemiol. 2017 Sep;89:67-76. doi: 10.1016/j.jclinepi.2017.02.018. Epub 2017 Mar 30. J Clin Epidemiol. 2017. PMID: 28365309 Review.

- Interventions for leg cramps in pregnancy. Zhou K, West HM, Zhang J, Xu L, Li W. Zhou K, et al. Cochrane Database Syst Rev. 2015 Aug 11;(8):CD010655. doi: 10.1002/14651858.CD010655.pub2. Cochrane Database Syst Rev. 2015. Update in: Cochrane Database Syst Rev. 2020 Dec 4;12:CD010655. doi: 10.1002/14651858.CD010655.pub3. PMID: 26262909 Updated. Review.

- The Effectiveness of the Chronic Disease Self-Management Program in Improving Patients' Self-Efficacy and Health-Related Behaviors: A Quasi-Experimental Study. Kerari A, Bahari G, Alharbi K, Alenazi L. Kerari A, et al. Healthcare (Basel). 2024 Apr 3;12(7):778. doi: 10.3390/healthcare12070778. Healthcare (Basel). 2024. PMID: 38610201 Free PMC article.

- Conducting and Writing Quantitative and Qualitative Research. Barroga E, Matanguihan GJ, Furuta A, Arima M, Tsuchiya S, Kawahara C, Takamiya Y, Izumi M. Barroga E, et al. J Korean Med Sci. 2023 Sep 18;38(37):e291. doi: 10.3346/jkms.2023.38.e291. J Korean Med Sci. 2023. PMID: 37724495 Free PMC article. Review.

- Observed intervention effects for mortality in randomised clinical trials: a methodological study protocol. Hansen ML, Jørgensen CK, Thabane L, Rulli E, Biagioli E, Chiaruttini M, Mbuagbaw L, Mathiesen O, Gluud C, Jakobsen JC. Hansen ML, et al. BMJ Open. 2023 Jun 14;13(6):e072550. doi: 10.1136/bmjopen-2023-072550. BMJ Open. 2023. PMID: 37316319 Free PMC article.

- Comments on "Caregiver Burden and Disability in Somatoform Disorder: An ExploratoryStudy". Andrade C, Reyazuddin M, Tharayil HM. Andrade C, et al. Indian J Psychol Med. 2022 May;44(3):320-321. doi: 10.1177/02537176221082906. Epub 2022 May 1. Indian J Psychol Med. 2022. PMID: 35656420 Free PMC article. No abstract available.

- Research Design: Case-Control Studies. Andrade C. Andrade C. Indian J Psychol Med. 2022 May;44(3):307-309. doi: 10.1177/02537176221090104. Epub 2022 May 8. Indian J Psychol Med. 2022. PMID: 35656416 Free PMC article.

- Andrade C. Propensity score matching in nonrandomized studies: A concept simply explained using antidepressant treatment during pregnancy as an example. J Clin Psychiatry, 2017; 78(2): e162–e165. - PubMed

- Thomas JK, Suresh Kumar PN, Verma AN, et al.. Psychosocial dysfunction and family burden in schizophrenia and obsessive compulsive disorder. Indian J Psychiatry, 2004; 46(3): 238–243. - PMC - PubMed

- Harave VS, Shivakumar V, Kalmady SV, et al.. Neurocognitive impairments in unaffected first-degree relatives of schizophrenia. Indian J Psychol Med, 2017; 39(3): 250–253. - PMC - PubMed

- Babyak MA. What you see may not be what you get: a brief, nontechnical introduction to overfitting in regression-type models. Psychosom Med, 2004; 66(3): 411–421. - PubMed

- Harris AD, McGregor JC, Perencevich EN, et al.. The use and interpretation of quasi-experimental studies in medical informatics. J Am Med Inform Assoc, 2006; 13(1): 16–23. - PMC - PubMed

LinkOut - more resources

Full text sources.

- Europe PubMed Central

- Ovid Technologies, Inc.

- PubMed Central

- Citation Manager

NCBI Literature Resources

MeSH PMC Bookshelf Disclaimer

The PubMed wordmark and PubMed logo are registered trademarks of the U.S. Department of Health and Human Services (HHS). Unauthorized use of these marks is strictly prohibited.

Experimental vs Quasi-Experimental Design: Which to Choose?

Here’s a table that summarizes the similarities and differences between an experimental and a quasi-experimental study design:

| Experimental Study (a.k.a. Randomized Controlled Trial) | Quasi-Experimental Study | |

|---|---|---|

| Objective | Evaluate the effect of an intervention or a treatment | Evaluate the effect of an intervention or a treatment |

| How participants get assigned to groups? | Random assignment | Non-random assignment (participants get assigned according to their choosing or that of the researcher) |

| Is there a control group? | Yes | Not always (although, if present, a control group will provide better evidence for the study results) |

| Is there any room for confounding? | No (although check for a detailed discussion on post-randomization confounding in randomized controlled trials) | Yes (however, statistical techniques can be used to study causal relationships in quasi-experiments) |

| Level of evidence | A randomized trial is at the highest level in the hierarchy of evidence | A quasi-experiment is one level below the experimental study in the hierarchy of evidence [ ] |

| Advantages | Minimizes bias and confounding | – Can be used in situations where an experiment is not ethically or practically feasible – Can work with smaller sample sizes than randomized trials |

| Limitations | – High cost (as it generally requires a large sample size) – Ethical limitations – Generalizability issues – Sometimes practically infeasible | Lower ranking in the hierarchy of evidence as losing the power of randomization causes the study to be more susceptible to bias and confounding |

What is a quasi-experimental design?

A quasi-experimental design is a non-randomized study design used to evaluate the effect of an intervention. The intervention can be a training program, a policy change or a medical treatment.

Unlike a true experiment, in a quasi-experimental study the choice of who gets the intervention and who doesn’t is not randomized. Instead, the intervention can be assigned to participants according to their choosing or that of the researcher, or by using any method other than randomness.

Having a control group is not required, but if present, it provides a higher level of evidence for the relationship between the intervention and the outcome.

(for more information, I recommend my other article: Understand Quasi-Experimental Design Through an Example ) .

Examples of quasi-experimental designs include:

- One-Group Posttest Only Design

- Static-Group Comparison Design

- One-Group Pretest-Posttest Design

- Separate-Sample Pretest-Posttest Design

What is an experimental design?

An experimental design is a randomized study design used to evaluate the effect of an intervention. In its simplest form, the participants will be randomly divided into 2 groups:

- A treatment group: where participants receive the new intervention which effect we want to study.

- A control or comparison group: where participants do not receive any intervention at all (or receive some standard intervention).

Randomization ensures that each participant has the same chance of receiving the intervention. Its objective is to equalize the 2 groups, and therefore, any observed difference in the study outcome afterwards will only be attributed to the intervention – i.e. it removes confounding.

(for more information, I recommend my other article: Purpose and Limitations of Random Assignment ).

Examples of experimental designs include:

- Posttest-Only Control Group Design

- Pretest-Posttest Control Group Design

- Solomon Four-Group Design

- Matched Pairs Design

- Randomized Block Design

When to choose an experimental design over a quasi-experimental design?

Although many statistical techniques can be used to deal with confounding in a quasi-experimental study, in practice, randomization is still the best tool we have to study causal relationships.

Another problem with quasi-experiments is the natural progression of the disease or the condition under study — When studying the effect of an intervention over time, one should consider natural changes because these can be mistaken with changes in outcome that are caused by the intervention. Having a well-chosen control group helps dealing with this issue.

So, if losing the element of randomness seems like an unwise step down in the hierarchy of evidence, why would we ever want to do it?

This is what we’re going to discuss next.

When to choose a quasi-experimental design over a true experiment?

The issue with randomness is that it cannot be always achievable.

So here are some cases where using a quasi-experimental design makes more sense than using an experimental one:

- If being in one group is believed to be harmful for the participants , either because the intervention is harmful (ex. randomizing people to smoking), or the intervention has a questionable efficacy, or on the contrary it is believed to be so beneficial that it would be malevolent to put people in the control group (ex. randomizing people to receiving an operation).

- In cases where interventions act on a group of people in a given location , it becomes difficult to adequately randomize subjects (ex. an intervention that reduces pollution in a given area).

- When working with small sample sizes , as randomized controlled trials require a large sample size to account for heterogeneity among subjects (i.e. to evenly distribute confounding variables between the intervention and control groups).

Further reading

- Statistical Software Popularity in 40,582 Research Papers

- Checking the Popularity of 125 Statistical Tests and Models

- Objectives of Epidemiology (With Examples)

- 12 Famous Epidemiologists and Why