
Factorial Designs

Setting Up a Factorial Experiment

Learning Objectives

- Explain why researchers often include multiple independent variables in their studies.

- Define factorial design, and use a factorial design table to represent and interpret simple factorial designs.

Just as it is common for studies in psychology to include multiple levels of a single independent variable (placebo, new drug, old drug), it is also common for them to include multiple independent variables. Schnall and her colleagues studied the effect of both disgust and private body consciousness in the same study. Researchers’ inclusion of multiple independent variables in one experiment is further illustrated by the following actual titles from various professional journals:

- The Effects of Temporal Delay and Orientation on Haptic Object Recognition

- Opening Closed Minds: The Combined Effects of Intergroup Contact and Need for Closure on Prejudice

- Effects of Expectancies and Coping on Pain-Induced Intentions to Smoke

- The Effect of Age and Divided Attention on Spontaneous Recognition

- The Effects of Reduced Food Size and Package Size on the Consumption Behavior of Restrained and Unrestrained Eaters

Just as including multiple levels of a single independent variable allows one to answer more sophisticated research questions, so too does including multiple independent variables in the same experiment. For example, instead of conducting one study on the effect of disgust on moral judgment and another on the effect of private body consciousness on moral judgment, Schnall and colleagues were able to conduct one study that addressed both questions. But including multiple independent variables also allows the researcher to answer questions about whether the effect of one independent variable depends on the level of another. This is referred to as an interaction between the independent variables. Schnall and her colleagues, for example, observed an interaction between disgust and private body consciousness because the effect of disgust depended on whether participants were high or low in private body consciousness. As we will see, interactions are often among the most interesting results in psychological research.

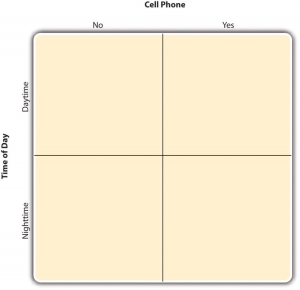

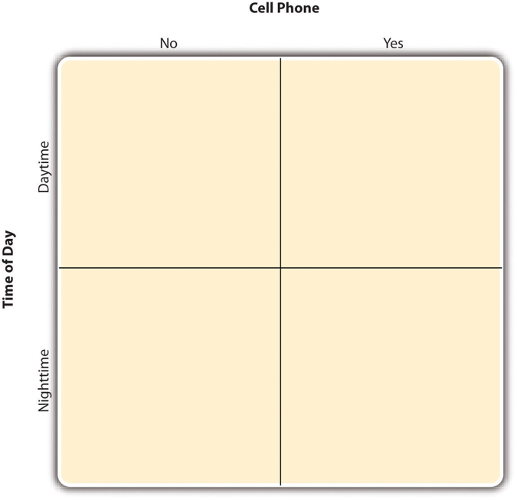

By far the most common approach to including multiple independent variables (which are often called factors) in an experiment is the factorial design. In a factorial design, each level of one independent variable is combined with each level of the others to produce all possible combinations. Each combination, then, becomes a condition in the experiment. Imagine, for example, an experiment on the effect of cell phone use (yes vs. no) and time of day (day vs. night) on driving ability. This is shown in the factorial design table in Figure 9.1. The columns of the table represent cell phone use, and the rows represent time of day. The four cells of the table represent the four possible combinations or conditions: using a cell phone during the day, not using a cell phone during the day, using a cell phone at night, and not using a cell phone at night. This particular design is referred to as a 2 × 2 (read “two-by-two”) factorial design because it combines two variables, each of which has two levels.

If one of the independent variables had a third level (e.g., using a handheld cell phone, using a hands-free cell phone, and not using a cell phone), then it would be a 3 × 2 factorial design, and there would be six distinct conditions. Notice that the number of possible conditions is the product of the numbers of levels. A 2 × 2 factorial design has four conditions, a 3 × 2 factorial design has six conditions, a 4 × 5 factorial design would have 20 conditions, and so on. Also notice that each number in the notation represents one factor (that is, one independent variable). So by looking at how many numbers are in the notation, you can determine how many independent variables there are in the experiment. 2 × 2, 3 × 3, and 2 × 3 designs all have two numbers in the notation and therefore all have two independent variables. The value of each number represents the number of levels of that independent variable. A 2 means that the independent variable has two levels, a 3 means that it has three levels, a 4 means that it has four levels, and so on. To illustrate, a 3 × 3 design has two independent variables, each with three levels, while a 2 × 2 × 2 design has three independent variables, each with two levels.
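The rule that the number of conditions is the product of the numbers of levels can be checked by enumerating the conditions directly. The following is a minimal sketch for the 3 × 2 cell phone example; the dictionary keys and level labels are illustrative names, not part of any standard.

```python
from itertools import product

# Illustrative factor names and levels, taken from the cell phone example.
factors = {
    "cell_phone": ["handheld", "hands-free", "none"],
    "time_of_day": ["day", "night"],
}

# Crossing every level of one factor with every level of the other
# yields one condition per combination.
conditions = list(product(*factors.values()))

# The design notation lists the number of levels of each factor.
notation = " x ".join(str(len(levels)) for levels in factors.values())

print(notation, "design with", len(conditions), "conditions")
for condition in conditions:
    print(dict(zip(factors, condition)))
```

Adding a third entry to `factors` would add a third number to the notation and multiply the number of conditions by that factor's number of levels.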

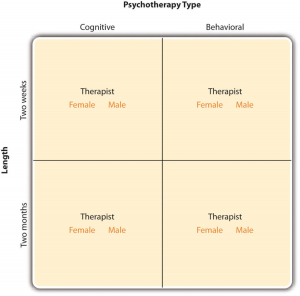

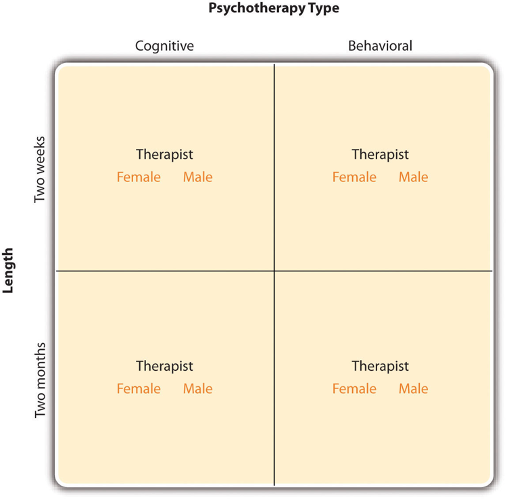

In principle, factorial designs can include any number of independent variables with any number of levels. For example, an experiment could include the type of psychotherapy (cognitive vs. behavioral), the length of the psychotherapy (2 weeks vs. 2 months), and the sex of the psychotherapist (female vs. male). This would be a 2 × 2 × 2 factorial design and would have eight conditions. Figure 9.2 shows one way to represent this design. In practice, it is unusual for there to be more than three independent variables with more than two or three levels each. This is for at least two reasons: For one, the number of conditions can quickly become unmanageable. For example, adding a fourth independent variable with three levels (e.g., therapist experience: low vs. medium vs. high) to the current example would make it a 2 × 2 × 2 × 3 factorial design with 24 distinct conditions. Second, the number of participants required to populate all of these conditions (while maintaining a reasonable ability to detect a real underlying effect) can render the design unfeasible (for more information, see the discussion about the importance of adequate statistical power in Chapter 13). As a result, in the remainder of this section, we will focus on designs with two independent variables. The general principles discussed here extend in a straightforward way to more complex factorial designs.
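To see how quickly a fully between-subjects design grows, the sketch below multiplies the levels to get the number of conditions and then multiplies by a per-condition sample size. The figure of 20 participants per condition is an arbitrary assumption for illustration, not a recommendation from the text.

```python
from math import prod

def design_size(levels_per_factor, participants_per_cell):
    """Return (conditions, participants) for a between-subjects factorial design."""
    conditions = prod(levels_per_factor)
    return conditions, conditions * participants_per_cell

# 20 participants per cell is an arbitrary illustrative value.
for levels in [(2, 2), (2, 2, 2), (2, 2, 2, 3)]:
    conditions, participants = design_size(levels, participants_per_cell=20)
    label = " x ".join(str(n) for n in levels)
    print(f"{label}: {conditions} conditions, {participants} participants")
```

Under this assumption, adding the three-level therapist experience factor triples the required sample, from 160 to 480 participants.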

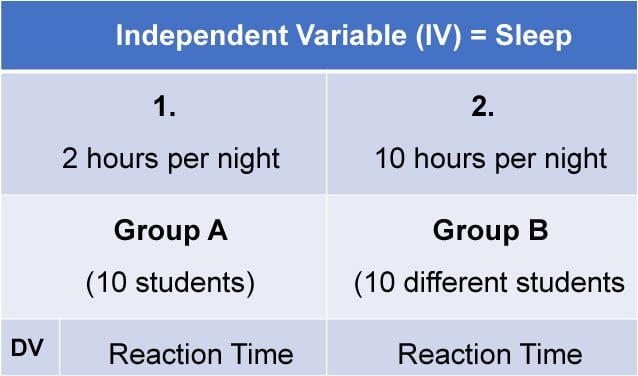

Assigning Participants to Conditions

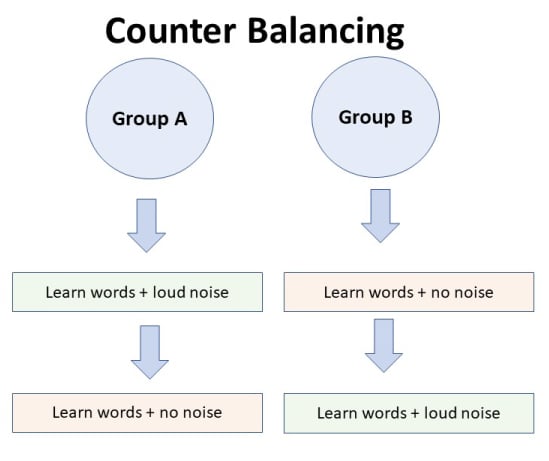

Recall that in a simple between-subjects design, each participant is tested in only one condition. In a simple within-subjects design, each participant is tested in all conditions. In a factorial experiment, the decision to take the between-subjects or within-subjects approach must be made separately for each independent variable. In a between-subjects factorial design, all of the independent variables are manipulated between subjects. For example, all participants could be tested either while using a cell phone or while not using a cell phone and either during the day or during the night. This would mean that each participant would be tested in one and only one condition. In a within-subjects factorial design, all of the independent variables are manipulated within subjects. All participants could be tested both while using a cell phone and while not using a cell phone and both during the day and during the night. This would mean that each participant would need to be tested in all four conditions. The advantages and disadvantages of these two approaches are the same as those discussed in Chapter 5. The between-subjects design is conceptually simpler, avoids order/carryover effects, and minimizes the time and effort of each participant. The within-subjects design is more efficient for the researcher and controls extraneous participant variables.

Since factorial designs have more than one independent variable, it is also possible to manipulate one independent variable between subjects and another within subjects. This is called a mixed factorial design. For example, a researcher might choose to treat cell phone use as a within-subjects factor by testing the same participants both while using a cell phone and while not using a cell phone (while counterbalancing the order of these two conditions). But they might choose to treat time of day as a between-subjects factor by testing each participant either during the day or during the night (perhaps because this only requires them to come in for testing once). Thus each participant in this mixed design would be tested in two of the four conditions.

Regardless of whether the design is between subjects, within subjects, or mixed, the actual assignment of participants to conditions or orders of conditions is typically done randomly.
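One way such random assignment might be carried out for the between-subjects version of the cell phone example is sketched below. The function name, participant labels, and the choice to keep cell sizes equal are assumptions made for illustration; other randomization schemes are equally valid.

```python
import random
from itertools import product

def assign_between_subjects(participants, factors, seed=None):
    """Randomly assign each participant to one cell, keeping cell sizes near-equal."""
    rng = random.Random(seed)
    cells = list(product(*factors.values()))
    shuffled = list(participants)
    rng.shuffle(shuffled)
    # Dealing the shuffled participants out in turn gives near-equal cell sizes.
    return {person: cells[i % len(cells)] for i, person in enumerate(shuffled)}

factors = {"cell_phone": ["yes", "no"], "time_of_day": ["day", "night"]}
participants = [f"P{i:02d}" for i in range(1, 13)]  # hypothetical participant IDs
assignment = assign_between_subjects(participants, factors, seed=1)

for person in participants:
    print(person, assignment[person])
```

For a mixed design, the same idea would apply to the between-subjects factor only, with a separate random or counterbalanced order for the within-subjects conditions.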

Non-Manipulated Independent Variables

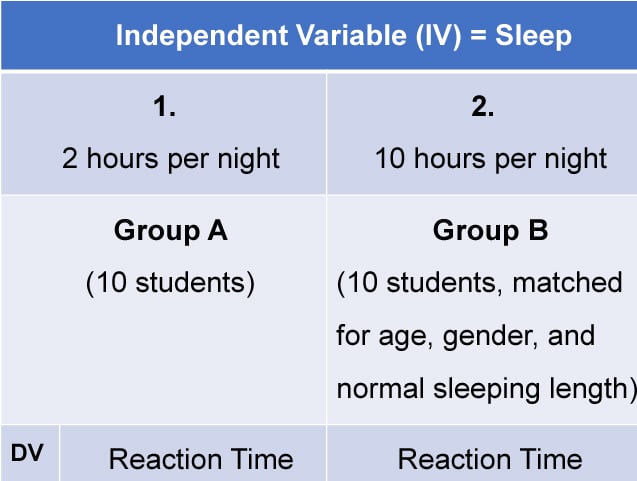

In many factorial designs, one of the independent variables is a non-manipulated independent variable. The researcher measures it but does not manipulate it. The study by Schnall and colleagues is a good example. One independent variable was disgust, which the researchers manipulated by testing participants in a clean room or a messy room. The other was private body consciousness, a participant variable which the researchers simply measured. Another example is a study by Halle Brown and colleagues in which participants were exposed to several words that they were later asked to recall (Brown, Kosslyn, Delamater, Fama, & Barsky, 1999) [1]. The manipulated independent variable was the type of word. Some were negative health-related words (e.g., tumor, coronary), and others were not health related (e.g., election, geometry). The non-manipulated independent variable was whether participants were high or low in hypochondriasis (excessive concern with ordinary bodily symptoms). The result of this study was that the participants high in hypochondriasis were better than those low in hypochondriasis at recalling the health-related words, but they were no better at recalling the non-health-related words.

Such studies are extremely common, and there are several points worth making about them. First, non-manipulated independent variables are usually participant variables (private body consciousness, hypochondriasis, self-esteem, gender, and so on), and as such, they are by definition between-subjects factors. For example, people are either low in hypochondriasis or high in hypochondriasis; they cannot be tested in both of these conditions. Second, such studies are generally considered to be experiments as long as at least one independent variable is manipulated, regardless of how many non-manipulated independent variables are included. Third, it is important to remember that causal conclusions can only be drawn about the manipulated independent variable. For example, Schnall and her colleagues were justified in concluding that disgust affected the harshness of their participants’ moral judgments because they manipulated that variable and randomly assigned participants to the clean or messy room. But they would not have been justified in concluding that participants’ private body consciousness affected the harshness of their participants’ moral judgments because they did not manipulate that variable. It could be, for example, that having a strict moral code and a heightened awareness of one’s body are both caused by some third variable (e.g., neuroticism). Thus it is important to be aware of which variables in a study are manipulated and which are not.

Non-Experimental Studies With Factorial Designs

Thus far we have seen that factorial experiments can include manipulated independent variables or a combination of manipulated and non-manipulated independent variables. But factorial designs can also include only non-manipulated independent variables, in which case they are no longer experiments but are instead non-experimental in nature. Consider a hypothetical study in which a researcher simply measures both the moods and the self-esteem of several participants—categorizing them as having either a positive or negative mood and as being either high or low in self-esteem—along with their willingness to have unprotected sexual intercourse. This can be conceptualized as a 2 × 2 factorial design with mood (positive vs. negative) and self-esteem (high vs. low) as non-manipulated between-subjects factors. Willingness to have unprotected sex is the dependent variable.

Again, because neither independent variable in this example was manipulated, it is a non-experimental study rather than an experiment. (The similar study by MacDonald and Martineau [2002] [2] was an experiment because they manipulated their participants’ moods.) This is important because, as always, one must be cautious about inferring causality from non-experimental studies because of the directionality and third-variable problems. For example, an effect of participants’ moods on their willingness to have unprotected sex might be caused by any other variable that happens to be correlated with their moods.

- Brown, H. D., Kosslyn, S. M., Delamater, B., Fama, A., & Barsky, A. J. (1999). Perceptual and memory biases for health-related information in hypochondriacal individuals. Journal of Psychosomatic Research, 47, 67–78.

- MacDonald, T. K., & Martineau, A. M. (2002). Self-esteem, mood, and intentions to use condoms: When does low self-esteem lead to risky health behaviors? Journal of Experimental Social Psychology, 38, 299–306.

Factorial design: Experiments that include more than one independent variable in which each level of one independent variable is combined with each level of the others to produce all possible combinations.

Factorial design table: Shows how each level of one independent variable is combined with each level of the others to produce all possible combinations in a factorial design.

Between-subjects factorial design: All of the independent variables are manipulated between subjects.

Mixed factorial design: A design which manipulates one independent variable between subjects and another within subjects.

Non-manipulated independent variable: An independent variable that is measured but is not manipulated.

Research Methods in Psychology Copyright © 2019 by Rajiv S. Jhangiani, I-Chant A. Chiang, Carrie Cuttler, & Dana C. Leighton is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Factorial Design of Experiments

Learning outcomes.

After successfully completing the Module 4 Factorial Design of Experiments, students will be able to

- Explain the Factorial design of experiments

- Explain the data structure/layout of a factorial design of experiment

- Explain the coding systems used in a factorial design of experiment

- Calculate the main and the interaction effects

- Graphically represent the design and the analysis results, including the main effects and the interaction effects

- Develop the regression equations from the effects

- Perform the regression response surface analysis to produce the contour plot and the response surface

- Interpret the main and the interaction effects using the contour plot and the response surface

- Develop the ANOVA table from the effects

- Interpret the results in the context of the problem

What is a Factorial Design of Experiment?

The factorial design of experiment is described with examples in Video 1.

Video 1. Introduction to Factorial Design of Experiment DOE and the Main Effect Calculation Explained Example .

In a factorial design of experiments, every level of each factor is studied in combination with every level of the other factors. For this reason, the factorial design of experiments is also called the crossed factor design of experiments. When the treatment combinations are also assigned to the experimental units completely at random, the design is a completely randomized design (CRD), so a fuller name for it would be the completely randomized factorial design of experiments.

In a simple study of human comfort, two levels of the temperature factor (or independent variable), 0 °F and 75 °F, and two levels of the humidity factor, 0% and 35%, were studied in all possible combinations (Figure 1). The four (2 × 2) possible treatment combinations and their associated responses from human subjects (the experimental units) are provided in Table 1.

Table 1. Data Structure/Layout of a Factorial Design of Experiment

Coding Systems for the Factor Levels in the Factorial Design of Experiment

When a factorial design is used primarily for screening variables, two levels per factor are usually enough. Coding the levels as (1) low/high, (2) -/+, or (3) -1/+1 is often more convenient and meaningful than using the actual levels of the factors, especially for the design and analysis of factorial experiments. These coding systems are particularly useful in developing the methods for factorial and fractional factorial designs of experiments, because general formulas and methods are much easier to state in coded units. Coding systems are also useful in response surface methodology, where coded levels often produce smooth, meaningful, and easy-to-understand contour plots and response surfaces. Moreover, especially in complex designs, coded levels such as the low and high levels of a factor are easier to understand.

How to graphically represent the design?

An example graphical representation of a factorial design of experiment is provided in Figure 1 .

Figure 1. Factorial Design of Experiments with two levels for each factor (independent variable, x). The response (dependent variable, y) is shown using the solid black circle with the associated response values.

How to calculate the main effects?

The calculation of the main effects from a factorial design of experiment is described with examples in Video 2 (Video 1 and Video 2 are the same video).

Video 2. Introduction to Factorial Design of Experiment DOE and the Main Effect Calculation Explained Example .

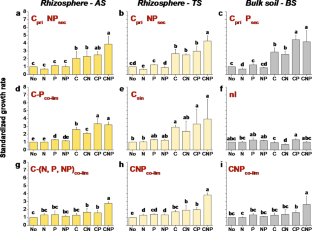

The average effect of factor A (called the main effect of A) is the average response at the high level of A minus the average response at the low level of A (Figure 2). When the main effect of A is calculated, all other factors are ignored, as if the factor of interest (here A, the temperature factor) were the only factor in the experiment.

Therefore, the main effect of the temperature factor is A = (9+5)/2 - (2+0)/2 = 7 - 1 = 6 (Figure 2). That is, the average comfort increases by 6 points on a scale of 0 (least comfortable) to 10 (most comfortable) when the temperature increases from 0 to 75 degrees Fahrenheit.

Similarly, the main effect of B is calculated by ignoring all other factors, as if the factor of interest (here B, the humidity factor) were the only factor in the experiment.

Therefore, the main effect of the humidity factor is B = (2+9)/2 - (5+0)/2 = 5.5 - 2.5 = 3 (Figure 3). That is, the average comfort increases by 3 points on a scale of 0 (least comfortable) to 10 (most comfortable) when the relative humidity increases from 0 to 35 percent.

Figure 2. Graphical representation of the main effect of the temperature (factor A).

Figure 3. Graphical representation of the main effect of the humidity (factor B).
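The two main effect calculations above can be reproduced in a few lines of code. This is a minimal sketch that stores the four responses from the worked example under -1/+1 coded levels; the variable and function names are illustrative.

```python
# Responses keyed by coded levels (A = temperature, B = humidity),
# read off the worked example: -1 is the low level, +1 the high level.
responses = {
    (-1, -1): 0,  # low temperature, low humidity
    (+1, -1): 5,  # high temperature, low humidity
    (-1, +1): 2,  # low temperature, high humidity
    (+1, +1): 9,  # high temperature, high humidity
}

def main_effect(responses, factor_index):
    """Mean response at the high level minus mean response at the low level."""
    high = [y for levels, y in responses.items() if levels[factor_index] == +1]
    low = [y for levels, y in responses.items() if levels[factor_index] == -1]
    return sum(high) / len(high) - sum(low) / len(low)

effect_a = main_effect(responses, 0)  # temperature
effect_b = main_effect(responses, 1)  # humidity
print(effect_a, effect_b)  # 6.0 3.0
```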

How to calculate the interaction effects?

The calculation of the interaction effects from a factorial design of experiment is provided in Video 3.

Video 3. How to calculate Two Factors Interaction Effect in Any Design of Experiments DOE Explained Examples .

In contrast to the main effects (the independent effect of each factor), factors in the real world may interact with each other to affect the response. For example, temperature and humidity may interact with each other to affect human comfort.

At the low humidity level (0%), the comfort increases by 5 (= 5 - 0) if the temperature increases from 0 to 75 degrees Fahrenheit. However, at the high humidity level (35%), the comfort increases by 7 (= 9 - 2) if the temperature increases from 0 to 75 degrees Fahrenheit (Figure 4). Therefore, at different levels of the humidity factor, the changes in comfort are not the same even though the temperature change is the same (from 0 to 75 degrees). The effect of temperature (factor A) differs across the levels of factor B (humidity). This phenomenon is called the Interaction Effect, which is expressed by AB.

The interaction effect is half the difference between these two changes: AB = (7-5)/2 = 2/2 = 1. That is, when the temperature (factor A) increases from the low level (0 degrees) to the high level (75 degrees), the gain in comfort is 1 point above its average of 6 at the high level of humidity (factor B) and 1 point below that average at the low level.

Similarly, the interaction effect can be calculated for the humidity factor across the level of temperature factor as follows.

At the low level of A, the effect of B = 2 - 0 = 2; at the high level of A, the effect of B = 9 - 5 = 4 (Figure 5). Therefore, the interaction effect is again half the difference between these two changes: AB = (4-2)/2 = 2/2 = 1.

That is, when the humidity (factor B) increases from the low level (0%) to the high level (35%), the gain in comfort is 1 point above its average of 3 at the high level of temperature (factor A) and 1 point below that average at the low level.

The interaction effect is the same whether it is calculated across the levels of factor A or factor B.

Figure 4. Interaction effects of the temperature (factor A) and the humidity (factor B).

Figure 5. Interaction effects of the temperature (factor A) and the humidity (factor B).
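The claim that the interaction effect is the same from either direction can be verified numerically. The sketch below recomputes it both ways from the four responses in the worked example, stored under -1/+1 coded levels; the variable names are illustrative.

```python
# Responses keyed by (A, B) coded levels: A = temperature, B = humidity.
responses = {
    (-1, -1): 0,
    (+1, -1): 5,
    (-1, +1): 2,
    (+1, +1): 9,
}

# Effect of A (temperature) at each level of B (humidity).
a_at_low_b = responses[(+1, -1)] - responses[(-1, -1)]   # 5 - 0 = 5
a_at_high_b = responses[(+1, +1)] - responses[(-1, +1)]  # 9 - 2 = 7
interaction_via_a = (a_at_high_b - a_at_low_b) / 2

# Effect of B (humidity) at each level of A (temperature).
b_at_low_a = responses[(-1, +1)] - responses[(-1, -1)]   # 2 - 0 = 2
b_at_high_a = responses[(+1, +1)] - responses[(+1, -1)]  # 9 - 5 = 4
interaction_via_b = (b_at_high_a - b_at_low_a) / 2

print(interaction_via_a, interaction_via_b)  # 1.0 1.0
```

If the two simple effects were equal, the difference and therefore the interaction effect would be zero, which corresponds to parallel lines in an interaction plot.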

What is a Strong or No/Absence of Interaction?

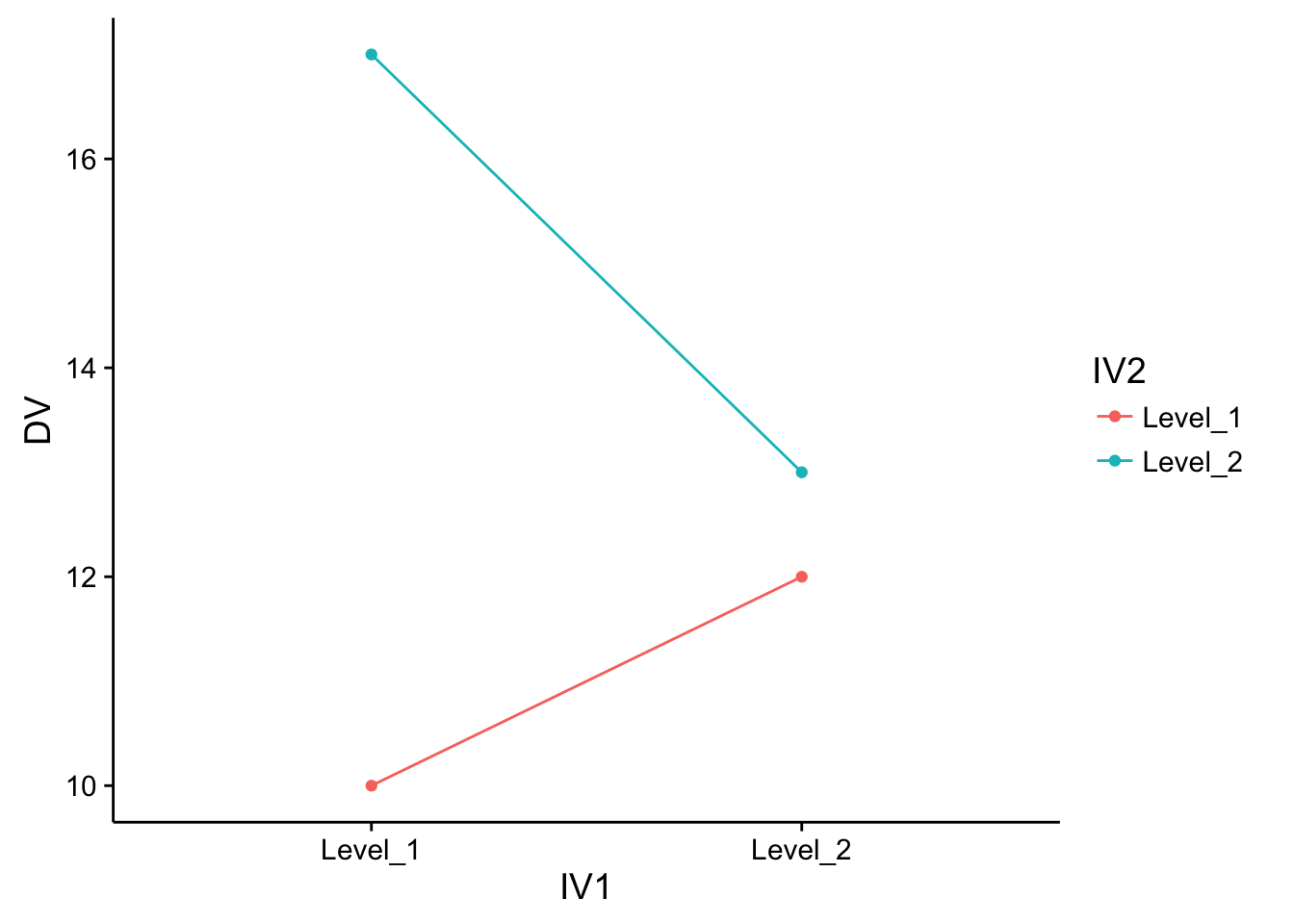

As the interaction effect is comparatively low or small in this example, the figure shows a slight or small interaction (Figure 4 & Figure 5). A strong interaction is depicted in Figure 6. No interaction effect would produce parallel lines shown in Figure 7.

Figure 6. Visualization of a strong interaction effect.

Figure 7. Visualization of no interaction effect.

How to develop the regression equation from the main and the interaction effects?

Video 4 demonstrates the process of developing regression equations from the effect estimates.

Video 4. How to Develop Regression using the Calculated Effects from a Factorial Design of Experiment .

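For quantitative independent variables (factors), an estimated regression equation can be developed from the calculated main effects and the interaction effects. The full regression model with two factors (two levels for each factor) and the interaction effect can be written as Equation 1:

\(y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_{12} x_1 x_2 + \varepsilon\) (Equation 1)

Here \(x_1\) and \(x_2\) are the coded levels of the two factors, \(\beta_0\) is the regression constant, \(\beta_1\) and \(\beta_2\) are the coefficients of the main effects, \(\beta_{12}\) is the coefficient of the interaction, and \(\varepsilon\) is the error term.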

How to estimate the regression coefficients from the main and the interaction effects.

Using the -1/+1 coding system (Figure 8), the average comfort level increases by 6 ((9+5-2-0)/2 = 6) when the temperature is increased by two coded units (from -1 to 0 and then from 0 to +1). Therefore, for a one-unit increase in temperature, the comfort level increases by 3. Because a regression coefficient (a beta) is the increase in the response for each one-unit increase in the factor level, each estimated regression coefficient is one-half of the corresponding estimated effect.

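The regression constant is the average of all four responses, (0+5+2+9)/4 = 4, and each regression coefficient is one-half of the calculated effect: 6/2 = 3 for temperature, 3/2 = 1.5 for humidity, and 1/2 = 0.5 for the interaction. Therefore, the estimated regression equation in coded units can be written as \(\hat{y} = 4 + 3x_1 + 1.5x_2 + 0.5x_1x_2\), where \(x_1\) is the coded temperature and \(x_2\) is the coded humidity.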

Figure 8. How to estimate the regression coefficients from the main and the interaction effects.
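The halving rule can be checked by building the equation from the effects and confirming that it reproduces the four observed responses. This is a minimal sketch in coded (-1/+1) units; the function and variable names are illustrative.

```python
# Responses keyed by (x1, x2) coded levels: x1 = temperature, x2 = humidity.
responses = {
    (-1, -1): 0,
    (+1, -1): 5,
    (-1, +1): 2,
    (+1, +1): 9,
}
n_runs = len(responses)

# The regression constant is the average of all responses.
intercept = sum(responses.values()) / n_runs

# Each effect is the signed sum of responses divided by half the number of runs.
effect_a = sum(x1 * y for (x1, x2), y in responses.items()) / (n_runs / 2)
effect_b = sum(x2 * y for (x1, x2), y in responses.items()) / (n_runs / 2)
effect_ab = sum(x1 * x2 * y for (x1, x2), y in responses.items()) / (n_runs / 2)

# Each regression coefficient is one-half of the corresponding effect.
b1, b2, b12 = effect_a / 2, effect_b / 2, effect_ab / 2

def predict(x1, x2):
    """Predicted comfort at coded temperature x1 and coded humidity x2."""
    return intercept + b1 * x1 + b2 * x2 + b12 * x1 * x2

print(intercept, b1, b2, b12)  # 4.0 3.0 1.5 0.5
for (x1, x2), observed in responses.items():
    print((x1, x2), observed, predict(x1, x2))
```

Because the model has four coefficients and there are four observations, the fitted equation passes exactly through every observed response.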

Video 5. Basic Response Surface Methodology RSM Factorial Design of Experiments DOE Explained With Examples .

The contour plot and the response surface representation of the regression

The contour plot and the response surface are shown in Figure 9 and Figure 10, respectively. The comfort level increases as the temperature increases. The effect of humidity is less pronounced because its estimated coefficient (1.5) is only half that of temperature (3). Moreover, the nearly straight contour lines show that there is very little interaction between temperature and humidity in their effect on human comfort.

Figure 9. Visualization of the contour plot for the effect of temperature and humidity on human comfort.

Figure 10. Visualization of the response surface for the effect of temperature and humidity on human comfort.

How to construct the ANOVA table from the main and the interaction effects?

Video 6 demonstrates the process of constructing the ANOVA table from the main and the interaction effects.

Video 6. How to Construct ANOVA Table from the Effect Estimates from the Factorial Design of Experiments DOE .

An ANOVA table can be constructed from the calculated main and interaction effects. Details will be discussed in “Module 5: 2K factorial design of experiments.” For this particular example, with only two independent variables (factors), two levels for each factor, and no replication, the sum of squares for each effect is simply the square of that effect. For example, the sum of squares for the temperature variable (factor) is the square of the temperature effect of 6, which is 36 (6²). Without replication, no degrees of freedom are left to estimate the experimental error, so the error sum of squares is zero (0) for this example. Therefore, the F-statistics and the p-values cannot be calculated for this example. The ANOVA table can be constructed as in Table 2.

*need replications (experimental error) to calculate these values

Table 2 ANOVA table from the calculated main and interaction effects
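The sums of squares for the comfort example can be computed from the effects and checked against the total sum of squares. The sketch below assumes the unreplicated 2 × 2 layout described above, where each sum of squares equals the squared effect; the names are illustrative.

```python
# Responses keyed by (A, B) coded levels: A = temperature, B = humidity.
responses = {
    (-1, -1): 0,
    (+1, -1): 5,
    (-1, +1): 2,
    (+1, +1): 9,
}

def effect(responses, contrast):
    """Signed sum of responses divided by half the number of runs."""
    total = sum(contrast(a, b) * y for (a, b), y in responses.items())
    return total / (len(responses) / 2)

effects = {
    "A (temperature)": effect(responses, lambda a, b: a),
    "B (humidity)": effect(responses, lambda a, b: b),
    "AB (interaction)": effect(responses, lambda a, b: a * b),
}

# In an unreplicated 2 x 2 design, each sum of squares equals the squared effect.
sums_of_squares = {name: value ** 2 for name, value in effects.items()}

grand_mean = sum(responses.values()) / len(responses)
total_ss = sum((y - grand_mean) ** 2 for y in responses.values())
error_ss = total_ss - sum(sums_of_squares.values())

for name, ss in sums_of_squares.items():
    print(f"{name}: SS = {ss}, df = 1")
print(f"Error: SS = {error_ss}, df = 0")
print(f"Total: SS = {total_ss}, df = {len(responses) - 1}")
```

The three effect sums of squares (36, 9, and 1) add up to the total of 46, leaving nothing for error, which is why no F-statistic can be formed without replication.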

Practice Problem # 1

The effect of water and sunlight on the plant growth rate was measured on a scale of 0 (no growth) to 10 (highest level). Data is provided in Table 2.

- Draw the experimental diagram as in Figure 1.

- Calculate the main effect of factor A. Interpret the result in the context of the problem.

- Calculate the main effect of factor B. Interpret the result in the context of the problem.

- Calculate the interaction effect of factor AB. Interpret the result in the context of the problem.

- Produce the mean plots for the main and interaction effects.

- Produce the estimated regression equation.

- Construct the ANOVA table from the estimates.

Table 2. Factorial Design of Experiments Practice Example


Research Methods in Psychology

5. Factorial Designs

We have usually no knowledge that any one factor will exert its effects independently of all others that can be varied, or that its effects are particularly simply related to variations in these other factors. —Ronald Fisher

In Chapter 1 we briefly described a study conducted by Simone Schnall and her colleagues, in which they found that washing one’s hands leads people to view moral transgressions as less wrong [SBH08] . In a different but related study, Schnall and her colleagues investigated whether feeling physically disgusted causes people to make harsher moral judgments [SHCJ08] . In this experiment, they manipulated participants’ feelings of disgust by testing them in either a clean room or a messy room that contained dirty dishes, an overflowing wastebasket, and a chewed-up pen. They also used a self-report questionnaire to measure the amount of attention that people pay to their own bodily sensations. They called this “private body consciousness”. They measured their primary dependent variable, the harshness of people’s moral judgments, by describing different behaviors (e.g., eating one’s dead dog, failing to return a found wallet) and having participants rate the moral acceptability of each one on a scale of 1 to 7. They also measured some other dependent variables, including participants’ willingness to eat at a new restaurant. Finally, the researchers asked participants to rate their current level of disgust and other emotions. The primary results of this study were that participants in the messy room were in fact more disgusted and made harsher moral judgments than participants in the clean room—but only if they scored relatively high in private body consciousness.

The research designs we have considered so far have been simple—focusing on a question about one variable or about a statistical relationship between two variables. But in many ways, the complex design of this experiment undertaken by Schnall and her colleagues is more typical of research in psychology. Fortunately, we have already covered the basic elements of such designs in previous chapters. In this chapter, we look closely at how and why researchers combine these basic elements into more complex designs. We start with complex experiments—considering first the inclusion of multiple dependent variables and then the inclusion of multiple independent variables. Finally, we look at complex correlational designs.

5.1. Multiple Dependent Variables

5.1.1. Learning Objectives

- Explain why researchers often include multiple dependent variables in their studies.

- Explain what a manipulation check is and when it would be included in an experiment.

Imagine that you have made the effort to find a research topic, review the research literature, formulate a question, design an experiment, obtain approval from the relevant institutional review board (IRB), recruit research participants, and manipulate an independent variable. It would seem almost wasteful to measure a single dependent variable. Even if you are primarily interested in the relationship between an independent variable and one primary dependent variable, there are usually several more questions that you can answer easily by including multiple dependent variables.

5.1.2. Measures of Different Constructs

Often a researcher wants to know how an independent variable affects several distinct dependent variables. For example, Schnall and her colleagues were interested in how feeling disgusted affects the harshness of people’s moral judgments, but they were also curious about how disgust affects other variables, such as people’s willingness to eat in a restaurant. As another example, researcher Susan Knasko was interested in how different odors affect people’s behavior [Kna92] . She conducted an experiment in which the independent variable was whether participants were tested in a room with no odor or in one scented with lemon, lavender, or dimethyl sulfide (which has a cabbage-like smell). Although she was primarily interested in how the odors affected people’s creativity, she was also curious about how they affected people’s moods and perceived health—and it was a simple enough matter to measure these dependent variables too. Although she found that creativity was unaffected by the ambient odor, she found that people’s moods were lower in the dimethyl sulfide condition, and that their perceived health was greater in the lemon condition.

When an experiment includes multiple dependent variables, there is again a possibility of carryover effects. For example, it is possible that measuring participants’ moods before measuring their perceived health could affect their perceived health or that measuring their perceived health before their moods could affect their moods. So the order in which multiple dependent variables are measured becomes an issue. One approach is to measure them in the same order for all participants—usually with the most important one first so that it cannot be affected by measuring the others. Another approach is to counterbalance, or systematically vary, the order in which the dependent variables are measured.
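Counterbalancing the order of several dependent measures can be sketched by listing every possible order and spreading participants evenly across them. The measure names come from the Knasko example; the participant labels and the simple rotation scheme are assumptions for illustration, and in practice participants would be assigned to orders at random.

```python
from itertools import permutations

# Dependent measures from the odor example.
measures = ["creativity", "mood", "perceived_health"]

# Full counterbalancing uses every possible order: 3! = 6 orders.
orders = list(permutations(measures))

participants = [f"P{i:02d}" for i in range(1, 13)]  # hypothetical participant IDs

# Rotate through the orders so each one is used equally often.
schedule = {person: orders[i % len(orders)] for i, person in enumerate(participants)}

for person, order in schedule.items():
    print(person, " -> ".join(order))
```

With many dependent variables the number of orders grows factorially, so a subset of orders is often used instead of the full set.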

5.1.3. Manipulation Checks

When the independent variable is a construct that can only be manipulated indirectly—such as emotions and other internal states—an additional measure of that independent variable is often included as a manipulation check. This is done to confirm that the independent variable was, in fact, successfully manipulated. For example, Schnall and her colleagues had their participants rate their level of disgust to be sure that those in the messy room actually felt more disgusted than those in the clean room.

Manipulation checks are usually done at the end of the procedure to be sure that the effect of the manipulation lasted throughout the entire procedure and to avoid calling unnecessary attention to the manipulation. Manipulation checks become especially important when the manipulation of the independent variable turns out to have no effect on the dependent variable. Imagine, for example, that you exposed participants to happy or sad movie music—intending to put them in happy or sad moods—but you found that this had no effect on the number of happy or sad childhood events they recalled. This could be because being in a happy or sad mood has no effect on memories for childhood events. But it could also be that the music was ineffective at putting participants in happy or sad moods. A manipulation check, in this case, a measure of participants’ moods, would help resolve this uncertainty. If it showed that you had successfully manipulated participants’ moods, then it would appear that there is indeed no effect of mood on memory for childhood events. But if it showed that you did not successfully manipulate participants’ moods, then it would appear that you need a more effective manipulation to answer your research question.

5.1.4. Measures of the Same Construct ¶

Another common approach to including multiple dependent variables is to operationalize and measure the same construct, or closely related ones, in different ways. Imagine, for example, that a researcher conducts an experiment on the effect of daily exercise on stress. The dependent variable, stress, is a construct that can be operationalized in different ways. For this reason, the researcher might have participants complete the paper-and-pencil Perceived Stress Scale and also measure their levels of the stress hormone cortisol. This is an example of the use of converging operations. If the researcher finds that the different measures are affected by exercise in the same way, then he or she can be confident in the conclusion that exercise affects the more general construct of stress.

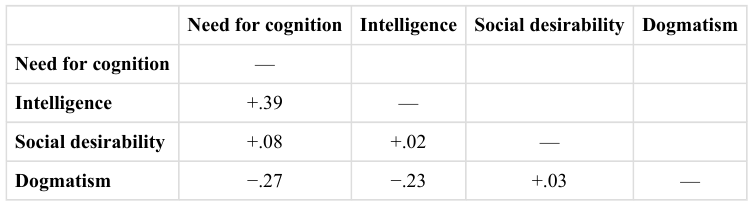

When multiple dependent variables are different measures of the same construct, especially if they are measured on the same scale, researchers have the option of combining them into a single measure of that construct. Recall that Schnall and her colleagues were interested in the harshness of people’s moral judgments. To measure this construct, they presented their participants with seven different scenarios describing morally questionable behaviors and asked them to rate the moral acceptability of each one. Although the researchers could have treated each of the seven ratings as a separate dependent variable, they combined them into a single dependent variable by computing their mean.

When researchers combine dependent variables in this way, they are treating them collectively as a multiple-response measure of a single construct. The advantage of this is that multiple-response measures are generally more reliable than single-response measures. However, it is important to check that the individual dependent variables are correlated with each other by computing an internal consistency measure such as Cronbach’s \(\alpha\). If they are not correlated with each other (that is, if they have poor internal consistency), then it does not make sense to combine them into a measure of a single construct, and they should be treated as separate dependent variables.
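As a rough illustration of this check, the sketch below computes Cronbach’s \(\alpha\) with the standard formula \(\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i s_i^2}{s_{total}^2}\right)\), where \(k\) is the number of items, \(s_i^2\) is the variance of item \(i\), and \(s_{total}^2\) is the variance of participants’ summed scores. The ratings are invented for illustration (they are not data from Schnall and colleagues), and the function name `cronbach_alpha` is our own.

```python
from statistics import variance

def cronbach_alpha(ratings):
    """ratings: one row per participant, each row holding that participant's k item scores."""
    k = len(ratings[0])
    items = list(zip(*ratings))                      # regroup scores by item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in ratings])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)

# Hypothetical data: 5 participants rating 3 scenarios (invented numbers)
ratings = [
    [2, 3, 3],
    [4, 4, 5],
    [1, 2, 2],
    [5, 5, 4],
    [3, 3, 4],
]

alpha = cronbach_alpha(ratings)
print(f"Cronbach's alpha: {alpha:.2f}")

# If alpha is acceptably high, the items can be averaged into one score per participant
combined = [sum(row) / len(row) for row in ratings]
print(combined)
```

What counts as an acceptable value of \(\alpha\) is a judgment call that depends on the research context; the sketch only shows the arithmetic.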

5.1.5. Key Takeaways ¶

Researchers in psychology often include multiple dependent variables in their studies. The primary reason is that this easily allows them to answer more research questions with minimal additional effort.

When an independent variable is a construct that is manipulated indirectly, it is a good idea to include a manipulation check. This is a measure of the independent variable typically given at the end of the procedure to confirm that it was successfully manipulated.

Multiple measures of the same construct can be analyzed separately or combined to produce a single multiple-item measure of that construct. The latter approach requires that the measures taken together have good internal consistency.

5.1.6. Exercises ¶

Practice: List three independent variables for which it would be good to include a manipulation check. List three others for which a manipulation check would be unnecessary. Hint: Consider whether there is any ambiguity concerning whether the manipulation will have its intended effect.

Practice: Imagine a study in which the independent variable is whether the room where participants are tested is warm (30 °C) or cool (12 °C). List three dependent variables that you might treat as measures of separate variables. List three more that you might combine and treat as measures of the same underlying construct.

5.2. Multiple Independent Variables ¶

5.2.1. Learning Objectives ¶

Explain why researchers often include multiple independent variables in their studies.

Define factorial design, and use a factorial design table to represent and interpret simple factorial designs.

Distinguish between main effects and interactions, and recognize and give examples of each.

Sketch and interpret bar graphs and line graphs showing the results of studies with simple factorial designs.

Just as it is common for studies in psychology to include multiple dependent variables, it is also common for them to include multiple independent variables. Schnall and her colleagues studied the effect of both disgust and private body consciousness in the same study. The tendency to include multiple independent variables in one experiment is further illustrated by the following titles of actual research articles published in professional journals:

The Effects of Temporal Delay and Orientation on Haptic Object Recognition

Opening Closed Minds: The Combined Effects of Intergroup Contact and Need for Closure on Prejudice

Effects of Expectancies and Coping on Pain-Induced Intentions to Smoke

The Effect of Age and Divided Attention on Spontaneous Recognition

The Effects of Reduced Food Size and Package Size on the Consumption Behavior of Restrained and Unrestrained Eaters

Just as including multiple dependent variables in the same experiment allows one to answer more research questions, so too does including multiple independent variables in the same experiment. For example, instead of conducting one study on the effect of disgust on moral judgment and another on the effect of private body consciousness on moral judgment, Schnall and colleagues were able to conduct one study that addressed both variables. But including multiple independent variables also allows the researcher to answer questions about whether the effect of one independent variable depends on the level of another. This is referred to as an interaction between the independent variables. Schnall and her colleagues, for example, observed an interaction between disgust and private body consciousness because the effect of disgust depended on whether participants were high or low in private body consciousness. As we will see, interactions are often among the most interesting results in psychological research.

5.2.2. Factorial Designs ¶

By far the most common approach to including multiple independent variables in an experiment is the factorial design. In a factorial design, each level of one independent variable (which can also be called a factor) is combined with each level of the others to produce all possible combinations. Each combination, then, becomes a condition in the experiment. Imagine, for example, an experiment on the effect of cell phone use (yes vs. no) and time of day (day vs. night) on driving ability. This is shown in the factorial design table in Figure 5.1. The columns of the table represent cell phone use, and the rows represent time of day. The four cells of the table represent the four possible combinations or conditions: using a cell phone during the day, not using a cell phone during the day, using a cell phone at night, and not using a cell phone at night. This particular design is referred to as a 2 x 2 (read “two-by-two”) factorial design because it combines two variables, each of which has two levels. If one of the independent variables had a third level (e.g., using a hand-held cell phone, using a hands-free cell phone, and not using a cell phone), then it would be a 3 x 2 factorial design, and there would be six distinct conditions. Notice that the number of possible conditions is the product of the numbers of levels. A 2 x 2 factorial design has four conditions, a 3 x 2 factorial design has six conditions, a 4 x 5 factorial design would have 20 conditions, and so on.
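One way to see how the conditions of a factorial design arise is to generate them. The following is a minimal sketch for the cell phone example; the variable names (`factors`, `conditions`) are our own, and the design itself is the hypothetical one described above.

```python
from itertools import product
from math import prod

# Each factor (independent variable) and its levels
factors = {
    "cell_phone": ["yes", "no"],
    "time_of_day": ["day", "night"],
}

# Fully crossing the factors: every level of one combined with every level of the other
conditions = list(product(*factors.values()))

# The number of conditions is the product of the numbers of levels
n_conditions = prod(len(levels) for levels in factors.values())

for condition in conditions:
    print(dict(zip(factors, condition)))
print("Number of conditions:", n_conditions)
```

Adding a third level to `cell_phone` (for example, hand-held, hands-free, and none) would turn this into the 3 x 2 design with six conditions.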

Fig. 5.1 Factorial Design Table Representing a 2 x 2 Factorial Design ¶

In principle, factorial designs can include any number of independent variables with any number of levels. For example, an experiment could include the type of psychotherapy (cognitive vs. behavioral), the length of the psychotherapy (2 weeks vs. 2 months), and the sex of the psychotherapist (female vs. male). This would be a 2 x 2 x 2 factorial design and would have eight conditions. Figure 5.2 shows one way to represent this design. In practice, it is unusual for there to be more than three independent variables with more than two or three levels each.

This is for at least two reasons. First, the number of conditions can quickly become unmanageable. For example, adding a fourth independent variable with three levels (e.g., therapist experience: low vs. medium vs. high) to the current example would make it a 2 x 2 x 2 x 3 factorial design with 24 distinct conditions. Second, the number of participants required to populate all of these conditions (while maintaining a reasonable ability to detect a real underlying effect) can render the design unfeasible (for more information, see the discussion about the importance of adequate statistical power in Chapter 13). As a result, in the remainder of this section we will focus on designs with two independent variables. The general principles discussed here extend in a straightforward way to more complex factorial designs.

Fig. 5.2 Factorial Design Table Representing a 2 x 2 x 2 Factorial Design ¶

5.2.3. Assigning Participants to Conditions ¶

Recall that in a simple between-subjects design, each participant is tested in only one condition. In a simple within-subjects design, each participant is tested in all conditions. In a factorial experiment, the decision to take the between-subjects or within-subjects approach must be made separately for each independent variable. In a between-subjects factorial design, all of the independent variables are manipulated between subjects. For example, all participants could be tested either while using a cell phone or while not using a cell phone and either during the day or during the night. This would mean that each participant was tested in one and only one condition. In a within-subjects factorial design, all of the independent variables are manipulated within subjects. All participants could be tested both while using a cell phone and while not using a cell phone and both during the day and during the night. This would mean that each participant was tested in all conditions. The advantages and disadvantages of these two approaches are the same as those discussed in Chapter 4. The between-subjects design is conceptually simpler, avoids carryover effects, and minimizes the time and effort of each participant. The within-subjects design is more efficient for the researcher and helps to control extraneous variables.

It is also possible to manipulate one independent variable between subjects and another within subjects. This is called a mixed factorial design. For example, a researcher might choose to treat cell phone use as a within-subjects factor by testing the same participants both while using a cell phone and while not using a cell phone (while counterbalancing the order of these two conditions). But he or she might choose to treat time of day as a between-subjects factor by testing each participant either during the day or during the night (perhaps because this only requires them to come in for testing once). Thus each participant in this mixed design would be tested in two of the four conditions.

Regardless of whether the design is between subjects, within subjects, or mixed, the actual assignment of participants to conditions or orders of conditions is typically done randomly.
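For a between-subjects factorial design, random assignment can be sketched as follows. This is only one of several reasonable procedures: it fills a list with equal numbers of slots per condition and shuffles it, so that cell sizes stay equal. The function name `assign_between_subjects`, the sample size of 20, and the seed are assumptions made for the example.

```python
import random
from itertools import product

def assign_between_subjects(participant_ids, factors, seed=None):
    """Randomly assign each participant to exactly one condition, keeping cell sizes as equal as possible."""
    rng = random.Random(seed)
    conditions = list(product(*factors.values()))
    n = len(participant_ids)
    repeats = -(-n // len(conditions))        # ceiling division
    slots = (conditions * repeats)[:n]        # equal numbers of slots per condition
    rng.shuffle(slots)                        # random order of slots
    return dict(zip(participant_ids, slots))

factors = {"cell_phone": ["yes", "no"], "time_of_day": ["day", "night"]}
assignment = assign_between_subjects(range(1, 21), factors, seed=42)

for participant, condition in assignment.items():
    print(participant, condition)
```

In a within-subjects or mixed design, the same idea would be applied to the order of conditions rather than to the conditions themselves.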

5.2.4. Non-manipulated Independent Variables ¶

In many factorial designs, one of the independent variables is a non-manipulated independent variable. The researcher measures it but does not manipulate it. The study by Schnall and colleagues is a good example. One independent variable was disgust, which the researchers manipulated by testing participants in a clean room or a messy room. The other was private body consciousness, a variable which the researchers simply measured. Another example is a study by Halle Brown and colleagues in which participants were exposed to several words that they were later asked to recall [BKD+99] . The manipulated independent variable was the type of word. Some were negative, health-related words (e.g., tumor, coronary), and others were not health related (e.g., election, geometry). The non-manipulated independent variable was whether participants were high or low in hypochondriasis (excessive concern with ordinary bodily symptoms). Results from this study suggested that participants high in hypochondriasis were better than those low in hypochondriasis at recalling the health-related words, but that they were no better at recalling the non-health-related words.

Such studies are extremely common, and there are several points worth making about them. First, non-manipulated independent variables are usually participant characteristics (private body consciousness, hypochondriasis, self-esteem, and so on), and as such they are, by definition, between-subject factors. For example, people are either low in hypochondriasis or high in hypochondriasis; they cannot be in both of these conditions. Second, such studies are generally considered to be experiments as long as at least one independent variable is manipulated, regardless of how many non-manipulated independent variables are included. Third, it is important to remember that causal conclusions can only be drawn about the manipulated independent variable. For example, Schnall and her colleagues were justified in concluding that disgust affected the harshness of their participants’ moral judgments because they manipulated that variable and randomly assigned participants to the clean or messy room. But they would not have been justified in concluding that participants’ private body consciousness affected the harshness of their participants’ moral judgments because they did not manipulate that variable. It could be, for example, that having a strict moral code and a heightened awareness of one’s body are both caused by some third variable (e.g., neuroticism). Thus it is important to be aware of which variables in a study are manipulated and which are not.

5.2.5. Graphing the Results of Factorial Experiments ¶

The results of factorial experiments with two independent variables can be graphed by representing one independent variable on the x-axis and representing the other by using different kinds of bars or lines. (The y-axis is always reserved for the dependent variable.)

Fig. 5.3 Two ways to plot the results of a factorial experiment with two independent variables ¶

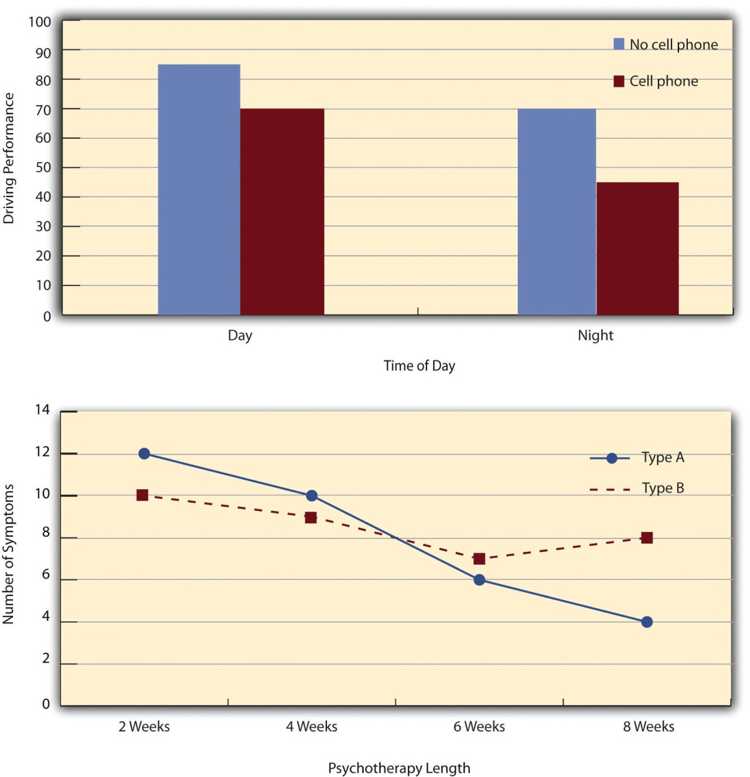

Figure 5.3 shows results for two hypothetical factorial experiments. The top panel shows the results of a 2 x 2 design. Time of day (day vs. night) is represented by different locations on the x-axis, and cell phone use (no vs. yes) is represented by different-colored bars. It would also be possible to represent cell phone use on the x-axis and time of day as different-colored bars. The choice comes down to which way seems to communicate the results most clearly. The bottom panel of Figure 5.3 shows the results of a 4 x 2 design in which one of the variables is quantitative. This variable, psychotherapy length, is represented along the x-axis, and the other variable (psychotherapy type) is represented by differently formatted lines. This is a line graph rather than a bar graph because the variable on the x-axis is quantitative with a small number of distinct levels. Line graphs are also appropriate when representing measurements made over a time interval (also referred to as time series information) on the x-axis.

5.2.6. Main Effects and Interactions ¶

In factorial designs, there are two kinds of results that are of interest: main effects and interactions. A main effect is the statistical relationship between one independent variable and a dependent variable, averaging across the levels of the other independent variable(s). Thus there is one main effect to consider for each independent variable in the study. The top panel of Figure 5.3 shows a main effect of cell phone use because driving performance was better, on average, when participants were not using cell phones than when they were. The blue bars are, on average, higher than the red bars. It also shows a main effect of time of day because driving performance was better during the day than during the night, both when participants were using cell phones and when they were not. Main effects are independent of each other in the sense that whether or not there is a main effect of one independent variable says nothing about whether or not there is a main effect of the other. The bottom panel of Figure 5.3, for example, shows a clear main effect of psychotherapy length. The longer the psychotherapy, the better it worked.

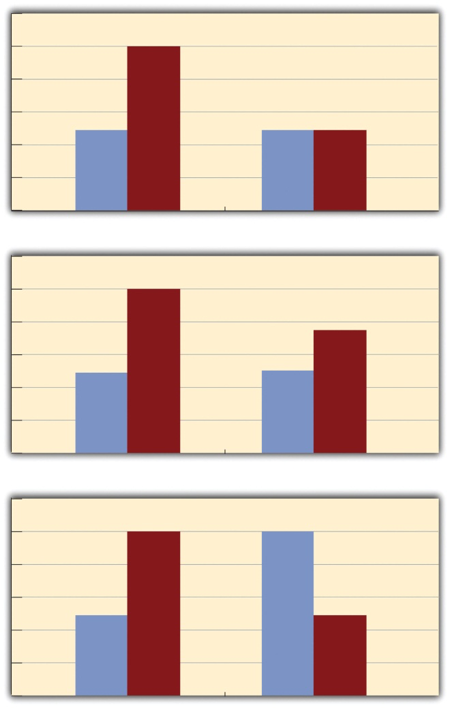

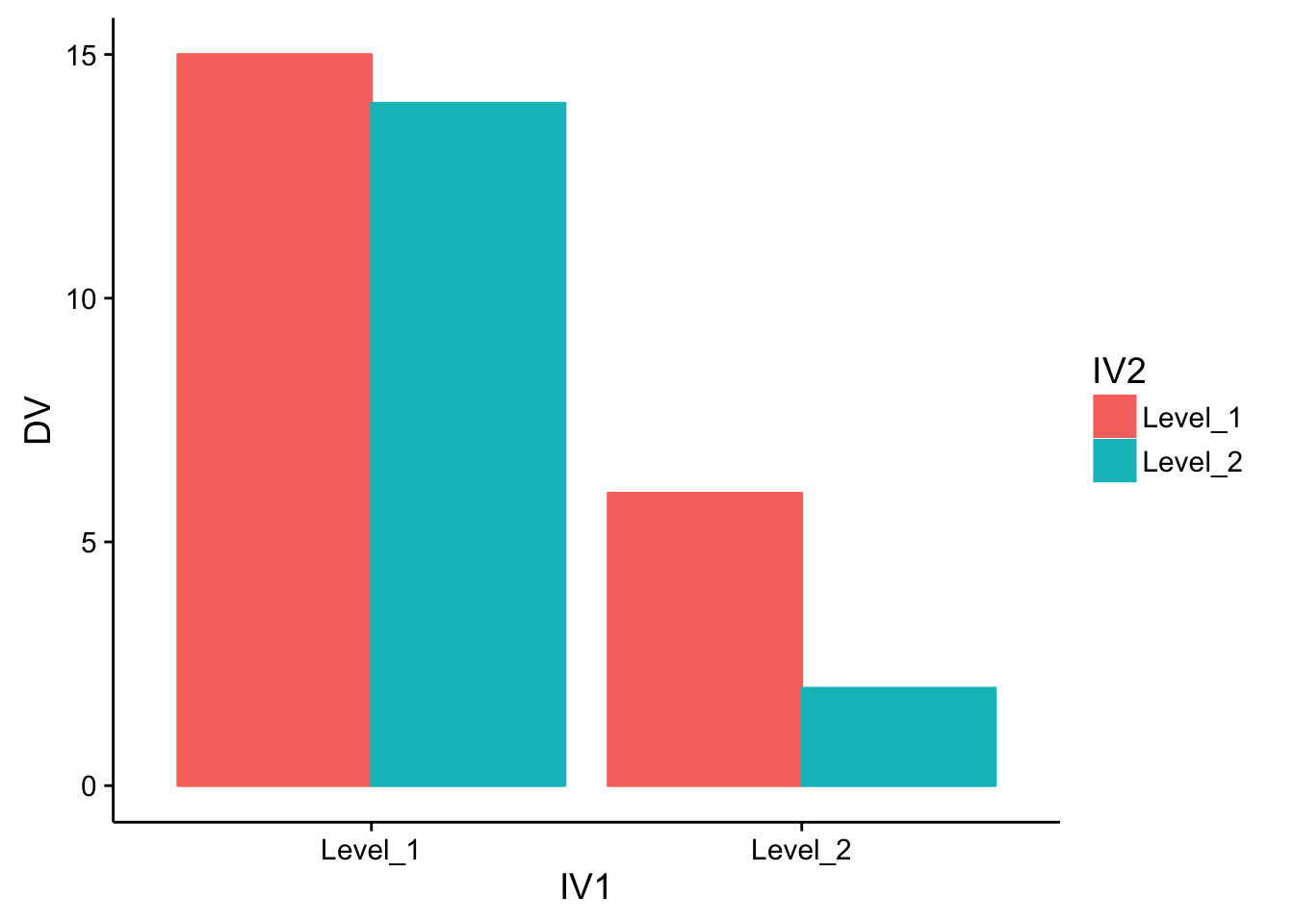

Fig. 5.4 Bar graphs showing three types of interactions. In the top panel, one independent variable has an effect at one level of the second independent variable but not at the other. In the middle panel, one independent variable has a stronger effect at one level of the second independent variable than at the other. In the bottom panel, one independent variable has the opposite effect at one level of the second independent variable than at the other. ¶

There is an interaction effect (or just “interaction”) when the effect of one independent variable depends on the level of another. Although this might seem complicated, you already have an intuitive understanding of interactions. It probably would not surprise you, for example, to hear that the effect of receiving psychotherapy is stronger among people who are highly motivated to change than among people who are not motivated to change. This is an interaction because the effect of one independent variable (whether or not one receives psychotherapy) depends on the level of another (motivation to change). Schnall and her colleagues also demonstrated an interaction because the effect of whether the room was clean or messy on participants’ moral judgments depended on whether the participants were low or high in private body consciousness. If they were high in private body consciousness, then those in the messy room made harsher judgments. If they were low in private body consciousness, then whether the room was clean or messy did not matter.

The effect of one independent variable can depend on the level of the other in several different ways. This is shown in Figure 5.5 .

Fig. 5.5 Line Graphs Showing Three Types of Interactions. In the top panel, one independent variable has an effect at one level of the second independent variable but not at the other. In the middle panel, one independent variable has a stronger effect at one level of the second independent variable than at the other. In the bottom panel, one independent variable has the opposite effect at one level of the second independent variable than at the other. ¶

In the top panel, independent variable “B” has an effect at level 1 of independent variable “A” but no effect at level 2 of independent variable “A” (much like the study of Schnall in which there was an effect of disgust for those high in private body consciousness but not for those low in private body consciousness). In the middle panel, independent variable “B” has a stronger effect at level 1 of independent variable “A” than at level 2. This is like the hypothetical driving example where there was a stronger effect of using a cell phone at night than during the day. In the bottom panel, independent variable “B” again has an effect at both levels of independent variable “A”, but the effects are in opposite directions. This is called a crossover interaction. One example of a crossover interaction comes from a study by Kathy Gilliland on the effect of caffeine on the verbal test scores of introverts and extraverts [Gil80]. Introverts perform better than extraverts when they have not ingested any caffeine. But extraverts perform better than introverts when they have ingested 4 mg of caffeine per kilogram of body weight.
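The three patterns can be told apart by comparing the effect of “B” at each level of “A” (sometimes called the simple effects). The sketch below does this for three sets of invented cell means chosen to mimic the three panels; it compares raw means only and ignores sampling error, so it illustrates the logic and is not a statistical test.

```python
def simple_effects(means):
    """means[(a_level, b_level)] is a cell mean; returns the effect of B (b2 - b1) at each level of A."""
    return {a: means[(a, "b2")] - means[(a, "b1")] for a in ("a1", "a2")}

def describe(means):
    effect_at_a1, effect_at_a2 = simple_effects(means).values()
    if effect_at_a1 == effect_at_a2:
        return "no interaction"
    if effect_at_a1 == 0 or effect_at_a2 == 0:
        return "effect at one level only"
    if effect_at_a1 * effect_at_a2 < 0:
        return "crossover (opposite effects)"
    return "stronger effect at one level"

# Hypothetical cell means (invented numbers), one set per panel
top = {("a1", "b1"): 10, ("a1", "b2"): 20, ("a2", "b1"): 10, ("a2", "b2"): 10}
middle = {("a1", "b1"): 10, ("a1", "b2"): 30, ("a2", "b1"): 10, ("a2", "b2"): 15}
bottom = {("a1", "b1"): 10, ("a1", "b2"): 20, ("a2", "b1"): 20, ("a2", "b2"): 10}

for name, means in [("top", top), ("middle", middle), ("bottom", bottom)]:
    print(name, simple_effects(means), describe(means))
```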

In many studies, the primary research question is about an interaction. The study by Brown and her colleagues was inspired by the idea that people with hypochondriasis are especially attentive to any negative health-related information. This led to the hypothesis that people high in hypochondriasis would recall negative health-related words more accurately than people low in hypochondriasis but recall non-health-related words about the same as people low in hypochondriasis. And this is exactly what happened in this study.

5.2.7. Key Takeaways ¶

Researchers often include multiple independent variables in their experiments. The most common approach is the factorial design, in which each level of one independent variable is combined with each level of the others to create all possible conditions.

In a factorial design, the main effect of an independent variable is its overall effect averaged across all other independent variables. There is one main effect for each independent variable.

There is an interaction between two independent variables when the effect of one depends on the level of the other. Some of the most interesting research questions and results in psychology are specifically about interactions.

5.2.8. Exercises ¶

Practice: Return to the five article titles presented at the beginning of this section. For each one, identify the independent variables and the dependent variable.

Practice: Create a factorial design table for an experiment on the effects of room temperature and noise level on performance on the MCAT. Be sure to indicate whether each independent variable will be manipulated between-subjects or within-subjects and explain why.

Practice: Sketch 8 different bar graphs to depict each of the following possible results in a 2 x 2 factorial experiment:

No main effect of A; no main effect of B; no interaction

Main effect of A; no main effect of B; no interaction

No main effect of A; main effect of B; no interaction

Main effect of A; main effect of B; no interaction

Main effect of A; main effect of B; interaction

Main effect of A; no main effect of B; interaction

No main effect of A; main effect of B; interaction

No main effect of A; no main effect of B; interaction

5.3. Factorial designs: Round 2 ¶

Factorial designs require the experimenter to manipulate at least two independent variables. Consider the light-switch example from earlier. Imagine you are trying to figure out which of two light switches turns on a light. The dependent variable is the light (we measure whether it is on or off). The first independent variable is light switch #1, and it has two levels, up or down. The second independent variable is light switch #2, and it also has two levels, up or down. When there are two independent variables, each with two levels, there are four total conditions that can be tested. We can describe these four conditions in a 2x2 table.

| | Switch 1 Up | Switch 1 Down |
|---|---|---|
| Switch 2 Up | Light ? | Light ? |
| Switch 2 Down | Light ? | Light ? |

This kind of design has a special property that makes it a factorial design. That is, the levels of each independent variable are each manipulated across the levels of the other independent variable. In other words, we manipulate whether switch #1 is up or down when switch #2 is up, and when switch #2 is down. Another term for this property of factorial designs is “fully-crossed”.

It is possible to conduct experiments with more than one independent variable that are not fully-crossed, and so are not factorial designs. This would mean that the levels of one independent variable are not necessarily manipulated at each level of the other independent variables. These kinds of designs are sometimes called unbalanced designs, and they are not as common as fully-factorial designs. An example of an unbalanced design would be the following design with only 3 conditions:

| | Switch 1 Up | Switch 1 Down |
|---|---|---|
| Switch 2 Up | Light ? | Light ? |
| Switch 2 Down | Light ? | NOT MEASURED |
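Whether a design is fully-crossed can be checked by comparing the conditions that were actually tested against all possible combinations of levels. Here is a small sketch for the three-condition light switch example; the helper name `missing_conditions` is our own.

```python
from itertools import product

def missing_conditions(levels_by_factor, tested):
    """Return the combinations of levels that were never tested (empty set if fully crossed)."""
    all_cells = set(product(*levels_by_factor.values()))
    return all_cells - set(tested)

levels = {"switch_1": ["up", "down"], "switch_2": ["up", "down"]}

# Conditions as (switch_1, switch_2); the (down, down) cell was not measured
tested = [("up", "up"), ("down", "up"), ("up", "down")]

missing = missing_conditions(levels, tested)
print("Fully crossed:", len(missing) == 0)
print("Missing conditions:", missing)
```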

Factorial designs are often described using notation such as AxB, where A indicates the number of levels for the first independent variable, and B indicates the number of levels for the second independent variable. The fully-crossed version of the 2-light switch experiment would be called a 2x2 factorial design. This notation is convenient because by multiplying the numbers in the notation we can find the number of conditions in the design. For example, 2x2 = 4 conditions.

More complicated factorial designs have more independent variables and more levels. We use the same notation to describe these designs. Each number represents the number of levels for one of the independent variables, and the number of numbers represents the number of variables. So, a 2x2x2 design has three independent variables, and each one has 2 levels, for a total of 2x2x2=8 conditions. A 3x3 design has two independent variables, each with three levels, for a total of 9 conditions. Designs can get very complicated, such as a 5x3x6x2x7 experiment, with five independent variables, each with differing numbers of levels, for a total of 1260 conditions. If you are considering a complicated design like that one, you might want to consider how to simplify it.
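The arithmetic behind the notation is easy to check with a few lines of code. The sketch below reads a design written as, say, `"2x2x2"` and reports the number of independent variables and the number of conditions; the function name `count_conditions` is our own.

```python
from math import prod

def count_conditions(notation):
    """Turn a design such as '2x2x2' into (number of independent variables, number of conditions)."""
    levels = [int(n) for n in notation.lower().split("x")]
    return len(levels), prod(levels)

for design in ["2x2", "2x2x2", "3x3", "5x3x6x2x7"]:
    n_ivs, n_conditions = count_conditions(design)
    print(f"{design}: {n_ivs} independent variables, {n_conditions} conditions")
```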

5.3.1. 2x2 Factorial designs ¶

For simplicity, we will focus mainly on 2x2 factorial designs. As with simple designs with only one independent variable, factorial designs have the same basic empirical question. Did manipulation of the independent variables cause changes in the dependent variables? However, 2x2 designs have more than one manipulation, so there is more than one way that the dependent variable can change. So, we end up asking the basic empirical question more than once.

More specifically, the analysis of factorial designs is split into two parts: main effects and interactions. Main effects occur when the manipulation of one independent variable causes a change in the dependent variable. In a 2x2 design, there are two independent variables, so there are two possible main effects: the main effect of independent variable 1, and the main effect of independent variable 2. An interaction occurs when the effect of one independent variable depends on the levels of the other independent variable. My experience in teaching the concepts of main effects and interactions is that they are confusing. So, I expect that these definitions will not be very helpful at first. Although they are clear and precise, they only become helpful as definitions after you understand the concepts, so they are not useful for explaining the concepts. To explain the concepts we will go through several different kinds of examples.

To briefly add to the confusion, or perhaps to illustrate why these two concepts can be confusing, we will look at the eight possible outcomes that could occur in a 2x2 factorial experiment.

| Possible outcome | IV1 main effect | IV2 main effect | Interaction |
|---|---|---|---|
| 1 | yes | yes | yes |
| 2 | yes | no | yes |
| 3 | no | yes | yes |
| 4 | no | no | yes |
| 5 | yes | yes | no |
| 6 | yes | no | no |
| 7 | no | yes | no |
| 8 | no | no | no |

In the table, a yes means that there was a statistically significant difference for that main effect or interaction, and a no means that there was not a statistically significant difference. As you can see, just by adding one more independent variable, the number of possible outcomes quickly becomes more complicated. When you conduct a 2x2 design, the task for analysis is to determine which of the 8 possibilities occurred, and then explain the patterns for each of the effects that occurred. That’s a lot of explaining to do.
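The count of eight comes from the fact that each of the three effects (two main effects and one interaction) can independently be present or absent, giving 2 x 2 x 2 = 8 combinations. A short sketch that enumerates them (in a different order from the table) is:

```python
from itertools import product

effects = ["IV1 main effect", "IV2 main effect", "Interaction"]

# Every yes/no pattern across the three effects
outcomes = list(product(["yes", "no"], repeat=len(effects)))

for number, outcome in enumerate(outcomes, start=1):
    print(number, dict(zip(effects, outcome)))
print("Total possible outcomes:", len(outcomes))
```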

5.3.2. Main effects ¶

Main effects occur when the levels of an independent variable cause change in the measurement or dependent variable. There is one possible main effect for each independent variable in the design. When we find that the independent variable did influence the dependent variable, then we say there was a main effect. When we find that the independent variable did not influence the dependent variable, then we say there was no main effect.

The simplest way to understand a main effect is to pretend that the other independent variables do not exist. If you do this, then you simply have a single-factor design, and you are asking whether that single factor caused change in the measurement. For a 2x2 experiment, you do this twice, once for each independent variable.

Let’s consider a silly example to illustrate an important property of main effects. In this experiment the dependent variable will be height in inches. The independent variables will be shoes and hats. The shoes independent variable will have two levels: wearing shoes vs. no shoes. The hats independent variable will have two levels: wearing a hat vs. not wearing a hat. The experimenter will provide the shoes and hats. The shoes add 1 inch to a person’s height, and the hats add 6 inches to a person’s height. Further imagine that we conduct a within-subjects design, so we measure each person’s height in each of the four conditions. Before we look at some example data, the findings from this experiment should be pretty obvious. People will be 1 inch taller when they wear shoes, and 6 inches taller when they wear a hat. We see this in the example data from 10 subjects presented below:

| NoShoes-NoHat | Shoes-NoHat | NoShoes-Hat | Shoes-Hat |
|---|---|---|---|
| 57 | 58 | 63 | 64 |
| 58 | 59 | 64 | 65 |
| 58 | 59 | 64 | 65 |
| 58 | 59 | 64 | 65 |
| 59 | 60 | 65 | 66 |
| 58 | 59 | 64 | 65 |
| 57 | 58 | 63 | 64 |
| 59 | 60 | 65 | 66 |
| 57 | 58 | 63 | 64 |
| 58 | 59 | 64 | 65 |

The mean heights in each condition are:

| Condition | Mean |
|---|---|
| NoShoes-NoHat | 57.9 |
| Shoes-NoHat | 58.9 |
| NoShoes-Hat | 63.9 |
| Shoes-Hat | 64.9 |

To find the main effect of the shoes manipulation we want to find the mean height in the no shoes condition, and compare it to the mean height of the shoes condition. To do this, we collapse , or average over the observations in the hat conditions. For example, looking only at the no shoes vs. shoes conditions we see the following averages for each subject.

| NoShoes | Shoes |
|---|---|
| 60 | 61 |
| 61 | 62 |
| 61 | 62 |
| 61 | 62 |
| 62 | 63 |
| 61 | 62 |
| 60 | 61 |
| 62 | 63 |
| 60 | 61 |
| 61 | 62 |

The group means are:

| Shoes | Mean |
|---|---|
| No | 60.9 |
| Yes | 61.9 |

As expected, we see that the average height is 1 inch taller when subjects wear shoes vs. do not wear shoes. So, the main effect of wearing shoes is to add 1 inch to a person’s height.

We can do the very same thing to find the main effect of hats. Except in this case, we find the average heights in the no hat vs. hat conditions by averaging over the shoe variable.

| NoHat | Hat |
|---|---|
| 57.5 | 63.5 |
| 58.5 | 64.5 |
| 58.5 | 64.5 |
| 58.5 | 64.5 |
| 59.5 | 65.5 |
| 58.5 | 64.5 |
| 57.5 | 63.5 |
| 59.5 | 65.5 |
| 57.5 | 63.5 |
| 58.5 | 64.5 |

| Hat | Mean |
|---|---|
| No | 58.4 |
| Yes | 64.4 |

As expected, we see that the average height is 6 inches taller when the subjects wear a hat vs. do not wear a hat. So, the main effect of wearing hats is to add 6 inches to a person’s height.
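The collapsing procedure used for both main effects can also be written out in code. The sketch below uses the heights from the table of raw data above, computes the four condition means, and then averages over the other variable to get each main effect. The names `heights`, `cell_means`, and `marginal_mean` are our own choices for this illustration.

```python
from statistics import mean

# Heights in inches for the 10 subjects, keyed by (shoes level, hat level)
heights = {
    ("no_shoes", "no_hat"): [57, 58, 58, 58, 59, 58, 57, 59, 57, 58],
    ("shoes", "no_hat"):    [58, 59, 59, 59, 60, 59, 58, 60, 58, 59],
    ("no_shoes", "hat"):    [63, 64, 64, 64, 65, 64, 63, 65, 63, 64],
    ("shoes", "hat"):       [64, 65, 65, 65, 66, 65, 64, 66, 64, 65],
}

# Mean height in each of the four conditions
cell_means = {condition: mean(values) for condition, values in heights.items()}

def marginal_mean(factor_index, level):
    """Average the condition means at one level of a factor, collapsing over the other factor."""
    return mean(m for cond, m in cell_means.items() if cond[factor_index] == level)

# Main effect = difference between the marginal means of a factor's two levels
shoes_effect = marginal_mean(0, "shoes") - marginal_mean(0, "no_shoes")
hat_effect = marginal_mean(1, "hat") - marginal_mean(1, "no_hat")

print("Condition means:", cell_means)
print("Main effect of shoes (inches):", round(shoes_effect, 2))
print("Main effect of hats (inches):", round(hat_effect, 2))
```

Because every condition has the same number of observations, averaging the condition means gives the same answer as averaging each subject’s scores first, which is what the tables above do.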

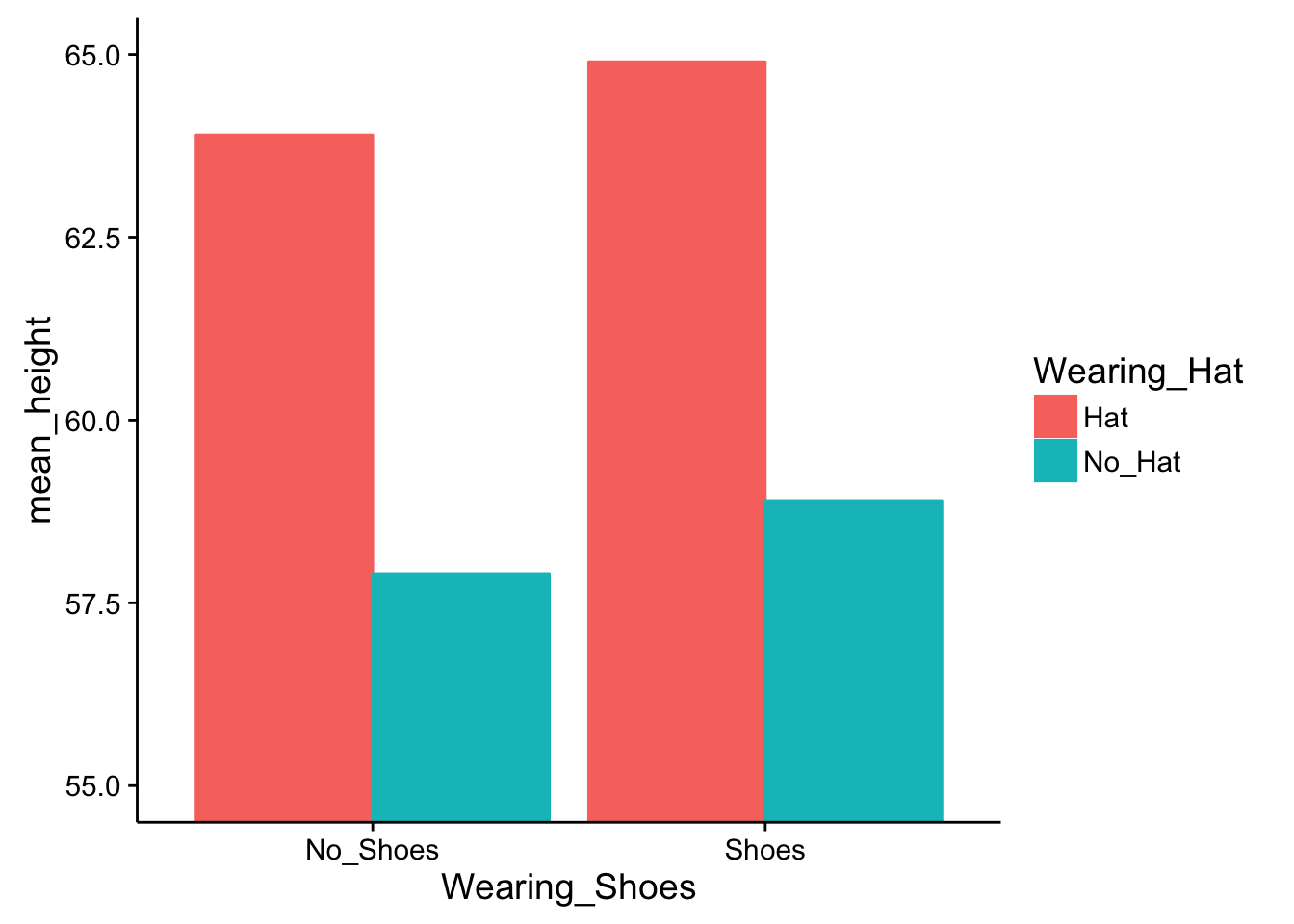

Instead of using tables to show the data, let’s use some bar graphs. First, we will plot the average heights in all four conditions.

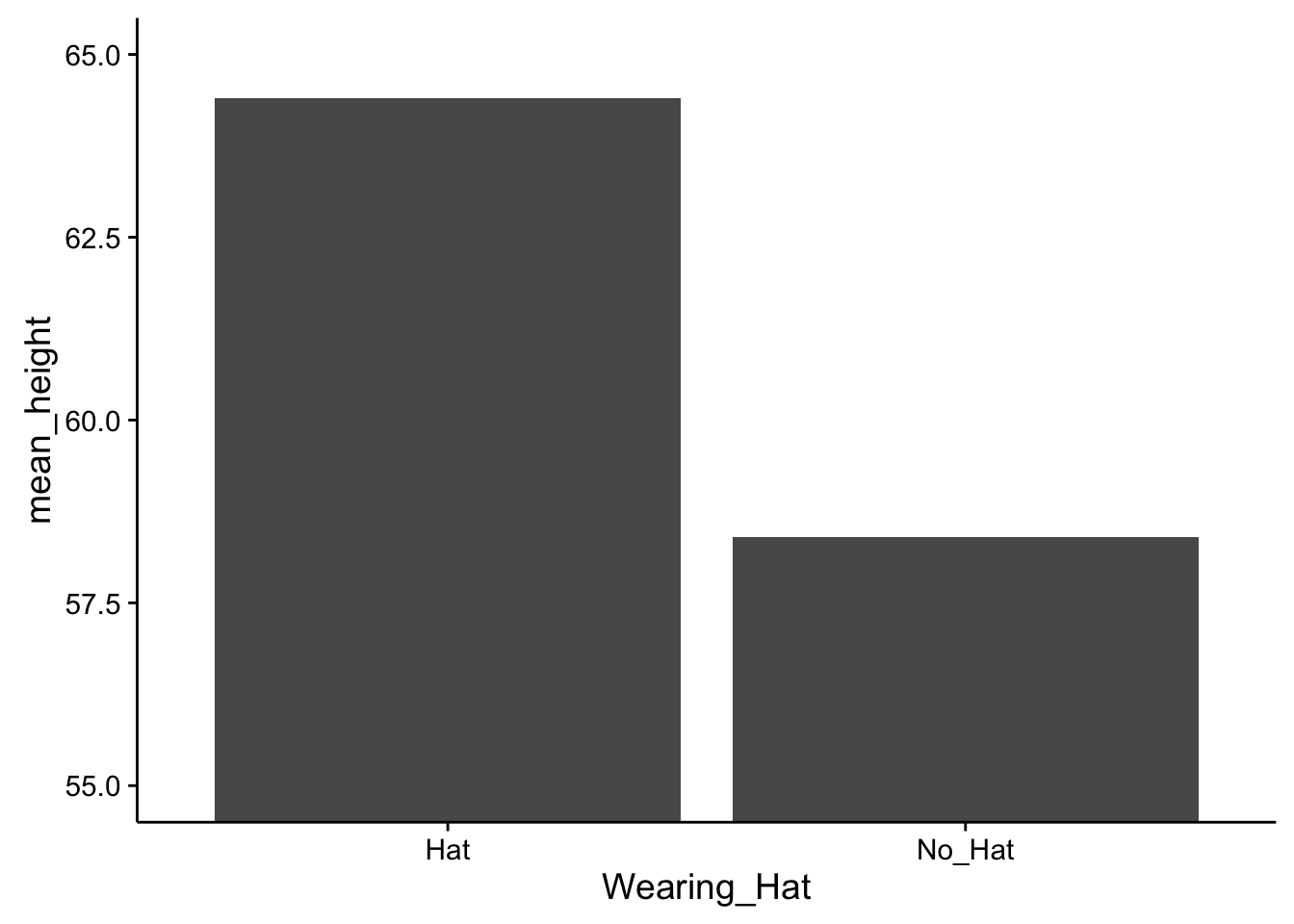

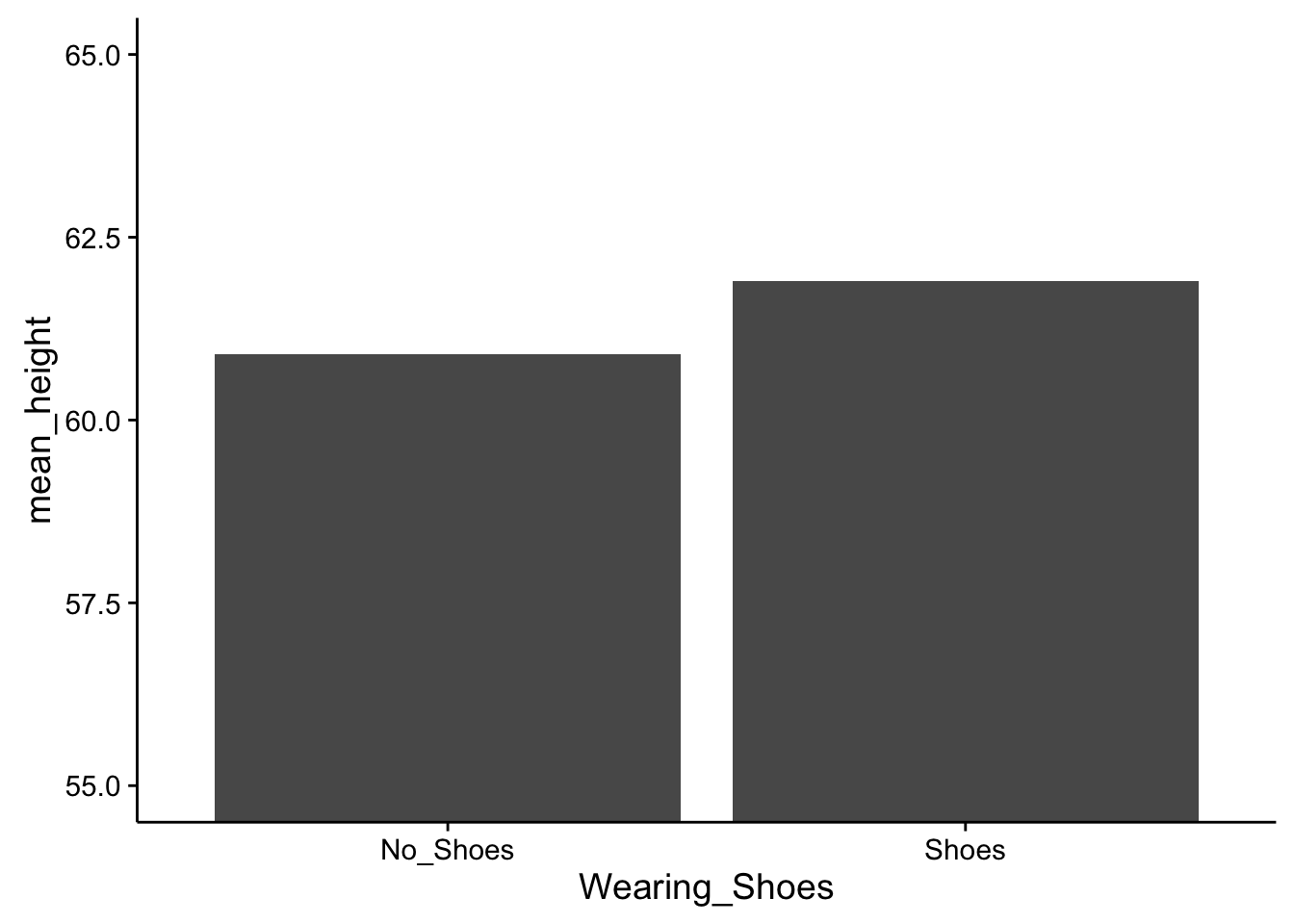

Fig. 5.6 Means from our experiment involving hats and shoes. ¶

Some questions to ask yourself are 1) can you identify the main effect of wearing shoes in the figure, and 2) can you identify the main effect of wearing hats in the figure? Both of these main effects can be seen in the figure, but they aren’t fully clear. You have to do some visual averaging.

Perhaps the most clear is the main effect of wearing a hat. The red bars show the conditions where people wear hats, and the green bars show the conditions where people do not wear hats. For both levels of the wearing shoes variable, the red bars are higher than the green bars. That is easy enough to see. More specifically, in both cases, wearing a hat adds exactly 6 inches to the height, no more no less.

Less clear is the main effect of wearing shoes, because the effect is smaller and so harder to see. How do you find it? You can look at the red bars first and see that the red bar for no-shoes is slightly shorter than the red bar for shoes. The same is true for the green bars: the green bar for no-shoes is slightly shorter than the green bar for shoes.

Fig. 5.7 Means of our Hat and No-Hat conditions (averaging over the shoe condition).

Fig. 5.8 Means of our Shoe and No-Shoe conditions (averaging over the hat condition).

Data from 2x2 designs are often presented in graphs like the one above. An advantage of these graphs is that they display means in all four conditions of the design. However, they do not clearly show the two main effects. Someone looking at this graph alone would have to guesstimate the main effects. Alternatively, a researcher could present two more graphs, one for each main effect (however, in practice this is not commonly done because it takes up space in a journal article, and with practice it becomes second nature to “see” the presence or absence of main effects in graphs showing all of the conditions). If we made a separate graph for the main effect of shoes, we should see a difference of 1 inch between conditions. Similarly, if we made a separate graph for the main effect of hats, we should see a difference of 6 inches between conditions. Examples of both of those graphs appear in the margin.

Why have we been talking about shoes and hats? These independent variables are good examples of variables that are truly independent from one another. Neither one influences the other. For example, shoes with a 1 inch sole will always add 1 inch to a person’s height. This will be true no matter whether they wear a hat or not, and no matter how tall the hat is. In other words, the effect of wearing a shoe does not depend on wearing a hat. More formally, this means that the shoe and hat independent variables do not interact. It would be very strange if they did interact. It would mean that the effect of wearing a shoe on height would depend on wearing a hat. This does not happen in our universe. But in some other imaginary universe, it could mean, for example, that wearing a shoe adds 1 inch to your height when you do not wear a hat, but adds more than 1 inch (or less than 1 inch) when you do wear a hat. This thought experiment will be our entry point into discussing interactions. A take-home message before we begin is that some independent variables (like shoes and hats) do not interact; however, there are many other independent variables that do.
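To make the "no interaction" idea concrete, here is a small Python sketch. The four condition means are not listed in the text. They are derived here on the assumption that shoes add exactly 1 inch and hats exactly 6 inches to a no-shoes, no-hat mean of 57.9 inches, which is the value implied by the group means reported earlier. The dictionary layout and names are illustrative only.

```python
from decimal import Decimal

# Condition means in inches, derived under the assumptions stated above
# (they are not reported in the text): baseline 57.9, shoes +1, hat +6.
# Decimal keeps the one-decimal arithmetic exact.
means = {
    ("no shoes", "no hat"): Decimal("57.9"),
    ("shoes", "no hat"): Decimal("58.9"),
    ("no shoes", "hat"): Decimal("63.9"),
    ("shoes", "hat"): Decimal("64.9"),
}

# Effect of shoes at each level of the hat variable.
shoe_effect_no_hat = means[("shoes", "no hat")] - means[("no shoes", "no hat")]
shoe_effect_hat = means[("shoes", "hat")] - means[("no shoes", "hat")]

# The interaction is the difference between those two effects.
interaction = shoe_effect_hat - shoe_effect_no_hat

print(f"Shoe effect without a hat: {shoe_effect_no_hat}")
print(f"Shoe effect with a hat:    {shoe_effect_hat}")
print(f"Interaction (difference of differences): {interaction}")
```

Because the shoe effect is the same 1 inch with or without a hat, the difference between the two effects is zero, which is what "do not interact" means here.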

5.3.3. Interactions

Interactions occur when the effect of an independent variable depends on the levels of the other independent variable. As we discussed above, some independent variables are independent from one another and will not produce interactions. However, other combinations of independent variables are not independent from one another, and they produce interactions. Remember, independent variables are always manipulated independently from the measured variable (see margin note), but they are not necessarily independent from each other.

Independence

These ideas can be confusing if you think that the word “independent” refers to the relationship between independent variables. However, the term “independent variable” refers to the relationship between the manipulated variable and the measured variable. Remember, “independent variables” are manipulated independently from the measured variable. Specifically, the levels of any independent variable do not change because we take measurements. Instead, the experimenter changes the levels of the independent variable and then observes possible changes in the measures.

There are many simple examples of two independent variables being dependent on one another to produce an outcome. Consider driving a car. The dependent variable (outcome that is measured) could be how far the car can drive in 1 minute. Independent variable 1 could be gas (has gas vs. no gas). Independent variable 2 could be keys (has keys vs. no keys). This is a 2x2 design, with four conditions.

| | Gas | No Gas |
|---|---|---|
| Keys | can drive | x |
| No Keys | x | x |

Importantly, the effect of the gas variable on driving depends on the levels of the key variable. Or, to state it in reverse, the effect of the key variable on driving depends on the levels of the gas variable. Finally, in plain English: you need both the keys and gas to drive. Otherwise, there is no driving.
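The car example can be written as a tiny Python sketch. The 0/1 coding of the outcome is an assumption made for illustration (1 stands for "can drive", 0 for the "x" cells in the table), and the function name `distance_driven` is hypothetical.

```python
def distance_driven(has_gas: bool, has_keys: bool) -> int:
    """Toy outcome: 1 if the car can drive, 0 if it cannot."""
    return 1 if (has_gas and has_keys) else 0


# Effect of gas (gas minus no gas) at each level of the keys variable.
gas_effect_with_keys = distance_driven(True, True) - distance_driven(False, True)
gas_effect_without_keys = distance_driven(True, False) - distance_driven(False, False)

print("Effect of gas with keys:   ", gas_effect_with_keys)
print("Effect of gas without keys:", gas_effect_without_keys)
```

Gas only makes a difference when the keys are present, so the effect of gas depends on the level of the keys variable. That dependence is the interaction.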

5.3.4. What makes people hangry?

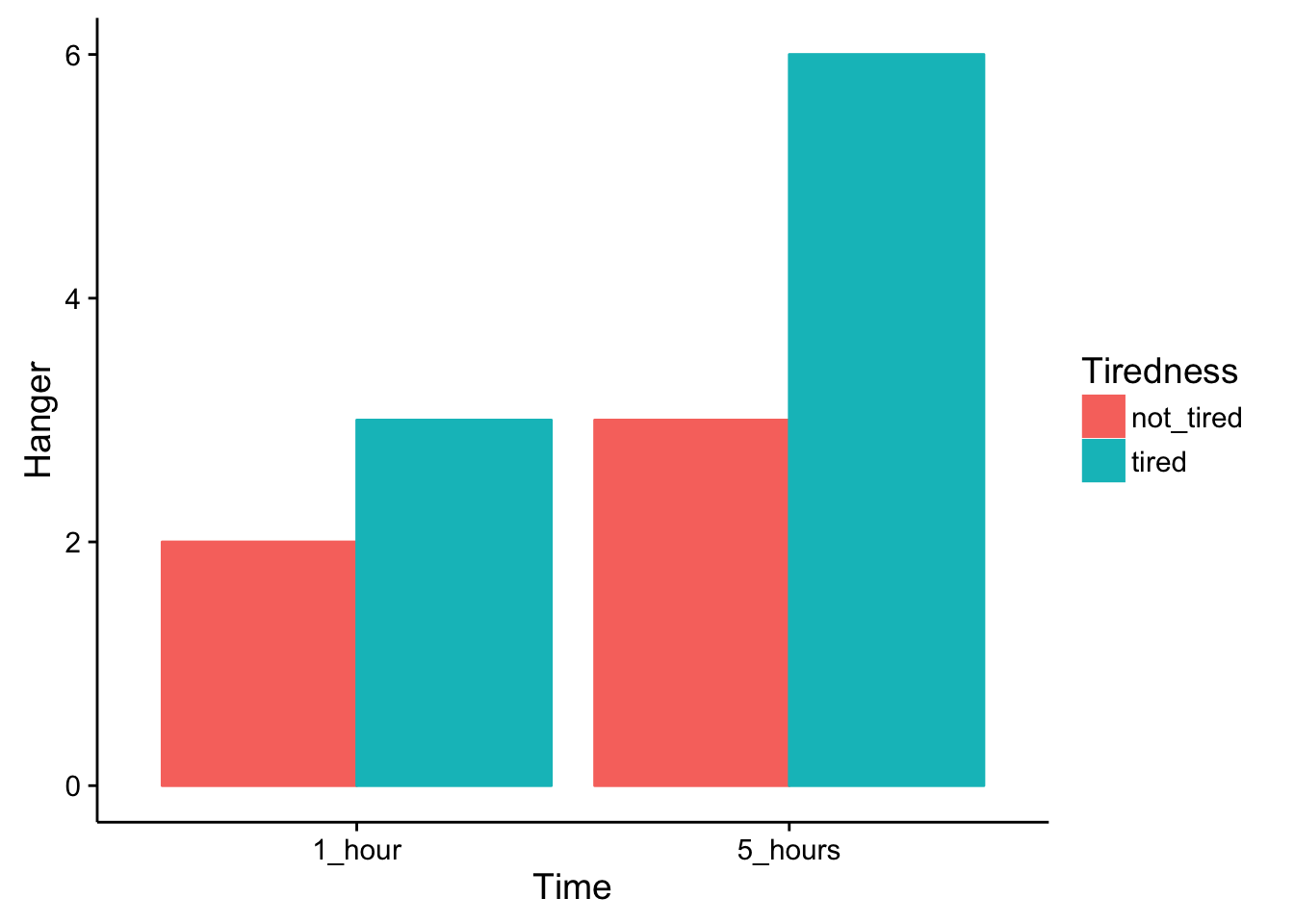

To continue with more examples, let’s consider an imaginary experiment examining what makes people hangry. You may have been hangry before. It’s when you become highly irritated and angry because you are very hungry…hangry. I will propose an experiment to measure conditions that are required to produce hangriness. The pretend experiment will measure hangriness (we ask people how hangry they are on a scale from 0-10, with 10 being most hangry, and 0 being not hangry at all). The first independent variable will be time since last meal (1 hour vs. 5 hours), and the second independent variable will be how tired someone is (not tired vs. very tired). I imagine the data could look something like the following bar graph.

Fig. 5.9 Means from our study of hangriness.

The graph shows clear evidence of two main effects and an interaction. There is a main effect of time since last meal. Both of the bars in the 1 hour conditions have smaller hanger ratings than both of the bars in the 5 hour conditions. There is a main effect of being tired. Both of the bars in the “not tired” conditions are smaller than both of the bars in the “tired” conditions. What about the interaction?

Remember, an interaction occurs when the effect of one independent variable depends on the level of the other independent variable. We can look at this two ways, and either way shows the presence of the very same interaction. First, does the effect of being tired depend on the levels of the time since last meal? Yes. Look first at the effect of being tired only for the “1 hour” condition. We see the red bar (not tired) is 1 unit lower than the green bar (tired). So, there is an effect of 1 unit of being tired in the 1 hour condition. Next, look at the effect of being tired only for the “5 hour” condition. We see the red bar (not tired) is 3 units lower than the green bar (tired). So, there is an effect of 3 units for being tired in the 5 hour condition. Clearly, the size of the effect for being tired depends on the levels of the time since last meal variable. We call this an interaction.

The second way of looking at the interaction is to start by looking at the other variable. For example, does the effect of time since last meal depend on the levels of the tired variable? The answer again is yes. Look first at the effect of time since last meal only for the red bars in the “not tired” condition. The red bar in the 1 hour condition is 1 unit smaller than the red bar in the 5 hour condition. Next, look at the effect of time since last meal only for the green bars in the “tired” condition. The green bar in the 1 hour condition is 3 units smaller than the green bar in the 5 hour condition. Again, the size of the effect of time since last meal depends on the levels of the tired variable. No matter which way you look at the interaction, you get the same number for the size of the interaction effect, which is 2 units (i.e., the difference between 3 and 1). The interaction suggests that something special happens when people are tired and haven’t eaten in 5 hours. In this condition, they can become very hangry, whereas in the other conditions there are only small increases in being hangry.

5.3.5. Identifying main effects and interactions

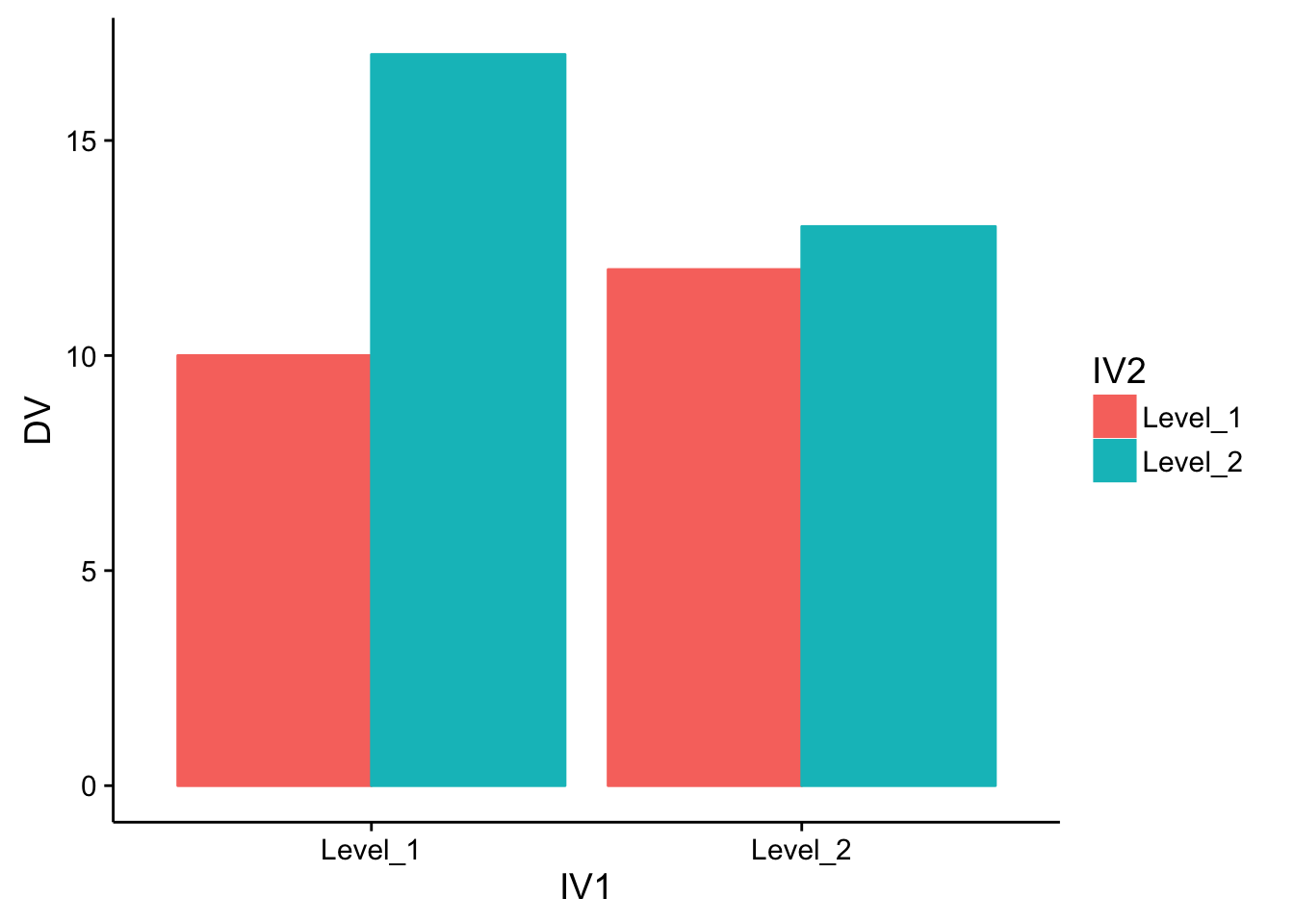

Research findings are often presented to readers using graphs or tables. For example, the very same pattern of data can be displayed in a bar graph, line graph, or table of means. These different formats can make the data look different, even though the pattern in the data is the same. An important skill to develop is the ability to identify the patterns in the data, regardless of the format they are presented in. Some examples of bar and line graphs are presented in the margin, and two example tables are presented below. Each format displays the same pattern of data.

Fig. 5.10 Data from a 2x2 factorial design summarized in a bar plot.

Fig. 5.11 The same data from above, but instead summarized in a line plot.

After you become comfortable with interpreting data in these different formats, you should be able to quickly identify the pattern of main effects and interactions. For example, you would be able to notice that all of these graphs and tables show evidence for two main effects and one interaction.

As an exercise toward this goal, we will first take a closer look at extracting main effects and interactions from tables. This exercise will show how the condition means are used to calculate the main effects and interactions. Consider the table of condition means below.

| | IV1: A | IV1: B |
|---|---|---|
| IV2: 1 | 4 | 5 |
| IV2: 2 | 3 | 8 |

5.3.6. Main effects

Main effects are the differences between the means of the levels of a single independent variable. Notice that this table shows only the condition means, one for each combination of levels of the two independent variables. So, the mean for each level of each IV must be calculated. The main effect for IV1 is the comparison between level A and level B, which involves calculating the two column means. The mean for IV1 Level A is (4+3)/2 = 3.5. The mean for IV1 Level B is (5+8)/2 = 6.5. So the main effect is 3 (6.5 - 3.5). The main effect for IV2 is the comparison between level 1 and level 2, which involves calculating the two row means. The mean for IV2 Level 1 is (4+5)/2 = 4.5. The mean for IV2 Level 2 is (3+8)/2 = 5.5. So the main effect is 1 (5.5 - 4.5). The process of computing the average for each level of a single independent variable always involves collapsing, or averaging over, all of the conditions from the other variables that occurred at that level.
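The row and column averaging described above can be sketched in Python. The condition means come from the table. The dictionary layout and the `level_mean` helper are assumptions made for this illustration.

```python
# Condition means from the table; keys are (IV1 level, IV2 level).
cell_means = {("A", 1): 4, ("B", 1): 5, ("A", 2): 3, ("B", 2): 8}


def level_mean(cells, iv_index, level):
    """Average every condition mean that shares `level` on the chosen IV.

    iv_index is 0 for IV1 and 1 for IV2. Averaging over the matching
    cells is the "collapsing" step described in the text.
    """
    values = [m for key, m in cells.items() if key[iv_index] == level]
    return sum(values) / len(values)


# Main effect of IV1: column mean for B minus column mean for A.
iv1_effect = level_mean(cell_means, 0, "B") - level_mean(cell_means, 0, "A")

# Main effect of IV2: row mean for level 2 minus row mean for level 1.
iv2_effect = level_mean(cell_means, 1, 2) - level_mean(cell_means, 1, 1)

print("Main effect of IV1:", iv1_effect)
print("Main effect of IV2:", iv2_effect)
```

The two values should agree with the main effects of 3 and 1 worked out by hand above.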

5.3.7. Interactions

Interactions ask whether the effect of one independent variable depends on the levels of the other independent variables. This question is answered by computing difference scores between the condition means. For example, we look at the effect of IV1 (A vs. B) for both levels of IV2. Focus first on the condition means in the first row for IV2 level 1. We see that A=4 and B=5, so the effect of IV1 here was 5-4 = 1. Next, look at the condition means in the second row for IV2 level 2. We see that A=3 and B=8, so the effect of IV1 here was 8-3 = 5. We have just calculated two differences (5-4=1, and 8-3=5). These difference scores show that the size of the IV1 effect was different across the levels of IV2. To calculate the interaction effect we simply find the difference between the difference scores, 5-1=4. In general, if the difference between the difference scores is not zero, then there is an interaction effect.
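The difference-of-differences calculation can be sketched in Python using the same table of condition means. The variable names are illustrative. The second half repeats the calculation starting from IV2, to show that both directions give the same interaction value.

```python
# Condition means from the table; keys are (IV1 level, IV2 level).
cell_means = {("A", 1): 4, ("B", 1): 5, ("A", 2): 3, ("B", 2): 8}

# Effect of IV1 (B minus A) at each level of IV2.
iv1_effect_at_1 = cell_means[("B", 1)] - cell_means[("A", 1)]
iv1_effect_at_2 = cell_means[("B", 2)] - cell_means[("A", 2)]
interaction_from_iv1 = iv1_effect_at_2 - iv1_effect_at_1

# Effect of IV2 (level 2 minus level 1) at each level of IV1.
iv2_effect_at_a = cell_means[("A", 2)] - cell_means[("A", 1)]
iv2_effect_at_b = cell_means[("B", 2)] - cell_means[("B", 1)]
interaction_from_iv2 = iv2_effect_at_b - iv2_effect_at_a

print("IV1 effects at IV2 = 1 and 2:", iv1_effect_at_1, iv1_effect_at_2)
print("IV2 effects at IV1 = A and B:", iv2_effect_at_a, iv2_effect_at_b)
print("Interaction:", interaction_from_iv1, interaction_from_iv2)
```

A nonzero result in the last line indicates an interaction in these condition means. Whether such a difference is statistically reliable is a separate question that this sketch does not address.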

5.3.8. Example bar graphs

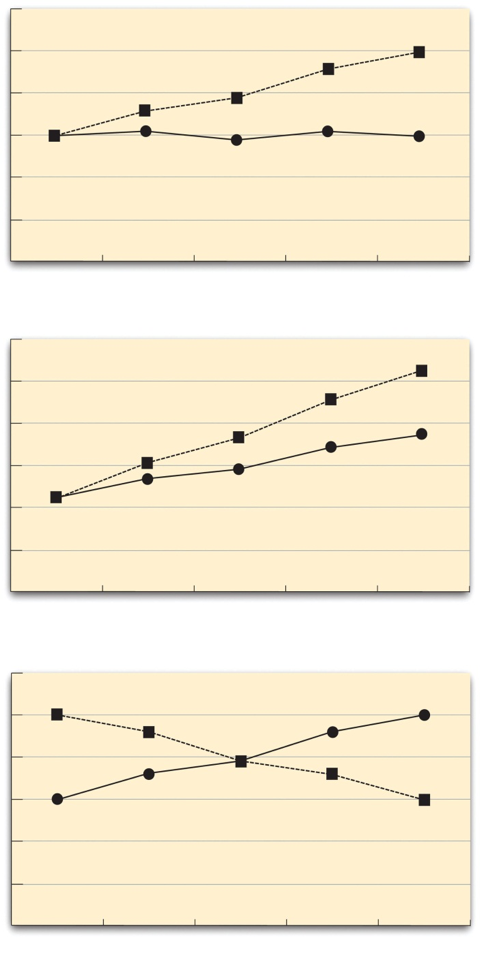

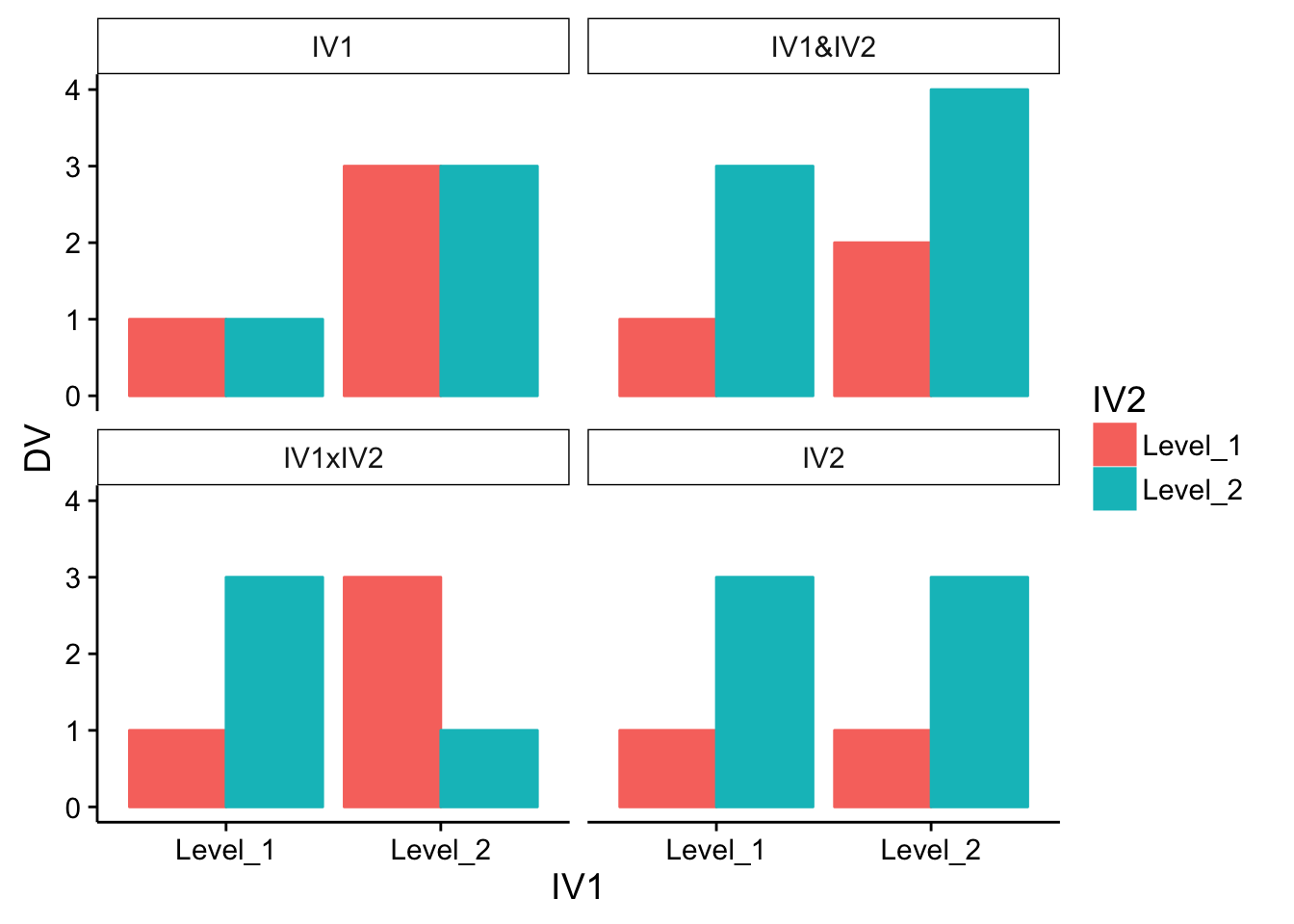

Fig. 5.12 Four patterns that could be observed in a 2x2 factorial design.

The IV1 graph shows a main effect only for IV1 (both red and green bars are lower for level 1 than level 2). The IV1&IV2 graph shows main effects for both variables. The two bars on the left are both lower than the two on the right, and the red bars are both lower than the green bars. The IV1xIV2 graph shows an example of a classic cross-over interaction. Here, there are no main effects, just an interaction. There is a difference of 2 between the green and red bar for Level 1 of IV1, and a difference of -2 for Level 2 of IV1. That makes the difference between the differences = 4. Why are there no main effects? Well, the average of the red bars would equal the average of the green bars, so there is no main effect for IV2. And the average of the red and green bars for level 1 of IV1 would equal the average of the red and green bars for level 2 of IV1, so there is no main effect for IV1. The bar graph for IV2 shows only a main effect for IV2, as the red bars are both lower than the green bars.
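The cross-over pattern can be checked with a short Python sketch. The individual condition means below are hypothetical, chosen only so that the green-minus-red differences are 2 and -2 as described. The actual bar heights in the figure are not stated in the text.

```python
# Hypothetical condition means for a cross-over pattern.
# Keys are (IV1 level, bar color); only the differences (2 and -2)
# come from the text, the individual values are invented.
means = {(1, "red"): 3, (1, "green"): 5, (2, "red"): 5, (2, "green"): 3}


def avg(values):
    """Plain arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


# Main effect of IV1: mean of the level 2 bars minus mean of the level 1 bars.
iv1_effect = avg([means[(2, "red")], means[(2, "green")]]) - avg(
    [means[(1, "red")], means[(1, "green")]]
)

# Main effect of IV2: mean of the green bars minus mean of the red bars.
iv2_effect = avg([means[(1, "green")], means[(2, "green")]]) - avg(
    [means[(1, "red")], means[(2, "red")]]
)

# Green-minus-red difference at each level of IV1, then their difference.
diff_level_1 = means[(1, "green")] - means[(1, "red")]
diff_level_2 = means[(2, "green")] - means[(2, "red")]
interaction = diff_level_1 - diff_level_2

print("Main effect of IV1:", iv1_effect)
print("Main effect of IV2:", iv2_effect)
print("Interaction:", interaction)
```

Both main effects come out to zero because the row and column averages are equal, while the difference between the differences is 4.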

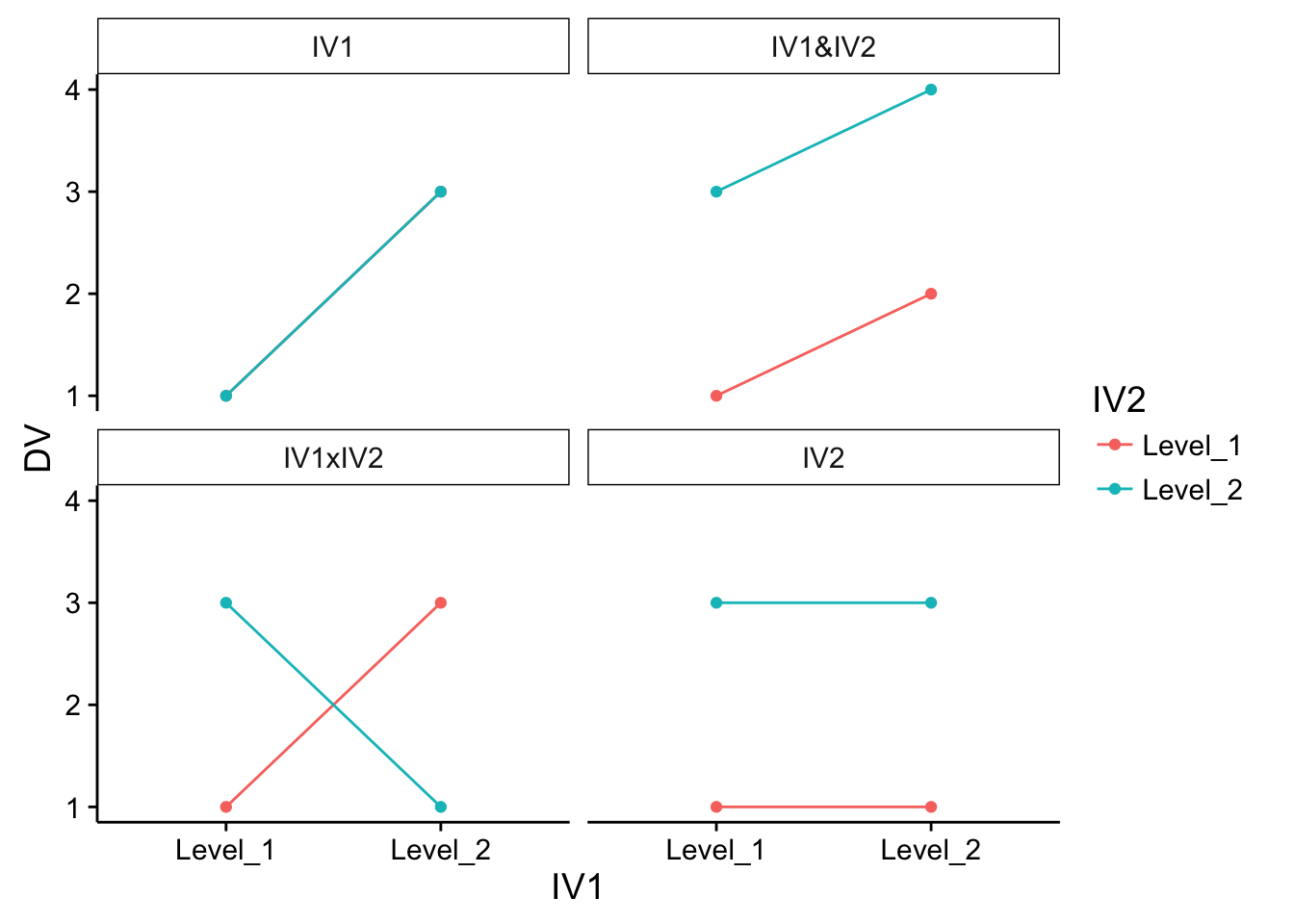

5.3.9. Example line graphs

You may find that the patterns of main effects and interactions look different depending on the visual format of the graph. The exact same patterns of data plotted above in bar graph format are plotted here as line graphs for your viewing pleasure. Note that for the IV1 graph, the red line does not appear because it is hidden behind the green line (the points for both lines are identical).

Fig. 5.13 Four patterns that could be observed in a 2x2 factorial design, now depicted using line plots.

5.3.10. Interpreting main effects and interactions

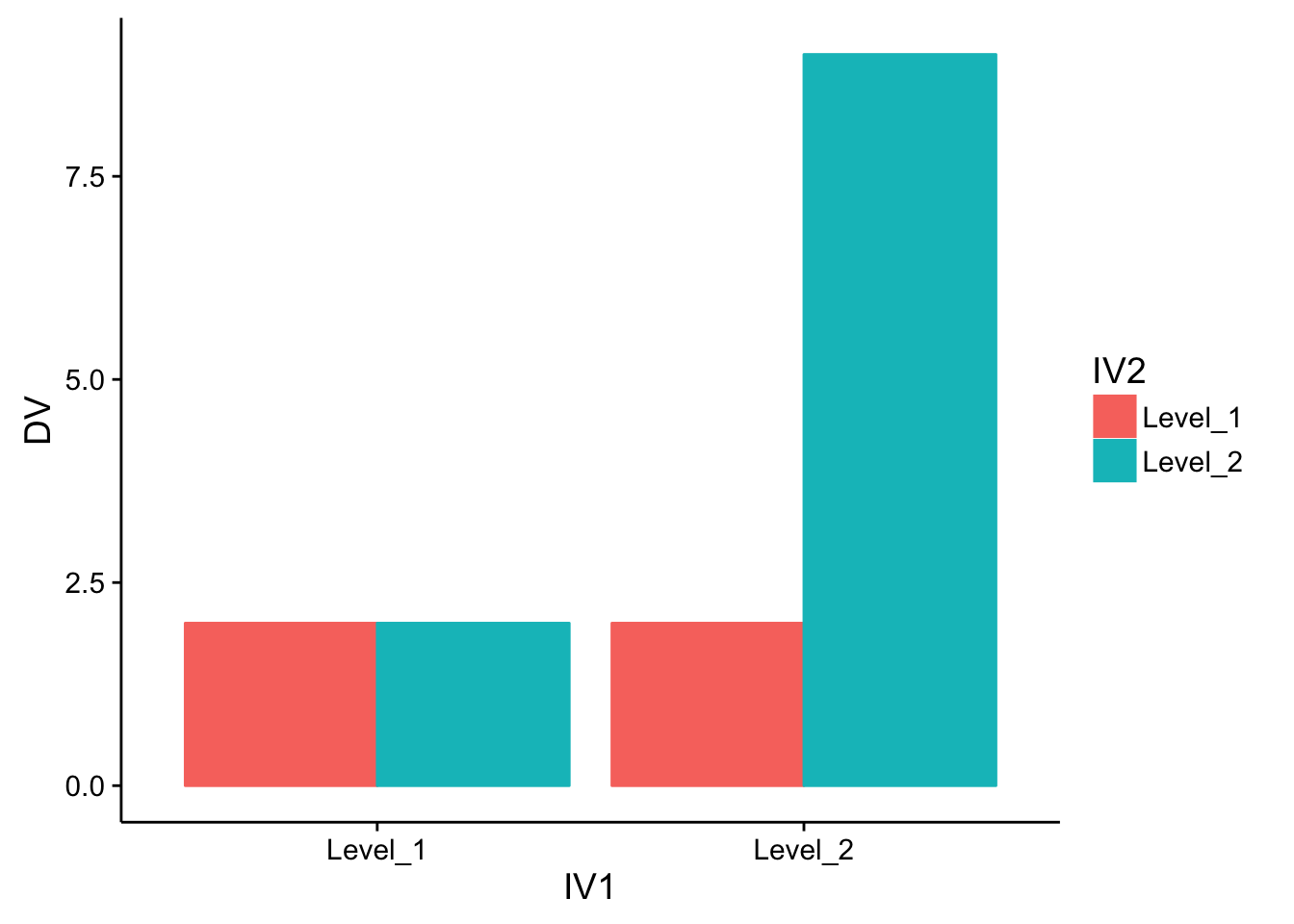

The presence of an interaction, particularly a strong interaction, can sometimes make it challenging to interpret main effects. For example, take a look at Figure 5.14, which indicates a very strong interaction.

Fig. 5.14 A clear interaction effect. But what about the main effects?