[Grammar] After many years of research, they found the solution at last/ at the end.

- Thread starter wotcha

- Start date Aug 27, 2012

wotcha said: My grammar book says the answer to the above sentence is 'at last', and I know both 'at last' and 'at the end' are the same in meaning.

charliedeut

Hi Wotcha, maybe you were thinking of "in the end"?

August 8, 2024

Multiple goals, multiple solutions, plenty of second-guessing and revising—here's how science really works

by Soazig Le Bihan, The Conversation

A man in a lab coat bends under a dim light, his strained eyes riveted onto a microscope. He's powered only by caffeine and anticipation.

This solitary scientist will stay on task until he unveils the truth about the cause of the dangerous disease quickly spreading through his vulnerable city. Time is short, the stakes are high, and only he can save everyone.…

That kind of romanticized picture of science was standard for a long time. But it's as far from actual scientific practice as a movie's choreographed martial arts battle is from a real fistfight.

For most of the 20th century, philosophers of science like me maintained somewhat idealistic claims about what good science looks like. Over the past few decades, however, many of us have revised our views to better mirror actual scientific practice.

An update on what to expect from actual science is overdue. I often worry that when the public holds science to unrealistic standards, any scientific claim failing to live up to them arouses suspicion. While public trust is globally strong and has been for decades, it has been eroding. In November 2023, Americans' trust in scientists was 14 points lower than it had been just prior to the COVID-19 pandemic, with its flurry of confusing and sometimes contradictory science-related messages.

When people's expectations are not met about how science works, they may blame scientists. But modifying our expectations might be more useful. Here are three updates I think can help people better understand how science actually works. Hopefully, a better understanding of actual scientific practice will also shore up people's trust in the process.

The many faces of scientific research

First, science is a complex endeavor involving multiple goals and associated activities.

Some scientists search for the causes underlying some observable effect, such as a decimated pine forest or the Earth's global surface temperature increase.

Others may investigate the what rather than the why of things. For example, ecologists build models to estimate gray wolf abundance in Montana. Spotting predators is incredibly challenging. Counting all of them is impractical. Abundance models are neither complete nor 100% accurate; they offer estimates deemed good enough to set harvesting quotas. Perfect scientific models are just not in the cards.

Beyond the what and the why, scientists may focus on the how. For instance, the lives of people living with chronic illnesses can be improved by research on strategies for managing disease, to mitigate symptoms and improve function, even if the true causes of their disorders largely elude current medicine.

It's understandable that some patients may grow frustrated or distrustful of medical providers unable to give clear answers about what causes their ailment. But it's important to grasp that lots of scientific research focuses on how to effectively intervene in the world to reach some specific goals.

Simplistic views represent science as solely focused on providing causal explanations for the various phenomena we observe in this world. The truth is that scientists tackle all kinds of problems, which are best solved using different strategies and approaches and only sometimes involve full-fledged explanations.

Complex problems call for complex solutions

The second aspect of scientific practice worth underscoring is that, because scientists tackle complex problems, they don't typically offer one unique, complete and perfect answer. Instead they consider multiple, partial and possibly conflicting solutions.

Scientific modeling strategies illustrate this point well. Scientific models typically are partial, simplified and sometimes deliberately unrealistic representations of a system of interest. Models can be physical, conceptual or mathematical. The critical point is that they represent target systems in ways that are useful in particular contexts of inquiry. Interestingly, considering multiple possible models is often the best strategy to tackle complex problems.

Scientists consider multiple models of biodiversity, atomic nuclei or climate change. Returning to wolf abundance estimates, multiple models can also fit the bill. Such models rely on various types of data, including acoustic surveys of wolf howls, genetic methods that use fecal samples from wolves, wolf sightings and photographic evidence, aerial surveys, snow track surveys and more.

Weighing the pros and cons of various possible solutions to the problem of interest is part and parcel of the scientific process. Interestingly, in some cases, using multiple conflicting models allows for better predictions than trying to unify all the models into one.
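One simple way to see why keeping several models in play can help is to average their estimates. The sketch below is only an illustration: the model names and every number in it are hypothetical, not real survey data, and plain averaging is just one of many ways scientists might combine models. It shows that the averaged estimate is never further from the truth than the models are on average, and that it can do much better when the models err in opposite directions.

```python
from statistics import mean


def abs_error(estimate, truth):
    """Absolute distance between an estimate and the true value."""
    return abs(estimate - truth)


# Hypothetical numbers for illustration only; not real wolf survey data.
true_abundance = 1000
model_estimates = {
    "howl_survey": 1150,       # assumed to overestimate
    "genetic_sampling": 900,   # assumed to underestimate
    "snow_tracks": 1040,       # assumed to overestimate slightly
}

# Combine the models by taking the plain average of their estimates.
combined = mean(model_estimates.values())

# Compare the combined estimate with the models' average individual error.
combined_error = abs_error(combined, true_abundance)
mean_individual_error = mean(
    abs_error(estimate, true_abundance) for estimate in model_estimates.values()
)

print(f"combined estimate: {combined}")
print(f"error of combined estimate: {combined_error}")
print(f"average error of individual models: {mean_individual_error:.1f}")
```

In practice the true value is unknown, so this comparison can only be made on test cases or simulations. The guarantee is also modest: averaging beats the typical model, not necessarily the single best one.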

The public may be surprised and possibly suspicious when scientists push forward multiple models that rely on conflicting assumptions and make different predictions. People often think "real science" should provide definite, complete and foolproof answers to their questions. But given various limitations and the world's complexity, keeping multiple perspectives in play is most often the best way for scientists to reach their goals and solve the problems at hand.

Science as a collective, contrarian endeavor

Finally, science is a collective endeavor, where healthy disagreement is a feature, not a bug.

The romanticized version of science pictures scientists working in isolation and establishing absolute truths. Instead, science is a social and contrarian process in which the community's scrutiny ensures we have the best available knowledge. "Best available" does not mean "definitive," but the best we have until we find out how to improve it. Science almost always allows for disagreements among experts.

Controversies are core to how science works at its best and are as old as Western science itself. In the 1600s, Descartes and Leibniz fought over how to best characterize the laws of dynamics and the nature of motion.

The long history of atomism provides a valuable perspective on how science is an intricate and winding process rather than a fast-delivery system of results set in stone. As Jean Baptiste Perrin conducted his 1908 experiments that seemingly settled all discussion regarding the existence of atoms and molecules, the questions of the atom's properties were about to become the topic of decades of controversies with the birth of quantum physics.

The nature and structure of fundamental particles and associated fields have been the subject of scientific research for more than a century. Lively academic discussions abound concerning the difficult interpretation of quantum mechanics, the challenging unification of quantum physics and relativity, and the existence of the Higgs boson, among others.

Distrusting researchers for having healthy scientific disagreements is largely misguided.

A very human practice

To be clear, science is dysfunctional in some respects and contexts. Current institutions have incentives for counterproductive practices, including maximizing publication numbers. Like any human endeavor, science includes people with bad intent, including some trying to discredit legitimate scientific research. Finally, science is sometimes inappropriately influenced by various values in problematic ways.

These are all important considerations when evaluating the trustworthiness of particular scientific claims and recommendations. However, it is unfair, sometimes dangerous, to mistrust science for doing what it does at its best. Science is a multifaceted endeavor focused on solving complex problems that typically just don't have simple solutions. Communities of experts scrutinize those solutions in hopes of providing the best available approach to tackling the problems of interest.

Science is also a fallible and collective process. Ignoring the realities of that process and holding science up to unrealistic standards may result in the public calling science out and losing trust in its reliability for the wrong reasons.

Provided by The Conversation

Defining the Problem to Find the Solution

“Given one hour to save the world, I would spend 55 minutes defining the problem and 5 minutes finding the solution.” ~ Albert Einstein

When Judith Rodin took over as president of The Rockefeller Foundation in 2005, she had a vision to re-imagine how the Foundation understood and intervened in the pressing challenges that confronted a 21st-century world. This transformation would require the Foundation to re-think its entire strategy model, from the issues it focused on, to the process of finding, testing, and funding solutions.

To do so, under the care of managing director Claudia Juech, who joined in 2007, the Foundation developed a highly strategic, analytical search process appropriately, and fondly, referred to as “Search.” In 2012–13, Juech’s strategic research team led 11 topic searches, which involved coordinating internal staff, consultants, and nearly 200 outside experts.

But the process is not as important as its outputs, and whether or not the Foundation decided to deploy funding into a particular space, the research yielded insights that could benefit the entire social impact field. And that’s exactly what you’ll find in the pages to follow and the additional resources offered online at rockefellerfoundation.org/insights.

In a conversation with Insights, Juech talks of how searches are framed, why the process is unique, and the team’s guiding motto.

Every search is framed around an identified set of “problem spaces.” How does The Rockefeller Foundation define a problem space?

CJ: Albert Einstein, a Rockefeller Foundation grantee, said “given one hour to save the world, I would spend 55 minutes defining the problem and 5 minutes finding the solution.”

This approach is deeply embedded into our process: Before we can solve a problem, we need to know exactly what the problem is, and we should put a good amount of thinking and resources into understanding it. And because today’s problems are so complex, we know they can’t be solved by being broken down into specific components.

And so, “problem space” is just a fancy phrase for the framework through which we study a particular challenge, which includes a number of interlinking and underlying issues that must be addressed in order to find a solution. For example, if our central problem is food waste and spoilage, we must also think about farmers’ limited access to finance and reliable buyers for their crops—those are part of the problem space.

We identify problem spaces based on a wide range of inputs, using broad, sweeping horizon-scanning activities, alongside secondary research, expert interviews, and the work Rockefeller has done to date. Through this process, we often find connections and inter-relations among several trends that surface across problem spaces.

How do you assess the potential for innovation in a particular problem space?

CJ: There is very little research that has focused on how to measure innovation potential in a sector with regard to a problem space, so we are really entering new territory here. We have adopted approaches that assess innovation potential at a national level or at an organizational level, but will support further research in the future to refine our frameworks. For now, we are looking at the enabling environment—is there evidence of cross-pollination, e.g., are ideas being shared or replicated across actors, sectors, or geographies? What’s the innovation capacity? Are there active change-makers around or organizations that are capable of testing or scaling innovations? And what’s the institutional environment? Are there legal, policy, or business structures in place that promote or hinder innovation in this area?

How does this process differ from or improve upon what was done before?

CJ: For years, philanthropy has dedicated an enormous amount of resources to evaluating the impact of specific grants after the fact. What we’re trying to do with Search is to make sure the same rigor and critical thought is applied to our strategies from the outset. We also need to be innovative in where we go for information so that we are getting the freshest information—to make sure we are getting the thing that is emerging in the moment and that may lead to significant progress.

What are some other ways in which the Foundation’s process is unique?

CJ: From my experience, one of the most important distinctions is that we don’t start out with a pre-determined point of view or a dominant idea of an answer to a problem. In our process, we investigate an issue and truly test it. Then we come back, and more times than not, we find there’s not the opportunity for us there to achieve impact as major as there may be with another issue that is being reviewed at the same time.

Obviously, the Foundation cannot commit funding to every problem area you research. What kind of criteria does The Rockefeller Foundation consider when you choose where to focus your interventions?

CJ: Ultimately, the Foundation tries to determine whether there is potential for us to have significant impact at scale on a problem that is critical for poor or vulnerable populations or the ecosystems that they depend on. So it is a combination of three different criteria: We want to make sure that we work on problems integral to the lives of poor populations; we want to understand how the specific engagement and investment by The Rockefeller Foundation would make a significant difference; and we are trying to get a sense of whether the context is conducive to transformative change, i.e., is there innovative activity or momentum in regulation or private sector activity that could be catalyzed in order to achieve significant impact.

So while some options don’t move forward, it doesn’t mean they aren’t worthwhile or pressing—it simply means it isn’t the right area in which the Foundation should intervene at this time. But the process is valuable in other ways—in many instances, the findings have contributed to ongoing work or even informed future searches. And we have a robust body of research that we can share widely to help other foundations or other actors who care about social impact to make decisions about their own strategies, or perhaps to illuminate an area they hadn’t considered funding before.

What insight or insights surprised you most from the first rounds of searches?

CJ: The future trajectory of many of the problem spaces that we investigated truly requires a call to action, especially as some of these don’t yet receive the level of attention they deserve. For example, the health implications of unhealthy food markets and different diets will be severe, particularly in Africa.

Many professions have aspirational mottos that are supposed to guide them in their work. The famous one for physicians, for example, is “First, do no harm.” What would be the motto you would hang over your office door?

CJ: Well, the physician motto would be a good one, but I think that for the work we are engaged in it would be “Give a clear-eyed view.” We want to provide decision-making intelligence that is as informed and as objective as possible so that the most good can come from a range of funding sources.

Science News

We’ve covered science for 100 years. Here’s how it has, and hasn’t, changed.

There’s more detail and sophistication, but some of the questions remain the same

By Tom Siegfried

Contributing Correspondent

April 2, 2021 at 6:00 am

A century ago, articles in the precursor to Science News frequently focused on astronomy and space, exploring such issues as whether other planets existed beyond Neptune.

SCIEPRO/Science Photo Library/Getty Images

A century ago, people needed help to understand science. Much as they do today.

Then as now, it wasn’t always easy to sort the accurate from the erroneous. Mainstream media, then as now, regarded science as secondary to other aspects of their mission. And when science made the news, it was often (then as now) garbled, naïve or dangerously misleading.

E.W. Scripps, a prominent newspaper publisher, and William Emerson Ritter, a biologist, perceived a need. They envisioned a service that would provide reliable news about science to the world, dedicated to truth and clarity. For Scripps and Ritter, science journalism had a noble purpose: “To discover the truth about all sorts of things of human concern, and to report it truthfully and in language comprehensible to those whose welfare is involved.”

And so Science Service was born 100 years ago, soon to give birth to the magazine now known as Science News.

In its first year of existence, Science Service delivered its weekly dispatches to newspapers in the form of mimeographed packets. By 1922 those packets became available to the public by subscription, giving birth to Science News-Letter, the progenitor of Science News. Then as now, the magazine’s readers feasted on a smorgasbord of delicious tidbits from a menu encompassing all flavors of science: from the atom to outer space, from agriculture to oceanography, from transportation to, of course, food and nutrition.

In those early days, much of the new enterprise’s coverage focused on space, such as the possibility of planets beyond Neptune. Experts shared their views on whether spiral-shaped clouds in deep space were far-off entire galaxies of stars, like the Milky Way, or embryonic solar systems just now forming within the Milky Way. Articles explored the latest speculation about life on Venus or on Mars.

Regular coverage was also devoted to new technologies, particularly radio. One Science Service dispatch informed readers on how to make their own home radio set for $6. And in 1922 Science News-Letter reported on an astounding radio breakthrough: a set that could operate without a battery. You could just plug it into an electrical outlet.

Much of the century’s scientific future was presaged in those early reports. In May 1921, an article on recent subatomic experiments noted the “dream of scientist and novelist alike that man would one day learn how … to utilize the vast stores of energy inside of atoms.” In 1922 Science Service editor Edwin Slosson speculated that the “smallest unit of positive electricity” (the proton) might “be a complex of many positive and negative particles,” a dim but prescient preview of the existence of quarks.

True, some prognostications did not age so well. A 1921 prediction that the United States would be forced to adopt the metric system for commercial transactions is still awaiting fulfillment. A simple, common, international auxiliary language — “confidently predicted” in 1921 to become “a part of every educated person’s equipment” — remains unestablished today. And despite serious considerations of calendar reform by astronomers and church dignitaries reported in May 1922, well over 1,000 of the same old months have since passed without the slightest alteration.

On the other hand, “the favorite fruit of Americans of the generations to follow us will be the avocado,” as predicted in 1921, is possibly arguable, though there was no mention of toast — just the suggestion that “a few crackers and an avocado sprinkled with a little salt make a hearty and well-balanced lunch.”

One happily false prognostication was the repeated forecast of the rise of eugenics as a “scientific” endeavor.

“The organization of an artificial selection is only a question of time. It will be possible to renew as a whole, in a few centuries, all humanity, and to replace the mass by another much superior mass,” a “distinguished authority on anthropo-sociology” declared in a Science Service news item from 1921. Another eugenicist proclaimed that “Eugenic Science” should be applied to “shed the light of reason on the primeval instinct of reproduction,” so that “disgenic marriages” would be banned just as bigamy and incest are.

In the century since, thanks to saner and more sophisticated knowledge of genetics (and more social enlightenment in general), eugenics has been disavowed by science and is now revived in spirit only by the ignorant or malevolent. And during that time, real science has progressed to an elevated degree of sophistication in many other ways, to an extent almost unimaginable to the scientists and journalists of the 1920s.

When Science Service (now Society for Science) launched its mission, astronomers were unaware of the extent of the universe. No biologist knew what DNA did, or how brain chemistry regulated behavior. Geologists saw that Earth’s continents looked like separated puzzle pieces, but declared that to be a coincidence.

Modern scientists know better. Scientists now understand a lot more about the details of the atom’s interior, the molecules of life, the intricacies of the brain, the innards of the Earth and the expanse of the cosmos.

Yet somehow scientists still pursue the same questions, if now on higher levels of theoretical abstraction rooted in deeper layers of empirical evidence. We know how the molecules of life work, but not always how they react to novel diseases. We know how the brain works, except for those afflicted by dementia or depression (or when consciousness is part of the question). We know a lot about how the Earth works, but not enough to always foresee how it will respond to what humans are doing to it. We think we know a lot about the universe, but we’re not sure if ours is the only one, and we can’t explain how gravity, the dominant force across the cosmos, can coexist with the forces governing atoms.

It turns out that the past century’s groundbreaking experimental discoveries, revolutionary theoretical revelations and prescient speculations have not eliminated science’s familiarity with false starts, unfortunate missteps and shortsighted prejudices. Researchers today have expanded the scope of the reality they can explore, yet still stumble through the remaining uncharted jungles of nature’s facts and laws, seeking further clues to how the world works.

To paraphrase an old philosophy joke, science is more like it is today than it ever has been. In other words, science remains as challenging as ever to human inquiry. And the need to communicate its progress, perceived by Scripps and Ritter a century ago, remains as essential now as then.

Biomedical Beat Blog – National Institute of General Medical Sciences

Follow the process of discovery

Search this blog

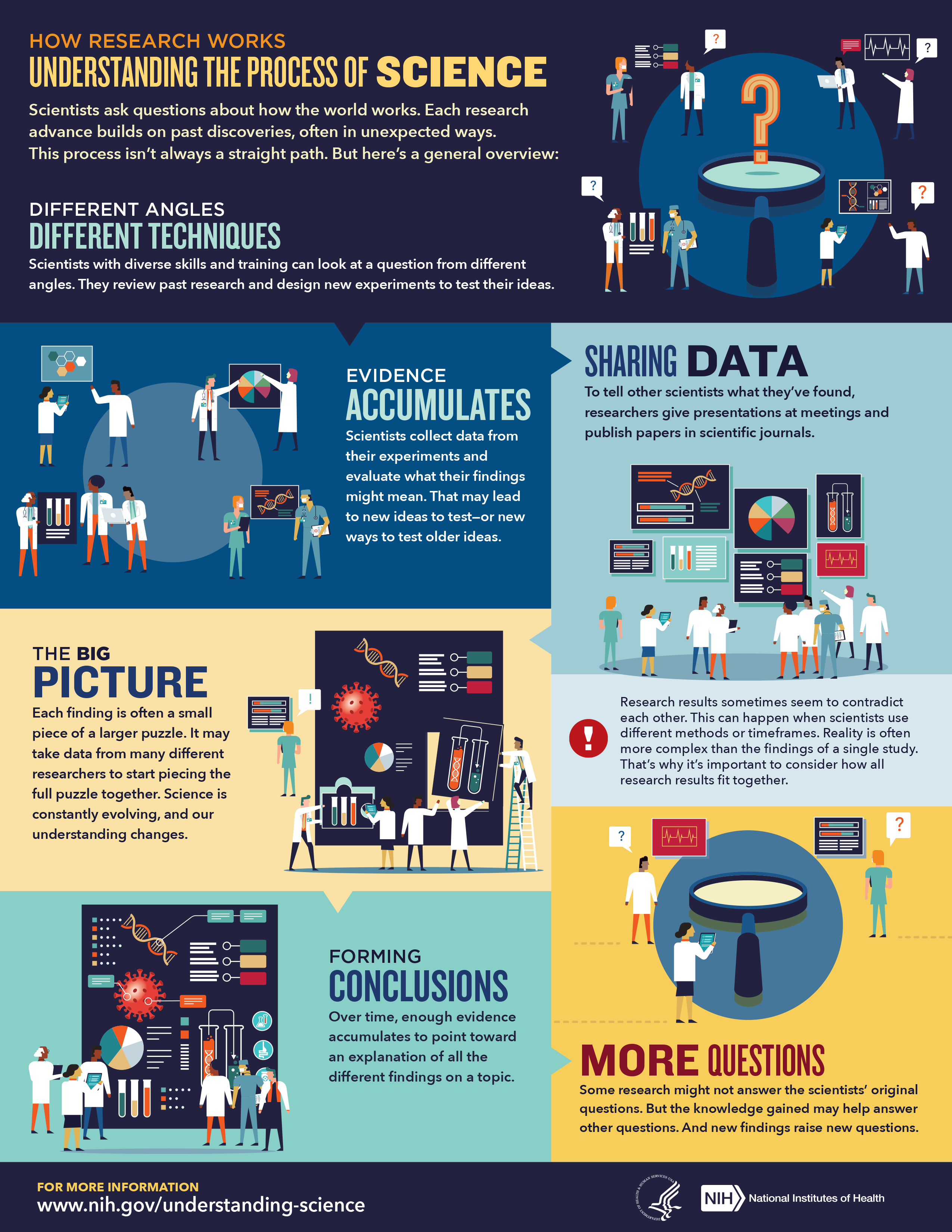

How Research Works: Understanding the Process of Science

Have you ever wondered how research works? How scientists make discoveries about our health and the world around us? Whether they’re studying plants, animals, humans, or something else in our world, they follow the scientific method. But this method isn’t always—or even usually—a straight line, and often the answers are unexpected and lead to more questions. Let’s dive in to see how it all works.

The Question

Scientists start with a question about something they observe in the world. They develop a hypothesis, which is a testable prediction of what the answer to their question will be. Often their predictions turn out to be correct, but sometimes searching for the answer leads to unexpected outcomes.

The Techniques

To test their hypotheses, scientists conduct experiments. They use many different tools and techniques, and sometimes they need to invent a new tool to fully answer their question. They may also work with one or more scientists with different areas of expertise to approach the question from other angles and get a more complete answer to their question.

The Evidence

Throughout their experiments, scientists collect and analyze their data. They reach conclusions based on those analyses and determine whether their results match the predictions from their hypothesis. Often these conclusions trigger new questions and new hypotheses to test.

Researchers share their findings with one another by publishing papers in scientific journals and giving presentations at meetings. Data sharing is very important for the scientific field, and although some results may seem insignificant, each finding is often a small piece of a larger puzzle. That small piece may spark a new question and ultimately lead to new findings.

Sometimes research results seem to contradict each other, but this doesn’t necessarily mean that the results are wrong. Instead, it often means that the researchers used different tools, methods, or timeframes to obtain their results. The results of a single study are usually unable to fully explain the complex systems in the world around us. We must consider how results from many research studies fit together. This perspective gives us a more complete picture of what’s really happening.

Even if the scientific process doesn’t answer the original question, the knowledge gained may help provide other answers that lead to new hypotheses and discoveries.

Learn more about the importance of communicating how this process works in the NIH News in Health article, “Explaining How Research Works.”

This post is a great supplement to Pathways: The Basic Science Careers Issue.

Pathways introduces the important role that scientists play in understanding the world around us, and all scientists use the scientific method as they make discoveries—which is explained in this post.

Learn more in our Educator’s Corner .

2 Replies to “How Research Works: Understanding the Process of Science”

Nice basic explanation. I believe informing the lay public on how science works, how parts of the body interact, etc. is a worthwhile endeavor. You all Rock! Now, we need to spread the word ‼️❗️‼️ Maybe eith a unique app. And one day, with VR and incentives to read & answer a couple questions.

As you know, the importance of an informed population is what will keep democracy alive. Plus it will improve peoples overall wellness & life outcomes.

Thanks for this clear explanation for the person who does not know science. Without getting too technical or advanced, it might be helpful to follow your explanation of replication with a reference to meta-analysis. You might say something as simple as, “Meta-analysis is a method for doing research on all the best research; meta-analytic research confirms the overall trend in results, even when the best studies show different results.”

How did life begin on Earth? A lightning strike of an idea.

Yahya Chaudhry

Harvard Correspondent

Researchers mimic early conditions on barren planet to test hypothesis of ancient electrochemistry

About four billion years ago, Earth resembled the set of a summer sci-fi blockbuster. The planet’s surface was a harsh and barren landscape, recovering from hellish asteroid strikes, teeming with volcanic eruptions, and lacking enough nutrients to sustain even the simplest forms of life.

The atmosphere was composed predominantly of inert gases like nitrogen and carbon dioxide, meaning they did not easily engage in chemical reactions necessary to form the complex organic molecules that are the building blocks of life. Scientists have long sought to discover the key factors that enabled the planet’s chemistry to change enough to form and sustain life.

Now, new research zeroes in on how lightning strikes may have served as a vital spark, transforming the atmosphere of early Earth into a hotbed of chemical activity. In the study, published in Proceedings of the National Academy of Sciences , a team of Harvard scientists identified lightning-induced plasma electrochemistry as a potential source of reactive carbon and nitrogen compounds necessary for the emergence and survival of early life.

“The origin of life is one of the great unanswered questions facing chemistry,” said George M. Whitesides, senior author and the Woodford L. and Ann A. Flowers University Research Professor in the Department of Chemistry and Chemical Biology. How the fundamental building blocks of “nucleic acids, proteins, and metabolites emerged spontaneously remains unanswered.”

One of the most popular answers to this question is summarized in the so-called RNA World hypothesis, Whitesides said. That is the idea that available forms of the elements, such as water, soluble electrolytes, and common gases, formed the first biomolecules. In their study, the researchers found that lightning could provide accessible forms of nitrogen and carbon that led to the emergence and survival of biomolecules.

A plasma vessel used to mimic cloud-to-ground lightning and its resulting electrochemical reactions. The setup uses two electrodes, with one in the gas phase and the other submerged in water enriched with inorganic salts.

Credit: Haihui Joy Jiang

Researchers designed a plasma electrochemical setup that allowed them to mimic conditions of the early Earth and study the role lightning strikes might have had on its chemistry. They were able to generate high-energy sparks between gas and liquid phases — akin to the cloud-to-ground lightning strikes that would have been common billions of years ago.

The scientists discovered that their simulated lightning strikes could transform stable gases like carbon dioxide and nitrogen into highly reactive compounds. They found that carbon dioxide could be reduced to carbon monoxide and formic acid, while nitrogen could be converted into nitrate, nitrite, and ammonium ions.

These reactions occurred most efficiently at the interfaces between gas, liquid, and solid phases — regions where lightning strikes would naturally concentrate these products. This suggests that lightning strikes could have locally generated high concentrations of these vital molecules, providing diverse raw materials for the earliest forms of life to develop and thrive.

“Given what we’ve shown about interfacial lightning strikes, we are introducing different subsets of molecules, different concentrations, and different plausible pathways to life in the origin of life community,” said Thomas C. Underwood, co-lead author and Whitesides Lab postdoctoral fellow. “As opposed to saying that there’s one mechanism to create chemically reactive molecules and one key intermediate, we suggest that there is likely more than one reactive molecule that might have contributed to the pathway to life.”

The findings align with previous research suggesting that other energy sources, such as ultraviolet radiation, deep-sea vents, volcanoes, and asteroid impacts, could have also contributed to the formation of biologically relevant molecules. However, the unique advantage of cloud-to-ground lightning is its ability to drive high-voltage electrochemistry across different interfaces, connecting the atmosphere, oceans, and land.

The research adds a significant piece to the puzzle of life’s origins. By demonstrating how lightning could have contributed to the availability of essential nutrients, the study opens new avenues for understanding the chemical pathways that led to the emergence of life on Earth. As the research team continues to explore these reactions, they hope to uncover more about the early conditions that made life possible and to improve modern applications.

“Building on our work, we are now experimentally looking at how plasma electrochemical reactions may influence nitrogen isotopes in products, which has a potential geological relevance,” said co-lead author Haihui Joy Jiang, a former Whitesides lab postdoctoral fellow. “We are also interested in this research from an energy-efficiency and environmentally friendly perspective on chemical production. We are studying plasma as a tool to develop new methods of making chemicals and to drive green chemical processes, such as producing fertilizer used today.”

Harvard co-authors included Professor Dimitar D. Sasselov in the Department of Astronomy and Professor James G. Anderson in the Department of Chemistry and Chemical Biology, Department of Earth and Planetary Sciences, and the Harvard John A. Paulson School of Engineering and Applied Sciences.

The study not only sheds light on the past but also has implications for the search for life on other planets. Processes the researchers described could potentially contribute to the emergence of life beyond Earth.

“Lightning has been observed on Jupiter and Saturn; plasmas and plasma-induced chemistry can exist beyond our solar system,” Jiang said. “Moving forward, our setup is useful for mimicking environmental conditions of different planets, as well as exploring reaction pathways triggered by lightning and its analogs.”

Identifying problems and solutions in scientific text

Kevin Heffernan

Department of Computer Science and Technology, University of Cambridge, 15 JJ Thomson Avenue, Cambridge, CB3 0FD UK

Simone Teufel

Research is often described as a problem-solving activity, and as a result, descriptions of problems and solutions are an essential part of the scientific discourse used to describe research activity. We present an automatic classifier that, given a phrase that may or may not be a description of a scientific problem or a solution, makes a binary decision about problemhood and solutionhood of that phrase. We recast the problem as a supervised machine learning problem, define a set of 15 features correlated with the target categories and use several machine learning algorithms on this task. We also create our own corpus of 2000 positive and negative examples of problems and solutions. We find that we can distinguish problems from non-problems with an accuracy of 82.3%, and solutions from non-solutions with an accuracy of 79.7%. Our three most helpful features for the task are syntactic information (POS tags), document and word embeddings.

Introduction

Problem solving is generally regarded as the most important cognitive activity in everyday and professional contexts (Jonassen 2000). Many studies on formalising the cognitive process behind problem-solving exist, for instance (Chandrasekaran 1983). Jordan (1980) argues that we all share knowledge of the thought/action problem-solution process involved in real life, and so our writings will often reflect this order. There is general agreement amongst theorists that the research process can be viewed as a problem-solving activity (Strübing 2007; Van Dijk 1980; Hutchins 1977; Grimes 1975).

One of the best-documented problem-solving patterns was established by Winter ( 1968 ). Winter analysed thousands of examples of technical texts, and noted that these texts can largely be described in terms of a four-part pattern consisting of Situation, Problem, Solution and Evaluation. This is very similar to the pattern described by Van Dijk ( 1980 ), which consists of Introduction-Theory, Problem-Experiment-Comment and Conclusion. The difference is that in Winter’s view, a solution only becomes a solution after it has been evaluated positively. Hoey changes Winter’s pattern by introducing the concept of Response in place of Solution (Hoey 2001 ). This seems to describe the situation in science better, where evaluation is mandatory for research solutions to be accepted by the community. In Hoey’s pattern, the Situation (which is generally treated as optional) provides background information; the Problem describes an issue which requires attention; the Response provides a way to deal with the issue, and the Evaluation assesses how effective the response is.

An example of this pattern in the context of the Goldilocks story can be seen in Fig. 1. In this text, there is a preamble providing the setting of the story (i.e. Goldilocks is lost in the woods), which is called the Situation in Hoey’s system. A Problem is encountered when Goldilocks becomes hungry. Her first Response is to try the porridge in big bear’s bowl, but she gives this a negative Evaluation (“too hot!”) and so the pattern returns to the Problem. This continues in a cyclic fashion until the Problem is finally resolved when Goldilocks gives one particular Response, trying baby bear’s porridge, a positive Evaluation (“it’s just right”).

Example of problem-solving pattern when applied to the Goldilocks story.

Reproduced with permission from Hoey ( 2001 )
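The cyclic nature of the pattern can be made concrete with a small sketch. The Python below is an illustration only: the function name `run_pattern`, the list of responses and the evaluation rule are assumptions introduced here, not part of Hoey's account.

```python
def run_pattern(problem, responses, evaluate):
    """Walk through Problem -> Response -> Evaluation until one Response
    is evaluated positively; a negative Evaluation returns to the Problem."""
    trace = [("Problem", problem)]
    for response in responses:
        trace.append(("Response", response))
        verdict = evaluate(response)
        trace.append(("Evaluation", verdict))
        if verdict == "positive":
            break
        # Negative evaluation: the pattern returns to the unresolved Problem.
        trace.append(("Problem", problem))
    return trace


# Hypothetical encoding of the Goldilocks example from the text.
def goldilocks_evaluate(response):
    return "positive" if response == "baby bear's porridge" else "negative"


trace = run_pattern(
    "Goldilocks is hungry",
    ["big bear's porridge", "baby bear's porridge"],
    goldilocks_evaluate,
)
for step, content in trace:
    print(f"{step}: {content}")
```

The optional Situation element is left out of the sketch, since the loop only involves the three core elements.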

It would be attractive to detect problem and solution statements automatically in text. This holds true both from a theoretical and a practical viewpoint. Theoretically, we know that sentiment detection is related to problem-solving activity, because of the perception that “bad” situations are transformed into “better” ones via problem-solving. The exact mechanism of how this can be detected would advance the state of the art in text understanding. In terms of linguistic realisation, problem and solution statements come in many variants and reformulations, often in the form of positive or negated statements about the conditions, results and causes of problem–solution pairs. Detecting and interpreting those would give us a reasonably objective manner to test a system’s understanding capacity. Practically, being able to detect any mention of a problem is a first step towards detecting a paper’s specific research goal. Being able to do this has been a goal for scientific information retrieval for some time, and if successful, it would improve the effectiveness of scientific search immensely. Detecting problem and solution statements of papers would also enable us to compare similar papers and eventually even lead to automatic generation of review articles in a field.

There has been some computational effort on the task of identifying problem-solving patterns in text. However, most of the prior work has not gone beyond the usage of keyword analysis and some simple contextual examination of the pattern. Flowerdew (2008) presents a corpus-based analysis of lexico-grammatical patterns for problem and solution clauses using articles from professional and student reports. Problem and solution keywords were used to search their corpora, and each occurrence was analysed to determine grammatical usage of the keyword. More interestingly, the causal category associated with each keyword in their context was also analysed. For example, Reason–Result or Means–Purpose were common causal categories found to be associated with problem keywords.

The goal of the work by Scott ( 2001 ) was to determine words which are semantically similar to problem and solution, and to determine how these words are used to signal problem-solution patterns. However, their corpus-based analysis used articles from the Guardian newspaper. Since the domain of newspaper text is very different from that of scientific text, we decided not to consider those keywords associated with problem-solving patterns for use in our work.

Instead of a keyword-based approach, Charles ( 2011 ) used discourse markers to examine how the problem-solution pattern was signalled in text. In particular, they examined how adverbials associated with a result such as “thus, therefore, then, hence” are used to signal a problem-solving pattern.

Problem solving also has been studied in the framework of discourse theories such as Rhetorical Structure Theory (Mann and Thompson 1988) and Argumentative Zoning (Teufel et al. 2000). Problem- and solutionhood constitute two of the original 23 relations in RST (Mann and Thompson 1988). While we concentrate solely on this aspect, RST is a general theory of discourse structure which covers many intentional and informational relations. The relationship to Argumentative Zoning is more complicated. The status of certain statements as problems or solutions is one important dimension in the definitions of AZ categories. AZ additionally models dimensions other than problem- and solutionhood (such as who a scientific idea belongs to, or which intention the authors might have had in stating a particular negative or positive statement). When forming categories, AZ combines aspects of these dimensions, and “flattens” them out into only 7 categories. In AZ it is crucial who it is that experiences the problems or contributes a solution. For instance, the definition of category “CONTRAST” includes statements that some research runs into problems, but only if that research is previous work (i.e., not if it is the work contributed in the paper itself). Similarly, “BASIS” includes statements of successful problem-solving activities, but only if they are achieved by previous work that the current paper bases itself on. Our definition is simpler in that we are interested only in problem-solution structure, not in the other dimensions covered in AZ. Our definition is also more far-reaching than AZ, in that we are interested in all problems mentioned in the text, no matter whose problems they are. Problem-solution recognition can therefore be seen as one aspect of AZ which can be independently modelled as a “service task”. This means that good problem-solution structure recognition should theoretically improve AZ recognition.

In this work, we approach the task of identifying problem-solving patterns in scientific text. We choose to use the model of problem-solving described by Hoey ( 2001 ). This pattern comprises four parts: Situation, Problem, Response and Evaluation. The Situation element is considered optional to the pattern, and so our focus centres on the core pattern elements.

Goal statement and task

Many surface features in the text offer themselves up as potential signals for detecting problem-solving patterns in text. However, since Situation is an optional element, we decided to focus on either Problem or Response and Evaluation as signals of the pattern. Moreover, we decided to look for each type in isolation. Our reasons for this are as follows. It is quite rare for an author to introduce a problem without resolving it using some sort of response, and so this is a good starting point in identifying the pattern. There are exceptions to this, as authors will sometimes introduce a problem and then leave it to future work, but overall there should be enough signal in the Problem element to make our method of looking for it in isolation worthwhile. The second signal we look for is the use of Response and Evaluation within the same sentence. Similar to Problem elements, we hypothesise that this formulation is well enough signalled externally to help us in detecting the pattern. For example, consider the following Response and Evaluation: “One solution is to use smoothing”. In this statement, the author is explicitly stating that smoothing is a solution to a problem which must have been mentioned in a prior statement. In scientific text, we often observe that solutions implicitly contain both Response and (positive) Evaluation elements. For these reasons, there should be sufficient external signals for the two pattern elements we concentrate on here.

When attempting to find Problem elements in text, we run into the issue that the word “problem” actually has at least two word senses that need to be distinguished. There is a word sense of “problem” that means something which must be undertaken (i.e. task), while another sense is the core sense of the word, something that is problematic and negative. Only the latter sense is aligned with our sense of problemhood. This is because the simple description of a task does not predispose problemhood, just a wish to perform some act. Consider the following examples, where the non-desired word sense is being used:

- “Das and Petrov (2011) also consider the problem of unsupervised bilingual POS induction”. (Chen et al. 2011 ).

- “In this paper, we describe advances on the problem of NER in Arabic Wikipedia”. (Mohit et al. 2012 ).

Here, although the authors explicitly call the phrases in orange problems, these phrases align with our definition of research tasks and not with what we call here ‘problematic problems’. We will now give some examples from our corpus for the desired, core word sense:

- “The major limitation of supervised approaches is that they require annotations for example sentences.” (Poon and Domingos 2009 ).

- “To solve the problem of high dimensionality we use clustering to group the words present in the corpus into much smaller number of clusters”. (Saha et al. 2008 ).

When creating our corpus of positive and negative examples, we took care to select only problem strings that satisfy our definition of problemhood; the “Corpus creation” section explains how we did that.

Corpus creation

Our new corpus is a subset of the latest version of the ACL anthology released in March, 2016 1 which contains 22,878 articles in the form of PDFs and OCRed text. 2

The 2016 version was also parsed using ParsCit (Councill et al. 2008 ). ParsCit recognises not only document structure, but also bibliography lists as well as references within running text. A random subset of 2500 papers was collected covering the entire ACL timeline. In order to disregard non-article publications such as introductions to conference proceedings or letters to the editor, only documents containing abstracts were considered. The corpus was preprocessed using tokenisation, lemmatisation and dependency parsing with the Rasp Parser (Briscoe et al. 2006 ).

Definition of ground truth

Our goal was to define a ground truth for problem and solution strings, while covering as wide a range as possible of syntactic variations in which such strings naturally occur. We also want this ground truth to cover phenomena of problem and solution status which are applicable whether or not the problem or solution status is explicitly mentioned in the text.

To simplify the task, we only consider here problem and solution descriptions that are at most one sentence long. In reality, of course, many problem descriptions and solution descriptions go beyond a single sentence, and require, for instance, an entire paragraph. However, we also know that short summaries of problems and solutions are very prevalent in science, and also that these tend to occur in the most prominent places in a paper. This is because scientists are trained to express their contribution and the obstacles possibly hindering their success in an informative, succinct manner. That is the reason why we can afford to only look for shorter problem and solution descriptions, ignoring those that cross sentence boundaries.

To define our ground truth, we examined the parsed dependencies and looked for a target word (“problem/solution”) in subject position, and then chose its syntactic argument as our candidate problem or solution phrase. To increase the variation, i.e., to find as many different-worded problem and solution descriptions as possible, we additionally used semantically similar words (near-synonyms) of the target words “problem” or “solution” for the search. Semantic similarity was defined as cosine in a deep learning distributional vector space, trained using Word2Vec (Mikolov et al. 2013 ) on 18,753,472 sentences from a biomedical corpus based on all full-text Pubmed articles (McKeown et al. 2016 ). From the 200 words which were semantically closest to “problem”, we manually selected 28 clear synonyms. These are listed in Table 1 . From the 200 semantically closest words to “solution” we similarly chose 19 (Table 2 ). Of the sentences matching our dependency search, a subset of problem and solution candidate sentences were randomly selected.
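The near-synonym search can be sketched as a ranking by cosine similarity. The following Python is a minimal illustration under stated assumptions: the three-dimensional vectors are invented toy values rather than Word2Vec embeddings, and `nearest_words` is a hypothetical helper, not the authors' code. In the paper, the 200 nearest words were then filtered by hand.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def nearest_words(target, embeddings, k):
    """Return the k words closest to `target`, most similar first."""
    scores = [
        (word, cosine(embeddings[target], vec))
        for word, vec in embeddings.items()
        if word != target
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:k]


# Toy vectors, invented for illustration only.
toy_embeddings = {
    "problem": [1.0, 0.2, 0.0],
    "drawback": [0.9, 0.3, 0.1],
    "limitation": [0.8, 0.1, 0.0],
    "banana": [0.0, 0.1, 1.0],
}

ranked = nearest_words("problem", toy_embeddings, k=2)
print(ranked)
```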

Table 1: Selected words for use in problem candidate phrase extraction

| Bottleneck | Caveat | Challenge | Complication | Conundrum | Difficulty |
| Flaw | Impediment | Issue | Limitation | Mistake | Obstacle |
| Riddle | Shortcoming | Struggle | Subproblem | Threat | Tragedy |
| Dilemma | Disadvantage | Drawback | Fault | Pitfall | Problem |
| Uncertainty | Weakness | Trouble | Quandary | | |

Table 2: Selected words for use in solution candidate phrase extraction

| Solution | Alternative | Suggestion | Idea | Way | Proposal |
| Technique | Remedy | Task | Step | Answer | Approach |
| Approaches | Strategy | Method | Methodology | Scheme | Answers |
| Workaround | | | | | |

An example of this is shown in Fig. 2 . Here, the target word “drawback” is in subject position (highlighted in red), and its clausal argument (ccomp) is “(that) it achieves low performance” (highlighted in purple). Examples of other arguments we searched for included copula constructions and direct/indirect objects.

Example of our extraction method for problems using dependencies. (Color figure online)

If more than one candidate was found in a sentence, one was chosen at random. Non-grammatical sentences were excluded; these might appear in the corpus as a result of its source being OCRed text.
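The dependency search can be illustrated on the “drawback” example. The sketch below is not the authors' implementation: it assumes a hand-written parse with Stanford-style relation names (`nsubj`, `ccomp`) instead of RASP output, handles only the clausal-complement case, and uses a hypothetical three-word target set.

```python
TARGETS = {"drawback", "problem", "limitation"}  # small illustrative subset


def subtree(tokens, root):
    """Return the indices of `root` and all its descendants, in sentence order."""
    keep = {root}
    changed = True
    while changed:
        changed = False
        for i, tok in enumerate(tokens):
            if tok["head"] in keep and i not in keep:
                keep.add(i)
                changed = True
    return sorted(keep)


def extract_candidate(tokens):
    """Find a target word in subject position and return the clausal
    complement (ccomp) attached to the same governor, or None."""
    for tok in tokens:
        if tok["rel"] == "nsubj" and tok["word"].lower() in TARGETS:
            governor = tok["head"]
            for j, other in enumerate(tokens):
                if other["head"] == governor and other["rel"] == "ccomp":
                    return " ".join(tokens[k]["word"] for k in subtree(tokens, j))
    return None


# Hand-written parse of "The drawback is that it achieves low performance".
# `head` is the index of the governing token; -1 marks the root.
sentence = [
    {"word": "The", "head": 1, "rel": "det"},
    {"word": "drawback", "head": 2, "rel": "nsubj"},
    {"word": "is", "head": -1, "rel": "root"},
    {"word": "that", "head": 5, "rel": "mark"},
    {"word": "it", "head": 5, "rel": "nsubj"},
    {"word": "achieves", "head": 2, "rel": "ccomp"},
    {"word": "low", "head": 7, "rel": "amod"},
    {"word": "performance", "head": 5, "rel": "dobj"},
]

print(extract_candidate(sentence))
```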

800 candidate phrases each for problems and solutions were automatically extracted (1600 in total) and then independently checked for correctness by two annotators (the two authors of this paper). Both authors found the task simple and straightforward. Correctness was defined by two criteria:

- The phrase must genuinely express a problem or a solution. For problems, it has to represent one of the following:

  - An unexplained phenomenon or a problematic state in science; or

  - A research question; or

  - An artifact that does not fulfil its stated specification.

- The phrase must not lexically give away its status as problem or solution phrase.

The second criterion saves us from machine learning cues that are too obvious. If for instance, the phrase itself contained the words “lack of” or “problematic” or “drawback”, our manual check rejected it, because it would be too easy for the machine learner to learn such cues, at the expense of many other, more generally occurring cues.

Sampling of negative examples

We next needed to find negative examples for both cases. We wanted them not to stand out on the surface as negative examples, so we chose them so as to mimic the obvious characteristics of the positive examples as closely as possible. We call the negative examples ‘non-problems’ and ‘non-solutions’ respectively. We wanted the only differences between problems and non-problems to be of a semantic nature, nothing that could be read off on the surface. We therefore sampled a population of phrases that obey the same statistical distribution as our problem and solution strings while making sure they really are negative examples. We started from sentences not containing any problem/solution words (i.e. those used as target words). From each such sentence, we at random selected one syntactic subtree contained in it. From these, we randomly selected a subset of negative examples of problems and solutions that satisfy the following conditions:

- The distribution of the head POS tags of the negative strings should perfectly match the head POS tags 3 of the positive strings. This has the purpose of achieving the same proportion of surface syntactic constructions as observed in the positive cases.

- The average lengths of the negative strings must be within a tolerance of the average length of their respective positive candidates, e.g., non-solutions must have an average length very similar (i.e. ± a small tolerance) to solutions. We chose a tolerance value of 3 characters.
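The two sampling conditions can be sketched as a simple rejection sampler. This is one possible reading, not the authors' procedure: the function `sample_negatives`, the retry loop and the toy phrases with their POS labels are all assumptions made for illustration.

```python
import random
from collections import Counter, defaultdict


def sample_negatives(positives, pool, tolerance=3.0, max_tries=1000, seed=0):
    """Draw negatives whose head-POS counts equal those of the positives and
    whose mean character length is within `tolerance` of the positives' mean.
    Items are (text, head_pos) pairs. Returns None if no draw qualifies."""
    rng = random.Random(seed)
    wanted = Counter(pos for _, pos in positives)
    by_pos = defaultdict(list)
    for text, pos in pool:
        by_pos[pos].append((text, pos))
    for pos, count in wanted.items():
        if len(by_pos[pos]) < count:
            raise ValueError(f"not enough pool items with head POS {pos}")
    target_len = sum(len(text) for text, _ in positives) / len(positives)
    for _ in range(max_tries):
        sample = []
        for pos, count in wanted.items():
            sample.extend(rng.sample(by_pos[pos], count))
        mean_len = sum(len(text) for text, _ in sample) / len(sample)
        if abs(mean_len - target_len) <= tolerance:
            return sample
    return None


# Toy data, invented for illustration.
positives = [("it achieves low performance", "VBZ"), ("sparse training data", "NN")]
pool = [
    ("the parser uses a beam", "VBZ"),
    ("the model runs on every sentence", "VBZ"),
    ("annotated news articles", "NN"),
    ("a list", "NN"),
]

negatives = sample_negatives(positives, pool)
print(negatives)
```

Matching counts per head POS tag makes the two distributions identical by construction, so only the length condition needs to be checked on each draw.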

Again, a human quality check was performed on non-problems and non-solutions. For each candidate non-problem statement, the candidate was accepted if it did not contain a phenomenon, a problematic state, a research question or a non-functioning artefact. If the string expressed a research task, without explicit statement that there was anything problematic about it (i.e., the ‘wrong’ sense of “problem”, as described above), it was allowed as a non-problem. A clause was confirmed as a non-solution if the string did not represent both a response and positive evaluation.

If the annotator found that the sentence had been slightly mis-parsed, but did contain a candidate, they were allowed to move the boundaries for the candidate clause. This resulted in cleaner text, e.g., in the frequent case of coordination, when non-relevant constituents could be removed.

From the set of sentences which passed the quality test for both independent assessors, 500 instances each of positive and negative problems and solutions were randomly chosen (i.e. 2000 instances in total). When checking for correctness we found that most of the automatically extracted phrases which did not pass the quality test for problem-/solution-hood were rejected either because of obvious learning cues or because the sense of problem-hood used relates to tasks (cf. “Goal statement and task” section).

Experimental design

In our experiments, we used three classifiers, namely Naïve Bayes, Logistic Regression and a Support Vector Machine. For all classifiers an implementation from the WEKA machine learning library (Hall et al. 2009 ) was chosen. Given that our dataset is small, tenfold cross-validation was used instead of a held out test set. All significance tests were conducted using the (two-tailed) Sign Test (Siegel 1956 ).
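The evaluation protocol can be illustrated with a short sketch. The Python below shows an exact two-tailed sign test and a plain tenfold partition. It is not the WEKA implementation: discarding ties, the round-robin fold assignment (no shuffling or stratification) and both function names are assumptions made here.

```python
from math import comb


def sign_test_two_tailed(wins_a, wins_b):
    """Exact two-tailed sign test p-value.
    `wins_a` and `wins_b` count the items on which system A or B did better;
    ties are assumed to have been discarded beforehand."""
    n = wins_a + wins_b
    if n == 0:
        return 1.0
    k = min(wins_a, wins_b)
    # Probability of k or fewer wins under a fair coin, doubled for two tails.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def ten_fold_indices(n_items, k=10):
    """Assign item indices to k test folds in round-robin order."""
    return [list(range(fold, n_items, k)) for fold in range(k)]


folds = ten_fold_indices(2000)
for test_fold in folds:
    held_out = set(test_fold)
    train = [i for i in range(2000) if i not in held_out]
    # A classifier would be trained on `train` and scored on `test_fold` here.

print(sign_test_two_tailed(9, 1))
```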

Linguistic correlates of problem- and solution-hood

We first define a set of features without taking the phrase’s context into account. This will tell us about the disambiguation ability of the problem/solution description’s semantics alone. In particular, we cut out the rest of the sentence other than the phrase and never use it for classification. This is done for similar reasons to excluding certain ‘give-away’ phrases inside the phrases themselves (as explained above). As the phrases were found using templates, we know that the machine learner would simply pick up on the semantics of the template, which always contains a synonym of “problem” or “solution”, thus drowning out the more hidden features hopefully inherent in the semantics of the phrases themselves. If we allowed the machine learner to use these stronger features, it would suffer in its ability to generalise to the real task.

ngrams

Bags of words have traditionally been used successfully for classification tasks in NLP, so we included bags of words (lemmas) within the candidate phrases as one of our features (and treat it as a baseline later on). We also include bigrams and trigrams, as multi-word combinations can be indicative of problems and solutions, e.g., “combinatorial explosion”.
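As a minimal sketch of this feature, the function below counts unigrams, bigrams and trigrams over a list of lemmas. The name `ngram_features` and the example lemmas are illustrative assumptions, and lemmatisation is taken as already done.

```python
from collections import Counter


def ngram_features(lemmas, max_n=3):
    """Count all n-grams of length 1..max_n in a lemmatised phrase."""
    feats = Counter()
    for n in range(1, max_n + 1):
        for i in range(len(lemmas) - n + 1):
            feats[" ".join(lemmas[i:i + n])] += 1
    return feats


feats = ngram_features(["combinatorial", "explosion", "occur"])
print(feats)
```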

Polarity

Our second feature concerns the polarity of each word in the candidate strings. Consider the following example of a problem taken from our dataset: “very conservative approaches to exact and partial string matches overgenerate badly”. In this sentence, words such as “badly” will be associated with negative polarity, therefore being useful in determining problem-hood. Similarly, solutions will often be associated with a positive sentiment, e.g. “smoothing is a good way to overcome data sparsity”. To compute polarity, we perform word sense disambiguation of each word using the Lesk algorithm (Lesk 1986). The polarity of the resulting synset in SentiWordNet (Baccianella et al. 2010) was then looked up and used as a feature.
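The lookup step can be sketched with a toy lexicon. This simplification deliberately skips word sense disambiguation and does not use SentiWordNet: the scores in `TOY_POLARITY` are invented, and `polarity_features` is a hypothetical helper that only shows how word-level polarity could be aggregated per phrase.

```python
# Invented word-level scores; real scores would come from a sentiment resource.
TOY_POLARITY = {
    "badly": -0.6,
    "overgenerate": -0.4,
    "good": 0.6,
    "overcome": 0.3,
}


def polarity_features(tokens, lexicon=TOY_POLARITY):
    """Sum positive and negative polarity over the tokens of a phrase.
    Words missing from the lexicon count as neutral."""
    scores = [lexicon.get(token.lower(), 0.0) for token in tokens]
    return {
        "positive": sum(s for s in scores if s > 0),
        "negative": sum(-s for s in scores if s < 0),
    }


problem = "very conservative approaches to exact and partial string matches overgenerate badly"
solution = "smoothing is a good way to overcome data sparsity"

print(polarity_features(problem.split()))
print(polarity_features(solution.split()))
```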

Syntax

Next, a set of syntactic features were defined by using the presence of POS tags in each candidate. This feature could be helpful in finding syntactic patterns in problems and solutions. We were careful not to base the model directly on the head POS tag and the length of each candidate phrase, as these are defining characteristics used for determining the non-problem and non-solution candidate set.

Negation

Negation is an important property that can often greatly affect the polarity of a phrase. For example, a keyword pertinent to solution-hood may be a good indicator on its own, but the presence of negation may flip the polarity to problem-hood, e.g., “this can’t work as a solution”. Therefore, we determine whether negation is present.
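A presence-of-negation check could look like the sketch below. The cue list and the function name `has_negation` are assumptions for illustration, since the text does not say how negation was detected.

```python
import re

# Hypothetical cue list; contracted forms are handled separately below.
NEGATION_CUES = {"not", "no", "never", "cannot", "neither", "nor", "without"}


def has_negation(phrase):
    """Return True if the phrase contains a negation cue or an n't contraction."""
    tokens = re.findall(r"[a-z'’]+", phrase.lower())
    return any(
        token in NEGATION_CUES or token.endswith(("n't", "n’t"))
        for token in tokens
    )


print(has_negation("this can’t work as a solution"))
print(has_negation("smoothing is a good way to overcome data sparsity"))
```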

Exemplification and contrast Problems and solutions are often found to be coupled with examples, as these allow the author to elucidate their point. For instance, consider the following solution: “Once the translations are generated, an obvious solution is to pick the most fluent alternative, e.g., using an n-gram language model” (Madnani et al. 2012). To acknowledge this, we check for the presence of exemplification. In addition to examples, problems in particular are often found when contrast is signalled by the author (e.g. “however”, “but”); we therefore also check for the presence of contrast in the problem and non-problem candidates only.
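The presence checks for negation, exemplification and contrast can be sketched as simple binary cue features. The cue patterns below are illustrative assumptions, not the lists used in our experiments, and `cue_features` is a hypothetical helper name.

```python
import re

# Illustrative cue patterns only; the actual cue lists are an assumption.
NEGATION = re.compile(r"\b(?:not|no|never|cannot)\b|n['’]t\b", re.IGNORECASE)
EXEMPLIFICATION = re.compile(
    r"\be\.g\.|\bfor (?:example|instance)\b|\bsuch as\b", re.IGNORECASE
)
CONTRAST = re.compile(r"\b(?:however|but|although)\b", re.IGNORECASE)


def cue_features(text):
    """Return binary presence features for a candidate string."""
    return {
        "negation": bool(NEGATION.search(text)),
        "exemplification": bool(EXEMPLIFICATION.search(text)),
        "contrast": bool(CONTRAST.search(text)),
    }


print(cue_features("this can't work as a solution"))
```

In a setup like the one described, the contrast feature would only be computed for problem and non-problem candidates.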

Discourse Problems and solutions have also been found to have a correlation with discourse properties. For example, problem-solving patterns often occur in the background sections of a paper. The rationale behind this is that the author is conventionally asked to objectively criticise other work in the background (e.g. describing research gaps which motivate the current paper). To take this into account, we examine the context of each string and capture the section header under which it is contained (e.g. Introduction, Future work). In addition, problems and solutions are often found following the Situation element in the problem-solving pattern (cf. “Introduction” section). This preamble setting up the problem or solution means that these elements are unlikely to occur at the beginning of a section (i.e. it will usually take some sort of introduction to detail how something is problematic and why a solution is needed). Therefore, we record the distance from the candidate string to the nearest section header.
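The two discourse features can be sketched as follows. This is a minimal illustration under two assumptions that are not stated above: distance is measured in document units (e.g. sentences), and the "nearest" header is taken to be the closest preceding one. The function name and the toy document are hypothetical.

```python
def discourse_features(units, candidate_index):
    """Return (section header, distance to it) for the unit at candidate_index.

    `units` is a list of (is_header, text) pairs in document order.
    Distance is counted in units back to the closest preceding header.
    """
    for i in range(candidate_index, -1, -1):
        is_header, text = units[i]
        if is_header:
            return text, candidate_index - i
    return None, None  # no header precedes the candidate


# Toy document: headers and sentences in reading order.
doc = [
    (True, "Introduction"),
    (False, "Parsing is widely used."),
    (False, "However, it is slow on long inputs."),
    (True, "Future work"),
    (False, "We plan to address this limitation."),
]
print(discourse_features(doc, 2))
```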

Subcategorisation and adverbials Solutions often involve an activity (e.g. a task). We therefore also model the subcategorisation properties of the verbs involved. Our intuition was that since problematic situations are often described as non-actions, the verbs describing them are more likely to be intransitive. Conversely, solutions are often actions and are likely to have at least one argument. This feature was calculated by running the C&C parser (Curran et al. 2007) on each sentence. C&C is a supertagger and parser that has access to subcategorisation information. Solutions are also associated with resultative adverbial modification (e.g. “thus, therefore, consequently”), as it expresses the solutionhood relation between the problem and the solution. It has been seen to occur frequently in problem-solving patterns, as studied by Charles (2011). Therefore, we check for the presence of resultative adverbial modification in the solution and non-solution candidates only.

Embeddings We also wanted to add more information using word embeddings. This was done in two different ways. Firstly, we created a Doc2Vec model (Le and Mikolov 2014 ), which was trained on ∼ 19 million sentences from scientific text (no overlap with our data set). An embedding was created for each candidate sentence. Secondly, word embeddings were calculated using the Word2Vec model (cf. “ Corpus creation ” section). For each candidate head, the full word embedding was included as a feature. Lastly, when creating our polarity feature we query SentiWordNet using synsets assigned by the Lesk algorithm. However, not all words are assigned a sense by Lesk, so we need to take care when that happens. In those cases, the distributional semantic similarity of the word is compared to two words with a known polarity, namely “poor” and “excellent”. These particular words have traditionally been consistently good indicators of polarity status in many studies (Turney 2002 ; Mullen and Collier 2004 ). Semantic similarity was defined as cosine similarity on the embeddings of the Word2Vec model (cf. “ Corpus creation ” section).
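The fallback used when Lesk assigns no sense can be sketched as a comparison of cosine similarities to the two anchor words. The text above does not say how the two similarities are combined, so taking their difference is an assumption made for this illustration; the three-dimensional vectors are toy values, not Word2Vec output.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def fallback_polarity(word, vectors):
    """Positive if `word` is closer to 'excellent', negative if closer to 'poor'.

    Combining the two similarities by subtraction is an assumption.
    """
    w = vectors[word]
    return cosine(w, vectors["excellent"]) - cosine(w, vectors["poor"])


# Toy embeddings for illustration only.
vectors = {
    "excellent": [0.9, 0.1, 0.0],
    "poor": [0.1, 0.9, 0.0],
    "robust": [0.8, 0.2, 0.1],
    "brittle": [0.2, 0.7, 0.1],
}
print(fallback_polarity("robust", vectors) > 0)
```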

Modality Responses to problems in scientific writing often express possibility and necessity, and so have a close connection with modality. Modality can be broken into three main categories, as described by Kratzer ( 1991 ), namely epistemic (possibility), deontic (permission / request / wish) and dynamic (expressing ability).

Problems have a strong relationship to modality within scientific writing. Often, this is due to a tactic called “hedging” (Medlock and Briscoe 2007), where the author uses speculative language, often using epistemic modality, in an attempt to make either noncommittal or vague statements. This has the effect of allowing the author to distance themselves from the statement, and is often employed when discussing negative or problematic topics. Consider the following example of epistemic modality from Nakov and Hearst (2008): “A potential drawback is that it might not work well for low-frequency words”.

To take this linguistic correlate into account as a feature, we replicated a modality classifier as described by Ruppenhofer and Rehbein (2012). More sophisticated modality classifiers have recently been introduced, for instance using a wide range of features and convolutional neural networks, e.g., (Zhou et al. 2015; Marasović and Frank 2016). However, we wanted to check the effect of a simpler method of modality classification on the final outcome before investing heavily in their implementation. We trained three classifiers using the subset of features which Ruppenhofer and Rehbein reported as performing best, and evaluated them on the gold standard dataset provided by the authors. The results are shown in Table 3. The dataset contains annotations of English modal verbs on the 535 documents of the first MPQA corpus release (Wiebe et al. 2005).

Modality classifier results (precision/recall/f-measure) using Naïve Bayes (NB), logistic regression, and a support vector machine (SVM)

| Modality | NB | LR | SVM |
|---|---|---|---|
| Epistemic | .74/.74/.74 | .75/.85/.80 | |
| Deontic | .94/.72/.81 | .86/.81/.83 | |
| Dynamic | .69/.70/.70 | | |

Italicized results reflect highest f-measure reported per modal category

Logistic Regression performed best overall and so this model was chosen for our upcoming experiments. The optative and concessive modal categories performed extremely poorly, with the optative category receiving a null score across all three classifiers. This is due to a limitation in the dataset, which is unbalanced and contains very few instances of these two categories. The unbalanced data is also the reason we report results in terms of precision, recall and f-measure in Table 3.

The modality classifier was then retrained on the entirety of the dataset used by Ruppenhofer and Rehbein ( 2012 ) using the best performing model from training (Logistic Regression). This new model was then used in the upcoming experiment to predict modality labels for each instance in our dataset.

As can be seen from Table 4, we are able to achieve good results for distinguishing a problematic statement from a non-problematic one. The bag-of-words baseline achieves a very good performance of 71.0% for the Logistic Regression classifier, showing that there is enough signal in the candidate phrases alone to distinguish them much better than random chance.

Results distinguishing problems from non-problems using Naïve Bayes (NB), logistic regression (LR) and a support vector machine (SVM)

| # | Feature set | NB | SVM | LR |
|---|---|---|---|---|
| 1 | Baseline | 65.6 | 67.8 | 71.0 |
| 2 | Bigrams | 61.3 | 60.5 | 59.0 |
| 3 | Contrast | 50.6 | 50.8 | 50.5 |
| 4 | Discourse | 60.3 | 60.2 | 60.0 |
| 5 | Doc2vec | 72.9* | 72.7 | 72.3 |
| 6 | Exemplification | 50.3 | 50.2 | 50.0 |
| 7 | Modality | 52.3 | 52.3 | 50.3 |
| 8 | Negation | 59.9 | 59.9 | 59.9 |
| 9 | Polarity | 60.2 | 66.3 | 65.5 |
| 10 | Syntax | 73.6* | 76.2** | 74.4 |
| 11 | Subcategorisation | 46.9 | 47.3 | 49.1 |
| 12 | Trigrams | 57.7 | 51.2 | 54.0 |
| 13 | Word2vec | 57.9 | 64.1 | 64.7 |
| 14 | Word2vec | 76.2*** | 77.2** | 76.6 |
| 15 | All features | 79.3*** | 81.8*** | ** |
| 16 | All features-{2,3,7,12} | 79.0** | | |

Each feature set’s performance is shown in isolation followed by combinations with other features. Tenfold stratified cross-validation was used across all experiments. Statistical significance with respect to the baseline at the p < 0.05 , 0.01, 0.001 levels is denoted by *, ** and *** respectively
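For readers unfamiliar with the evaluation protocol, the following minimal sketch shows one way to build stratified folds so that each fold preserves the class ratio. It is not the implementation used in our experiments; the function name, the fixed seed and the round-robin assignment are assumptions. The 500/500 class sizes correspond to the row totals of the confusion matrix in Table 7.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=10, seed=0):
    """Split instance indices into k folds with equal class proportions."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal the shuffled indices of each class round-robin across folds.
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    return folds


labels = ["problem"] * 500 + ["non-problem"] * 500
folds = stratified_folds(labels)
print([len(fold) for fold in folds])
```

Each fold then serves once as the test set while the remaining nine are used for training.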

Information gain (IG) in bits of top lemmas from the bag-of-words baseline in Table 4

| IG | Features |
|---|---|
| 0.048 | Not |
| 0.019 | Do |
| 0.018 | Single |
| 0.013 | Limited, experiment |
| 0.010 | Data, information |
| 0.009 | Error, many |
| 0.008 | Take, explosion |

Taking a look at Table 5, which shows the information gain for the top lemmas, we can see that the top lemmas are indeed indicative of problemhood (e.g. “limit”, “explosion”). Bigrams achieved good performance on their own (as did negation and discourse) but unfortunately performance deteriorated when using trigrams, particularly with the SVM and LR. The subcategorisation feature was the worst performing feature in isolation. Upon taking a closer look at our data, we saw that our hypothesis that intransitive verbs are commonly used in problematic statements was true, with over 30% of our problems (153) using them. However, due to our sampling method for the negative cases we also picked up many intransitive verbs (163). This explains the almost random chance performance (i.e. 50%) given that the distribution of intransitive verbs amongst the positive and negative candidates was almost even.
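To make the point about the intransitive-verb counts concrete, the sketch below computes the information gain in bits of a binary feature over a binary class. Applied to the counts above (153 problems and 163 non-problems with an intransitive verb), and assuming 500 instances per class as in the confusion matrix in Table 7, the gain is close to zero. The function names are hypothetical.

```python
import math


def entropy(pos, neg):
    """Binary entropy in bits of a class split given as two counts."""
    total = pos + neg
    h = 0.0
    for count in (pos, neg):
        if count:
            p = count / total
            h -= p * math.log2(p)
    return h


def information_gain(pos_with, neg_with, pos_total, neg_total):
    """Information gain (bits) of a binary feature for a binary class."""
    n = pos_total + neg_total
    n_with = pos_with + neg_with
    pos_without = pos_total - pos_with
    neg_without = neg_total - neg_with
    conditional = (n_with / n) * entropy(pos_with, neg_with) + (
        (n - n_with) / n
    ) * entropy(pos_without, neg_without)
    return entropy(pos_total, neg_total) - conditional


ig = information_gain(153, 163, 500, 500)
print(f"{ig:.5f} bits")
```

A feature that is spread almost evenly across both classes removes almost none of the one bit of class uncertainty, which is consistent with the near-chance accuracy reported for subcategorisation.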

The modality feature was the most expensive to produce, but also did not perform very well in isolation. This surprising result may be partly due to a data sparsity issue, as only a small portion (169) of our instances contained modal verbs. The number of instances of each modal sense is displayed in Table 6. The most dominant modal sense was epistemic. This is a good indicator of problemhood (e.g. hedging, cf. “Linguistic correlates of problem- and solution-hood” section), and if the accumulation of additional data were possible, we think that this feature may have the potential to be much more valuable in determining problemhood. Another reason for the performance may be domain dependence of the classifier, since it was trained on text from different domains (e.g. news). Additionally, modality has also been shown to be helpful in determining contextual polarity (Wilson et al. 2005) and argumentation (Becker et al. 2016), so using the output from this modality classifier may also prove useful for further feature engineering in future work.

Number of instances of modal senses

| Modal sense | No. of instances |
|---|---|
| Epistemic | 97 |
| Deontic | 22 |
| Dynamic | 50 |

Polarity managed to perform well but not as well as we had hoped. However, this feature also suffers from a sparsity issue resulting from cases where the Lesk algorithm (Lesk 1986) is not able to resolve the synset of the syntactic head.

Knowledge of syntax provides a big improvement with a significant increase over the baseline results from two of the classifiers.

Examining this in greater detail, POS tags with high information gain mostly included tags from open classes (i.e. VB-, JJ-, NN- and RB-). These tags are often more associated with determining polarity status than tags such as prepositions and conjunctions (i.e. adverbs and adjectives are more likely to be describing something with a non-neutral viewpoint).

The embeddings from Doc2Vec allowed us to obtain another significant increase in performance over the baseline (72.9% with Naïve Bayes), and polarity using Word2Vec provided the best individual feature result (77.2% with SVM).

Combining all features together, each classifier managed to achieve a significant result over the baseline, with the best result coming from the SVM (81.8%). Problems were also better classified than non-problems, as shown in the confusion matrix in Table 7. The addition of the Word2Vec vectors may be seen as a form of smoothing in cases where previous linguistic features had a sparsity issue, i.e., instead of a NULL entry, the embeddings provide some sort of value for each candidate. Particularly with respect to the polarity feature, cases where Lesk was unable to resolve a synset meant that a ZERO entry was added to the vector supplied to the machine learner. Amongst the possible combinations, the best subset of features was found by combining all features with the exception of bigrams, trigrams, subcategorisation and modality. This subset of features managed to improve results in both the Naïve Bayes and SVM classifiers, with the highest overall result coming from the SVM (82.3%).

Confusion matrix for problems

| Actual \ Predicted | Problem | Non-problem |
|---|---|---|
| Problem | 414 | 86 |
| Non-problem | 91 | 409 |
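The counts in the confusion matrix can be turned into the usual summary measures with a few lines of arithmetic. The sketch below treats "problem" as the positive class; the helper name `summarise` is hypothetical. The resulting accuracy of 0.823 matches the 82.3% reported for the SVM with the best feature subset.

```python
def summarise(tp, fn, fp, tn):
    """Accuracy, precision, recall and F1 for the positive class."""
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1


# Rows are actual classes, columns are predicted classes (Table 7).
accuracy, precision, recall, f1 = summarise(tp=414, fn=86, fp=91, tn=409)
print(f"accuracy={accuracy:.3f} precision={precision:.3f} "
      f"recall={recall:.3f} f1={f1:.3f}")
```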